RSVM: a Region-based Software Virtual Memory for GPU

-

Upload

palmer-hale -

Category

Documents

-

view

27 -

download

2

description

Transcript of RSVM: a Region-based Software Virtual Memory for GPU

RSVM: a Region-based Software Virtual Memory for GPU

Feng Ji*, Heshan Lin†, Xiaosong Ma*‡* North Carolina State Univeristy

† Virginia Tech‡Oak Ridge National Lab

PACT 2013

2

GPU Presence Today

Compute Graphics

3

GPU Computing Challenge

• Parallel computing• Memory management

Core 0

Core 1

Core 2

Core 3

Main memory Device memory

L1/ Shared memory

PCI-E

CPU GPU

cache cache

cache cache

cache L2

SM

4

Problematic Manual GPU Memory Management

• Malloc()– Resource

limit

• Memcpy()– Hardcoded

• Working set

A

B

C

Matrix Mul C = A x B

Host Code

Device Code

5

CPU-GPU Memory Management• State of the art: host-side memory management

– GPU compilers [Jablin:PLDI11, Jabin:CGO12; Pai:PACT12]– Task scheduling runtimes [Rossbach: SOSP11]– GPU ADSM [Gelado: ASPLOS10]

• Limitations– Memory management action before/after GPU kernel– No fine-grained control in GPU code– Cannot leverage GPU online data access

GPU Runtime

VM Driver

DRAM CPU BUS GPU

Host Code Device Code

GPGPU API

Application

OS

Hardware

CPU-GPU memory management Our solution: Enabling memory

management in GPU kernel

Host-side management

Existing solution: host

side controlling

GPU memory

6

Region-based software virtual memory• Match host-side virtual memory

– Abstract memory domains– Automate data movement on demand– Swap out device memory

• Unique challenges of CPU-GPU heterogeneous memory system– No existing architecture support for VM

• Solution: software-based mechanisms

– GPU processing massively parallel• Solution: building on GPU atomic operations

– GPU and drivers being black boxes• Solution: implementing using standard GPGPU APIs

– CPU-GPU synchronization expensive• Solution: asynchronous runtimes, relaxed consistency

– GPU-initiated communication difficult• Solution: GPU callback

• Region– Repeated idea (CRL [Johnson:SOS95], ADSM [Gelado: ASPLOS10], etc.)– Finer granularity

7

Roadmap

• Introduction • RSVM: region-based software virtual memory

for GPU– Region API– Design: region table, transparent GPU swap

• Evaluation results• Conclusion

8

Region-based Software Virtual Memory for GPU (RSVM)

• User specifies via Region API– Defining RSVM managed data unit (create)– Annotating data unit access code block (map/unmap)

• RSVM manages both CPU and GPU memory– Moving data on-demand across CPU and GPU– Intra-kernel data fetching to GPU– Transparent GPU memory swapping to host

memory• For GPU kernels with excessive memory requirement

9

RSVM Design

GPU Runtime

VM Driver

DRAM CPU BUS GPU

Host Code Device Code

GPGPU API

Application

OS

Hardware

RSVM

Region API Region API

Callback Server

Region Manager

Region Table

Callback RPC

Region Manager

Region Table

Callback

10

Region as basic data unit• Decide system-managed basic data

unit– Page? One size fit all?

• Region– User-defined data block– Linear or multi-D (CUDA supports 3D

memory layout): <width, height, stride>

• Benefit– Abstracts CPU/GPU memory domain– Allows optimization of PCIe efficiency

by varying region’s definition• developers know it better than system

– No false sharing

A

B

C

11

Region API 1: define region and region collection

rgn_id r_A = rgn_create_cpu (size_A);

rgn_coll_id rc_B = rgn_coll_create (size_B, num_rgns_in_B, B_row_length, //stride threadBlock.y, //width

B_rows); //heightrgn_coll_id rc_C = rgn_coll_create

(size_C, num_rgns_in_C, C_row_length,

threadBlock.y, C_rows);

A

B

C

• Region Collection: a set of regions.

• Rgn_coll_create: iteratively create all regions in this set.

12

Region API 2: use region in the host

float *A = rgn_map_cpu (r_A, rgn_op_writeonly, NULL, NULL);

rgn_coll_meta meta_B = rgn_coll_get_meta_cpu (rc_B);

for(i: 0 to blockDim.y) { float *pB = rgn_map_cpu

(meta_B->rgns[i], rgn_op_writeonly, NULL, NULL);

} A

B

C

• Region Collection Meta: metadata of region collection.

• An array of rgn_ID’s.• Implemented as a

region Itself.

meta_B

13

Region API 3: exchange information

rsvm_sync();mmKernel<<<NB, NT>>>

(rc_C, r_A, rc_B);

A

B

C

• rsvm_sync(): host-side API.

• Exchange information across CPU-GPU.

• GPU side runtime knows r_A, rc_B, and rc_C exist.

14

Region API 4: use region in GPU kernel

int complete, req;float *dA = rgn_map_gpu

(r_A, rgn_op_readonly, &complete, &req);

if (!complete) dA = rgn_wait_map_gpu(r_A, req);

rgn_unmap_gpu(r_A);

A

B

C

Asynchronously mapping in the background.

useful work: e.g. map other regions

15

Region API 5: use region collection in GPU kernel

rgn_coll_meta meta_B = rgn_coll_get_meta_gpu (rc_B);

float *pdB = rgn_map_gpu (meta_B->rgns[blockIdx.y], rgn_op_readonly, &complete, &req);

if (!complete) pdB = rgn_wait_map_gpu(meta_B->rgns[blockIdx.y], req);

rgn_unmap_gpu(meta_B->rgns[blockIdx.y]);

A

B

C

16

Region States in RSVM

• Relaxed consistency– Host and Device runtimes asynchronously drive

state change until rsvm_sync• Protocol: MSI adapted protocol

17

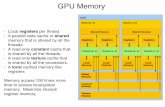

Region Table

id status version

loc_ptr far_ptr

0 mod 5 0xaaa0 0x8000

1 shared 2 0xaee0

2 shared 1 0xacd0

3 unused 0

4 unused 0

id status version

loc_ptr far_ptr

0 shared 4 0x8000 0xaaa0

1 sharing 2 0x80d0 0xaee0

2 invalid 1 0xacd0

3

4

• Table replicated on CPU and GPU– Relaxed consistency: merge at sync

• Challenge: local operation vs. avoid conflict• Table partitioned

– 4096 entries / segment, owned by one side– New region from unused entry in one’s own segment: local op.– Allocating a new segment: synchronous op.

Software TLB in the shared memory• Consistency

CPU – Owner GPU

18

GPU region fault: asynchronous map

• Rgn_map_GPU(rgn, op, *complete, *req)• Rgn_wait_map_GPU(rgn, req)

Device CodeCallback Server Callback RPC Region Manager

Map_GPU()Call_async(map)

Return (req) Return (complete, req)

Set callback flag on GPU

Wait_map_GPU(req)Call_wait(req)

PCIe data transferto GPU buffer

Call back:• Host-side polling [Stuart:Europarw10]• Avoid PCIe traffic jam• Novel collective callback

19

GPU Transparent Memory swap• Challenge: no specialized GPU thread• Solution: embedding swap in map/unmap ops

• Split operations, triggered by low memory– Operation 1: Swap

• GPU requests CPU to fetch dirty regions

– Operation 2: Reclaim• GPU frees clean buffers

• Not-Frequent-Used (NFU) counter of each region– Updated by GPU in every map op.– Sorted by CPU during swap

• Swap made re-entrant: concurrent swap requester will– Back off seeing ongoing swap– Prepare candidates list from previous completed swap

Tswap

Trec

Tendrec

20

Evaluation

• Test bed– Intel x86 Xeon E5507, 6 GB main mem– Nvidia GTX480 (15 SM, 1.5 GB dev mem), PCIe 2.0– Ubuntu 10.04 LTS, linux 2.6.32, CUDA 5.0rc

• Benchmark workload– Benchmark from CUDA SDK, Rodinia [Uva:rodinia]– Case study: MatrixMul , BFS [ORNL:SHOC]

21

Benchmarks fit in GPU

• MatrixMul– Computation-intensive, scale well with GPU cores– Overhead: device library code compiled into GPU kernel– Register file pressure:

• (# of reg / thread ): 25 -> 60• Occupancy (active threads / SM ): 1024 -> 512

22

Discussion: GPU register file for RSVM device library code

• GPU register assignment to threads– Static, equally to each thread

• Compiler reports max register count requirement for each thread• Runtime calculates occupancy, kernel launch success/fail

• GPU register file not enough for RSVM• Not all threads run into RSVM library code path

concurrently• Possible way of over-subscribing threads for register

file usage?– Dynamically managing registers among threads?

23

Case study: Graph Breadth-first Search

• Iteration (kernel) by BFS distance• Metric: traversed edges/sec (TEPS)• Dynamic memory access patter – input

dependent– DIMACS challenge [DIMACS]– GTgraph [Gtgraph]

24

BFS Input

• m/n – edge factor, number of edge/vector

(N and M in 10^6.)

25

BFS parallelism

• Graph: nodes + adjacent list (edges)– Warp -> each node to visit in current BFS iteration– Thread -> each neighbor of this node

• RSVM’s overhead– setup in each kernel (BFS iteration)– map nodes’ region, and then – map adjacent list’s region

• Overhead decreases with increased edge factor

26

Large Graphs

• Manual: – Partition graphs– Manual swapping between

GPU buffers and host buffers in each BFS iteration

– Local data access– Depend on used data in each

data partition

• CUDA Unified Virtual Address (UVA): – Use host-side 0-copy buffers– Access only needed data– PCIe bottleneck in traffic jam

RSVM Improvement due to• Caching in GPU memory• Batched PCIe data transferAdditional advantage• Single code base

UVA performs

better than Manual

Manual performs

better than UVA

27

Conclusion

• Virtual memory for CPU-GPU heterogeneous system involving GPU-side runtime is possible– GPU as computation engine, rather than co-

processor– Novel designs: region table, asynchronous region

API, CPU assisted GPU swap, software TLB in GPU shared memory

– Insight: register file pressure– Benefit dynamic memory accesses (e.g. Graph)

28

THANK YOU!

29

Reference• [Augonnet:ICPADS10] C. Augonnet, J. Clet-Ortega, S. Thibault, and R. Namyst. Data-Aware Task Scheduling on

Multi-accelerator Based Platforms. Parallel and Distributed Systems, International Conference on, 0:291–298, 2010.

• [CUDA] NVIDIA CUDA. http://www.nvidia.com/object/cuda.• [Diamos:HPDC08] G. F. Diamos and S. Yalamanchili. Harmony: an execution model and runtime for heterogeneous

many core systems. In Proceedings of the 17th international symposium on High performance distributed computing, HPDC ’08, pages 197–200, New York, NY, USA, 2008. ACM.

• [DIMACS] 10th DIMACS Implementation Challenge - Graph Partitioning and Graph Clustering.• [Eichenberger:PACT05] A. E. Eichenberger, K. O’Brien, K. O’Brien, P. Wu, T. Chen, P. H. Oden, D. A. Prener, J. C.

Shepherd, B. So, Z. Sura, A. Wang, T. Zhang, P. Zhao, and M. Gschwind. Optimizing Compiler for the CELL Processor. In Proceedings of the 14th International Conference on Parallel Architectures and Compilation Techniques, PACT ’05, pages 161–172, Washington, DC, USA, 2005. IEEE Computer Society.

• [Fatahalian:SC06] K. Fatahalian, D. R. Horn, T. J. Knight, L. Leem, M. Houston, J. Y. Park, M. Erez, M. Ren, A. Aiken, W. J. Dally, and P. Hanrahan. Sequoia: programming the memory hierarchy. In Proceedings of the 2006 ACM/IEEE conference on Supercomputing, SC ’06, New York, NY, USA, 2006. ACM.

• [Gelado: ASPLOS10] I. Gelado, J. E. Stone, J. Cabezas, S. Patel, N. Navarro, and W.-m. W. Hwu. An asymmetric distributed shared memory model for heterogeneous parallel systems. In Proceedings of the fifteenth edition of ASPLOS on Architectural support for programming languages and operating systems, ASPLOS ’10, pages 347–358, New York, NY, USA, 2010. ACM.

• [GTgraph] GTgraph: A suite of synthetic random graph generators. http://www.cse.psu.edu/ madduri/software/GTgraph/index.html.

• [HSA] The HSA Foundation. http://hsafoundation.com/.

30

References• [Jablin:CGO12] T. B. Jablin, J. A. Jablin, P. Prabhu, F. Liu, and D. I. August. Dynamically managed data for CPU-GPU

architectures. In Proceedings of the Tenth International Symposium on Code Generation and Optimization, CGO ’12, pages 165–174, New York, NY, USA, 2012. ACM.

• [Jablin:PLDI11] T. B. Jablin, P. Prabhu, J. A. Jablin, N. P. Johnson, S. R. Beard, and D. I. August. Automatic CPU-GPU communication management and optimization. In Proceedings of the 32nd ACM SIGPLAN conference on Programming language design and implementation, PLDI ’11, pages 142–151, New York, NY, USA, 2011. ACM.

• [Johnson:SOS95] K. L. Johnson, M. F. Kaashoek, and D. A. Wallach. CRL: high performance all-software distributed shared memory. In Proceedings of the 15th ACM Symposium on Operating Systems Principles (SOSP ’95), pages 213–226, Copper Mountain Resort, Colorado, December 1995.

• [Kato:USENIX12] S. Kato, M. McThrow, C. Maltzahn, and S. Brandt. Gdev: First-class GPU resource management in the operating system. In Proceedings of the USENIX Annual Technical Conference (ATC), June 2012.

• [Linderman:ASPLOS08] M. D. Linderman, J. D. Collins, H. Wang, and T. H. Meng. Merge: a programming model for heterogeneous multi-core systems. In Proceedings of the 13th international conference on Architectural support for programming languages and operating systems, ASPLOS XIII, pages 287–296, New York, NY, USA, 2008. ACM.

• [Luk:MICRO09] C.-K. Luk, S. Hong, and H. Kim. Qilin: exploiting parallelism on heterogeneous multiprocessors with adaptive mapping. In Proceedings of the 42nd Annual IEEE/ACM International Symposium on Microarchitecture, MICRO 42, pages 45–55, New York, NY, USA, 2009. ACM.

• [Menon:ISCA12] J. Menon, M. De Kruijf, and K. Sankaralingam. iGPU: exception support and speculative execution on GPUs. In Proceedings of the 39th Annual International Symposium on Computer Architecture, ISCA ’12, pages 72–83, Washington, DC, USA, 2012. IEEE Computer Society.

• [ORNL:SHOC] A. Danalis, G. Marin, C. McCurdy, J. S. Meredith, P. C. Roth, K. Spafford, V. Tipparaju, and J. S. Vetter. The Scalable Heterogeneous Computing (SHOC) benchmark suite. In Proceedings of the 3 rd Workshop on General-Purpose Computation on Graphics Processing Units, GPGPU ’10, pages 63–74, New York, NY, USA, 2010. ACM.

31

Reference• [Pai:PACT’12] S. Pai, R. Govindarajan, and M. J. Thazhuthaveetil. Fast and efficient automatic memory management

for GPUs using compiler-assisted runtime coherence scheme. In Proceedings of the 21st international conference on Parallel architectures and compilation techniques, PACT’12, pages 33–42, New York, NY, USA, 2012. ACM.

• [Rossbach: SOSP11] C. J. Rossbach, J. Currey, M. Silberstein, B. Ray, and E. Witchel. PTask: operating system abstractions to manage GPUs as compute devices. In Proceedings of the Twenty-Third ACM Symposium on Operating Systems Principles, SOSP ’11, pages 233–248, New York, NY, USA, 2011. ACM.

• [Saha:PLDI09] B. Saha, X. Zhou, H. Chen, Y. Gao, S. Yan, M. Rajagopalan, J. Fang, P. Zhang, R. Ronen, and A. Mendelson. Programming model for a heterogeneous x86 platform. In Proceedings of the 2009 ACM SIGPLAN conference on Programming language design and implementation, PLDI ’09, pages 431–440, New York, NY, USA, 2009. ACM.

• [Silberstein:ASPLOS13] M. Silberstein, B. Ford, I. Keidar, and E. Witchel. GPUfs: Integrating a File System with GPUs. In Proceedings of ASPLOS 2013, 2013.

• [Uva:Rodinia] Rodinia benchmark. https://www.cs.virginia.edu/ skadron/wiki/rodinia/ index.php/Main Page.• [Yan:OSR11] S. Yan, X. Zhou, Y. Gao, H. Chen, G. Wu, S. Luo, and B. Saha. Optimizing a shared virtual memory

system for a heterogeneous CPU-accelerator platform. SIGOPS Oper. Syst. Rev., 45:92–100, February 2011.• [Stuart:Europarw10] J. Stuart, M. Cox, and J. Owens. GPU-to-CPU Callbacks. In M. Guarracino, F. Vivien, J.

Trff, M. Cannatoro, M. Danelutto, A. Hast, F. Perla, A. Knpfer, B. Di Martino, and M. Alexander, editors, Euro-Par 2010 Parallel Processing Workshops.

32

Backup slides

• Start here….

33

Related Work

• Memory hierarchy of accelerator– Specialized programming model• StarPU [Augonnet:ICPADS10], Harmony

[Diamos:HPDC08], Sequoia [Fatahalian:SC06], Merge [Linderman:ASPLOS08], Qilin [Luk:MICRO09]

– Transparent Software Caching• CellBE: [Eichenberger:PACT05]• Larrabee: [Saha:PLDI09, Yan:OSR11]

34

Related Work (cont’d)

• Compiler assisted CPU-GPU communication– ADSM [Gelado: ASPLOS10]– CGCM [Jablin:PLDI11], DyManD [Jablin: CGO12]– AMM for X10 [Pai:PACT12]

• OS support for GPGPU– Gdev [Kato:USENIX12]– Ptask [Rossbach:SOSP11]– GPUfs [Silberstein:ASPLOS13]

35

Related Work (cont’d)

• Distributed shared memory– ADSM [Gelado:ASPLOS10]– CRL [Johnson:SOSP95]

• GPU virtual memory architecture– HSA hUMA [HSA]– GPU Exception [Menon:ISCA12]

36

Transparent or Manual?

• Ideal: transparent & good performance• In practice: making compromise

Transparent Manual

Program

Performance

easy hard

hard to reason easy to control

37

Region’s State Protocol

38

Region’s State Protocol

39

Region’s State Protocol

40

Region’s State Protocol

41

Software TLB for Region Table on GPU

TLB state

Ref. count

Rgn_id status

used 2 4138 sharing

unused 0

• Shared memory (TLB) consistency with device memory (Region table)– Write through

• Shared memory (TLB) of two SMs– A safe cache line: cache hit

• Define: sharing/modifying• Some other warp has cached it• Can safely use it

– Otherwise: cache miss• Prepare TLB• AtomInc/Dec ref. count

– Fully-associative• warp parallelism

– Cache line reuse• Shared/modified:Refcnt 0• Number configurable

id status Ref. count

…

4138

4138 sharing 2

4149 shared 0

TLB in shared memory

Region Table in dev memory

42

GPU callback

• Host-side callback server thread polling a flag [Stuart:Europarw10] – GPU code remotely sets flag (in host-side 0-copy memory)

• Challenge: GPU parallelism• Avoid PCIe traffic jam– Novel collective callback: non-parameterized requests

• GPU code detects and sends one signal for all calling threads

– Host-side callback server batches PCIe data transfers for multiple concurrent callback requests• Both incoming parameters and returning values

43

GPU callbacks in RSVM

• Handling region fault– non-collective, asynchronous, and parameterized

callback• Getting new region segment– collective, synchronous, and non-parameterized

callback• Starting swap– collective, asynchronous, re-entrant, and non-

parameterized callback

Callback Collective Synchronous Parameterized Re-entrant

Handling region fault No No Yes No

Getting new region segment

Yes Yes No No

Starting swap Yes No No Yes

44

Case 1: Matrix Multiplication

• Matrix A: single region

• Matrix B: 2-d regions

• 1280 MB GPU dev mem managed by RSVM

• RSVM: ~70% efficiency

• Swap: <10% overhead

45

Small Graph BFS• TEPS

– Traversed edges/ sec• Iteration (kernel) by BFS

distance• Parallelism

– Warp -> each visiting node– Thread -> each neighbor of

the visiting node• Overhead

– RSVM mapping regions of each visit node’s adjacent list

– RSVM setup each kernel

46

Future Work

• RSVM improvement– Region table merging optimization– CPU callback server optimization– Multiple GPU support– Multiple process support

• Compiler assisted region identification– Remove manual region creation/deletion

• Leverage vendor support for GPU faulting– Remove manual map/unmap

Johnson:SOS95

47

GPU Transparent Memory swap

Callback Server Callback RPC Region Manager

Call_async_reentrant(swap)

Set callback flag on GPU

PCIe data transferfrom GPU buffer tohost mem

Call_async_reentrant(swap)

Available dev mem resource low

Call_async_reentrant(swap)

Return (swappedregions)

Rgn states to shared,Form a candidate list

Available dev memresource keep decreasing.Reclaim candidate rgn’sbuffers.