Research-only rankings of HEIs: Is it possible to measure scientific performance?

Ludo Waltman
Centre for Science and Technology Studies (CWTS), Leiden University

ACA European Policy Seminar “12 years with global university rankings”
Brussels, October 15, 2015

University rankings

Outline

• What do we mean by scientific performance?
• Measuring scientific performance in the CWTS Leiden Ranking
• Is it really possible to measure scientific performance?

Indicators of scientific performance

• Publications:
  – Total
  – Per faculty
  – Per student
  – Interdisciplinary
  – International collaboration
  – Nature and Science
• Citations:
  – Total
  – Per publication
  – Per faculty
  – Highly cited researchers
• Reputation survey
• Others:
  – Nobel Prizes/Fields Medals
  – PhDs awarded
  – PhDs awarded per faculty
  – Post-doc positions
  – Research income

What do we mean by scientific performance?

Size-dependent concept of scientific performance:
• Overall contribution of a university to science
• Total number of ‘performance points’ (e.g., publications, citations, expert recommendations, awards)

Size-independent concept of scientific performance:
• Contribution of a university to science relative to available resources
• Number of ‘performance points’ divided by available resources (e.g., number of faculty, research budget)

Indicators of scientific performance (same list as above, with each indicator marked as either a size-dependent indicator or a size-independent indicator)

Rankings based on composite indicators

Mixing up different concepts of scientific performance

• Shanghai, THE, QS, and US News use composite indicators
• These composite indicators combine size-dependent and size-independent indicators

It is unclear which concept of scientific performance is measured by Shanghai, THE, QS, and US News

CWTS Leiden Ranking

• Focused completely on measuring scientific performance
• Purely based on bibliometric indicators
• No composite indicators
• Separate indicators of size-dependent and size-independent scientific performance

Main indicators

• Size-dependent:
  – P: Number of publications of a university
  – P(top 10%): Number of publications belonging to the top 10% most cited of their field
• Size-independent:
  – PP(top 10%): Proportion of publications belonging to the top 10% most cited of their field

$$\mathrm{PP}(\text{top 10\%}) = \frac{\mathrm{P}(\text{top 10\%})}{\mathrm{P}}$$
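As a minimal sketch of how the three indicators relate, the Python snippet below computes P, P(top 10%), and PP(top 10%) from a list of publications. The `Publication` record and its `in_top_10_percent` flag are hypothetical names introduced for illustration; deciding whether a publication belongs to the top 10% of its field requires field-normalized citation data, which is not modeled here.

```python
from dataclasses import dataclass


@dataclass
class Publication:
    title: str
    # Hypothetical flag: True if the publication is among the top 10%
    # most cited publications of its field (assumed to be given).
    in_top_10_percent: bool


def main_indicators(pubs):
    """Return (P, P(top 10%), PP(top 10%)) for a list of publications."""
    p = len(pubs)
    p_top10 = sum(1 for pub in pubs if pub.in_top_10_percent)
    # PP(top 10%) = P(top 10%) / P; defined as 0.0 for an empty list.
    pp_top10 = p_top10 / p if p else 0.0
    return p, p_top10, pp_top10


# Illustrative data only: 10 publications, 2 of them highly cited.
pubs = [Publication(f"paper {i}", i < 2) for i in range(10)]
p, p_top10, pp_top10 = main_indicators(pubs)
print(f"P = {p}, P(top 10%) = {p_top10}, PP(top 10%) = {pp_top10:.0%}")
```

P and P(top 10%) grow with the size of the university, while PP(top 10%) does not, which is why the slide lists them under different concepts.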

Advanced bibliometric methodology

• Field classification system
• Counting citations vs. counting highly cited publications
• Full counting vs. fractional counting
• Bibliographic database

About 4000 fields of science in the Leiden Ranking

Figure: fields of science, labeled by main area:
• Social sciences and humanities
• Biomedical and health sciences
• Life and earth sciences
• Physical sciences and engineering
• Mathematics and computer science

Why count highly cited publications?

• Leiden Ranking counts number of highly cited publications (top 10%)
• THE, QS, and US News count number of citations
• Effect of counting number of citations:

Figure panels: Counting citations; Counting highly cited publications. Annotation: Leaving out Göttingen’s most cited publication
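The sensitivity that these slides point at can be sketched in Python. All numbers below are hypothetical and are not Göttingen's actual data; the `THRESHOLD` value stands in for an assumed field-specific cutoff for the top 10%. The sketch compares how much a citation-based average and a highly-cited-publication share change when one extremely cited publication is left out.

```python
def mean_citations(citations):
    """Average number of citations per publication."""
    return sum(citations) / len(citations)


def share_highly_cited(citations, threshold):
    """Share of publications with at least `threshold` citations."""
    return sum(1 for c in citations if c >= threshold) / len(citations)


# Hypothetical university: 99 publications with 5 citations each,
# plus a single publication with 10,000 citations.
citations = [5] * 99 + [10_000]
without_outlier = citations[:-1]

# Assumed cutoff for belonging to the top 10% most cited of the field.
THRESHOLD = 50

print("Mean citations, all publications:   ", mean_citations(citations))
print("Mean citations, outlier left out:   ", mean_citations(without_outlier))
print("Highly cited share, all publications:", share_highly_cited(citations, THRESHOLD))
print("Highly cited share, outlier left out:", share_highly_cited(without_outlier, THRESHOLD))
```

In this toy case the mean drops from about 105 to 5 when the single publication is removed, while the highly cited share moves only from 1% to 0%.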

How to handle publications co-authored by multiple institutions?

• THE, QS, and US News:
  – Co-authored publications are fully assigned to each co-authoring institution (full counting)
• Leiden Ranking:
  – Co-authored publications are fractionally assigned to each co-authoring institution (fractional counting)

This publication is assigned to Enschede, Twente, and Leiden with a weight of 1/3 each
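The difference between the two counting methods can be sketched as follows. This is an illustration under simplifying assumptions, not the Leiden Ranking's implementation: each publication is represented only by its list of distinct institutions, and fractional counting splits one unit of credit equally among them, as in the 1/3 example above. The second, single-institution publication is invented for contrast.

```python
from collections import defaultdict
from fractions import Fraction


def count_publications(publications, fractional):
    """Sum publication credit per institution.

    publications: list of lists of distinct institution names.
    fractional:   if True, each publication contributes 1/n to each of
                  its n institutions; if False, it contributes 1 to each.
    """
    totals = defaultdict(Fraction)  # missing keys start at Fraction(0)
    for institutions in publications:
        weight = Fraction(1, len(institutions)) if fractional else Fraction(1)
        for institution in institutions:
            totals[institution] += weight
    return dict(totals)


publications = [
    ["Enschede", "Twente", "Leiden"],  # the three-institution example
    ["Leiden"],                        # hypothetical single-institution paper
]

full = count_publications(publications, fractional=False)
frac = count_publications(publications, fractional=True)
print("Full counting:      ", {k: str(v) for k, v in full.items()})
print("Fractional counting:", {k: str(v) for k, v in frac.items()})
```

Under full counting the two publications add up to four units of credit in total; under fractional counting the total stays at two, one per publication.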


Why use fractional counting?

Full counting is biased in favor of universities with a strong biomedical focus

Choice of bibliographic database: Is more data always better?

Database 1:
• Restricted to international scientific journals
• University A: P = 2000; P(top 10%) = 200; PP(top 10%) = 10%
• University B: P = 1000; P(top 10%) = 100; PP(top 10%) = 10%

Database 2:
• Also includes a lot of national scientific journals, trade journals, popular magazines, etc.
• University A: P = 2000; P(top 10%) = 220; PP(top 10%) = 11%
• University B: P = 1500; P(top 10%) = 135; PP(top 10%) = 9%

University A: US university
University B: Chinese university
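The arithmetic behind this comparison can be checked with a short Python sketch. The P and P(top 10%) values are taken from the slide; the dictionary layout and the helper name `pp_top10` are assumptions made for illustration.

```python
def pp_top10(p, p_top10):
    """PP(top 10%) = P(top 10%) / P."""
    return p_top10 / p


# (P, P(top 10%)) per university, as given on the slide.
databases = {
    "Database 1": {"University A": (2000, 200), "University B": (1000, 100)},
    "Database 2": {"University A": (2000, 220), "University B": (1500, 135)},
}

for db_name, universities in databases.items():
    for uni_name, (p, top) in universities.items():
        print(f"{db_name}, {uni_name}: PP(top 10%) = {pp_top10(p, top):.0%}")
```

With the larger database, University B gains 500 publications but only 35 highly cited ones, so its PP(top 10%) falls from 10% to 9%, while University A rises from 10% to 11% without publishing anything new.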

Choice of bibliographic database: Is more data always better?

• Universities from China, Russia, France, Germany, etc. may not benefit at all from having more data
• Indicators should be based on a restricted database of publications

Leiden Ranking uses Web of Science, but excludes national scientific journals, trade journals, and popular magazines

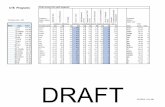

How much difference does it make? Comparing Leiden Ranking (LR) and THE citation scores

• Weak correlation between size-independent citation scores in Leiden Ranking and THE

• A Leiden Ranking score of 10% corresponds to THE scores anywhere between 30 and 85

Fundamental problem of size-independent bibliometric indicators

Univ. A:
• Same resources as Univ. B
• P = 1000
• P(top 10%) = 200
• PP(top 10%) = 20%

Univ. B:
• Same resources as Univ. A
• P = 2000
• P(top 10%) = 300
• PP(top 10%) = 15%

• Taking into account that both universities have the same resources, it is clear that university B has performed better

• However, according to the PP(top 10%) indicator, university A has performed better
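The reversal described above can be made concrete with a small Python sketch. The publication counts come from the slide; the `RESOURCES` value is a hypothetical number of resource units (the slide only says both universities have the same resources), and dividing P(top 10%) by resources is one possible way to express the size-independent concept, not an indicator taken from the Leiden Ranking.

```python
# Hypothetical resource level, identical for both universities.
RESOURCES = 100.0

# (P, P(top 10%)) as given on the slide.
universities = {
    "Univ. A": (1000, 200),
    "Univ. B": (2000, 300),
}

results = {}
for name, (p, top) in universities.items():
    results[name] = {
        "pp_top10": top / p,                  # bibliometric proxy
        "top10_per_resource": top / RESOURCES,  # output relative to resources
    }

best_by_pp = max(results, key=lambda n: results[n]["pp_top10"])
best_by_resources = max(results, key=lambda n: results[n]["top10_per_resource"])

print("Best by PP(top 10%):            ", best_by_pp)
print("Best by P(top 10%) per resource:", best_by_resources)
```

Because PP(top 10%) divides by the number of publications rather than by resources, it rewards Univ. A for publishing less, even though Univ. B produced more highly cited work with the same means.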

Conclusions

• Is it really possible to measure scientific performance?
  – Size-dependent concept of scientific performance:
    • Reasonable bibliometric measurements are possible
  – Size-independent concept of scientific performance:
    • Purely bibliometric measurements are problematic
• Do not combine size-dependent and size-independent indicators of scientific performance
• Bibliometric indicators should:
  – Be normalized using a sufficiently large number of fields
  – Count the number of highly cited publications, not the number of citations
  – Use fractional counting, not full counting
  – Be based on a restricted database of publications

Thank you!
