Reinforcement Learning-based NOMA Power Allocation in the … · 2018. 10. 18. · 1 Reinforcement...


Reinforcement Learning-based NOMA Power Allocation in the Presence of Smart Jamming

Liang Xiao∗, Yanda Li∗, Canhuang Dai∗, Huaiyu Dai†, H. Vincent Poor‡

∗ Dept. of Communication Engineering, Xiamen University, China. Email: [email protected]

† Dept. of Electrical and Computer Engineering, NC State University, Raleigh, NC. Email: huaiyu [email protected]

‡ Dept. of Electrical Engineering, Princeton University, Princeton, NJ. Email: [email protected]

Abstract: Non-orthogonal multiple access (NOMA) systems are vulnerable to jamming attacks, especially smart jammers who apply programmable and smart radio devices such as software defined radios to flexibly control their jamming strategy according to the ongoing NOMA transmission and radio environment. In this paper, the power allocation of a base station in a NOMA system equipped with multiple antennas contending with a smart jammer is formulated as a zero-sum game, in which the base station as the leader first chooses the transmit power on multiple antennas while a jammer as the follower selects the jamming power to interrupt the transmission of the users. A Stackelberg equilibrium of the anti-jamming NOMA transmission game is derived and the conditions assuring its existence are provided to disclose the impact of multiple antennas and radio channel states. A reinforcement learning based power control scheme is proposed for the downlink NOMA transmission without being aware of the jamming and radio channel parameters. The Dyna architecture that formulates a learned world model from the real anti-jamming transmission experience and the hotbooting technique that exploits experiences in similar scenarios to initialize the quality values are used to accelerate the learning speed of the Q-learning based power allocation and thus improve the communication efficiency of NOMA transmission in the presence of smart jammers. Simulation results show that the proposed scheme can significantly increase the sum data rates of users and thus the utilities compared with the standard Q-learning based strategy.

Index Terms: NOMA, smart jamming, power allocation, game theory, reinforcement learning

I. INTRODUCTION

By using power domain multiplexing with successive interference cancellation, non-orthogonal multiple access (NOMA) as an important candidate for 5G cellular communications can significantly improve both the outage performance and user fairness compared with orthogonal multiple access systems [1]. Multiple-input multiple-output (MIMO) NOMA transmission can achieve even higher spectral efficiency. For instance, the MIMO NOMA system as proposed in [2] applies zero-forcing based beamforming and pairing to reduce the cluster size of the cellular system and interference and thus significantly increases the capacity. The MIMO NOMA scheme as developed in [3] provides fast transmission of small-size packets following the required outage performance. The MIMO-NOMA system implements successive interference cancellation to detect and remove the signals of the weaker users according to downlink cooperative transmission protocols such as those in [4] and [5].

Copyright (c) 2015 IEEE. Personal use of this material is permitted. However, permission to use this material for any other purposes must be obtained from the IEEE by sending a request to [email protected].

This work was supported in part by National Natural Science Foundation of China under Grant 61671396 and in part by the U.S. National Science Foundation under Grants CCF-1420575, CNS-1456793, ECCS-1307949 and EARS-1444009.

NOMA transmission is vulnerable to jamming attacks, as an attacker can use programmable and smart radio devices such as software defined radios to flexibly control the jamming power according to the ongoing communication [6]–[9]. As an extreme case, all the downlink users in a NOMA system can be simultaneously blocked, if a jammer sends strong jamming power on the frequency at which the users send their signals. By reducing the signal-to-interference-plus-noise ratio (SINR) of the user signals, jammers can significantly decrease the data rates of the NOMA transmission and even result in denial of service attacks.

Game theoretic study of anti-jamming communications in wireless networks such as [9]–[13] has provided insights into the defense against smart jammers. In this paper, the anti-jamming transmissions of a base station (BS) in a MIMO NOMA system are formulated as a zero-sum Stackelberg game. In this game, the transmit power of the BS for each user is first determined on each antenna and then a smart jammer chooses the jamming power in an attempt to interrupt the NOMA transmission at a low jamming cost. The Stackelberg equilibrium (SE) of the anti-jamming MIMO NOMA transmission game is derived, showing that the BS tends to allocate more power to the strong user but has to satisfy the minimum rate demand of weak users under full jamming power attack of the smart jammer. Conditions under which the SE exists in the game are provided to disclose the impact of the radio channel states and the number of antennas on the communication efficiency of NOMA transmissions.

Reinforcement learning techniques, such as Q-learning, can derive the optimal strategy with probability one, if all the feasible actions are repeatedly sampled over all the states in the Markov decision process [14]. Therefore, we propose a Q-learning based power allocation strategy that chooses the transmit power based on the observed state of the radio environment and the jamming power and a quality function or Q-function that describes the discounted long-term reward for each state-action pair. This scheme is applied for the BS to derive the optimal policy for multiple users in the dynamic anti-jamming MIMO NOMA game without being aware of the channel model and the jamming model. A hotbooting Q-learning based power allocation exploits the experiences in similar anti-jamming NOMA transmission scenarios to set the initial value of the quality function for each state-action pair and thus accelerates the convergence of the standard Q-learning algorithm that always uses zero as the initial Q-function value. Meanwhile, the Dyna architecture that formulates a learned world model from real experience can be used to increase the learning speed to derive the optimal policy [15]. The Dyna algorithm based power allocation scheme emulates the planning and reactions from hypothetical experiences and takes extra computation for updating Q-function values. Simulation results show that the proposed power allocation schemes enable the BS to improve the sum data rate for the users in the downlink NOMA transmission against jamming.

The contributions of this work can be summarized as follows:

• We formulate an anti-jamming MIMO NOMA transmission game and derive the SEs of the game.

• We propose a hotbooting Q-learning based power allocation scheme for multiple users in the dynamic NOMA transmission game against a smart jammer without knowing the jamming and channel models.

• The Dyna architecture and hotbooting techniques are applied to accelerate the learning speed and thus enhance the anti-jamming NOMA communication efficiency.

The remainder of the paper is organized as follows: The related work is reviewed in Section II. The anti-jamming NOMA transmission game is formulated in Section III, and the SE of the game is derived in Section IV. Two reinforcement learning based power allocation schemes are proposed for the dynamic NOMA transmission game in Sections V and VI, respectively. Simulation results are provided in Section VII. The conclusions are drawn in Section VIII.

II. RELATED WORK

Power allocation is critical for NOMA transmissions. For instance, the low-complexity scheme as proposed in [16] improves the ergodic capacity for a given total transmit power constraint. The NOMA power allocation as proposed in [17] increases the sum data rates by pairing users with distinctive channel conditions. The joint power allocation and user pairing method proposed in [4] employs the minorization-maximization algorithm to further improve the sum data rates in the multiple-input and single-output NOMA system. The power allocation method for multicarrier NOMA transmission designed in [18] exploits the heterogeneity of service quality demand to perform the successive interference cancellation and reduce power consumption.

User fairness is one of the key challenges in the NOMA power allocation. The power allocation based on proportional fairness proposed in [19] improves both the communication efficiency and user fairness in a two-user NOMA system. A bisection-based iterative NOMA power allocation developed in [20] enhances user fairness with partial channel state information. The cognitive radio inspired power allocation developed in [5] satisfies the quality-of-service requirements for all the users in the NOMA system. A NOMA system that pairs users with independent and identically distributed random channel states was investigated in [21] to reduce the outage probabilities for each user. However, most existing NOMA power allocation strategies do not provide jamming resistance, especially against smart jamming.

Game theoretic study of anti-jamming communications provides insights into the design of the power allocation [9]–[11], [22]–[26]. For example, the power allocation game formulated in [9] provides a closed-form solution to improve the ergodic capacity of MIMO under smart jamming. The MIMO transmission game formulated in [10] studies the power allocation strategy on the artificial noise against a smart attacker who can choose active eavesdropping or jamming. The anti-jamming transmission in a peer-to-peer network was formulated as a Bayesian game in [11], in which each jammer is identified based on the attack in the previous time slots. The stochastic communication game as analyzed in [25] proposes an intrusion detection system to assist the transmitter and increase the network capacity against both eavesdropping and jamming.

Learning based attacker type identification developed in [27] improves the transmission capacity under an uncertain type of attacks in the time-varying radio channels for the stochastic secure transmission game. The Q-learning based power control proposed in [28] resists smart jamming in cooperative cognitive radio networks. The hotbooting learning technique as proposed in [29] exploits the historical experiences to initialize the Q values and thus accelerates the convergence rate of Q-learning. Model learning based on tree structures as proposed in [30] further improves the sample efficiency in stochastic environments and thus the convergence rate of the learning process.

The Q-learning based power control strategy proposed in [31] improves the secure capacity of the MIMO transmission against smart attacks. Compared with our previous work in [31], we consider the anti-jamming MIMO NOMA transmission and improve the game model by incorporating the sum data rates and the minimum data rates required by each user in the utility function. In addition, a fast Q-based power allocation algorithm that combines the hotbooting technique and Dyna architecture is proposed to improve the communication efficiency compared with the standard Q-learning based scheme as developed in [31].

III. ANTI-JAMMING MIMO NOMA TRANSMISSION GAME

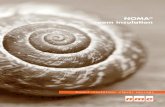

As shown in Fig. 1, we consider a time-slotted NOMA system consisting of $M$ mobile users each equipped with $N_R$ antennas to receive the signals from a BS against a smart jammer. The BS uses $N_T$ transmit antennas to send the signal denoted by $\mathbf{x}_m^k$ to User $m$, with $m = 1, 2, \cdots, M$ and $k = 1, 2, 3, \cdots$. A superimposed $N_T$-dimensional signal vector $\mathbf{x}^k = \sum_{n=1}^{M} \mathbf{x}_n^k$ is sent at time $k$.

The smart jammer uses $N_J$ antennas to send a jamming signal denoted by $\mathbf{z}_J$ with the power constraint $p_J^k = \mathrm{E}\left[\mathbf{z}_J^T \mathbf{z}_J\right]$. The jamming power $p_J^k \in [0, P_J]$ is chosen based on the ongoing downlink transmit power, where $P_J$ is the maximum jamming power.

[Figure 1 diagram: a BS with $N_T$ antennas serves Users $1, 2, \cdots, M$, each with $N_R$ antennas, over the channels $\mathbf{H}_{B,m}^k$, while a smart jammer with $N_J$ antennas reaches the users over the channels $\mathbf{H}_{J,m}^k$. User 1 detects its own signal directly; every other user applies SIC to the signals of the weaker users and then detects its own signal.]

Fig. 1. NOMA transmission against a smart jammer.

Let $g_{j,i,m}^k$ denote the channel power gain from the $i$-th antenna of the BS to the $j$-th antenna of User $m$, and $\mathbf{H}_{B,m}^k = [g_{j,i,m}^k]_{1 \le j \le N_R,\, 1 \le i \le N_T}$ denote the channel matrix between the BS and User $m$. The time index $k$ is omitted if no confusion occurs. Without loss of generality, the channel conditions of users are sorted as $\|\mathbf{H}_{B,M}\| > \cdots > \|\mathbf{H}_{B,1}\|$, where $\|\mathbf{X}\|$ denotes the Frobenius norm of a matrix $\mathbf{X}$, i.e., the user rank follows the order of channel power gains, indicating that User $M$ may stay in the cell central area while User 1 is at the cell edge. More specifically, the MIMO channel can be viewed as a bundle of independent sub-channels and thus the channel gain matrices of the users can be ordered by the squared Frobenius norm, according to [4] and [32]. Similarly, $\mathbf{H}_{J,m}^k$ denotes the channel matrix between the jammer and User $m$. Each channel matrix is assumed to have independent and identically distributed (i.i.d.) complex Gaussian elements, i.e., $\mathbf{H}_{\mu,m} \sim \mathcal{CN}(0, \sigma_{\mu,m}\mathbf{I})$, with $\mu = B, J$ and $m = 1, 2, \cdots, M$, and the $i$-th largest eigenvalue of $\mathbf{H}_{\mu,m}\mathbf{H}_{\mu,m}^H$ is denoted by $h_{m,i}^{\mu}$. Thus, the signal received by User $m$ is given by

$$\mathbf{y}_m = \mathbf{H}_{B,m} \sum_{n=1}^{M} \mathbf{x}_n + \mathbf{H}_{J,m}\mathbf{z}_J + \mathbf{n}_m, \quad m = 1, 2, \cdots, M, \tag{1}$$

where the noise vector $\mathbf{n}_m$ consists of $N_R$ additive normalized zero-mean Gaussian noise components for User $m$.

Let $\theta_m^k$ be the power allocation coefficient for User $m$, i.e., the allocated transmit power is $P_T \theta_m^k$. A BS has difficulty obtaining instantaneous channel state information accurately in time in the time-varying wireless environment and thus equally allocates the transmit power $P_T$ over the $N_T$ antennas, as mentioned in [16]. On the other hand, the BS can obtain the statistical results regarding channel states of the users via the feedback from the users and decide the signal decoding order accordingly. Thus the transmit covariance matrix of User $m$ denoted by $\mathbf{Q}_m$ is given by

$$\mathbf{Q}_m = \mathrm{E}\left(\mathbf{x}_m \mathbf{x}_m^H\right) = \frac{\theta_m P_T}{N_T}\mathbf{I}_{N_T}, \tag{2}$$

where a superscript $H$ denotes the conjugate transpose. In this game, the power allocation vector chosen by the BS is denoted by $\boldsymbol{\theta}^k = [\theta_m^k]_{1 \le m \le M}$ for all the $M$ users with the total power constraint $\sum_{1 \le m \le M} \theta_m^k = 1$. For simplicity, let $\Omega$ denote the vector space of all the available power allocation strategies, with $\boldsymbol{\theta} \in \Omega$.

On the other hand, without knowing the downlink channel power gain of the users, the jammer has to equally allocate the jamming power, with the jamming power covariance matrix given by

$$\mathbf{Q}_J = \mathrm{E}\left(\mathbf{z}_J \mathbf{z}_J^H\right) = \frac{p_J}{N_J}\mathbf{I}_{N_J}. \tag{3}$$

TABLE I
SUMMARY OF SYMBOLS AND NOTATION

| Symbol | Description |
| --- | --- |
| $M$ | Number of users |
| $N_{T/R}$ | Number of antennas of BS/RX |
| $N_J$ | Number of jamming antennas |
| $\mathbf{x}^k$ | Superimposed transmit signal for users |
| $\mathbf{H}_{\mu,m}^k$ | Channel matrix |
| $h_{m,i}^{\mu}$ | $i$-th eigenvalue of channel matrix |
| $\sigma_{\mu,m}$ | Channel matrix covariance |
| $\boldsymbol{\theta}^k = [\theta_m^k]_{1 \le m \le M}$ | Power allocation coefficient vector for users |
| $\Omega$ | Space of feasible power allocation vectors |
| $P_J$ | Maximum jamming power |
| $u$ | Utility of the BS |
| $\gamma$ | Cost coefficient of the jammer |
| $\xi$ | Feasible set of jamming power |
| $R_0$ | Minimum data rate required by a user |
| $L$ | Number of the SINR quantization levels |
| $I$ | Number of emulated environments |
| $T$ | Size of training data |
| $\Phi$ | Observation counter |
| $\tau$ | Modeled reward |
| $\tau'$ | Reward record |
| $\Psi$ | State transition probability |

The effective precoding and successive interference cancellation design for the MIMO-NOMA system have been studied in works such as [4] and [5]. More specifically, the downlink NOMA transmission scheme developed in [4] increases the sum data rate under the decodability constraint according to a simplified SIC method that orders the users based on the norm of the channel gain vector. The signal alignment scheme developed in [5] decomposes the multi-user MIMO-NOMA scenario into multiple separate single-antenna NOMA channels, and orders the users based on the large-scale channel fading gain with user pairing.

In this work, we apply the successive interference cancellation scheme that orders the users according to the channel power gains under jamming, similar to [16] and [33]. The decoding order according to the Frobenius norms of the channel matrices is optimal if the jamming power is not strong enough to change the SIC order, i.e., for any two users $j$ and $m$, $h_m^B/h_m^J \ge h_j^B/h_j^J$ holds if $m > j$. Nevertheless, if this assumption does not hold because the jamming power received by a user is much stronger than that received by the user who decodes afterwards, the NOMA system ranks the decoding order according to the channel power gain normalized by the jamming power in SIC, i.e., unless otherwise specified, User $m$ decodes before User $j$ if $h_m^B/h_m^J \ge h_j^B/h_j^J$ with $m > j$.

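According to the singular value decomposition of the channel matrix $\mathbf{H}_{\mu,m}$, $\mu = B, J$, and the assumption of the Gaussian distributed signals, the achievable data rate of User $m$ denoted by $R_m$ depends on the SINR of the signal, and is given by [16], [33] as, $\forall 1 \le m \le M-1$,

$$\begin{aligned} R_m &= \log_2 \det\left(\mathbf{I} + \left(\mathbf{I} + \mathbf{H}_{B,m} \sum_{n=m+1}^{M} \mathbf{Q}_n \mathbf{H}_{B,m}^H + \mathbf{H}_{J,m}\mathbf{Q}_J\mathbf{H}_{J,m}^H\right)^{-1} \mathbf{H}_{B,m}\mathbf{Q}_m\mathbf{H}_{B,m}^H\right) \\ &= \sum_{i=1}^{N_R} \log_2\left(1 + \frac{N_J \theta_m P_T h_{m,i}^B}{N_T N_J + N_J \sum_{n=m+1}^{M} \theta_n P_T h_{m,i}^B + N_T p_J h_{m,i}^J}\right). \end{aligned} \tag{4}$$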

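Applying interference cancellation as illustrated in [33], User $M$ with the best channel condition can subtract the signals of all the other $M-1$ users from the superimposed signal $\mathbf{x}$. Thus the data rate of User $M$ denoted by $R_M$ is given by

$$\begin{aligned} R_M &= \log_2 \det\left(\mathbf{I} + \left(\mathbf{I} + \mathbf{H}_{J,M}\mathbf{Q}_J\mathbf{H}_{J,M}^H\right)^{-1} \mathbf{H}_{B,M}\mathbf{Q}_M\mathbf{H}_{B,M}^H\right) \\ &= \sum_{i=1}^{N_R} \log_2\left(1 + \frac{N_J \theta_M P_T h_{M,i}^B}{N_T N_J + N_T p_J h_{M,i}^J}\right). \end{aligned} \tag{5}$$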

Note that the received jamming signal is viewed as noise, and does not change the decoding sequence according to the successive interference cancellation strategy in [33].

By exploiting the difference of the channel power gains between the users, the BS usually increases the power allocation coefficient for weak users to satisfy the quality of service (QoS) required by the user, such as the minimum data rate demand of the user denoted by $R_0$. Therefore, the NOMA transmission has to ensure $\min(R_1, \cdots, R_M) \ge R_0$.

The jammer aims to interrupt the NOMA transmission and make at least one user's data rate less than the quality of service requirement, i.e., $R_m < R_0$ for some $1 \le m \le M$, as shown in the first term in (6). If failing to do that, the jammer aims to reduce the overall data rate with less jamming power and avoid being detected, as indicated in (6). Let $\gamma$ denote the jamming cost, including the jamming power consumption and the risk of being detected, which depends on the intrusion detection methods used by the BS.

The anti-jamming NOMA transmissions are formulated as a zero-sum Stackelberg game denoted by $G$, in which the BS as the leader first chooses the power allocation coefficient vector $\boldsymbol{\theta} = [\theta_m]_{1 \le m \le M}$ to improve the system throughput under the user rate constraint, and a smart jammer as the follower chooses the jamming power in an attempt to interrupt the NOMA transmission at a low jamming cost.

The utility of the BS denoted by $u$ depends on the sum data rate of the $M$ users and the jamming cost, and is given by

$$u = \mathrm{I}\left(\min_{1 \le m \le M} R_m \ge R_0\right)\left(\sum_{m=1}^{M} R_m + \gamma p_J\right), \tag{6}$$

where $\mathrm{I}(\cdot)$ is the indicator function that takes value 1 if the event is true and 0 otherwise, and the transmission fails if the QoS is not satisfied. In summary, we have considered an anti-jamming NOMA transmission game given by $G = \langle \{B, J\}, \{\boldsymbol{\theta}, p_J\}, \{u, -u\} \rangle$. For ease of reference, we also summarize our commonly used notation in Table I.
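To make the rate and utility expressions concrete, the following is a minimal Python sketch of (4)-(6), assuming the channel eigenvalues are already known and users are listed from weakest to strongest. The function names `user_rates` and `bs_utility` and their argument names are illustrative choices, not part of the original formulation.

```python
import math

def user_rates(theta, p_jam, h_b, h_j, p_t, n_t, n_j):
    """Per-user data rates following Eqs. (4)-(5).

    theta    : power allocation coefficients, weakest user first (sum to 1)
    h_b[m][i]: i-th eigenvalue of the BS channel of user m (assumed given)
    h_j[m][i]: i-th eigenvalue of the jammer channel of user m (assumed given)
    """
    rates = []
    for m in range(len(theta)):
        # Signals of stronger users (n > m) remain as interference; for the
        # strongest user this sum is empty, which reduces Eq. (4) to Eq. (5).
        residual = sum(theta[m + 1:])
        rate = 0.0
        for hb, hj in zip(h_b[m], h_j[m]):
            signal = n_j * theta[m] * p_t * hb
            noise = n_t * n_j + n_j * residual * p_t * hb + n_t * p_jam * hj
            rate += math.log2(1.0 + signal / noise)
        rates.append(rate)
    return rates

def bs_utility(theta, p_jam, h_b, h_j, p_t, n_t, n_j, r_min, gamma):
    """BS utility of Eq. (6): zero if any user misses the rate demand r_min."""
    rates = user_rates(theta, p_jam, h_b, h_j, p_t, n_t, n_j)
    if min(rates) < r_min:
        return 0.0
    return sum(rates) + gamma * p_jam
```

In the zero-sum game the jammer's utility is simply the negative of the value returned by `bs_utility`.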

IV. SE OF THE NOMA TRANSMISSION GAME

At a Stackelberg equilibrium of the anti-jamming NOMA transmission game, the BS as the leader chooses its power allocation strategy for all downlink users first to maximize its utility given by (6) considering the response of the smart jammer as the follower. Then the jammer as the follower decides the jamming power to minimize the utility $u$ based on the observed ongoing transmission. The SE strategies of the NOMA transmission game denoted by $(\boldsymbol{\theta}^*, p_J^*(\boldsymbol{\theta}^*))$ are given by definition as

$$p_J^*(\boldsymbol{\theta}) = \arg\min_{0 \le p_J \le P_J} u(\boldsymbol{\theta}, p_J) \tag{7}$$

$$\boldsymbol{\theta}^* = \arg\max_{\boldsymbol{\theta} \in \Omega} u(\boldsymbol{\theta}, p_J^*(\boldsymbol{\theta})). \tag{8}$$
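The two-stage definition in (7)-(8) can be illustrated by backward induction over finite grids. The sketch below is a brute-force numerical illustration under the assumption that both strategy sets are discretized; it is not the closed-form derivation used in the paper, and `stackelberg_grid_search` is a hypothetical helper name.

```python
def stackelberg_grid_search(utility, thetas, jam_powers):
    """Return (theta*, p_J*, u*) by backward induction over finite grids.

    utility(theta, p_jam) is the BS utility; the jammer receives -utility.
    """
    best = None
    for theta in thetas:
        # Follower: the jammer observes theta and minimizes u, as in Eq. (7).
        p_best = min(jam_powers, key=lambda p: utility(theta, p))
        u = utility(theta, p_best)
        # Leader: the BS keeps the theta with the largest worst-case u, Eq. (8).
        if best is None or u > best[2]:
            best = (theta, p_best, u)
    return best
```

Here `thetas` can hold scalars for the two-user case or tuples of coefficients for $M$ users, as long as `utility` accepts the same representation.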

In the following, we first consider the transmission game for the BS with $N_T \times N_R$ MIMO that serves $M$ users, and then the specific case with 2 users to evaluate the SE strategies under different anti-jamming NOMA transmission scenarios.

Lemma 1. The anti-jamming $N_T \times N_R$ NOMA transmission game with $M$ users has a unique SE $(\boldsymbol{\theta}^*, p_J^*)$ given by (9)-(10), if $h_{m,i}^B h_{M,i}^J < h_{M,i}^B h_{m,i}^J$, $\forall 1 \le m \le M-1$, $\forall 1 \le i \le N_R$, and (11) holds.

Proof. See Appendix A.

According to (9)-(10), the SE of the anti-jamming NOMA transmission game depends on the QoS in the transmission ($R_0$), the number of antennas ($N_T$ and $N_R$), the channel condition of the weak users and the maximum jamming power ($P_J$). If the jammer has good channel conditions to the weaker users, the BS allocates more power to the strongest user (i.e., User $M$) to improve the sum data rates, and meets the QoS of the weaker users. If the total transmit power is low for the transmission, the jammer applies full jamming power to block all the $M$ users in terms of the transmission QoS.

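Corollary 1. The anti-jamming $N_T \times N_R$ NOMA transmission game $G$ with $M$ users has a unique SE $(\boldsymbol{\theta}^*, 0)$, where $\boldsymbol{\theta}^*$ is given by

$$\sum_{i=1}^{N_R} \log_2\left(1 + \frac{\theta_m^* P_T h_{m,i}^B}{N_T + \left(1 - \sum_{n=1}^{m} \theta_n^*\right) P_T h_{m,i}^B}\right) = R_0, \quad \forall 1 \le m \le M-1, \tag{18}$$

if $h_{m,i}^B h_{M,i}^J < h_{M,i}^B h_{m,i}^J$, $\forall 1 \le m \le M-1$, $\forall 1 \le i \le N_R$, and

$$P_T \sum_{i=1}^{N_R} \left(\sum_{m=1}^{M-1} \frac{N_T \theta_m^* h_{m,i}^J h_{m,i}^B}{\left(N_T + \sum_{n=m+1}^{M} \theta_n^* P_T h_{m,i}^B\right)\left(N_T + \sum_{n=m}^{M} \theta_n^* P_T h_{m,i}^B\right)} + \frac{\left(1 - \sum_{n=1}^{M-1} \theta_n^*\right) h_{M,i}^B h_{M,i}^J}{N_T + \left(1 - \sum_{n=1}^{M-1} \theta_n^*\right) P_T h_{M,i}^B}\right) < N_J \gamma \ln 2. \tag{19}$$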

Proof. See Appendix B.

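If the jamming cost is high or the jamming channel experiences serious fading as shown in Eq. (19), a smart jammer will keep silent to reduce the power consumption. In addition, as the jamming power at SE also decreases with $N_T$, MIMO significantly improves the jamming resistance.

The conditions (9)-(17) referenced in Lemma 1 and Corollaries 2 and 3 are as follows:

$$\sum_{i=1}^{N_R} \log_2\left(1 + \frac{N_J \theta_m^* P_T h_{m,i}^B}{N_T N_J + N_J \left(1 - \sum_{n=1}^{m} \theta_n^*\right) P_T h_{m,i}^B + N_T P_J h_{m,i}^J}\right) = R_0, \quad \forall 1 \le m \le M-1 \tag{9}$$

$$\sum_{i=1}^{N_R} \left(\sum_{m=1}^{M-1} \frac{N_T N_J \theta_m^* P_T h_{m,i}^J h_{m,i}^B}{\left(N_T N_J + N_J \sum_{n=m+1}^{M} \theta_n^* P_T h_{m,i}^B + N_T p_J^* h_{m,i}^J\right)\left(N_T N_J + N_J \sum_{n=m}^{M} \theta_n^* P_T h_{m,i}^B + N_T p_J^* h_{m,i}^J\right)} + \frac{N_J \left(1 - \sum_{n=1}^{M-1} \theta_n^*\right) P_T h_{M,i}^B h_{M,i}^J}{\left(N_J + p_J^* h_{M,i}^J\right)\left(N_T N_J + N_J \left(1 - \sum_{n=1}^{M-1} \theta_n^*\right) P_T h_{M,i}^B + N_T p_J^* h_{M,i}^J\right)}\right) = \gamma \ln 2 \tag{10}$$

$$P_T \sum_{i=1}^{N_R} \left(\sum_{m=1}^{M-1} \frac{N_T \theta_m^* h_{m,i}^J h_{m,i}^B}{\left(N_T + \sum_{n=m+1}^{M} \theta_n^* P_T h_{m,i}^B\right)\left(N_T + \sum_{n=m}^{M} \theta_n^* P_T h_{m,i}^B\right)} + \frac{\left(1 - \sum_{n=1}^{M-1} \theta_n^*\right) h_{M,i}^B h_{M,i}^J}{N_T + \left(1 - \sum_{n=1}^{M-1} \theta_n^*\right) P_T h_{M,i}^B}\right) > N_J \gamma \ln 2 \tag{11}$$

$$\sum_{i=1}^{N_R} \log_2\left(1 + \frac{N_J \theta_1^* P_T h_{1,i}^B}{N_T N_J + N_J (1 - \theta_1^*) P_T h_{1,i}^B + N_T P_J h_{1,i}^J}\right) = R_0 \tag{12}$$

$$\sum_{i=1}^{N_R} \left(\frac{N_T N_J \theta_1^* P_T h_{1,i}^J h_{1,i}^B}{\left(N_T N_J + N_J (1 - \theta_1^*) P_T h_{1,i}^B + N_T p_J^* h_{1,i}^J\right)\left(N_T N_J + N_J P_T h_{1,i}^B + N_T p_J^* h_{1,i}^J\right)} + \frac{N_J (1 - \theta_1^*) P_T h_{2,i}^B h_{2,i}^J}{\left(N_J + p_J^* h_{2,i}^J\right)\left(N_T N_J + N_J (1 - \theta_1^*) P_T h_{2,i}^B + N_T p_J^* h_{2,i}^J\right)}\right) = \gamma \ln 2 \tag{13}$$

$$P_T \sum_{i=1}^{N_R} \left(\frac{N_T \theta_1^* h_{1,i}^J h_{1,i}^B}{\left(N_T + (1 - \theta_1^*) P_T h_{1,i}^B\right)\left(N_T + P_T h_{1,i}^B\right)} + \frac{(1 - \theta_1^*) h_{2,i}^B h_{2,i}^J}{N_T + (1 - \theta_1^*) P_T h_{2,i}^B}\right) > N_J \gamma \ln 2 \tag{14}$$

$$\sum_{i=1}^{N_R} \log_2\left(1 + \frac{N_J (1 - \theta_1^*) P_T h_{2,i}^B}{N_T N_J + N_T p_J^* h_{2,i}^J}\right) = R_0 \tag{15}$$

$$\sum_{i=1}^{N_R} \left(\frac{N_T N_J \theta_1^* P_T h_{1,i}^J h_{1,i}^B}{\left(N_T N_J + N_J (1 - \theta_1^*) P_T h_{1,i}^B + N_T p_J^* h_{1,i}^J\right)\left(N_T N_J + N_J P_T h_{1,i}^B + N_T p_J^* h_{1,i}^J\right)} + \frac{N_J (1 - \theta_1^*) P_T h_{2,i}^B h_{2,i}^J}{\left(N_J + p_J^* h_{2,i}^J\right)\left(N_T N_J + N_J (1 - \theta_1^*) P_T h_{2,i}^B + N_T p_J^* h_{2,i}^J\right)}\right) = \gamma \ln 2 \tag{16}$$

$$P_T \sum_{i=1}^{N_R} \left(\frac{N_T \theta_1^* h_{1,i}^J h_{1,i}^B}{\left(N_T + (1 - \theta_1^*) P_T h_{1,i}^B\right)\left(N_T + P_T h_{1,i}^B\right)} + \frac{(1 - \theta_1^*) h_{2,i}^B h_{2,i}^J}{N_T + (1 - \theta_1^*) P_T h_{2,i}^B}\right) > N_J \gamma \ln 2 \tag{17}$$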

Corollary 2. The anti-jamming $N_T \times N_R$ NOMA transmission game $G$ with $M = 2$ users has a unique SE $(\theta_1^*, p_J^*)$ given by (12)-(13) if $h_{1,i}^B h_{2,i}^J < h_{1,i}^J h_{2,i}^B$, $\forall 1 \le i \le N_R$, and (14) holds.

Proof. See Appendix C.

As a concrete example, the utility of the BS in Fig. 2 is maximized if the rate demand of the weak user is satisfied under full jamming power (i.e., (12)). Moreover, the feasible allocation coefficient for the strong user increases with the total transmission power $P_T$ and the jamming power at the SE decreases with it.

Figure 3 presents the jamming power and the sum data rate of 2 users in terms of the channel power gain of User 1, $\sigma_{B,1}$. More specifically, the average SINR of users increases with $\sigma_{B,1}$ and the number of transmission antennas $N_T$, because the jammer will fail to interrupt the user experience and decrease the jamming power to reduce the cost. Therefore, the sum data rate of the $M$ users improves with $\sigma_{B,1}$ and $N_T$.

Corollary 3. The anti-jamming $N_T \times N_R$ NOMA transmission game $G$ with $M = 2$ users has a unique SE $(\theta_1^*, p_J^*)$ given by (15)-(16) if $h_{1,i}^B h_{2,i}^J > h_{1,i}^J h_{2,i}^B + \left(h_{2,i}^B - h_{1,i}^B\right) N_J / p_J^*$, $\forall 1 \le i \le N_R$, and (17) holds.

Proof. See Appendix D.

If the jamming channel to the stronger user (i.e., User 2) is strong, as shown in the given condition, the sum data rate increases with more power allocated for the weak user (i.e., User 1). On the other hand, the smart jammer, as a follower observing the ongoing transmission, will apply its full jamming power to interrupt the communication of the stronger user if its transmit power is not high enough as in (15).

[Figure 2 plots: utility of the BS versus the power allocation coefficient and the jamming power, with the SE marked on each surface. Panels: (a) $P_T = 20$ W, (b) $P_T = 40$ W.]

Fig. 2. Performance of the anti-jamming transmission game for the MIMO NOMA system with $M = 2$, $N_T = N_R = 3$, $N_J = 2$, $\sigma_{B,1} = 10$ dB, $\sigma_{B,2} = 20$ dB, $\sigma_{J,1} = \sigma_{J,2} = 12$ dB, $P_J = 20$ W, $R_0 = 1$ bps/Hz and $\gamma = 0.5$.

V. NOMA POWER ALLOCATION BASED ON HOTBOOTING Q-LEARNING

The repeated interactions between a BS and a smart jammer can be formulated as a dynamic anti-jamming NOMA transmission game. The optimal downlink power allocation depends on the locations of the users, the radio channel states and the jamming parameters in the time slot, which are challenging for a BS to accurately estimate. In addition, the power allocation strategy of the BS has an impact on the jamming strategy and the received SINR of the users and thus the anti-jamming NOMA transmission process can be formulated as a finite Markov decision process. Therefore, the NOMA system can apply Q-learning, a widely-used model-free reinforcement learning technique, in the power allocation without being aware of the instantaneous channel state information at the base station. Instead of relying on the knowledge of the instantaneous channel state information, this NOMA system uses the statistical channel state information and the transmission experience to determine the power allocation.

The proposed power allocation depends on the quality function or Q-function, i.e., the discounted expected reward for each state-action pair. The state at time $k$ that reflects the environment dynamics, denoted by $\mathbf{s}^k$, is chosen as the last received SINR of each user denoted by $\mathrm{SINR}_m^{k-1}$, $1 \le m \le M$, i.e., $\mathbf{s}^k = [\mathrm{SINR}_m^{k-1}]_{1 \le m \le M} \in \xi$, where $\xi$ is the space of all the possible SINR vectors. More specifically, each user quantizes the received SINR into one of $L$ levels for simplicity, and sends back the quantized value to the BS as the observed state for the next time slot. Therefore, the BS uses the resulting vector of $M$ quantized SINR values as feedback information to update its Q function in the Q-learning based NOMA system.

[Figure 3 plots: horizontal axis is the channel gain of User 1, $\sigma_{B,1}$ (dB), from 4 to 12; curves for $\sigma_{B,2} = 18$, $19$ and $20$ dB with $N_T = N_R = 4$ and $N_T = N_R = 6$. Panels: (a) Average SINR of users, (b) Sum capacity of users.]

Fig. 3. Performance of the anti-jamming transmission game for the MIMO NOMA system at the SE with $M = 2$, $N_J = 3$, $\sigma_{J,1} = \sigma_{J,2} = 12$ dB, $P_T = P_J = 20$ W, $R_0 = 1$ bps/Hz and $\gamma = 0.5$.

For simplicity, the feasible action set of the BS in the power allocation, i.e., each of the transmit power coefficients, is quantized into $L$ non-zero levels denoted by $\theta_m^k \in \{l/L\}_{0 \le l \le L}$, $\forall 1 \le m \le M$, and the power allocation strategy of the BS is denoted by $\boldsymbol{\theta}^k = [\theta_m^k]_{1 \le m \le M} \in \Omega$. The transmit signal of the $M$ users is sent at the power allocation given by $\boldsymbol{\theta}^k$.

Note that the random exploration of standard Q-learning at the beginning of the game due to the all-zero Q-value initialization usually requires exponentially more data than the optimal policy [34]. Therefore, we propose a hotbooting technique, which initializes the Q-value based on the training data obtained in advance from large-scale experiments in similar scenarios. As we will see, the hotbooting Q-learning based power allocation can decrease the useless random explorations in an initial environment and thus accelerate the learning speed of the Q-learning scheme in the dynamic game and improve the communication efficiency of the anti-jamming NOMA transmission.

Algorithm 1: Hotbooting preparation
1: $Q(\mathbf{s}, \boldsymbol{\theta}) = 0$, $V(\mathbf{s}) = 0$, $\forall \mathbf{s} \in \xi$, $\forall \boldsymbol{\theta} \in \Omega$, and $\mathbf{s}^0 = \mathbf{0}$
2: for $i = 1, 2, \cdots, I$ do
3:   Emulate a similar environment
4:   for $k = 1, 2, \cdots, K$ do
5:     Choose action $\boldsymbol{\theta}^k$ at random
6:     Obtain the utility $u^k$ and the received SINR of each user $\mathrm{SINR}_m^k$, $1 \le m \le M$
7:     $\mathbf{s}^{k+1} = [\mathrm{SINR}_m^k]_{1 \le m \le M}$
8:     $Q^*(\mathbf{s}^k, \boldsymbol{\theta}^k) = (1 - \alpha) Q^*(\mathbf{s}^k, \boldsymbol{\theta}^k) + \alpha\left(u^k + \delta V^*(\mathbf{s}^{k+1})\right)$
9:     $V^*(\mathbf{s}^k) = \max_{\boldsymbol{\theta} \in \Omega} Q^*(\mathbf{s}^k, \boldsymbol{\theta})$
10:   end for
11: end for

The preparation stage of the hotbooting technique as presented in Algorithm 1 performs $I$ anti-jamming MIMO NOMA transmission experiments before the start of the game. The number of experiments $I$ is chosen as a tradeoff between the convergence rate and the risk of overfitting in several special scenarios. In each experiment, a BS randomly selects the transmit power for $M$ users at a given state in similar radio transmission scenarios against jammers and observes the resulting transmission status and the received utility. Let $Q(\mathbf{s}, \boldsymbol{\theta})$ denote the quality or Q function of the BS for system state $\mathbf{s}$ and action $\boldsymbol{\theta}$, which is the expected discounted long-term reward observed by the BS. The discount factor denoted by $\delta \in [0, 1]$ is chosen according to the uncertainty regarding the future gains. As shown in Algorithm 2, at each time slot, the BS updates both the Q function and the value function, given by

$$Q\left(\mathbf{s}^k, \boldsymbol{\theta}^k\right) \leftarrow (1 - \alpha) Q\left(\mathbf{s}^k, \boldsymbol{\theta}^k\right) + \alpha\left(u\left(\mathbf{s}^k, \boldsymbol{\theta}^k\right) + \delta V\left(\mathbf{s}^{k+1}\right)\right), \tag{20}$$

where the learning rate $\alpha \in (0, 1]$ represents the weight of the current experience in the learning process. The value function $V(\mathbf{s})$ is the maximum of $Q(\mathbf{s}, \boldsymbol{\theta})$ over all the available power allocation vectors, given by

$$V\left(\mathbf{s}^k\right) = \max_{\boldsymbol{\theta} \in \Omega} Q\left(\mathbf{s}^k, \boldsymbol{\theta}\right). \tag{21}$$

The resulting Q-function denoted by $Q^*$ will be used to initialize the Q-value in the hotbooting Q-learning based scheme.
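The update rule (20)-(21) and the preparation loop of Algorithm 1 can be sketched in Python as follows. This is a minimal tabular sketch, assuming states and actions are hashable values (for example, tuples of quantized SINR levels and of power coefficients). The factory `make_env` and the `step` callback it returns are hypothetical stand-ins for an emulated NOMA environment and are not defined in the paper.

```python
import random
from collections import defaultdict

def q_update(q, v, state, action, reward, next_state, actions, alpha, delta):
    """Apply Eq. (20) to Q(state, action), then Eq. (21) to V(state)."""
    target = reward + delta * v[next_state]
    q[(state, action)] = (1 - alpha) * q[(state, action)] + alpha * target
    v[state] = max(q[(state, a)] for a in actions)

def hotbooting_preparation(make_env, actions, n_envs, n_slots, alpha, delta, seed=0):
    """Algorithm 1 sketch: learn initial Q-values from random actions.

    make_env(i) is a hypothetical factory returning (initial_state, step),
    where step(state, action) -> (reward, next_state).
    """
    rng = random.Random(seed)
    q, v = defaultdict(float), defaultdict(float)  # all-zero initialization
    for i in range(n_envs):
        state, step = make_env(i)
        for _ in range(n_slots):
            action = rng.choice(actions)  # purely random exploration
            reward, next_state = step(state, action)
            q_update(q, v, state, action, reward, next_state, actions, alpha, delta)
            state = next_state
    return q, v
```

The returned tables play the role of $Q^*$ and $V^*$ and can be copied into the online learner as its initial values.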

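During the learning process as summarized in Algorithm 2, the tradeoff between exploitation and exploration has an important impact on the convergence performance. Therefore, according to the $\varepsilon$-greedy policy, the power allocation coefficient $\boldsymbol{\theta}^k$ is chosen based on the system state $\mathbf{s}^k$ and the Q-function and is given by

$$\Pr\left(\boldsymbol{\theta} = \hat{\boldsymbol{\theta}}\right) = \begin{cases} 1 - \varepsilon, & \hat{\boldsymbol{\theta}} = \arg\max_{\boldsymbol{\theta}' \in \Omega} Q\left(\mathbf{s}^k, \boldsymbol{\theta}'\right) \\ \dfrac{\varepsilon}{|\Omega| - 1}, & \text{o.w.} \end{cases} \tag{22}$$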
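A minimal sketch of the selection rule (22) is given below, assuming the Q-values are stored in a dictionary keyed by `(state, action)` with missing entries treated as zero. The function name `epsilon_greedy` and its arguments are illustrative.

```python
import random

def epsilon_greedy(q, state, actions, epsilon, rng=random):
    """Pick the greedy action with probability 1 - epsilon; otherwise pick one
    of the remaining |actions| - 1 actions uniformly, as in Eq. (22)."""
    greedy = max(actions, key=lambda a: q.get((state, a), 0.0))
    others = [a for a in actions if a != greedy]
    if others and rng.random() < epsilon:
        return rng.choice(others)
    return greedy
```

Drawing the exploratory action only from the non-greedy actions gives each of them probability $\varepsilon / (|\Omega| - 1)$, which matches the second case of (22).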

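Algorithm 2: Hotbooting Q-learning based NOMA power allocation
1: Set $Q(\mathbf{s}, \boldsymbol{\theta}) = Q^*(\mathbf{s}, \boldsymbol{\theta})$, $\forall \mathbf{s} \in \xi$, $\forall \boldsymbol{\theta} \in \Omega$, and $\mathbf{s}^0 = \mathbf{0}$
2: for $k = 1, 2, 3, \ldots$ do
3:   Choose $\boldsymbol{\theta}^k$ via (22)
4:   for $m = 1, 2, \ldots, M$ do
5:     Allocate power $\theta_m^k P_T$ for the signal to User $m$
6:   end for
7:   Send the superimposed signal $\mathbf{x}^k$ over $N_T$ antennas
8:   Observe $\mathrm{SINR}_m^k$, $1 \le m \le M$, and the utility $u^k$
9:   $\mathbf{s}^{k+1} = [\mathrm{SINR}_m^k]_{1 \le m \le M}$
10:   Update $Q(\mathbf{s}^k, \boldsymbol{\theta}^k)$ via (20)
11:   Update $V(\mathbf{s}^k)$ via (21)
12: end for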

The BS with our proposed RL based power allocation scheme does not know the instantaneous channel state information, the jamming power and the jamming channel gains, which are required by the global optimization algorithm such as [35]. In this way, the BS learns the jamming strategy according to the anti-jamming NOMA transmission history and derives the optimal transmit power allocation strategy that improves the long-term anti-jamming communication efficiency.

However, the convergence complexity of Algorithm 2 depends on the size of the action-state space $|\xi \times \Omega|$, which increases exponentially with the number of users and the quantization levels of the power allocation coefficients. The channel time variation, on the other hand, requires a much faster learning rate of the power allocation. Therefore, we further propose a fast Q-learning based power allocation scheme that combines the hotbooting technique and the Dyna architecture to improve the communication efficiency of Algorithm 2 in dynamic radio environments in the following section.
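The growth of $|\xi \times \Omega|$ can be made concrete with a short calculation. The sketch below assumes that each user's SINR is quantized into `sinr_levels` levels and each power allocation coefficient into `power_levels` levels, and it ignores any constraint that links the coefficients, so the result is an upper bound. The function name and the level counts are hypothetical.

```python
def table_size(num_users, sinr_levels, power_levels):
    """Upper bound on |xi x Omega| under per-user quantization."""
    num_states = sinr_levels ** num_users    # one quantized SINR per user
    num_actions = power_levels ** num_users  # one quantized coefficient per user
    return num_states * num_actions


# Hypothetical quantization: 8 SINR levels and 5 power levels per user.
for m in (2, 3, 4):
    print(m, table_size(m, sinr_levels=8, power_levels=5))
```

With these assumed levels, each additional user multiplies the table size by 40, which is why the plain tabular scheme converges slowly for larger $M$.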

VI. NOMA POWER ALLOCATION BASED ON FAST Q-LEARNING

The Dyna architecture formulates a learned world model from the real anti-jamming NOMA transmission experiences to accelerate the learning speed of Q-learning in dynamic radio environments. More specifically, we apply the Dyna architecture that emulates the planning and reactions from hypothetical experiences to improve the anti-jamming efficiency in the fast Q-learning based NOMA power allocation algorithm. The algorithm is summarized in Algorithm 3, and the main structure is illustrated in Fig. 4.

The fast Q-learning power allocation algorithm also applies Algorithm 1 to initialize the Q values with the hotbooting technique and updates the Q functions via (20) and (21), similarly to Algorithm 2, according to the NOMA transmission result in each time slot. The BS observes the received SINR of each user in the last time slot, denoted by $[\mathrm{SINR}_m^{k-1}]_{1 \le m \le M}$, as the state $\mathbf{s}^k$, i.e., $\mathbf{s}^k = [\mathrm{SINR}_m^{k-1}]_{1 \le m \le M}$. The power allocation strategy $\boldsymbol{\theta}^k$ is also chosen according to the system state and the Q-function based on the ε-greedy policy as shown in (22).


Fig. 4. Illustration of the fast Q-based NOMA power allocation scheme.

[Figure: block diagram. The BS selects an action with the ε-greedy policy, sends the superimposed signal $\mathbf{x}^k$ over the wireless network with jammers, observes $[\mathrm{SINR}_m^k]_{1 \le m \le M}$, evaluates the reward $u^k$ from the real experience, and updates the Q values. The real experience $(\mathbf{s}^k, \mathbf{s}^{k+1}, u^k)$ is recorded and used by the Dyna architecture to generate $D$ hypothetical experiences. The Q values are initialized by training in similar NOMA transmission environments.]

Meanwhile, the Dyna-Q based algorithm also uses the real experiences of the NOMA transmission at time $k$ to build a hypothetical anti-jamming NOMA transmission model. More specifically, the real experience of the NOMA transmission at time $k$ is saved as an experience record for the corresponding state-action pair, i.e., $(\mathbf{s}^k, \boldsymbol{\theta}^k)$. The experience record stored at the BS consists of the occurrence counter vector of the state-action pairs denoted by $\Phi$, the occurrence counter vector of the next state denoted by $\Phi'$, the reward record $\tau'$, and the modeled reward $\tau$. The hypothetical experience is generated according to the real experience $(\Phi', \Phi, \tau', \tau, \Psi)$. As shown in Algorithm 3, the counter vector of the next state $\Phi'$ is updated according to the anti-jamming NOMA transmission at time $k$, i.e.,

$$\Phi'(\mathbf{s}^k, \boldsymbol{\theta}^k, \mathbf{s}^{k+1}) = \Phi'(\mathbf{s}^k, \boldsymbol{\theta}^k, \mathbf{s}^{k+1}) + 1. \tag{23}$$

The occurrence counter vector in the hypothetical experience, $\Phi$, defined as the sum of $\Phi'$ over all the feasible next states, is then updated with

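$$\Phi(\mathbf{s}^k, \boldsymbol{\theta}^k) = \sum_{\mathbf{s}^{k+1} \in \xi} \Phi'(\mathbf{s}^k, \boldsymbol{\theta}^k, \mathbf{s}^{k+1}). \tag{24}$$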

The reward record from the real experience $\tau'$ in this time slot is the utility of the BS at time $k$, given by

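$$\tau'\left(\mathbf{s}^k, \boldsymbol{\theta}^k, \Phi(\mathbf{s}^k, \boldsymbol{\theta}^k)\right) = u(\mathbf{s}^k, \boldsymbol{\theta}^k). \tag{25}$$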

Based on the reward record $\tau'$ in (25) and the state transition from $\mathbf{s}^k$ to $\mathbf{s}^{k+1}$, we can formulate a hypothetical anti-jamming NOMA transmission model to generate several hypothetical NOMA transmission experiences, in which the reward to the BS denoted by $\tau$ is defined as the utility of the BS averaged over all the previous real experiences and is given by

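$$\tau(\mathbf{s}^k, \boldsymbol{\theta}^k) = \frac{1}{\Phi(\mathbf{s}^k, \boldsymbol{\theta}^k)} \sum_{n=1}^{\Phi(\mathbf{s}^k, \boldsymbol{\theta}^k)} \tau'(\mathbf{s}^k, \boldsymbol{\theta}^k, n). \tag{26}$$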

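Algorithm 3: NOMA power allocation with fast Q-learning

1: Set $Q(\mathbf{s}, \boldsymbol{\theta}) = Q^*(\mathbf{s}, \boldsymbol{\theta})$, $\forall \mathbf{s} \in \xi$, $\forall \boldsymbol{\theta} \in \Omega$, and $\mathbf{s}^0 = \mathbf{0}$
2: for $k = 1, 2, 3, \ldots$ do
3:   Choose $\boldsymbol{\theta}^k$ via (22)
4:   for $m = 1, 2, \ldots, M$ do
5:     Allocate power $\theta_m^k P_T$ for the signal to User $m$
6:   end for
7:   $\boldsymbol{\theta}^k = [\theta_m^k]_{1 \le m \le M}$
8:   Send $\mathbf{x}^k$ according to $\boldsymbol{\theta}^k$ over $N_T$ antennas
9:   Observe $[\mathrm{SINR}_m^k]_{1 \le m \le M}$ and the utility $u^k$
10:  $\mathbf{s}^{k+1} = [\mathrm{SINR}_m^k]_{1 \le m \le M}$
11:  Update $Q(\mathbf{s}^k, \boldsymbol{\theta}^k)$ via (20)
12:  Update $V(\mathbf{s}^k)$ via (21)
13:  Calculate $\Phi'(\mathbf{s}^k, \boldsymbol{\theta}^k, \mathbf{s}^{k+1})$ via (23)
14:  Calculate $\Phi(\mathbf{s}^k, \boldsymbol{\theta}^k)$ via (24)
15:  Calculate $\Psi(\mathbf{s}^k, \boldsymbol{\theta}^k, \mathbf{s}^{k+1})$ via (27)
16:  Calculate $\tau'(\mathbf{s}^k, \boldsymbol{\theta}^k, \Phi(\mathbf{s}^k, \boldsymbol{\theta}^k))$ via (25)
17:  Randomly select $\hat{\mathbf{s}}^0 \in \xi$ and $\hat{\boldsymbol{\theta}}^0 \in \Omega$
18:  for $d = 1$ to $D$ do
19:    Select the next state $\hat{\mathbf{s}}^{d+1}$ according to (28)
20:    Obtain $\tau(\hat{\mathbf{s}}^d, \hat{\boldsymbol{\theta}}^d)$ via (26)
21:    Update $Q(\hat{\mathbf{s}}^d, \hat{\boldsymbol{\theta}}^d)$ via (29)
22:    Update $V(\hat{\mathbf{s}}^d)$ via (30)
23:  end for
24: end for

The transition probability from $\mathbf{s}^k$ to $\mathbf{s}^{k+1}$ in the hypothetical experience, denoted by $\Psi$, is given by (23) and (24) as

$$\Psi(\mathbf{s}^k, \boldsymbol{\theta}^k, \mathbf{s}^{k+1}) = \frac{\Phi'(\mathbf{s}^k, \boldsymbol{\theta}^k, \mathbf{s}^{k+1})}{\Phi(\mathbf{s}^k, \boldsymbol{\theta}^k)}. \tag{27}$$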
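The experience record in (23)-(27) can be sketched as a small bookkeeping class. This is an illustrative sketch only: the class name `WorldModel`, its method names, and the use of integer state and action indices are assumptions, and returning 0.0 for state-action pairs that have never been observed is a choice made here, not something specified in the paper.

```python
from collections import defaultdict


class WorldModel:
    """Counters and reward records of the learned world model."""

    def __init__(self):
        self.phi_next = defaultdict(int)   # Phi'(s, a, s_next)
        self.phi = defaultdict(int)        # Phi(s, a)
        self.rewards = defaultdict(list)   # tau'(s, a, n) for n = 1..Phi(s, a)

    def record(self, s, a, u, s_next):
        """Store one real experience."""
        self.phi_next[(s, a, s_next)] += 1   # (23)
        # (24): each record increments exactly one Phi' entry of (s, a),
        # so adding 1 keeps Phi equal to the sum of Phi' over next states.
        self.phi[(s, a)] += 1
        self.rewards[(s, a)].append(u)       # (25)

    def tau(self, s, a):
        """Modeled reward (26): average utility over the real experiences."""
        record = self.rewards.get((s, a), [])
        return sum(record) / len(record) if record else 0.0

    def psi(self, s, a, s_next):
        """Estimated transition probability (27)."""
        total = self.phi.get((s, a), 0)
        if total == 0:
            return 0.0
        return self.phi_next.get((s, a, s_next), 0) / total


# Three hypothetical real experiences for the same state-action pair.
model = WorldModel()
model.record(s=0, a=1, u=2.0, s_next=2)
model.record(s=0, a=1, u=4.0, s_next=3)
model.record(s=0, a=1, u=3.0, s_next=2)
```

After these three records, the modeled reward for the pair (0, 1) is the mean of the three utilities, and the estimated transition probabilities to states 2 and 3 are 2/3 and 1/3.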

The Q function is updated $D$ more times with the Dyna architecture, in which $D$ hypothetical experiences are generated according to the world model $\Psi$ learned from the previous real experience $(\Phi', \Phi, \tau', \tau, \Psi)$. More specifically, the system state denoted by $\hat{\mathbf{s}}^d$ and the hypothetical action denoted by $\hat{\boldsymbol{\theta}}^d$ in the $d$-th additional update are generated based on the transition probability $\Psi(\hat{\mathbf{s}}^d, \hat{\boldsymbol{\theta}}^d, \hat{\mathbf{s}}^{d+1})$ given in (27), i.e.,

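$$\Pr\left(\hat{\mathbf{s}}^{d+1} \mid \hat{\mathbf{s}}^d, \hat{\boldsymbol{\theta}}^d\right) = \Psi(\hat{\mathbf{s}}^d, \hat{\boldsymbol{\theta}}^d, \hat{\mathbf{s}}^{d+1}), \tag{28}$$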

where $(\hat{\mathbf{s}}^0, \hat{\boldsymbol{\theta}}^0)$ is randomly chosen from $\xi$ and $\Omega$, respectively.

The Q function is updated in the $d$-th hypothetical experience with $d = 1, 2, \cdots, D$ by the following:

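$$Q(\hat{\mathbf{s}}^d, \hat{\boldsymbol{\theta}}^d) \leftarrow (1-\alpha)\, Q(\hat{\mathbf{s}}^d, \hat{\boldsymbol{\theta}}^d) + \alpha \left( \tau(\hat{\mathbf{s}}^d, \hat{\boldsymbol{\theta}}^d) + \delta V(\hat{\mathbf{s}}^{d+1}) \right) \tag{29}$$

$$V(\hat{\mathbf{s}}^d) = \max_{\hat{\boldsymbol{\theta}} \in \Omega} Q(\hat{\mathbf{s}}^d, \hat{\boldsymbol{\theta}}). \tag{30}$$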
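The $D$ additional updates in (28)-(30) can be sketched as follows. The sketch takes the modeled reward and the transition probabilities as plain dictionaries. Two points are assumptions of this sketch rather than statements of the paper: the starting pair is drawn only from pairs that already have a real experience, and after each step the chain continues from the sampled next state with a random action previously tried there (restarting from a random recorded pair when none exists). All names and numbers are hypothetical.

```python
import random


def planning_updates(Q, V, tau, psi, D, alpha=0.2, delta=0.7, rng=random):
    """Run D hypothetical updates of Q and V in place.

    tau[(s, a)] is the modeled reward (26); psi[(s, a)] maps each next
    state to its estimated transition probability (27).
    """
    seen = sorted(tau)              # pairs with at least one real experience
    s, a = rng.choice(seen)
    for _ in range(D):
        next_states = list(psi[(s, a)])
        weights = [psi[(s, a)][n] for n in next_states]
        s_next = rng.choices(next_states, weights=weights, k=1)[0]   # (28)
        target = tau[(s, a)] + delta * V[s_next]
        Q[s][a] = (1.0 - alpha) * Q[s][a] + alpha * target           # (29)
        V[s] = max(Q[s])                                             # (30)
        # Assumption: continue from s_next with an action tried there before,
        # otherwise restart from a random recorded pair.
        candidates = [pair for pair in seen if pair[0] == s_next]
        s, a = rng.choice(candidates) if candidates else rng.choice(seen)
    return Q, V


# Hypothetical model with a single recorded state-action pair (0, 1).
Q = [[0.0, 0.0] for _ in range(3)]
V = [0.0, 0.0, 0.0]
tau = {(0, 1): 3.0}
psi = {(0, 1): {2: 2.0 / 3.0, 1: 1.0 / 3.0}}
planning_updates(Q, V, tau, psi, D=5, rng=random.Random(0))
```

Because the values of states 1 and 2 stay at zero in this toy model, each of the five updates moves $Q(0, 1)$ a fraction $\alpha$ of the way toward the modeled reward 3.0.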

As shown in Algorithm 3, the proposed NOMA power allocation algorithm requires additional memory in each time slot to store the experience record $(\Phi', \Phi, \tau', \tau, \Psi)$. Compared with the standard Q-learning based power allocation in Algorithm 2, this scheme has $D$ more updates of the Q functions. However, the proposed fast Q-based power allocation algorithm applies the Dyna architecture to increase the convergence speed of the reinforcement learning process and thus improve the NOMA communication efficiency in the presence of smart jamming.

VII. SIMULATION RESULTS

In this section, we evaluate the performance of the MIMO NOMA power allocation scheme for $M = 3$ users in the dynamic anti-jamming communication game via simulations. If not specified otherwise, we set $N_T = N_R = 5$, $\sigma_{B,1} = 10$ dB, $\sigma_{B,2} = 11$ dB, $\sigma_{B,3} = 20$ dB, $P_T = 20$ W, $R_0 = 1$ bps/Hz, $\gamma = 0.5$, $\alpha = 0.2$, $\delta = 0.7$, $\varepsilon = 0.9$ and $I = 200$. In the simulations, a smart jammer applies Q-learning to determine the jamming power according to the current downlink transmit power with $N_J = 3$, $\sigma_{J,1} = \sigma_{J,2} = 11$ dB, $\sigma_{J,3} = 12$ dB, and $P_J = 20$ W.
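For readers who want to reproduce the setup, the default parameters above can be collected in one place, as in the sketch below. The dictionary keys and the helper `db_to_linear` are hypothetical names. Converting the channel gains with $10^{x/10}$ assumes they are power gains expressed in dB, which the text does not state explicitly.

```python
# Default simulation parameters as listed in the text.
SIM = {
    "M": 3,                               # number of users
    "N_T": 5, "N_R": 5, "N_J": 3,         # transmit, receive, jamming antennas
    "sigma_B_dB": [10.0, 11.0, 20.0],     # BS-user channel gains
    "sigma_J_dB": [11.0, 11.0, 12.0],     # jammer-user channel gains
    "P_T_W": 20.0, "P_J_W": 20.0,         # maximum transmit and jamming power
    "R0_bps_per_Hz": 1.0,                 # minimum data rate
    "gamma": 0.5,                         # jamming cost coefficient
    "alpha": 0.2, "delta": 0.7,           # learning rate, discount factor
    "epsilon": 0.9,                       # value used in the policy (22)
    "I": 200,                             # hotbooting experiments
}


def db_to_linear(x_db):
    """Convert a power gain in dB to linear scale (assumed convention)."""
    return 10.0 ** (x_db / 10.0)


sigma_B_linear = [db_to_linear(x) for x in SIM["sigma_B_dB"]]
```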

As shown in Fig. 5, the Q-learning based power allocation scheme has significantly increased the jamming resistance of NOMA transmissions, which can be further improved by the hotbooting technique and the Dyna architecture. In the benchmark Q-learning based OMA system, the BS equally allocates the frequency and time resources to each user and chooses the transmit power according to the current state and the Q function, similarly to Algorithm 2.

According to [36], the NOMA transmission outperforms OMA with a lower outage probability, which is verified by the simulation results in Fig. 5. More specifically, the Q-learning based NOMA system can improve the communication efficiency against jamming attacks compared to the OMA system. For instance, the Q-learning based anti-jamming NOMA system outperforms the Q-learning based OMA system with 8.7% higher SINR, 42.8% higher sum data rate, and 7.1% higher utility of the BS at the 1000-th time slot.

The NOMA transmission performance can be further improved by the hotbooting technique. For instance, the NOMA system with hotbooting Q-learning achieves 12% higher SINR, 10% higher sum data rate, and 9% higher utility at the 1000-th time slot. The reason is that the hotbooting technique can formulate the emulated experiences in dynamic radio environments to effectively reduce the exploration trials and thus significantly improve the convergence speed of Q-learning and the jamming resistance efficiency. The power allocation performance can be further improved by the Dyna architecture with hypothetical experiences. For instance, the average SINR, the sum data rate, and the utility of the proposed fast Q-learning algorithm are 11%, 10%, and 7% higher at the 1000-th time slot, compared with the hotbooting Q-learning scheme.

The performance of the proposed power allocation scheme in the 5 × 5 MIMO NOMA system with 2 users is evaluated with the varying channel gains of User 1 in Fig. 6. Both the sum capacity of users and the utility of the BS increase with the channel gain $\sigma_{B,1}$. For instance, as the channel gain $\sigma_{B,1}$ increases from 4 to 12 dB, the average SINR, the sum data rate and the utility of the Q-learning based power allocation increase by 9%, 14% and 17%, respectively. If $\sigma_{B,1} = 12$ dB, the fast Q-based power allocation achieves the best anti-jamming communication performance, exceeding the standard Q-learning based strategy with 9% higher SINR, 14% higher sum capacity, and 22% higher utility.

Fig. 5. Performance of the power control schemes in the dynamic MIMO NOMA transmission game in the presence of smart jamming, with $M = 3$, $N_T = N_R = 5$, $N_J = 3$, $\sigma_{B,1} = 10$ dB, $\sigma_{B,2} = 11$ dB, $\sigma_{B,3} = 20$ dB, $\sigma_{J,1} = \sigma_{J,2} = 11$ dB, $\sigma_{J,3} = 12$ dB, $P_T = P_J = 20$ W, $R_0 = 1$ bps/Hz and $\gamma = 0.5$.

[Figure: three panels versus the time slot (0 to 5000): (a) Average SINR of users, (b) Sum data rates, (c) Utility of the BS. Curves: NOMA with Q-learning, NOMA with Hotbooting Q, NOMA with Dyna-Q, NOMA with Fast Q, OMA with Q-learning.]

Fig. 6. Performance of the power control schemes in the dynamic MIMO NOMA transmission game in the presence of smart jamming versus the channel gain of User 1, with $M = 2$, $N_T = N_R = 5$, $N_J = 3$, $\sigma_{J,1} = \sigma_{J,2} = 12$ dB, $P_T = P_J = 20$ W, $R_0 = 2$ bps/Hz and $\gamma = 0.5$.

[Figure: three panels versus the channel gain of User 1, $\sigma_{B,1}$ (dB): (a) Average SINR of users, (b) Sum data rates, (c) Utility of the BS. Curves for $\sigma_{B,2} = 20$ dB and $\sigma_{B,2} = 24$ dB: NOMA with Q-learning, NOMA with Hotbooting Q, NOMA with Dyna-Q, NOMA with Fast Q, OMA with Q-learning.]

Fig. 7. Performance of the power control schemes in the dynamic MIMO NOMA transmission game in the presence of smart jamming versus the number of the RX antennas, with $M = 2$, $N_T = 10$, $\sigma_{B,1} = 12$ dB, $\sigma_{B,2} = 20$ dB, $\sigma_{J,1} = \sigma_{J,2} = 11$ dB, $P_T = P_J = 20$ W, $R_0 = 2$ bps/Hz and $\gamma = 0.5$.

[Figure: three panels versus the number of RX antennas, $N_R$ (4 to 8): (a) Average SINR of users, (b) Sum data rates, (c) Utility of the BS. Curves for $N_J = 2$ and $N_J = 6$: NOMA with Q-learning, NOMA with Hotbooting Q, NOMA with Dyna-Q, NOMA with Fast Q, OMA with Q-learning.]

Fig. 7 shows that the NOMA transmission efficiency improves with the number of the receive antennas with $N_T = 10$ transmit antennas. The proposed power allocation schemes have strong resistance against smart jamming even with a large number of jamming antennas $N_J$. For instance, our proposed scheme can improve the average SINR, the sum data rate and the user utility of the 10 × 8 NOMA system against a jammer with 2 antennas by 10%, 12%, and 15%, respectively, compared with the benchmark strategy.

VIII. CONCLUSION

In this paper, we have formulated an anti-jamming MIMO NOMA transmission game, in which the BS determines the transmit power to improve its utility based on the sum data rate of the users, and the smart jammer as the follower in the Stackelberg game chooses the jamming power in an attempt to interrupt the ongoing transmission at a low jamming cost. The SE of the game has been derived, and the conditions assuring its existence have been provided, showing how the anti-jamming communication efficiency of NOMA systems increases with the number of antennas and the channel power gains. A fast Q-based NOMA power allocation scheme that combines the hotbooting technique and the Dyna architecture has been proposed for a dynamic game to accelerate the learning and thus improve the communication efficiency against smart jamming. As shown in the simulation results, the proposed NOMA power allocation scheme can significantly improve the average SINR, the sum data rate and the utility of the BS soon after the start of the game. For example, the sum data rate of 3 users in the 5 × 5 MIMO NOMA anti-jamming transmission game increases by 128% after 1000 time slots, which is 21% higher than that of the standard Q-learning based scheme.

We have analyzed a simplified jamming scenario for MIMO NOMA systems in this work. A future direction of our work is to extend the theoretical analysis of the NOMA transmission to more practical scenarios with smart jamming, in which a jammer uses programmable radio devices to flexibly choose multiple jamming policies.

REFERENCES

[1] L. Dai, B. Wang, Y. Yuan, S. Han, I. Chih-Lin, and Z. Wang, “Non-orthogonal multiple access for 5G: Solutions, challenges, opportunities, and future research trends,” IEEE Commun. Mag., vol. 53, no. 9, pp. 74–81, Sept. 2015.

[2] B. Kim, S. Lim, H. Kim, et al., “Non-orthogonal multiple access in a downlink multiuser beamforming system,” in Proc. IEEE Military Commun. Conf. (MILCOM), pp. 1278–1283, San Diego, CA, Nov. 2013.

[3] Z. Ding, L. Dai, and H. V. Poor, “MIMO-NOMA design for small packet transmission in the Internet of Things,” IEEE Access, vol. 4, pp. 1393–1405, Apr. 2016.

[4] M. F. Hanif, Z. Ding, T. Ratnarajah, and G. K. Karagiannidis, “A minorization-maximization method for optimizing sum rate in the downlink of non-orthogonal multiple access systems,” IEEE Trans. Signal Process., vol. 64, no. 1, pp. 76–88, Jan. 2016.

[5] Z. Ding, R. Schober, and H. V. Poor, “A general MIMO framework for NOMA downlink and uplink transmission based on signal alignment,” IEEE Trans. Wireless Commun., vol. 15, no. 6, pp. 4438–4454, June 2016.

[6] K. Firouzbakht, G. Noubir, and M. Salehi, “On the performance of adaptive packetized wireless communication links under jamming,” IEEE Trans. Wireless Commun., vol. 13, no. 7, pp. 3481–3495, July 2014.

[7] Q. Wang, K. Ren, P. Ning, and S. Hu, “Jamming-resistant multiradio multichannel opportunistic spectrum access in cognitive radio networks,” IEEE Trans. Veh. Techn., vol. 65, no. 10, pp. 8331–8344, Oct. 2016.

[8] Q. Yan, H. Zeng, T. Jiang, et al., “Jamming resilient communication using MIMO interference cancellation,” IEEE Trans. Inf. Forensics and Security, vol. 11, no. 7, pp. 1486–1499, July 2016.

[9] X. Zhou, D. Niyato, and A. Hjorungnes, “Optimizing training-based transmission against smart jamming,” IEEE Trans. Veh. Techn., vol. 60, no. 6, pp. 2644–2655, July 2011.

[10] A. Mukherjee and A. L. Swindlehurst, “Jamming games in the MIMO wiretap channel with an active eavesdropper,” IEEE Trans. Signal Process., vol. 61, no. 1, pp. 82–91, Jan. 2013.

[11] A. Garnaev, Y. Liu, and W. Trappe, “Anti-jamming strategy versus a low-power jamming attack when intelligence of adversary’s attack type is unknown,” IEEE Trans. Signal Inf. Process. Netw., vol. 2, no. 1, pp. 49–56, Mar. 2016.

[12] Y. Gwon, S. Dastangoo, C. Fossa, and H. T. Kung, “Competing mobile network game: Embracing antijamming and jamming strategies with reinforcement learning,” in Proc. IEEE Conf. Commun. and Netw. Security (CNS), pp. 28–36, Washington, DC, Oct. 2013.

[13] F. Slimeni, B. Scheers, Z. Chtourou, and V. L. Nir, “Jamming mitigation in cognitive radio networks using a modified Q-learning algorithm,” in Proc. IEEE Int’l Conf. Military Commun. and Inf. Systems (ICMCIS), pp. 1–7, Cracow, May 2015.

[14] E. R. Gomes and R. Kowalczyk, “Dynamic analysis of multiagent Q-learning with ε-greedy exploration,” in Proc. ACM Annual Int’l Conf. Machine Learning (ICML), pp. 369–376, Montreal, Jun. 2009.

[15] R. S. Sutton, “Dyna, an integrated architecture for learning, planning, and reacting,” ACM SIGART Bulletin, vol. 2, no. 4, pp. 160–163, Aug. 1991.

[16] Q. Sun, S. Han, I. Chih-Lin, and Z. Pan, “On the ergodic capacity of MIMO NOMA systems,” IEEE Wireless Commun. Lett., vol. 4, no. 4, pp. 405–408, Aug. 2015.

[17] Z. Ding, P. Fan, and H. V. Poor, “Impact of user pairing on 5G nonorthogonal multiple-access downlink transmissions,” IEEE Trans. Veh. Techn., vol. 65, no. 8, pp. 6010–6023, Aug. 2016.

[18] Z. Wei, D. W. K. Ng, and J. Yuan, “Power-efficient resource allocation for MC-NOMA with statistical channel state information,” in Proc. IEEE Global Commun. Conf. (GLOBECOM), Washington, DC, Dec. 2016.

[19] F. Liu, P. Mähönen, and M. Petrova, “Proportional fairness-based user pairing and power allocation for non-orthogonal multiple access,” in Proc. IEEE Annual Int’l Symp. Personal, Indoor, and Mobile Radio Commun. (PIMRC), pp. 1127–1131, Hong Kong, Aug. 2015.

[20] S. Timotheou and I. Krikidis, “Fairness for non-orthogonal multiple access in 5G systems,” IEEE Signal Process. Lett., vol. 22, no. 10, pp. 1647–1651, Oct. 2015.

[21] J. A. Oviedo and H. R. Sadjadpour, “A fair power allocation approach to NOMA in multi-user SISO systems,” IEEE Trans. Veh. Techn., 2017, DOI: 10.1109/TVT.2017.2689000.

[22] L. Xiao, J. Liu, Q. Li, N. B. Mandayam, and H. V. Poor, “User-centric view of jamming games in cognitive radio networks,” IEEE Trans. Inf. Forensics and Security, vol. 10, no. 12, pp. 2578–2590, Dec. 2015.

[23] X. Tang, P. Ren, Y. Wang, Q. Du, and L. Sun, “Securing wireless transmission against reactive jamming: A Stackelberg game framework,” in Proc. IEEE Global Commun. Conf. (GLOBECOM), San Diego, CA, Dec. 2015.

[24] Y. Wu, B. Wang, K. J. R. Liu, and T. C. Clancy, “Anti-jamming games in multi-channel cognitive radio networks,” IEEE Journal on Selected Areas in Commun., vol. 30, no. 1, pp. 4–15, Jan. 2012.

[25] A. Garnaev, M. Baykal-Gursoy, and H. V. Poor, “A game theoretic analysis of secret and reliable communication with active and passive adversarial modes,” IEEE Trans. Wireless Commun., vol. 15, no. 3, pp. 2155–2163, Mar. 2016.

[26] L. Xiao, N. B. Mandayam, and H. V. Poor, “Prospect theoretic analysis of energy exchange among microgrids,” IEEE Trans. Smart Grid, vol. 6, no. 1, pp. 63–72, Jan. 2015.

[27] X. He, H. Dai, P. Ning, and R. Dutta, “A stochastic multi-channel spectrum access game with incomplete information,” in IEEE Int’l Conf. Commun. (ICC), pp. 4799–4804, London, Jun. 2015.

[28] L. Xiao, Y. Li, J. Liu, and Y. Zhao, “Power control with reinforcement learning in cooperative cognitive radio networks against jamming,” Springer The Journal of Supercomputing, vol. 71, no. 9, pp. 3237–3257, Sept. 2015.


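$$u = \sum_{i=1}^{N_R} \left( \sum_{m=1}^{M-1} \log_2\left(1 + \frac{N_J \theta_m P_T h^B_{m,i}}{N_T N_J + N_J \left(1 - \sum_{n=1}^{m} \theta_n\right) P_T h^B_{m,i} + N_T p_J h^J_{m,i}}\right) + \log_2\left(1 + \frac{N_J \left(1 - \sum_{n=1}^{M-1} \theta_n\right) P_T h^B_{M,i}}{N_T N_J + N_T p_J h^J_{M,i}}\right) \right) + \gamma p_J, \tag{31}$$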

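$$\frac{\partial u(\boldsymbol{\theta}, p_J^*)}{\partial \theta_m} = \sum_{i=1}^{N_R} \frac{N_J P_T \left( N_T p_J^* \left(h^B_{m,i} h^J_{M,i} - h^B_{M,i} h^J_{m,i}\right) - N_J P_T h^B_{m,i} h^B_{M,i} \sum_{n=m+1}^{M-1} \theta_n - N_T N_J \left(h^B_{M,i} - h^B_{m,i}\right) \right)}{\ln 2 \left(N_T N_J + N_T p_J^* h^J_{m,i} + N_J \left(1 - \sum_{n=1}^{m} \theta_n\right) P_T h^B_{m,i}\right) \left(N_T N_J + N_T p_J^* h^J_{M,i} + N_J \left(1 - \sum_{n=1}^{M-1} \theta_n\right) P_T h^B_{M,i}\right)} < 0, \quad \forall 1 \le m \le M-1. \tag{32}$$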

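$$u = \sum_{i=1}^{N_R} \left( \log_2\left(1 + \frac{N_J \theta_1 P_T h^B_{1,i}}{N_T N_J + N_J (1 - \theta_1) P_T h^B_{1,i} + N_T p_J h^J_{1,i}}\right) + \log_2\left(1 + \frac{N_J (1 - \theta_1) P_T h^B_{2,i}}{N_T N_J + N_T p_J h^J_{2,i}}\right) \right) + \gamma p_J, \tag{33}$$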

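$$\frac{\partial u(\boldsymbol{\theta}, p_J^*)}{\partial \theta_1} = \sum_{i=1}^{N_R} \frac{N_J P_T \left( N_T p_J^* \left(h^B_{1,i} h^J_{2,i} - h^B_{2,i} h^J_{1,i}\right) - N_T N_J \left(h^B_{2,i} - h^B_{1,i}\right) \right)}{\ln 2 \left(N_T N_J + N_T p_J^* h^J_{1,i} + N_J (1 - \theta_1) P_T h^B_{1,i}\right) \left(N_T N_J + N_T p_J^* h^J_{2,i} + N_J (1 - \theta_1) P_T h^B_{2,i}\right)} < 0. \tag{34}$$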

[29] X. Xiao, C. Dai, Y. Li, C. Zhou, and L. Xiao, “Energy trading game for microgrids using reinforcement learning,” in Proc. EAI Int’l Conf. Game Theory for Networks, Tennessee, May 2017.

[30] K. S. Hwang, W. C. Jiang, Y. J. Chen, and W. H. Wang, “Model-based indirect learning method based on dyna-q architecture,” in Proc. IEEE Int’l Conf. Systems, Man, and Cybernetics (SMC), pp. 2540–2544, Manchester, U.K., Oct. 2013.

[31] Y. Li, L. Xiao, H. Dai, and H. V. Poor, “Game theoretic study of protecting MIMO transmissions against smart attacks,” in IEEE Int’l Conf. Commun. (ICC), London, May 2017.

[32] C. Wang, J. Chen, and Y. Chen, “Power allocation for a downlink non-orthogonal multiple access system,” IEEE Wireless Commun. Lett., vol. 5, no. 5, pp. 532–535, Aug. 2016.

[33] Z. Ding, Z. Yang, P. Fan, and H. V. Poor, “On the performance of non-orthogonal multiple access in 5G systems with randomly deployed users,” IEEE Signal Process. Lett., vol. 21, no. 12, pp. 1501–1505, Dec. 2014.

[34] L. P. Kaelbling, M. L. Littman, and A. W. Moore, “Reinforcement learning: A survey,” Journal of Artificial Intelligence Research, vol. 4, pp. 237–285, 1996.

[35] H. H. Kha, H. D. Tuan, and H. H. Nguyen, “Fast global optimal power allocation in wireless networks by local DC programming,” IEEE Trans. Wireless Commun., vol. 11, no. 2, pp. 510–515, Feb. 2012.

[36] J. Cui, Z. Ding, and P. Fan, “A novel power allocation scheme under outage constraints in NOMA systems,” IEEE Signal Process. Lett., vol. 23, no. 9, pp. 1226–1230, Sept. 2016.

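APPENDIX A
PROOF OF LEMMA 1

According to (6), if $\min [R_m]_{1 \le m \le M} \ge R_0$ for $\forall\, 0 \le p_J \le P_J$, we have (31), and thus $\partial^2 u(\boldsymbol{\theta}, p_J)/\partial p_J^2 > 0$, i.e., $u(\boldsymbol{\theta}, p_J)$ is convex in terms of $p_J$. By (11), we have $\partial u(\boldsymbol{\theta}, p_J)/\partial p_J |_{p_J = 0} < 0$. Thus, $u(\boldsymbol{\theta}, p_J)$ is minimized at $\partial u(\boldsymbol{\theta}, p_J)/\partial p_J = 0$, and by Eq. (7), $p_J^*$ is given by (10). Otherwise, if $\exists\, p_J' \le P_J$ that satisfies $\min [R_m]_{1 \le m \le M} < R_0$, by Eq. (7), $p_J^* \in [p_J', P_J]$.

According to the assumption that the channel conditions of User $k$ are stronger than those of User $j$ ($k > j$), we have $h^B_{k,i} > h^B_{j,i}$, $\forall\, 1 \le j < k \le N_R$. In addition, if $h^B_{m,i} h^J_{M,i} < h^B_{M,i} h^J_{m,i}$, $\forall\, 1 \le m \le M-1$, $\forall\, 1 \le i \le N_R$, by (31), we have $\partial u(\theta_m, p_J^*)/\partial \theta_m < 0$, i.e., (32). Clearly, $u$ monotonically decreases with $\theta_m$, $\forall\, 1 \le m \le M-1$. Meanwhile, if $R_m(\boldsymbol{\theta}, P_J) < R_0$, $\forall\, 1 \le m \le M-1$, $p_J^* = P_J$ and $u(\boldsymbol{\theta}, p_J^*) = 0$. Therefore, $u$ is maximized if $R_m(\boldsymbol{\theta}, P_J) = R_0$, $\forall\, 1 \le m \le M-1$, i.e., $\boldsymbol{\theta}^*$ is given by (9) and thus (8) holds. Therefore, the SE $(\boldsymbol{\theta}^*, p_J^*)$ is given by (9)-(10).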

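APPENDIX B
PROOF OF COROLLARY 1

Similar to the proof of Lemma 1, if $\min [R_m]_{1 \le m \le M} \ge R_0$ for $\forall\, 0 \le p_J \le P_J$, by (31), $\partial^2 u(\boldsymbol{\theta}, p_J)/\partial p_J^2 > 0$. By (19), we have $\partial u(\boldsymbol{\theta}, p_J)/\partial p_J |_{p_J = 0} > 0$. Thus $u(\boldsymbol{\theta}, p_J)$ is minimized at $p_J = 0$, and we have $p_J^* = 0$ by Eq. (7). If $h^B_{m,i} h^J_{M,i} < h^B_{M,i} h^J_{m,i}$, $\forall\, 1 \le m \le M-1$, $\forall\, 1 \le i \le N_R$, we have (32) and thus $\boldsymbol{\theta}^*$ given by (18). Therefore, if $h^B_{m,i} h^J_{M,i} < h^B_{M,i} h^J_{m,i}$, $\forall\, 1 \le m \le M-1$, $\forall\, 1 \le i \le N_R$ and (19) holds, we have the SE $(\boldsymbol{\theta}^*, 0)$.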

APPENDIX C
PROOF OF COROLLARY 2

By (33), if $h^B_{1,i} h^J_{2,i} < h^J_{1,i} h^B_{2,i}$, $\forall\, 1 \le i \le N_R$ and (14) holds, we have $\partial u(\theta_1, p_J^*)/\partial \theta_1 < 0$, i.e., (34). Thus $u$ monotonically increases with $\theta_2$, $0 \le \theta_2 \le 1$. On the other hand, it is clear that $R_1(\theta_1, p_J^*) = 0$ if $\theta_1 = 0$, and $R_1(\theta_1, p_J^*)$ monotonically increases with $\theta_1$. Thus $u$ is maximized if $R_1 = R_0$, i.e., $\theta_1^*$ is given by (12), and Eq. (8) is satisfied with $\theta_1^*$. Therefore, the SE $(\theta_1^*, p_J^*)$ is given by (12)-(13).
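The sign claim used in this proof can be checked numerically. The sketch below evaluates the two-user utility (33) and the closed-form derivative (34), then compares the latter with a central finite difference. All channel gains and system parameters in the example are hypothetical values chosen so that $h^B_{1,i} h^J_{2,i} < h^J_{1,i} h^B_{2,i}$ and $h^B_{2,i} > h^B_{1,i}$ hold; condition (14) is not reproduced here and is not checked.

```python
import math


def utility(theta1, p_j, h_b1, h_b2, h_j1, h_j2, n_t, n_j, p_t, gamma):
    """Utility (33) for M = 2; h_* are lists with one gain per RX antenna."""
    total = 0.0
    for b1, b2, j1, j2 in zip(h_b1, h_b2, h_j1, h_j2):
        sinr1 = (n_j * theta1 * p_t * b1) / (
            n_t * n_j + n_j * (1 - theta1) * p_t * b1 + n_t * p_j * j1)
        sinr2 = (n_j * (1 - theta1) * p_t * b2) / (n_t * n_j + n_t * p_j * j2)
        total += math.log2(1 + sinr1) + math.log2(1 + sinr2)
    return total + gamma * p_j


def d_utility(theta1, p_j, h_b1, h_b2, h_j1, h_j2, n_t, n_j, p_t):
    """Closed-form derivative (34) with respect to theta1."""
    total = 0.0
    for b1, b2, j1, j2 in zip(h_b1, h_b2, h_j1, h_j2):
        num = n_j * p_t * (n_t * p_j * (b1 * j2 - b2 * j1) - n_t * n_j * (b2 - b1))
        den = (math.log(2)
               * (n_t * n_j + n_t * p_j * j1 + n_j * (1 - theta1) * p_t * b1)
               * (n_t * n_j + n_t * p_j * j2 + n_j * (1 - theta1) * p_t * b2))
        total += num / den
    return total


# Hypothetical linear-scale gains for two receive antennas.
args = dict(h_b1=[1.0, 1.2], h_b2=[3.0, 2.5], h_j1=[1.5, 1.4], h_j2=[1.0, 1.1],
            n_t=5, n_j=3, p_t=20.0)
theta1, p_j, step = 0.4, 4.0, 1e-6
analytic = d_utility(theta1, p_j, **args)
numeric = (utility(theta1 + step, p_j, gamma=0.5, **args)
           - utility(theta1 - step, p_j, gamma=0.5, **args)) / (2 * step)
```

For these assumed values, both estimates agree and are negative, which is consistent with $u$ decreasing in $\theta_1$.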

APPENDIX D
PROOF OF COROLLARY 3

By (32), if $h^B_{1,i} h^J_{2,i} > h^J_{1,i} h^B_{2,i} + \left(h^B_{2,i} - h^B_{1,i}\right) N_J / p_J^*$, $\forall\, 1 \le i \le N_R$ and (17) holds, we have $\partial u(\theta_1, p_J^*)/\partial \theta_1 > 0$. It is clear that $R_2(\theta_1, p_J^*) = 0$ if $\theta_1 = 1$, and $R_2(\theta_1, p_J^*)$ monotonically decreases with $\theta_1$. Thus $u$ is maximized if $R_2 = R_0$, i.e., $\theta_1^*$ is given by (15). Therefore, the SE $(\theta_1^*, p_J^*)$ is given by (15)-(16).


Liang Xiao (M’09, SM’13) is currently a Professor in the Department of Communication Engineering, Xiamen University, Fujian, China. She has served as an associate editor of IEEE Trans. Information Forensics and Security and guest editor of IEEE Journal of Selected Topics in Signal Processing. She is the recipient of the best paper award for 2016 INFOCOM Big Security WS and 2017 ICC. She received the B.S. degree in communication engineering from Nanjing University of Posts and Telecommunications, China, in 2000, the M.S. degree in electrical engineering from Tsinghua University, China, in 2003, and the Ph.D. degree in electrical engineering from Rutgers University, NJ, in 2009. She was a visiting professor with Princeton University, Virginia Tech, and University of Maryland, College Park.

Yanda Li received the B.S. degree in communication engineering from Xiamen University, Xiamen, China, in 2015, where he is currently pursuing the M.S. degree with the Department of Communication Engineering. His research interests include network security and wireless communications.

Canhuang Dai received the B.S. degree in communication engineering from Xiamen University, Xiamen, China, in 2017, where he is currently pursuing the M.S. degree with the Department of Communication Engineering. His research interests include smart grid and wireless communications.

Huaiyu Dai (F’17) received the B.E. and M.S. degrees in electrical engineering from Tsinghua University, Beijing, China, in 1996 and 1998, respectively, and the Ph.D. degree in electrical engineering from Princeton University, Princeton, NJ in 2002.

He was with Bell Labs, Lucent Technologies, Holmdel, NJ, in summer 2000, and with AT&T Labs-Research, Middletown, NJ, in summer 2001. He is currently a Professor of Electrical and Computer Engineering with NC State University, Raleigh. His research interests are in the general areas of communication systems and networks, advanced signal processing for digital communications, and communication theory and information theory. His current research focuses on networked information processing and cross-layer design in wireless networks, cognitive radio networks, network security, and associated information-theoretic and computation-theoretic analysis.

He has served as an editor of IEEE Transactions on Communications, IEEE Transactions on Signal Processing, and IEEE Transactions on Wireless Communications. Currently he is an Area Editor in charge of wireless communications for IEEE Transactions on Communications. He co-edited two special issues of EURASIP journals on distributed signal processing techniques for wireless sensor networks, and on multiuser information theory and related applications, respectively. He co-chaired the Signal Processing for Communications Symposium of IEEE Globecom 2013, the Communications Theory Symposium of IEEE ICC 2014, and the Wireless Communications Symposium of IEEE Globecom 2014.

H. Vincent Poor (S’72, M’77, SM’82, F’87) received the Ph.D. degree in electrical engineering and computer science from Princeton University in 1977. From 1977 until 1990, he was on the faculty of the University of Illinois at Urbana-Champaign. Since 1990 he has been on the faculty at Princeton, where he is the Michael Henry Strater University Professor of Electrical Engineering. During 2006 to 2016, he served as Dean of Princeton’s School of Engineering and Applied Science. He has also held visiting appointments at several other institutions, most recently at Berkeley and Cambridge. His research interests are in the areas of information theory and signal processing, and their applications in wireless networks, energy systems and related fields. Among his publications in these areas is the recent book Information Theoretic Security and Privacy of Information Systems (Cambridge University Press, 2017).

Dr. Poor is a member of the National Academy of Engineering and the National Academy of Sciences, and is a foreign member of the Chinese Academy of Sciences, the Royal Society and other national and international academies. Recent recognition of his work includes the 2017 IEEE Alexander Graham Bell Medal, Honorary Professorships from Peking University and Tsinghua University, both conferred in 2017, and a D.Sc. honoris causa from Syracuse University awarded in 2017.
