quantitative and qualitative analyses of requirements elaboration

Transcript of quantitative and qualitative analyses of requirements elaboration

QUANTITATIVE AND QUALITATIVE ANALYSES

OF REQUIREMENTS ELABORATION

FOR EARLY SOFTWARE SIZE ESTIMATION

by

Ali Afzal Malik

A Dissertation Presented to the FACULTY OF THE USC GRADUATE SCHOOL UNIVERSITY OF SOUTHERN CALIFORNIA

In Partial Fulfillment of the Requirements for the Degree DOCTOR OF PHILOSOPHY

(COMPUTER SCIENCE)

August 2010

Copyright 2010 Ali Afzal Malik

iii

Acknowledgements

First and foremost, I thank the Almighty for giving me the ability to pursue

this research. Whatever good I possess is from Him alone. His blessings are

uncountable and priceless. Good health, caring family members, knowledgeable

mentors, cooperative colleagues, and generous sponsors are just a few of these

blessings.

My family’s love, care, and support has played a vital role in reaching thus

far. My late father taught me the value of education and instilled in me the desire to

strive for the best. My mother’s encouragement and moral support helped me to

overcome various obstacles in life. My elder brother took the lion’s share of

household responsibilities after my father passed away. This allowed me to give

undivided attention to my studies. My wife’s emotional and spiritual support has

provided the much-needed motivation to take this research to fruition.

I owe a lot to my research advisor and dissertation committee chair – Dr

Barry Boehm. His remarkable knowledge, vast experience, and good nature make

him one of the best mentors. Dr. Boehm’s expert advice and constructive criticism

have been invaluable in tackling this hard research problem. I am also indebted to

other members of my qualifying exam and dissertation committees i.e. Dr. Nenad

Medvidović, Dr. Bert Steece, Dr. Rick Selby, and Dr. Ellis Horowitz. Each of these

individuals has played an important role in refining this research.

A number of my colleagues have been instrumental in conducting this

research. Supannika Koolmanojwong, Pongtip Aroonvatanaporn, Dr. Ye Yang

iv

(ISCAS) and Yan Ku (ISCAS) have provided invaluable assistance in data

collection. Julie Sanchez (CSSE Administrator) and Alfred Brown (CSSE System

Administrator) have provided valuable support in a number of vital administrative

and technical maters.

Last, but certainly not the least, I thank my sponsors – Fulbright, HEC

Pakistan, and USAID – for giving me an opportunity to pursue graduate studies i.e.

MS and PhD degrees. Their comprehensive financial assistance has enabled me to

study at one of the most prestigious universities in the world i.e. USC.

v

Table of Contents

Dedication ........................................................................................................................... ii Acknowledgements............................................................................................................ iii List of Tables .................................................................................................................... vii List of Figures .................................................................................................................... ix Abbreviations................................................................................................................... xiii Abstract ............................................................................................................................. xv Chapter 1 Introduction ........................................................................................................ 1

1.1 Problem Definition.................................................................................................... 1 1.2 Research Approach ................................................................................................... 3 1.3 Research Significance............................................................................................... 4 1.4 Dissertation Outline .................................................................................................. 5

Chapter 2 Related Work...................................................................................................... 7

2.1 Sizing Methods ......................................................................................................... 7 2.2 Sizing Metrics ........................................................................................................... 9 2.3 Requirements Elaboration....................................................................................... 13

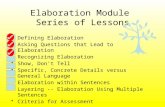

Chapter 3 From Cloud to Kite .......................................................................................... 18

3.1 Experimental Setting............................................................................................... 19 3.2 Method .................................................................................................................... 23 3.3 Results..................................................................................................................... 33 3.4 Elaboration of Capability Goals.............................................................................. 38 3.5 Utility of Elaboration Groups ................................................................................. 40 3.6 Threats to Validity .................................................................................................. 43

Chapter 4 From Cloud to Clam......................................................................................... 45

4.1 Experimental Setting............................................................................................... 46 4.2 Method .................................................................................................................... 47 4.3 Results and Discussion ........................................................................................... 51 4.4 Requirements Elaboration of Commercial Projects................................................ 56

Chapter 5 Efficacy of COCOMO II Cost Drivers in Predicting Elaboration Profiles...... 59

5.1 Method .................................................................................................................... 60 5.2 Results..................................................................................................................... 62 5.3 Discussion............................................................................................................... 75

vi

Chapter 6 Comparative Analysis of Requirements Elaboration ....................................... 78 6.1 Empirical Setting .................................................................................................... 78 6.2 Data Collection ....................................................................................................... 79 6.3 Results..................................................................................................................... 83 6.4 Comparison with Second Empirical Study............................................................. 87 6.5 Conclusions............................................................................................................. 89

Chapter 7 Determinants of Elaboration ............................................................................ 91

7.1 Stage 1 Cloud to Kite.............................................................................................. 91 7.2 Stage 2 Kite to Sea.................................................................................................. 94 7.3 Stage 3 Sea to Fish.................................................................................................. 95 7.4 Stage 4 Fish to Clam............................................................................................... 96

Chapter 8 Contributions and Next Steps........................................................................... 98

8.1 Main Contributions ................................................................................................. 98 8.2 Future Work.......................................................................................................... 100

Bibliography ................................................................................................................... 103 Appendix A Specification and Normalization of High-Level Requirements................. 108

A.1 Category vs. Instance ........................................................................................... 108 A.2 Aggregate vs. Component.................................................................................... 110 A.3 One-to-One........................................................................................................... 111 A.4 Main Concept and Related Minor Concept.......................................................... 112

Appendix B Multiple Regression Results for Small E-Services Projects....................... 115

B.1 First Stage of Elaboration..................................................................................... 115 B.2 Second Stage of Elaboration ................................................................................ 116 B.3 Third Stage of Elaboration ................................................................................... 116 B.4 Fourth Stage of Elaboration ................................................................................. 117

Appendix C COCOMO II Cost Drivers and Elaboration Profiles of SoftPM Versions. 118

C.1 COCOMO II Cost Driver Ratings........................................................................ 118 C.2 Results of Simple Regression Analyses ............................................................... 120

vii

List of Tables

Table 1: Projects used for first empirical study 21

Table 2: Metrics 29

Table 3: Values of metrics for capability goals and capability requirements 33

Table 4: Values of metrics for LOS goals and LOS requirements 34

Table 5: Elaboration determinants 42

Table 6: Projects used for second empirical study 47

Table 7: Mapping 48

Table 8: Relevant artifacts 49

Table 9: Formulae for elaboration factors 51

Table 10: Elaboration factor sets for each project 52

Table 11: Summary of elaboration results 54

Table 12: Multi-level requirements data of large IBM projects 57

Table 13: Elaboration factors of large IBM projects 57

Table 14: Cost driver rating scale 60

Table 15: COCOMO II scale factor ratings 63

Table 16: COCOMO II product factor ratings 64

Table 17: COCOMO II platform factor ratings 65

Table 18: COCOMO II personnel factor ratings 66

Table 19: COCOMO II project factor ratings 67

Table 20: Correlation between CG

CR

and COCOMO II cost drivers 69

viii

Table 21: Correlation between CR

UC

and COCOMO II cost drivers 70

Table 22: Correlation between UC

UCS

and COCOMO II cost drivers 71

Table 23: Correlation between UCS

SLOC

and COCOMO II cost drivers 72

Table 24: COCOMO II cost drivers relevant at each elaboration stage 74

Table 25: Results of multiple regression analysis 75

Table 26: Requirements levels of SoftPM 79

Table 27: Multi-level requirements data of SoftPM 83

Table 28: Elaboration profiles 84

Table 29: Summary statistics of elaboration factors 86

Table 30: Comparison of summary statistics 88

Table 31: Determinants of elaboration 99

Table 32: Scale factor ratings 118

Table 33: Product factor ratings 119

Table 34: Platform factor ratings 119

Table 35: Personnel factor ratings 119

Table 36: Project factor ratings 119

ix

List of Figures

Figure 1: Cone of uncertainty 2

Figure 2: Sizing metrics 9

Figure 3: Cockburn’s metaphor illustrating refinement of use cases 14

Figure 4: SLOC/Requirement ratios of large-scale projects 16

Figure 5: Multiple requirements levels for large IBM projects 17

Figure 6: Partial software development process 22

Figure 7: Typical high-level capability goal with its low-level capability requirements 25

Figure 8: Typical high-level LOS goal with its low-level LOS requirement 26

Figure 9: Table of contents of OCD 27

Figure 10: Table of contents of SSRD 28

Figure 11: EF ranges defining different elaboration groups 31

Figure 12: Elaboration of capability goals into capability requirements 36

Figure 13: Elaboration of LOS goals into LOS requirements 37

Figure 14: Elaboration groups for capability goals 39

Figure 15: Data collection process 47

Figure 16: CG

CR

distribution 52

Figure 17: CR

UC

distribution 53

Figure 18: UC

UCS

distribution 53

x

Figure 19: UCS

SLOC

distribution 54

Figure 20: Elaboration of use cases to use case steps 55

Figure 21: Example scenario depicting overlaps amongst versions 80

Figure 22: Example scenario after removing overlaps amongst versions 81

Figure 23: Distribution of CG to CR elaboration factor 85

Figure 24: Distribution of CR to UC elaboration factor 85

Figure 25: Distribution of UC to UCS elaboration factor 85

Figure 26: Distribution of UCS to SLOC elaboration factor 86

Figure 27: Proportion of atomic capability goals 94

Figure 28: Proportion of atomic capability requirements 95

Figure 29: Proportion of alternative use case steps to main use case steps 96

Figure 30: EF(CG, CR) vs. PREC 121

Figure 31: EF(CG, CR) vs. FLEX 121

Figure 32: EF(CG, CR) vs. RESL 122

Figure 33: EF(CG, CR) vs. TEAM 122

Figure 34: EF(CG, CR) vs. PMAT 123

Figure 35: EF(CG, CR) vs. TIME 123

Figure 36: EF(CG, CR) vs. ACAP 124

Figure 37: EF(CG, CR) vs. PCAP 124

Figure 38: EF(CG, CR) vs. PCON 125

Figure 39: EF(CG, CR) vs. APEX 125

Figure 40: EF (CR, UC) vs. PREC 126

xi

Figure 41: EF (CR, UC) vs. FLEX 126

Figure 42: EF (CR, UC) vs. RESL 127

Figure 43: EF (CR, UC) vs. TEAM 127

Figure 44: EF (CR, UC) vs. PMAT 128

Figure 45: EF (CR, UC) vs. TIME 128

Figure 46: EF (CR, UC) vs. ACAP 129

Figure 47: EF (CR, UC) vs. PCAP 129

Figure 48: EF (CR, UC) vs. PCON 130

Figure 49: EF (CR, UC) vs. APEX 130

Figure 50: EF(UC, UCS) vs. PREC 131

Figure 51: EF(UC, UCS) vs. FLEX 131

Figure 52: EF(UC, UCS) vs. RESL 132

Figure 53: EF(UC, UCS) vs. TEAM 132

Figure 54: EF(UC, UCS) vs. PMAT 133

Figure 55: EF(UC, UCS) vs. TIME 133

Figure 56: EF(UC, UCS) vs. ACAP 134

Figure 57: EF(UC, UCS) vs. PCAP 134

Figure 58: EF(UC, UCS) vs. PCON 135

Figure 59: EF(UC, UCS) vs. APEX 135

Figure 60: EF(UCS, SLOC) vs. PREC 136

Figure 61: EF(UCS, SLOC) vs. FLEX 136

Figure 62: EF(UCS, SLOC) vs. RESL 137

Figure 63: EF(UCS, SLOC) vs. TEAM 137

xii

Figure 64: EF(UCS, SLOC) vs. PMAT 138

Figure 65: EF(UCS, SLOC) vs. TIME 138

Figure 66: EF(UCS, SLOC) vs. ACAP 139

Figure 67: EF(UCS, SLOC) vs. PCAP 139

Figure 68: EF(UCS, SLOC) vs. PCON 140

Figure 69: EF(UCS, SLOC) vs. APEX 140

xiii

Abbreviations

AEF Abnormal Elaboration Factor

CG Capability Goal

CMMI Capability Maturity Model Integration

COCOMO II Constructive Cost Model Version II

COSYSMO Constructive Systems Engineering Cost Model

COTS Commercial of-the-Shelf

CR Capability Requirement

CSSE Center for Systems and Software Engineering

EF Elaboration Factor

EP Elaboration Profile

HEF High Elaboration Factor

ICM-Sw Incremental Commitment Model for Software

IOC Initial Operational Capability

IV & V Independent Verification and Validation

KSLOC Thousand Source Lines of Code

LCO Life Cycle Objectives

LCP Life Cycle Plan

LEF Low Elaboration Factor

LOS Level of Service

MBASE Model-Based (System) Architecting and Software Engineering

MEF Medium Elaboration Factor

xiv

OCD Operational Concept Description

RL Requirements Level

RUP Rational Unified Process

SAIV Schedule as Independent Variable

SE Software Engineering

SLOC Source Lines of Code

SSAD System and Software Architecture Description

SSRD System and Software Requirements Definition

UC Use Case

UCS Use Case Step

UML Unified Modeling Language

USC University of Southern California

xv

Abstract

Software size is one of the most influential inputs of a software cost estimation

model. Improving the accuracy of size estimates is, therefore, instrumental in improving

the accuracy of cost estimates. Moreover, software size and cost estimates have the

highest utility at the time of inception. This is the time when most important decisions e.g.

budget allocation, personnel allocation, etc. are taken. The dilemma, however, is that only

high-level requirements for a project are available at this stage. Leveraging this high-

level information to produce an accurate estimate of software size is an extremely

challenging task.

Requirements for a software project are expressed at multiple levels of detail

during its life cycle. At inception, requirements are expressed as high-level goals. With

the passage of time, goals are refined into shall statements and shall statements into use

cases. This process of progressive refinement of a project’s requirements, referred to as

requirements elaboration, continues till source code is obtained. This research analyzes

the quantitative and qualitative aspects of requirements elaboration to address the

challenge of early size estimation.

A series of four empirical studies is conducted to obtain a better understanding of

requirements elaboration. The first one lays the foundation for the quantitative

measurement of this abstract process by defining the appropriate metrics. It, however,

focuses on only the first stage of elaboration. The second study builds on the foundation

laid by the first. It examines the entire process of requirements elaboration looking at

each stage of elaboration individually. A general process for collecting and processing

xvi

multi-level requirements to obtain elaboration data useful for early size estimation is

described. Application of this general process to estimate the size of a new project is also

illustrated.

The third and fourth empirical studies are designed to tease out the factors

determining the variation in each stage of elaboration. The third study focuses on

analyzing the efficacy of COCOMO II cost drivers in predicting these variations. The

fourth study performs a comparative analysis of the elaboration data from two different

sources i.e. small real-client e-services projects and multiple versions of an industrial

process management tool.

1

Chapter 1 Introduction

1.1 Problem Definition

Software cost models are best described as “garbage in-garbage out devices”

[Boehm, 1981]. The quality of the outputs of these models is determined by the quality of

the inputs. Hence, if input quality is bad, output quality cannot be good. This fact clearly

implies that one of the prerequisites of improving the estimates (outputs) of these models

is to improve the quality of the inputs supplied to these models.

Different parametric cost models such as SLIM [Putnam, 1978], PRICE-S

[Freiman and Park, 1979], COCOMO II [Boehm et al., 2000], and COSYSMO [Valerdi,

2005] require a different set of inputs. One input, however, is common amongst most of

these models i.e. “an estimate of likely product size” [Fenton and Pfleeger, 1997]. In fact,

size is the most influential input of these models. The accuracy of cost estimation

“depends more on accurate size estimates than on any other cost-related parameter”

[Pfleeger, Wu, and Lewis, 2005].

The fundamental challenge of cost estimation, therefore, is “estimating the

amount (size) of software that must be produced” [Stutzke, 2005]. Addressing this

challenge lies at the crux of improving cost estimation accuracy. Producing an accurate

estimate of product size, however, is not an easy task.

The difficulty of accurate size estimation is compounded especially at the time of

inception when very little information is available. This problem is visually depicted in

the “cone of uncertainty” diagram from [Boehm et al., 2004] which is reproduced in

Figure 1. This diagram plots the relative size range versus the various phases and

2

milestones. It clearly indicates that, at the beginning of a project, estimates are far off

from the actual values. As time progresses, the error in estimation starts decreasing but so

does the utility of these estimates.

Figure 1: Cone of uncertainty

The cone of uncertainty highlights the fundamental dilemma of system and

software size estimation i.e. the tradeoff between accuracy and utility of estimates.

Estimates are of highest value at the beginning of a project. This is the time when

important decisions such as those regarding budget and personnel allocation are made.

Estimation accuracy at this point, however, is lowest. Therefore, in order to achieve

maximum utility, it is extremely important to improve the accuracy of estimates produced

at the time of inception.

3

Improving the accuracy of early size estimation necessitates leveraging high-level

project requirements available at the time of inception i.e. project goals and objectives.

Leveraging this information about the high-level requirements of a project is only

possible if one is aware of the details of the process of their elaboration into lower-level

requirements e.g. shall statements, use cases, etc. These details about the process of

elaboration are previously unavailable. This problem is succinctly summarized in the

following statement:

At the time of a project’s inception, when estimates have the highest utility, very high-level requirements are available and the elaboration process of these high-level requirements is unknown.

1.2 Research Approach

Requirements for any project can be expressed at multiple levels of detail e.g.

goals (least detailed), shall statements, use cases, use case steps, and SLOC (most

detailed). At the time of a project’s inception, requirements are usually expressed as high-

level goals. With the passage of time, these goals are elaborated into shall statements,

shall statements are transformed into use cases, use cases are decomposed into use case

steps, and use case steps are implemented by SLOC. Each subsequent level builds on the

previous by adding more detail. This progressive refinement of requirements is called

requirements elaboration. This research addresses the problem identified in the previous

section by uncovering the details of this process of requirements elaboration.

The mechanics of this abstract process of requirements elaboration are studied by

first measuring this process quantitatively using metrics specifically designed for this

4

purpose. The quantification of this process, in turn, facilitates looking at its qualitative

nuances. It helps in teasing out factors that determine its variations.

1.3 Research Significance

A deeper understanding of the quantitative and qualitative aspects of the

requirements elaboration process provides a number of benefits. First and foremost, it

helps in solving the fundamental dilemma of system and software size estimation i.e.

estimates are less accurate when their utility is more and more accurate when their utility

is less. Leveraging a project’s high-level requirements by studying the elaboration

process enables producing accurate estimates when estimation accuracy really matters i.e.

at the time of inception.

Another important benefit of this work is that quantitative information about

requirements elaboration helps in resolving inconsistencies between the level of detail of

model inputs and model calibration data. If not resolved, such inconsistencies can easily

lead to over- or under-estimation of cost. Consider, for instance, a model that has been

calibrated using lower-level requirements such as shall statements as one of the inputs.

Using higher-level requirements such as goals as an input to this model will result in an

under-estimation of cost. However, if the elaboration process of higher-level

requirements is known, these high-level goals can be converted to equivalent lower-level

shall statements expected as input by the model.

Last but not the least, quantitative measures of elaboration allow comparisons

between estimates of models that use different size parameters. As an example, consider

two cost estimation models A and B. If, for instance, model A uses shall statements as

5

input while model B uses SLOC, then the outputs of these two models can be made

comparable only after transforming the shall statements into equivalent SLOC and

plugging this value into model B. Transforming shall statements into equivalent SLOC,

however, requires understanding how shall statements elaborate into SLOC. The analyses

presented in this research help in achieving this understanding.

1.4 Dissertation Outline

The rest of this dissertation is organized as follows:

• Chapter 2 briefly describes previous work relevant to this research. It surveys

prior work in three areas i.e. software sizing, software metrics, and requirements

elaboration. Gaps and shortcomings in prior work are also highlighted in this

chapter.

• Chapter 3 describes the first empirical study on requirements elaboration. This

study analyzes the first stage of requirements elaboration. It examines the

elaboration of two different types of goals – capability and level of service (LOS)

– into respective immediately lower-level requirements. New metrics are

introduced to quantitatively measure requirements elaboration.

• Chapter 4 summarizes the second empirical study which examines the entire

process of requirements elaboration. A five-step process for gathering and

processing a project’s multi-level requirements to obtain its elaboration data is

described. The utility of summary statistics obtained from the elaboration data of

multiple similar projects in early size estimation is illustrated. A case study

6

showing the application of this approach in estimating the size of a large new

IBM project is also included.

• Chapter 5 presents the third empirical study which determines the efficacy of

COCOMO II cost drivers in predicting variations in different stages of

elaboration. For each stage of elaboration, the relationship between the values of

the relevant cost drivers and the magnitude of elaboration is analyzed.

• Chapter 6 describes the fourth and final empirical study on requirements

elaboration. This study first presents a novel approach to determine the

elaboration profiles of different versions of an industrial product. It then

compares these elaboration profiles with the elaboration profiles obtained in the

second empirical study.

• Chapter 7 contains a discussion on the determinants of elaboration. It presents the

factors responsible for variations in different stages of elaboration.

• Chapter 8 concludes this dissertation by summarizing the major contributions of

this research. It also highlights avenues for future work in this area.

7

Chapter 2 Related Work

2.1 Sizing Methods

A number of methods for estimating size have been proposed in the literature. A

good overview of these methods can be found in [Boehm, 1981], [Fenton and Pfleeger,

1997], and [Stutzke, 2005]. Most of these methods can be classified into four major

categories: expert judgment, analogy-based, group consensus, and decomposition.

As is evident from the name, size estimation based on expert judgment takes

advantage of the past experience of an expert. A seasoned developer is requested to

estimate the size of a project based on the information available about the project. One of

the main advantages of this approach is that experts can spot exceptional size drivers. The

accuracy of the estimate, however, is completely dependent on the experience and

memory of the expert.

Analogy-based size estimation methods use one or more benchmarks for

estimating the size of a new project. The characteristics of the new project are compared

with the benchmarks. The size of the new project is adjusted based on the similarities and

differences between the new project and the benchmarks. Pair-wise comparison is a

special case of analogy-based sizing which uses a single reference point. This type of

estimation can only be employed when suitable benchmarks are available. The main

advantage of this approach is that it uses relative sizing which prevents most of the

problems associated with absolute sizing such as personal bias and incomplete recall.

Group consensus techniques such as Wideband Delphi [Boehm, 1981] and

Planning Poker [Cohn, 2005] use a group of people instead of individuals to derive

8

estimates of size. Estimation activities are coordinated by a moderator who describes the

scenario, solicits estimates, and compiles the results. At the end of the first round,

divergences are discussed and individuals share rationales for their estimation values.

More rounds of estimation may be necessary to reach a consensus. The main advantage

of these techniques is that they improve understanding of the problem through group

discussion. Iteration and group coordination, however, requires more time and resources

than techniques relying on a single person.

Decomposition techniques for estimating size use a more rigorous approach. Two

complementary decomposition techniques are available: top-down and bottom-up. Top-

down estimation focuses on the product as a whole. Estimates of the overall size are

derived from the global product characteristics and are then assigned proportionally to

individual components of the product. Bottom-up estimation, on the other hand, focuses

on the individual components. Size is estimated for each individual component. The size

of the overall product is then derived by summing the size of the individual components.

Since these two techniques are orthogonal, the advantages of one are the disadvantages of

the other. The top-down approach has a system-level focus but lacks a detailed basis. The

bottom-up approach, on the other hand, has a more detailed basis but tends to ignore

overall product characteristics.

Apart from the four main size estimation method categories mentioned above,

other categories also exist. Probabilistic methods such as PERT sizing [Putnam and

Fitzsimmons, 1979] are an example. Fuzzy logic-based size estimation methods such as

those described in [Putnam and Myers, 1992] and [MacDonell, 2003] are another

example.

9

Combinations of sizing methods belonging to different categories are also

possible options. For instance, pair-wise comparisons may be used in conjunction with

top-down estimation to derive an estimate of size. Similarly, expert judgment may be

combined with bottom-up estimation.

The basic problem, which none of these methods addresses, is the high amount of

uncertainty involved in early size estimation. This uncertainty stems from the fact that

very little information is available at the time of inception. Thus, as shown in the cone of

uncertainty (see Figure 1), estimates produced at the time of inception are far off from the

actual values.

2.2 Sizing Metrics

Process Phase

Possible Measures

Primary Aids

Concept Elaboration Construction

Increasing Time and Detail

Subsystems Key Features

User Roles, Use Cases

Screens, Reports,

Files, Application

Points

Function Points Components Objects

Source Lines of Code, Logical

Statements

Product Vision,

Analogies

Operational Concept, Context Diagram

Specification, Feature List

Architecture, Top Level

Design

Detailed Design Code

Figure 2: Sizing metrics

Product size can be expressed using a number of different metrics. A vast

majority of the commonly used size metrics is captured by Figure 2 which is reproduced

10

from [Stutzke, 2005]. This figure shows the metrics available at different process phases

along with the primary aids which act as sources for deriving values for these metrics. In

the construction phase, for instance, size can be measured in terms of the number of

objects specified in the detailed design.

The possible measures listed in Figure 2 can be divided into two broad categories

i.e. functional size measures and physical size measures. Functional size refers to the size

of the software in terms of its functionality while physical size refers to the size of the

software in terms of the amount of code. Each of these measures has benefits and

shortcomings. Functional size measures are technology independent and can be obtained

earlier than physical size measures. However, it is difficult to automate the counting of

functional size. Physical size measures, on the other hand, are technology dependent but

can be obtained automatically.

Function Point Analysis [Albrecht, 1979] is the most popular functional size

measure. Many versions of Function Point Analysis are being used throughout the world.

In USA, the IFPUG version of function points [IFPUG, 2010] is the most popular

methodology. In a nutshell, IFPUG function points measure the functionality of a system

by identifying its components (i.e. inputs, outputs, data files, interfaces, and inquiries)

and rating each component according to its complexity (i.e. simple, average, and

complex). Once each component has been assigned its complexity rating, these ratings

are summed-up to obtain unadjusted function points. Unadjusted function points are

multiplied by a value adjustment factor calculated using overall application

characteristics to obtain the adjusted function points.

11

Other significantly-popular versions of Function Point Analysis (FPA) include

COSMIC Full Function Points [COSMIC, 2009], Mk II FPA [UKSMA, 1998], and

NESMA FPA [NESMA, 2004]. There are a number of conceptual and procedural

differences between IFPUG and COSMIC methods. For instance, unlike IFPUG function

points that use five functional component types, COSMIC Full Function Points use four

i.e. entry, read, write, and exit. Nonetheless, both IFPUG and COSMIC functional size

methods are international standards. Mk II FPA, used mainly in the United Kingdom,

employs only three component types i.e. inputs, outputs, and entity references. This

simple measurement model was originally developed by Charles Symons [Symons, 1991]

to overcome the weaknesses claimed to be found in the original FPA (now supported by

IFPUG) such as difficulty of usage and low accuracy. The FPA guidelines published by

the Netherlands Software Metrics Association (NESMA) and IFPUG are practically the

same. There are no major differences between these two methods.

In order to accommodate algorithmically-intensive applications such as systems

software (e.g. operating systems) and real-time software (e.g. missile defense systems)

Software Productivity Research, Inc. (SPR) developed a variant of function points called

Feature Points [SPR, 1986]. Feature Points are a superset of the classical function points

in the sense that they add one additional component i.e. algorithms. This simple extension

of function points has not gained much popularity in the industry.

A number of approaches that produce approximate yet quick counts of function

points have been proposed. David Seaver’s Fast Function Points [Seaver, 2000] and

NESMA’s Indicative Function Points [NESMA, 2010] are good representatives of this

category. These methods use a much simpler approach to obtain function points of an

12

application in the early phases of the life cycle. For example, Indicative Function Points

(also known as the “Dutch Method”) use only two components i.e. data files and

interfaces.

Apart from function points, a multitude of other functional size measures are

available. A number of these such as Use Case Points [Karner, 1993] are based on UML

[Booch, Rumbaugh, and Jacobson, 1999]. Use Case Points, which are widely used in the

industry, utilize the information about the actors and use cases of a system.

Several object-oriented size measures have also been proposed. Typical examples

include MOOSE [Chidamber and Kemerer, 1994], Predictive Object Points [Minkiewicz,

1997], and Application Points [Boehm et al., 2000]. Metrics Suite for Object-Oriented

System Environments (MOOSE) consists of six metrics i.e. weighted measures per class,

average depth of a derived class in the inheritance tree, number of children, coupling

between objects, response for a class, and lack of cohesion of methods. Predictive Object

Points use three metrics from MOOSE (i.e. weighted measures per class, number of

children, and average depth of a derived class in the inheritance tree) along with an

additional metric i.e. number of top level classes. Application Points are adapted from

Object Points [Banker, 1992]. They consider the number of screens, reports, and business

rules in the application. Business rules correspond to the algorithms or third generation

(3GL) components.

SLOC quantify the “physical length” of the software [Laird and Brennan, 2006].

This metric is amenable to automatic counting using code-parsing programs called code

counters. A plethora of free and open source code counters are available for download on

the web e.g. JavaNCSS [Lee et al., 2010] for Java, CCCC [Littlefair, 2006] for C/C++,

13

etc. A number of code counters such as Unified CodeCount [Unified CodeCount, 2009]

and SLOCCount [Wheeler, 2004] provide support for multiple programming languages.

Before any code counter is developed, the notion of SLOC must be precisely

defined. Common classifications of SLOC include physical SLOC, non-comment SLOC,

and logical SLOC. Physical SLOC refer to all lines with carriage returns. Non-comment

SLOC are obtained by excluding commented lines from physical SLOC. Multiple

definitions for logical SLOC are available such as the ones published by Carnegie-Mellon

University’s Software Engineering Institute [Park, 1992], IEEE [IEEE, 1993], and USC’s

CSSE [Nguyen et al., 2007].

The arrow at the bottom of Figure 2 indicates that with the passage of time more

and more detail becomes available to produce a more accurate measure of size. Higher-

level requirements such as product goals are elaborated into shall statements, and shall

statements are refined into use cases. This process of elaboration continues till finally the

lowest level of source lines of code (SLOC) is reached.

Using higher-level requirements such as product goals for size estimation is only

likely to produce estimates that are far off from the actual values as confirmed by the

cone of uncertainty (see Figure 1). This is primarily because the elaboration process of

higher-level requirements into lower-level ones is hitherto unknown.

2.3 Requirements Elaboration

The concept of requirements elaboration has been hitherto studied in different

settings. One of the best graphical illustrations of this concept is to be found in

Cockburn’s work [Cockburn, 2001] on writing use cases. This graphical illustration is

14

reproduced in Figure 3. It employs a powerful visual metaphor to describe the gradual

hierarchical refinement of higher-level use cases to lower-level ones. Use cases are

classified according to the level of detail contained in them. This classification is done

using a set of icons depicting multiple levels of altitude and depth vis-à-vis sea-level.

Cloud and kite icons are used to represent elevation above sea-level while fish and clam

icons are employed to indicate depth below sea-level. The sea-level itself is shown by a

waves-like icon. Each altitude- or depth-level or, in other words, each icon corresponds to

a different level of detail in a use case. The cloud icon, for instance, is used to indicate a

top-level summary use case while the fish icon is used to label a low-level sub-function

use case. The ultimate objective is to express all use cases at the sea-level since the sea-

level achieves the right balance between abstraction and detail.

Figure 3: Cockburn’s metaphor illustrating refinement of use cases

Letier and van Lamsweerde proposed an agent-based approach towards

requirements elaboration [Letier and van Lamsweerde, 2002]. Several formal tactics were

defined for refining goals and then assigning them to single agents. Single agents

15

represented human stakeholders or even software components that realized the refined

goals.

Earlier, Antón had described the Goal-Based Requirements Analysis Method

(GBRAM) and the results of its application in a practical setting [Antón, 1996]. By using

GBRAM goals were identified and elaborated for later operationalization into

requirements. At roughly the same time, Darimont and van Lamsweerde had proposed a

formal pattern-based method for refining goals [Darimont and van Lamsweerde, 1996].

These reusable, recurrent, and generic goal-refinement patterns housed in libraries

enabled, among other things, checking for completeness and consistency of refinements

and hid the complicated mathematical proofs required by formal methods.

All of the works cited above deal with facilitating and improving the elaboration

of high-level requirements into lower-level ones. None of these works focuses on

measuring the process of elaboration itself. A quantitative approach is essential in

studying the mechanics of this process and in analyzing its qualitative aspects.

Several researchers have looked at quantitative “expansion ratios” [Fenton and

Pfleeger, 1997] relating size of specification with size of code. Walston and Felix

observed an empirical relationship between program documentation size in pages and

code size in KSLOC [Walston and Felix, 1977]. Jones [Jones, 1996] and Quantitative

Software Measurement (QSM) Corporation [QSM, 2009] have reported the conversion

ratios (called backfiring ratios or gearing factors) between function points and SLOC of

several different programming languages. Selby’s work [Selby, 2009] records the ratios

of implementation size (SLOC) to requirements (shall statements) of 14 large-scale

projects. These ratios are shown in Figure 4 which is reproduced from his work. Even

16

though these works adopt a quantitative approach they do not solve the problem of

accurate size estimation at inception when very high-level requirements e.g. project goals

and objectives are available.

Figure 4: SLOC/Requirement ratios of large-scale projects

Another shortcoming of the previous quantitative approaches is that they don’t

examine the various stages of elaboration between different levels of requirements such

as those shown in Figure 5 which is reproduced from [Hopkins and Jenkins, 2008]. This

figure depicts the multiple levels (e.g. business events, use cases, etc.) at which

requirements are expressed for a certain category of large IBM projects. It also shows

ballpark figures for the number of requirements at each level. Gathering such multi-level

17

requirements data and conducting quantitative and qualitative analyses on it is crucial in

uncovering the details of the requirements elaboration process.

Figure 5: Multiple requirements levels for large IBM projects

18

Chapter 3 From Cloud to Kite

It is quite interesting to note that Figure 5 reveals an almost magical expansion

ratio of around 7 between some pairs of consecutive requirements levels. For instance,

200 use cases have about 1,500 main steps implying an expansion ratio of 7.5. Similarly,

35 subsystems have 250 components indicating an expansion ratio of about 7.1. Is this

magical expansion ratio a matter of coincidence? If not, does it hold for all types of

projects and all types of requirements? If so, what are the factors that determine the

expansion ratio? Answering these and similar questions requires an in-depth analysis of

the quantitative and qualitative aspects of requirements elaboration.

This chapter reproduces, with minor modifications, the first empirical study

[Malik and Boehm, 2008; Malik and Boehm, 2009] conducted to explore the mechanics

of the requirements elaboration process. This study focuses on the first stage of

requirements elaboration i.e. the elaboration of high-level goals into immediately lower-

level requirements. In terms of the Cockburn metaphor [Cockburn, 2001], it examines the

elaboration of cloud-level requirements into kite-level requirements. The following two

main hypotheses are tested in this empirical study:

• The magical expansion ratio of 7 holds for all types of projects.

• The magical expansion ratio of 7 holds for both functional as well as non-

functional requirements.

The entire experimental scenario, including the scope of this study and the details

of the subjects, projects, and artifacts, is described in Section 3.1. Section 3.2 explains the

method used for gathering the data required for this study. It defines the appropriate

19

metrics and indicates the rationale for their usage. Section 3.3 summarizes the results

gathered using these metrics and discusses the salient aspects of these results. An in-

depth analysis of the elaboration of capability goals is provided in Section 3.4. Section

3.5 highlights the importance of the results obtained from this analysis whereas Section

3.6 mentions the limitations of these results.

3.1 Experimental Setting

USC’s annual series of two semester-long, graduate-level, real-client, team-

project software engineering courses provides a valuable opportunity to analyze the

phenomenon of requirements elaboration. These two project-based courses – Software

Engineering I (SE I) and Software Engineering II (SE II) – allow students to get a first-

hand experience of a considerable chunk of the software development life cycle. Using

the terminology of the Rational Unified Process (RUP) [Kruchten, 2003], SE I covers the

activities of Inception and Elaboration phases whereas SE II encompasses the activities of

Construction and Transition phases.

Most of the time students work in teams of six to eight people. Each team

comprises two parts – the development core and the independent verification and

validation (IV &V) wing. The development core of a team is typically made up of four to

six on-campus students. These on-campus students are full-time students with an average

industry experience of a couple of years. The IV & V wing usually contains two off-

campus students. These off-campus students are full-time working professionals who are

pursing part-time education. Average industry experience of these off-campus students is

20

at least five years. The IV & V wing works in tandem with the development core of the

team to ensure quality of deliverables.

Projects undertaken in these two courses come from a variety of sources spanning

both industry and academia. Typical clients include USC neighborhood not-for-profit

organizations (e.g. California Science Center, New Economics for Women, etc.), USC

departments (e.g. Anthropology, Information Services, Library, etc.), and USC

neighborhood small businesses. As a first step, candidate projects are screened on the

basis of their perceived complexity and duration. Due to constraints imposed by the team

size and course duration, highly complex projects or projects which require a lot of work

are filtered out. Selected projects are then assigned to teams based on the interest

preferences of the teams. Once teams are paired with clients, negotiations between these

two parties play an important role in prioritizing the requirements. This prioritized list of

requirements, in turn, facilitates completion of these projects in two semesters.

This study deals with 20 such fixed-length real-client projects done by teams of

graduate students in the past few years [USC SE I, 2010; USC SE II, 2010]. Table 1

presents a summary of these projects. The column titled “Year” indicates the year in

which each project was initiated. The primary instructor was the same for all of these

years. Special care was exercised in selecting these projects for analysis. COTS-based

projects, for which elaboration data is not available, were filtered out. Similarly, custom-

development projects with incomplete data were also not considered. As a result, each

row in Table 1 represents a fully-documented custom-development project.

21

Table 1: Projects used for first empirical study

S# Year Project Type

1 2004 Bibliographies on Chinese Religions in Western Languages Web-based database

2 2004 Data Mining Digital Library Usage Data Data mining application 3 2004 Data Mining Report Files Data mining application 4 2005 Data Mining PubMed Results Data mining application 5 2005 USC Football Recruiting System Web-based database 6 2005 Template-based Code Generator Code generator 7 2005 Web Based XML Editing Tool Web-based editor 8 2005 EBay Notification System Pattern-matching application 9 2005 Rule-based Editor Integrated GUI

10 2005 CodeCount™ Product Line with XML and C++ Code counter tool 11 2006 California Science Center Newsletter System Web-based database 12 2006 California Science Center Event RSVP System Web-based database 13 2006 USC Diploma Order/Tracking System Web-based database 14 2006 USC Civic and Community Relations Application Web-based database 15 2006 Student's Academic Progress Application Web-based database 16 2006 New Economics for Women (NEW) Web Application Web-based database 17 2006 Web Portal for USC Electronic Resources Web-based service integration 18 2006 Early Medieval East Asian Tombs Database System Web-based database 19 2006 USC CONIPMO Cost modeling tool 20 2006 Eclipse Plug-in for Use Case Authoring IDE plug-in

22

Figure 6: Partial software development process

Client project screening

Developer self-profiling

• Developer team formation

• Project selection

• Client-developer team building

• Prototyping • WinWin

negotiation

LCO Package preparation

LCO Review

LCA Package preparation

LCA Review

{developer profiles, project preferences }

{project descriptions}

{selected client-developer teams}

{LCA package guidance}

{expanded OCD, prototypes, SSRD’, SSAD’, LCP’, FRD’}

{product development guidance}

{build-to OCD, prototypes, SSRD, SSAD, LCP, FRD}

{draft OCD}

LCO: Life Cycle Objectives LCA: Life Cycle Architecture OCD: Operational Concept Description SSRD: System and Software Requirements Definition SSAD: System and Software Architecture Description LCP: Life Cycle Plan FRD: Feasibility Rationale Description ABC’: Top-level version of document ABC

1 2

3

4

5

6

7

8

Inception

Elaboration

23

Each project considered in this study followed the MBASE/RUP [Boehm et al.,

2004; Kruchten, 2003] or LeanMBASE/RUP [Boehm et al., 2005; Kruchten, 2003]

development process. Figure 6 shows a partial view of this process. This view covers

only the first half of the process. This half encompasses the Inception and Elaboration

phases. The second half of the process, which deals with the Construction and Transition

phases, has not been shown for the sake of brevity. In Figure 6, boxes indicate major

steps carried out in this process while arrows signify the general flow of the process.

Multiple activities within a step are presented as a bulleted list. Here it must be noted that

several activities such as project planning, client-team interaction etc. that are common

across all steps have been omitted to avoid clutter in the diagram. Each step, which has

been numbered for convenience, produces a set of artifacts [B. Boehm et al., 2005] that is

used by the subsequent step as its primary input. Step 4, for instance, produces the draft

version of the Operational Concept Description (OCD) document which is used by Step 5

to come up with an expanded version of OCD along with prototypes and other documents

such as the System and Software Requirements Definition (SSRD). As explained in the

following section, the OCD and SSRD are the only documents we focus on in this study.

3.2 Method

In order to get a handle on the quantitative aspects of requirements elaboration the

relationship between two different types of goals and requirements is studied. On the one

hand, the relationship between capability goals and capability requirements is examined.

On the other, the relationship between level of service (LOS) goals and LOS

requirements is studied. Capability goals and capability requirements represent,

24

respectively, the functional goals and the functional requirements of a software product.

Figure 7 shows a representative high-level capability goal. It also shows a couple of low-

level capability requirements that are rooted in this goal. LOS goals and LOS

requirements represent, respectively, the non-functional goals and the non-functional

requirements of a software product. Figure 8 depicts a typical high-level LOS goal along

with a low-level LOS requirement that originates from this goal.

26

Figure 8: Typical high-level LOS goal with its low-level LOS requirement

A number of metrics have been devised to examine the relationship between these

two different types of goals and requirements. Documentation produced at the time of the

Life Cycle Objectives (LCO) milestone [Boehm, 1996] acts as the primary source of data

corresponding to goals-related metrics. The LCO milestone, reached at the end of the

Inception phase, indicates the presence of at least one feasible architecture for the

software product. At this point, a set of artifacts called the LCO Package is produced by

the project team. This is shown as Step 5 in Figure 6. Within the LCO Package, only one

document – the expanded OCD – provides all relevant information about both types of

goals. Figure 9 contains a sample top-level table of contents of this document. As shown

by this table of contents, information about capability goals is available in Section 3.1

while information about LOS goals is present in Section 3.3.3.

27

Figure 9: Table of contents of OCD

Documentation generated at the end of the Construction phase acts as the primary

source of data corresponding to requirements-related metrics. Completion of the

Construction phase coincides with the Initial Operational Capability (IOC) milestone

[Boehm, 1996]. At this stage, a working version of the product is ready to be transitioned

and the project team has generated a set of artifacts called the IOC Working Set.

Depending upon the number of versions released, a team may produce more than one

IOC Working Set where each subsequent Working Set builds upon the previous. All

requirements-related data is gathered from the SSRD document contained in the final

IOC Working Set. Figure 10 presents a brief summary of a typical SSRD by displaying

the high-level table of contents of such a document. As is evident from this figure,

information about capability requirements is contained in Section 3 whereas information

28

about LOS requirements is available in Section 5. Evolutionary capability requirements

and evolutionary LOS requirements, present in Section 7, are not considered in our

analyses. This is because evolutionary requirements deal with future extensions of the

software product and, therefore, are not rooted in goals specified during the Inception

phase. In the text that follows, references to OCD and SSRD should be interpreted as

references to the specific versions of OCD and SSRD as described above.

Figure 10: Table of contents of SSRD

When dealing with capability requirements, both nominal and off-nominal

requirements are examined. In other words, we consider requirements of system behavior

in normal as well as abnormal conditions. This is done to ensure consistency with the

29

analyses of capability goals since all types of goals specified in OCD are examined. No

such distinction of nominal versus off-nominal is applicable in the case of LOS

requirements for these 20 small real-client projects.

Table 2 summarizes the metrics employed by this study to accurately measure the

elaboration of cloud-level goals documented at the time of LCO milestone into kite-level

requirements documented at the time of IOC milestone. This same set of seven generic

metrics is used for both types of goals and requirements. The first four metrics are

“direct” metrics. Data corresponding to these metrics are collected directly from the

project documentation by inspection. The first two – NGI and NGR – are related to goals

whereas the next two – NRD and NRN – are related to requirements. NGI represents the

number of goals specified during inception. The value of this metric for any project can

be easily obtained by counting the high-level goals specified in OCD. NGR specifies the

number of goals that were eventually removed and not taken into consideration while

developing the final product. Finding the value of this metric requires inspecting both

OCD and SSRD documents. Any high-level goal in OCD that does not elaborate into

even a single low-level requirement in SSRD adds to the count of NGR.

Table 2: Metrics

S# Metric Description 1 NGI Number of initial goals 2 NGR Number of removed goals 3 NRD Number of delivered requirements 4 NRN Number of new requirements 5 NGA Number of adjusted goals 6 NRA Number of adjusted requirements 7 EF Elaboration Factor

NRN indicates the number of requirements that were added later-on in the project

and had no relationship to the goals included in NGI. This metric gives a crude indication

30

of the extent of the requirements- or feature-creep phenomenon. Determining the value of

this metric requires studying both OCD and SSRD documents. All low-level

requirements in SSRD that are not rooted in any high-level goal in OCD are included in

NRN. NRD records the number of requirements satisfied by the product delivered to the

client. This can be easily determined by counting the number of requirements present in

SSRD.

The last three metrics – NGA, NRA, and EF – are “derived” metrics. Unlike the

direct metrics described above, values of these metrics are not obtained by inspection of

documents. Their values are calculated from existing information instead. The following

formulae are used for this purpose:

Equation 1: RIA NGNGNG −=

Equation 2: NDA NRNRNR −=

Equation 3: A

A

NG

NREF =

NGA and NRA represent adjusted metrics while EF is a ratio of these adjusted

metrics. As is evident from Equation 1, NGA is obtained by subtracting the removed goals

from the initial set of goals. This adjustment yields only those goals that were retained till

the end of the project and achieved by the final product. NRA indicates requirements that

emerge solely from these retained goals. As shown in Equation 2, this adjustment is

accomplished by subtracting the newly introduced requirements from the set of all

delivered requirements. The EF metric shown in Equation 3 quantifies the phenomenon

of requirements elaboration by assigning it a numerical value. This value – called the “EF

31

value” of a project – indicates the average number of low-level requirements produced by

the elaboration of a single high-level goal.

Ranges of EF values can be used to classify projects in different elaboration

groups called “EF groups”. Keeping in view the nature of projects encountered in our

small-project setting and based on the data we have observed (see Section 3.4 Elaboration

of Capability Goals) we have come up with a simple criterion for defining these groups.

This criterion is depicted in Figure 11. The continuous spectrum of EF values is shown

by a horizontal line. The fact that this spectrum covers only positive real numbers can be

easily seen by revisiting Equations 1 through 3 which outline the procedure for

calculating an EF value. As shown by Equations 1 and 2, both the numerator and the

denominator of Equation 3 are positive integers since they represent adjusted counts.

Hence the EF value, which is a ratio of these positive integers, must be a positive real

number.

Figure 11: EF ranges defining different elaboration groups

1 1. 5 2 0

LEF MEF HEF AEF

Elaboration Groups AEF = Abnormal Elaboration Factor LEF = Low Elaboration Factor MEF = Medium Elaboration Factor HEF = High Elaboration Factor

32

The spectrum of EF values is divided into four sections. Each of these four

sections corresponds to a separate EF group. The first section corresponds to the

Abnormal Elaboration Factor (AEF) group. It contains projects with EF values less than

1. These projects undergo “abnormal” elaboration since their number of adjusted

requirements (NRA) is less than their number of adjusted goals (NGA). Under normal

circumstances, NRA is at least as large as NGA producing an EF value greater than or

equal to 1. Sections 3.3 and 3.4 provide some possible explanations for this seemingly

anomalous behavior.

The remaining three sections of EF values classify regular projects, i.e. projects

that undergo “normal” elaboration. The Low Elaboration Factor (LEF) group contains

projects with EF values between 1 and 1.5 (both inclusive) while the Medium

Elaboration Factor (MEF) group contains projects with EF values between 1.5 and 2

(inclusive). Finally, projects with EF values greater than 2 fall under the High

Elaboration Factor (HEF) group. In our setting, even though it is hard to imagine a

project with an EF value greater than 10, the upper bound of the range for the HEF group

has been left unspecified to accommodate cases of exceptionally high elaboration.

The ranges of EF values that define elaboration groups are not cast in stone. As

mentioned above, the ranges shown in Figure 11 were defined in accordance with the

data observed in our specific experimental setting as described in the following section. It

may be necessary to tailor these ranges based on data observed in other settings.

Nonetheless, the concept of using ranges of EF values to classify projects is applicable in

all settings.

33

3.3 Results

This section presents the results obtained by examining the elaboration of both

types of goals into their respective requirements. Values of the seven metrics introduced

in the previous section are tabulated in Tables 3 and 4 for each of the 20 projects. For the

sake of brevity, project names are omitted from these tables. Serial numbers, however,

have been retained for reference and are shown in the first column. These serial numbers

correspond to the serial numbers in Table 1. Also, for the sake of convenience, data in

Tables 3 and 4 are presented in ascending order of EF values. The last column – “Group”

– categorizes these 20 projects according to the elaboration groups defined in the

previous section.

Table 3: Values of metrics for capability goals and capability requirements

S# NGI NGR NRD NRN NGA NRA EF Group 10 14 2 10 1 12 9 0.75 19 8 1 8 2 7 6 0.86

AEF

3 3 1 7 5 2 2 1 16 5 2 3 0 3 3 1 7 10 5 6 1 5 5 1 1 12 3 12 2 9 10 1.11 8 10 2 12 2 8 10 1.25 9 7 4 8 4 3 4 1.33

LEF

2 3 0 9 4 3 5 1.67 20 5 2 7 2 3 5 1.67 17 7 1 21 10 6 11 1.83 6 4 1 7 1 3 6 2 4 5 1 14 6 4 8 2

15 5 1 11 3 4 8 2

MEF

14 3 0 10 3 3 7 2.33 18 6 0 20 5 6 15 2.5 5 4 1 12 3 3 9 3

13 2 0 11 3 2 8 4 12 6 2 19 2 4 17 4.25 11 8 5 16 3 3 13 4.33

HEF

34

Table 3 presents the data related to capability goals and capability requirements.

The first two rows represent projects that underwent abnormal elaboration since their EF

value is less than 1. A detailed examination of these two projects helped understand the

reason behind this anomalous behavior. In the case of project number 10, a few pairs of

capability goals were merged into single capability requirements. In the second case –

project number 19 – the unexpected result was caused by two factors. First of all, like in

the first project, a pair of capability goals was merged into a single capability requirement.

Secondly, the system in project number 19 was so well-understood even at inception that

capability goals were already specified at the level of capability requirements.

Table 4: Values of metrics for LOS goals and LOS requirements

S# NGI NGR NRD NRN NGA NRA EF Group 7 3 1 2 1 2 1 0.50 AEF

1 4 0 4 0 4 4 1.00 2 4 1 5 2 3 3 1.00 3 4 0 5 1 4 4 1.00 4 3 1 2 0 2 2 1.00 5 5 3 3 1 2 2 1.00 6 1 0 3 2 1 1 1.00 8 2 0 3 1 2 2 1.00

10 6 0 6 0 6 6 1.00 11 4 2 5 3 2 2 1.00 12 4 3 1 0 1 1 1.00 13 4 0 4 0 4 4 1.00 14 5 4 6 5 1 1 1.00 15 2 0 2 0 2 2 1.00 18 4 0 4 0 4 4 1.00 19 4 2 3 1 2 2 1.00 20 2 0 2 0 2 2 1.00

LEF

9 4 4 0 0 0 0 - 16 2 2 2 2 0 0 - 17 1 1 4 4 0 0 -

-

35

The data related to LOS goals and LOS requirements are provided in Table 4.

Clearly, the project represented by the first row underwent abnormal elaboration since its

EF value is less than 1. Careful scrutiny of this project’s documentation revealed that this

unexpected behavior was a direct result of merging two LOS goals into one LOS

requirement. The last three rows represent projects with undefined EF values. After

studying the documentation of these three projects, it was found that this unanticipated

result could be due to different reasons in different projects. In the case of project number

9, the client felt that the LOS goals were either too trivial to be included in LOS

requirements or were part of the evolutionary requirements of the system. Thus, no LOS

requirement was specified for the current system. In the case of project numbers 16 and

17, none of the original LOS goals was retained. This led to completely new LOS

requirements.

36

0

2

4

6

8

10

12

14

16

18

20

0 2 4 6 8 10 12 14

NGA

NR

A

(2)

(2)

Figure 12: Elaboration of capability goals into capability requirements

37

0

1

2

3

4

5

6

7

0 1 2 3 4 5 6 7

NGA

NR

A

(3)

(7)

(4)

(3)

Figure 13: Elaboration of LOS goals into LOS requirements

Figures 12 and 13 display the graphs obtained by plotting NRA against NGA from

Tables 3 and 4 respectively. By default, each dot represents a single project. In case two

or more projects have identical values of the pair (NGA, NRA), the dot is annotated with a

parenthesized number specifying the number of projects aggregated by that dot. For

instance, the dot labeled “(7)” in Figure 13 represents 7 projects. Regression lines have

also been added to these graphs for convenience. Here it must be pointed out that the y-

intercept of these lines, and all subsequent regression lines, has been set to zero. This

makes intuitive sense since a project that has no goals to start with cannot exhibit the

phenomenon of requirements elaboration.

38

A comparison of Figure 12 and Figure 13 reveals a marked difference between

the elaboration of capability goals and the elaboration of LOS goals. Figure 13 indicates

that, apart from the four special cases mentioned above (i.e. project numbers 7, 9, 16, and

17), LOS goals seem to have a strict one-to-one relationship with LOS requirements. In

other words, there is a magical expansion ratio of 1 instead of 7 for non-functional

requirements. This implies that a high-level LOS goal is not elaborated into multiple low-

level LOS requirements. This is generally the case because LOS goals and requirements

apply to the total system and not to incremental capabilities. This explains why the vast

majority of projects in Table 4 have an EF value of exactly 1. The situation, however, is

much different in the case of elaboration of capability goals.

3.4 Elaboration of Capability Goals

A glance at Figure 12 suggests a roughly increasing relationship between NRA and

NGA. This relationship, however, is not very strong and there is a lot of variation around

the fitted regression line. A careful observation of the same figure reveals something very

subtle i.e. the presence of different groups of elaboration. This subtlety is made apparent

in Figure 14 which is a modified version of Figure 12. Both figures contain the exact

same set of data points. Figure 14, however, presents a visual grouping of these data

points. It contains four regression lines along with their equations and coefficient of

determination (R2) values. The original set of data points is now clearly divided into four

distinct groups – AEF, LEF, MEF, and HEF – as defined in Section 3.2.

Projects with abnormal (i.e. less than 1) EF values are grouped by the line at the

bottom of Figure 14. Points around the solid line represent projects with low EF values (1

39

– 1.33). The dashed line fits points that correspond to projects with intermediate EF

values (1.67 – 2). Finally, points around the dotted line at the top map to projects with the

highest EF values (2.33 – 4.33). This classification is also indicated in the last column of

Table 3.

y = 0.7772x

R2 = 0.9067

y = 1.1458x

R2 = 0.9688

y = 1.8737x

R2 = 0.9447

y = 3.1446x

R2 = 0.3262

0

2

4

6

8

10

12

14

16

18

20

0 2 4 6 8 10 12 14

NGA

NR

A

AEF

LEF

MEF

HEF

(2)

(2)

Figure 14: Elaboration groups for capability goals

It is obvious from these results that, as far as the elaboration of capability goals is

concerned, there is no magical or one-size-fits-all formula. Different projects exhibit

different ratios of elaboration. Certain parameters, however, can provide some clue about

the EF group of a project at an early stage. Client experience and architectural

complexity, for instance, are few such parameters.

40

It was found that both projects in the AEF group had highly experienced clients.

These clients thoroughly understood their respective applications. As a result, even at the

time of inception, capability goals of these projects were specified at the level of

capability requirements. Therefore, no further elaboration was required. Some of the

capability goals even overlapped with each other. These were later merged into a single

capability requirement. These two factors – over-specification of capability goals and

merger of overlapping goals – caused these projects to have an abnormal EF value.

A closer examination of projects in the HEF group revealed that all of these

projects were of type “Web-based database” (see Table 1). Capability goals of these

projects underwent extensive elaboration. One possible explanation for this high

magnitude of elaboration could be that web-based databases usually consist of more

architectural tiers than regular databases and stand-alone applications. Thus,

accomplishing one high-level capability goal may require multiple tiers thereby resulting

in a larger number of requirements as compared to other applications.

Other parameters such as a project’s novelty also need to be considered. These

additional factors could explain why, for instance, all projects in the HEF group were of

type “Web-based database” but not all projects of type “Web-based database” had an EF

value greater than 2. Clearly, the goals of a web-based database that has very few novel

capabilities will not need to undergo extensive elaboration.

3.5 Utility of Elaboration Groups

A number of techniques such as Use Case Points [Karner, 1993] and Predictive

Object Points [Minkiewicz, 1997] have been proposed to come up with early estimates of

41

software development effort. All of these techniques, however, are applicable only after

some preliminary analysis or design of the software project at hand. Early determination

of a project’s EF group enables a much earlier “goals-based estimation”. Once the EF

group of a project has been determined its EF value has been bound by a narrow range.

This information together with the information about the number of goals of a project,

which is readily available even at the time of inception, can be used to come up with a

much better early estimate of a project’s size.

By definition, a larger EF value indicates a larger number of requirements per

goal than a smaller EF value. Therefore, it should not be hard to see that there is a

positive relationship between the EF value of a project and its size. Assuming everything

else is constant, a project that undergoes more elaboration of goals will be of a bigger

size than the one which undergoes less elaboration.

Accurate a priori determination of a project’s EF group also enables one to get an

insight on the sensitivity of software cost estimates. This is so because EF groups also

give a rough indication of the impact of modifications to the set of project goals.

Introduction of a new goal to the set of goals of a project in the HEF group is likely to be

accompanied by more work than a similar change to a project in the LEF group. This

indicates that a project classified under the HEF group is likely to be more risky in terms

of schedule-slippage and budget-overrun vis-à-vis a project in the LEF group. This

information can help a project manager in calculating risk reserves and coming up with

better early estimates in the presence of risks.

Albeit subtle, another important benefit of early determination of a project’s EF

group is that it provides an opportunity to save valuable time, effort, and money. For

42

instance, if it is determined early-on that a project belongs to the LEF group then it

becomes apparent that this project will exhibit very little elaboration. Therefore, for this

project, some steps of the Elaboration phase may be skipped and the Construction phase

activities may begin earlier.

Table 5: Elaboration determinants

Determinant Relationship

Project Precedentedness Negative

Client Familiarity Negative

Architectural Complexity Positive

Table 5 lists the potential determinants of a project’s EF value. This list is based

on the elaboration data obtained from analyzing the existing set of 20 small-sized real-

client projects. The second column (i.e. Relationship) indicates the direction of the

relationship between a determinant and a project’s EF value. A positive relationship

implies that, other things being constant, a higher level of the determinant will lead to a

higher EF value. A negative or inverse relationship implies that, other things being

constant, a higher level of the determinant will lead to a lower EF value. Project

precedentedness refers to the novelty of the project. Intuitively, a newer project will have

a higher EF value. Client familiarity denotes the experience of the client in the project

domain. As indicated by the two projects in the AEF group, projects with clients that are