
GAME THEORY

Hans Peters, Dries Vermeulen

NAKE, University of Maastricht

September 2005


Preface

This material has been composed for use in the Ph.D. NAKE course Game Theory, Fall 2005. It is based on several courses offered in the Econometrics program at the University of Maastricht and in the Mathematics program at the RWTH Aachen, Germany. It also borrows from several textbooks on game theory, in particular A Primer in Game Theory by R. Gibbons.

Students' interests, backgrounds and previous education may differ considerably, so it is not easy to find the right level for a course like this one. We have chosen to start from scratch and assume no more than a basic level of mathematics and calculus. For students totally unacquainted with game theory, a lot of material is compressed into the first six weeks of this course. That material is this reader, which consists of two parts: Part I on Noncooperative Game Theory (about 100 pages) and Part II on Cooperative Game Theory (about 40 pages). For students already more or less familiar with game theory, this first part of the course can be seen as a review of the basic theory.

The second part of the course will specialize in topics treated in this reader as well as in new topics. The choice of topics may depend on the interests of the students.

Hans Peters, Dries Vermeulen


Contents

1 Introduction

1.1 Noncooperative games

1.1.1 Zerosum games

1.1.2 Nonzerosum games

1.1.3 Games in extensive form

1.2 Cooperative games

1.2.1 Transferable utility games

1.2.2 Bargaining games

1.3 Concluding remarks

I Noncooperative Game Theory

2 Nash Equilibrium

2.1 Strategic Games

2.2 Finite Two-Person Games

2.3 Nash Equilibrium

2.4 Applications

3 The Mixed Extension

3.1 Mixed Strategies

3.2 The Mixed Extension of a Finite Game

3.3 Solving Bimatrix Games

3.4 The Interpretation of Mixed Strategy Nash Equilibria

4 Zero-Sum Games

5 Extensive Form Games: Perfect Information

5.1 Games in Extensive Form: the Model

5.2 The Strategic Form of an Extensive Form Game

5.3 Subgame Perfect Equilibria

5.4 Applications of Backwards Induction

6 Extensive Form Games: Imperfect Information

6.1 Information Sets

6.2 Mixed and Behavioral Strategies

6.3 Equilibria

6.4 Perfect Bayesian Nash Equilibria

7 Signaling Games

7.1 The Model

7.2 The Beer and Quiche Game

7.3 The Spence Signaling Model


Chapter 1

Introduction

Game Theory arose as a mathematical discipline (American Mathematical Society classification code 90D). It is, however, a mathematical discipline that is inspired by economic, political, or social problems rather than by problems from the natural sciences, such as physics. The first main work in Game Theory was the book Theory of Games and Economic Behavior by John von Neumann and Oskar Morgenstern (Princeton University Press, Princeton, 1944). The title of this book reflects its main source of inspiration.

Usually a distinction is made between cooperative and noncooperative game theory. The idea is that in a cooperative game binding agreements are possible, whereas this is not the case in a noncooperative game. The notion of a binding agreement, however, is not unambiguous. It therefore seems preferable to put the different modeling assumptions at the forefront.

In a noncooperative game one specifies the set of players, their strategy sets, and their payoff functions. The central solution concept is Nash equilibrium, which is a specific strategy combination. Thus, these games may also be called strategy-oriented.

In a cooperative game one specifies the set of players and the payoffs that can be reached by players and coalitions of players, so such a game might also be called payoff-oriented. The underlying assumption is that solutions (e.g., payoff vectors, coalition structures) can be enforced by binding contracts.

Noncooperative game theory is mainly concerned with studying Nash equilibrium and variations thereof. The usual approach in cooperative game theory is axiomatic, and there is no single central solution concept.

This chapter gives a brief introduction to noncooperative (Section 1.1) and cooperative (Section 1.2) game theory. Some relations between the two approaches are mentioned in Section 1.3.

Our introduction is based on examples, mainly from economics. Each example consists of three parts: a story, a model, and possible solutions. We will not be rigorous but will instead try to convey the flavor of the associated mathematical (game theoretical) analysis. For a more extensive or rigorous treatment, the reader is referred to the rest of this reader and to other literature, a sample of which is included in the list of references at the end of this chapter.


1.1 Noncooperative games

In a noncooperative game, binding agreements between the players are, in principle, not possible. Therefore, a solution of such a game should be self-enforcing in some way or another. The central solution concept, Nash equilibrium, has this property. The examples below involve only two players. More generally, however, there can be any finite or even uncountably infinite number of players.

1.1.1 Zerosum games

Zerosum games are games where the sum of the players' payoffs is always equal to zero. In the two-person case this means that the payoff of one player is always the negative of the payoff of the other. More generally, strictly competitive games are games where the interests of the players are strictly opposed. Zerosum games, but also constant-sum games, are examples of such games. (See Chapter 4 for a further treatment.)

Zerosum games were first explicitly studied by John von Neumann (1928) in his article Zur Theorie der Gesellschaftsspiele (Mathematische Annalen, 100, pp. 295-320). In that article he proved the famous Minimax Theorem.

The Battle of the Bismarck Sea

The story An example of a situation giving rise to a zerosum game is the Battle of the Bismarck Sea (taken from Rasmusen, 1989). The game is set in the South Pacific in 1943. The Japanese admiral Imamura has to transport troops across the Bismarck Sea to New Guinea, and the American admiral Kenney wants to bomb the transport. Imamura has two possible choices: a shorter Northern route (2 days) or a longer Southern route (3 days). Kenney must choose one of these routes to send his planes to. If he chooses the wrong route, he can call back the planes and send them to the other route, but the number of bombing days is then reduced by 1. We assume that the number of bombing days represents the payoff to Kenney in a positive sense and to Imamura in a negative sense.

A model The Battle of the Bismarck Sea problem can be modeled as in the following table:

$$
\begin{array}{c|cc}
 & \text{North} & \text{South} \\ \hline
\text{North} & 2 & 2 \\
\text{South} & 1 & 3
\end{array}
$$

This situation represents a game with two players, namely Kenney and Imamura. Each player has two possible choices. Kenney (player 1) chooses a row and Imamura (player 2) chooses a column, and these choices are made independently and simultaneously. The numbers represent the payoffs to Kenney. For instance, the number 2 in the upper left cell means that if Kenney and Imamura both choose North, the payoff to Kenney is 2 and to Imamura $-2$. [The convention is to let the numbers denote the payoffs from player 2 (the column player) to player 1 (the row player).] This game is an example of a zerosum game because the sum of the payoffs is always equal to zero.


A solution In this particular example, it does not seem difficult to predict what will happen. By choosing North, Imamura is always at least as well off as by choosing South, as is easily inferred from the above table of payoffs. So it is safe to assume that Imamura chooses North. Kenney can perform the same kind of reasoning, so he will then also choose North, since that is the best answer to the choice of North by Imamura. Observe that this game is easy to analyse because one of the players has a weakly dominant choice. That is a choice which is always at least as good (always giving at least as high a payoff) as any other choice, no matter what the opponent decides to do.

Another way to look at this game is to observe that the payoff 2 resulting from the combination (North, North) is maximal in its column ($2 \ge 1$) and minimal in its row ($2 \le 2$). So neither player has an incentive to deviate. When discussing the more general nonzerosum games below, we will see that this is exactly the definition of a Nash equilibrium. In this zerosum case, it in fact means two things. The row player maximizes his minimal payoff by playing the first row, because $2 = \min\{2, 2\} > 1 = \min\{1, 3\}$. The column player, who has to pay according to our convention, minimizes the maximal amount that he has to pay, because $2 = \max\{2, 1\} < 3 = \max\{2, 3\}$.

In the following (abstract) example:

$$
\begin{pmatrix}
2 & 3 & 3 \\
1 & 4 & 0 \\
1 & 0 & 4
\end{pmatrix}
$$

neither player has a dominant or weakly dominant choice (row or column), but the entry 2 (upper row, left column) is maximal in its column and minimal in its row. Player 1 maximizes his minimal payoff, whereas player 2 minimizes the maximal amount he may have to pay. So this is the natural solution of the game: neither player can expect to obtain more.

In both these examples we say that the value of the game is equal to 2. This is what each player can guarantee in this game: player 1 receives at least 2, and player 2 pays at most 2.
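The saddlepoint reasoning above fits in a few lines of Python. This is an illustration only, and the function names are our own. The matrix holds payoffs to player 1, following the convention above:

- maximin is the largest row minimum, i.e., what the row player can guarantee;
- minimax is the smallest column maximum, i.e., the most the column player may have to pay;
- a saddlepoint is an entry that is minimal in its row and maximal in its column.

```python
from typing import List, Tuple

Matrix = List[List[float]]  # payoffs from player 2 (columns) to player 1 (rows)


def maximin(a: Matrix) -> float:
    """Largest row minimum: what the row player can guarantee in pure strategies."""
    return max(min(row) for row in a)


def minimax(a: Matrix) -> float:
    """Smallest column maximum: the most the column player may have to pay."""
    n_cols = len(a[0])
    return min(max(row[j] for row in a) for j in range(n_cols))


def saddlepoints(a: Matrix) -> List[Tuple[int, int]]:
    """All (row, column) cells that are minimal in their row and maximal in their column."""
    points = []
    for i, row in enumerate(a):
        for j, entry in enumerate(row):
            column = [r[j] for r in a]
            if entry == min(row) and entry == max(column):
                points.append((i, j))
    return points


# Battle of the Bismarck Sea: rows (Kenney) and columns (Imamura) are (North, South).
bismarck = [[2, 2], [1, 3]]
# The abstract 3x3 example from the text.
abstract = [[2, 3, 3], [1, 4, 0], [1, 0, 4]]

for name, game in [("Bismarck", bismarck), ("abstract", abstract)]:
    print(name, maximin(game), minimax(game), saddlepoints(game))
# Bismarck 2 2 [(0, 0)]
# abstract 2 2 [(0, 0)]
```

When maximin equals minimax, as in both examples, that common number is the value in pure strategies. For matching pennies below, the same functions give a maximin of -1, a minimax of 1 and no saddlepoint.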

Matching Pennies

The story In the two-player game of matching pennies, both players have a coin and simultaneously show heads or tails. If the coins match, player 2 pays one guilder to player 1; otherwise, player 1 pays one guilder to player 2.

A model This is a zerosum game with payoff matrix

$$
\begin{pmatrix}
1 & -1 \\
-1 & 1
\end{pmatrix}
$$

The upper row and left column correspond to heads, the lower row and right column to tails.

A solution Observe that in this game no player has a (weakly) dominant action, and that there is no saddlepoint: there is no entry which is simultaneously a minimum in its row and a maximum in its column. Thus, there does not seem to be a natural way to solve the game. Von Neumann (1928) proposed to solve games like this one (and zerosum games in general) by allowing the players to randomize between their choices.

In the present example of matching pennies, suppose player 1 chooses heads or tails, each with probability $\tfrac{1}{2}$. Suppose furthermore that player 2 plays heads with probability $q$ and tails with probability $1 - q$, where $0 \le q \le 1$. In that case the expected payoff for player 1 is equal to

$$
\tfrac{1}{2}\,\big[\,q \cdot 1 + (1-q)\cdot(-1)\,\big] + \tfrac{1}{2}\,\big[\,q \cdot (-1) + (1-q)\cdot 1\,\big],
$$

which is independent of $q$, namely, equal to 0. So by randomizing in this way between his two choices, player 1 can guarantee to obtain 0 in expectation. Of course, the actually realized outcome is always $+1$ or $-1$. Analogously, player 2, by playing heads or tails each with probability $\tfrac{1}{2}$, can guarantee to pay 0 in expectation. Thus, the amount of 0 plays a role similar to that of a saddlepoint. Again, we will say that 0 is the value of this game.

Von Neumann (1928) proved that every finite zerosum game has a value (in mixed strategies) by proving the Minimax Theorem, which is equivalent to the Duality Theorem of Linear Programming. In fact, linear programming can be used to solve zerosum games in general. For special cases with only a few choices there are other methods, for instance geometrical methods.
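Consider the special case of a 2×2 zerosum game without a saddlepoint. Its optimal mixed strategies follow from an indifference condition: each player randomizes so that the opponent's two choices give the same expected payoff. The Python sketch below is our own illustration of this closed-form special case. It is not the linear programming method mentioned above. It uses exact fractions and rejects games that have a saddlepoint.

```python
from fractions import Fraction


def has_saddlepoint(m):
    """True if some entry of the 2x2 matrix is minimal in its row and maximal in its column."""
    return any(
        m[i][j] == min(m[i]) and m[i][j] == max(m[0][j], m[1][j])
        for i in range(2)
        for j in range(2)
    )


def solve_2x2_zerosum(a, b, c, d):
    """Optimal mixed strategies of the zerosum game [[a, b], [c, d]].

    Entries are payoffs to player 1. Assumes there is no saddlepoint, so that
    both players mix strictly. Returns (p, q, value), where p is the probability
    of the first row and q the probability of the first column.
    """
    if has_saddlepoint([[a, b], [c, d]]):
        raise ValueError("game has a saddlepoint; solve it in pure strategies")
    a, b, c, d = (Fraction(x) for x in (a, b, c, d))
    denom = a - b - c + d        # nonzero when there is no saddlepoint
    p = (d - c) / denom          # row mix that makes both columns pay the same
    q = (d - b) / denom          # column mix that makes both rows pay the same
    value = (a * d - b * c) / denom
    return p, q, value


print(*solve_2x2_zerosum(1, -1, -1, 1))   # matching pennies: 1/2 1/2 0
```

For the made-up matrix [[3, -1], [-2, 4]], the same function returns p = 3/5, q = 1/2 and value 1. Calling it on the Bismarck Sea matrix raises an error, because that game is already solved by its saddlepoint.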

1.1.2 Nonzerosum games

In a nonzerosum game the sum of the players' payoffs does not have to be equal to zero. In particular, the interests of the players are not necessarily opposed.

A coordination problem

The story This example is based on Rasmusen (1989, p. 35). Two firms (Smith and Brown) decide whether to design the computers they sell to use large or small floppy disks. Both players will sell more computers if their disk drives are compatible. If they both choose small disks, the payoffs will be 2 for each. If they both choose large disks, the payoffs will be 1 for each. If they choose different sizes, the payoffs will be $-1$ for each.

A model This situation can be represented by the following table:

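$$
\begin{array}{c|cc}
 & \text{Small} & \text{Large} \\ \hline
\text{Small} & 2,\ 2 & -1,\ -1 \\
\text{Large} & -1,\ -1 & 1,\ 1
\end{array}
$$

In this representation player 1 (e.g., Smith) chooses a row and player 2 (Brown) chooses a column. Each cell lists the payoffs as (player 1, player 2).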


Solutions Obviously, neither player has a dominant choice (row or column). The combinations (Small, Small) and (Large, Large), however, are special in the following sense. If player 2 believes that player 1 plays Small, then Small is also optimal for player 2, and vice versa. The same holds true for the combination (Large, Large). Such combinations are called Nash equilibria (Nash, 1951).

Suppose the game is extended by allowing randomization. Then the combination in which each player plays Small with probability $\tfrac{2}{5}$ and Large with probability $\tfrac{3}{5}$ is again a Nash equilibrium (in mixed strategies). This can be seen as follows. Suppose player 1 believes that player 2 will play according to these probabilities. Then player 1's first row Small yields an expected payoff of $2 \cdot \tfrac{2}{5} + (-1) \cdot \tfrac{3}{5} = \tfrac{1}{5}$. The second row Large also yields an expected payoff of $(-1) \cdot \tfrac{2}{5} + 1 \cdot \tfrac{3}{5} = \tfrac{1}{5}$. Hence, player 1 is indifferent between these rows: any randomization yields the same expected payoff of $\tfrac{1}{5}$, in particular the mixed strategy under consideration. An analogous argument can be given with the roles of the players reversed. So these mixed strategies are mutually optimal, i.e., they form a Nash equilibrium in mixed strategies.

Nash (1951) showed that a Nash equilibrium in mixed strategies always exists in finite games like this one. The proof is based on Brouwer's or Kakutani's fixed point theorem. More generally, the problem with Nash equilibrium is multiplicity rather than existence. Much of the literature on noncooperative game theory is concerned with refining the Nash equilibrium concept, in order to get rid of part or all of the multiplicity; see, in particular, van Damme (1995).
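The indifference argument works for any 2×2 bimatrix game with a completely mixed equilibrium. The Python sketch below is an illustration, and its function names are hypothetical. It first lists the pure Nash equilibria by checking mutual best replies. It then computes the mixed equilibrium from the two indifference conditions, using the payoffs of the coordination game.

```python
from fractions import Fraction
from itertools import product

# Coordination game; index 0 = Small, 1 = Large.
A = [[2, -1], [-1, 1]]   # payoffs to player 1 (row player)
B = [[2, -1], [-1, 1]]   # payoffs to player 2 (column player)


def pure_nash(A, B):
    """Cells (i, j) where row i is a best reply to column j and vice versa."""
    rows, cols = range(len(A)), range(len(A[0]))
    return [
        (i, j)
        for i, j in product(rows, cols)
        if A[i][j] == max(A[r][j] for r in rows)
        and B[i][j] == max(B[i][s] for s in cols)
    ]


def mixed_2x2(A, B):
    """Completely mixed equilibrium of a 2x2 bimatrix game via indifference.

    Returns (p, q): p = P(player 1 plays row 0), q = P(player 2 plays column 0).
    Assumes both denominators are nonzero and the results lie strictly in (0, 1).
    """
    # q makes player 1 indifferent: q*A00 + (1-q)*A01 == q*A10 + (1-q)*A11
    q = Fraction(A[1][1] - A[0][1], A[0][0] - A[0][1] - A[1][0] + A[1][1])
    # p makes player 2 indifferent: p*B00 + (1-p)*B10 == p*B01 + (1-p)*B11
    p = Fraction(B[1][1] - B[1][0], B[0][0] - B[1][0] - B[0][1] + B[1][1])
    return p, q


print(pure_nash(A, B))                    # [(0, 0), (1, 1)]
p, q = mixed_2x2(A, B)
print(p, q)                               # 2/5 2/5
print(q * A[0][0] + (1 - q) * A[0][1])    # 1/5: expected payoff of Small for player 1
```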

The Cournot game

The story In a standard version of the Cournot game (Cournot, 1838), two firms sell a homogeneous product. They compete on the market by supplying quantities of this product. The total quantity supplied determines the price at which the market clears.

A model The strategy sets of the players are $S_1 = S_2 = [0, \infty)$. A strategy $q_i$ for firm $i$ is interpreted as: firm $i$ offers amount $q_i$ of the product on the market. The price-demand function (market-clearing price) is $p = P(q_1 + q_2) = a - q_1 - q_2$, where $a > 0$ is a given fixed number. We further assume that the marginal costs of producing the product are equal to $c$ for both firms, where $0 \le c < a$. The payoff functions of the players are given by the profit functions

$$
K_1(q_1, q_2) = q_1(a - q_1 - q_2) - c q_1
$$

and

$$
K_2(q_1, q_2) = q_2(a - q_1 - q_2) - c q_2.
$$

[It is implicitly assumed here that the total quantity offered stays below $a$; in the equilibrium analysis to follow this will be the case.]

Solutions As in the previous example, a Nash equilibrium is a pair of strategies which are best replies to each other. Thus, a Nash equilibrium is a pair $(q_1^*, q_2^*)$ such that

$$
\begin{aligned}
K_1(q_1^*, q_2^*) &\ge K_1(q_1, q_2^*) \quad \text{for all } q_1 \in S_1, \\
K_2(q_1^*, q_2^*) &\ge K_2(q_1^*, q_2) \quad \text{for all } q_2 \in S_2.
\end{aligned}
$$

In the economic literature, such a pair $(q_1^*, q_2^*)$ is usually called a Cournot equilibrium. Alternatively, one may construct a model with prices instead of quantities as strategies; a Nash equilibrium is then called a Bertrand equilibrium.

In order to calculate a Cournot equilibrium, we first determine player 1's reaction function. This is obtained by solving, for each fixed $q_2 \ge 0$, the problem

$$
\max_{q_1 \ge 0} K_1(q_1, q_2).
$$

Assume an interior maximum. The first-order condition $a - 2q_1 - q_2 - c = 0$ then shows that this maximum is attained at $q_1 = \tfrac{1}{2}(a - c - q_2)$. By a symmetric argument we obtain for player 2: $q_2 = \tfrac{1}{2}(a - c - q_1)$. The Cournot equilibrium is the unique point of intersection $(q_1^*, q_2^*)$ of the reaction functions. It is not hard to verify that

$$
q_1^* = q_2^* = \frac{a - c}{3}.
$$
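The intersection of the reaction functions can also be approximated numerically. The Python sketch below uses the illustrative values a = 10 and c = 1, which are our choice and not from the text. Both firms repeatedly switch to their best reply $\tfrac{1}{2}(a - c - q)$ to the other firm's current quantity. This map shrinks distances by a factor 1/2, so the iteration converges to $(a - c)/3$. It is only a numerical check, not the derivation used above.

```python
def best_reply(q_other: float, a: float, c: float) -> float:
    """Maximizer of q * (a - q - q_other) - c * q over q >= 0."""
    return max(0.0, (a - c - q_other) / 2)


def cournot(a: float, c: float, tol: float = 1e-12, max_iter: int = 1000):
    """Iterate simultaneous best replies until the quantities stop changing."""
    q1 = q2 = 0.0
    for _ in range(max_iter):
        new_q1, new_q2 = best_reply(q2, a, c), best_reply(q1, a, c)
        if abs(new_q1 - q1) < tol and abs(new_q2 - q2) < tol:
            return new_q1, new_q2
        q1, q2 = new_q1, new_q2
    raise RuntimeError("best-reply iteration did not converge")


a, c = 10.0, 1.0                   # illustrative values with 0 <= c < a
q1, q2 = cournot(a, c)
print(round(q1, 6), round(q2, 6))  # 3.0 3.0, i.e. (a - c) / 3
```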

Another solution concept applied in duopoly theory is that of Stackelberg equilibrium. In such an equilibrium one player (say firm 1) is regarded as the leader, while the other player (firm 2) is the follower. This means that player 1 moves first, while player 2 observes player 1's choice and reacts optimally. Player 1 knows this and chooses $q_1$ so as to maximize profits. In order to calculate the Stackelberg equilibrium, we thus plug player 2's reaction function into player 1's profit function and maximize over $q_1$, i.e.,

$$
\max_{q_1 \ge 0} K_1(q_1, R(q_1)), \qquad \text{where } R(q_1) = \tfrac{1}{2}(a - c - q_1).
$$

If $\hat q_1$ is a solution to this maximization problem, then the pair $(\hat q_1, \hat q_2)$, where $\hat q_2 = R(\hat q_1)$, is a Stackelberg equilibrium. Since $K_1(q_1, R(q_1)) = \tfrac{1}{2} q_1 (a - c - q_1)$ for $0 \le q_1 \le a - c$, one may verify that $\hat q_1 = \frac{a - c}{2}$ and $\hat q_2 = \frac{a - c}{4}$.
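The leader's problem can be checked numerically in the same illustrative setting (a = 10, c = 1, our choice). The Python sketch below substitutes the reaction function R into firm 1's profit and maximizes the result by ternary search on $[0, a - c]$. Ternary search is valid here because $K_1(q_1, R(q_1))$ is concave on that interval.

```python
def profit(q_own: float, q_other: float, a: float, c: float) -> float:
    """K_i(q_1, q_2) = q_i * (a - q_1 - q_2) - c * q_i."""
    return q_own * (a - q_own - q_other) - c * q_own


def reaction(q1: float, a: float, c: float) -> float:
    """Follower's reaction function R(q1) = (a - c - q1) / 2, clipped at zero."""
    return max(0.0, (a - c - q1) / 2)


def stackelberg(a: float, c: float, iters: int = 200):
    """Maximize the leader's profit K1(q1, R(q1)) over [0, a - c] by ternary search."""

    def leader_profit(q1: float) -> float:
        return profit(q1, reaction(q1, a, c), a, c)

    lo, hi = 0.0, a - c
    for _ in range(iters):
        m1, m2 = lo + (hi - lo) / 3, hi - (hi - lo) / 3
        if leader_profit(m1) < leader_profit(m2):
            lo = m1      # the maximizer lies to the right of m1
        else:
            hi = m2      # the maximizer lies to the left of m2
    q1 = (lo + hi) / 2
    return q1, reaction(q1, a, c)


q1, q2 = stackelberg(10.0, 1.0)      # illustrative values a = 10, c = 1
print(round(q1, 4), round(q2, 4))    # 4.5 2.25
```

Compared with the Cournot quantities (3, 3) for the same a and c, the leader produces more and the follower less.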

1.1.3 Games in extensive form

With one exception, all games considered so far are static one-shot games, where the players choose simultaneously. In particular, they do not know each other's choices (but may form beliefs about these). Such games exhibit imperfect information. The exception is the Stackelberg equilibrium. It is not so much a different solution concept as a different game: the leader moves first, and the follower observes this move and then makes his choice. Such a game is therefore said to have perfect information.

In this section, more generally, games in extensive form are considered, which enable us to model such sequential moves.


An entry deterrence game

The story An old question in industrial organization is whether an incumbent monopolist can maintain his position by threatening to start a price war against any new firm that enters the market. In order to analyse this question, consider the following game. There are two players, the entrant and the incumbent. The entrant decides whether to Enter (E) or to Stay Out (O). If the entrant enters, the incumbent can Collude (C) with him, or Fight (F) by cutting the price drastically. The payoffs are as follows. Market profits are 100 at the monopoly price and 0 at the fighting price. Entry costs 10. Collusion shares the profits evenly.

A model The structure of this game and its possible moves and payoffs are depicted in Figure 1.1(a). A strategy in this game is a complete plan to play the game. This should be a plan, devised before the actual start of the game, that specifies what a player should do in every possible contingency of the game. In this case, the entrant has only two strategies (Enter and Stay Out), and the incumbent firm also has only two strategies (Collude and Fight). Given these strategies, there is a corresponding simultaneous game, called the strategic or normal form of the game, as follows:

$$
\begin{array}{l|cc}
 & \text{Collude} & \text{Fight} \\ \hline
\text{Enter} & 40,\ 50 & -10,\ 0 \\
\text{Stay Out} & 0,\ 100 & 0,\ 100
\end{array}
$$

Solutions From the strategic form of the game it follows that there are two Nash equilibria, limiting attention to pure strategies (i.e., without randomization). They are (Enter, Collude) and (Stay Out, Fight). In the latter Nash equilibrium one can imagine the incumbent firm threatening the potential entrant with a price war in case that firm dares to enter. This threat, however, is not credible: once the firm has entered, it is better for the incumbent to collude (50 rather than 0). Indeed, performing backward induction in the game tree in Figure 1.1(a) yields only the equilibrium (Enter, Collude). The equilibrium (Stay Out, Fight) does not survive backward induction or, in game theoretic parlance, is not subgame perfect. Subgame perfectness (Selten, 1965, 1975) is one of the main refinements of the Nash equilibrium concept.
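Both observations can be reproduced mechanically. The Python sketch below is an illustration with our own function names. It enumerates the pure Nash equilibria of the strategic form above by checking mutual best replies. It then applies backward induction to the tree in Figure 1.1(a): first the incumbent's best reply after Enter, then the entrant's choice given that reply.

```python
from itertools import product

ENTRANT = ["Enter", "Stay Out"]
INCUMBENT = ["Collude", "Fight"]

# Strategic form of the entry deterrence game: (entrant, incumbent) payoffs.
PAYOFFS = {
    ("Enter", "Collude"): (40, 50),
    ("Enter", "Fight"): (-10, 0),
    ("Stay Out", "Collude"): (0, 100),
    ("Stay Out", "Fight"): (0, 100),
}


def pure_nash(rows, cols, u):
    """Strategy pairs (r, k) that are mutual best replies in the table u."""
    equilibria = []
    for r, k in product(rows, cols):
        row_best = max(u[(s, k)][0] for s in rows)
        col_best = max(u[(r, t)][1] for t in cols)
        if u[(r, k)][0] == row_best and u[(r, k)][1] == col_best:
            equilibria.append((r, k))
    return equilibria


def backward_induction(u):
    """Solve the tree of Figure 1.1(a): the incumbent moves only after Enter."""
    # Incumbent's optimal reply at his decision node, which is reached after Enter.
    reply = max(INCUMBENT, key=lambda k: u[("Enter", k)][1])
    # The entrant compares entering (anticipating that reply) with staying out.
    enter_payoff = u[("Enter", reply)][0]
    stay_payoff = u[("Stay Out", reply)][0]   # (0, 100) whatever the incumbent's plan
    move = "Enter" if enter_payoff > stay_payoff else "Stay Out"
    return move, reply


print(pure_nash(ENTRANT, INCUMBENT, PAYOFFS))  # [('Enter', 'Collude'), ('Stay Out', 'Fight')]
print(backward_induction(PAYOFFS))             # ('Enter', 'Collude')
```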

Entry deterrence with incomplete information

The story Consider the following variation on the foregoing entry deterrence model. Suppose that with 50% probability the incumbent's payoff from Fight (F) is equal to some amount $x$ rather than the 0 above. Suppose further that both firms know this, but that the true payoff is only observed by the entrant. This situation might arise, for instance, if the technology or cost structure of the entrant firm is private information, while both firms would make the same estimate of the associated probabilities.

[Figure 1.1: Entry deterrence games. Panel (a): game tree of the entry deterrence game; panel (b): game tree with an initial 50%/50% move of Nature (N).]

A model A representation of this game is given in Figure 1.1(b). The uncertainty is modeled by a chance move, or move of Nature, at the beginning of the game. The potential entrant observes this move but the incumbent does not, as is represented by the dashed line. So this is a game of imperfect information and even of incomplete information. The latter means that there is a move of Nature whose outcome is not observed by at least one player who moves after the move of Nature. The idea of modeling incomplete information in a game in this way is basically due to Harsanyi (1967-8).

In this game the entrant now has four strategies, because he has two choices at each of the two possible outcomes of Nature's move. The incumbent still has two strategies; since he does not observe Nature's move, his choice cannot depend on it. To determine the strategic form of the game one has to take expected outcomes. This results in the following table.

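$$
\begin{array}{l|cc}
 & \text{Collude} & \text{Fight} \\ \hline
\text{Enter, Enter} & 40,\ 50 & -10,\ \tfrac{x}{2} \\
\text{Enter, Stay Out} & 20,\ 75 & -5,\ 50 \\
\text{Stay Out, Enter} & 20,\ 75 & -5,\ \tfrac{100 + x}{2} \\
\text{Stay Out, Stay Out} & 0,\ 100 & 0,\ 100
\end{array}
$$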

The two moves in each strategy of the entrant refer to the upper and lower moves of Nature in Figure 1.1(b), respectively.

Solutions Again there are two Nash equilibria in pure strategies, namely ((Enter, Enter), Collude), provided $x \le 100$, and ((Stay Out, Stay Out), Fight). In particular, if $x$ is large, say $x > 50$, it is no longer possible to exclude the latter equilibrium by simple backward induction.

The concept of sequential or perfect Bayesian equilibrium¹ (Kreps and Wilson, 1982) handles situations such as this one as follows. Suppose that, after observing Enter, the incumbent has a belief $p$ that the entrant is of the 0-type and a belief $1 - p$ that he is of the $x$-type. Given these beliefs, Fight is at least as good as Collude if the expected payoff from Fight is at least as large as the expected payoff from Collude. That is the case if $(1 - p)x \ge 50$, or $p \le \frac{x - 50}{x}$. We say that ((Stay Out, Stay Out), Fight) is a sequential equilibrium under these beliefs of the incumbent. Later literature focuses on refinements (additional conditions) on these beliefs, e.g., the Intuitive Criterion (Cho and Kreps, 1987).
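The expected payoffs in the table and the belief threshold can be computed directly. The Python sketch below is our own illustration and does three things:

- it builds the strategic form for a given $x$ by averaging the payoffs of the two equally likely moves of Nature;
- it lists the pure Nash equilibria;
- it returns the threshold $(x - 50)/x$, which assumes $x > 50$.

The values $x = 80$ and $x = 120$ are arbitrary test values.

```python
from fractions import Fraction
from itertools import product

MOVES = ["Enter", "Stay Out"]
REPLIES = ["Collude", "Fight"]


def terminal(move, reply, fight_payoff):
    """(entrant, incumbent) payoff at the end of one branch of Figure 1.1(b)."""
    if move == "Stay Out":
        return (0, 100)
    if reply == "Collude":
        return (40, 50)
    return (-10, fight_payoff)


def strategic_form(x):
    """Expected payoffs; Nature picks the 0-type (upper) or x-type (lower), each w.p. 1/2."""
    table = {}
    for upper, lower in product(MOVES, MOVES):
        for reply in REPLIES:
            e0, i0 = terminal(upper, reply, 0)
            ex, ix = terminal(lower, reply, x)
            table[((upper, lower), reply)] = (Fraction(e0 + ex) / 2, Fraction(i0 + ix) / 2)
    return table


def pure_nash(table):
    """Pairs (entrant strategy, incumbent reply) that are mutual best replies."""
    rows = list(product(MOVES, MOVES))
    return [
        (r, k)
        for r, k in product(rows, REPLIES)
        if table[(r, k)][0] == max(table[(s, k)][0] for s in rows)
        and table[(r, k)][1] == max(table[(r, t)][1] for t in REPLIES)
    ]


def belief_threshold(x):
    """Largest belief p in the 0-type at which Fight is still at least as good as Collude (x > 50)."""
    return Fraction(x - 50, x)


for x in (80, 120):
    print(x, pure_nash(strategic_form(x)))
print(belief_threshold(80))   # 3/8
```

For x = 80 both equilibria from the text appear. For x = 120 only ((Stay Out, Stay Out), Fight) remains, in line with the condition $x \le 100$.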

1.2 Cooperative games

In a cooperative game the focus is on payoffs and coalitions rather than on strategies. The prevailing analysis has an axiomatic flavor, in contrast to the equilibrium analysis of noncooperative theory.

The examples discussed below are confined to so-called transferable utility games and to bargaining games.

1.2.1 Transferable utility games

A game with transferable utility, or TU-game, is a pair $(N, v)$. Here $N := \{1, 2, \ldots, n\}$ is the set of players and $v$ is a map assigning a real number $v(S)$ to every coalition $S \subseteq N$, with $v(\emptyset) = 0$. The number $v(S)$ is called the worth of coalition $S$.

The usual assumption is that the grand coalition forms, and the basic question is how to distribute its worth $v(N)$. One possible answer is the core, defined by

$$
C(N, v) = \Big\{ x \in \mathbb{R}^N \;\Big|\; \sum_{i \in S} x_i \ge v(S) \text{ for every nonempty coalition } S, \ \sum_{i \in N} x_i = v(N) \Big\}.
$$

The core (Gillies, 1953) of a TU-game $(N, v)$ is thus the set of all distributions of $v(N)$ such that no coalition has an incentive to split off. The core may be large, small, or empty. It is a polytope (defined by a finite number of linear inequalities), and elements of it can be determined by Linear Programming.

Other solution concepts are the Shapley value (Shapley, 1953) and the (pre)nucleolus (Schmeidler, 1969). Both the Shapley value and the nucleolus are single-valued and always exist. The nucleolus has the advantage of always being in the core, provided the core is nonempty. The Shapley value measures the average contribution of a player to all coalitions in the game, whereas the nucleolus minimizes the maximal dissatisfaction of coalitions. For both solutions axiomatic characterizations are available.²

¹ These concepts are not equivalent in general, but they are in this specific case.

² For completeness we also give the formal definitions here. Let $(N, v)$ be a TU-game. Take a vector $x \in \mathbb{R}^N$ with $\sum_{i \in N} x_i = v(N)$ and a coalition $\emptyset \ne S \ne N$. Let $e(S, x) = v(S) - \sum_{i \in S} x_i$. Let $e(x)$ be the $(2^n - 2)$-dimensional vector consisting of the numbers $e(S, x)$ rearranged in (weakly) decreasing order. Then the (pre)nucleolus of the game $(N, v)$ is the vector that lexicographically minimizes $e(\cdot)$ over the set of all efficient vectors, i.e., vectors $x$ with $\sum_{i \in N} x_i = v(N)$.


power

1

2

3

100 30

140 20

50"""""

aaaaa

bbbbb

!!!!!

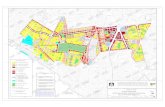

Figure 1.2: Three cooperating communities

Three cooperating communities

The story Communities 1, 2 and 3 want to be connected with a nearby power source. The possible transmission links and their costs are shown in Figure 1.2. Each community can hire any of the transmission links.

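A model The cost game $(N, c)$ associated with this situation is given by $N = \{1, 2, 3\}$ and the first two lines of the next table.

$$
\begin{array}{l|cccccccc}
S & \emptyset & \{1\} & \{2\} & \{3\} & \{1,2\} & \{1,3\} & \{2,3\} & \{1,2,3\} \\ \hline
c(S) & 0 & 100 & 140 & 130 & 150 & 130 & 150 & 150 \\
v(S) & 0 & 0 & 0 & 0 & 90 & 100 & 120 & 220
\end{array}
$$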

The numbers $c(S)$ are obtained by calculating the cheapest routes connecting the communities in coalition $S$ with the power source. The game $(N, v)$ in the third line of the table is the cost savings game corresponding to $(N, c)$, determined by

$$
v(S) := \sum_{i \in S} c(\{i\}) - c(S) \quad \text{for each } S \in 2^N.
$$

The cost savings $v(S)$ of coalition $S$ is the difference between the costs when all members of $S$ work alone and the costs when all members of $S$ work together.
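The third line of the table can be derived mechanically from the second. Below is a minimal Python sketch that does this. The cost figures are copied from the table, and representing coalitions as frozensets is our own choice.

```python
from itertools import combinations

# Cost game of the three communities: c(S) for every coalition S (from the table).
cost = {
    frozenset(): 0,
    frozenset({1}): 100, frozenset({2}): 140, frozenset({3}): 130,
    frozenset({1, 2}): 150, frozenset({1, 3}): 130, frozenset({2, 3}): 150,
    frozenset({1, 2, 3}): 150,
}


def savings_game(cost):
    """v(S) = sum of the stand-alone costs c({i}) over i in S, minus c(S)."""
    return {S: sum(cost[frozenset({i})] for i in S) - cost[S] for S in cost}


v = savings_game(cost)
for size in range(4):
    for S in combinations([1, 2, 3], size):
        print(set(S) or "{}", v[frozenset(S)])
```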

The Shapley value assigns to player $i \in N$ the amount

$$
\sum_{S \subseteq N :\, i \notin S} \frac{|S|!\,(|N| - |S| - 1)!}{|N|!}\,\big[v(S \cup \{i\}) - v(S)\big].
$$


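$$
\begin{array}{l|ccc}
 & \text{Mon} & \text{Tue} & \text{Wed} \\ \hline
\text{Adams} & 2 & 4 & 8 \\
\text{Benson} & 10 & 5 & 2 \\
\text{Cooper} & 10 & 6 & 4
\end{array}
$$

Table 1.1: Preferences for a dentist appointment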

Solutions The core of this game is the convex hull of the payoff vectors (100, 120, 0), (0, 120, 100), (0, 90, 130), (90, 0, 130), and (100, 0, 120). The Shapley value is the payoff vector (65, 75, 80), which is contained in the core. The nucleolus is the payoff vector $(56\tfrac{2}{3}, 76\tfrac{2}{3}, 86\tfrac{2}{3})$.
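The Shapley value and the core condition are easy to evaluate for small games. The Python sketch below is an illustration, and the helper names are ours. It implements the subset formula for the Shapley value given above and a core membership test taken from the definition of $C(N, v)$. Both are applied to the cost savings game. The code uses exact fractions and enumerates all coalitions, so it is only practical for a handful of players.

```python
from fractions import Fraction
from itertools import combinations
from math import factorial


def shapley(players, v):
    """Shapley value via the subset formula; v maps frozensets to worths, v(empty) = 0."""
    n = len(players)
    value = {}
    for i in players:
        others = [j for j in players if j != i]
        total = Fraction(0)
        for size in range(n):
            weight = Fraction(factorial(size) * factorial(n - size - 1), factorial(n))
            for members in combinations(others, size):
                S = frozenset(members)
                total += weight * (v[S | {i}] - v[S])
        value[i] = total
    return value


def in_core(x, players, v):
    """Efficiency, plus sum of x_i over S >= v(S) for every nonempty coalition S."""
    if sum(x[i] for i in players) != v[frozenset(players)]:
        return False
    return all(
        sum(x[i] for i in S) >= v[frozenset(S)]
        for size in range(1, len(players) + 1)
        for S in combinations(players, size)
    )


# Cost savings game of the three communities (third line of the table).
v = {frozenset(S): w for S, w in [
    ((), 0), ((1,), 0), ((2,), 0), ((3,), 0),
    ((1, 2), 90), ((1, 3), 100), ((2, 3), 120), ((1, 2, 3), 220),
]}
phi = shapley([1, 2, 3], v)
print({i: str(x) for i, x in phi.items()})
print(in_core(phi, [1, 2, 3], v))
```

The same `in_core` test confirms that the five vectors listed above and the nucleolus satisfy the core constraints.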

The glove game

The story Each player owns either a right-hand or a left-hand glove. One pair of gloves is worth one guilder. The players can form coalitions and pairs of gloves.

A model Let $N = \{1, 2, \ldots, n\}$ be divided into two disjoint subsets $L$ and $R$. Members of $L$ possess a left-hand glove, members of $R$ a right-hand glove. The situation above can be described as a TU-game $(N, v)$ where

$$
v(S) := \min\{|L \cap S|, |R \cap S|\}
$$

for each $S \in 2^N$. (The number of elements in a finite set $S$ is denoted by $|S|$.)

Solutions Suppose that $0 < |R| < |L|$. The core of this game consists of one payoff vector, namely the vector $x \in \mathbb{R}^n$ with $x_i = 0$ if $i \in L$ and $x_i = 1$ if $i \in R$. This is also the nucleolus. The Shapley value also gives something to the players in $L$. For example, if $n = 3$, $R = \{1\}$ and $L = \{2, 3\}$, then player 1 obtains $\tfrac{2}{3}$ and players 2 and 3 each obtain $\tfrac{1}{6}$.
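The Shapley value can equivalently be computed as a player's marginal contribution averaged over all orderings of the players, which matches its description as an average contribution. The Python sketch below is an illustration with our own function names. It uses this ordering form for the glove game with $n = 3$, $R = \{1\}$ and $L = \{2, 3\}$.

```python
from fractions import Fraction
from itertools import permutations


def shapley_by_orderings(players, v):
    """Average marginal contribution of each player over all orderings of the players."""
    totals = {i: Fraction(0) for i in players}
    orderings = list(permutations(players))
    for order in orderings:
        before = frozenset()
        for i in order:
            totals[i] += v(before | {i}) - v(before)
            before = before | {i}
    return {i: t / len(orderings) for i, t in totals.items()}


def glove_game(left, right):
    """Return v with v(S) = min(|L & S|, |R & S|): the number of pairs S can form."""
    def v(S):
        return min(len(S & left), len(S & right))
    return v


phi = shapley_by_orderings([1, 2, 3], glove_game(left={2, 3}, right={1}))
print({i: str(x) for i, x in phi.items()})   # {1: '2/3', 2: '1/6', 3: '1/6'}
```

Player 1 adds a pair whenever he is not first in the ordering, which happens in 4 of the 6 orderings. A left-glove owner adds a pair only when he directly follows player 1 with the other left-glove owner not yet present.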

A permutation game

The story (From Curiel, 1997, p. 54) Mr. Adams, Mrs. Benson, and Mr. Cooper have appointments with the dentist on Monday, Tuesday, and Wednesday, respectively. This schedule does not necessarily match their preferences, due to different urgencies and other factors. These preferences (expressed in numbers) are given in Table 1.1.

A model This situation gives rise to a game in which coalitions can gain by reshuffling their appointments. For instance, Adams (player 1) and Benson (player 2) can exchange their appointments and obtain a total of 14 instead of 7: Adams on Tuesday yields 4 and Benson on Monday yields 10. A complete description of the resulting TU-game is given in Table 1.2.


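$$
\begin{array}{l|ccccccc}
S & \{1\} & \{2\} & \{3\} & \{1,2\} & \{1,3\} & \{2,3\} & \{1,2,3\} \\ \hline
v(S) & 2 & 5 & 4 & 14 & 18 & 9 & 24
\end{array}
$$

Table 1.2: A permutation game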

Solutions The core of this game is the convex hull of the vectors (15, 5, 4), (14, 6, 4), (8, 6, 10), and (9, 5, 10). The Shapley value is the vector $(9\tfrac{1}{2}, 6\tfrac{1}{2}, 8)$, and the nucleolus is the vector $(11\tfrac{1}{2}, 5\tfrac{1}{2}, 7)$.

1.2.2 Bargaining games

The general model in cooperative game theory is that of a nontransferable utility game, where the possible payoffs for each coalition are described by a set. Such games derive, for instance, from classical exchange economies. TU-games as discussed above are a special case: for a game $(N, v)$ and each coalition $S$, the set of possible payoffs is the halfspace $\{x \in \mathbb{R}^n \mid \sum_{i \in S} x_i \le v(S)\}$. Another special subclass is formed by pure bargaining games, where intermediate coalitions (coalitions with more than one but fewer than all players) do not play a role.

The discussion of general cooperative games is beyond the scope of this introductory text. An example of a bargaining game, however, is discussed next.

A division problem

The story Consider the following situation. Two players have to agree on the division of one unit of a perfectly divisible good. Suppose they reach an agreement, say $(\alpha, \beta)$ where $\alpha, \beta \ge 0$ and $\alpha + \beta \le 1$. Then they split up the one unit according to this agreement; otherwise, they both get nothing. The players have preferences for the good, described by utility functions.

The model To fix ideas, assume that player 1 has utility function $u_1(\alpha) = \alpha$ and player 2 has utility function $u_2(\beta) = \sqrt{\beta}$. Thus, a distribution $(\alpha, 1 - \alpha)$ of the good leads to a corresponding pair of utilities $(u_1(\alpha), u_2(1 - \alpha)) = (\alpha, \sqrt{1 - \alpha})$. By letting $\alpha$ range from 0 to 1 we obtain all utility pairs corresponding to all feasible distributions of the good, as in Figure 1.3. It is assumed that distributions summing to less than the whole unit are also possible. This yields the whole shaded region.

[Figure 1.3: A division problem. The shaded region of feasible utility pairs lies below the graph of $\alpha \mapsto \sqrt{1 - \alpha}$, for $0 \le \alpha \le 1$.]

A solution Nash (1950) proposed the following way to solve this bargaining problem: maximize the product of the players' utilities on the shaded area. Since this maximum will be reached on the boundary, the problem is equivalent to

$$
\max_{0 \le \alpha \le 1} \alpha \sqrt{1 - \alpha}.
$$

The maximum is obtained for $\alpha = \tfrac{2}{3}$: setting the derivative $\sqrt{1 - \alpha} - \frac{\alpha}{2\sqrt{1 - \alpha}}$ equal to zero gives $2(1 - \alpha) = \alpha$. So the solution of the bargaining problem in utilities equals $(\tfrac{2}{3}, \tfrac{1}{3}\sqrt{3})$. This implies that player 1 obtains $\tfrac{2}{3}$ of the one unit of the good, whereas player 2 obtains $\tfrac{1}{3}$. As described here, this solution comes out of the blue. Nash, however, provided an axiomatic foundation for this solution (which is usually called the Nash bargaining solution).
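The maximization can also be carried out numerically. The Python sketch below is an illustration, not Nash's axiomatic derivation. It maximizes the Nash product $\alpha\sqrt{1 - \alpha}$ over $[0, 1]$ by ternary search, which is valid because this function is unimodal on the interval.

```python
import math


def nash_product(alpha: float) -> float:
    """u1(alpha) * u2(1 - alpha) = alpha * sqrt(1 - alpha)."""
    return alpha * math.sqrt(1 - alpha)


def maximize_unimodal(f, lo: float, hi: float, iters: int = 200) -> float:
    """Ternary search: shrink [lo, hi] around the maximizer of a unimodal function f."""
    for _ in range(iters):
        m1, m2 = lo + (hi - lo) / 3, hi - (hi - lo) / 3
        if f(m1) < f(m2):
            lo = m1
        else:
            hi = m2
    return (lo + hi) / 2


alpha = maximize_unimodal(nash_product, 0.0, 1.0)
print(round(alpha, 4), round(math.sqrt(1 - alpha), 4))   # 0.6667 0.5774
```

The two printed numbers approximate the utility pair $(\tfrac{2}{3}, \tfrac{1}{3}\sqrt{3})$.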

1.3 Concluding remarks

This introduction to game theory has necessarily been very brief and incomplete. For one thing, it suggests a rather strict separation between cooperative and noncooperative models. In the literature, however, there are many relations between the two. Let us mention just a few examples.

There are many noncooperative bargaining models. Moreover, these models result in outcomes which are often also predicted by cooperative, axiomatic models. The best-known example of this is the Rubinstein alternating offers model (Rubinstein, 1982), the outcome of which is closely related to the Nash bargaining solution outcome. A recent extension of this is provided by Hart and Mas-Colell (1996).

By now, noncooperative implementations exist for most cooperative solution concepts. This means that a parallel noncooperative game is developed, the (Nash or other) equilibrium outcomes of which coincide with the payoffs resulting from the application of the cooperative solution under consideration.

Other topics on which this introduction has remained silent are the building of reputation and cooperation through repeated play, bounded rationality, evolutionary approaches, and the role of knowledge in games, to mention just a few.


1.4 References

References from the text

Cho, I.K., and D.M. Kreps (1987): Signalling Games and Stable Equilibria, Quarterly Journal of Economics, 102, 179–221.

Cournot, A. (1838): Recherches sur les Principes Mathématiques de la Théorie des Richesses. English translation (1897): Researches into the Mathematical Principles of the Theory of Wealth. New York: Macmillan.

Curiel, I. (1997): Cooperative Game Theory and Applications: Cooperative Games Arising from Combinatorial Optimization Problems. Dordrecht: Kluwer Academic Publishers.

van Damme, E. (1995): Stability and Perfection of Nash Equilibria. Berlin: Springer Verlag.

Gillies, D.B. (1953): Some Theorems on n-Person Games. Ph.D. Thesis, Princeton: Princeton University Press.

Harsanyi, J.C. (1967, 1968): Games with Incomplete Information Played by Bayesian Players, I, II, and III, Management Science, 14, 159–182, 320–334, 486–502.

Hart, S., and A. Mas-Colell (1996): Bargaining and Value, Econometrica, 64, 357–380.

Kreps, D., and R. Wilson (1982): Sequential Equilibria, Econometrica, 50, 863–894.

Nash, J.F. (1950): The Bargaining Problem, Econometrica, 18, 155–162.

Nash, J.F. (1951): Non-Cooperative Games, Annals of Mathematics, 54, 286–295.

von Neumann, J. (1928): Zur Theorie der Gesellschaftsspiele, Mathematische Annalen, 100, 295–320.

von Neumann, J., and O. Morgenstern (1944, 1947): Theory of Games and Economic Behavior. Princeton: Princeton University Press.

Rasmusen, E. (1989): Games and Information: An Introduction to Game Theory. Oxford: Basil Blackwell.

Rubinstein, A. (1982): Perfect Equilibrium in a Bargaining Model, Econometrica, 50, 97–109.

Schmeidler, D. (1969): The Nucleolus of a Characteristic Function Game, SIAM Journal on Applied Mathematics, 17, 1163–1170.

Selten, R. (1965): Spieltheoretische Behandlung eines Oligopolmodells mit Nachfrageträgheit, Zeitschrift für die gesamte Staatswissenschaft, 121, 301–324.

Selten, R. (1975): Reexamination of the Perfectness Concept for Equilibrium Points in Extensive Games, International Journal of Game Theory, 4, 25–55.

Shapley, L.S. (1953): A Value for n-Person Games, Annals of Mathematics Studies, 28.

Further reading

Aumann, R.J., and S. Hart, eds. (1992, 1994, 2002): Handbook of Game Theory with Economic Applications, Vols. 1, 2, and 3. Amsterdam: North-Holland.

Bierman, H.S., and L. Fernandez (1993): Game Theory with Economic Applications. Reading, Mass.: Addison-Wesley.

Binmore, K. (1992): Fun and Games: A Text on Game Theory. Lexington, Mass.: D.C. Heath and Company.

Brams, S.J. (1994): Theory of Moves. Cambridge, UK: Cambridge University Press.

Dixit, A., and B. Nalebuff (1991): Thinking Strategically. New York: Norton.

Friedman, J.W. (1986): Game Theory with Applications to Economics. Oxford: Oxford University Press.

Fudenberg, D., and J. Tirole (1991): Game Theory. Cambridge, Mass.: The MIT Press.

Gardner, R. (1995): Games for Business and Economics. New York: Wiley.

Hargreaves Heap, S.P., and Y. Varoufakis (1995): Game Theory: A Critical Introduction. London: Routledge.

Kreps, D.M. (1990): A Course in Microeconomic Theory. Princeton: Princeton University Press.

Luce, R.D., and H. Raiffa (1957): Games and Decisions: Introduction and Critical Survey. New York: Wiley.

Myerson, R.B. (1991): Game Theory: Analysis of Conflict. Cambridge, Mass.: Harvard University Press.

Osborne, M.J., and A. Rubinstein (1994): A Course in Game Theory. Cambridge, Mass.: The MIT Press.

Osborne, M.J. (2004): An Introduction to Game Theory. New York: Oxford University Press.

Owen, G. (1995): Game Theory. New York: Academic Press.

Peters, H., and K. Vrieze (1992): A Course in Game Theory. Aachen: Verlag der Augustinus Buchhandlung.

Shubik, M. (1982): Game Theory in the Social Sciences: Concepts and Solutions. Cambridge, Mass.: The MIT Press.

Shubik, M. (1984): A Game-Theoretic Approach to Political Economy. Cambridge, Mass.: The MIT Press.

Sutton, J. (1986): Non-Cooperative Bargaining Theory: An Introduction, Review of Economic Studies, 53, 709–724.

Thomas, L.C. (1986): Games, Theory and Applications. Chichester: Ellis Horwood Limited.

Weibull, J.W. (1995): Evolutionary Game Theory. Cambridge, Mass.: The MIT Press.

Young, H.P. (2004): Strategic Learning and its Limits. Oxford, UK: Oxford University Press.


Part I

Noncooperative Game Theory


Chapter 2

Nash Equilibrium

Game theory is a mathematical theory dealing with models of conflict and cooperation. In this first chapter of Part I we consider situations where several parties (called players) are involved in a conflict (called a game). We suppose that the players simultaneously choose an action and that the combination of actions chosen by the players determines a payoff for each player. Nash equilibrium is a basic concept in the theory developed for such games.

2.1 Strategic Games

A strategic game is a model of interactive decision-making in which each decision maker chooses his plan of action once and for all, and these choices are made simultaneously. The model consists of a finite set $N$ of players and, for each player $i$, a set $A_i$ of actions and a payoff function $u_i$ defined on the set $A = A_1 \times A_2 \times \cdots \times A_n$ of all possible combinations of actions of the players. Formally,

DEFINITION (strategic game). An $n$-person game in strategic form $G$ consists of

- a finite set $N = \{1, 2, \ldots, n\}$ of players;
- for each player $i \in N$ a nonempty set $A_i$ of actions available to this player;
- for each player $i \in N$ a (payoff) function $u_i$ defined on the set $A = \prod_{j \in N} A_j$; this function assigns to each $n$-tuple $a = (a_1, a_2, \ldots, a_n)$ of actions the real number $u_i(a)$.

We denote this game by $G = \langle N, (A_j)_{j \in N}, (u_j)_{j \in N} \rangle$ or more shortly by $G = \langle A, u \rangle$.

A play of the game $G$ proceeds as follows: each player chooses, independently of his opponents, one of his possible actions; if $a_i$ denotes the action chosen by player $i$, then this player obtains a payoff $u_i(a)$, where $a = (a_1, a_2, \ldots, a_n) \in A$ is a profile of actions. Under this interpretation each player is unaware, when choosing his action, of the choices being made by his opponents.

The fact that the payoff to a player in general depends on the actions of his opponents distinguishes a strategic game from a decision problem: each player may care not only about his own action but also about the actions taken by the other players.


EXAMPLE 1 Cournot Model of Duopoly

Suppose there are two producers of mineral water. If producer $i$ brings an amount of $q_i$ units on the market, then his costs are $c_i(q_i) \ge 0$ units. The price of mineral water depends on the total amount $q_1 + q_2$ brought on the market and is denoted by $p(q_1 + q_2)$. Following Cournot (1838) we suppose that the firms choose their quantities simultaneously. This duopoly situation can be modeled as the two-person game in strategic form $\langle A_1, A_2, u_1, u_2 \rangle$, where for each $i$

$$A_i = [0, \infty) \quad\text{and}\quad u_i(q_1, q_2) = q_i\,p(q_1 + q_2) - c_i(q_i).$$

EXERCISE 1 A painting is auctioned between two bidders. The worth of the painting for bidder $i$ equals $w_i$. Each bidder, independently of the other, makes a bid. The highest bidder obtains the painting for the amount bid by the other bidder. If the two bids are the same, the winner is determined by a lottery. Describe this situation as a two-person game in strategic form.

Although in our description of a game the players choose their actions simultaneously, it is also possible to model conflict situations where players act one by one. As the following example shows, this is just a matter of properly defining the actions of the players.

EXAMPLE 2 A tree game

Suppose that two players are dealing with the following situation.

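[Figure: game tree. Player 1 moves first and chooses L or R. After L, player 2 chooses $l_1$, with payoffs $(4, 1)$, or $r_1$, with payoffs $(3, 2)$. After R, player 2 chooses $l_2$, with payoffs $(4, 6)$, or $r_2$, with payoffs $(5, 3)$.]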

A play proceeds as follows. First player 1 decides to go to the left (L) or to the right (R). Then player 2 decides to go left or right. If both players have chosen to go left, then player 1 obtains 4 dollars and player 2 gets 1 dollar, etc. In this situation the behavior of player 2 clearly depends on the choice of player 1: if player 1 chooses L, then player 2 will prefer $r_1$; if player 1 chooses R, then player 2 prefers $l_2$. So if we want to analyze this situation we should define the actions of player 2 in such a way that this dependence is incorporated in one way or another. This can be done by representing the strategy of player 2 described before as the pair $(r_1, l_2)$.

This situation can be modeled as a two-person game in strategic form $\langle A_1, A_2, u_1, u_2 \rangle$, where

$$A_1 = \{L, R\} \quad\text{and}\quad A_2 = \{(l_1, l_2), (l_1, r_2), (r_1, l_2), (r_1, r_2)\}.$$

The payoffs are given in the following tableau:

         (l1, l2)   (l1, r2)   (r1, l2)   (r1, r2)
   L     (4, 1)     (4, 1)     (3, 2)     (3, 2)
   R     (4, 6)     (5, 3)     (4, 6)     (5, 3)
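The construction of $A_2$ and of the tableau can be mechanized: a strategy of player 2 picks one reply at each node where he moves, so $A_2$ is the Cartesian product of his reply sets. The sketch below assumes a hypothetical dictionary encoding of the tree (player 1's move, then player 2's replies with their payoff pairs) and uses `itertools.product` to enumerate player 2's strategies; it is meant only for two-stage trees like the one in Example 2.

```python
from itertools import product

# Hypothetical encoding of the tree in Example 2: for each move of player 1,
# the replies available to player 2 and the resulting payoff pairs (u1, u2).
TREE = {
    "L": {"l1": (4, 1), "r1": (3, 2)},
    "R": {"l2": (4, 6), "r2": (5, 3)},
}

def strategic_form(tree):
    """Turn a two-stage tree into a strategic game.

    A strategy of player 2 fixes one reply at every node where he moves,
    so it is a tuple (reply after the 1st move of player 1, reply after the 2nd, ...).
    """
    actions1 = list(tree)
    actions2 = list(product(*(tree[a1] for a1 in actions1)))
    payoffs = {}
    for a1 in actions1:
        for s2 in actions2:
            reply = s2[actions1.index(a1)]  # the reply that s2 prescribes after a1
            payoffs[(a1, s2)] = tree[a1][reply]
    return actions1, actions2, payoffs

A1, A2, U = strategic_form(TREE)
for a1 in A1:
    print(a1, [U[(a1, s2)] for s2 in A2])
```

The two printed rows reproduce the tableau above, with the columns in the order $(l_1, l_2), (l_1, r_2), (r_1, l_2), (r_1, r_2)$.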

EXERCISE 2 Four objects $O_1$, $O_2$, $O_3$ and $O_4$ have a different worth for two players 1 and 2:

                        O1   O2   O3   O4
   worth for player 1    1    2    3    4
   worth for player 2    2    3    4    1

Player 1 starts by choosing an object. After him player 2 chooses an object, followed by player 1 who takes his second object. Finally, player 2 gets the object that is left.

Show that this situation can be formulated as a two-person game by describing the actions available to the players.


2.2 Finite Two-Person Games

For the two-person game described in Example 2 both action sets are finite. Therefore we call this game a finite game.

DEFINITION (finite game). A game $\langle A, u \rangle$ is called a finite game if $A_i$ is a finite set for all $i$.

Obviously the games described in Example 1 and Exercise 1 are not finite, while the games introduced in Example 2 and Exercise 2 are finite.

EXAMPLE 3 Advertising

The two producers of mineral water we met in Example 1 are not allowed to cooperate. They both control one half of the market. At this moment they each earn 8 units of money per month. Both producers must decide at a certain moment (independently of each other) whether or not they want to start an advertising campaign. The price of the campaign is 2 units of money. We suppose that the market shares of both producers do not change if they take the same decision. Otherwise, the producer who has decided to start a campaign controls 75% of the market in the next month.

We can write down the consequences of the decisions of the producers in the following way (the first number is the payoff of the producer choosing a row, the second that of the producer choosing a column):

                  don't start   start
   don't start    (8, 8)        (4, 10)
   start          (10, 4)       (6, 6)

Of course we can index the two alternatives available to the producers:

1 : do not start an advertising campaign

2 : start an advertising campaign

This situation can be modeled as the finite two-person game $\langle A_1, A_2, u_1, u_2 \rangle$, where

$$A_1 = A_2 = \{1, 2\}$$

and for $i, j \in \{1, 2\}$

$$u_1(i, j) = a_{ij} \quad\text{and}\quad u_2(i, j) = b_{ij}.$$

Here $a_{ij}$ is the element in position $(i, j)$ of the matrix $A = \begin{bmatrix} 8 & 4 \\ 10 & 6 \end{bmatrix}$ and $b_{ij}$ is the element in position $(i, j)$ of the matrix $B = \begin{bmatrix} 8 & 10 \\ 4 & 6 \end{bmatrix}$.

Since the game in this example is completely determined by the two $2 \times 2$ matrices $A$ and $B$, we call this game a $2 \times 2$ bimatrix game and denote it by

$$(A, B) = \begin{bmatrix} (8, 8) & (4, 10) \\ (10, 4) & (6, 6) \end{bmatrix}.$$

The matrices $A$ and $B$ are called the payoff matrices of the players.


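Note that any finite two-person game $\langle A_1, A_2, u_1, u_2 \rangle$ with $|A_1| = m$ and $|A_2| = n$ can be seen as an $m \times n$ bimatrix game. In fact, in this game player 1 chooses a row and player 2 chooses a column. The general form of an $m \times n$ bimatrix game is

$$\begin{bmatrix} (a_{11}, b_{11}) & (a_{12}, b_{12}) & \cdots & (a_{1n}, b_{1n}) \\ (a_{21}, b_{21}) & (a_{22}, b_{22}) & \cdots & (a_{2n}, b_{2n}) \\ \vdots & \vdots & \ddots & \vdots \\ (a_{m1}, b_{m1}) & (a_{m2}, b_{m2}) & \cdots & (a_{mn}, b_{mn}) \end{bmatrix}.$$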

EXERCISE 3 Explain why the situation described in Exercise 2 can be modeled as a bimatrix game. What is the order of the game?

EXERCISE 4 Each of two firms has one job opening. Suppose that (for reasons not discussed here but relating to the value of filling each opening) the firms offer different wages: firm $i \in \{1, 2\}$ offers the wage $w_i$, where $\frac{1}{2}w_1 < w_2 < 2w_1$. Imagine that there are two workers, each of whom can apply to only one firm. The two workers simultaneously decide whether to apply to firm 1 or to firm 2. If only one worker applies to a given firm, that worker gets the job; if both workers apply to one firm, the firm hires one worker at random and the other worker is unemployed (which has a payoff of zero). Show that this situation can be formulated as a bimatrix game.

2.3 Nash Equilibrium

Suppose that you have to advise which action each player of a game should choose. Then of course each player must be willing to choose the action advised by you. Thus the advised action of a player must be a best response to the advised actions of the other players. In that case no player can gain by unilateral deviation from the advised action. We will call such advice a Nash equilibrium. Formally:


DEFINITION (Nash equilibrium). A Nash equilibrium of an $n$-person strategic game $G = \langle A, u \rangle$ is a profile $a^* \in A$ of actions with the property that for every player $i$

$$u_i(a_1^*, \ldots, a_{i-1}^*, a_i^*, a_{i+1}^*, \ldots, a_n^*) \ge u_i(a_1^*, \ldots, a_{i-1}^*, a_i, a_{i+1}^*, \ldots, a_n^*) \quad\text{for all } a_i \in A_i.$$

So if $a^*$ is a Nash equilibrium, no player $i$ has an action yielding a higher payoff to him than $a_i^*$ does, given that each opponent chooses his equilibrium action. Indeed, no player can profitably deviate, given the actions of the opponents.

It is useful to describe the concept of an equilibrium in terms of best responses. In order to do so we denote, for a game $G = \langle A, u \rangle$, the set of action profiles of the opponents of player $i$ by $A_{-i}$. So an element of this set is of the form

$$a_{-i} = (a_1, \ldots, a_{i-1}, a_{i+1}, \ldots, a_n).$$

For $a_{-i} \in A_{-i}$ the set

$$B_i(a_{-i}) = \{a_i \in A_i \mid u_i(a_i, a_{-i}) \ge u_i(a_i', a_{-i}) \text{ for all } a_i' \in A_i\}$$

is the set of best responses of player $i$ against $a_{-i}$. Here $(a_i', a_{-i})$ is the profile obtained from $a$ by replacing $a_i$ by $a_i'$.

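Obviously, a profile $a^*$ of actions is a Nash equilibrium if and only if

$$a_i^* \in B_i(a_{-i}^*) \quad\text{for all } i \in N.$$

Note that $(i^*, j^*)$ is a Nash equilibrium of an $m \times n$ bimatrix game $(A, B)$ if and only if

$$a_{i^*j^*} \ge a_{ij^*} \text{ for all } i \quad\text{and}\quad b_{i^*j^*} \ge b_{i^*j} \text{ for all } j.$$

So $a_{i^*j^*}$ is the biggest number in column $j^*$ of the matrix $A$, while $b_{i^*j^*}$ is the biggest number in row $i^*$ of the matrix $B$.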
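The column/row characterization just given translates directly into a brute-force search over all cells of a bimatrix game. The function below is a minimal sketch (the name `pure_nash_equilibria` is hypothetical); it reports cells with the 1-based row and column numbers used in the text, and it is applied here to the advertising game of Example 3.

```python
def pure_nash_equilibria(A, B):
    """Return all cells (i, j), numbered from 1, that are Nash equilibria of (A, B).

    Cell (i, j) qualifies when A[i][j] is a largest entry in column j of A
    and B[i][j] is a largest entry in row i of B.
    """
    m, n = len(A), len(A[0])
    equilibria = []
    for i in range(m):
        for j in range(n):
            best_in_column = all(A[i][j] >= A[k][j] for k in range(m))
            best_in_row = all(B[i][j] >= B[i][l] for l in range(n))
            if best_in_column and best_in_row:
                equilibria.append((i + 1, j + 1))
    return equilibria

# Advertising game of Example 3 (row/column 1 = don't start, 2 = start).
A = [[8, 4], [10, 6]]
B = [[8, 10], [4, 6]]
print(pure_nash_equilibria(A, B))
```

For the advertising game only $(2, 2)$, both producers starting a campaign, survives. Applied to the games in the examples that follow, the same check should report $(2, 2)$ for the Prisoners' Dilemma, $(1, 1)$ and $(2, 2)$ for the Battle of the Sexes, and no cell at all for Matching Pennies.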

EXAMPLE 4 The Prisoners Dilemma

Two suspects in a crime are arrested and are put into separate cells. The police lack sufficient evidence to convict the suspects, unless at least one confesses. If neither confesses, the suspects will both be convicted of a minor offense and sentenced to one month in jail. If both confess then both will be sentenced to jail for six months. If only one of them confesses, he will be freed and used as a witness against the other, who will receive a sentence of nine months: six for the crime and three for obstructing justice. We have summarized the data in the following tableau, where each payoff is minus the number of months in jail:

                    don't confess   confess
   don't confess    (-1, -1)        (-9, 0)
   confess          (0, -9)         (-6, -6)

This situation can be modeled as the $2 \times 2$ bimatrix game

$$\begin{bmatrix} (-1, -1) & (-9, 0) \\ (0, -9) & (-6, -6) \end{bmatrix},$$


where the first row/column corresponds to the decision not to confess and the second one to the decision to confess.

It is clear that whatever one player does, the other prefers confess to don't confess. Hence $(2, 2)$ is the unique Nash equilibrium of the game.

EXAMPLE 5 Battle of the Sexes

On an evening a man and a woman wish to go out together. The man prefers to attend the opera but the woman likes to go to a soccer match. Both would rather spend the evening together than apart. We suppose that the preferences of the man and woman can be described with the help of the payoffs represented in the following tableau:

             opera    match
   opera     (2, 1)   (0, 0)
   match     (0, 0)   (1, 2)

This situation can be modeled as the $2 \times 2$ bimatrix game

$$\begin{bmatrix} (2, 1) & (0, 0) \\ (0, 0) & (1, 2) \end{bmatrix}.$$

In this game the man is player 1 and the woman is player 2. Furthermore, the first row/column corresponds to the decision to attend the opera, while the other row/column corresponds to the decision to visit a soccer match. This game has two Nash equilibria: $(1, 1)$ and $(2, 2)$.

EXAMPLE 6 Matching Pennies

Each of two people chooses either Head or Tail. If the choices differ, person 1 pays person 2 one dollar; if they are the same, person 2 pays person 1 one dollar. This situation, where the interests of the players are diametrically opposed, can be modeled by the $2 \times 2$ bimatrix game

$$\begin{bmatrix} (1, -1) & (-1, 1) \\ (-1, 1) & (1, -1) \end{bmatrix},$$

where the first row/column corresponds to the decision of choosing Head. This strictly competitive game has no Nash equilibrium.

EXERCISE 5 Determine a Nash equilibrium of the bimatrix games in Exercise 3 and Exercise 4, respectively.

EXERCISE 6 Determine the Nash equilibria of the $3 \times 3$ bimatrix game

$$\begin{bmatrix} (2, 0) & (1, 1) & (4, 2) \\ (3, 4) & (1, 2) & (2, 3) \\ (1, 3) & (0, 2) & (3, 0) \end{bmatrix}.$$

EXAMPLE 7 A hide-and-seek game

Player 2 hides in one of three rooms numbered 1, 2 and 3. Player 1 tries to guess the number of the room chosen by player 2. If his first guess is correct, he receives one dollar from player 2. If his second guess is correct, he has to pay one dollar. Otherwise player 1 pays two dollars to player 2. We can summarize this game in one matrix:

                room 1   room 2   room 3
   (1 2 3)        1        -1       -2
   (1 3 2)        1        -2       -1
   (2 1 3)       -1         1       -2
   (2 3 1)       -2         1       -1
   (3 1 2)       -1        -2        1
   (3 2 1)       -2        -1        1

This matrix contains the payoffs to player 1, and the triple (1 2 3), for example, denotes the action of player 1 where room 1 is his first guess, room 2 his second one (if necessary) and room 3 his third one (if necessary). This game does not have any Nash equilibrium either.

EXAMPLE 8 Cournot Model of Duopoly

We consider a simplified version of the Cournot model discussed in Example 1. To be precise, we suppose that

$$p(q_1 + q_2) = \begin{cases} a - q_1 - q_2 & \text{if } q_1 + q_2 < a \\ 0 & \text{otherwise} \end{cases}$$

for some positive real number $a$. Furthermore, we assume that $c_i(q_i) = c\,q_i$ for $i = 1, 2$; that is, there are no fixed costs and the marginal cost is constant at $c$, where $0 < c < a$.

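The consequence of these assumptions is that the payoff function of player 1 in the corresponding two-person game is given by

$$u_1(q_1, q_2) = q_1\,p(q_1 + q_2) - c\,q_1 = \begin{cases} q_1[a - q_1 - q_2] - c\,q_1 & \text{if } q_1 + q_2 < a \\ -c\,q_1 & \text{if } q_1 + q_2 \ge a \end{cases} = \begin{cases} q_1[(a - c - q_2) - q_1] & \text{if } q_1 + q_2 < a \\ -c\,q_1 & \text{if } q_1 + q_2 \ge a. \end{cases}$$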

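Consider the intersection of the graph of the payoff function of player 1 with a vertical plane with equation $q_2 = b$ for some $b \in (0, a - c)$. Its positive part is the graph of the downward-opening parabola $q_1 \mapsto q_1[(a - c - b) - q_1]$, which vanishes at $q_1 = 0$ and $q_1 = a - c - b$ and is maximal halfway in between, at $q_1 = \frac{1}{2}(a - c - b)$. If $q_2 \ge a - c$, on the other hand, every $q_1 > 0$ yields a negative payoff, so $q_1 = 0$ is optimal.

[Figure: the graph of $u_1$ above the $(q_1, q_2)$-plane, with $a - c$ marked on both axes.]

Using these facts, we find that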

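$$B_1(q_2) = \begin{cases} 0 & \text{if } q_2 \ge a - c \\ \frac{1}{2}(a - c - q_2) & \text{if } q_2 < a - c \end{cases} = \max\{0, \tfrac{1}{2}(a - c - q_2)\}.$$

In a similar way one can show that

$$B_2(q_1) = \begin{cases} 0 & \text{if } q_1 \ge a - c \\ \frac{1}{2}(a - c - q_1) & \text{if } q_1 < a - c \end{cases} = \max\{0, \tfrac{1}{2}(a - c - q_1)\}.$$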

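In Figure 1 you find the two best-response sets

$$\mathcal{B}_1 = \{(q_1, q_2) \mid q_1 \in B_1(q_2)\} \quad\text{and}\quad \mathcal{B}_2 = \{(q_1, q_2) \mid q_2 \in B_2(q_1)\}.$$

[FIGURE 1: The best-response sets. Two panels in the $(q_1, q_2)$-plane, one showing $\mathcal{B}_1$ and one showing $\mathcal{B}_2$, with the values $a - c$ and $\frac{1}{2}(a - c)$ marked on the axes.]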


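Obviously, a Nash equilibrium of the game corresponds to an intersection of the two best-response sets. So in order to determine the unique equilibrium of the game we have to solve the system of equations

$$\begin{cases} q_1 = \frac{1}{2}(a - c - q_2) \\ q_2 = \frac{1}{2}(a - c - q_1). \end{cases}$$

Substituting the second equation into the first gives $q_1 = \frac{1}{2}(a - c) - \frac{1}{4}(a - c - q_1)$, i.e. $\frac{3}{4}q_1 = \frac{1}{4}(a - c)$. Hence solving this system yields the equilibrium $(q_1^*, q_2^*)$, where

$$q_1^* = q_2^* = \frac{1}{3}(a - c).$$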
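The text finds the equilibrium by solving the linear system directly. As an illustration only (this adjustment process is not part of the model in Example 8), one can also let both firms repeatedly best-respond to each other's previous quantity using $B_i(q_j) = \max\{0, \frac{1}{2}(a - c - q_j)\}$. In the region where the best responses are positive, each round multiplies the distance to $\frac{1}{3}(a - c)$ by $-\frac{1}{2}$, so the iterates approach the equilibrium. The values $a = 10$, $c = 1$ and the function names are hypothetical.

```python
def best_response(q_other: float, a: float, c: float) -> float:
    """Cournot best response max{0, (a - c - q_other)/2} from Example 8."""
    return max(0.0, 0.5 * (a - c - q_other))

def iterate_best_responses(a: float, c: float, q1: float = 0.0, q2: float = 0.0,
                           rounds: int = 60):
    """Let both firms simultaneously best-respond to the other's last quantity."""
    for _ in range(rounds):
        q1, q2 = best_response(q2, a, c), best_response(q1, a, c)
    return q1, q2

a, c = 10.0, 1.0  # illustrative numbers with 0 < c < a
q1, q2 = iterate_best_responses(a, c)
print(q1, q2, (a - c) / 3)
```

Both quantities end up (numerically) at $\frac{1}{3}(a - c) = 3$, the equilibrium found above.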

EXERCISE 7 Consider the Cournot duopoly model where the price is given by the equation $p(q_1 + q_2) = a - q_1 - q_2$, but firms have asymmetric marginal costs: $c_1$ for firm 1 and $c_2$ for firm 2. What is the Nash equilibrium if $0 < c_i < a/2$ for each firm $i$? What if $c_1 < c_2 < a$ but $2c_2 > a + c_1$?

EXERCISE 8 Players 1 and 2 are bargaining over how to split one dollar. Both players simultaneously name shares $s_1, s_2 \in [0, 1]$ they would like to have. If $s_1 + s_2 \le 1$, then the players receive the shares they named; otherwise both players receive nothing. What are the Nash equilibria of this game?

EXERCISE 9 Consider the two-person game $\langle A_1, A_2, u_1, u_2 \rangle$, where $A_1 = A_2 = [0, 1)$ and where for $(x, y) \in [0, 1) \times [0, 1)$

$$u_1(x, y) = x \quad\text{and}\quad u_2(x, y) = y.$$

Prove that this game does not have any Nash equilibrium.

EXERCISE 10 Suppose there are $n$ firms in the Cournot oligopoly model. Let $q_i$ denote the quantity produced by firm $i$. The price $p$ depends on the aggregate quantity $Q = q_1 + \cdots + q_n$ on the market and is given by

$$p(Q) = \begin{cases} a - Q & \text{if } Q < a \\ 0 & \text{otherwise.} \end{cases}$$

Assume that the total cost of firm $i$ from producing quantity $q_i$ is $c_i(q_i) = c\,q_i$. That is, there are no fixed costs and the marginal cost is constant at $c < a$. Following Cournot, suppose that the firms choose their quantities simultaneously.

a) Model this situation as an $n$-person game.

Let $(q_1^*, \ldots, q_n^*)$ be a Nash equilibrium of this game.

b) Prove that $q_i^* = \max\{0, \frac{1}{2}(a - c - Q_{-i}^*)\}$, where $Q_{-i}^* = \sum_{j \ne i} q_j^*$.

Let $S$ be the set of firms $i$ such that $q_i^* > 0$.

c) Prove that $q_i^* = q_j^*$ for all $i, j \in S$ and use this fact to prove that $S = \{1, 2, \ldots, n\}$.

d) Show that $q_i^* = \frac{1}{n+1}(a - c)$.

EXERCISE 11 We consider two finite versions of the Cournot duopoly model.

First, suppose each firm must choose either half the monopoly quantity, $q_m/2 = \frac{a - c}{4}$, or the Cournot equilibrium quantity $q_e = \frac{a - c}{3}$. No other quantities are feasible. Show that this two-action game is equivalent to the Prisoners' Dilemma: each firm has a strictly dominated strategy and both are worse off in equilibrium than they would be if they cooperated.

Second, suppose that each firm can choose either $q_m/2$, or $q_e$, or a third quantity $q'$. Find a value for $q'$ such that the game is equivalent to the Cournot model in Example 8, in the sense that $(q_e, q_e)$ is the unique Nash equilibrium and both firms are worse off in equilibrium than they would be if they cooperated, but neither firm has a strictly dominated strategy.

2.4 Applications

In this section, we consider a different model of how two duopolists might interact, based on Bertrand's (1883) suggestion that firms actually choose prices, rather than quantities as in Cournot's model.

EXAMPLE 9 Bertrand Model of Duopoly

We consider the case of differentiated products. If firms 1 and 2 choose prices $p_1$ and $p_2$, respectively, the quantity $q_1$ that consumers demand from firm 1 satisfies

$$q_1 = a - p_1 + b\,p_2$$

and the demand $q_2$ from firm 2 satisfies

$$q_2 = a - p_2 + b\,p_1.$$

Here $b > 0$ reflects the extent to which the product of one firm is a substitute for the product of the other firm. (This is an unrealistic demand function, because demand for the product of one firm is positive even when this firm charges an arbitrarily high price, provided that the other firm also charges a high enough price. As will become clear, the problem makes sense only if $b < 2$.) We assume that there are no fixed costs of production, that marginal costs are constant at $c < a$, and that the firms choose their prices simultaneously.

First we model the situation as a two-person game in strategic form $\langle A_1, A_2, u_1, u_2 \rangle$. Obviously, $A_i = [0, \infty)$ for each $i$. Next we take as payoff function for a firm just its profit. Hence

$$u_1(p_1, p_2) = q_1(p_1 - c) = (a - p_1 + b\,p_2)(p_1 - c)$$

and

$$u_2(p_1, p_2) = q_2(p_2 - c) = (a - p_2 + b\,p_1)(p_2 - c).$$

For fixed $p_2$, $u_1$ is a downward-opening parabola in $p_1$, maximal where $\partial u_1 / \partial p_1 = (a - p_1 + b\,p_2) - (p_1 - c) = 0$; the same reasoning applies to $u_2$. Since therefore

$$B_1(p_2) = \frac{1}{2}(a + b\,p_2 + c) \quad\text{and}\quad B_2(p_1) = \frac{1}{2}(a + b\,p_1 + c),$$

the price pair $(p_1^*, p_2^*)$ is a Nash equilibrium if it solves the system

$$\begin{cases} p_1 = \frac{1}{2}(a + b\,p_2 + c) \\ p_2 = \frac{1}{2}(a + b\,p_1 + c). \end{cases}$$

Solving this system yields

$$p_1^* = p_2^* = \frac{a + c}{2 - b}.$$
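A small numerical illustration of the remark that the problem makes sense only if $b < 2$: the best-response map $p \mapsto \frac{1}{2}(a + b\,p + c)$ shrinks distances by the factor $b/2$, so for $0 < b < 2$ repeated best responses settle at $\frac{a + c}{2 - b}$, whereas for $b \ge 2$ they drift off without bound. The sketch below uses hypothetical parameter values and function names, and it is a check of the formula rather than a model of how firms actually set prices.

```python
def bertrand_best_response(p_other: float, a: float, b: float, c: float) -> float:
    """Maximizer of (a - p + b*p_other)(p - c) over p (first-order condition)."""
    return 0.5 * (a + b * p_other + c)

def bertrand_equilibrium(a: float, b: float, c: float, rounds: int = 200):
    """Iterate simultaneous best responses, starting both firms at marginal cost."""
    p1 = p2 = c
    for _ in range(rounds):
        p1, p2 = bertrand_best_response(p2, a, b, c), bertrand_best_response(p1, a, b, c)
    return p1, p2

a, b, c = 10.0, 1.0, 2.0  # illustrative values with 0 < b < 2 and c < a
p1, p2 = bertrand_equilibrium(a, b, c)
print(p1, p2, (a + c) / (2 - b))
```

With these numbers the iterates approach $\frac{a + c}{2 - b} = 12$.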

EXERCISE 12 We analyze the Bertrand duopoly model with homogeneous products. Suppose that the quantity $q_1$ that consumers demand from firm 1 satisfies

$$q_1 = \begin{cases} a - p_1 & \text{if } p_1 < p_2 \\ 0 & \text{if } p_1 > p_2 \\ (a - p_1)/2 & \text{if } p_1 = p_2, \end{cases}$$

while the quantity that consumers demand from firm 2 satisfies a similar equation. Suppose also that there are no fixed costs and that marginal costs are constant at $c < a$. Show that if the firms choose prices simultaneously, then the unique Nash equilibrium is that both firms charge the price $c$.

EXAMPLE 10 The tragedy of the Commons

This example illustrates the phenomenon that if citizens respond only to private incentives, public resources will be overutilized.

Consider $n$ farmers in a village. Each summer, all the farmers graze their goats on the village green. Denote the number of goats the $i$-th farmer owns by $g_i$. The cost of buying and caring for a goat is $c$, independent of how many goats a farmer owns. The value to a farmer of grazing a goat on the green when a total of $G = g_1 + \cdots + g_n$ goats are grazing is $v(G)$ per goat. Since a goat needs at least a certain amount of grass in order to survive, there is a maximum number $G_{\max}$ of goats that can be grazed on the green. Obviously,

$$v(G) > 0 \text{ for } G < G_{\max} \quad\text{and}\quad v(G) = 0 \text{ for } G \ge G_{\max}.$$

Also, since the first few goats have plenty of room to graze, adding one more does little harm to those already grazing, but when so many goats are grazing that they are all just barely surviving (i.e., $G$ is just below $G_{\max}$), then adding one more dramatically harms the rest. Formally: for $G < G_{\max}$, $v'(G) < 0$ and $v''(G) < 0$. So $v$ is a decreasing and concave function of $G$, as in the following picture.

[Picture: the graph of $v$ against $G$, decreasing and concave, reaching zero at $G_{\max}$.]

During the spring, the farmers simultaneously choose how many goats to own. Assuming goats are continuously divisible, the foregoing can be modeled as an $n$-person game where

$$A_i = [0, G_{\max}) \quad\text{and}\quad u_i(g_1, \ldots, g_n) = g_i\,v(g_1 + \cdots + g_n) - c\,g_i.$$

Thus, if the profile $(g_1^*, \ldots, g_n^*)$ is a Nash equilibrium of this game, then $g_i^*$ must maximize the function

$$f : g_i \mapsto g_i\,v(g_i + g_{-i}^*) - c\,g_i,$$

where $g_{-i}^* = \sum_{j \ne i} g_j^*$ is the total number of goats of the other farmers.

So $g_i^*$ solves the equation

$$f'(g_i) = 0 \iff v(g_i + g_{-i}^*) + g_i\,v'(g_i + g_{-i}^*) - c = 0.$$

Consequently,

$$v(g_i^* + g_{-i}^*) + g_i^*\,v'(g_i^* + g_{-i}^*) - c = 0 \quad\text{for all } i.$$

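Hence, if we write $G^*$ instead of $g_1^* + \cdots + g_n^*$, summing these equations over $i$ gives

$$\sum_{i} \left[v(G^*) + g_i^*\,v'(G^*) - c\right] = 0 \iff n\,v(G^*) + G^*v'(G^*) - nc = 0 \iff v(G^*) + \frac{1}{n}G^*v'(G^*) - c = 0.$$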

In contrast, the social optimum, denoted by $G^{**}$, is a solution of the problem

$$\max_{G \ge 0}\; G\,v(G) - G\,c.$$

So $G^{**}$ solves the equation

$$v(G^{**}) + G^{**}v'(G^{**}) - c = 0.$$

We ask you to show in an exercise that $G^* > G^{**}$. This means that, compared to the social optimum, too many goats are grazed in the situation corresponding to the Nash equilibrium.
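To see the two first-order conditions at work on one concrete case (this is an illustration, not the proof asked for in Exercise 13), take the hypothetical value function $v(G) = 1 - e^{G - G_{\max}}$ on $[0, G_{\max}]$, which is positive below $G_{\max}$, vanishes at $G_{\max}$, and has $v' < 0$ and $v'' < 0$. The sketch solves $v(G) + \frac{1}{n}G\,v'(G) - c = 0$ and $v(G) + G\,v'(G) - c = 0$ by bisection; the parameters $G_{\max} = 10$, $c = 0.5$, $n = 5$ and all function names are assumptions made for the example.

```python
import math

G_MAX, C, N = 10.0, 0.5, 5  # hypothetical parameters

def v(G: float) -> float:
    """Hypothetical value per goat: decreasing, concave, v(G_MAX) = 0 (used on [0, G_MAX])."""
    return 1.0 - math.exp(G - G_MAX)

def v_prime(G: float) -> float:
    """Derivative of v."""
    return -math.exp(G - G_MAX)

def bisect(h, lo: float, hi: float, tol: float = 1e-12) -> float:
    """Root of h on [lo, hi], assuming h(lo) > 0 > h(hi)."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if h(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)

# Nash equilibrium condition: v(G) + (1/n) G v'(G) - c = 0
G_nash = bisect(lambda G: v(G) + G * v_prime(G) / N - C, 0.0, G_MAX)
# Social optimum condition: v(G) + G v'(G) - c = 0
G_social = bisect(lambda G: v(G) + G * v_prime(G) - C, 0.0, G_MAX)
print(round(G_nash, 3), round(G_social, 3))
```

For these numbers the equilibrium total $G^*$ (about 8.3) exceeds the social optimum $G^{**}$ (about 7.2), in line with the claim $G^* > G^{**}$.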


EXERCISE 13 Show that $G^* > G^{**}$, where $G^*$ and $G^{**}$ are the quantities introduced in the foregoing example.

EXERCISE 14 Consider a population of voters uniformly distributed along the ideological spectrum represented by the interval $[0, 1]$. Each of the candidates for a single office simultaneously chooses a campaign platform (i.e., a point of the interval $[0, 1]$). The voters observe the candidates' choices, and then each voter votes for the candidate whose platform is closest to the voter's position on the spectrum. (If there are two candidates and they choose platforms $x_1 = 0.3$ and $x_2 = 0.6$, for example, then all voters to the left of $x = 0.45$ vote for candidate 1 and all those to the right vote for candidate 2. Hence candidate 2 wins the election with 55 percent of the vote.) Assume that any candidates who choose the same platform equally split the votes cast for that platform, and that ties among the leading vote-getters are resolved by coin flips. Suppose that the candidates care only about being elected: they do not really care about their platforms at all!

a) Prove that there is only one Nash equilibrium if there are two candidates.
b) If there are three candidates, exhibit a Nash equilibrium.

EXERCISE 15 Three players announce a number in the set $\{1, \ldots, K\}$. A prize of one dollar is split equally between the players whose number is closest to $\frac{2}{3}$ of the average number. Find the unique equilibrium of this game.


Chapter 3

The Mixed Extension

Some of the finite games we discussed in Chapter 2 did not have a Nash equilibrium. In this chapter we first introduce for finite games a new class of actions by allowing the players to randomize over their actions. This leads to a class of non-finite games which turn out to have at least one equilibrium.

3.1 Mixed Strategies

In Chapter 2 we observed that the hide-and-seek game

                room 1   room 2   room 3
   (1 2 3)        1        -1       -2
   (1 3 2)        1        -2       -1
   (2 1 3)       -1         1       -2
   (2 3 1)       -2         1       -1
   (3 1 2)       -1        -2        1
   (3 2 1)       -2        -1        1

possesses no value: the lower value of that game ($= -2$) is not equal to its upper value ($= 1$). In order to fill the gap between the upper and lower value we will introduce so-called mixed strategies.

Since player 2 does not prefer one of the rooms, he can decide to choose each room with probability $\frac{1}{3}$. We denote this plan or strategy by the vector $(\frac{1}{3}, \frac{1}{3}, \frac{1}{3})$. Such a strategy is called a mixed strategy for player 2. More generally, a mixed strategy of player 2 is a vector

$$q = (q_1, q_2, q_3),$$

where $q_j \ge 0$ represents the probability that player 2 chooses the $j$-th room and $\sum_{j=1}^{3} q_j = 1$. The set of all mixed strategies of player 2 is denoted as

$$\Delta_3 = \{q \in \mathbb{R}^3 \mid q_j \ge 0 \text{ and } \sum_{j=1}^{3} q_j = 1\}.$$

Note that the strategy $e_2 = (0, 1, 0)$ corresponds to the situation where player 2 chooses the second column, i.e. hides in room 2 (with probability one).


Analogously, the strategy space of player 1 can be extended to the set

$$\Delta_6 := \{p \in \mathbb{R}^6 \mid p_i \ge 0 \text{ and } \sum_{i=1}^{6} p_i = 1\}.$$

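What is the effect of this extension on the payoffs to the players? If player 1 chooses the strategy $p = (\frac{1}{6}, \frac{1}{6}, \frac{1}{6}, \frac{1}{6}, \frac{1}{6}, \frac{1}{6})$ and player 2 chooses the first room (which corresponds to the strategy $q = e_1$), the expected payoff to player 1 is

$$\tfrac{1}{6} \cdot 1 + \tfrac{1}{6} \cdot 1 + \tfrac{1}{6} \cdot (-1) + \tfrac{1}{6} \cdot (-2) + \tfrac{1}{6} \cdot (-1) + \tfrac{1}{6} \cdot (-2) = -\tfrac{2}{3}.$$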

In general, a pair $(p, q) \in \Delta_6 \times \Delta_3$ of strategies generates an expected payoff to player 1 equal to

$$p_1q_1\,a_{11} + \cdots + p_iq_j\,a_{ij} + \cdots + p_6q_3\,a_{63} = pAq,$$

where $p_iq_j$ is the probability of entry $(i, j)$.

In this case player 2 receives the amount $-pAq$. By introducing mixed strategies the new (zero-sum strategic) game $\langle \Delta_6, \Delta_3, U \rangle$ arises, where for a pair $(p, q) \in \Delta_6 \times \Delta_3$

$$U(p, q) := pAq.$$

This game is called the mixed extension of the matrix game $A$.

We will show now that player 1, by using a specific mixed strategy, can guarantee himself the amount of $-\frac{2}{3}$, while player 2 can find a mixed strategy which ensures that player 1 gets no more than $-\frac{2}{3}$ (so that player 2 receives at least $\frac{2}{3}$). This suggests that the mixed extension of the hide-and-seek game does have a value, and that this value is equal to $-\frac{2}{3}$.

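If player 1 uses the mixed strategy $p = (\frac{1}{6}, \frac{1}{6}, \frac{1}{6}, \frac{1}{6}, \frac{1}{6}, \frac{1}{6})$, then his expected payoff against an arbitrary strategy $q$ of player 2 is equal to

$$pAq = \left(\tfrac{1}{6}, \tfrac{1}{6}, \tfrac{1}{6}, \tfrac{1}{6}, \tfrac{1}{6}, \tfrac{1}{6}\right) \begin{bmatrix} 1 & -1 & -2 \\ 1 & -2 & -1 \\ -1 & 1 & -2 \\ -2 & 1 & -1 \\ -1 & -2 & 1 \\ -2 & -1 & 1 \end{bmatrix} \begin{bmatrix} q_1 \\ q_2 \\ q_3 \end{bmatrix} = \left(-\tfrac{2}{3}, -\tfrac{2}{3}, -\tfrac{2}{3}\right) \begin{bmatrix} q_1 \\ q_2 \\ q_3 \end{bmatrix} = -\tfrac{2}{3},$$

where the last step uses $q_1 + q_2 + q_3 = 1$.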

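Similarly, if player 2 uses the mixed strategy $q = (\frac{1}{3}, \frac{1}{3}, \frac{1}{3})$, then, since every row of the matrix sums to $-2$, we get $Aq = (-\frac{2}{3}, \ldots, -\frac{2}{3})^{\top}$ and hence $pAq = -\frac{2}{3}$ for an arbitrary strategy $p$ of player 1. So the expected payoff of player 2 is equal to $\frac{2}{3}$ against every strategy of player 1.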
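The matrix-vector products above are easy to double-check with exact rational arithmetic. The sketch below uses Python's `fractions.Fraction` (so no rounding occurs) and plain nested lists for the matrix; the helper names are hypothetical. It verifies that $pA$ and $Aq$ have all entries equal to $-\frac{2}{3}$ for the uniform strategies, so that $pAq = -\frac{2}{3}$ whatever the opponent does.

```python
from fractions import Fraction

# Player 1's payoff matrix in the hide-and-seek game (rows: guessing orders
# (1 2 3), (1 3 2), (2 1 3), (2 3 1), (3 1 2), (3 2 1); columns: rooms 1, 2, 3).
A = [[1, -1, -2], [1, -2, -1], [-1, 1, -2],
     [-2, 1, -1], [-1, -2, 1], [-2, -1, 1]]

def row_times_matrix(p, M):
    """The row vector pM."""
    return [sum(p[i] * M[i][j] for i in range(len(M))) for j in range(len(M[0]))]

def matrix_times_column(M, q):
    """The column vector Mq."""
    return [sum(M[i][j] * q[j] for j in range(len(q))) for i in range(len(M))]

def expected_payoff(p, M, q):
    """pMq, the sum over all cells of p_i * q_j * m_ij."""
    return sum(x * y for x, y in zip(row_times_matrix(p, M), q))

p_uniform = [Fraction(1, 6)] * 6
q_uniform = [Fraction(1, 3)] * 3
print(row_times_matrix(p_uniform, A))     # every entry equals -2/3
print(matrix_times_column(A, q_uniform))  # every entry equals -2/3
print(expected_payoff(p_uniform, A, [1, 0, 0]))
```

The last line reproduces the computation against $q = e_1$ given earlier in this section.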

In Chapter 4 we will continue the investigation of the class of (mixed extensions of) matrix games. In the next section we introduce mixed extensions for arbitrary finite games.

3.2 The Mixed Extension of a Finite Game

After the introductory example in Section 3.1 we now come to the formal definition of mixed strategies and the mixed extension of a finite two-person strategic game. We will also discuss the existence of Nash equilibrium in this framework.


As we have seen in the foregoing chapter, a finite two-person game is in fact a bimatrix game, say $(A, B)$, where $A$ and $B$ are two $m \times n$ matrices. If we allow the players to randomize over their action sets $\{1, 2, \ldots, m\}$ and $\{1, 2, \ldots, n\}$ respectively, then we are in fact dealing with a new game. In this new game the action set of player 1 is

$$\Delta_m = \{p \in \mathbb{R}^m \mid p_i \ge 0 \text{ for all } i \text{ and } \sum_{i=1}^{m} p_i = 1\}$$

and the action set of player 2 is

$$\Delta_n = \{q \in \mathbb{R}^n \mid q_j \ge 0 \text{ for all } j \text{ and } \sum_{j=1}^{n} q_j = 1\}.$$

The elements of $\Delta_m$ and $\Delta_n$ are called mixed strategies, and we suppose that the players choose their mixed strategies independently and simultaneously.

FIGURE 1 The sets $\Delta^2$ (axes $x$, $y$) and $\Delta^3$ (axes $x$, $y$, $z$)

Note that $p_i$ refers to the probability that player 1 chooses the $i$-th row and that $q_j$ represents the probability that player 2 chooses the $j$-th column.


36 CHAPTER 3. THE MIXED EXTENSION

DEFINITION A strategy in which a player chooses one of his actions, say the $k$-th one, with probability one is also called a pure strategy; it is denoted by $e_k$.

If the players use the mixed strategies $p$ and $q$, then the probability that the $(i,j)$-th cell of the bimatrix $(A,B)$ will be chosen is equal to $p_i q_j$ (here we use the fact that the players choose their strategies independently!). So the expected payoff to player 1 corresponding to the pair $(p,q)$ of mixed strategies is

$$\sum_{i=1}^{m} \sum_{j=1}^{n} a_{ij}\, p_i q_j = pAq$$

and, similarly, the expected payoff to player 2 is equal to $pBq$. So, formally:

DEFINITION Corresponding to an $m \times n$-bimatrix game $(A,B)$ we can consider the strategic game $(\Delta^m, \Delta^n, U_1, U_2)$, where

$$U_1(p,q) = pAq \quad\text{and}\quad U_2(p,q) = pBq.$$

This game is called the mixed extension of the $m \times n$-bimatrix game $(A,B)$.
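As a small illustration of the double sum above, the following Python sketch computes both expected payoffs of the mixed extension with exact rational arithmetic. The function name `expected_payoffs` and the list-of-rows representation of $A$ and $B$ are our own choices for illustration, not notation from the text; the sample data are the matrices of Example 3 in Section 3.3.

```python
from fractions import Fraction

def expected_payoffs(A, B, p, q):
    """Return (U1(p, q), U2(p, q)) for the bimatrix game (A, B).

    A and B are m x n matrices given as lists of rows, p has length m and
    q has length n. Cell (i, j) is reached with probability p[i] * q[j],
    because the players randomize independently.
    """
    cells = [(i, j) for i in range(len(p)) for j in range(len(q))]
    u1 = sum(A[i][j] * p[i] * q[j] for i, j in cells)
    u2 = sum(B[i][j] * p[i] * q[j] for i, j in cells)
    return u1, u2

# The game of Example 3 with p = (1/5, 4/5) and q = (1/2, 1/2).
A = [[0, 3], [1, 2]]
B = [[9, 5], [3, 4]]
p = [Fraction(1, 5), Fraction(4, 5)]
q = [Fraction(1, 2), Fraction(1, 2)]
print(expected_payoffs(A, B, p, q))  # (Fraction(3, 2), Fraction(21, 5))
```

Using `Fraction` instead of floats keeps the payoffs exact, which matters later when payoffs are compared for equality.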

Because we are only interested in the mixed extension of a game, from now on we will speak of a bimatrix game instead of the mixed extension of a bimatrix game.

Note that a pair $(p,q)$ of (mixed) strategies is a Nash equilibrium of the bimatrix game $(A,B)$ if and only if

$$pAq \ge p'Aq \text{ for all } p' \in \Delta^m \quad\text{and}\quad pBq \ge pBq' \text{ for all } q' \in \Delta^n.$$

The set of all Nash equilibria of the game $(A,B)$ is denoted by $E(A,B)$.

EXERCISE 1 Prove that $\big((\frac12, \frac12), (\frac12, \frac12)\big)$ is a Nash equilibrium of the $2 \times 2$-bimatrix game

$$(A,B) = \begin{bmatrix} (1,1) & (0,0) \\ (0,0) & (1,1) \end{bmatrix}.$$

Does every mixed extension of a finite game in strategic form have a Nash equilibrium? In 1950 J.F. Nash showed that the answer to this question is affirmative. In fact, he gave not one but two proofs of the existence of Nash equilibrium: one based on Brouwer's fixed point theorem for continuous functions, and the other (simpler) one based on Kakutani's fixed point theorem for correspondences. We are not going to discuss these proofs, but we do state the result here.

THEOREM 1 Equilibrium Point Theorem of Nash

Every finite strategic game has a mixed strategy Nash equilibrium.

The question that now arises is: how can we compute these Nash equilibria? Both of Nash's proofs are non-constructive: they show that an equilibrium exists, but not how to find one.


Nevertheless, for bimatrix games there is a method to actually compute a Nash equilibrium, or even all Nash equilibria. In the remainder of this section we explain how this can be done. The method is based on the following characterization of Nash equilibrium.

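EXERCISE 2 Let $(A,B)$ be an $m \times n$-bimatrix game and let $(p,q) \in \Delta^m \times \Delta^n$. Prove that

$$(p,q) \in E(A,B) \iff \begin{cases} pAq \ge e_iAq & \text{for all } i, \\ pBq \ge pBe_j & \text{for all } j. \end{cases}$$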
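The characterization in Exercise 2 reduces the equilibrium test to finitely many comparisons, which is easy to check by machine. The sketch below is a numerical check, not a proof; the helper names `bilinear`, `pure` and `is_equilibrium` are hypothetical. It uses exact fractions so that ties are not spoiled by floating-point rounding, and applies the test to the game of Exercise 1.

```python
from fractions import Fraction

def bilinear(p, M, q):
    """pMq = sum over i, j of p_i * M[i][j] * q_j."""
    return sum(p[i] * M[i][j] * q[j] for i in range(len(p)) for j in range(len(q)))

def pure(k, size):
    """The pure strategy e_k (0-based index k) as a probability vector."""
    return [1 if i == k else 0 for i in range(size)]

def is_equilibrium(A, B, p, q):
    """Exercise 2: (p, q) is in E(A, B) iff no pure deviation pays off."""
    m, n = len(p), len(q)
    u1, u2 = bilinear(p, A, q), bilinear(p, B, q)
    rows_ok = all(u1 >= bilinear(pure(i, m), A, q) for i in range(m))
    cols_ok = all(u2 >= bilinear(p, B, pure(j, n)) for j in range(n))
    return rows_ok and cols_ok

# The game of Exercise 1.
A = [[1, 0], [0, 1]]
B = [[1, 0], [0, 1]]
half = Fraction(1, 2)
print(is_equilibrium(A, B, [half, half], [half, half]))  # True
print(is_equilibrium(A, B, [1, 0], [0, 1]))              # False
```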

LEMMA 1 Let $(A,B)$ be an $m \times n$-bimatrix game and let $q$ be a strategy of player 2. Then there is at least one pure strategy that is a best response against $q$.

PROOF Let $p$ be a best response against $q$. Then $pAq \ge e_iAq$ for all $i$. Therefore

$$\max_k e_kAq \le pAq = \langle p, Aq \rangle = p_1\, e_1Aq + \dots + p_m\, e_mAq \le p_1 \max_k e_kAq + \dots + p_m \max_k e_kAq = (p_1 + \dots + p_m) \max_k e_kAq = \max_k e_kAq.$$

So we may conclude that $pAq = \max_k e_kAq$. Now choose an index $i$ at which this maximum is attained, so that $pAq = e_iAq$. Since $p$ is a best response against $q$, the pure strategy $e_i$ is a best response against $q$ too.

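This lemma implies that a pure strategy that is a best response within the class of pure strategies is also a best response within the class of all strategies: such a pure strategy attains $\max_k e_kAq$, which by the proof of Lemma 1 is the highest payoff any strategy can achieve against $q$. For this reason we introduce the class of pure best responses.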

DEFINITION Let $(A,B)$ be an $m \times n$-bimatrix game and let $p \in \Delta^m$ and $q \in \Delta^n$. Then

$$PB_2(p) = \{ j \mid pBe_j = \max_l pBe_l \}$$

is the set of pure best responses of player 2 to $p$ and

$$PB_1(q) = \{ i \mid e_iAq = \max_k e_kAq \}$$

is the set of pure best responses of player 1 to $q$.
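The two sets just defined only require the vectors $Aq$ and $pB$ and their maxima. A minimal sketch, assuming matrices stored as lists of rows and using 1-based indices to match the text (the names `pb1` and `pb2` are hypothetical), evaluated on the game of Example 3:

```python
from fractions import Fraction

def pb1(A, q):
    """PB1(q): the rows i (1-based, as in the text) maximizing e_i A q."""
    values = [sum(a * qj for a, qj in zip(row, q)) for row in A]  # entries of Aq
    best = max(values)
    return {i + 1 for i, v in enumerate(values) if v == best}

def pb2(B, p):
    """PB2(p): the columns j (1-based) maximizing p B e_j."""
    n = len(B[0])
    values = [sum(p[i] * B[i][j] for i in range(len(p))) for j in range(n)]  # entries of pB
    best = max(values)
    return {j + 1 for j, v in enumerate(values) if v == best}

# The game of Example 3.
A = [[0, 3], [1, 2]]
B = [[9, 5], [3, 4]]
print(pb1(A, [Fraction(1, 4), Fraction(3, 4)]))  # {1}
print(pb1(A, [Fraction(1, 2), Fraction(1, 2)]))  # {1, 2}
print(pb2(B, [Fraction(1, 5), Fraction(4, 5)]))  # {1, 2}
```

Exact equality `v == best` is safe here only because the arithmetic is rational; with floats one would need a tolerance.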

In the following lemma we show that all best responses against a strategy can easily be determined once the pure best responses against that strategy are known. In fact, a strategy is a best response if and only if every pure strategy it chooses with positive probability is a pure best response. In order to make this precise we need the following definition.

DEFINITION If $p \in \Delta^t$ is a mixed strategy, then

$$C(p) = \{ i \mid p_i > 0 \}$$

is called the carrier of $p$.


LEMMA 2 Let $(A,B)$ be an $m \times n$-bimatrix game and let $q$ be a strategy of player 2. Then a strategy $p$ of player 1 is a best response against $q$ if and only if

$$C(p) \subseteq PB_1(q).$$

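PROOF (1) Suppose that $p$ is a best response of player 1 against the strategy $q$ of player 2. Take an $i \in C(p)$, so $p_i > 0$, and assume that $e_iAq < \max_k e_kAq$. Because $p_i > 0$, the $i$-th term below is strictly smaller than its upper bound, and, as shown in the proof of Lemma 1, $pAq = \max_k e_kAq$ for a best response $p$. Hence

$$pAq = p_1\, e_1Aq + \dots + p_m\, e_mAq < p_1 \max_k e_kAq + \dots + p_m \max_k e_kAq = \max_k e_kAq = pAq,$$

which is a contradiction. So $e_iAq = \max_k e_kAq$, which means that $i \in PB_1(q)$.

(2) Suppose that $C(p) \subseteq PB_1(q)$. Then

$$pAq = \sum_i p_i\, e_iAq = \sum_{i \in C(p)} p_i\, e_iAq = \sum_{i \in C(p)} p_i \max_k e_kAq = \max_k e_kAq.$$

So $pAq \ge e_iAq$ for all $i$, that is, $p$ is a best response against $q$.

Since a pair of strategies, one for each player, is a Nash equilibrium if and only if each strategy is a best response against the other one, Lemma 2 leads to the following result.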

THEOREM 2 Let $(A,B)$ be a bimatrix game. Then a strategy pair $(p,q)$ is a Nash equilibrium if and only if

$$C(p) \subseteq PB_1(q) \quad\text{and}\quad C(q) \subseteq PB_2(p).$$
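Theorem 2 turns the equilibrium test into two set inclusions. The sketch below is an illustration under our own conventions (lists of rows, 1-based indices, hypothetical function names); it checks the pair of strategies from Example 1, which follows, and a pair that fails the test.

```python
from fractions import Fraction

def carrier(x):
    """C(x) = {i : x_i > 0}, with 1-based indices as in the text."""
    return {i + 1 for i, xi in enumerate(x) if xi > 0}

def pb1(A, q):
    """PB1(q): rows i (1-based) with e_i A q maximal."""
    values = [sum(a * qj for a, qj in zip(row, q)) for row in A]
    best = max(values)
    return {i + 1 for i, v in enumerate(values) if v == best}

def pb2(B, p):
    """PB2(p): columns j (1-based) with p B e_j maximal."""
    values = [sum(p[i] * B[i][j] for i in range(len(p))) for j in range(len(B[0]))]
    best = max(values)
    return {j + 1 for j, v in enumerate(values) if v == best}

def is_equilibrium_by_carriers(A, B, p, q):
    """Theorem 2: C(p) must lie in PB1(q) and C(q) in PB2(p)."""
    return carrier(p) <= pb1(A, q) and carrier(q) <= pb2(B, p)

# The bimatrix game of Example 1.
A = [[1, 0, 0, 0], [1, 1, 0, 0], [1, 1, 1, 0], [1, 1, 1, 1]]
B = [[1, 1, 1, 1] for _ in range(4)]
third, half = Fraction(1, 3), Fraction(1, 2)
print(is_equilibrium_by_carriers(A, B, [0, third, third, third], [half, half, 0, 0]))  # True
print(is_equilibrium_by_carriers(A, B, [1, 0, 0, 0], [half, half, 0, 0]))  # False
```

Python's `<=` on sets is exactly the subset relation, so the code mirrors the statement of the theorem; no optimization over all of $\Delta^m$ or $\Delta^n$ is needed.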

In the following example we will show how this result can be used.

EXAMPLE 1 Consider the bimatrix game

$$(A,B) = \begin{bmatrix} (1,1) & (0,1) & (0,1) & (0,1) \\ (1,1) & (1,1) & (0,1) & (0,1) \\ (1,1) & (1,1) & (1,1) & (0,1) \\ (1,1) & (1,1) & (1,1) & (1,1) \end{bmatrix}$$

and the strategies $p = (0, \frac13, \frac13, \frac13)$ and $q = (\frac12, \frac12, 0, 0)$. Since

$$Aq = \begin{pmatrix} \frac12 \\ 1 \\ 1 \\ 1 \end{pmatrix} \quad\text{and}\quad pB = [\, 1 \;\; 1 \;\; 1 \;\; 1 \,],$$

we have $PB_1(q) = \{2,3,4\}$ and $PB_2(p) = \{1,2,3,4\}$. Since $C(p) = \{2,3,4\}$ and $C(q) = \{1,2\}$, this implies that $C(p) \subseteq PB_1(q)$ and $C(q) \subseteq PB_2(p)$. So $(p,q) \in E(A,B)$.

EXERCISE 3 Let $(A,B)$ be an $m \times n$-bimatrix game. Suppose that there exists a $y \in \Delta^n$

such that $y_n = 0$ and $By > Be_n$ (i.e., there exists a mixture of the first $n-1$ columns of $B$ that is strictly better than playing the last column of $B$).
a) Prove that $q_n = 0$ for each $(p,q) \in E(A,B)$.
Let $(\bar A, \bar B)$ be the bimatrix game obtained from $(A,B)$ by deleting the last column.


b) Prove that $(p, \bar q) \in E(\bar A, \bar B) \iff (p,q) \in E(A,B)$, where $\bar q$ is the strategy obtained from $q$ by skipping the last element (which is equal to zero by part a)).

EXERCISE 4 Consider the $3 \times 3$-bimatrix game

$$(A,B) = \begin{bmatrix} (0,4) & (4,0) & (5,3) \\ (4,0) & (0,4) & (5,3) \\ (3,5) & (3,5) & (6,6) \end{bmatrix}.$$

Let $(p,q) \in E(A,B)$.
a) Prove that it is impossible that $\{1,2\} \subseteq C(p)$.
b) Prove that it is impossible that $C(p) = \{2,3\}$.
c) Find all the equilibria of this game.

3.3 Solving Bimatrix Games

In the previous section you already used several ad hoc techniques to find the set of Nash equilibria of a bimatrix game. We will now present a more unified method to solve bimatrix games. In principle this technique can be used for bimatrix games of any size. However, we restrict our analysis to $2 \times 3$-bimatrix games, because then it is still possible to visualize what is going on.

The technique for solving bimatrix games is based on an analysis of what is called the best-response correspondence. A correspondence is, as you may already know, a generalization of the notion of a function. The difference is that a function assigns exactly one point to each element of its domain, while a correspondence may assign an entire set of points to an element of its domain.

DEFINITION Let $C$ be a non-empty set in $\mathbb{R}^t$. A correspondence

$$\varphi : C \rightrightarrows C$$

is a mapping that assigns to each point $x \in C$ a non-empty subset $\varphi(x)$ of $C$. For such a correspondence the set

$$\{ (x,y) \in C \times C \mid y \in \varphi(x) \}$$

is called its graph.

We call an $x$ such that $x \in \varphi(x)$ a fixed point of the correspondence $\varphi$.

EXAMPLE 2 Let $\varphi$ be the mapping that assigns to a number $x \in [0,1]$ the set

(x) = {y [0, 1]| y x2}.


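Then $\varphi : [0,1] \rightrightarrows [0,1]$ is a correspondence and its graph can be found in the following figure.

[Figure: left panel, the graph of $\varphi$ in the unit square (axes $x$ and $y$); right panel, the set $\varphi(a)$ for a point $a$ on the $x$-axis.]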

EXERCISE 5 What are the fixed points of the correspondence $\varphi$?

EXERCISE 6 Let the correspondence $\varphi : \mathbb{R} \rightrightarrows \mathbb{R}$ be defined by

(x) = {y [0, 1]| y x3} for all x IR.

Draw the graph of $\varphi$ and determine its fixed points. Give an example of a correspondence that does not have any fixed points.

Switching back to the setting of bimatrix games, the best-response correspondence $\beta : \Delta^m \times \Delta^n \rightrightarrows \Delta^m \times \Delta^n$ of a bimatrix game $(A,B)$ is defined by $\beta(p,q) = \beta_1(q) \times \beta_2(p)$, where

$$\beta_1(q) := \{ p \mid pAq = \max_{p'} p'Aq \}$$

is the set of best responses of player 1 to the strategy $q$ and

$$\beta_2(p) := \{ q \mid pBq = \max_{q'} pBq' \}$$

is the set of best responses of player 2 to the strategy $p$.

Actually, this is the correspondence that John Nash introduced in his second proof of the existence of Nash equilibrium, the one based on Kakutani's fixed point theorem. (By the way, the term Nash equilibrium was not coined by Nash himself; he used the term equilibrium point.)

Clearly, $(p,q)$ is an equilibrium of the game $(A,B)$ if and only if $(p,q)$ is a fixed point of the best-response correspondence. (Nash's proof is based on the fact that the best-response correspondence is of a type for which Kakutani's theorem guarantees the existence of a fixed point.)

What we are going to do is use the best-response correspondences $\beta_1$ and $\beta_2$ to determine the set of all equilibria of a bimatrix game in which one of the players has two actions and the other one has at most three actions. For such bimatrix games it is possible to draw a picture of the best-response sets

$$B_1 = \{ (p,q) \mid p \in \beta_1(q) \} \quad\text{and}\quad B_2 = \{ (p,q) \mid q \in \beta_2(p) \}.$$


Note that for a bimatrix game $(A,B)$

$$(p,q) \in E(A,B) \iff p \in \beta_1(q) \text{ and } q \in \beta_2(p) \iff (p,q) \in B_1 \text{ and } (p,q) \in B_2 \iff (p,q) \in B_1 \cap B_2.$$

EXAMPLE 3 Consider the $2 \times 2$-bimatrix game

$$(A,B) = \begin{bmatrix} (0,9) & (3,5) \\ (1,3) & (2,4) \end{bmatrix}.$$

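In order to determine the best responses of player 1 we note that

$$Aq = \begin{bmatrix} 0 & 3 \\ 1 & 2 \end{bmatrix} \begin{bmatrix} q_1 \\ 1 - q_1 \end{bmatrix} = \begin{bmatrix} 3 - 3q_1 \\ 2 - q_1 \end{bmatrix}.$$

As

$$e_1Aq = e_2Aq \iff 3 - 3q_1 = 2 - q_1 \iff q_1 = \tfrac12 \iff q = (\tfrac12, \tfrac12),$$

it follows that

$$\beta_1(q) = \begin{cases} \{e_1\} & \text{if } q_1 < \frac12, \\ \Delta^2 & \text{if } q_1 = \frac12, \\ \{e_2\} & \text{if } q_1 > \frac12. \end{cases}$$

Similarly,

$$pB = [\, p_1 \;\; 1 - p_1 \,] \begin{bmatrix} 9 & 5 \\ 3 & 4 \end{bmatrix} = [\, 3 + 6p_1 \;\; 4 + p_1 \,]$$

and

$$pBe_1 = pBe_2 \iff 3 + 6p_1 = 4 + p_1 \iff p_1 = \tfrac15 \iff p = (\tfrac15, \tfrac45)$$

imply

$$\beta_2(p) = \begin{cases} \{e_2\} & \text{if } p_1 < \frac15, \\ \Delta^2 & \text{if } p_1 = \frac15, \\ \{e_1\} & \text{if } p_1 > \frac15. \end{cases}$$

In the following figure you find the best-response sets and their intersection: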

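[Figure: the best-response sets $B_1$ (player 1) and $B_2$ (player 2) in the square of strategy pairs; player 1's strategies run from $e_1$ to $e_2$ with $(\frac15, \frac45)$ marked, and player 2's axis has $(\frac12, \frac12)$ and $e_2$ marked.]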

So $E(A,B) = \{ ((\frac15, \frac45), (\frac12, \frac12)) \}$.
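The computation above can be automated for $2 \times 2$-bimatrix games. The sketch below (function names hypothetical; entries assumed to be integers or `Fraction`s) solves the two indifference equations $e_1Aq = e_2Aq$ and $pBe_1 = pBe_2$ for a completely mixed equilibrium and, separately, lists the pure equilibria. It assumes the game is nondegenerate, meaning that every pure strategy of each player has a unique pure best response, as in Example 3; then every equilibrium is either pure or completely mixed, so the two functions together recover $E(A,B)$. Degenerate games can have whole segments of equilibria, which this sketch does not detect.

```python
from fractions import Fraction

def mixed_equilibrium_2x2(A, B):
    """Completely mixed equilibrium ((p1, p2), (q1, q2)) of a 2x2 game, or None.

    q1 solves e1 A q = e2 A q (player 1 indifferent between the rows);
    p1 solves p B e1 = p B e2 (player 2 indifferent between the columns).
    """
    (a11, a12), (a21, a22) = A
    (b11, b12), (b21, b22) = B
    den_q = (a11 - a12) - (a21 - a22)
    den_p = (b11 - b21) - (b12 - b22)
    if den_q == 0 or den_p == 0:
        return None  # an indifference equation has no unique solution
    q1 = Fraction(a22 - a12, den_q)
    p1 = Fraction(b22 - b21, den_p)
    if not (0 < q1 < 1 and 0 < p1 < 1):
        return None  # no completely mixed equilibrium
    return (p1, 1 - p1), (q1, 1 - q1)

def pure_equilibria(A, B):
    """Pairs (i, j), 1-based, where row i is a best response to column j and vice versa."""
    m, n = len(A), len(A[0])
    return [(i + 1, j + 1) for i in range(m) for j in range(n)
            if A[i][j] == max(A[k][j] for k in range(m))
            and B[i][j] == max(B[i][l] for l in range(n))]

# Example 3.
A = [[0, 3], [1, 2]]
B = [[9, 5], [3, 4]]
print(mixed_equilibrium_2x2(A, B))  # ((Fraction(1, 5), Fraction(4, 5)), (Fraction(1, 2), Fraction(1, 2)))
print(pure_equilibria(A, B))        # []
```

For Example 3 this reproduces $q_1 = \frac12$ and $p_1 = \frac15$ and confirms that there is no pure equilibrium, in line with the picture of $B_1 \cap B_2$.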


EXERCISE 7 Consider the $3 \times 3$-bimatrix game

$$(A,B) = \begin{bmatrix} (2,0) & (1,1) & (4,2) \\ (3,4) & (1,2) & (2,3) \\ (1,3) & (0,2) & (3,0) \end{bmatrix}.$$

Find the set of equilibria of this game.

EXAMPLE 4 We consider the $2 \times 3$-bimatrix game

$$(A,B) = \begin{bmatrix} (2,1) & (1,0) & (1,1) \\ (2,0) & (1,1) & (0,0) \end{bmatrix}.$$

Note that the Nash equilibria of this game are contained in the set $\Delta^2 \times \Delta^3$ of all possible strategy pairs. This set is the product of the sets $\Delta^2$ and $\Delta^3$ and can be represented by the following figure.

FIGURE 2 The set of all strategy pairs.