
Document No: SKA-TEL-SDP-0000051 Unrestricted

Revision: 01 Author: S. Ratcliffe

Release Date: 2015-02-09 Page 1 of 12

PDR.12 PRELIMINARY QUALIFICATION & ACCEPTANCE PLAN

Document number: SKA-TEL-SDP-0000051

Context: SE

Revision: 01

Author: S. Ratcliffe

Release Date: 2015-02-09

Document Classification: Unrestricted

Status: Draft

Name: Simon Ratcliffe

Designation: Technical Lead

Affiliation: SKA South Africa

Signature & Date: Simon Ratcliffe (Feb 9, 2015)


Name: Paul Alexander

Designation: SDP Lead

Affiliation: University of Cambridge

Signature & Date: Paul Alexander (Feb 9, 2015)

Version    Date of Issue    Prepared by    Comments

0.1

ORGANISATION DETAILS

Name: Science Data Processor Consortium


1 Table of Contents

1 Table of Contents

2 List of Figures

3 List of Tables

4 Introduction

5 References

5.1 Applicable Documents

5.2 Reference Documents

6 Assumptions

7 Verification Approach

7.1 Qualification

7.2 Acceptance

7.3 Commissioning

8 Verification programme

8.1 Objectives

8.2 Activities

Formal reviews, peer reviews and walkthroughs

Verification through testing

Verification through analysis

Verification through Inspection

8.3 Simulators

8.4 Test Environments

Build Verification Environment

Development Test Environment

Integration Test Environment

Volume and Performance Test Environment

9 Verification Matrix

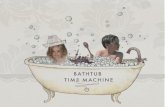

2 List of Figures

Figure 1: The ‘bathtub’ curve depicting failure rates versus time.

3 List of Tables

There are no tables in this document.


4 Introduction

This preliminary document sets out the approach taken by the Science Data Processor (SDP) element towards verification activities. These activities are primarily concerned with qualifying the design against the allocated requirements, and with accepting constructed instances of the SDP sub-elements onto the SKA telescope sites in preparation for commissioning.

For the Critical Design Review (CDR), this document will be split into a Qualification Test Plan (QTP) and an Acceptance Test Plan (ATP). At this stage, however, the two overlap sufficiently to justify a single document that describes the high-level philosophy towards verification.

5 References

5.1 Applicable Documents

The following documents are applicable to the extent stated herein. In the event of conflict between the contents of the applicable documents and this document, the applicable documents shall take precedence.

[AD1] W. Turner, SKA Phase 1 System (Level 1) Requirements Specification, SKA-TEL-SKO-0000008, dated 15 Sep 2014.

[AD2] SKA1 System Baseline Design, SKA-TEL-SKO-DD-001.

[AD3] Miscellaneous Corrections to the Baseline Design.

[AD4] SDP Prototyping Plan, SKA-TEL-SDP-0000054.

[AD5] SDP High-level Risk Register, SKA-TEL-SDP-0000052.

5.2 Reference Documents

The following documents are referenced in this document. In the event of conflict between the contents of the referenced documents and this document, this document shall take precedence.


[RD1] ISO/IEC 12207 / IEEE Std 12207-2008, International Standard – Systems and software engineering – Software life cycle processes, dated 01 Feb 2008.


6 Assumptions

As a preamble to this document, we list the fundamental assumptions that guide our overall approach to verification. Although these assumptions are repeated and expanded upon in the following sections, they are summarised here to ease review of the document.

● Functional qualification of the overall architecture will primarily be achieved by testing verification requirements against simulators.

● Performance qualification of some aspects of the overall architecture may have to be deferred until real sky data is available.

● An agile, iterative approach to qualification is taken, to match the proposed software development methodology.

● Qualification and Acceptance are discussed jointly in this document, and will only be split into separate plans in the run-up to the Critical Design Review.

7 Verification Approach

Although it is traditional to follow the “Vee” model as espoused in [RD1], we consider this model too restrictive for verifying a system such as the SKA as a whole, and the SDP in particular.

The top-down approach to development is likely to be followed fairly closely, and will certainly be followed with some rigour at the element level of the SKA. At the lower levels, however, we believe a more agile approach to development and testing, and by extension to verification, is needed.

The main aim of adopting an alternative approach is to allow testing that is both iterative and crosses the traditional horizontal boundaries of the “Vee” model. For instance, the LMC (Local Monitoring and Control) sub-element is likely to provide a simulation capability, with limited functionality, very early in the schedule. This will be integrated into a testing loop with other sub-elements at multiple levels to support component testing.

Prototyping activities of this kind [AD4] will take place throughout the SDP consortium, and are an essential input into the design process. This deep embedding of prototyping into the design phase will be fully documented in the QTP.

These activities will form a critical part of the evolution of the SDP design from the Preliminary Design Review (PDR) to the CDR, culminating in the Qualification Test Report, which demonstrates that the proposed design meets the requirements specification.


Verification is also closely tied to the management of risk [AD5]: many of the items in the risk register will be explicitly retired only when the design components underlying the risk have been appropriately verified.
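The rule that a risk is retired only once its underlying design components are verified can be sketched as a simple check over recorded verification results. This is a minimal Python sketch under stated assumptions: the `Risk` record, the `can_retire` helper, and all risk and requirement identifiers are hypothetical and are not taken from the SDP risk register [AD5].

```python
from dataclasses import dataclass, field


@dataclass
class Risk:
    """Hypothetical risk-register entry linked to verification requirements."""
    risk_id: str
    linked_vrs: list[str] = field(default_factory=list)


def can_retire(risk: Risk, results: dict[str, bool]) -> bool:
    """A risk may be retired only if it has at least one linked verification
    requirement and every linked requirement has a recorded pass."""
    if not risk.linked_vrs:
        # Nothing has been verified, so verification cannot retire this risk.
        return False
    # A requirement with no recorded result counts as not yet verified.
    return all(results.get(vr, False) for vr in risk.linked_vrs)


# Illustrative identifiers and results only.
results = {"VR-001": True, "VR-002": True, "VR-003": False}
ingest_risk = Risk("R-10", ["VR-001", "VR-002"])
pipeline_risk = Risk("R-11", ["VR-002", "VR-003"])

print(can_retire(ingest_risk, results))    # True
print(can_retire(pipeline_risk, results))  # False
```

Treating a requirement with no recorded result as unverified keeps the rule conservative: a risk cannot be retired by omission.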

7.1 Qualification

The purpose of qualification is to ensure that the proposed design meets the requirements that specify it. This takes place at multiple levels, following a cascaded, hierarchical process.

The first step in the qualification process is to establish a set of verification requirements that provide coverage of the requirements assigned to a particular function. These verification requirements are categorised by method of verification, with those to be verified by test forming the backbone of the qualification process.
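Establishing verification requirements, categorising them by method, and checking that they cover every allocated requirement can be sketched as follows. This minimal Python sketch assumes that each verification requirement records the IDs of the allocated requirements it covers; the method categories mirror the activities listed in Section 8.2, while the class names, helper functions and requirement IDs are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Method(Enum):
    """Verification methods, mirroring the activities listed in Section 8.2."""
    REVIEW = "review"
    TEST = "test"
    ANALYSIS = "analysis"
    INSPECTION = "inspection"


@dataclass(frozen=True)
class VerificationRequirement:
    vr_id: str
    covers: tuple[str, ...]  # IDs of the allocated requirements this VR verifies
    method: Method


def uncovered(allocated: set[str], vrs: list[VerificationRequirement]) -> set[str]:
    """Allocated requirements that no verification requirement covers."""
    covered = {req for vr in vrs for req in vr.covers}
    return allocated - covered


def by_method(vrs: list[VerificationRequirement]) -> dict[Method, list[str]]:
    """Group verification requirement IDs by their verification method."""
    groups: dict[Method, list[str]] = defaultdict(list)
    for vr in vrs:
        groups[vr.method].append(vr.vr_id)
    return dict(groups)


# Hypothetical requirement IDs for illustration.
allocated = {"REQ-1", "REQ-2", "REQ-3"}
vrs = [
    VerificationRequirement("VR-1", ("REQ-1",), Method.TEST),
    VerificationRequirement("VR-2", ("REQ-1", "REQ-2"), Method.ANALYSIS),
]
print(uncovered(allocated, vrs))    # {'REQ-3'}
print(by_method(vrs)[Method.TEST])  # ['VR-1']
```

A non-empty result from `uncovered` flags an allocated requirement that still needs a verification requirement before qualification can be claimed.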

The QTP will describe in detail the formal process for arriving at a fully qualified SDP element.

7.2 Acceptance

The purpose of acceptance testing is to ensure that a component, be it hardware or software, meets the set of requirements that specify that particular component. In many circumstances, tests similar to those used to qualify the design can be reused, although the stakeholders are generally different.

Acceptance typically marks the formal handover of a component from the developer to the client. For integrated components this may be an internal handover, in which case it can be quite lightweight and automated.

Once the individual components have been accepted, the formal process of verifying the emergent behaviour of the integrated system commences.

7.3 Commissioning

Acceptance testing only demonstrates that a component meets the requirements placed on it; it does not guarantee that the component will perform acceptably once integrated into the operational system. This is one reason why strict control of interfaces is essential, as it significantly mitigates the commissioning risk. Once any initial issues have been dealt with, a number of softer operational tasks are performed:

● Handover of documentation and training material.

● Direct training of operational staff.


● Side-by-side operations.

At the conclusion of side-by-side operations, the commissioning team will take over operational responsibility and evaluate the final performance of the component. The SDP team, particularly QA, will remain readily available throughout this period to address any concerns and bugs that are found.

For the SDP in particular, early commissioning will be a critical activity, as it will be the first time the full performance can be adequately evaluated. It may, however, be possible to gain an early indication of system performance ahead of time by deploying with simulated inputs on representative hardware in large national facilities, assuming such hardware can be found. This approach is described under Volume and Performance Tests below.

8 Verification programme

The SDP element verification shall be risk-driven. High-risk areas shall be identified at the time of the requirements review, and a plan shall be proposed for mitigating these risks. These risk mitigation activities shall be described in the SDP element development plan and the prototyping plan [AD4]. The risks will drive the verification activities performed on the SDP element.
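One way to make the verification programme risk-driven is to rank mitigating activities by risk exposure, so that the highest-exposure risks are verified first. The sketch below assumes the common convention of scoring exposure as likelihood multiplied by impact on 1 to 5 scales; the scales, the `RiskItem` record and the example activities are illustrative assumptions, not values from the SDP risk register.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskItem:
    risk_id: str
    likelihood: int  # assumed scale: 1 (rare) .. 5 (almost certain)
    impact: int      # assumed scale: 1 (negligible) .. 5 (severe)
    activity: str    # hypothetical verification activity that mitigates the risk

    @property
    def exposure(self) -> int:
        # Common convention: exposure = likelihood x impact.
        return self.likelihood * self.impact


def prioritise(risks: list[RiskItem]) -> list[str]:
    """Order mitigating activities by descending exposure, ties broken by risk ID."""
    ordered = sorted(risks, key=lambda r: (-r.exposure, r.risk_id))
    return [r.activity for r in ordered]


risks = [
    RiskItem("R-1", likelihood=2, impact=3, activity="LMC interface test"),
    RiskItem("R-2", likelihood=4, impact=5, activity="Ingest throughput prototype"),
    RiskItem("R-3", likelihood=3, impact=4, activity="Scheduler analysis"),
]
print(prioritise(risks))
# ['Ingest throughput prototype', 'Scheduler analysis', 'LMC interface test']
```

Ranking in this way supports the principle in Section 8.1 that verification should happen at the earliest possible point, starting where the exposure is greatest.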

8.1 Objectives

The objectives of the risk-driven approach are:

● to identify risks and mitigate them through the verification function;

● to perform the appropriate level of risk-based verification, under the guiding principle that verification is carried out at the earliest possible point in the lifecycle, thereby reducing costs;

● to ensure that the design is proven to meet the qualification criteria and can be deployed to the production phase with a low integration risk;

● to drive down the technical and technology risks of the project.

8.2 Activities

Verification activities will include:

a) Formal reviews, peer reviews and walkthroughs;

b) Verification through testing of qualification models, including horizontal and vertical prototypes;

c) Verification through analysis by means of modelling and simulation;

d) Inspection of products and assemblies.


A subset of these verification activities will form the basis of the Qualification Test Plan; after successful execution, their results will be captured in a Qualification Test Report.

Formal reviews, peer reviews and walkthroughs

Key artefacts, such as requirements specifications and design documents, will be subjected to peer reviews and walkthroughs before being formally reviewed. The risk-driven approach will dictate the type of review performed on each artefact produced during the pre-construction phase. Code is treated as an artefact and will likewise be reviewed.

Verification through testing

During the pre-construction phase, qualification models will be subjected to a range of tests, including unit tests, component tests, integrated SDP tests, hardware verification tests, regression tests, and volume and performance tests.

Unit Tests

Each SDP component shall be covered by unit tests sufficiently to ensure that the units within it are ready for component testing. Unit tests across all components will be executed regularly to ensure that the integrity of each component is maintained and that defects at this level are identified and corrected quickly.
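A unit test in this sense exercises a single unit in isolation and can run on every build. The Python `unittest` sketch below is illustrative only: `channel_frequency` is a hypothetical unit standing in for real SDP code, and no test framework has been selected by this plan.

```python
import unittest


def channel_frequency(start_hz: int, width_hz: int, index: int) -> int:
    """Frequency of channel `index` in a uniformly spaced band whose first
    channel is at `start_hz` (hypothetical unit under test)."""
    if index < 0:
        raise ValueError("channel index must be non-negative")
    return start_hz + index * width_hz


class ChannelFrequencyTest(unittest.TestCase):
    def test_first_channel_is_band_start(self):
        self.assertEqual(channel_frequency(100_000_000, 1_000_000, 0), 100_000_000)

    def test_channels_are_evenly_spaced(self):
        f3 = channel_frequency(100_000_000, 1_000_000, 3)
        f4 = channel_frequency(100_000_000, 1_000_000, 4)
        self.assertEqual(f4 - f3, 1_000_000)

    def test_negative_index_rejected(self):
        with self.assertRaises(ValueError):
            channel_frequency(100_000_000, 1_000_000, -1)


if __name__ == "__main__":
    # exit=False reports the results without terminating the interpreter.
    unittest.main(argv=["unit-tests"], exit=False)
```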

Component Tests

Requirements allocated to each SDP component must be covered during component testing. SDP components will be tested by simulating their external interfaces. Component tests will be executed regularly to ensure that the integrity of each component is maintained and that defects at this level are identified and corrected quickly.
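Simulating an external interface during component testing can be as simple as substituting a recording stand-in for the real counterpart and then asserting on the calls it received. This sketch uses Python's `unittest.mock`; the `ObservationController` component, the `TelescopeManager` protocol and its `report_state` method are hypothetical and do not describe the actual SDP-TM interface.

```python
from typing import Protocol
from unittest import mock


class TelescopeManager(Protocol):
    """Hypothetical view of the TM side of an SDP-TM style interface."""
    def report_state(self, state: str) -> None: ...


class ObservationController:
    """Hypothetical SDP component under test."""

    def __init__(self, tm: TelescopeManager) -> None:
        self._tm = tm
        self.state = "IDLE"

    def configure(self, scan_id: int) -> None:
        # Reject invalid scan IDs; accept anything positive.
        self.state = "READY" if scan_id > 0 else "FAULT"
        # Every state change is reported back across the external interface.
        self._tm.report_state(self.state)


# The real TM is replaced by a mock that records every call made on it.
tm = mock.Mock(spec=["report_state"])
controller = ObservationController(tm)
controller.configure(scan_id=42)
tm.report_state.assert_called_once_with("READY")
```

Restricting the mock with `spec` means a call to any method not on the simulated interface raises an error, which catches interface drift early.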

Hardware Verification Test

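Hardware components typically follow a ‘bathtub’ curve of failure rates versus time (see Figure 1): a high early failure rate that falls to a low, roughly constant level before rising again as components wear out. Hardware components will therefore undergo a ‘burn-in’ test to eliminate early, component-level failures before deployment.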


Figure 1: The ‘bathtub’ curve depicting failure rates versus time.

In some cases, hardware verification may also seek to verify the electromagnetic interference (EMI) and electromagnetic compatibility (EMC) requirements of the SDP element.
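One common way to model the bathtub curve of Figure 1 is as the sum of three hazard-rate terms: a decreasing Weibull hazard for early failures, a constant hazard for random failures, and an increasing Weibull hazard for wear-out, where a Weibull hazard with shape β and scale η is h(t) = (β/η)(t/η)^(β−1). The sketch below uses invented parameter values in hours, not SDP hardware data. Because a unit that survives a burn-in of duration T enters service at age T, burn-in moves it past the steep early part of the curve.

```python
def weibull_hazard(t: float, beta: float, eta: float) -> float:
    """Weibull hazard rate h(t) = (beta / eta) * (t / eta) ** (beta - 1), for t > 0."""
    if t <= 0:
        raise ValueError("hazard is evaluated for t > 0 only")
    return (beta / eta) * (t / eta) ** (beta - 1)


def bathtub_hazard(t: float) -> float:
    """Illustrative bathtub curve in failures per hour.
    All parameter values are made up for this sketch."""
    early = weibull_hazard(t, beta=0.5, eta=10_000.0)     # beta < 1: decreasing
    random_failures = 1e-5                                 # constant
    wear_out = weibull_hazard(t, beta=4.0, eta=50_000.0)  # beta > 1: increasing
    return early + random_failures + wear_out


# First hour, after a one-week (168 h) burn-in, mid-life and late life.
for hours in (1.0, 168.0, 20_000.0, 60_000.0):
    print(f"{hours:>8.0f} h  {bathtub_hazard(hours):.2e} failures/h")
```

With these made-up parameters, the hazard after a one-week burn-in is roughly an order of magnitude lower than in the first hour of operation.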

Integrated SDP Tests

Integrated SDP tests will be performed against a complete simulated system, to cover SDP functionality across multiple components and to exercise the full SDP subsystem in a “true-to-life” framework. Integrated SDP test results will be logged against the set of SDP requirements to ensure requirement coverage. Integrated SDP tests will cover the bulk of the qualification tests required to ensure conformance with the requirements.

Regression Tests

Functional tests will be scripted where possible, so that a set of automated regression tests can be executed before and after changes are introduced to a test environment. An automation framework is proposed to facilitate regression testing.
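A scripted regression run amounts to executing the same checks before and after a change and flagging any check that passed before but fails afterwards. The plan does not yet name an automation framework, so this is a framework-free Python sketch; `run_suite`, `regressions`, `checks_for` and the two `mean_*` implementations (the second with a deliberately introduced defect) are hypothetical.

```python
from typing import Callable

# A scripted functional check returns True (pass) or False (fail).
Check = Callable[[], bool]


def run_suite(checks: dict[str, Check]) -> dict[str, bool]:
    """Execute every scripted check; an exception raised by a check counts as a failure."""
    results = {}
    for name, check in checks.items():
        try:
            results[name] = bool(check())
        except Exception:
            results[name] = False
    return results


def regressions(before: dict[str, bool], after: dict[str, bool]) -> list[str]:
    """Checks that passed before the change but fail, or are missing, after it."""
    return sorted(name for name, ok in before.items() if ok and not after.get(name, False))


def mean_v1(xs: list[float]) -> float:
    return sum(xs) / len(xs)


def mean_v2(xs: list[float]) -> float:
    # Simulated faulty change: off-by-one error in the divisor.
    return sum(xs) / (len(xs) + 1)


def checks_for(impl: Callable[[list[float]], float]) -> dict[str, Check]:
    return {
        "constant_input": lambda: impl([2.0, 2.0, 2.0]) == 2.0,
        "single_value": lambda: impl([5.0]) == 5.0,
        "result_is_float": lambda: isinstance(impl([1.0, 2.0]), float),
    }


before = run_suite(checks_for(mean_v1))
after = run_suite(checks_for(mean_v2))
print(regressions(before, after))  # ['constant_input', 'single_value']
```

Note that `result_is_float` still passes after the faulty change, which illustrates why a regression suite needs checks on values and not only on types.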

Volume and Performance Tests

Volume and performance tests will be conducted on representative hardware to ensure conformance with the performance requirements. Simulators will be used to scale inputs to the required levels. Volume and performance test results will be logged against the set of performance requirements to ensure coverage of those requirements.
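Scaling simulated inputs to the required levels starts with sizing the data rate a simulator must sustain. The sketch below assumes correlator-style visibility output, with N(N−1)/2 cross-correlation baselines for N antennas; the function names and all parameter values are illustrative and are not SKA1 or SDP figures.

```python
def n_baselines(n_antennas: int, include_autos: bool = False) -> int:
    """N(N-1)/2 cross-correlation baselines, plus N autocorrelations if requested."""
    cross = n_antennas * (n_antennas - 1) // 2
    return cross + (n_antennas if include_autos else 0)


def visibility_rate_bytes_per_s(
    n_antennas: int,
    n_channels: int,
    n_pols: int,
    dump_time_s: float,
    bytes_per_vis: int = 8,  # assumes single-precision complex samples
) -> float:
    """Data rate a simulated correlator output must sustain (cross-correlations only)."""
    vis_per_dump = n_baselines(n_antennas) * n_channels * n_pols
    return vis_per_dump * bytes_per_vis / dump_time_s


# Small illustrative configuration: 4 antennas, 1024 channels, 4 polarisation products.
print(visibility_rate_bytes_per_s(4, 1024, 4, 1.0))  # 196608.0
```

Because the baseline count grows roughly as N², a simulator sized this way shows how quickly the input volume rises as the configuration is scaled towards the target.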

Verification through analysis


Verification through analysis includes sampling and correlating measured data and observed test results with calculated expected values to establish conformance with requirements.

Qualification models and prototypes will be verified by analysis where verification through testing is not possible.

Verification through Inspection

Verification through inspection involves evaluation by observation and judgment, accompanied by physical measurement or gauging, to assess conformance with specified requirements.

8.3 Simulators

Simulation will play an essential role in both qualification and acceptance verification. In particular, as part of an integrated, hierarchical testing framework, simulators of components that have multiple dependencies will allow much more robust testing than would otherwise be possible.

Ideally, all external dependencies of the SDP would be isolated behind simulators. This would mean simulating at least the following interfaces:

● SDP-TM (Telescope Manager)

● SDP-CSP (Central Signal Processor)

Such capabilities will also be of use to the other party in each interface, so there are clear opportunities for co-development.
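Isolating an external dependency behind a simulator works best when the SDP code is written against an interface that both the simulator and, later, the real counterpart implement. This Python sketch assumes a much-simplified SDP-CSP interface; `CspDataSource`, `SimulatedCsp` and `mean_power` are hypothetical names, and the seeded generator is chosen so that every test run sees an identical, reproducible input stream.

```python
import random
from collections.abc import Iterator
from typing import Protocol


class CspDataSource(Protocol):
    """Hypothetical SDP-side view of a much-simplified SDP-CSP interface."""
    def visibilities(self, n_samples: int) -> Iterator[complex]: ...


class SimulatedCsp:
    """Deterministic stand-in for CSP: seeded complex Gaussian samples."""

    def __init__(self, seed: int = 0) -> None:
        self._seed = seed

    def visibilities(self, n_samples: int) -> Iterator[complex]:
        rng = random.Random(self._seed)  # same seed gives an identical stream
        for _ in range(n_samples):
            yield complex(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))


def mean_power(source: CspDataSource, n_samples: int) -> float:
    """Toy SDP consumer written against the interface, not the simulator."""
    samples = list(source.visibilities(n_samples))
    return sum(abs(v) ** 2 for v in samples) / len(samples)


sim = SimulatedCsp(seed=42)
print(mean_power(sim, 10_000))
```

For unit-variance real and imaginary parts the mean power converges to 2, which gives a test a known expected value; the same consumer code could later be pointed at a real data source that implements the interface.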

8.4 Test Environments

Build Verification Environment

This environment is responsible for building SDP element components and performing build verification tests. Automated unit and component level tests will be executed in this environment as regular checkpoints on the integrity of the current build. A minimal hardware specification is used for this environment.

Development Test Environment

A test environment for ad-hoc integration (functional) testing of units and components. It allows development teams to test new units and components at a high level without going through the rigours of a configuration management process. It is also used for reproducing and troubleshooting defects that are not evident at unit level. A minimal hardware specification is used for this environment.

Integration Test Environment

A test environment used to perform functional integration testing of SDP units and components. This environment is formally controlled using change control and configuration management processes. Representative hardware is used for this environment.

Volume and Performance Test Environment

A test environment used to test non-functional requirements such as performance, capacity, resource usage and availability. This environment is formally controlled using change control and configuration management processes. Representative hardware is used for this environment.
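The four environments above can be captured as a small configuration table, which makes it easy to check, for example, where a given level of test runs. This Python sketch encodes only what this section states; `EnvironmentSpec`, `environments_for` and the test-level labels are hypothetical, and `None` marks a property the plan does not specify.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class EnvironmentSpec:
    name: str
    test_levels: frozenset[str]
    change_controlled: Optional[bool]  # None: not specified in this plan
    hardware: str                      # "minimal" or "representative"


ENVIRONMENTS = [
    EnvironmentSpec("build-verification", frozenset({"unit", "component"}), None, "minimal"),
    EnvironmentSpec("development",
                    frozenset({"ad-hoc integration", "defect reproduction"}), False, "minimal"),
    EnvironmentSpec("integration", frozenset({"functional integration"}), True, "representative"),
    EnvironmentSpec("volume-performance",
                    frozenset({"performance", "capacity", "resource usage", "availability"}),
                    True, "representative"),
]


def environments_for(level: str) -> list[str]:
    """Names of the environments in which a given level of test is run."""
    return [env.name for env in ENVIRONMENTS if level in env.test_levels]


controlled = [env.name for env in ENVIRONMENTS if env.change_controlled]
print(environments_for("unit"))  # ['build-verification']
print(controlled)                # ['integration', 'volume-performance']
```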

9 Verification Matrix

The verification matrix will detail each verification requirement placed on the SDP at all levels, listing the requirement under verification and its verification method.

The matrix will be developed early in the CDR phase, once the top-level requirements and design have been signed off at PDR.

EchoSign Document History: “PDR12PreliminaryQualificationandAcceptanceplan (1)”, February 09, 2015

Created: February 09, 2015

By: Verity Allan

Status: SIGNED

Transaction ID: XJEEYGIF2I443AX

History:

Document created by Verity Allan. February 09, 2015 - 2:51 PM GMT - IP address: 131.111.185.15

Document emailed to Simon Ratcliffe for signature. February 09, 2015 - 2:52 PM GMT

Document viewed by Simon Ratcliffe. February 09, 2015 - 2:58 PM GMT - IP address: 66.249.93.195

Document e-signed by Simon Ratcliffe. Signature Date: February 09, 2015 - 3:00 PM GMT - Time Source: server - IP address: 196.24.41.254

Document emailed to Paul Alexander for signature. February 09, 2015 - 3:00 PM GMT

Document viewed by Paul Alexander. February 09, 2015 - 6:41 PM GMT - IP address: 131.111.185.15

Document e-signed by Paul Alexander. Signature Date: February 09, 2015 - 6:41 PM GMT - Time Source: server - IP address: 131.111.185.15

Signed document emailed to Verity Allan, Paul Alexander and Simon Ratcliffe. February 09, 2015 - 6:41 PM GMT