Parallel treatment of block-bidiagonal matrices in the solution of ordinary differential boundary...


Journal of Computational and Applied Mathematics 45 (1993) 191-200 North-Holland

CAM 1289


Parallel treatment of block-bidiagonal matrices in the solution of ordinary differential boundary value problems

K. Wright

Computing Laboratory, University of Newcastle-upon-Tyne, United Kingdom

Received 18 October 1991 Revised 23 March 1992

Abstract

Wright, K., Parallel treatment of block-bidiagonal matrices in the solution of ordinary differential boundary value problems, Journal of Computational and Applied Mathematics 45 (1993) 191-200.

Ignoring boundary conditions, a number of schemes such as multiple shooting give rise to systems of equations in block-bidiagonal form. With some preliminary transformation others, such as collocation, can be reduced to this form. An algorithm which reduces a block-bidiagonal system of size n x (n + 1) to a system of size 1 x 2 blocks will be described. These equations can then be combined with the boundary conditions and the solution completed. The reduction uses ideas similar to “recursive doubling” and “block-cyclic reduction”, but full row interchanges are included. The algorithm is designed to facilitate the use of parallel processes. The algorithm has been implemented on the 14-processor Encore Multimax shared-memory multiprocessor in Newcastle. Results will be described for different numbers of processors and the algorithm compared with other possibilities.

Keywords: Block-bidiagonal matrices; parallel algorithms; ordinary differential boundary value problems.

1. Introduction

Almost block diagonal matrices of various types arise from a variety of applications, one particularly important area being the numerical solution of ordinary differential boundary value problems. A survey of methods for sequential machines is given in [3]. Here a particular form of matrix is considered which arises, for example, in the multiple-shooting method and the Keller [5] box scheme for boundary value problems. If the range of the differential equation is subdivided into n subintervals and the boundary conditions ignored for the moment, these schemes give rise to a block-bidiagonal matrix with n diagonal blocks. With some preliminary transformation other methods such as collocation can give rise to matrices of this form.

Correspondence to: Dr. K. Wright, Computing Laboratory, University of Newcastle-upon-Tyne, Newcastle-upon-Tyne NE1 7RU, United Kingdom.

0377-0427/93/$06.00 © 1993 - Elsevier Science Publishers B.V. All rights reserved

K. Wright / Parallel methods for solving ODEs

Most previous methods assume separated boundary conditions and put the left-hand boundary condition equations first and the right-hand conditions last. Then various elimination schemes are possible working from left to right, for example, [1,2,7]. In the present algorithm the unknowns are eliminated in a different order so that at the final stage the bidiagonal system is reduced to just two blocks which relate the unknowns corresponding to the end points. This is based on the ideas of block-cyclic reduction and recursive doubling, which are discussed, for example, in [4, Chapter 5]. The methods described in [4], however, do not allow for row interchanges in the elimination process. The method presented here does allow for row interchanges, but also allows a simple parallel implementation similar to the methods discussed in [4]. Naturally the interchanges involve some extra work and extra storage. The algorithm can be interpreted geometrically as repeatedly eliminating variables associated with the common point of two adjacent intervals.

Since this paper was originally prepared, a report [9] has appeared which describes essentially the same algorithm and a “blocked” form of it. This follows the report [8] which uses a similar strategy but with a QR rather than an LU decomposition. A detailed consideration of numerical stability is also given in [9]. Other parallel algorithms for this problem based on the work in [2,7] are considered in [6].

Two variants of the algorithm are described: one for a single right-hand side, the other based on matrix factorization to allow for delayed solution of the equations.

The algorithms were implemented on a shared-memory bus-connected Encore Multimax multiprocessor in Newcastle. This machine has fourteen NS32532 processors with 256Kb cache memory per processor and runs the UMAX operating system. This is a multi-user system, and does not allow the programmer to have direct control of the allocation of processors or storage. Timings relate to elapsed times and the best time over a number of runs is given to allow for delays caused by other users.

2. Outline of the algorithm

Suppose that the differential system is of order m, for example a system of m first-order simultaneous differential equations or a single equation of order m. The differential equation approximation gives rise to a block-bidiagonal matrix with block size of m x m with m unknowns associated with each subdivision point of the range. Suppose also that the given range [a, b] is subdivided by points $a = t_1 < t_2 < \cdots < t_n < t_{n+1} = b$. At each point $t_j$ we have a set of m unknowns $y_j$. Putting the boundary conditions to one side we have a set of equations with an n x (n + 1) block-bidiagonal matrix. In the first stage of the algorithm the $y_j$ with even subscript j and j < n are eliminated. This gives rise to a new set of simultaneous equations, and the matrix involved is also of bidiagonal form. The process is then repeated at each stage giving a new set of equations of roughly half the size of the previous set. The process is continued until a set of equations with two blocks remains. These equations relate the y values at the end points a and b, and so can now be combined with the boundary conditions giving a 2m x 2m system which can be solved directly for these end values. Note that with this scheme there is little advantage to having separated boundary conditions. Having found these values, back-substitution can be carried out in stages in the reverse order to the elimination.
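For reference, the structure just described can be written out explicitly. The block names $A_i$, $B_i$ follow Section 2.1; the right-hand sides $f_i$, the reduced blocks $\hat A$, $\hat B$, $\hat f$ and the boundary-condition data $B_a$, $B_b$, $\beta$ are notation assumed here for illustration only.

```latex
% Block-bidiagonal system before the boundary conditions are added
\[
\begin{pmatrix}
A_1 & B_1 &        &        &     \\
    & A_2 & B_2    &        &     \\
    &     & \ddots & \ddots &     \\
    &     &        & A_n    & B_n
\end{pmatrix}
\begin{pmatrix} y_1 \\ y_2 \\ \vdots \\ y_{n+1} \end{pmatrix}
=
\begin{pmatrix} f_1 \\ f_2 \\ \vdots \\ f_n \end{pmatrix}
\]
% After the last stage one block equation links the two end points;
% with the boundary conditions it forms a 2m x 2m system
\[
\hat A\, y_1 + \hat B\, y_{n+1} = \hat f ,
\qquad
\begin{pmatrix} \hat A & \hat B \\ B_a & B_b \end{pmatrix}
\begin{pmatrix} y_1 \\ y_{n+1} \end{pmatrix}
=
\begin{pmatrix} \hat f \\ \beta \end{pmatrix}
\]
```

Written this way, nonseparated boundary conditions simply mean that both $B_a$ and $B_b$ are full, which does not change the size of the final system.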


If a single solution to the equations is required, then the data can be organised so that only one extra block of storage is required for each block row giving n extra blocks in all. If matrix factorization is used to allow for delayed solution of the equations, then a further extra block is required at each elimination step; this requires 2n extra blocks altogether.

2.1. Simple solution

Suppose the diagonal blocks of the matrix are denoted by Ai, the superdiagonals by Bi and the “fill-in blocks” by Ci, i = 1, ..., n. The basis of the algorithm is to eliminate the variables two successive block rows have in common. If full row interchanges are included, this will cause fill-in in both block rows, to the right on the first row and to the left on the second row. For simplicity consider block rows 1 and 2. On row 1 the blocks are arranged as A1, B1, C1; on the second row as C2, A2, B2. Both C1 and C2 are set to zero initially. The elimination proceeds by choosing as pivot the largest element from the first columns of B1 and A2 considered together. Rows are interchanged to put this pivotal element into the (1,1) position of the A2 block.

Gaussian elimination is then used to eliminate the corresponding variable from the set of equations in both block rows. A similar elimination based on the diagonals of A2 is used for the other common variables of the two block rows. Note that all six blocks may be modified in this process. This leaves an upper triangular matrix in A2 with other information for the back-substitution in C2 and B2. The blocks A1 and C1 contain equations relating the outer variables needed for use in the next stage. The block B1 is in principle zero, though in practice it contains information which is no longer required. This allows B1 to be overwritten by C1, and then this block row contains information in the same form as it was initially.

Note that this calculation does not affect any other block rows of the matrix, so that this process can be applied to each pair of rows 2j - 1 and 2j, $j = 1, \ldots, \lfloor n/2 \rfloor$, and that all the reductions are independent, so that they can be performed in parallel. At the end of the stage when all these reductions have been carried out, the odd block rows contain a new block-bidiagonal matrix of roughly half the size of the original. The same technique can clearly be applied to the new matrix and the whole process repeated until there remains just a single block equation with two blocks relating the unknowns corresponding to the ends of the interval. Note that if the number of block rows is odd, then the unpaired block is just passed on to the next stage unaltered, so this causes no difficulty.
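The pairing of block rows over successive stages can be listed with a few lines of code. This is a minimal sketch of the bookkeeping only, not of the arithmetic; the function name `reduction_schedule` is hypothetical and block rows are numbered from 1 as in the text.

```python
def reduction_schedule(n):
    """List, stage by stage, the pairs of block rows that are reduced together.

    Block rows are numbered 1..n. After a pair is reduced, the first row of
    the pair carries the new block equation; an unpaired last row is passed
    on to the next stage unchanged.
    """
    active = list(range(1, n + 1))      # block rows still in play
    stages = []
    while len(active) > 1:
        pairs = [(active[k], active[k + 1])
                 for k in range(0, len(active) - 1, 2)]
        stages.append(pairs)
        active = active[0::2]           # first row of each pair, plus any unpaired row
    return stages


if __name__ == "__main__":
    for stage, pairs in enumerate(reduction_schedule(5), start=1):
        print(f"stage {stage}: {pairs}")
```

For n = 5 the stages are (1,2),(3,4), then (1,3), then (1,5): the unpaired fifth row is carried forward twice before it is finally combined. The active set shrinks from k rows to the integer part of (k + 1)/2 at each stage, so the number of stages grows like log2 n.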

The final block equation can be combined with the boundary equations and solved directly. If only values corresponding to the end points are required, the process need go no further. If the interior values are required, back-substitution must be carried out. This is done in stages in the reverse order to the elimination.
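The whole process for a single right-hand side (pairwise elimination with row interchanges, the final 2m x 2m solve, and staged back-substitution) can be sketched in NumPy as below. This is an illustrative sketch under stated assumptions, not the paper's Fortran implementation: points are numbered from 0, each block row is held as a tuple with explicit left and right indices rather than in the in-place storage described in the text, and the names `reduce_pair`, `solve_block_bidiagonal`, `Ba`, `Bb` and `beta` are hypothetical.

```python
import numpy as np


def reduce_pair(row1, row2):
    """Eliminate the unknowns shared by two adjacent block rows.

    A row is (left, right, L, R, f), meaning  L @ y[left] + R @ y[right] = f.
    Returns the reduced row and a record used later for back-substitution.
    """
    a, b, L1, R1, f1 = row1
    _, c, L2, R2, f2 = row2
    m = L1.shape[0]
    Z = np.zeros((m, m))
    # Column order: shared unknowns y[b] | y[a] | y[c] | right-hand side.
    W = np.block([[R1, L1, Z, f1[:, None]],
                  [L2, Z, R2, f2[:, None]]]).astype(float)
    for k in range(m):
        # The pivot search runs over both block rows (full row interchanges).
        p = k + int(np.argmax(np.abs(W[k:, k])))
        W[[k, p]] = W[[p, k]]
        W[k + 1:] -= np.outer(W[k + 1:, k] / W[k, k], W[k])
    top, bottom = W[:m], W[m:]
    # top:    U y[b] + Ca y[a] + Cc y[c] = g   (U upper triangular up to rounding)
    # bottom: equations linking only the outer unknowns y[a] and y[c]
    record = (b, a, c, top[:, :m], top[:, m:2 * m], top[:, 2 * m:3 * m], top[:, -1])
    reduced = (a, c, bottom[:, m:2 * m], bottom[:, 2 * m:3 * m], bottom[:, -1])
    return reduced, record


def solve_block_bidiagonal(A, B, f, Ba, Bb, beta):
    """Solve A[i] y[i] + B[i] y[i+1] = f[i], i = 0..n-1, with Ba y[0] + Bb y[n] = beta."""
    n, m = len(A), A[0].shape[0]
    rows = [(i, i + 1, A[i], B[i], f[i]) for i in range(n)]
    stages = []
    while len(rows) > 1:
        # The pair reductions within one stage are independent of each other.
        results = [reduce_pair(rows[k], rows[k + 1])
                   for k in range(0, len(rows) - 1, 2)]
        new_rows = [reduced for reduced, _ in results]
        if len(rows) % 2:                # unpaired row is passed on unchanged
            new_rows.append(rows[-1])
        stages.append([record for _, record in results])
        rows = new_rows
    _, _, Ah, Bh, fh = rows[0]
    # Two remaining blocks plus the boundary conditions: a 2m x 2m system.
    ends = np.linalg.solve(np.block([[Ah, Bh], [Ba, Bb]]),
                           np.concatenate([fh, beta]))
    y = np.zeros((n + 1, m))
    y[0], y[n] = ends[:m], ends[m:]
    for records in reversed(stages):     # back-substitution in reverse stage order
        for b, a, c, U, Ca, Cc, g in records:
            y[b] = np.linalg.solve(U, g - Ca @ y[a] - Cc @ y[c])
    return y
```

A call such as `solve_block_bidiagonal(A, B, f, np.eye(m), np.eye(m), beta)` uses the nonseparated condition y[0] + y[n] = beta and costs no more than a separated one. If only the end values are wanted, the final loop can be dropped.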

The matrix may be conveniently stored as three 1-dimensional arrays of blocks, equivalent to three 3-dimensional arrays of elements, or as a 2-dimensional array of blocks equivalent to a 4-dimensional array of elements. Some care is needed to keep track of the position associated with the unknowns corresponding to the different blocks. This is done by keeping three indices, left, centre and right, corresponding to each block row. Initially just the left and right indices are set to the positions corresponding to the two sets of variables referred to. When an elimination is carried out, these indices are updated and a centre index set to indicate the position corresponding to the variables for the upper triangular matrix A2 in the central position in the second block.


2.2. Solution using matrix factorization

The main difference needed in the matrix factorization form of the algorithm is that after elimination has been applied to a pair of block rows the multipliers used in the elimination need to be stored and a record of interchanges kept. The multipliers are usually kept in the parts of the matrix where elimination has taken place. This is convenient for the triangular matrix in the A2 position. To allow the B1 block to be overwritten as in the simple method, the multipliers corresponding to the B1 block can be kept in an extra block D2 associated with the second row of the pair. In a similar manner a record of interchanges can be kept in a vector associated with the second block row. An extra forward-substitution phase is now needed. This can be organised in precisely the same way as the elimination.
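The idea of recording multipliers and interchanges so that a right-hand side can be processed later may be sketched for a single pair of block rows as follows. This is a simplified illustration: the multipliers and pivot positions are kept in Python lists rather than in the A2 position and an extra block as the text describes, and the names `factor_pair` and `forward_substitute` are hypothetical.

```python
import numpy as np


def factor_pair(W):
    """Eliminate the first m columns of a 2m-row array W, recording each step.

    The first m columns of W hold the shared unknowns (B1 stacked on A2).
    Returns the eliminated array, the pivot row chosen at each step, and
    the multipliers used at each step.
    """
    W = np.array(W, dtype=float)
    m = W.shape[0] // 2
    swaps, mults = [], []
    for k in range(m):
        p = k + int(np.argmax(np.abs(W[k:, k])))   # pivot over both block rows
        W[[k, p]] = W[[p, k]]
        mult = W[k + 1:, k] / W[k, k]              # multipliers for rows below k
        W[k + 1:] -= np.outer(mult, W[k])
        swaps.append(p)
        mults.append(mult)
    return W, swaps, mults


def forward_substitute(rhs, swaps, mults):
    """Replay the recorded interchanges and row operations on a new right-hand side."""
    g = np.array(rhs, dtype=float)
    for k, (p, mult) in enumerate(zip(swaps, mults)):
        g[[k, p]] = g[[p, k]]
        g[k + 1:] -= mult * g[k]
    return g
```

Because the pivot choice depends only on the matrix columns, replaying the record on a right-hand side gives the same vector as eliminating with that right-hand side attached from the start. The first m entries feed the back-substitution and the last m entries form the right-hand side of the reduced block row.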

3. Implementation

These algorithms have been implemented in Encore Parallel Fortran (EPF) which is an extension of FORTRAN77. The extensions include mechanisms for setting up parallel tasks and synchronisation. The EPF parallel version was particularly simple to implement using “DOALL” loops. With these loops the calculations for all values of the loop control variable are considered as potentially parallel, and the system assigns the different calculations to the available processors. Such loops were used to carry out the pair block row eliminations at each stage of the elimination, and similar loops were used for the back-substitution. The only synchronisation needed is a “barrier” at the end of each group of pair eliminations or back-substitutions.

The use of “DOALL” loops provides dynamic allocation of tasks to processors, which is particularly appropriate in a multi-user environment where the program does not have control of the number of processors available. This contrasts to the approach used in [9] where the allocation is predetermined.
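The control structure (a loop of independent tasks per stage, followed by a barrier) can be mimicked in Python with a thread pool. This is only an analogy for the EPF “DOALL” construct, not a rendering of the original Fortran; `run_stage`, `reduce_in_stages` and the `task` argument are hypothetical names, and the pool hands tasks to workers dynamically in the same spirit as the system-assigned loop iterations described above.

```python
from concurrent.futures import ThreadPoolExecutor


def run_stage(task, pairs, workers=4):
    """Apply `task` to every pair of block rows in one stage.

    Collecting the results of `pool.map` acts as the barrier: the function
    returns only when every pair in the stage has been processed.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(task, pairs))


def reduce_in_stages(n, task, workers=4):
    """Run the staged reduction for n block rows (numbered 1..n)."""
    active = list(range(1, n + 1))
    results = []
    while len(active) > 1:
        pairs = [(active[k], active[k + 1])
                 for k in range(0, len(active) - 1, 2)]
        results.append(run_stage(task, pairs, workers))   # barrier at end of stage
        active = active[0::2]     # unpaired last row is carried forward
    return results
```

Here `task` stands for the elimination applied to one pair of block rows. The number of workers need not match the number of pairs, which is the property that makes this style suitable when the number of available processors is not known in advance.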

4. Collocation equations

Two methods for reducing matrices arising from piecewise polynomial collocation to block-bidiagonal form are described below. If, for example, a local Chebyshev or power series representation is used for an mth-order system, a block corresponding to a central interval would have the form of a rectangle with more rows than columns. It would consist of m equations giving join conditions with the previous interval, p equations from the differential equation(s), and a further m equations representing join conditions to the next interval. The block would have p + m columns.

The first reduction method introduces new variables representing m values at the join points. (These may be function values for a first-order system or function and derivative values for higher-order equations.) These introduce extra columns to the block, essentially adjoining an m x m block unit matrix at the top left-hand corner and one at the bottom right-hand corner. Gaussian elimination with row interchanges can now be applied to eliminate the original variables. This leaves m equations in 2 x m unknowns, that is a block row of the required bidiagonal form. The same technique can be applied to the end intervals if equations involving end values are added. The boundary conditions may then be written directly in terms of the end values.
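A toy instance of the first reduction method, for one interval with m = 1, may help fix ideas. The sketch below assumes a scalar equation y' = q y + r on [0, h], a power series with p + 1 coefficients, and p Gauss points; all of these choices, and the names `eliminate_leading_columns` and `reduced_row_scalar`, are assumptions made for illustration and are not taken from the paper.

```python
import numpy as np


def eliminate_leading_columns(G, ncols):
    """Gaussian elimination with row interchanges on the first ncols columns.

    Returns the rows left over once those columns have been eliminated,
    restricted to the remaining columns.
    """
    G = np.array(G, dtype=float)
    for k in range(ncols):
        p = k + int(np.argmax(np.abs(G[k:, k])))
        G[[k, p]] = G[[p, k]]
        G[k + 1:] -= np.outer(G[k + 1:, k] / G[k, k], G[k])
    return G[ncols:, ncols:]


def reduced_row_scalar(q, r, h, p):
    """Reduce one collocation block for y' = q*y + r on [0, h] (m = 1).

    Unknowns: coefficients c_0..c_p of y(s) = sum c_j s^j, plus the new
    join values u = y(0) and v = y(h). Returns [a, b, g] with a*u + b*v = g.
    """
    x, _ = np.polynomial.legendre.leggauss(p)   # Gauss points on [-1, 1]
    tau = 0.5 * h * (x + 1.0)                   # mapped to (0, h)
    j = np.arange(p + 1)
    G = np.zeros((p + 2, p + 4))                # columns: c_0..c_p | u | v | rhs
    G[0, 0], G[0, p + 1] = -1.0, 1.0            # join row: u - y(0) = 0
    for k, t in enumerate(tau, start=1):        # collocation rows: y'(t) - q*y(t) = r
        deriv = np.zeros(p + 1)
        deriv[1:] = j[1:] * t ** (j[1:] - 1)
        G[k, :p + 1] = deriv - q * t ** j
        G[k, -1] = r
    G[-1, :p + 1] = -(h ** j)                   # join row: v - y(h) = 0
    G[-1, p + 2] = 1.0
    return eliminate_leading_columns(G, p + 1)[0]
```

With q = 0 and r = 1 the exact solution satisfies v - u = h, and the reduced row reproduces this up to scaling: a = -b and g = b h. The unit entries in the u and v columns of the first and last rows are the m x m unit matrices adjoined at the two corners of the block.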


The second method uses a selection of the original variables. This is done by applying column interchanges to find pivots in the rows corresponding to the differential equation(s), and then using row Gaussian elimination with these pivots for the whole block. This elimination can be performed with p pivots, and leaves 2 x m equations in m unknowns, that is a block column of the required bidiagonal form. Minor modifications are needed to the two end blocks to produce the required full bidiagonal matrix.

This technique clearly applies to any matrix with the same shape, and a similar idea can be used for matrices having the transpose of this form.

Note that for both methods the calculations for each subinterval and corresponding block are independent of each other and so can simply be performed in parallel. The second technique involves less work than the first, but the first does have the advantage that the variables associated with the bidiagonal form have an obvious significance for the differential equation.

5. Operation counts

The operation count for the elimination phase is $\sim \tfrac{23}{3} n m^3$ multiplications for large n and m.

For the NAG [10] matrix decomposition subroutine F01LHF, which was used for comparison and which uses row and column interchanges in a similar way to the method described in [2], the corresponding count is $\sim \tfrac{1}{3} n \left(5m^3 + 3mp(m - p)\right)$, where p is the number of left-hand boundary conditions. This indicates that for separated boundary conditions the new method uses significantly more arithmetic, with work ratios lying between 4 and 4.6. However, these operation counts give only a very rough guide, as for both methods there is significant overhead.
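The quoted range of work ratios can be checked directly. The display below is a sketch that takes the leading coefficients of the two counts to be 23/3 and 1/3; this is an assumption, chosen because it reproduces the stated limits of 4 and 4.6, and the ratio depends only on the quotient of the two coefficients.

```latex
\[
R(p) \;=\; \frac{\tfrac{23}{3}\, n m^3}{\tfrac{1}{3}\, n \bigl(5m^3 + 3mp(m-p)\bigr)}
      \;=\; \frac{23\, m^2}{5m^2 + 3p(m-p)},
\qquad 0 \le p \le m .
\]
% p(m - p) is 0 at p = 0 or p = m and at most m^2/4 (at p = m/2), so
\[
\frac{23}{5 + \tfrac{3}{4}} = 4 \;\le\; R(p) \;\le\; \frac{23}{5} = 4.6 .
\]
```

The ratio is largest when all boundary conditions sit at one end (p = 0 or p = m) and smallest when they are split evenly between the two ends.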

6. Results

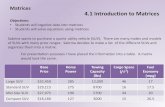

Firstly, results comparing the different ways of solving a bidiagonal system will be considered. Figures 1 to 5 display graphs illustrating the behaviour for various block sizes and numbers of blocks for equations arising from multiple shooting and collocation. As the Bj blocks in the multiple-shooting case are always unit matrices, and other zeros are likely to be present, a minor modification was included in the elimination. This involved testing whether a multiplier was zero and if so, no row subtraction was carried out.

The graphs give the times for the new algorithm divided by those obtained with the NAG subroutines F01LHF and F04LHF, which make no use of the parallel facilities. The comparison is very much affected by block size. For block size m = 2 the new algorithm is quicker even using a single processor, and the speedup with more processors is reasonably good. For block size m = 4 the times on a single processor are similar, though the new algorithm gives slightly better times for 50 and 100 blocks. With two or more processors there is a significant gain in time. For block size m = 8 there is a significant difference between the results for multiple shooting and collocation. On a single processor times are roughly twice as long for collocation compared to the NAG routines, while for multiple shooting the factor is between 1 and 1.2. The speedup with more processors is reasonably good, though for twenty blocks there seems little advantage in using more than eight processors, and for collocation in this case there is only a marginal gain over the NAG routines. With block size sixteen the new method gives much poorer results than NAG on a single processor, with a ratio between 2.5 and 3.5. There is good speedup relative to the same algorithm on a single processor, so that with 50 or more blocks there is some advantage in using the parallel version.

Fig. 1. Collocation; block size 2. (Horizontal axis: number of processors.)

Fig. 2. Collocation; block size 4. (Horizontal axis: number of processors.)

Fig. 3. Collocation; block size 8. (Horizontal axis: number of processors; one curve for each number of block rows, n = 20, 50, 100, 200.)

The solution of the bidiagonal system is not necessarily the dominant part of the calculation. The set-up time for the equations can be substantial, particularly for multiple shooting. With collocation, particularly with a lot of collocation points, the time for reduction from the original matrix to bidiagonal form along with the equation set-up time can also be significant. To illustrate this, Fig. 6 displays some graphs of total times for different programs. These give total times for a number of ways of solving the collocation equations for the fourth-order boundary value problem

$$ y^{(4)} + t\,y''' + y = (2 + t)\,e^{t}, $$

with

$$ y(0) = 1, \quad y'(0) = 1, \quad y''(0) = 1, \quad y(1) = e, $$

which has the solution $y = e^{t}$. The results are for 300 subintervals with ten Gauss points in each interval.
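That the stated function solves the test problem follows in one line, since every derivative of the exponential is the exponential itself. The check below uses only the equation and boundary conditions given above.

```latex
\[
y = e^{t} \;\Longrightarrow\; y' = y'' = y''' = y^{(4)} = e^{t},
\qquad
y^{(4)} + t\,y''' + y = e^{t} + t\,e^{t} + e^{t} = (2 + t)\,e^{t},
\]
\[
y(0) = y'(0) = y''(0) = e^{0} = 1, \qquad y(1) = e .
\]
```

Three conditions are imposed at t = 0 and one at t = 1, so for this problem the block size is m = 4 and the boundary conditions are separated.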

Fig. 4. Multiple shooting; block size 8. (Horizontal axis: number of processors.)

Fig. 5. Multiple shooting; block size 16. (Horizontal axis: number of processors.)

Results for three versions using the NAG routines are given. The first (Naga) uses the first method of reduction to bidiagonal form; the second (Nagb) uses the second method of reduction to bidiagonal form. These use parallel calculation for the set-up, reduction and final extension of the solution. The third (Nagc) uses the NAG routines without any preliminary reduction; parallelisation is applied only in the set-up phase. The next two methods use the first reduction method followed by two versions of the new block-bidiagonal solver: the first (e) does not check for zero multipliers, while the second (f) makes the check and avoids the subtraction of rows. In both these programs all parts (set-up, reduction to block-bidiagonal form and solution) use parallel constructs. The algorithm (g) uses the second method of reduction to bidiagonal form followed by solution taking account of zero multipliers.

Fig. 6. Collocation; 10 point, m = 4, n = 300. (Horizontal axis: number of processors.)

First note that method (f) here is only marginally quicker than method (e), so that with collocation there is little advantage in testing for zero multipliers. For multiple-shooting equations the difference is usually more significant. The preliminary reduction is clearly well worthwhile when using the NAG routines. Even on a single processor the Nagc version is only marginally better than Nagb. Method (g) comes out best, slightly better than using the NAG routines after reduction. However, it should be noted that here the solution time is a fairly small proportion of the whole, being between 0.5 and 0.6 seconds on a single processor, with the whole calculation taking about seven seconds on a single processor.

Although detailed comparison with the results of [9] is not straightforward, it is clear that the lower arithmetic speed relative to data access speed on the Multimax compared to the CRAY and Alliant machines is important. This is in addition to the scalar nature of the processors of the Multimax compared to vector processors of the others.

It is interesting to note that the algorithm described here, equivalent to the “cyclic reduction” version of [9], is recommended there as particularly suitable for vector processors, while methods treating a number of blocks together appear better for the parallel case. This seems to be on account of reduced overhead and better data access. The Multimax results confirm this for small block sizes (≤ 4), but for larger sizes the good relative speedup indicates that there is likely to be little advantage. However, more experiments are needed to check these indications.

Another important difference is that good speedup is obtained on the Multimax for significantly smaller matrix sizes than on the Alliant and CRAY results in [9]. This is again likely to be related to the relative arithmetic speeds.

7. Conclusions

For the complete calculation there is considerable variation with both original and final block sizes, so it is not easy to come to a firm conclusion. Nevertheless it is clear that significant time savings can be made using parallel facilities, and that the recursive-doubling algorithm is good for a lot of small blocks and not so good for larger block sizes.

Apart from the timing differences, it is important to emphasise some other advantages of the “recursive-doubling” solution algorithm. Firstly, no more work is required for nonseparated boundary conditions than for separated. Secondly, if only end values of the solution are required (as sometimes happens, for example in some parameter estimation problems), most of the back-substitution stage can be omitted. Sensitivity of these end values to changes in the boundary conditions can be checked very easily as the decomposition and forward substitution phases only need to be done once except for the final block where the boundary conditions are introduced.

As full row interchanges are carried out with the “recursive-doubling” scheme, no serious problem from growth of round-off error is expected unless the matrix is very large, and in the results obtained no problem was apparent in simple checks on the accuracy of the results. Numerical stability is considered more systematically in [9] where it is shown that the situation is significantly better than for a general matrix.


Further savings are possible for the parallel versions by overlapping different stages, and by overlapping the set-up and reduction phases with the decomposition. This naturally would significantly complicate the program, and it is not clear how much saving this would give.

This algorithm is very convenient to implement on a shared-memory multiprocessor, and does make reasonably efficient use of the parallel facilities, but the extra work compared to the row and column interchange method makes the results rather disappointing. However, it is clearly a viable parallel algorithm, particularly for differential equations with nonseparated boundary conditions.

References

[1] C. de Boor and R. Weiss, SOLVEBLOK: a package for solving almost block diagonal linear systems, ACM Trans. Math. Software 6 (1980) 80-87.

[2] J.C. Diaz, G. Fairweather and P. Keast, FORTRAN packages for solving certain almost block diagonal linear systems by modified row and column elimination, ACM Trans. Math. Software 9 (1983) 368-375.

[3] R. Fourer, Staircase matrices and systems, SIAM Rev. 26 (1984) 1-70.

[4] R.W. Hockney and C.R. Jesshope, Parallel Computers 2 (Adam Hilger, London, 1988).

[5] H.B. Keller, Numerical Solution of Two-Point Boundary Value Problems (SIAM, Philadelphia, PA, 1976).

[6] M. Paprzycki and I. Gladwell, Solving almost block diagonal systems on parallel computers, Parallel Comput. 17 (2&3) (1991) 133-153.

[7] J.M. Varah, Alternate row and column elimination for solving certain linear systems, SIAM J. Numer. Anal. 13 (1976) 71-75.

[8] S.J. Wright, Stable parallel algorithms for two-point boundary value problems, Preprint MCS-P178-0990, Math. Comput. Sci. Div., Argonne National Lab., 1990.

[9] S.J. Wright, Stable parallel elimination for boundary value ODEs, Preprint MCS-P229-0491, Math. Comput. Sci. Div., Argonne National Lab., 1991.

[10] NAG Fortran Library Manual Mark 13, NAG Ltd., Oxford, 1988.