
COMBINED STATIC AND DYNAMIC VARIANCE ADAPTATION FOR EFFICIENT INTERCONNECTION OF SPEECH ENHANCEMENT PRE-PROCESSOR WITH SPEECH RECOGNIZER

Marc Delcroix, Tomohiro Nakatani, Shinji Watanabe

NTT Communication Science Laboratories, NTT Corporation

A Maximum-Likelihood Approach to Stochastic Matching for Robust Speech Recognition (Ananth Sankar and Chin-Hui Lee, Speech Research Department, AT&T Bell Laboratories)


Outline

• Introduction
• The stochastic matching framework
• Estimation of Model-Space Transformation
• Dynamic variance compensation
• Proposed method for variance calculation
• Experiment
• Conclusion

Introduction (3-1)

• Conventionally there are two approaches for reducing the mismatch between the training and test conditions, namely model based approaches and feature based approaches.

• The model adaptation relies on likelihood maximization, which assures a reduction in the mismatch.

• Adaptive training is effective in removing static mismatch caused for example by speaker variations. However, it may not cope well with any mismatch arising for example from non-stationary noise or reverberation.

• Feature based approaches estimate clean speech features from the observed speech. For example, speech enhancement methods can be used as a pre-processor to ASR.

Introduction (3-2)

• Many speech enhancement algorithms can efficiently reduce non-stationary noise. However, remaining noise or the excessive removal of noise may introduce distortions that prevent high recognition performance.

• One kind of method uses information on feature reliability to improve the performance of enhancement.
– Some methods focus on reliable feature components during decoding.
– Dynamic variance compensation increases the model variance for unreliable feature components by adding the variance of the enhanced feature.

• Several proposals have therefore been made for estimating the variance of the enhanced feature.
– They usually depend on the speech enhancement pre-processor.
– They lack generality. For example, the feature variance is derived from a Gaussian mixture model of clean speech.

Introduction (3-3)

• The generality of the feature variance calculation could be increased by approximating it with the estimated observed noise.

• However, the estimated variance may be far from the Oracle variance (i.e. the distance between clean and enhanced speech features).

• We propose introducing a dynamic variance compensation scheme into a static adaptive training framework.
– Design a parametric model for the feature variance that includes static and dynamic components.
– The dynamic component is derived from the speech enhancement pre-processor output as the estimated observed noise.
– This can be performed for any pre-processor, which assures generality.
– The parameters of the variance model are optimized using an adaptive approach and may therefore come closer to the Oracle feature variance.

The stochastic matching framework (3-1)

• The stochastic matching algorithm operates only on the given test utterance and the given set of speech models; no additional training data is required for the estimation of the mismatch.

• In speech recognition, the models $\Lambda_X$ are used to decode $Y$ using the maximum a posteriori decoder

$$W' = \arg\max_{W} p(Y \mid W, \Lambda_X)\, p(W)$$

• In the feature-space, if the distortion of the original speech $X$ into $Y$ is invertible, then we may map $Y$ back to the original speech $X$ with an inverse function $F_\nu$, so that

$$X = F_\nu(Y)$$

The stochastic matching framework (3-2)

• In the model-space, consider the transformation $G_\eta$ so that

$$\Lambda_Y = G_\eta(\Lambda_X)$$

• One approach to decreasing the mismatch between $Y$ and $\Lambda_X$ is to find the parameters $\nu$ or $\eta$, and the word sequence $W$, that maximize the joint likelihood of $Y$ and $W$ in

$$W' = \arg\max_{W} p(Y \mid W, \Lambda_X)\, p(W)$$

• Thus in the feature-space, we need to find $\nu'$ such that

$$(\nu', W') = \arg\max_{(\nu, W)} p(Y, W \mid \nu, \Lambda_X)$$

• Correspondingly, in the model-space, we need to find $\eta'$ such that

$$(\eta', W') = \arg\max_{(\eta, W)} p(Y, W \mid \eta, \Lambda_X)$$

The stochastic matching framework (3-3)

• To simplify expressions, we remove the dependence on $W$, so that the maximum-likelihood estimation becomes

$$\nu' = \arg\max_{\nu} p(Y \mid \nu, \Lambda_X), \qquad \eta' = \arg\max_{\eta} p(Y \mid \eta, \Lambda_X)$$

• For this study, we assume that $\Lambda_X$ is a set of left-to-right continuous density subword HMMs, therefore the equations above can be written as

$$\nu' = \arg\max_{\nu} \sum_{S} \sum_{C} p(Y, S, C \mid \nu, \Lambda_X)$$

$$\eta' = \arg\max_{\eta} \sum_{S} \sum_{C} p(Y, S, C \mid \eta, \Lambda_X)$$

where $S$ is the state sequence and $C$ is the mixture component sequence.

• In general, it is not easy to estimate $\nu'$ or $\eta'$ directly. However, for some $F_\nu$ and $G_\eta$, we can use the EM algorithm to iteratively improve on a current estimate and obtain a new estimate such that the likelihoods in the equations above increase at each iteration.
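As an illustration of the EM re-estimation described above, the sketch below assumes the simplest feature-space mapping, an additive bias on a single feature dimension (x_t = y_t - bias), and replaces the HMM with a Gaussian mixture so that only the mixture component is hidden. These simplifications and the names `log_likelihood` and `em_bias_step` are assumptions made for this example, not something taken from the slides.

```python
import math

def gauss_pdf(x, mean, var):
    """Density of a univariate Gaussian N(x; mean, var)."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def log_likelihood(ys, bias, weights, means, variances):
    """log p(Y | bias, model) under the assumed mapping x_t = y_t - bias."""
    total = 0.0
    for y in ys:
        total += math.log(sum(w * gauss_pdf(y - bias, mu, var)
                              for w, mu, var in zip(weights, means, variances)))
    return total

def em_bias_step(ys, bias, weights, means, variances):
    """One EM iteration for the bias (no log-domain safeguards in this sketch)."""
    num = 0.0
    den = 0.0
    for y in ys:
        # E-step: posterior of each mixture component under the current bias.
        joint = [w * gauss_pdf(y - bias, mu, var)
                 for w, mu, var in zip(weights, means, variances)]
        norm = sum(joint)
        for p, mu, var in zip(joint, means, variances):
            gamma = p / norm
            # M-step accumulators: closed-form maximizer of the auxiliary function.
            num += gamma * (y - mu) / var
            den += gamma / var
    return num / den
```

Starting from a bias of 0 and calling `em_bias_step` repeatedly should never decrease `log_likelihood`, which is the property the EM argument relies on.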

Estimation of Model-Space Transformation

• Let the observation sequence $Y = \{y_1, \ldots, y_T\}$ be related to the original utterance $X = \{x_1, \ldots, x_T\}$ and the distortion sequence $B = \{b_1, \ldots, b_T\}$ by

$$y_t = f(x_t, b_t)$$

• Then, if $x_t$ and $b_t$ are independent, we can write the probability density function of $y_t$ as

$$p(y_t) = \iint_{H_t} p(x_t)\, p(b_t)\, dx_t\, db_t$$

where $H_t$ is the contour given by $y_t = f(x_t, b_t)$.

• Let the statistical model of $B$ be given by $\Lambda_B$, which could be an HMM or a mixture Gaussian density.
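For intuition, consider the additive case y_t = x_t + b_t with Gaussian x_t and b_t. This is an assumption made here for illustration; the slide keeps the distortion function general. The integral then becomes a convolution, and the density of y_t is again Gaussian with summed means and variances. The sketch below checks this numerically for one feature dimension; the function names are hypothetical.

```python
import math

def gauss_pdf(x, mean, var):
    """Density of a univariate Gaussian N(x; mean, var)."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def density_of_sum(y, mean_x, var_x, mean_b, var_b, half_width=10.0, steps=4000):
    """p(y) = integral over x of p(x) * p_b(y - x), by the trapezoidal rule.

    Assumes y = x + b with independent Gaussian x and b. The grid spans
    half_width standard deviations of x on each side of its mean.
    """
    lo = mean_x - half_width * math.sqrt(var_x)
    hi = mean_x + half_width * math.sqrt(var_x)
    h = (hi - lo) / steps
    total = 0.0
    for k in range(steps + 1):
        x = lo + k * h
        weight = 0.5 if k in (0, steps) else 1.0  # trapezoid end points
        total += weight * gauss_pdf(x, mean_x, var_x) * gauss_pdf(y - x, mean_b, var_b)
    return total * h
```

Comparing `density_of_sum(y, mean_x, var_x, mean_b, var_b)` with `gauss_pdf(y, mean_x + mean_b, var_x + var_b)` at a few values of y should give matching numbers up to integration error.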

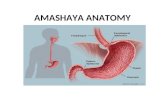

Dynamic variance compensation (2-1)

• Recognition is usually achieved by finding a word sequence $W$ that maximizes

$$W' = \arg\max_{W} p(Y \mid W, \Lambda_X)\, p(W)$$

• Speech is modeled using an HMM with state density modeled by a Gaussian mixture:

$$p(x_t \mid n) = \sum_{m=1}^{M} P(m)\, p(x_t \mid m) = \sum_{m=1}^{M} P(m)\, N(x_t; \mu_{n,m}, \Sigma_{n,m})$$

• In practice, the speech feature used for recognition, $\hat{x}_t$, may differ from the clean speech feature used for training, $x_t$, because of noise, reverberation or distortions induced by speech enhancement pre-processing. In this paper, we focus on the latter case.

• Model the mismatch, $b_t$, between the clean speech feature $x_t$ and the enhanced speech feature $\hat{x}_t$ as

$$x_t = \hat{x}_t + b_t$$

where $b_t$ is modeled by a Gaussian: $p(b_t) = N(b_t; 0, \Sigma_{\hat{x}_t})$

Dynamic variance compensation (2-2)

• The likelihood of a speech feature $x_t$ given a state $n$ can be obtained by marginalizing the joint probability over the mismatch $b_t$:

$$p(x_t \mid n) = \int p(x_t, b_t \mid n)\, db_t = \int p(x_t \mid b_t, n)\, p(b_t \mid n)\, db_t = \sum_{m=1}^{M} p(m)\, N(\hat{x}_t; \mu_{n,m}, \Sigma_{n,m} + \Sigma_{\hat{x}_t})$$

where we assume the mismatch to be state independent, i.e. $p(b_t \mid n) = p(b_t)$
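A minimal sketch of the compensated state likelihood for one feature dimension, assuming diagonal covariances so that each dimension can be handled on its own. The only change from an ordinary mixture likelihood is that the feature variance is added to every component variance. The function names are illustrative only.

```python
import math

def gauss_pdf(x, mean, var):
    """Density of a univariate Gaussian N(x; mean, var)."""
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def compensated_likelihood(x_hat, feat_var, weights, means, variances):
    """Mixture likelihood of the enhanced feature x_hat for one state.

    feat_var is the variance of the enhanced feature at this frame;
    feat_var = 0 gives back the uncompensated likelihood.
    """
    return sum(w * gauss_pdf(x_hat, mu, var + feat_var)
               for w, mu, var in zip(weights, means, variances))
```

For a frame whose enhanced feature lies far from every component mean, a larger `feat_var` flattens the Gaussians, so an unreliable frame is penalized less during decoding.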

Proposed method for variance calculation (3-1)

• In theory, feature variance should be computed as the squared difference between clean and pre-processed speech features. However, the clean speech features are unknown.

• Assume that the feature variance is proportional to the estimated observed noise, i.e. the squared difference between observed noisy and pre-processed speech features.
– Intuitively, this means that speech enhancement introduces more distortions when a great amount of noise is removed. One way to model feature variance is thus:

$$(\Sigma_{\hat{x}_t})_{i,j} = \delta_{i,j}\left(\alpha_i\,(u_{t,i} - \hat{x}_{t,i})^2 + \lambda_i\,\sigma_{n,m,i}^2\right)$$

where $\delta_{i,j}$ is the Kronecker symbol, $u_t$ is the observed noisy speech feature, $\hat{x}_t$ is the enhanced feature, and $\alpha_i$ and $\lambda_i$ are model parameters.
– The model contains a dynamic variance part, $\alpha_i\,(u_{t,i} - \hat{x}_{t,i})^2$, and a static bias, $\lambda_i\,\sigma_{n,m,i}^2$.

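Proposed method for variance calculation (3-2)

• The key point is to integrate the dynamic variance concept into $\Lambda_B$.

• For simplicity, we consider supervised adaptation, where the word sequence is known. The maximum likelihood estimation problem can be solved using the EM algorithm. We define an auxiliary function as

$$Q(\theta \mid \theta') = \sum_{S} \sum_{C} \iint p(X, B, S, C \mid \hat{X}, \theta')\, \log\big(p(X, B, S, C \mid \hat{X}, \theta)\big)\, dX\, dB$$

Only the mismatch term depends on $\theta$, so the part of the auxiliary function that matters for the maximization is

$$\sum_{t=1}^{T} \sum_{n=1}^{N} \sum_{m=1}^{M} \iint p(X, B, S, C \mid \hat{X}, \theta')\, \log\big(p(b_t \mid \theta)\big)\, dX\, dB$$

where $B$ is a mismatch feature sequence and $\theta'$ is the current estimate of the parameters $\theta$.

• However, there is no closed form solution for the joint estimation of $(\alpha, \lambda)$. Therefore, we consider the following three cases: $\alpha = 0$ (static variance adaptation, SVA), $\lambda = 0$ (dynamic variance adaptation, DVA), and a combination of the two (SDVA).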
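The variance model and its three special cases can be sketched as follows for diagonal covariances. The function name and the argument layout (one list entry per feature dimension) are assumptions made for this example.

```python
def feature_variance_diag(u, x_hat, model_var, alpha, lam):
    """Diagonal of the feature variance for one frame and one Gaussian.

    u         : observed noisy feature, one value per dimension
    x_hat     : enhanced feature, one value per dimension
    model_var : variances of the acoustic model Gaussian, per dimension
    alpha     : dynamic weights (all zeros gives SVA)
    lam       : static weights  (all zeros gives DVA)
    Off-diagonal terms are zero because of the Kronecker symbol.
    """
    return [a * (ui - xi) ** 2 + l * s2
            for ui, xi, s2, a, l in zip(u, x_hat, model_var, alpha, lam)]
```

Passing non-zero values for both `alpha` and `lam` corresponds to the combined SDVA case.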

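Proposed method for variance calculation (3-3)

• SVA

$$\lambda_i = \frac{\displaystyle\sum_{t=1}^{T} \sum_{n=1}^{N} \sum_{m=1}^{M} \gamma_t(n,m)\, \frac{(\hat{x}_{t,i} - \mu_{n,m,i})^2}{\sigma_{n,m,i}^2}}{\displaystyle\sum_{t=1}^{T} \sum_{n=1}^{N} \sum_{m=1}^{M} \gamma_t(n,m)} - 1$$

where $\gamma_t(n,m)$ is the occupancy probability of state $n$ and mixture component $m$ at frame $t$.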
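The SVA update can be read as an occupancy-weighted average of the squared, variance-normalized distance between the enhanced feature and the Gaussian means, minus one. A minimal sketch for one feature dimension follows. It assumes that the occupancy probabilities have already been computed, and it flattens the state and mixture indices into a single Gaussian index k. The names are hypothetical.

```python
def sva_update(x_hats, gammas, means, variances):
    """Static variance weight for one feature dimension.

    x_hats      : enhanced feature values, one per frame
    gammas[t][k]: occupancy probability of Gaussian k at frame t
    means[k], variances[k]: parameters of Gaussian k in this dimension
    """
    num = 0.0
    den = 0.0
    for x_hat, gamma_t in zip(x_hats, gammas):
        for g, mu, var in zip(gamma_t, means, variances):
            num += g * (x_hat - mu) ** 2 / var  # normalized squared distance
            den += g
    return num / den - 1.0
```

With a single unit-variance Gaussian at zero and enhanced features 2 and -2, the function returns 3, so the compensated variance (1 + 3) times the model variance equals the observed second moment of 4. The estimate can be negative when the enhanced features are less spread than the model predicts.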

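• DVA

$$\alpha_i = \frac{\displaystyle\sum_{t=1}^{T} \sum_{n=1}^{N} \sum_{m=1}^{M} \gamma_t(n,m)\, \frac{E\{b_{t,i}^2 \mid \hat{x}_t, n, m, \theta'\}}{(u_{t,i} - \hat{x}_{t,i})^2}}{\displaystyle\sum_{t=1}^{T} \sum_{n=1}^{N} \sum_{m=1}^{M} \gamma_t(n,m)}$$

where

$$E\{b_{t,i} \mid \hat{x}_t, n, m, \theta'\} = \frac{\sigma_{\hat{x}_{t,i}}^2}{\sigma_{\hat{x}_{t,i}}^2 + \sigma_{n,m,i}^2}\,(\mu_{n,m,i} - \hat{x}_{t,i})$$

$$E\{b_{t,i}^2 \mid \hat{x}_t, n, m, \theta'\} = \frac{\sigma_{\hat{x}_{t,i}}^2\, \sigma_{n,m,i}^2}{\sigma_{\hat{x}_{t,i}}^2 + \sigma_{n,m,i}^2} + E\{b_{t,i} \mid \hat{x}_t, n, m, \theta'\}^2$$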
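One EM iteration of the DVA update can be sketched as follows for a single feature dimension. The sketch assumes the mismatch model in which the clean feature equals the enhanced feature plus a zero-mean Gaussian mismatch, takes the current feature variance to be the old dynamic weight times the squared difference between noisy and enhanced features, and again flattens state and mixture indices into one Gaussian index k. Function names are hypothetical, and frames where the noisy and enhanced features coincide would need special handling that is not covered here.

```python
def posterior_moments(x_hat, mean, var, feat_var):
    """E{b | x_hat} and E{b^2 | x_hat} for x = x_hat + b,
    with x ~ N(mean, var) and b ~ N(0, feat_var)."""
    gain = feat_var / (feat_var + var)
    e_b = gain * (mean - x_hat)
    e_b2 = feat_var * var / (feat_var + var) + e_b ** 2
    return e_b, e_b2

def dva_update(us, x_hats, gammas, means, variances, alpha_old):
    """One EM update of the dynamic weight for one feature dimension.

    us, x_hats  : noisy and enhanced feature values, one per frame
    gammas[t][k]: occupancy probability of Gaussian k at frame t
    alpha_old   : current estimate of the dynamic weight
    """
    num = 0.0
    den = 0.0
    for u, x_hat, gamma_t in zip(us, x_hats, gammas):
        noise = (u - x_hat) ** 2       # estimated observed noise (must be > 0)
        feat_var = alpha_old * noise   # feature variance under the old weight
        for g, mu, var in zip(gamma_t, means, variances):
            _, e_b2 = posterior_moments(x_hat, mu, var, feat_var)
            num += g * e_b2 / noise
            den += g
    return num / den
```

Because the expectation depends on the old weight, the update is applied repeatedly, unlike the SVA formula, which is closed-form once the occupancy probabilities are fixed.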
