Original SOINN


An incremental network for on-line unsupervised classification and topology learning

Shen Furao, Osamu Hasegawa

Neural Networks, Vol. 19, No. 1, pp. 90-106 (2006)

Background: Objective of unsupervised learning (1)

Clustering: Construct decision boundaries based on unlabeled data.

– Single-link, complete-link, CURE

• High computational load

• Large memory requirement

• Unsuitable for large data sets or online data

– K-means:

• Dependence on initial starting conditions

• Tendency to result in local minima

• The number of clusters k must be determined in advance

• Suited only to data sets consisting of isotropic clusters
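The dependence of k-means on its starting conditions can be seen on a toy example. The sketch below is a plain Lloyd-style k-means written only for illustration (the four points and both initializations are made up, not taken from the slides): one initialization converges to the natural left/right split, the other gets stuck in a poorer local minimum.

```python
def dist2(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def mean(points):
    """Component-wise mean of a non-empty list of points."""
    n = len(points)
    return tuple(sum(coords) / n for coords in zip(*points))


def kmeans(points, centers, iters=20):
    """Lloyd-style k-means from the given initial centers.

    Returns the final centers and the sum of squared errors (SSE).
    """
    centers = list(centers)
    for _ in range(iters):
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: dist2(p, centers[i]))
            clusters[nearest].append(p)
        # Keep the old center if a cluster happens to be empty.
        centers = [mean(c) if c else old for c, old in zip(clusters, centers)]
    sse = sum(min(dist2(p, c) for c in centers) for p in points)
    return centers, sse


# Hypothetical data: two tight pairs of points, far apart horizontally.
points = [(0, 0), (0, 1), (4, 0), (4, 1)]

_, sse_good = kmeans(points, [(0, 0.5), (4, 0.5)])  # left/right split
_, sse_bad = kmeans(points, [(2, 0), (2, 1)])       # stuck in a top/bottom split
print(sse_good, sse_bad)
```

With k fixed at 2 in both runs, only the initial centers differ, yet the second run never leaves its top/bottom partition.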

Background: Objective of unsupervised learning (2)

Topology learning: Given some high-dimensional data distribution, find a topological structure that closely reflects the topology of the data distribution

– SOM: self-organizing map

• predetermined structure and size

• posterior choice of class labels for the prototypes

– CHL+NG: competitive Hebbian learning + neural gas

• a priori decision about the network size

• ranking of all nodes in each adaptation step

• use of adaptation parameter

– GNG: growing neural gas

• permanent increase in the number of nodes

• permanent drift of centers to capture input probability density
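For readers unfamiliar with competitive Hebbian learning, named in the CHL+NG item above, the core rule is: for each input, connect the nearest and second-nearest nodes with an edge. The sketch below shows only that edge-building rule on fixed node positions. The node coordinates and samples are hypothetical, and the neural gas adaptation of node positions is deliberately left out.

```python
def dist2(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def competitive_hebbian_edges(nodes, samples):
    """Return the set of edges created by competitive Hebbian learning.

    For every sample, the two closest nodes (winner and second winner)
    are linked. Edges are stored as (smaller_index, larger_index).
    """
    edges = set()
    for x in samples:
        ranked = sorted(range(len(nodes)), key=lambda i: dist2(x, nodes[i]))
        first, second = ranked[0], ranked[1]
        edges.add((min(first, second), max(first, second)))
    return edges


# Hypothetical node positions forming two separated groups.
nodes = [(0, 0), (1, 0), (5, 0), (6, 0)]
samples = [(0.4, 0), (5.6, 0)]
edges = competitive_hebbian_edges(nodes, samples)
print(sorted(edges))
```

Because no sample falls between the two groups, no edge bridges them, which is how the learned graph comes to reflect the topology of the input distribution.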

Background: Online or life-long learning

Fundamental issue (Stability-Plasticity Dilemma): How can a learning system adapt to new information without corrupting or forgetting previously learned information?

– GNG-U: deletes nodes which are located in regions of a low input probability density

• learned old prototype patterns will be destroyed

– Hybrid network: Fuzzy ARTMAP + PNN

– Life-long learning with improved GNG: learns the number of nodes needed for the current task

• only for supervised life-long learning

Objectives of proposed algorithm

• To process on-line non-stationary data.

• To do unsupervised learning without any a priori conditions such as:

– a suitable number of nodes

– a good initial codebook

– how many classes there are

• Report a suitable number of classes

• Represent the topological structure of the input probability density.

• Separate classes with some low-density overlaps

• Detect the main structure of clusters polluted by noise.

Proposed algorithm

Figure: input pattern → first-layer growing network → first output → second-layer growing network → second output. Operations shown: insert node, delete node, classify.

Algorithms

• Insert new nodes

– Criterion: nodes with high error indicate where to insert a new node

– An error radius is used to judge whether the insertion is successful

• Delete nodes

– Criterion: remove nodes in low probability density regions

– Realization: delete nodes with no or only one direct topological neighbor

• Classify

– Criterion: all nodes linked with edges will be one cluster
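The deletion and classification rules above can be sketched on a plain graph. This is a minimal illustration, not the authors' implementation: nodes are integer ids, edges are pairs of ids, the function names are hypothetical, and deletion is applied in a single pass (when and how often deletion runs is not stated in the slides).

```python
from collections import defaultdict


def remove_sparse_nodes(nodes, edges):
    """Delete nodes with zero or one direct neighbor, and their edges."""
    degree = defaultdict(int)
    for a, b in edges:
        degree[a] += 1
        degree[b] += 1
    kept = {n for n in nodes if degree[n] >= 2}
    kept_edges = {(a, b) for a, b in edges if a in kept and b in kept}
    return kept, kept_edges


def label_clusters(nodes, edges):
    """Give every group of edge-connected nodes the same cluster label."""
    adjacency = {n: set() for n in nodes}
    for a, b in edges:
        adjacency[a].add(b)
        adjacency[b].add(a)
    labels = {}
    next_label = 0
    for start in sorted(nodes):
        if start in labels:
            continue
        labels[start] = next_label
        stack = [start]
        while stack:  # depth-first walk over one connected component
            current = stack.pop()
            for neighbor in adjacency[current]:
                if neighbor not in labels:
                    labels[neighbor] = next_label
                    stack.append(neighbor)
        next_label += 1
    return labels


# Hypothetical graph: two triangles, one dangling node (6), one isolated node (7).
nodes = set(range(8))
edges = {(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (5, 6)}

kept, kept_edges = remove_sparse_nodes(nodes, edges)
labels = label_clusters(kept, kept_edges)
print(sorted(kept), labels)
```

Nodes 6 and 7 are treated as lying in low-density regions and removed, and the two remaining triangles are reported as two clusters.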

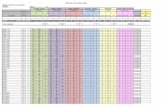

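Experiment

• Stationary environment: patterns are randomly chosen from all areas A, B, C, D and E

• Non-stationary environment:

| Area / Environment | I | II | III | IV | V | VI | VII |
|---|---|---|---|---|---|---|---|
| A | 1 | 0 | 1 | 0 | 0 | 0 | 0 |
| B | 0 | 1 | 0 | 1 | 0 | 0 | 0 |
| C | 0 | 0 | 1 | 0 | 0 | 1 | 0 |
| D | 0 | 0 | 0 | 1 | 1 | 0 | 0 |
| E1 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |
| E2 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |
| E3 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

Figure: Original Data Set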
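One way to read the environment table above is as a sampling schedule: in each environment, patterns are drawn only from the areas marked 1. That reading is an assumption, since the slides do not define the entries. The sketch below encodes the table as given and looks up the active areas per environment; the names `SCHEDULE` and `active_areas` are hypothetical.

```python
# Rows copied from the table; columns are environments I to VII.
# Assumption: 1 means the area is sampled in that environment, 0 means it is not.
SCHEDULE = {
    "A":  [1, 0, 1, 0, 0, 0, 0],
    "B":  [0, 1, 0, 1, 0, 0, 0],
    "C":  [0, 0, 1, 0, 0, 1, 0],
    "D":  [0, 0, 0, 1, 1, 0, 0],
    "E1": [0, 0, 0, 0, 1, 0, 0],
    "E2": [0, 0, 0, 0, 0, 1, 0],
    "E3": [0, 0, 0, 0, 0, 0, 1],
}


def active_areas(environment):
    """Return the areas marked 1 for a 1-based environment number (1 to 7)."""
    return [area for area, row in SCHEDULE.items() if row[environment - 1] == 1]


for env in range(1, 8):
    print(env, active_areas(env))
```

Under this reading, each area is presented in only one or two of the seven environments, so a learner has to retain earlier areas after their patterns stop arriving.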

Experiment: Stationary environment

Figures: original data set; traditional method (GNG); proposed method, first layer; proposed method, final results.

Experiment: Non-stationary environment

Figures: GNG-U result; GNG result; proposed method, first layer; proposed method, final output; number of growing nodes during online learning (Environment 1 to Environment 7).

Experiment: Real World Data

Facial Image (ATT_FACE)

(a) 10 classes

(b) 10 samples of class 1

Experiment: Vector

Vector of (a)

Vector of (b)

Experiment: Face Recognition results

10 clusters

Stationary: correct recognition ratio 90%

Non-stationary: correct recognition ratio 86%

Experiment: Vector Quantization

Original Lena (512*512*8)

Stationary environment: decoded image, 130 nodes, 0.45 bpp, PSNR = 30.79 dB

Experiment: Compare with GNG

| Method | Number of nodes | bpp | PSNR (dB) |
|---|---|---|---|
| First-layer | 130 | 0.45 | 30.79 |
| GNG | 130 | 0.45 | 29.98 |
| Second-layer | 52 | 0.34 | 29.29 |
| GNG | 52 | 0.34 | 28.61 |

Stationary Environment

Experiment: Non-stationary Environment

First-layer: 499 nodes, 0.56 bpp, PSNR = 32.91 dB

Second-layer: 64 nodes, 0.375 bpp, PSNR = 29.66 dB
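The two quality measures quoted in the vector quantization results can be sketched as follows. PSNR is the standard definition for 8-bit images. The bit-rate formula is an assumption: it supposes that each 4×4 pixel block is coded as one fixed-length node index, which the slides do not state. Under that assumption, 64 nodes give 0.375 bpp and 499 nodes give about 0.56 bpp, matching the non-stationary figures, while the stationary figures (0.45 and 0.34) are only approximately reproduced (about 0.44 and 0.36).

```python
import math


def bits_per_pixel(num_nodes, block_pixels=16):
    """Bit rate if each block is coded as one fixed-length codebook index.

    block_pixels=16 assumes 4x4 blocks (an assumption, not stated in the slides).
    """
    return math.log2(num_nodes) / block_pixels


def psnr(original, decoded, peak=255):
    """Peak signal-to-noise ratio in dB between two equal-length pixel sequences."""
    mse = sum((a - b) ** 2 for a, b in zip(original, decoded)) / len(original)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(peak ** 2 / mse)


print(bits_per_pixel(64))             # second layer, non-stationary
print(round(bits_per_pixel(499), 2))  # first layer, non-stationary
```

Fewer nodes mean a shorter index per block and therefore a lower bit rate, at the cost of a lower PSNR, which is the trade-off visible between the first-layer and second-layer results.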

Conclusion

• An autonomous learning system for unsupervised classification and topology representation tasks

• Grows incrementally and learns the number of nodes needed to solve the current task

• Accommodates input patterns of on-line non-stationary data distributions

• Eliminates noise in the input data
