On Optimum Strategies for Minimizing the Exponential...

Transcript of On Optimum Strategies for Minimizing the Exponential...

On Optimum Strategies for Minimizing the Exponential Moments

of a Loss Function

Neri Merhav

Department of Electrical EngineeringTechnion – Israel Institute of Technology

Technion City, Haifa 32000, [email protected]

Abstract

We consider a general problem of finding a strategy that minimizes the exponential momentof a given cost function, with an emphasis on its relation to the more common criterion ofminimization the expectation of the first moment of the same cost function. In particular, thebasic observation that we make and use is about simple sufficient conditions for a strategy to beoptimum in the exponential moment sense. This observation may be useful in various situations,and application examples are given. We also examine the asymptotic regime and investigateuniversal asymptotically optimum strategies in light of the aforementioned sufficient conditions,as well as phenomena of irregularities, or phase transitions, in the behavior of the asymptoticperformance, which can be viewed and understood from a statistical–mechanical perspective.Finally, we propose a new route for deriving lower bounds on exponential moments of certaincost functions (like the square error in estimation problems) on the basis of well known lowerbounds on their expectations.

Index Terms: loss function, exponential moment, large deviations, phase transitions, universalschemes.

1 Introduction

Many problems in information theory, communications, statistical signal processing, and related

disciplines can be formalized as being about the quest for a strategy s that minimizes (or maximizes)

the expectation of a certain cost function, ℓ(X, s), where X is a random variable (or a random

vector). Just a few examples of this generic paradigm are the following: (i) Lossless and lossy data

compression, where X symbolizes the data to be compressed, s is the data compression scheme,

and ℓ(X, s) is the length of the compressed binary representation, or the distortion (in the lossy

1

case) or a linear combination of both (see, e.g., [8, Chapters 5 and 10]). (ii) Gambling and portfolio

theory [8, Chapters 6 and 16], where cost function is logarithm of the wealth relative. (iii) Lossy

joint source–channel coding, where X collectively symbolizes the randomness of source and the

channel, s is the encoding–decoding scheme and ℓ(X, s) is the distortion in the reconstruction (see,

e.g., [53],[54]). (iv) Bayesian estimation of a random variable based on measurements, where X

designates jointly the desired random variable and the measurements, s is the estimation function

and ℓ(X, s) is the error function, for the example, the squared error. Non–Bayesian estimation

problems can be considered similarly (see, e.g., [48]). (v) Prediction, sequential decision problems

(see, for example, [35]) and stochastic control problems [6], such as the linear quadratic Gaussian

(LQG) problem, as well as general Markov decision processes, are also formalized in terms of

selecting strategies in order to minimize the expectation of a certain loss function.

While the criterion of minimizing the expected value of ℓ(X, s) has been predominantly the

most common one, the exponential moments of ℓ(X, s), namely, E exp{αℓ(X, s)} (α > 0), have

received much less attention than they probably deserve in this context, at least in information

theory and signal processing. In the realm of the theory of optimization and stochastic control, on

the other hand, the problem of minimizing exponential moments has received much more attention,

and it is well–known as the risk–sensitive or risk–averse cost function (see, e.g., [11], [16], [20], [24],

[50], [51] and many references therein), where one of the main motivations for using the exponential

function of ℓ(X, s) is to impose a penalty, or a risk, that is extremely sensitive to large values of

ℓ(X, s), hence the qualifier “risk–sensitive” in the name of this criterion. Another motivation is

associated with robustness properties of the resulting risk–sensitive optimum controllers [4], [18].

There are, in fact, a few additional motivations for examining strategies that minimize exponential

moments, which are also relevant to many problem areas of information theory, communications

and statistical signal processing. First and foremost, the exponential moment, E exp{αℓ(X, s)}, as

a function of α, is obviously the moment–generating function of ℓ(X, s), and as such, it provides

the full information about the entire distribution of this random variable, not just its first order

moment. Thus, in particular, if we are fortunate enough to find a strategy that uniformly minimizes

E exp{αℓ(X, s)} for all α ≥ 0 (and there are examples that this may be the case), then this is much

stronger than just minimizing the first moment. Secondly, exponential moments are intimately

related to large–deviations rate functions, and so, the minimization of exponential moments may

2

give us an edge on minimizing probabilities of (undesired) large deviations events of the form

Pr{ℓ(X, s) ≥ L0} (for some threshold L0), or more precisely, on maximizing the exponential rate of

decay of these probabilities. There are several works along this line, especially in contexts related to

buffer overflow in data compression [19], [25], [26], [30], [37], [47], [52], exponential moments related

to guessing [1], [2], [3], [29], [33], [38], large deviations properties of parameter/signal estimators,

[7], [27], [40], [45], [46], [55], and more.

It is natural to ask, in view of the foregoing discussion, how we can harness the existing body of

knowledge concerning optimization of strategies for minimizing the first moment of ℓ(X, s), which is

quite mature in many applications, in our quest for optimum strategies that minimize exponential

moments. Our basic observation, in this paper, provides a simple relationship between the two

criteria. In particular, in Section 2, we furnish sufficient conditions that the optimum strategy in

the exponential moment sense can be found in terms of the optimum strategy in the first moment

sense, for a possibly different probability distribution, which we characterize.

The main message of this expository paper is that the combination of these sufficient conditions

sets the stage for a useful tool that can be used to solve concrete problems of minimizing exponential

moments, and it may give a fresh look and a new insight into these problems. In some applications,

these sufficient conditions for optimality in the exponential moment sense, yield an equation in s,

whose solution is the desired optimum strategy. In other applications, however, this may not be

quite the case directly, yet the set of optimality conditions may still be useful: More often than

not, in a given instance of the problem under discussion, one may have a natural intuitive guess

concerning the optimum strategy, and then the optimality conditions can be used to prove that

this is the case.

At the heart of this paper stands a section of application examples (Section 3). One example for

the use of the proposed tool, that will be demonstrated in detail (and in more generality) later on,

is the following: Given n independent and identically distributed (i.i.d.) Gaussian measurements,

X1, . . . ,Xn, with mean θ, the sample mean, s(X1, . . . ,Xn) = 1n

∑ni=1 Xi, is the optimum unbiased

estimator of θ, not merely in the mean squared error sense (as is well known), but also in the sense

of minimizing all exponential moments of the squared error, i.e., E exp{α[s(X1, . . . ,Xn)− θ]2} for

all α ≥ 0 for which this expectation is finite. Another example belongs to the realm of universal

3

lossless data compression and the famous minimum description length principle (MDL), due to

Rissanen [44], who characterized the minimum achievable “price of universality” as a redundancy

term of about 12 log n (without normalization) for each unknown parameter of the information

source to be compressed. Here we show how this result (both the converse theorem and the direct

theorem) extends from the expected code–length criterion to all exponential moments of the code–

length. Yet another example is the memoryless Gaussian joint source–channel coding problem with

the quadratic distortion measure and without bandwidth expansion: As is well known, minimum

expected distortion can be achieved by optimum linear scalar encoders and decoders. When it

comes to exponential moments of the distortion, the behavior is somewhat more complicated. As

long as one forces a linear scalar encoder, the optimum decoder is also linear. However, once the

constraint of linear encoding is relaxed, both the optimum encoder and the optimum decoder are

no longer linear (see also [9] for a related work). Our above–mentioned basic principle sheds some

insight on this problem too.

We next devote some attention to the asymptotic regime (Section 4). Consider the case where

X is a random vector of dimension n, X = (X1, . . . ,Xn), governed by a product–form probabil-

ity distribution, and ℓ(X, s) grows linearly for a given empirical distribution of X, for example,

when ℓ(X, s) is additive, i.e., ℓ(X, s) =∑n

i=1 l(Xi, s). In this case, the exponential moments of

ℓ(X, s) typically behave (at least asymptotically) like exponential functions of n. If we can then

select a strategy s that somehow “adapts”1 to the empirical distribution of (X1, . . . ,Xn), then

such strategies may be universally optimum (or asymptotically optimum in the sense of achieving

the minimum exponential rate of the exponential moment) in that they depend on neither the

underlying probability distribution, nor on the parameter α. This is demonstrated in several ex-

amples, one of which is the above–mentioned extension of Rissanen’s famous results in universal

data compression [44].

An interesting byproduct of the use of the exponential moment criterion in the asymptotic

regime is the possible existence of phase transitions (Section 5): In turns out that the asymptotic

exponential rate of E exp{αℓ(X1, . . . ,Xn, s)} as a function of n, may not be a smooth function

of α and/or the parameters of the underlying probability distribution even when the model under

discussion seems rather simple and ‘innocent.’ This is best understood from a statistical–mechanical

1The precise meaning of this will be clarified in the sequel.

4

perspective, because in some cases, the calculation of the exponential moment is clearly analogous

to that of the partition function of a certain physical system of interacting particles, which is

known to exhibit phase transitions. It is demonstrated that at least in certain cases, these phase

transitions are not merely an artifact of a badly chosen strategy, but they appear even when the

optimum strategy is used, and hence these phase transitions are inherent in the model.

We end this paper by touching upon yet another aspect of the exponential moment criterion,

which we do not investigate very thoroughly here, but we believe it is interesting and therefore

certainly deserves a further study in the future (Section 6): Even in the ordinary setting, of seeking

strategies that minimize E{ℓ(X, s)}, optimum strategies may not always be known, and then lower

bounds are of considerable importance as a reference performance figure. This is a–fortiori the case

when exponential moments are considered. One way to obtain non–trivial bounds on exponential

moments is via lower bounds on the expectation of ℓ(X, s), using the techniques developed in this

paper. We demonstrate this idea in the context of a lower bound on the expected exponentiated

squared error of an unbiased parameter estimator, on the basis of the Cramer–Rao bound (CRB),

but it should be understood that, more generally, the same idea can be applied on the basis of

other well–known bounds of the mean-square error (Bayesian and non–Bayesian) in parameter

estimation, and in signal estimation, as well as in other problem areas.

2 The Basic Observation

Let X be a random variable taking on values in a certain alphabet X , and drawn according to a

given probability distribution P . The alphabet X may either be finite, countable, or a continuous

set. In the latter case, P (as well as other probability functions on X ) denotes a density with

respect to a certain measure µ on X , say the counting measure in the discrete case, or the Lebesgue

measure in the continuous case. Let the variable s designate a strategy, or an action, chosen from

some space S of allowed strategies. The term “strategy”, in our context, means a mathematical

object that, depending on the application, may be either a scalar variable, a vector, an infinite

sequence, a function (of X), or a function of another random variable/vector that is statistically

dependent on X. Associated with each x ∈ X and s ∈ S, is a loss ℓ(x, s). The function ℓ(x, s)

is called the loss function, or the cost function. The operator E{·} will be understood as the

5

expectation operator with respect to (w.r.t.) the underlying distribution P , and whenever we

refer to the expectation w.r.t. another probability distribution, say, Q, we use the notation EQ{·}.

Nonetheless, occasionally, when there is more than one probability distribution playing a role at

the same time and we wish to emphasize that the expectation is taken w.r.t. P , then to avoid

confusion, we may denote this expectation by EP {·}.

For a given α > 0, consider the problem of minimizing E exp{αℓ(X, s)} across s ∈ S. The

following observation relates the optimum s for this problem to the optimum s for the problem of

minimizing EQ{ℓ(X, s)} w.r.t. another probability distribution Q.

Observation 1 Assume that there exists a strategy s ∈ S for which

Z(s)∆= EP exp{αℓ(X, s)} < ∞. (1)

A strategy s ∈ S minimizes EP exp{αℓ(X, s)} if there exists a probability distribution Q on X that

satisfies the following two conditions at the same time:

1. The strategy s minimizes EQ{ℓ(X, s)} over S.

2. The probability distribution Q is given by

Q(x) =P (x)eαℓ(x,s)

Z(s). (2)

An equivalent formulation of Observation 1 is the following: denoting by sQ a strategy that

minimizes EQ{ℓ(X, s)} over S, then the sQ minimizes EP exp{αℓ(X, s)} over S if

Q(x) ∝ P (x)eαℓ(x,sQ), (3)

where by A(x) ∝ B(x), we mean that A(x)/B(x) is a constant, independent of x.

Proof. Let s ∈ S be arbitrary and let (s∗, Q∗) satisfy conditions 1 and 2 of Observation 1. Consider

the following chain of inequalities:

EP exp{αℓ(X, s)} = EQ∗ exp

{

αℓ(X, s) + lnP (X)

Q∗(X)

}

≥ exp {αEQ∗ℓ(X, s) − D(Q∗‖P )}

≥ exp {αEQ∗ℓ(X, s∗) − D(Q∗‖P )}

= Z(s∗) = EP exp{αℓ(X, s∗)}, (4)

6

where the first equality results from a change of measure (multiplying and dividing eαℓ(X,s) by

Q∗(X)), the second line is by Jensen’s inequality and the convexity of the exponential function

(with D(Q‖P )∆= EQ ln[Q(X)/P (X)] being the relative entropy between Q and P ), the third

line is by condition 1, and the next equality results from condition 2: On substituting Q∗(x) =

P (x)eαℓ(x,s∗)/Z(s∗) into D(Q∗‖P ), one readily obtains D(Q∗‖P ) = αEQ∗ℓ(X, s∗) − ln Z(s∗). This

completes the proof of Observation 1. �

Discussion: Several comments are in order at this point.

Partially related results have appeared in the literature of optimization and control (cf. [20,

Theorem 4.9]). However, in [20], a much more complicated and more involved paradigm (of con-

trolling finite–state Markov processes) has been considered, and the results therein do not seem to

be completely equivalent to Observation 1. Moreover, since the setting here is much simpler, then

so is the proof, which is not only short, but also almost free of regularity conditions (as opposed

to [20] and [11]). The only regularity condition needed here is that Z(s) < ∞ for some s ∈ S.

Obviously, without this condition, the problem under consideration is meaningless and empty in

the first place.

Note that for a given s, Jensen’s inequality

EQ exp

{

αℓ(X, s) + lnP (X)

Q(X)

}

≥ exp {αEQℓ(X, s) − D(Q‖P )} (5)

of (4) (but with Q∗ being replaced by a generic measure Q), becomes an equality for Q(x) =

P (x)eαℓ(x,s)/Z(s), since for this choice of Q, the random variable that appears in the exponent,

αℓ(X, s) + ln P (X)Q(X) , becomes degenerate (constant with probability one). Since the original ex-

pression is independent of Q, such an equality in Jensen’s inequality means that the expression

αEQℓ(X, s) − D(Q‖P ) is maximized by this choice of Q(x) = P (x)eα(x,s)/Z(s), a fact which can

also be seen from a direct maximization of this expression using standard methods. This leads

directly to the well–known identity (see, e.g., [11, Proposition 2.3]):

EP exp{αℓ(X, s)} = exp{maxQ

[αEQℓ(X, s) − D(Q‖P )]}, (6)

which is also intimately related to the well–known Laplace principle [17] in large deviations theory,

or more generally, to Varadhan’s integral lemma [12, Section 4.3].

7

In view of eq. (6), another look at the problem of minimizing EP exp{αℓ(X, s)} reveals that it

is equivalent2 to the minimax problem

mins

maxQ

F (s,Q) (7)

where

F (s,Q)∆= αEQℓ(X, s) − D(Q‖P ). (8)

Now, suppose that the set S and the loss function ℓ(x, s) are such that:

mins∈S

maxQ

F (s,Q) = maxQ

mins∈S

F (s,Q). (9)

This equality between the minimax and the maximin means that there is a saddle point (s∗, Q∗),

where s∗ is a solution of the minimax problem on the left–hand side and Q∗ is a solution to

the maximin problem on the right–hand side. As mentioned above, the maximizing Q in the

inner maximization on the left–hand side is Q∗(x) = P (x)eαℓ(x,s∗)/Z(s∗), which is condition 2 of

Observation 1. By the same token, the inner minimization over s on the right–hand side obviously

minimizes EQ∗ℓ(X, s), which is condition 1. This means then that the two conditions of Observation

1 are actually equivalent to the conditions for the existence of a saddle point of F (s,Q). This can

be considered as an alternative proof of Observation 1. The original proof above, however, is much

simpler: Not only is it shorter, but furthermore, it requires neither acquaintance with eq. (6) nor

with the theory of minimax/maximin optimization and saddle points.

When does eq. (9) hold? In general, the well–known sufficient conditions for

minu∈U

maxv∈V

f(u, v) = maxv∈V

minu∈U

f(u, v) (10)

are that U and V are convex sets (with u being allowed to take on values freely in U , independently

of v and vice versa), and that f is convex in u and concave in v. In our case, since the function

F (s,Q) is always concave in Q, this sufficient condition would automatically hold whenever ℓ(x, s)

is convex in s (for every fixed x), provided that S is a space in which convex combinations can

be well defined, and that S is a convex set. There are, of course, milder sufficient conditions that

allow commutation of minimization and maximization (see, e.g,, [43, Chapters 36 and 37]).

2See also [11].

8

A similar, but somewhat different, criterion pertaining to exponential moments, which is rea-

sonable to the same extent, is the dual problem of maxs∈S E exp{−αℓ(X, s)} (again, with α > 0),

which is called, in the jargon of stochastic control, a risk–seeking cost criterion, as opposed to the

risk-sensitive criterion discussed so far. If ℓ(x, s) is non-negative for all x and s, this has the ad-

vantage that the exponential moment is finite for all α > 0, as opposed to E exp{αℓ(X, s)} which,

in many cases, is finite only for a limited range of α. For the same considerations as before, here

we have:

maxs

E exp{−αℓ(X, s)} = maxs

exp{maxQ

[−αEQℓ(X, s) − D(Q‖P )]}

= exp{−mins

minQ

[αEQℓ(X, s) + D(Q‖P )]}, (11)

and so the optimality conditions relating s and Q are similar to those of Observation 1 (with α

replaced by −α), except that now we have a double minimization problem rather than a minimax

problem. However, it should be noted that here the conditions of Observation 1 are only necessary

conditions, as for the above equalities to hold, the pair (s,Q) should globally minimize the function

F (s,Q), unlike the earlier case, where only a saddle point was sought.3 On the other hand,

another advantage of this criterion, is that even if one cannot solve explicitly the equation for the

optimum s, then the double minimization naturally suggests an iterative algorithm: starting from an

initial guess s0 ∈ S, one computes Q0(x) ∝ P (x) exp{−αℓ(x, s0)} (which minimizes [αEQℓ(X, s) +

D(Q‖P )] over Q), then one finds s1 = arg mins∈S EQ0{ℓ(X, s)}, and so on. It is obvious that

E exp{−αℓ(X, si)}, i = 0, 1, 2, . . ., increases (and hence improves) from iteration to iteration. This

is different from the minimax situation we encountered earlier, where successive improvements are

not guaranteed.

3 Applications

Observation 1 tells us that if we are fortunate enough to find a strategy s ∈ S and a probability

distribution Q, which are ‘matched’ to one another (in the sense defined by the above conditions),

then we have solved the problem of minimizing the exponential moment. Sometimes it is fairly easy

to find such a pair (s,Q) by solving an equation. In other cases, there might be a natural guess

3In other words, it is not enough now that s and Q are in ‘equilibrium’ in the sense that s is a minimizer for agiven Q and vice versa.

9

for the optimum s, which can be proved optimum by checking the conditions. In yet some other

cases, Observation 1 suggests an iterative algorithm for solving the problem. In this section, we

will see a few application examples of all these types. Some of these examples could have been also

solved directly (and/or the resulting conclusions may even already be known from the literature),

without using Observation 1, but for others, this does not seem to be a trivial task. In any case,

Observation 1 may suggest a fresh look and some new insight on the problem.

3.1 Lossless Data Compression

We begin with a very simple example. Let X be a random variable taking on values in a finite

alphabet X , let s be a probability distribution on X , i.e., a vector {s(x), x ∈ X} with∑

x∈X s(x) = 1

and s(x) ≥ 0 for all x ∈ X , and let ℓ(x, s)∆= − ln s(x). This example is clearly motivated by lossless

data compression, as − ln s(x) is the length function (in nats) pertaining to a uniquely decodable

code that is induced by a distribution s, ignoring integer length constraints. In this problem, one

readily observes that the optimum s for minimizing EQ{− ln s(X)} is sQ = Q. Thus, by eq. (3),

we seek a distribution Q such that

Q(x) ∝ P (x) exp{−α ln Q(x)} =P (x)

[Q(x)]α(12)

which means [Q(x)]1+α ∝ P (x), or equivalently, Q(x) ∝ [P (x)]1/(1+α). More precisely,

sQ(x) = Q(x) =[P (x)]1/(1+α)

∑

x′∈X [P (x′)]1/(1+α). (13)

Note that here ℓ(x, s) is convex in s and so, the minimax condition holds. While this result is

well known and it could have been obtained even without using Observation 1, our purpose in this

example was to show how Observation 1 gives the desired solution even more easily than with the

direct method, by solving a very simple equation.

3.2 Quantization

Consider the problem of quantizing a real–valued random variable X, drawn by P , into M repro-

duction levels, x0, x1, . . . , xM−1, and let the distortion metric d(x, x) be quadratic, i.e., d(x, x) =

(x − x)2. The ordinary problem of optimum quantizer design is about the choice of a function

s : X → {x0, x1, . . . , xM−1}, that minimizes EP [X − s(X)]2, i.e., in this case, ℓ(x, s) = [x − s(x)]2.

10

As is well known [21], [23], [28], in general, this problem lacks a closed–form solution, and the

customary approach is to apply an iterative algorithm for quantizer design, which alternates between

two sets of necessary conditions for optimality: the nearest–neighbor condition, according to which

s(x) should be the reproduction level that is closest to x (i,e,, the one that minimizes (x − xi)2

over i), and the centroid condition, which means that xi should be the conditional expectation of

X given that X falls in the interval of values of x that are to be mapped to the i–th quantization

level.

Consider now the criterion of maximizing the negative exponential moment EP e−α[X−s(X)]2 .

Here the centroid condition is no longer relevant. However, in light of the discussion in Section 2,

one can use the fact that this problem is equivalent to the double minimization of

G(s,Q)∆= αEQ[X − s(X)]2 + D(Q‖P ) (14)

over s and Q. This suggests an iterative algorithm that consists of two nested loops: The outer loop

alternates between minimizing s for a given Q, on the one hand, and minimizing Q for a given s, on

the other hand. As explained in Section 2, these two minimizations are, in principle, nothing but

the conditions of Observation 1, just with α being replaced by −α. The inner loop implements the

former ingredient of minimizing EQ[X − s(X)]2 over s for a given Q, which is again implementable

by the standard iterative procedure that was described in the previous paragraph. Of course, it is

not guaranteed that such an iterative algorithm would converge to the global minimum of G(s,Q),

just like in the case of ordinary iterative quantizer design.

As a simple example for a combination of s and Q that are matched (in the above sense),

consider the case where P is the uniform distribution over the interval [−A,+A]. The optimum

MMSE quantizer in this case is uniform as well: The interval [−A,A] is partitioned evenly into M

sub-intervals, each of size 2A/M and the reproduction level xi, pertaining to each sub-interval, is

its midpoint, What happens when the exponential moment is considered? Let us ‘guess’ that the

same quantizer s remains optimum. Then,

Q(x) ∝ 1{|x| ≤ A} · exp{−α(x − s(x))2} = 1{|x| ≤ A} · exp{−α mini

(x − xi)2}, (15)

which means that Q has “Gaussian peaks” at all reproduction points {xi}. It is plausible that

the same uniform quantizer s continues to be optimum (or at least nearly so) for Q, and hence

11

these s and Q match each other. Moreover, at least for large α, this quantizer nearly attains the

rate–distortion function of Q at distortion level D = 1/(2α). To see why this is true, observe that

for large α, the factor exp{−αmini(x − xi)2} = maxi exp{−α(x − xi)

2} is well approximated by∑

i exp{−α(x − xi)2}, which after normalization of Q, becomes essentially a mixture of M evenly

weighted Gaussians, where the i–th mixture component is centered at xi, i = 0, 1, . . . ,M − 1. This

mixture approximation of Q can then be viewed as a convolution between the uniform discrete

distribution on {xi} and the Gaussian density N (0, 1/(2α)). Thus, for D ≤ 1/(2α), the rate–

distortion function of Q agrees with the Shannon lower bound (see, e.g., [22, Chapter 4]), which

is

RL(D) = h(Q) −1

2log(2πeD)

≈ log M +1

2log(πe

α

)

−1

2log(2πeD)

= log M +1

2log

(

1

2αD

)

, (16)

which, for D = 1/(2α), indeed gives a coding rate of log M , just like the uniform quantizer. Here,

h(Q) stands for the differential entropy pertaining to Q.

Finally, consider the case where the source vector X = (X1, . . . ,Xn) is to be quantized by a

sequential causal quantizer with memory, i.e., Xt is quantized into one of M quantization levels,

which are now allowed to depend on past outputs of the quantizer X1, . . . , Xt−1. Here, the relevant

exponential moment criterion would be EP exp{−α∑n

t=1[Xt − s(Xt|X1, . . . , Xt−1)]2}. As is shown

in [36], however, whenever the source P is memoryless, the allowed dependence of the current

quantization on the past does not improve the exponential moment performance, i.e., the optimum

quantizer of this type makes use of the current symbol Xt only. This means that the causal vector

quantization problem actually degenerates back to the scalar quantization problem considered in

the previous paragraphs. This continues to be true even if variable–rate coding is allowed, except

that then time–sharing between at most two quantizers must also be allowed. These results of [36],

for the exponential moment criterion, are analogous to those of Neuhoff and Gilbert [42] for the

ordinary criterion of expected code length for a given distortion (or expected distortion at fixed

rate).

12

3.3 Non–Bayesian Parameter Estimation

Let X = (X1,X2, . . . ,Xn)T be a Gaussian random vector with mean vector θu, where θ ∈ IR and

u = (u1, . . . , un)T is a given deterministic vector. Let the non–singular n × n covariance matrix of

X be given by Λ. The vector X can then be thought of as a set of measurements (contaminated

by non–white Gaussian noise) of a signal, represented by a known vector u, but with an unknown

gain θ to be estimated. This is a classical problem in non–Bayesian estimation theory. It is well

known that for this kind of a model, among all unbiased estimators of θ, the one that minimizes

the mean square error (or equivalently, the estimation error variance) is the maximum likelihood

(ML) estimator, which in this case, is easily found to be given by

s(x) =uT Λ−1x

uT Λ−1u. (17)

Does this estimator also minimize E exp{α[s(X) − θ]2} among all unbiased estimators and for all

values of α in the allowed range?

The class S of all unbiased estimators is clearly a convex set and (s − θ)2 is convex in s. Let

us ‘guess’ that this estimator indeed minimizes also E exp{α[s(X) − θ]2} and then check whether

it satisfies the conditions of Observation 1. Denoting v = Λ−1u/(uT Λ−1u), the corresponding

probability measure Q, which will be denoted here by Qθ, is given by

Qθ(x) ∝ exp

{

−1

2(x − θu)T Λ−1(x − θu) + α

(

vT x − θ)2}

= exp

{

−1

2(x − θu)T Λ−1(x − θu) + α

[

vT (x − θu)]2}

= exp

{

−1

2(x − θu)T Λ−1(x − θu) + α(x − θu)T vvT (x − θu)

}

= exp

{

−1

2(x − θu)T (Λ−1 − 2αvvT )(x − θu)

}

, (18)

where α is chosen small enough such that the matrix (Λ−1 − 2αvvT ) is still positive definite. Now,

the ML estimator of θ under Qθ is given by

sQ(x) =uT (Λ−1 − 2αvvT )x

uT (Λ−1 − 2αvvT )u

=uT Λ−1x − 2αuT vvT x

uT Λ−1u − 2αuT vvT u

=[1 − 2α/(uT Λ−1u)]uT Λ−1x

[1 − 2α/(uT Λ−1u)]uT Λ−1u

= s(x), (19)

13

where in the third line we have used the fact that uT v = 1. In other words, we are back to

the original estimator we started from, which means that our ‘guess’ was successful. Indeed, the

MSE of s(x) under Qθ, which is vT (Λ−1 − 2αvvT )−1v, can easily be shown to be identical to the

Cramer–Rao lower bound under Qθ, which is given by 1/[uT (Λ−1 − 2αvvT )u]. We can therefore

summarize our conclusion in the following proposition:

Proposition 1 Let X be a Gaussian random vector with a mean vector θu and a non–singular

covariance matrix Λ. Let a be the supremum of all values of α such that the matrix (Λ−1 − 2αvvT )

is positive definite, where v = Λ−1u/(uT Λ−1u). Then, among all unbiased estimators of θ, the

estimator s(x) = vT x uniformly minimizes the exponential moment E exp{α[s(X) − θ]2} for all

α ∈ (0, a). This minimum of the exponential moment is given by

E exp{

α(

vT X − θ)2}

=1

√

det(I − 2αvvT Λ). (20)

It is easy to see that for α → 0, the limit of 1α ln E exp{α(vT X − θ)2} recovers the mean–

square error tr{vvT Λ} = vT Λv, as expected. Here we have the full best achievable characteristic

function and hence also the best achievable large deviations performance in the sense of minimizing

of probabilities of the form Pr{|s(X) − θ| ≥ R} for all R > 0.

Related results by Kester and Kallenberg [27] (and some subsequent works) concern the asymp-

totic large deviations optimality of the ML estimator, among all consistent estimators, for the case

of exponential families of i.i.d. measurements. Here, on the other hand, the model is not i.i.d.,

the result holds (for exponential moments) for all n, and not only asymptotically, and the class of

competing estimators is the class of unbiased estimators.

3.4 Gaussian–Quadratic Joint Source–Channel Coding

Consider the Gaussian memoryless source

PU (u) = (2πσ2u)−n/2 exp

{

−1

2σ2u

n∑

i=1

u2i

}

(21)

and the Gaussian memoryless channel y = x + z, where the noise is distributed according to

PZ(z) = (2πσ2z )−n/2 exp

{

−1

2σ2z

n∑

i=1

z2i

}

. (22)

14

In the ordinary joint source–channel coding problem, one seeks an encoder and decoder that would

minimize D = 1n

∑ni=1 E{(Ui − Vi)

2}, where V = (V1, . . . , Vn) is the reconstruction at the decoder.

It is very well known that the best achievable distortion, in this case, is given by

D =σ2

u

1 + Γ/σ2z

, (23)

where Γ is the maximum power allowed at the transmitter, and it may be achieved by a transmitter

that simply amplifies the source by a gain factor of√

Γ/σ2u and a receiver that implements linear

MMSE estimation of Ui given Yi, on a symbol–by–symbol basis.

What happens if we replace the criterion of expected distortion by the criterion of the expo-

nential moment on the distortion, E exp{α∑

i(Ui − Vi)2}? It is natural to wonder whether simple

linear transmitters and receivers, of the kind defined in the previous paragraph, are still optimum.

The random object X, in this example, is the pair of vectors (U ,Z), where U is the source vector

and Z is the channel noise vector, which under P = PU×PZ , are independent Gaussian i.i.d. random

vectors with zero mean and variances σ2u and σ2

z , respectively, as said. Our strategy s consists of

the choice of an encoding function x = f(u) and a decoding function v = g(y). The class S is then

the set of all pairs of functions {f, g}, where f satisfies the power constraint EP {‖f(U )‖2} ≤ nΓ.

Condition 2 of Observation 1 tells us that the modified probability distribution of u and z should

be of the form

Q(u,z) ∝ PU (u)PZ(z) exp

{

α

n∑

i=1

[ui − gi(f(u) + z)]2

}

(24)

where gi is restriction of g to the i–th component of v.

Clearly, if we continue to restrict the encoder f to be linear, with a gain of√

Γ/σ2u, which simply

exploits the allowed power Γ, and the only remaining room for optimization concerns the decoder

g, then we are basically dealing with a problem of pure Bayesian estimation in the Gaussian regime,

and then the optimum choice of the decoder (estimator) continues to be the same linear decoder

as before (see [41]).4 However, once we extend the scope and allow f to be a non–linear encoder,

then the optimum choice of f and g would no longer remain linear like in the expected distortion

case. It is not difficult to see that the conditions of Observation 1 are no longer met for any

linear functions f and g. The key reason is that while Q(u,z) of eq. (24) continues to be Gaussian

4This can also be obtained by applying Observation 1 to the problem of Bayesian estimation in the Gaussianregime under the exponential moment criterion. See Section 3.2 in the original version of this paper [32].

15

(though now Ui and Zi are correlated) when f and g are linear, the power constraint, EP {‖X‖2} ≤

nΓ, when expressed as an expectation w.r.t. Q, becomes EQ{‖f(U )‖2P (U )/Q(U )} ≤ nΓ, but

“power” function ‖f(u)‖2P (u)/Q(u), with P and Q being Gaussian densities, is no longer the

usual quadratic function of f(u) for which there is a linear encoder and decoder that is optimum.

Another way to see that linear encoders and decoders are suboptimal, is to consider the following

argument: For a given n, the expected exponentiated squared error is minimized by a joint source–

channel coding system, defined over a super-alphabet of n–tuples, with respect to a distortion

measure, defined in terms of a single super–letter, as

d(u,v) = exp

{

α

n∑

i=1

(ui − vi)2

}

. (25)

For such a joint source–channel coding system to be optimal, the induced channel P (v|u) must [5,

p. 31, eq. (2.5.13)] be proportional to

P (v) exp{−βd(u,v)} = P (v) exp

[

−β exp

{

α∑

i

(ui − vi)2)

}]

(26)

for some β > 0, which is the well–known structure of the optimum test channel that attains the

rate–distortion function for the Gaussian source and the above defined distortion measure. Had

the aforementioned linear system been optimum, the optimum output distribution P (v) would

be Gaussian, and then P (v|u) would remain proportional to a double exponential function of∑

i(ui − vi)2. However, the linear system induces instead a Gaussian channel from u to v, which

is very different, and therefore cannot be optimum.

Of course, the minimum of E exp{α∑

i(Ui − Vi)2} can be approached by separate source- and

channel coding, defined on blocks of super–letters formed by n–tuples. The source encoder is an

optimum rate–distortion code for the above defined ‘single–letter’ distortion measure, operating at

a rate close to the channel capacity, and the channel code is constructed accordingly to support

the same rate.

4 Universal Asymptotically Optimum Strategies

The optimum strategy for minimizing EP exp{αℓ(X, s)} depends, in general, on both P and α. It

turns out, however, that this dependence on P and α can sometimes be relaxed if one gives up the

ambition of deriving a strictly optimum strategy, and resorts to asymptotically optimum strategies.

16

Consider the case where, instead of one random variable X, we have a random vector X =

(X1, . . . ,Xn), governed by a product distribution function

P (x) =n∏

i=1

P (xi), (27)

where each component xi of the vector x = (x1, . . . , xn) takes on values in a finite set X . If

ℓ(x, s) grows linearly5 with n for a given empirical distribution of x and a given s ∈ S, then it is

expected that the exponential moment E exp{αℓ(x, s)} would behave, at least asymptotically, as

an exponential function of n. In particular, for a given s, let us assume that the limit

limn→∞

1

nln EP exp{αℓ(X , s)}

exists. Let us denote this limit by E(s, α, P ). An asymptotically optimum strategy is then a strategy

s∗ for which

E(s∗, α, P ) ≤ E(s, α, P ) (28)

for every s ∈ S. An asymptotically optimum strategy s∗ is called universal asymptotically optimum

w.r.t. a class P of probability distributions, if s∗ is independent of α and P , yet it satisfies eq.

(28) for all α in the allowed range, every s ∈ S, and every P ∈ P. In this section, we take P to

be the class of all memoryless sources with a given finite alphabet X ,6 We denote by TQ the type

class pertaining to an empirical distribution Q, namely, the set of vectors x ∈ X n whose empirical

distribution is Q.

Suppose there exists a strategy s∗ and a function λ : P → IR such that following two conditions

hold:

(a) For every type class TQ and every x ∈ TQ, ℓ(x, s∗) ≤ n[λ(Q) + o(1)], where o(1) designates a

(positive) sequence that tends to zero as n → ∞.

(b) For every type class TQ and every s ∈ S,

∣

∣

∣

∣

TQ ∩ {x : ℓ(x, s) ≥ n[λ(Q) − o(1)]}

∣

∣

∣

∣

≥ e−no(1)|TQ|. (29)

5This happens, for example, when ℓ is additive, i.e., ℓ(x, s) =Pn

i=1l(xi, s).

6It should be understood that extensions of the following discussion to more general classes of sources, like theclass of Markov sources, is essentially straightforward in many cases, although there may be elements that mightrequire some more caution.

17

It is then a straightforward exercise to show, using the method of types, that s∗ is a universal

asymptotically optimum strategy w.r.t. P, with

E(s∗, α, P ) = maxQ

[αλ(Q) − D(Q‖P )], (30)

where condition (a) supports the direct part and condition (b) supports the converse part. The

interesting point here then is not quite in the last statement, but in the fact that there are quite a

few application examples where these two conditions hold at the same time.

Before we provide such examples, however, a few words are in order concerning conditions (a)

and (b). Condition (a) means that there is a choice of s∗, that does not depend on x or on its

type class,7 yet the performance of s∗, for every x ∈ TQ, “adapts” to the empirical distribution Q

of x in a way, that according to condition (b), is “essentially optimum” (i.e., cannot be improved

significantly), at least for a considerable (non–exponential) fraction of the members of TQ. It is

instructive to relate conditions (a) and (b) above to conditions 1 and 2 of Observation 1. First,

observe that in order to guarantee asymptotic optimality of s∗, condition 2 of Observation 1 can

be somewhat relaxed: For Jensen’s inequality in (4) to remain exponentially tight, it is no longer

necessary to make the random variable αℓ(X , s) + ln[P (X)/Q(X)] completely degenerate (i.e., a

constant for every realization x, as in condition 2 of Observation 1), but it is enough to keep it

essentially fixed across a considerably large subset of the dominant type class, TQ∗ , i.e., the one

whose empirical distribution Q∗ essentially achieves the maximum of [αλ(Q) − D(Q‖P )]. Taking

Q∗(x) to be the memoryless source induced by the dominant Q∗, this is indeed precisely what

happens under conditions (a) and (b), which imply that

αℓ(x, s∗) + lnP (x)

Q∗(x)≈ nαλ(Q) +

n∑

i=1

lnP (xi)

Q∗(xi)

= nαλ(Q) + n∑

x∈X

Q∗(x) lnP (x)

Q∗(x)

= n[αλ(Q∗) − D(Q∗‖P )], (31)

for (at least) a non–exponential fraction of the members of TQ∗ , namely, a subset of TQ∗ that is large

enough to maintain the exponential order of the (dominant) contribution of TQ∗ to E exp{αℓ(x, s∗)}.

Loosely speaking, the combination of conditions (a) and (b) also means then that s∗ is essentially

7As before, s∗ is chosen without observing the data first.

18

optimum for (this subset of) TQ∗ , which is a reminiscence of condition 1 of Observation 1. More-

over, since s∗ “adapts” to every TQ, in the sense explained above, then this has the flavor of the

maximin problem discussed in Section 2, where s is allowed to be optimized for each and every Q.

Since the minimizing s, in the maximin problem, is independent of P and α, this also explains the

universality property of such a strategy.

Let us now discuss a few examples. The first example is that of fixed–rate rate–distortion

coding. A vector X that emerges from a memoryless source P is to be encoded by a coding scheme

s with respect to a given additive distortion measure, based on a single–letter distortion measure

d : X × X → IR, X being the reconstruction alphabet. Let DQ(R) denote the distortion–rate

function of a memoryless source Q (with a finite alphabet X ) relative to the single–letter distortion

measure d and let ℓ(x, s) designate the distortion between the source vector x and its reproduction,

using a rate–distortion code s. It is not difficult to see that this example meets conditions (a) and

(b) with λ(Q) = DQ(R): Condition (a) is based on the type covering lemma [10, Section 2.4],

according to which each type class TQ can be completely covered by essentially enR ‘spheres’ of

radius nDQ(R) (in the sense of d), centered at the reproduction vectors. Thus s∗ can be chosen

to be a scheme that encodes x in two parts, the first of which is a header that describes the index

of the type class TQ of x (whose description length is proportional to log n) and the second part

encodes the index of the codeword within TQ, using nR nats. Condition (b) is met since there is

no way to cover TQ with exponentially less than enR spheres within distortion less than DQ(R).

By the same token, consider the dual problem of variable–rate coding within a maximum allowed

distortion D. In this case, every source vector x is encoded by ℓ(x, s) nats, and this time, conditions

(a) and (b) apply with the choice λ(Q) = RQ(D), which is the rate–distortion function of Q (the

inverse function of DQ(R)). The considerations are similar to those of the first example.

It is interesting to particularize this example, of variable–rate coding, to the lossless case, D = 0

(thus revisiting Subsection 3.1), where RQ(0) = H(Q), the empirical entropy associated with Q. In

this case, a more refined result can be obtained, which extends a well known result due to Rissanen

[44] in universal data compression: According to [44], given a length function of a lossless data

compression ℓ(x, s) (s being the data compression scheme), and given a parametric class of sources

of n–vectors, {Pnθ }, indexed by a parameter θ ∈ Θ ⊂ IRk, a lower bound on EP n

θℓ(X, s), that

19

applies to most8 values of θ, is given by

EP nθℓ(X, s) ≥ H(Pn

θ ) + (1 − ǫ)k

2log n, (32)

where ǫ > 0 is arbitrarily small (for large n), H(Pnθ ) is the entropy of X associated with Pn

θ , and

EP nθ{·} is the expectation under Pn

θ . On the other hand, the same expression is achievable, by

a number of universal coding schemes, provided that the factor (1 − ǫ) in the above expression is

replaced by (1 + ǫ).

Now, for a given sources Pnθ , let us define Qn

θ as being the source probability function that is

proportional to (Pnθ )1/(1+α). Then, as a lower bound, we have

ln EP nθ

exp{αℓ(X , s)} = maxQ

[αEQℓ(X , s) − nD(Q‖Pnθ )]

≥ αEQnθℓ(X , s) − D(Qn

θ ‖Pnθ )

≥ α

[

H(Qnθ ) + (1 − ǫ)

k

2log n

]

− D(Qnθ ‖P

nθ )

= αH1/(1+α)(Pnθ ) + α(1 − ǫ)

k

2log n, (33)

where the third line follows from Rissanen’s lower bound (for most sources), and where Hu(Pnθ ) is

Renyi’s entropy of order u, namely,

Hu(Pnθ ) =

1

1 − uln

{

∑

x∈Xn

[Pnθ (x)]u

}

. (34)

Consider now the case where {Pnθ , θ ∈ Θ} is the class of all memoryless sources over X , where

the parameter vector θ designates k = |X | − 1 letter probabilities. In this case, since the source

is completely defined by the single–letter probabilities, we can omit the superscript n of Pnθ and

denote the source by Pθ. Define a two–part code s∗, which first encodes the index of the type class

Q and then the index of x within the type class. The corresponding length function is given by

ℓ(x, s∗) = ln |TQ| + k log n ≈ nH(x) +k

2log n, (35)

where H(x) is the empirical entropy pertaining to x, and where the approximate inequality is easily

8“Most values of θ” means all values of θ with the possible exception of a subset of Θ whose Lebesgue measuretends to zero as n tends to infinity.

20

obtained by the Stirling approximation. Then,

ln EPθexp{αℓ(X , s)} = ln EPθ

exp{αnH(X)} + αk

2log n

= ln EPθexp{α min

Q[− ln Q(X)]} + α

k

2log n

≤ minQ

lnEPθexp{−α ln Q(X)} + α

k

2log n

= nαH1/(1+α)(Pθ) + αk

2log n, (36)

and then it essentially achieves the lower bound. Rissanen’s result is now obtained a special case

of this, by dividing both sides of the inequality by α and then taking the limit α → 0.

We next summarize these findings in the form of a theorem, which is an exponential–moment

counterpart of [44, Theorem 1]. The converse part (part (a)) can actually be extended to even more

general classes of sources, which are not even necessarily parametric, using the results of [34], where

the expression (k log n)/(2n) is replaced, more generally, by the capacity of the “channel” from θ to

X,9 as defined by the class of sources {Pθ} when viewed as a set of conditional distributions of X

given θ. For the sake of simplicity, the direct part (part (b)) of this theorem is formalized for the

class of all memoryless sources with a given finite alphabet, parametrized by the letter probabilities,

but it can also be extended to wider classes of sources, like Markov sources of a given order.

Theorem 1 (a) Converse part: Let P = {Pnθ , θ ∈ Θ} be a parametric class of finite–alphabet

memoryless sources, indexed by a parameter θ that takes on values in a compact subset Θ

of IRk. Let the central limit theorem hold, under Qnθ , for the ML estimator of each θ in the

interior of Θ. If ℓ(x, s) is a length function of a code s satisfying the Kraft inequality, then

for every α > 0 and ǫ > 0,

1

nαln EP n

θexp{αℓ(X , s)} ≥ H1/(1+α)(P

nθ ) + (1 − ǫ) ·

k log n

2n, (37)

for all points θ ∈ Θ except for a set Aǫ(n) ⊂ Θ whose Lebesgue measure vanishes as n → ∞

for every fixed ǫ > 0.

(b) Direct part: For the case where P is the class of all memoryless sources with a given alphabet

of size k + 1 and θ designates the vector of the k first letter probabilities, there exists a uni-

9Moreover the converse part can also be harnessed to derive lower bounds on exponential moments of universalprediction w.r.t. similar classes of sources, using the results of [35, eq. (32)].

21

versal lossless data compression code s∗, whose length function ℓ(x, s∗) satisfies the reversed

inequality where the factor (1 − ǫ) is replaced by (1 + ǫ).

Our last example corresponds to a secrecy system. A sequence x is to be communicated to a

legitimate decoder which shares with the transmitter a random key z of nR purely random bits.

The encoder transmits an encrypted message y = φ(x,z), which is an invertible function of x given

z, and hence decipherable by the legitimate decoder. An eavesdropper, which has no access to the

key z, submits a sequence of guesses concerning x until it receives an indication that the last guess

was correct (e.g., a correct guess of a password admits the eavesdropper into a secret system). For

the best possible encryption function φ, what would be the optimum guessing strategy s∗ that the

eavesdropper may apply in order to minimize the α–th moment of the number of guesses G(X , s),

i.e., E{Gα(X, s)}? In this case, ℓ(x, s) = lnG(x, s). As is shown in [33], there exists a guessing

strategy s∗, which for every x ∈ TQ, gives ℓ(x, s∗) ≈ n min{H(Q), R}, a quantity that essentially

cannot be improved upon by any other guessing strategy, for most members of TQ. In other words,

conditions (a) and (b) apply with λ(Q) = min{H(Q), R}.

5 Phase Transitions

Another interesting aspect of the asymptotic behavior of the exponential moment is the possible

appearance of phase transitions, i.e., irregularities in the exponent function E(s, α, P ) even in some

very simple and ‘innocent’ models. By irregularities, we mean a non–smooth behavior, namely,

discontinuities in the derivatives of E(s, α, P ) with respect to α and/or the parameters of the

source P .

One example that exhibits phase transitions is that of the secrecy system, mentioned in the

last paragraph of the previous section. As is shown in [33], the optimum exponent E(s∗, α, p) for

this case consists of two phase transitions as a function of R (namely, three different phases). In

particular,

E(s∗, α, P ) =

αR R < H(P )(α − θR)R + θRH1/(1+θR)(P ) H(P ) ≤ R ≤ H(Pα)

αH1/(1+α)(P ) R > H(Pα)(38)

22

where Pα is the distribution defined by

Pα(x)∆=

P 1/(1+α)(x)∑

x′∈X P 1/(1+α)(x′), (39)

H(Q) is the Shannon entropy associated with a distribution Q, Hu(Q) is the Renyi entropy of

order u as defined before, and θR is the unique solution of the equation R = H(Pθ) for R in the

range H(P ) ≤ R ≤ H(Pα). But this example may not really be extremely surprising due to the

non–smoothness of the function λ(Q) = min{H(Q), R}.

It may be somewhat less expected, however, to witness phase transitions also in some very

simple and ‘innocent’ looking models. One way to understand the phase transitions in these cases

comes from the statistical–mechanical perspective. It turns out that in some cases, the expression

of the exponential moment is analogous to that of a partition function of a certain many–particle

physical system with interactions, which may exhibit phase transitions and these phase transitions

correspond the above–mentioned irregularities.

We now demonstrate a very simple model, which has phase transitions. Consider the case where

X is a binary vector whose components take on values in X = {−1,+1}, and which is governed by

a binary memoryless source Pµ with probabilities Pr{Xi = +1} = 1 − Pr{Xi = −1} = (1 + µ)/2

(µ designating the expected ‘magnetization’ of each binary spin Xi, to make the physical analogy

apparent). The probability of x under Pµ is thus easily shown to be given by

Pµ(x) =

(

1 + µ

2

)(n+P

i xi)/2

·

(

1 − µ

2

)(n−P

i xi)/2

=

(

1 − µ2

4

)n/2

·

(

1 + µ

1 − µ

)

P

i xi/2

. (40)

Consider the estimation of the parameter µ by the ML estimator

µ =1

n

n∑

i=1

xi. (41)

How does the exponential moment of Eµ exp{αn(µ − µ)2} behave? A straightforward derivation

yields

Eµ exp{αn(µ − µ)2} =

(

1 − µ2

4

)n/2

enαµ2∑

x

(

1 + µ

1 − µ

)

P

i xi/2

exp

α

n

(

∑

i

xi

)2

− 2αµ∑

i

xi

=

(

1 − µ2

4

)n/2

enαµ2∑

x

exp

(

1

2ln

1 + µ

1 − µ− 2αµ

)

∑

i

xi +α

n

(

∑

i

xi

)2

.

23

The last summation over {x} is exactly the partition function pertaining to the Curie–Weiss model

of spin arrays in statistical mechanics (see, e.g., [39, Subsection 2.5.2]), where the magnetic field is

given by

B =1

2ln

1 + µ

1 − µ− 2αµ (42)

and the coupling coefficient for every pair of spins is J = 2α. It is well known that this model

exhibits phase transitions pertaining to spontaneous magnetization below a certain critical temper-

ature. In particular, using the method of types [10], this partition function can be asymptotically

evaluated as being of the exponential order of

exp

{

n · max|m|≤1

[

h2

(

1 + m

2

)

+ Bm +J

2· m2

]}

,

where h2(·) is the binary entropy function, which stands for the exponential order of the number

of configurations {x} with a given value of m = 1n

∑

i xi. This expression is clearly dominated

by a value of m (the dominant magnetization m∗) which maximizes the expression in the square

brackets, i.e., it solves the equation

m = tanh(Jm + B), (43)

or in our variables,

m = tanh

(

2αm +1

2ln

1 + µ

1 − µ− 2αµ

)

. (44)

For α < 1/2, there is only one solution and there is no spontaneous magnetization (paramagnetic

phase). For α > 1/2, however, there are three solutions, and only one of them dominates the

partition function, depending on the sign of B, or equivalently, on whether α > α0(µ)∆= 1

4µ ln 1+µ1−µ

or α < α0(µ) and according to the sign of µ. Accordingly, there are five different phases in the plane

spanned by α and µ. The paramagnetic phase α < 1/2, the phases {µ > 0, 1/2 < α < α0(µ)} and

{µ < 0, α > α0(µ)}, where the dominant magnetization m is positive, and the two complementary

phases, {µ < 0, 1/2 < α < α0(µ)} and and {µ > 0, α > α0(µ)}, where the dominant magnetization

is negative. Thus, there is a multi-critical point where the boundaries of all five phases meet, which

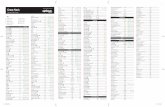

the point (µ, α) = (0, 1/2). The phase diagram is depicted in Fig. 1.

Yet another example of phase transitions is that of fixed–rate lossy data compression, discussed

in the previous section. To demonstrate this explicitly, consider the binary symmetric source

(BSS) and the Hamming distortion measure d, and consider a random selection of a rate–R code

24

α

µ+1−1

α = 1/2

α = α0(µ)

paramagnetic phase

m∗ > 0

m∗ > 0 m∗ < 0

m∗ < 0

0

Figure 1: Phase diagram in the plane of (µ, α).

by nenR independent fair coin tosses, one for each of the n components of every one of the enR

codewords. It was shown in [31] that the asymptotic exponent of the negative exponential moment,

E exp{−α∑

i d(Ui, Vi)} (where the expectation is w.r.t. both the source and the random code

selection), is given by the following expression, which obviously exhibits a (second order) phase

transition:

limn→∞

1

nln E exp

{

−α∑

i

d(Ui, Vi)

}

=

{

−αδ(R) α ≤ α(R)−α + ln(1 + eα) + R − ln 2 α > α(R)

(45)

where δ(R) is the distortion–rate function of the BSS w.r.t. the Hamming distortion measure and

α(R) = ln1 − δ(R)

δ(R). (46)

The analysis in [31] is based on the random energy model (REM), [13],[14],[15], a well-known

statistical–mechanical model of spin glasses with strong disorder, which is known to exhibit phase

transitions. Moreover, it is shown in [31] that ensembles of codes that have an hierarchical structure

may have more than one phase transition.

6 Lower Bounds on Exponential Moments

As explained in the Introduction, even in the ordinary setting, of the quest for minimizing E{ℓ(X, s)},

optimum strategies may not always be known, and then useful lower bounds are very important.

25

This is definitely the case when exponential moments are considered, because the exponential mo-

ment criterion is even harder to handle. To obtain non–trivial bounds on exponential moments, we

propose to harness lower bounds on the expectation of ℓ(X, s), possibly using a change of measure,

in the spirit of the proof of Observation 1 and the previous example of a lower bound on universal

lossless data compression. We next demonstrate this idea in the context of a lower bound on the

expected exponentiated squared error of an unbiased estimator, on the basis of the Cramer–Rao

bound (CRB). The basic idea, however, is applicable more generally, e.g., by relying on other well–

known Bayesian/non–Bayesian bounds on the mean-square error (e.g., the Weiss–Weinstein bound

for Bayesian estimation [49]), as well as in bounds on signal estimation (filtering, prediction, etc.),

and in other problem areas as well. Further investigation in the line may be of considerable interest.

Consider a parametric family of probability distributions {Pθ, θ ∈ Θ}, Θ ⊆ IR being the

parameter set, and suppose that we are interested in a lower bound on Eθ exp{α(θ − θ)2}, for any

unbiased estimator of θ, where as before, Eθ denotes expectation w.r.t. Pθ. Consider the following

chain of inequalities, which holds for any θ′ ∈ Θ:

Eθ exp{α(θ − θ)2} = Eθ′ exp

{

α(θ − θ)2 + lnPθ(X)

Pθ′(X)

}

≥ exp{

αEθ′(θ − θ)2 − D(Pθ′‖Pθ)}

= exp{

αEθ′(θ − θ′)2 + α(θ − θ′)2 − D(Pθ′‖Pθ)}

≥ exp{

αCRB(θ′) + α(θ − θ′)2 − D(Pθ′‖Pθ)}

, (47)

where CRB(θ) is the Cramer–Rao bound for unbiased estimators, computed at θ (i.e., CRB(θ) =

1/I(θ), where I(θ) is the Fisher information). Since this lower bound applies for every θ′ ∈ Θ, one

can take its supremum over θ′ ∈ Θ and obtain

ln Eθ exp{α(θ − θ)2} ≥ supθ′∈Θ

[

αCRB(θ′) + α(θ′ − θ)2 − D(Pθ′‖Pθ)]

. (48)

More generally if θ = (θ1, . . . , θk)T is a parameter vector (thus θ ∈ Θ ⊆ IRk) and α ∈ IRk is an

arbitrary deterministic (column) vector, then

ln Eθ exp{αT (θ − θ)(θ − θ)Tα} ≥ supθ′∈Θ

[

αT I−1(θ′)α + [αT (θ′ − θ)]2 − D(Pθ′‖Pθ)]

, (49)

where here I(θ) is the Fisher information matrix and I−1(θ) is its inverse.

26

It would be interesting to further investigate bounds of this type, in parameter estimation in

particular, and in other problem areas in general, and to examine when these bounds may be tight

and useful.

Acknowledgment

Interesting discussions with Rami Atar are acknowledged with thanks.

References

[1] E. Arikan, “An inequality on guessing and its application to sequential decoding,” IEEE

Trans. Inform. Theory, vol. IT–42, no. 1, pp. 99–105, January 1996.

[2] E. Arikan and N. Merhav, “Guessing subject to distortion,” IEEE Trans. Inform. Theory,

vol. 44, no. 3, pp. 1041–1056, May 1998.

[3] E. Arikan and N. Merhav, “Joint source–channel coding and guessing with application to

sequential decoding,” IEEE Trans. Inform. Theory, vol. 44, no. 5, pp. 1756–1769, September

1998.

[4] R. Atar, P. Dupuis, and A. Shwartz, “An escape–time criterion for queueing networks: asymp-

totic risk–sensitive control via differential games,” Mathemtics of Operation Research, vol. 28,

no. 4, pp. 801–835, November 2003.

[5] T. Berger, Rate Distortion Theory: A Mathematical Basis for Data Compression, Prentice

Hall, Englewood Cliffs, New Jersey, U.S.A., 1971.

[6] D. Bertsekas, Dynamic Programming and Optimal Control, Vol. I and II, 3rd ed. Nashua,

NH: Athena Scientific, 2007.

[7] J. P. N. Bishwal, “Large deviations and Berry–Esseen inequalities for estimators in nonlin-

ear nonhomogeneous diffusions,” REVSTAT Statistical Journal, vol. 5, no. 3, pp. 249–267,

November 2007.

27

[8] T. M. Cover and J. A. Thomas, Elements of Information Theory, Second Edition, John Wiley

& Sons, Hoboken, New Jersey, U.S.A., 2006.

[9] I. Csiszar, “On the error exponent of source-channel transmission with a distortion threshold,”

IEEE Trans. Inform. Theory , vol. IT–28, no. 6, pp. 823–828, November 1982.

[10] I. Csiszar and J. Korner, Information Theory: Coding Theorems for Discrete Memoryless

Systems, Academic Press, New York, U.S.A., 1981.

[11] P. Dai Pra, L. Meheghini, and W. J. Runggaldier, “Connections between stochastic control

and dynamic games,” Mathematics of Control, Signals and Systems, vol. 9, pp. 303–326, 1996.

[12] A. Dembo and O. Zeitouni, Large DEviations and Applications, Jones and Bartlett Publishers,

London 1993.

[13] B. Derrida, “Random–energy model: limit of a family of disordered models,” Phys. Rev.

Lett., vol. 45, no. 2, pp. 79–82, July 1980.

[14] B. Derrida, “The random energy model,” Physics Reports (Review Section of Physics Letters),

vol. 67, no. 1, pp. 29–35, 1980.

[15] B. Derrida, “Random–energy model: an exactly solvable model for disordered systems,” Phys.

Rev. B, vol. 24, no. 5, pp. 2613–2626, September 1981.

[16] G. B. Di Masi and L. Stettner, “Risk–sensitive control of discrete–time Markov processes

with infinite horizon,” SIAM Journal on Control and Optimization, vol. 38, no. 1, pp. 61–78,

1999.

[17] P. Dupuis and R. S. Ellis, A Weak Convergence Approach to the Theory of Large Deviations,

John Wiley & Sons, 1997.

[18] P. Dupuis, M. R. James, and I. Petersen, “Robust properties of risk–sensitive control,” Math-

ematics of Control, Signals and Systems, vol. 13, pp. 318–322, 2000.

[19] N. Farvardin and J. W. Modestino, “On overflow and underflow problems in buffer instru-

mented variable-length coding of fixed-rate memoryless sources,” IEEE Transactions on In-

formation Theory , vol. IT–32, no. 6, pp. 839–845, November 1986.

28

[20] W. H. Fleming and D. Hernandez–Hernandez, “Risk–sensitive control of finite state machines

on an infinite horizon I,” SIAM Journal on Control and Optimization, vol. 35, no. 5, pp. 1790–

1810, 1997.

[21] R. M. Gray, “Vector quantization,” IEEE Transactions on Acoustics, Speech, and Signal

Processing , Mag: 4–29, April 1984.

[22] R. M. Gray, Source Coding Theory, Kluwer Academic Publishers, Norwell, MA, U.S.A., 1990.

[23] R. M. Gray and D. L. Neuhoff, “Quantization,” IEEE Trans. Inform. Theory , vol. IT–44,

no. 6, pp. 2325–2383, October 1998.

[24] R. Howard and J. Matheson, “Risk–sensitive Markov decision processes,” Management Sci-

ence, vol. 18, pp. 356–369, 1972.

[25] P. A. Humblet, “Generalization of Huffman coding to minimize the probability of buffer

overflow,” IEEE Transactions on Information Theory , vol. IT–27, no. 2, pp. 230–232, March

1981.

[26] F. Jelinek, “Buffer overflow in variable length coding of fixed rate sources,” IEEE Transactions

on Information Theory , vol. IT–14, no. 3, pp. 490–501, May 1968.

[27] A. D. M. Kester and W. C. M. Kallenberg, “Large deviations of estimators,” Annals of

Mathmatical Statistics, vol. 14, no. 2, pp. 648–664, 1986.

[28] Y. Linde, A. Buzo, and R. M. Gray, “An algorithm for vector quantizer design,” IEEE

Transactions on Communications, vol. COM–28, no. 1, pp. 84–95, January 1980.

[29] J. L. Massey, “Guessing and entropy,” Proc. 1994 IEEE Int. Symp. Inform. Theory, (ISIT

‘94), p. 204, Trondheim, Norway, 1994.

[30] N. Merhav, “Universal coding with minimum probability of code word length overflow,” IEEE

Trans. Inform. Theory, vol. 37, no. 3, pp. 556–563, May 1991.

[31] N. Merhav, “The generalized random energy model and its application to the statistical

physics of ensembles of hierarchical codes,” IEEE Trans. Inform. Theory, vol. 55, no. 3, pp.

1250–1268, March 2009.

29

[32] N. Merhav, “On optimum strategies for minimizing the exponential moments of a given cost

function,” http://arxiv.org/PS cache/arxiv/pdf/1103/1103.2882v1.pdf

[33] N. Merhav and E. Arikan, “The Shannon cipher system with a guessing wiretapper,” IEEE

Trans. Inform. Theory, vol. 45, no. 6, pp. 1860–1866, September 1999.

[34] N. Merhav and M. Feder, “A strong version of the redundancy–capacity theorem of universal

coding,” IEEE Trans. Inform. Theory, vol. 41, no. 3, pp. 714-722, May 1995.

[35] N. Merhav and M. Feder, “Universal prediction,” IEEE Trans. Inform. Theory, vol. 44, no.

6, pp. 2124–2147, October 1998.

[36] N. Merhav and I. Kontoyiannis, “Source coding exponents for zero–delay coding with finite

memory,” IEEE Trans. Inform. Theory, vol. 49, no. 3, pp. 609–625, March 2003.

[37] N. Merhav and D. L. Neuhoff, “Variable-to-fixed length codes provide better large deviations

performance than fixed-to-variable length codes,” IEEE Trans. Inform. Theory, vol. 38, no. 1,

pp. 135–140, January 1992.

[38] N. Merhav, R. M. Roth, and E. Arikan, “Hierarchical guessing with a fidelity criterion,” IEEE

Trans. Inform. Theory, vol. 45, no. 1, pp. 330–337, January 1999.

[39] M. Mezard and A. Montanari, Information, Physics and Computation, Oxford University

Press, 2009.

[40] M. N. Mishra and B. L. S. Prakasa Rao, “ Large deviation probabilities for maximum likeli-

hood estimator and Bayes estimator of a parameter for fractional Ornstein–Uhlenbeck type

process,” Bulletin of Information and Cybernetics, vol. 38, pp. 71–83, 2006.

[41] J. B. Moore, R. J. Elliott, and S. Dey, “Risk–sensitive generalizations of minimum variance

estimation and control,” Journal of Mathematical Systems and Control, vol. 7, no. 1, pp.

1–15, 1997.

[42] D. L. Neuhoff and R. K. Gilbert, “Causal source codes,” IEEE Trans. Inform. Theory ,

vol. IT–28, no. 5, pp. 701–713, September 1982.

[43] R. T. Rockafellar, Convex Analysis, Princeton University Press, Princeton, NJ, U.S.A., 1972.

30

[44] J. Rissanen, “Universal coding, information, prediction, and estimation,” IEEE Transactions

on Information Theory , vol. IT–30, no. 4, pp. 629–636, July 1984.

[45] S. Sherman, “Non–mean square error criteria,” IRE Transactions on Information Theory,

vol. IT-4, pp. 125–126, September 1958.

[46] A. Sieders and K. Dzhaparidze, “A large deviations result for parameter estimators and its

application to non–linear regression analysis,” Annals of Statistics, vol. 15, no. 3, pp, 1031–

1049, 1987.

[47] O. Uchida and T. S. Han, “The optimal overflow and underflow probabilities with variable–

length coding for the general source,” preprint 1999.

[48] H. van Trees, Detection, Estimation, and Modulation Theory, Part I, John Wiley & Sons,

New York, 1968.

[49] A. J. Weiss and E. Weinstein, “A lower bound on the mean square error in random parameter

estimation,” IEEE Trans. Inform. Theory , vol. IT–31, no. 5, pp. 680–682, September 1985.

[50] P. Whittle, “Risk–sensitive linear/quadratic/Gaussian control,” Advances in Applied Proba-

bility, vol. 13, pp. 764–777, 1981.

[51] P. Whittle, “A risk–sensitive maximum principle,” Systems and Control Letters, vol. 15, pp.

183–192, 1990.

[52] A. D. Wyner, “On the probability of buffer overflow under an arbitrary bounded input-output

distribution,” SIAM Journal on Applied Mathematics, vol. 27, no. 4, pp. 544–570, December

1974.

[53] A. D. Wyner, “Another look at the coding theorem of information theory- a tutorial,” Proc.

IEEE , vol. 58, no. 6, pp. 894–913, June 1980.

[54] A. D. Wyner, “Fundamental limits in information theory,” Proc. IEEE , vol. 69, no. 2, pp. 239–

251, February 1981.

[55] A. D. Wyner and J. Ziv, “On communication of analog data from a bounded source space,”

Bell Systems Technical Journal, vol. 48, pp. 3139–3172, 1969.

31