
Robin Fromm

Dell EMC

Date of presentation (07/11/2017)

Session <DC>

New trends in Virtual Tape: Cloud and Automation


AGENDA
• Virtual Tape Architecture and Tiering
• Cloud Object Storage
• Mainframe Tape Cloud Use Cases and Architecture
• Taking Advantage of Cloud for Mainframe Tape Long-Term Retention of Data
• The New Requirements for System Automation
• Emerging Automation Capabilities that Include Tape
• Summary


Use Cases: Mainframe “tape” is not just for backup

Backup and Recovery
Working Tape Sets
Compliance, Security & Long-Term Retention
HSM Migrations

DLm Benefits:
• Eliminate CPU cycles
• Eliminate physical “recycles”

DLm Benefits:
• Eliminate physical movement of cartridges
• No catalog or directory migration with the data
• More secure; customer data not lost in transit

DLm Benefits:
• Achieves much higher read/write performance
• Managing applications is more efficient
  - Syncsort
  - logging
  - SMF records

DLm Benefits:
• Improve RPO and RTO
• Can reduce backup window
• Can reduce restore time
• 2x more capacity than leading competitors
• Reduce cost of sending data offsite


The Modern Features of Mainframe Virtual Tape

• Not virtual tape, but tape on disk
• True IBM tape device emulation
• Transparent to mainframe operations
• Leverages low-cost SAS disk and flash drives
• High-performance read and write
• Data deduplication
• Architecture supporting continuous availability
• Flexible remote replication options
• Read/write snapshots to enable 100% testing of D/R processes
• Enables low-cost managed object storage to be used for long-term retention, eliminating physical tape

[Figure: IBM mainframe and EMC Disk Library for mainframe (DLm)]


A different Virtual Tape Architecture:

Clustered-Server-Scaled Architecture
• Individual servers, each with dedicated data storage
• Network connectivity between servers for inter-server communication
• Servers can only fail over to one another, providing availability
• Environment scales only by adding servers and storage

[Figure: servers, each with embedded disk + table]

Shared-Storage-Scaled Architecture
• Virtual Tape Engines (VTEs) are individual servers with shared data storage
• Servers operate independently of one another
• Surviving servers continue to operate when one or more servers fail, providing availability
• Environment scales by adding servers or storage

[Figure: VTE(1), VTE(2) … VTE(n) sharing storage (3 options: STD disk), shown three times with the labels "Industry Standard", "Industry Leading" and "all custom"]


Why Different Storage for Mainframe Data?

• Primary Storage Backup: mainframe primary storage backups, DASD full volume dumps
• Interactive Batch Processing: temporary storage, sort files, database extracts, Generation Data Groups
• Hierarchical Storage Management: migration of data between different tiers of storage
• Compliance and Long-Term Retention: fixed content data (images, contracts, healthcare)

Storage options: Deduplication Storage, Standard NFS Storage, Enterprise Storage
• Data that dedupes well (backups, LOGREC)
• Disk & tape data consistency
• Performance-sensitive data


BENEFITS OF CLOUD (Object) STORAGE

1. Elastically increase storage capacity
2. Reduce on-site storage footprint, management and life-cycle costs
3. Maximize storage efficiency
4. Enable new initiatives that extend to cloud storage or compute


Cloud (Object) Storage Features: Single. Global. Shared.

• Highly Efficient Data Protection
• Large and Small File Management
• Global Availability & Protection
• Comprehensive Data Access
• Native Multi-tenant Architecture
• Advanced Retention Management
• Lower TCO than Public Cloud
• Built-in Metadata Search


Highly Efficient Data Protection: Optimized Storage Utilization

Challenge
• Protecting unstructured data at scale

Solutions
• Hybrid protection scheme: triple mirroring, erasure coding and XOR algorithms
• Lower storage overhead option for cold data scenarios
• Enhanced data durability without the overhead of storing multiple copies

Impact
• Enhanced data protection
• Storage overhead falls as the number of sites grows (from two sites upward)
• Flexible protection schemes based on use case

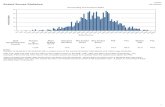

74

37

49

55

5961

63 64

82

41

54

61

6568

70 71

1 2 3 4 5 6 7 8

Active Archive Cold Archive

% usable storage

# of sites
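The chart's figures appear to follow a simple pattern. The sketch below reproduces that pattern from the two single-site values (74% and 82%). The rule is an assumption inferred from the numbers and is not stated on the slide. Two sites keep a full second copy, which halves usable capacity. With N >= 3 sites, an XOR across sites leaves (N - 1)/N of the single-site efficiency, truncated to a whole percent. `usable_percent` is a hypothetical helper, not an ECS API.

```python
def usable_percent(single_site_pct: int, sites: int) -> int:
    """Approximate % usable storage for a given number of sites.

    Assumed model (inferred from the chart, not an ECS API):
      1 site  -> the single-site erasure-coding efficiency
      N >= 2  -> single_site_pct * (N - 1) / N, truncated to an integer
    For N = 2 this halves usable capacity (a full second copy); for N >= 3
    it models XOR across sites, so overhead shrinks as sites are added.
    """
    if sites < 1:
        raise ValueError("need at least one site")
    if sites == 1:
        return single_site_pct
    return single_site_pct * (sites - 1) // sites


# Single-site values read from the chart on this slide.
ACTIVE_ARCHIVE_PCT = 74
COLD_ARCHIVE_PCT = 82

if __name__ == "__main__":
    for n in range(1, 9):
        print(n, usable_percent(ACTIVE_ARCHIVE_PCT, n), usable_percent(COLD_ARCHIVE_PCT, n))
```

Under this model, usable capacity drops sharply from one site to two. It then climbs back toward the single-site figure as more sites are added, which is what "storage overhead scales inversely" describes.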


Efficient Large and Small File Storage: Process Large and Small Files or User Requests Efficiently

Challenge
• Managing both large and small files on a single system

Solutions
• Small files are stored in cache and written to a single disk through box-carting
• Large files over 128 MB are erasure coded immediately, rather than being triple mirrored first and erasure coded later
• Provides up to 20% higher throughput for larger files

Impact
• Management efficiency for large and small files
• Ideally suited for unstructured data
• Optimized performance under heavy load

[Figure: 2 MB buffered writer with acknowledgment (Ack)]
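The size-based handling described on this slide can be sketched as a small router. The 2 MB buffer and the 128 MB threshold come from the slide. The following are assumptions for illustration, not ECS internals:

- the class and its method names;
- the rule that files between 2 MB and 128 MB take the mirror-then-erasure-code path.

```python
from typing import List

# Thresholds from the slide; everything else here is a hypothetical model.
BUFFER_BYTES = 2 * 1024 * 1024          # "2MB buffered writer"
LARGE_FILE_BYTES = 128 * 1024 * 1024    # "large files over 128MB"


class WriteRouter:
    """Toy model of size-based write handling with box-carting."""

    def __init__(self, buffer_bytes: int = BUFFER_BYTES) -> None:
        self.buffer_bytes = buffer_bytes
        self.pending: List[str] = []         # small files waiting in the buffer
        self.pending_bytes = 0
        self.batches: List[List[str]] = []   # each batch = one combined disk write

    def write(self, name: str, size: int) -> str:
        if size > LARGE_FILE_BYTES:
            # Large objects skip triple mirroring and are erasure coded at once.
            return "erasure-coded immediately"
        if size < self.buffer_bytes:
            # Box-carting: pack small files together until the buffer would overflow.
            if self.pending and self.pending_bytes + size > self.buffer_bytes:
                self.flush()
            self.pending.append(name)
            self.pending_bytes += size
            return "box-carted"
        return "triple-mirrored, erasure-coded later"

    def flush(self) -> None:
        """Write all buffered small files out as a single batch."""
        if self.pending:
            self.batches.append(self.pending)
            self.pending = []
            self.pending_bytes = 0
```

For example, five 600 KiB files end up in two batches after a final flush. The first three fit in one 2 MB buffer, and the fourth triggers a flush.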


Fully Geo-distributed High Availability & Protection

Challenge
• Global accessibility with high data durability & availability

Solutions
• A geographically distributed environment that acts as a single logical resource
• Active/Active platform with access to content through a single global namespace
• Geo-caching to improve operational performance and reduce latency
• Read/write access from any location globally

Impact
• No single point of failure
• Increases developer productivity
• Reduces WAN/network congestion
• Ensures data is protected from site failures


Native Multi-tenant Architecture: Meets the Diverse Needs of Modern Applications, Meter and Monitor

Challenge
• Inefficient use of storage resources within the data center

Solutions
• Storage resources shared among multiple applications and tenants
• System securely and automatically separates namespaces, object buckets and users
• Integration with LDAP and AD environments
• Ensures the integrity of customers’ stored data

Impact
• Better data isolation
• Simplified management
• Provides an Object-Storage-as-a-Service platform
• Ability to implement charge-back or “pay as you go” models


Lower TCO than Public Cloud

Challenge
• Public cloud storage advertises a low cost measured in cents per GB per month. By comparison, investment in hardware looks large.

Solutions
• Common metric for cost calculation: convert capital investments into cents per gigabyte per month, the same model used by popular public cloud vendors
• Validate the cost model with analysts: open the model to independent analysts and ask for validation

Impact
• ECS running in your datacenter or in a co-location facility provides a 48% lower TCO compared to public cloud
• You can offer public-cloud-like storage services to your customers using tools built into ECS

[Figure: Storage TCO (cents per GB per month), each bar broken down into storage, maintenance, operations and data access/requests]
Public Cloud-Active         2.59
Public Cloud-Archive        1.75
Public Cloud-Cold Archive   1.57
EMC ECS                     1.38
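The "common metric" approach amounts to spreading all costs over usable capacity and time. The minimal sketch below assumes the cost model is capital cost plus a flat monthly operating cost over a fixed service life. The function name and all input figures are hypothetical. This is not the model the analysts validated.

```python
def cents_per_gb_month(capital_usd: float, monthly_opex_usd: float,
                       usable_gb: float, months: int) -> float:
    """Convert an on-premises investment into cents per GB per month.

    Assumed model: total cost = capital + monthly operating cost * months
    (maintenance, operations, etc. lumped into the monthly figure).
    """
    if usable_gb <= 0 or months <= 0:
        raise ValueError("usable_gb and months must be positive")
    total_cents = (capital_usd + monthly_opex_usd * months) * 100
    return total_cents / (usable_gb * months)


if __name__ == "__main__":
    # Hypothetical example: $600k up front, $10k/month, 1 PB (10^6 GB) usable, 5 years.
    rate = cents_per_gb_month(600_000, 10_000, 1_000_000, 60)
    print(f"{rate:.2f} cents/GB/month")  # 2.00
```

The result is in the same unit as the public-cloud rates in the chart, so the two can be compared directly.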


Cloud Use Cases

• Cloud storage as a Long-Term Retention / Secondary Tier of storage

• Cloud storage as the Primary Tier of Storage


DLm Long-Term Retention (LTR) Write Operations

1. Tape datasets are written to DLm’s (local) storage (DD/VNX/VMAX).
2. DLm’s LTR file services migrate the tape volumes to LTR storage, which converts each volume to an object, backs it up and optionally encrypts it.
3. DLm policy management tracks the movement of tape volumes between tiers of storage.

[Figure: DLm (VTEs) with virtualization and policy management, DLm file services and DLm LTR services; Tier 1: DLm storage (VMAX/VNX/DD); Tier 2: long-term retention storage]


DLm Read Operations With Long-Term Retention (LTR)

1. Tape volumes, if present locally, are always read from DLm’s storage (DD/VNX/VMAX).
2. Policy management is aware of tape volumes that are retained only as LTR objects, backed up and optionally encrypted by the long-term retention storage.
3. LTR file services read the DLm tape object image from LTR storage and/or private/public cloud, decrypt it (if needed) and convert it back to a tape volume.

[Figure: DLm (VTEs) with virtualization and policy management, DLm file services and DLm LTR services; Tier 1: DLm storage (VMAX/VNX/DD); Tier 2: LTR storage]
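The three read steps can be modeled as a lookup with a fallback. This is a sketch only, and the following are assumptions rather than DLm interfaces:

- the dictionaries standing in for the two tiers;
- the `LtrObject` type;
- the pluggable `decrypt` callable;
- the choice to stage a recalled volume back on Tier 1.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class LtrObject:
    """A tape volume image held in long-term retention (Tier 2) storage."""
    payload: bytes
    encrypted: bool


def read_tape_volume(volser: str,
                     tier1: Dict[str, bytes],
                     tier2: Dict[str, LtrObject],
                     decrypt: Callable[[bytes], bytes]) -> bytes:
    # Step 1: a volume that is present locally is always read from Tier 1.
    if volser in tier1:
        return tier1[volser]
    # Step 2: policy management knows the volume exists only as an LTR object.
    obj = tier2.get(volser)
    if obj is None:
        raise KeyError(f"VOLSER {volser} not found on any tier")
    # Step 3: decrypt if needed; converting the object image back into a
    # tape volume is modeled as a no-op in this sketch.
    data = decrypt(obj.payload) if obj.encrypted else obj.payload
    tier1[volser] = data  # assumption: recalled volume is staged on Tier 1
    return data
```

In practice `decrypt` would be whatever decryption the LTR storage applies. A trivial stand-in is enough to exercise the control flow.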


The Policy Manager

CloudArray web console:
• Age of tape volume
• Library / Class
• Size (KB/MB/GB)
• Scheduler
• Type
• VTEs
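A policy built from the attributes listed above can be thought of as a filter over tape volumes. The sketch below is hypothetical and is not the CloudArray console's actual data model. The following are assumptions:

- the field names;
- the use of days since last reference for "age";
- the omission of Type and Scheduler (the scheduler would decide when the filter runs).

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Set


@dataclass
class TapeVolume:
    volser: str
    library_class: str
    size_gb: float
    last_referenced: date
    vte: str


@dataclass
class MigrationPolicy:
    days_of_disuse: int                          # age criterion; 0 = migrate immediately
    library_classes: Optional[Set[str]] = None   # None means any library/class
    min_size_gb: float = 0.0
    vtes: Optional[Set[str]] = None              # None means any VTE

    def matches(self, vol: TapeVolume, today: date) -> bool:
        """Return True if the volume should be migrated to LTR storage."""
        if (today - vol.last_referenced).days < self.days_of_disuse:
            return False
        if self.library_classes is not None and vol.library_class not in self.library_classes:
            return False
        if vol.size_gb < self.min_size_gb:
            return False
        if self.vtes is not None and vol.vte not in self.vtes:
            return False
        return True
```

Setting `days_of_disuse=0` corresponds to the "migrate immediately" policy used in Phase I. Raising it to roughly 13 months corresponds to Phase II.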


Phase I - Initial Move Of LTR Candidates

• Identify Tape Candidates That Haven’t Been Referenced In 13 Months Or More Using The Tape Management Catalog
• Policy Is Set To Migrate All Tape To ECS After Zero Days Of Disuse (Immediate)
• Use This List Of Volumes As Input To Migration In Place Software, Preserving Original Tape VOLSERs
• Limit Daily Movement To 5 TB – 10 TB Per Day
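Phase I's candidate selection and daily limit can be combined into one planning step. The sketch assumes two things. First, the tape management catalog has been exported as (VOLSER, last-referenced date, size in TB) tuples. Second, volumes are packed greedily in catalog order up to the daily limit. `plan_phase_one` and `months_between` are hypothetical helpers and are not part of the Migration In Place software.

```python
from datetime import date
from typing import List, Tuple


def months_between(earlier: date, later: date) -> int:
    """Whole calendar months elapsed from `earlier` to `later`."""
    months = (later.year - earlier.year) * 12 + (later.month - earlier.month)
    if later.day < earlier.day:
        months -= 1  # the last month is not yet complete
    return months


def plan_phase_one(volumes: List[Tuple[str, date, float]], today: date,
                   min_months: int = 13,
                   daily_limit_tb: float = 10.0) -> List[List[str]]:
    """Group unreferenced volumes into per-day migration batches."""
    candidates = [(volser, size_tb) for volser, last_ref, size_tb in volumes
                  if months_between(last_ref, today) >= min_months]
    days: List[List[str]] = []
    current: List[str] = []
    used_tb = 0.0
    for volser, size_tb in candidates:
        # Start a new day when this volume would push past the limit.
        # (A single volume larger than the limit still gets its own day.)
        if current and used_tb + size_tb > daily_limit_tb:
            days.append(current)
            current, used_tb = [], 0.0
        current.append(volser)
        used_tb += size_tb
    if current:
        days.append(current)
    return days
```

Lowering `daily_limit_tb` to the 5 TB end of the range spreads the same candidates over more days.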

Migration In Place

Phase II – Perform Migration Of Remaining Tape

• Change The Policy To Migrate Tapes To ECS After 13 Months Of Disuse.
• Migrate The Remaining (Non-LTR) Tapes To The New Environment Using The Migration In Place Software.

Migration In Place

The End State

• Tape Processing Continues
• All Benefits Of DLm Fully Realized
• Automatic Migration Of Tape Volumes To ECS Once “Days Of Disuse” Elapse Based On User-Defined Policies

DLm8100 Models & Capabilities

• 1 to 8 Virtual Tape Engines (VTEs)
  – 256 to 2,048 virtual IBM tape drives
• Concurrent & mixed storage (Data Domain, VNX, VMAX)
  – One or two VNX7600(s) or VNX5400(s)
  – One or two DD6300(s), DD6800(s), DD9300(s), or DD9800(s)
  – One VNX and one DD
  – One to two CloudArrays
  – VMAX40K
• Highly scalable performance and capacity
  – 20 TB to 17.3 PB
  – Up to 6.4 GB/s throughput (800 MB/s per VTE)

[Figure: IBM mainframe and DLm8100]
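The headline throughput is the per-VTE rate multiplied by the number of VTEs: 8 × 800 MB/s = 6,400 MB/s, or 6.4 GB/s. The sketch below turns that figure into a rough time estimate for moving a given amount of data. It assumes decimal units (1 TB = 1,000,000 MB) and that every VTE sustains its full "up to" rate, which real workloads may not.

```python
PER_VTE_MB_S = 800  # "800MB/s per VTE" from the slide
MAX_VTES = 8        # DLm8100 supports 1 to 8 VTEs


def aggregate_mb_s(vtes: int) -> int:
    """Best-case aggregate throughput in MB/s for a DLm8100 with `vtes` engines."""
    if not 1 <= vtes <= MAX_VTES:
        raise ValueError("DLm8100 supports 1 to 8 VTEs")
    return vtes * PER_VTE_MB_S


def hours_to_move(terabytes: float, vtes: int) -> float:
    """Best-case hours to read or write `terabytes` (decimal units)."""
    megabytes = terabytes * 1_000_000
    return megabytes / aggregate_mb_s(vtes) / 3600
```

For example, `hours_to_move(23.04, 8)` comes to one hour at the full 6.4 GB/s. With 4 VTEs the same job takes two hours.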


DLm2100 Models & Capabilities

• One or two VTEs
  – 256 to 512 virtual IBM tape drives
• Uses ONLY Data Domain storage
• Highly scalable performance and capacity
  – 20 TB to 6 PB (8:1 compression)
  – Up to 1,600 MB/s throughput

[Figure: IBM mainframe and DLm2100]


Disaster preparedness

DISASTER COMES IN MANY FORMS
• PLANNED AND UNPLANNED FAILOVER EVENTS
• LOSS OF “TRIBAL” KNOWLEDGE
• MANUAL, ERROR-PRONE FAILOVER PROCEDURES

Do you have the confidence to push this button?
Do you even HAVE a button?


Geographically Dispersed Disaster Restart (GDDR): Emergency Business Continuity Button

[Figure: data centers DC1, DC2, DC3 and DC4]

AUTOMATED disaster recovery protection
• Automates, reacts and monitors, enabling planned and unplanned DR functions
• Supports 2-, 3- and 4-site configurations
• Supports mixed z/OS and distributed systems
• Minimal setup and customization
• Automates both disk and tape DR


Is the datacenter ARMed?

MONITOR
• Sysplexes, creating cross-sysplex consistency
• Storage, including all replication health
• Monitors from a dedicated control LPAR for DASD and a shared LPAR for tape

REACT
• Health events for servers and storage
• Configuration and procedure testing requests
• Planned and unplanned operations

AUTOMATE
• Stop/start systems (including DB2, IMS, CICS)
• Activate/deactivate LPARs
• Full-site swaps and DASD-only swaps
• Couple dataset selection, CBU activate/undo
• Consistency between tape (using VMAX-based DLm) and DASD
• Recovery of both z/OS and distributed systems


Why rethink the ‘home grown’ approach to business continuity automation?

Expensive to implement

Expensive to maintain

Unreliable

During your last planned or unplanned disruption, did you

consider using a Business Continuity solution?


Practical challenges

Support issues
• Aging staff
• User-written automation

Cost
• People
• Lab resources
• Time

Increasing complexity
• Changes and test cycles
• No lab environment

Custom automation
• Complex situation management

Recovery time elongation
• Extra time is required to diagnose


Value of a product-based automation solution

[Figure: value (availability, time to resume) versus technology investment and risk, comparing manual processes, “home grown” automation and a product-based automation solution, with RTO=0 and RPO=0 as the targets]


Automation Manages the Functional Stack: The Key to Successful Business Continuity

• z/OS
• GDDR: 2, 3 & 4 site support
• AutoSwap
• Consistency Group
• SRDF: Sync & Async
• TimeFinder
• VMAX (DASD) and DLm (tape)


What is different

• “2nd Generation” product-based automation
  – 1 product for all topologies (2, 3, 4 site)
• One, and only one, master control system
  – Single panel set supporting all configurations
  – One configuration definition file
  – One maintenance stream for automation
  – One operational interface
  – One manual
• Automation dynamically generated based on topology and event
• Sysplex independent
  – Manage multiple sysplexes from a single control LPAR
  – No reliance on sysplex communications
  – Supports sysplex, monoplex, multiple sysplexes
• Flexible: allows IT to respond quickly to business demands


Expert Systems For DR Automation

Automation should use an expert system and artificial intelligence to dynamically determine state transitions and manage events.
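A full expert system is beyond a short example. The core idea of event-driven state transitions can, however, be sketched with a much simpler technique: a rule table that maps (current state, event) to a next state and a list of actions. The state names, events and actions below are hypothetical. They are loosely based on the recovery, site swap and DR test scripts later in this deck and are not GDDR's actual rules.

```python
from typing import Dict, List, Tuple

State = str
Event = str

# Hypothetical rule table: (state, event) -> (next state, actions to run).
RULES: Dict[Tuple[State, Event], Tuple[State, List[str]]] = {
    ("steady", "heartbeat_lost"): ("awaiting_confirmation", ["alert operator"]),
    ("awaiting_confirmation", "operator_yes"): (
        "recovering",
        ["submit restart script", "snapshot gold copy", "make R/O copy R/W"],
    ),
    ("steady", "planned_site_swap"): (
        "swapping",
        ["shut down DLm", "IPL systems at DR site", "reverse replication"],
    ),
    ("steady", "dr_test"): (
        "testing",
        ["snapshot gold copy", "mount file systems at DR site"],
    ),
    ("testing", "dr_test_complete"): ("steady", ["return to standby"]),
}


def next_state(state: State, event: Event) -> Tuple[State, List[str]]:
    """Look up the transition; unknown (state, event) pairs change nothing."""
    target, actions = RULES.get((state, event), (state, []))
    return target, list(actions)  # copy so callers cannot mutate the table
```

A product-based system would generate such transitions from the configured topology and the current event. This sketch instead relies on a fixed, hand-written table.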


Universal Data Consistency: Data Consistency for Tape and DASD

[Figure: a single consistency group, managed by GDDR, spanning CKD DASD (including the tape catalog and HSM CDS) and FBA storage holding compressed tape data]

• Storage arrays used for both FBA DLm tape data and CKD DASD
• Remote replication, synchronous or asynchronous
• Space-efficient local replication
• Full failover automation
  – Planned failover
  – Unplanned failover
  – Planned D/R test
• Single replication methodology
• Tape and DASD consistency


GDDR Tape

[Figure: monitoring and DR automation at each site]

• Leverages existing GDDR technology
• No C-System required
• Supports DLm with VNX and DLm with DD
• Consistency and recovery


Recover after loss of DC1

• Disaster recognized: no heartbeat
• Systems IPL’d
• User replies “YES”, which submits a script to restart production at DC3
• Create a SnapShot to preserve a gold copy
• Make the R/O copy R/W

[Figure: DC1 and DC3 running GDDR with heartbeats between them; AutoSwap; SnapShot; R1; tape R/W and tape R/O copies, with the R/O copy made R/W]
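The first step, recognizing a disaster from a missing heartbeat, and the operator confirmation that follows can be sketched as shown here. The timeout value, the class and function names, and the action strings are hypothetical. GDDR's real detection logic and scripts are not described on the slide.

```python
from typing import Dict, List


class HeartbeatMonitor:
    """Declares a site silent when no heartbeat arrives within `timeout_s`."""

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self.last_seen: Dict[str, float] = {}

    def beat(self, site: str, now: float) -> None:
        """Record a heartbeat from `site` at time `now` (seconds)."""
        self.last_seen[site] = now

    def silent_sites(self, now: float) -> List[str]:
        """Sites whose last heartbeat is older than the timeout."""
        return sorted(site for site, seen in self.last_seen.items()
                      if now - seen > self.timeout_s)


def recovery_steps(survivor: str, operator_reply: str) -> List[str]:
    """Steps run only after the operator explicitly replies YES."""
    if operator_reply.strip().upper() != "YES":
        return []
    return [
        f"submit script to restart production at {survivor}",
        "create SnapShot to preserve gold copy",
        "make R/O copy R/W",
    ]
```

Keeping the explicit YES reply in the loop mirrors the slide. Detection is automatic, but restarting production at DC3 waits for a human decision.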


Site swap

• Script submitted to perform the site swap
• DLm shutdown
• Systems shut down at DC1 (manual)
• Systems IPL’d at DC3
• Script submitted to restart production at DC3
• Create a SnapShot to preserve a gold copy (manual today)
• Reverse replication
• Make the R/O copy R/W

[Figure: DC1 and DC3 running GDDR with heartbeats between them; AutoSwap; SnapShot; R1; tape R/W and tape R/O copies]


DR Testing

• Script submitted to perform a DR test at DC3
• Create a SnapShot to preserve a gold copy
• File systems mounted to the DC3 DLm
• Systems IPL’d at DC3
• DR testing executed
• DR test complete: submit script to return to standby

[Figure: DC1 and DC3 running GDDR with heartbeats between them; AutoSwap; SnapShot; R1; tape R/W and tape R/O copies]


Automated health monitoring

[Figure: DC1 and DC3 running GDDR with heartbeats between them; AutoSwap; R1; tape R/W and tape R/O copies]

• GDDR/Tape periodically checks the health of DLm at all sites
  – Runs predefined DLm health checks
  – Checks replication status
• Runs at a predefined, user-configurable interval
• If any health check (HC) fails
  – Notifies the mainframe admin
  – Generates a call home to notify EMC
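The monitoring cycle above reduces to running a set of checks on an interval and escalating any failures. The minimal sketch assumes each health check is a callable that returns True when healthy, and treats a check that raises as failed. The check names, the `notify_admin`/`call_home` callbacks and the `cycles` parameter are hypothetical.

```python
import time
from typing import Callable, Dict, List, Optional

Check = Callable[[], bool]


def _passes(check: Check) -> bool:
    """Run one check, treating any exception as a failure."""
    try:
        return bool(check())
    except Exception:
        return False


def run_health_checks(checks: Dict[str, Check],
                      notify_admin: Callable[[str], None],
                      call_home: Callable[[str], None]) -> List[str]:
    """Run every check once; escalate each failure to both channels."""
    failed = [name for name, check in checks.items() if not _passes(check)]
    for name in failed:
        notify_admin(name)   # tell the mainframe admin
        call_home(name)      # raise a call-home event with the vendor
    return failed


def monitor(checks: Dict[str, Check], interval_s: float,
            notify_admin: Callable[[str], None],
            call_home: Callable[[str], None],
            cycles: Optional[int] = None) -> None:
    """Repeat the checks every `interval_s` seconds (forever if cycles is None)."""
    done = 0
    while cycles is None or done < cycles:
        run_health_checks(checks, notify_admin, call_home)
        done += 1
        if cycles is None or done < cycles:
            time.sleep(interval_s)
```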


GDDR was built for DASD failover automation across sites and Universal Data Consistency between DASD & Tape

• SRDF/S is used between Primary Site and Local DR Site

• SRDF/A concurrently replicates between Primary Site and Remote DR Site

• GDDR Monitors all 3 Sites

• GDDR Controls Failover to Either Local or Remote DR Site in the Event of a Failure

• ConGroups ensure DASD & Tape data consistency
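The ConGroup behavior behind the last bullet can be illustrated in simplified form. The sketch assumes the usual dependent-write-consistency rule. As soon as any member of the group cannot replicate, replication stops for every member at the same point, so the remote DASD and tape copies never drift apart. The class and device names are hypothetical, and the sketch does not model SRDF itself.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class ConsistencyGroup:
    """Toy model of a consistency group spanning DASD and tape devices."""
    members: List[str]
    remote: Dict[str, List[str]] = field(default_factory=dict)
    suspended: bool = False

    def __post_init__(self) -> None:
        for member in self.members:
            self.remote.setdefault(member, [])

    def replicate(self, member: str, record: str, link_ok: bool = True) -> bool:
        """Copy one write to the remote site unless the group is suspended."""
        if member not in self.remote:
            raise KeyError(member)
        if self.suspended:
            return False
        if not link_ok:
            # One member cannot replicate: suspend the whole group so the
            # remote images of all members stay consistent with each other.
            self.suspended = True
            return False
        self.remote[member].append(record)
        return True
```

Without the group-wide suspension, the remote tape catalog could record tape volumes whose data never reached the remote site.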

[Figure: three sites, each with zSeries, VMAX DASD, GDDR and a DLm8100; SRDF/S with a ConGroup between the primary and local DR sites; SRDF/A with an MSC group to the remote DR site]

Summary

• Virtual Tape Architecture Matters

• Cloud (Object) Storage can be easily exploited for mainframe Tape

• Changing demographics are driving new requirements for automation

• Product-based expert systems provide a valid alternative to traditional custom-built automation solutions
