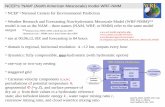

Mesoscale Model Evaluation

Mike Baldwin

Cooperative Institute for Mesoscale Meteorological Studies, University of Oklahoma

Also affiliated with NOAA/NSSL and NOAA/NWS/SPC

NWS – forecasts on hi-res grids

What would you suggest that NWS do to verify these forecasts?

Issues in mesoscale verification

Validate natural behavior of forecasts

Realistic variability, structure of fields

Do predicted events occur with realistic frequency?

Do characteristics of phenomena mimic those found in nature?

Traditional objective verification techniques are not able to address these issues

Outline

Problems with traditional verification

Solutions:

Verify characteristics of phenomena

Verify structure/variability

Design verification systems that address value of forecasts

Traditional verification

Compare a collection of matching pairs of forecast and observed values at the same set of points in space/time

Compute various measures of accuracy: RMSE, bias, equitable threat score

A couple of numbers may represent the accuracy of millions of model grid points, thousands of cases, hundreds of meteorological events

Boiling down that much information into one or two numbers is not very meaningful

Dimensionality of verification info

Murphy (1991) and others highlight danger of simplifying complex verification information

High-dimension information = data overload

Verification information should be easy to understand

Need to find ways to measure specific aspects of performance

Quality vs. value

Scores typically measure quality, or the degree to which forecasts and observations agree

Forecast value is the benefit of forecast information to a decision maker

Value is a subjective, complex function of quality

A high-quality forecast may be of low value and vice versa

[Figure: OBSERVED field compared with FCST #1 (smooth) and FCST #2 (detailed)]

Traditional “measures-oriented” approach to verifying these forecasts

| Verification measure | Forecast #1 (smooth) | Forecast #2 (detailed) |
|---|---|---|
| Mean absolute error | 0.157 | 0.159 |
| RMS error | 0.254 | 0.309 |
| Bias | 0.98 | 0.98 |
| Threat score (>0.45) | 0.214 | 0.161 |
| Equitable threat score (>0.45) | 0.170 | 0.102 |

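$$\mathrm{MAE} = \frac{1}{n}\sum_{k=1}^{n} \left| f_k - x_k \right|$$

$$\mathrm{BIAS} = \frac{\bar{f}}{\bar{x}}$$

$$\mathrm{TS} = \frac{H}{F + O - H}$$

$$\mathrm{ETS} = \frac{H - Ch}{F + O - H - Ch}$$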

Phase/timing errors

High-amplitude, small-scale forecast and observed fields are most sensitive to timing/phase errors

Mean Squared Error (MSE) for a 1-point phase error, three example fields:

MSE = 0.0016

MSE = 0.165

MSE = 1.19
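The sensitivity of MSE to a one-point phase error can be reproduced with synthetic data. The sketch below uses two hypothetical Gaussian bumps on a periodic 1-D grid, one broad and weak, one narrow and strong; these are not the fields from the slides, so the values will differ from those quoted above.

```python
import math

def gaussian_field(n, center, width, amplitude):
    """1-D Gaussian bump sampled on n grid points."""
    return [amplitude * math.exp(-((i - center) / width) ** 2) for i in range(n)]

def mse(a, b):
    """Mean squared difference between two equal-length fields."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def shift_one_point(field):
    """Shift a field by one grid point (periodic wrap-around)."""
    return field[-1:] + field[:-1]

smooth = gaussian_field(100, 50, 20.0, 1.0)  # large-scale, low amplitude
sharp = gaussian_field(100, 50, 1.5, 5.0)    # small-scale, high amplitude

# The "forecast" is the observed field displaced by exactly one point
mse_smooth = mse(shift_one_point(smooth), smooth)
mse_sharp = mse(shift_one_point(sharp), sharp)
```

The forecast is perfect apart from the displacement in both cases, yet the sharp field is penalized far more heavily.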

Verify forecast “realism”

Anthes (1983) paper suggests several ways to verify “realism”

Verify characteristics of phenomena

Decompose forecast errors as function of spatial scale

Verify structure/variance spectra

Characterize the forecast and observed fields

Verify the forecast in much the same way a human forecaster might visualize the forecast/observed fields

Characterize features, phenomena, events, etc. found in forecast and observed fields by assigning attributes to each object

Not an unfamiliar concept:

“1050 mb high” “category 4 hurricane” “F-4 tornado”

Many possible ways to characterize phenomena

Shape, orientation, size, amplitude, location

Flow pattern

Subjective information (confidence, difficulty)

Physical processes in a NWP model

Verification information can be stratified using this additional information

“Object-oriented” approach to verification

Decompose fields into sets of “objects” that are identified and described by a set of attributes in an automated fashion

Use image processing techniques to locate and identify events

Produce “scores” or “metrics” based upon the similarity/dissimilarity between forecast and observed events

Could also examine the joint distribution of forecast and observed events

Characterization: How?

Identify an object

Usually involves complex image processing

Event #16

Characterization: How?

Assign attributes

Examples: location, mean, orientation, structure

Event #16: Lat = 37.3N, Lon = 87.8W, [symbol lost] = 22.3, [symbol lost] = 2.1

Automated rainfall object identification

Contiguous regions of measurable rainfall (similar to CRA; Ebert and McBride (2000))

Connected component labeling

Expand area by 15%, connect regions that are within 20km, relabel
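The connected component labeling step can be sketched in pure Python. This is an illustration only: the threshold, the 8-point connectivity, and the tiny sample grid are assumptions, and the 15% expansion and 20 km merging steps are not shown.

```python
from collections import deque

def label_objects(field, threshold=0.0):
    """Label 8-connected regions where field > threshold.

    Returns (labels, count): labels is a grid of integers with
    0 for background and 1..count for the objects found.
    """
    nrows, ncols = len(field), len(field[0])
    labels = [[0] * ncols for _ in range(nrows)]
    count = 0
    for r in range(nrows):
        for c in range(ncols):
            if field[r][c] <= threshold or labels[r][c]:
                continue
            # Unlabeled rainy point: start a new object and flood-fill it
            count += 1
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:
                i, j = queue.popleft()
                for di in (-1, 0, 1):
                    for dj in (-1, 0, 1):
                        ni, nj = i + di, j + dj
                        if (0 <= ni < nrows and 0 <= nj < ncols
                                and field[ni][nj] > threshold
                                and not labels[ni][nj]):
                            labels[ni][nj] = count
                            queue.append((ni, nj))
    return labels, count

# Hypothetical hourly rainfall grid (mm)
rain = [
    [0.0, 1.2, 0.0, 0.0, 0.0],
    [0.0, 2.5, 0.8, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 3.1],
    [0.0, 0.0, 0.0, 0.4, 1.0],
]
labels, n_objects = label_objects(rain)
```

On real grids a library routine would normally be used instead of a hand-written flood fill; the logic is the same.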

Object characterization

Compute attributes
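As a rough sketch of attribute computation, the function below derives area, centroid, mean intensity, and orientation for one object. The choice of attributes and the moment-based orientation formula are assumptions for illustration; the slides do not say how the attributes were computed.

```python
import math

def object_attributes(cells):
    """Compute simple attributes for one object.

    cells: list of (x, y, value) grid points belonging to the object.
    """
    n = len(cells)
    xbar = sum(x for x, _, _ in cells) / n
    ybar = sum(y for _, y, _ in cells) / n
    mean = sum(v for _, _, v in cells) / n
    # Central second moments of the object's footprint
    mu20 = sum((x - xbar) ** 2 for x, _, _ in cells) / n
    mu02 = sum((y - ybar) ** 2 for _, y, _ in cells) / n
    mu11 = sum((x - xbar) * (y - ybar) for x, y, _ in cells) / n
    # Angle of the major axis relative to the x axis, in degrees
    orientation = math.degrees(0.5 * math.atan2(2 * mu11, mu20 - mu02))
    return {
        "area": n,
        "centroid": (xbar, ybar),
        "mean": mean,
        "orientation_deg": orientation,
    }

# Hypothetical object: four points along a diagonal line
cells = [(0, 0, 1.0), (1, 1, 2.0), (2, 2, 3.0), (3, 3, 2.0)]
attrs = object_attributes(cells)
```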

Verification of detailed forecasts

[Figure: 12h forecasts of 1h precipitation valid 00Z 24 Apr 2003. Panels: observed, fcst #1, fcst #2. Scores printed with the forecast panels, in slide order:]

RMSE = 3.4, MAE = 0.97, ETS = 0.06

RMSE = 1.7, MAE = 0.64, ETS = 0.00

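Verification

[Figure: 12h forecasts of 1h precipitation valid 00Z 24 Apr 2003. Panels: observed, fcst #1, fcst #2. Object attributes printed with the panels, in slide order; the symbol for the first attribute was lost in extraction:]

| Attribute | Set 1 | Set 2 | Set 3 |
|---|---|---|---|
| [symbol lost] | 3.1 | 1.6 | 7.8 |
| ecc 20 | 2.6 | 10.7 | 3.6 |
| ecc 40 | 2.0 | 7.5 | 3.1 |
| ecc 60 | 2.1 | 4.3 | 4.5 |
| ecc 80 | 2.8 | 2.8 | 3.6 |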

Example of scores produced by this approach

$$f_i = (a_i, b_i, c_i, \ldots, x_i, y_i)^T$$

$$o_k = (a_k, b_k, c_k, \ldots, x_k, y_k)^T$$

$$d_{i,k}(f_i, o_k) = (f_i - o_k)^T A \,(f_i - o_k)$$

(Generalized Euclidean distance, a measure of dissimilarity) where A is a matrix; different attributes would probably have different weights

$$c_{i,k}(f_i, o_k) = \mathrm{cov}(f_i, o_k)$$

(measure of similarity)
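A small sketch of the generalized Euclidean distance between one forecast object and one observed object. The attribute choice (mean intensity, orientation, area) and the diagonal weights in A are hypothetical; the slides only say that different attributes would probably be weighted differently.

```python
def generalized_distance(f, o, A):
    """Return d = (f - o)^T A (f - o) for attribute vectors f and o."""
    diff = [fi - oi for fi, oi in zip(f, o)]
    n = len(diff)
    return sum(diff[r] * A[r][c] * diff[c] for r in range(n) for c in range(n))

# Hypothetical attributes: (mean intensity, orientation in degrees, area)
f = [3.0, 40.0, 120.0]   # forecast object
o = [2.0, 30.0, 100.0]   # observed object

# Diagonal A: each weight is 1 / (assumed typical attribute scale)^2,
# so a difference of 1 unit, 10 degrees, or 20 grid cells counts equally
A = [
    [1.0, 0.0, 0.0],
    [0.0, 0.01, 0.0],
    [0.0, 0.0, 0.0025],
]

d = generalized_distance(f, o, A)
```

With A equal to the identity matrix this reduces to the ordinary squared Euclidean distance; off-diagonal entries would let correlated attributes be taken into account.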

Ebert and McBride (2000)

Contiguous Rain Areas

Separate errors into amplitude, displacement, shape components

Contour error map (CEM) method

Case et al. (2003)

Phenomena of interest – Florida sea breeze

Object identification – sea breeze transition time

Contour map of transition time errors

Distributions of timing errors

Verify post-sea breeze winds

Compositing

Nachamkin (2004)

Identify events of interest in the forecasts

Collect coordinated samples

Compare forecast PDF to observed PDF

Repeat process for observed events

Decompose errors as a function of scale

Bettge and Baumhefner (1980) used band-pass filters to analyze errors at different scales

Briggs and Levine (1997) used wavelet analysis of forecast errors

Verify structure

Fourier energy spectra

Take Fourier transform, multiply by complex conjugate – E(k)

Display on log-log plot

Natural phenomena often show “power-law” regimes

Noise (uncorrelated) results in flat spectrum
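The recipe above (transform, multiply by the complex conjugate, inspect on log-log axes) can be sketched with a plain discrete Fourier transform. The synthetic signal is an assumption chosen so that its spectrum follows a known power law; a real application would use an FFT library and actual forecast or observed fields.

```python
import cmath
import math

def energy_spectrum(signal):
    """Return [(k, E(k))] with E(k) = F(k) * conj(F(k)) for k = 1 .. n/2 - 1."""
    n = len(signal)
    spectrum = []
    for k in range(1, n // 2):
        fk = sum(signal[j] * cmath.exp(-2j * math.pi * k * j / n) for j in range(n))
        spectrum.append((k, (fk * fk.conjugate()).real))
    return spectrum

def loglog_slope(spectrum):
    """Least-squares slope of log E(k) against log k."""
    xs = [math.log(k) for k, _ in spectrum]
    ys = [math.log(e) for _, e in spectrum]
    xm = sum(xs) / len(xs)
    ym = sum(ys) / len(ys)
    num = sum((x - xm) * (y - ym) for x, y in zip(xs, ys))
    den = sum((x - xm) ** 2 for x in xs)
    return num / den

# Synthetic field: wave amplitudes fall off as k^-1.5, so E(k) ~ k^-3.
# The phase k*k is arbitrary and only avoids aligning all the waves.
n = 64
signal = [
    sum(k ** -1.5 * math.cos(2 * math.pi * k * j / n + k * k) for k in range(1, n // 2))
    for j in range(n)
]

slope = loglog_slope(energy_spectrum(signal))
```

The fitted slope recovers the power law built into the signal; uncorrelated noise would instead give a slope near zero.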

Fourier spectra

Slope of spectrum indicates degree of structure in the data

Larger absolute values of slope correspond with less structure

[Figure: example fields with slope = -1, slope = -3, slope = -1.5, and noise]

Multiscale statistical properties (Harris et al. 2001)

Fourier energy spectrum

Generalized structure function: spatial correlation

Moment-scale analysis: intermittency of a field, sparseness of sharp intensities

Looking for “power law”, much like in atmospheric turbulence (–5/3 slope)

FIG. 3. Isotropic spatial Fourier power spectral density (PSD) for forecast RLW (qr; dotted line) and radar-observed qr (solid line). Comparison of the spectra shows reasonable agreement at scales larger than 15 km. For scales smaller than 15 km, the forecast shows a rapid falloff in variability in comparison with the radar. The estimated spectral slope with fit uncertainty is [symbol lost] = 3.0 ± 0.1

Example

[Figure: Fourier energy spectra, log[E(k)] versus log[wavenumber], for Obs_4, Eta_12, Eta_8, WRF_22, WRF_10, KF_22; 3-6h forecasts from 04 June 2002 1200 UTC]

[Figure: June 2002 00z runs; 12, 24, 36, 48h fcsts]

Comparing forecasts that contain different degrees of structure

Obs = black

Detailed = blue

Smooth = green

MSE detailed = 1.57

MSE smooth = 1.43

Common resolved scales vs. unresolved

Filter other forecasts to have same structure

MSE “detailed” = 1.32

MSE smooth = 1.43
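The effect of filtering a detailed forecast down to the scales it shares with a smoother one can be illustrated with synthetic waves. Everything here is an assumption for illustration: the fields are sine waves on a periodic grid, and a centered running mean stands in for whatever filter was actually used, so the numbers will not match those on the slide.

```python
import math

N = 64

def mse(a, b):
    """Mean squared difference between two equal-length fields."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

def running_mean(field, half_width):
    """Centered moving average on a periodic 1-D grid."""
    n = len(field)
    width = 2 * half_width + 1
    return [
        sum(field[(j + s) % n] for s in range(-half_width, half_width + 1)) / width
        for j in range(n)
    ]

large = [math.sin(2 * math.pi * j / N) for j in range(N)]            # wavenumber 1
small = [0.5 * math.sin(2 * math.pi * 8 * j / N) for j in range(N)]  # wavenumber 8

obs = [l + s for l, s in zip(large, small)]
detailed = [l - s for l, s in zip(large, small)]  # small scales present but out of phase
smooth = [0.8 * l for l in large]                 # no small scales, slight amplitude error

mse_detailed = mse(detailed, obs)
mse_smooth = mse(smooth, obs)
# Remove most of the small-scale wave from the detailed forecast, then re-score
mse_filtered = mse(running_mean(detailed, 4), obs)
```

Unfiltered, the detailed forecast scores worse than the smooth one because of its phase error at small scales; once filtered to comparable structure it scores better.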

Lack of detail in analyses

Methods discussed assume realistic analysis of observations

Problems:

Relatively sparse observations

Operational data assimilation systems

Smooth first guess fields from model forecasts

Smooth error covariance matrix

Smooth analysis fields result

True mesoscale analyses

Determine what scales are resolved

Mesoscale data assimilation

Frequent updates

All available observations

Hi-res NWP provides first guess

Ensemble Kalman filter

Tustison et al. (2002) scale-recursive filtering takes advantage of natural “scaling”

Design verification systems that address forecast value

Value measures the benefits of forecast information to users

Determine what aspects of the forecast users are most sensitive to

If possible, find out the users' “cost/loss” situation

Are missed events or false alarms more costly?
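One common way to make the “cost/loss” question concrete is the static cost-loss model, sketched below. The model itself, the function names, and all counts and costs are assumptions for illustration; the slides do not specify how value should be computed. The user is assumed to pay `cost` to protect whenever an event is forecast and to suffer `loss` for each unprotected event.

```python
def mean_expense(hits, false_alarms, misses, correct_negatives, cost, loss):
    """Average expense per forecast when the user acts on every event forecast."""
    n = hits + false_alarms + misses + correct_negatives
    return (cost * (hits + false_alarms) + loss * misses) / n

def baseline_expense(hits, false_alarms, misses, correct_negatives, cost, loss):
    """Cheaper of the two no-forecast strategies: always or never protect."""
    n = hits + false_alarms + misses + correct_negatives
    base_rate = (hits + misses) / n
    return min(cost, loss * base_rate)

# Hypothetical contingency table: hits, false alarms, misses, correct negatives
table = (20, 30, 10, 140)

# User A: missed events are ten times as costly as protecting
expense_a = mean_expense(*table, cost=1.0, loss=10.0)
baseline_a = baseline_expense(*table, cost=1.0, loss=10.0)

# User B: missed events are only twice as costly as protecting
expense_b = mean_expense(*table, cost=1.0, loss=2.0)
baseline_b = baseline_expense(*table, cost=1.0, loss=2.0)
```

The same forecasts save money for user A but cost user B more than simply never protecting, which is one way a forecast of fixed quality can have very different value.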

Issues

How to distill the huge amount of verification information into meaningful “nuggets” that can be used effectively?

How to elevate verification from an annoyance to an integral part of the forecast process?

What happens when conflicting information from different verification approaches is obtained?

Summary

Problems with traditional verification techniques when used with forecasts/observations with structure

Verify realism

Issues of scale

Work with forecasters/users to determine most important aspects of forecast information

References

Good books

Papers mentioned in this presentation

Beth Ebert’s website

Scores based on similarity/dissimilarity matrices

$D = [d_{i,j}]$ Euclidean distance matrix

$C = [c_{i,j}]$ covariance matrix

Scores could be:

$\mathrm{tr}[D]$ = trace of matrix; for Euclidean distance this equates to $\sum_i (f_i - o_i)^2$ ~ RMSE

$\det[D]$ = determinant of matrix, a measure of the magnitude of a matrix
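A short sketch of the trace score, assuming plain squared Euclidean distance between attribute vectors and assuming the i-th forecast object has already been paired with the i-th observed object. The attribute values are hypothetical.

```python
def distance_matrix(fcst_objs, obs_objs):
    """D[i][j] = squared Euclidean distance between forecast i and observation j."""
    return [
        [sum((a - b) ** 2 for a, b in zip(f, o)) for o in obs_objs]
        for f in fcst_objs
    ]

def trace(matrix):
    """Sum of the diagonal entries of a square matrix."""
    return sum(matrix[i][i] for i in range(len(matrix)))

# Hypothetical two-attribute vectors for two forecast and two observed objects
fcst_objs = [[1.0, 2.0], [3.0, 5.0]]
obs_objs = [[1.0, 1.0], [4.0, 3.0]]

D = distance_matrix(fcst_objs, obs_objs)
score = trace(D)  # total dissimilarity of the matched pairs
```

The off-diagonal entries of D compare objects that are not paired with each other; the trace ignores them.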

[Figure: Mean Geopotential for Cluster 4; contour labels from 5280 to 5820]

Fourier power spectra

Compare 3h accumulated QPF to radar/gage analyses

Forecasts were linearly interpolated to same 4km grid as “Stage IV” analysis

Errico (1985) Fourier analysis code used. 2-d Fourier transform converted to 1-d by annular average

Fixed grid used for analysis located away from complex terrain of Western U.S.

Want to focus on features generated by model physics and dynamics, free from influence of orographically forced circulations
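The conversion of a 2-d spectrum to 1-d by annular averaging can be sketched as follows. This is not Errico's code: it is a brute-force transform on a small square periodic grid, it omits any detrending or windowing, and the rounding of the radial wavenumber to the nearest integer is an assumption.

```python
import cmath
import math

def power_spectrum_2d(field):
    """Brute-force 2-d DFT power |F(kx, ky)|^2 for a small square grid."""
    n = len(field)
    power = [[0.0] * n for _ in range(n)]
    for kx in range(n):
        for ky in range(n):
            total = 0j
            for x in range(n):
                for y in range(n):
                    total += field[x][y] * cmath.exp(-2j * math.pi * (kx * x + ky * y) / n)
            power[kx][ky] = abs(total) ** 2
    return power

def annular_average(power):
    """Average 2-d power over rings of equal (rounded) radial wavenumber."""
    n = len(power)
    sums, counts = {}, {}
    for kx in range(n):
        for ky in range(n):
            # Indices above n/2 represent negative wavenumbers
            fx = kx if kx <= n // 2 else kx - n
            fy = ky if ky <= n // 2 else ky - n
            k = round(math.hypot(fx, fy))
            if 1 <= k <= n // 2:
                sums[k] = sums.get(k, 0.0) + power[kx][ky]
                counts[k] = counts.get(k, 0) + 1
    return {k: sums[k] / counts[k] for k in sorted(sums)}

# Synthetic field: a single wave with two wavelengths across the domain
n = 8
field = [[math.cos(2 * math.pi * 2 * x / n) for y in range(n)] for x in range(n)]

spectrum = annular_average(power_spectrum_2d(field))
peak_k = max(spectrum, key=spectrum.get)
```

The 1-d spectrum peaks at the ring containing the wave's wavenumber, regardless of the wave's orientation on the grid.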