Ian Goodfellow, Staff Research Scientist, Google Brain

NIPS Workshop on ML for Creativity and Design

Long Beach, CA, 2017-12-08

GANs for Creativity and Design

3D-GAN

AC-GAN

AdaGAN

AffGAN

AL-CGAN

ALI

AMGAN

AnoGAN

ArtGAN

b-GAN

Bayesian GAN

BEGAN

BiGAN

BS-GAN

CGAN

CCGAN

CatGAN

CoGAN

Context-RNN-GAN

C-RNN-GAN

C-VAE-GAN

CycleGAN

DTN

DCGAN

DiscoGAN

DR-GAN

DualGAN

EBGAN

f-GAN

FF-GAN

GAWWN

GoGAN

GP-GAN

IAN

iGAN

IcGAN

ID-CGAN

InfoGAN

LAPGAN

LR-GAN

LS-GAN

LSGAN

MGAN

MAGAN

MAD-GAN

MalGAN

MARTA-GAN

McGAN

MedGAN

MIX+GAN

MPM-GAN

SN-GAN

Progressive GAN

DRAGAN

GAN-GP

(Goodfellow 2017)

no mention of realism

Generative Modeling

• Density estimation

• Sample generation

Training examples Model samples
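The two tasks named on this slide can be illustrated with a deliberately simple model. The sketch below assumes a one-dimensional Gaussian as the generative model, which is far simpler than a GAN and is not taken from the talk; the names `fit_gaussian`, `density`, and `generate` are hypothetical. Fitting and evaluating the density corresponds to density estimation, and drawing new values corresponds to sample generation.

```python
import math
import random
import statistics

def fit_gaussian(samples):
    """Density estimation: fit a 1-D Gaussian to the training examples."""
    mu = statistics.fmean(samples)
    sigma = statistics.pstdev(samples, mu)
    return mu, sigma

def density(x, mu, sigma):
    """Evaluate the fitted model's probability density at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def generate(n, mu, sigma, rng):
    """Sample generation: draw n new points from the fitted model."""
    return [rng.gauss(mu, sigma) for _ in range(n)]

rng = random.Random(0)
# Toy "training examples" drawn from a distribution the model does not know.
training_examples = [rng.gauss(2.0, 0.5) for _ in range(1000)]
mu, sigma = fit_gaussian(training_examples)
model_samples = generate(5, mu, sigma, rng)
print(f"fitted mu={mu:.3f}, sigma={sigma:.3f}")
print("model samples:", [round(s, 3) for s in model_samples])
```

A GAN addresses the sample-generation task directly and does not provide an explicit density function like `density` above.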

Adversarial Nets Framework

Real-data path: x sampled from data → differentiable function D → D(x) tries to be near 1

Generated-data path: input noise z → differentiable function G → x sampled from model → D

D tries to make D(G(z)) near 0; G tries to make D(G(z)) near 1

(Goodfellow et al., 2014)
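The opposing goals on this slide correspond to the value function in Goodfellow et al. (2014), V(D, G) = E_x[log D(x)] + E_z[log(1 − D(G(z)))], which D tries to maximize. The following is a minimal sketch of the resulting losses, assuming D outputs probabilities strictly between 0 and 1. The function names and the example probabilities are hypothetical, and the generator loss shown is the non-saturating variant (maximize log D(G(z))) rather than the literal minimax form.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Average loss for D: low when D(x) is near 1 and D(G(z)) is near 0."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss(d_fake):
    """Average non-saturating loss for G: low when D(G(z)) is near 1."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs (probability that a sample is real).
confident_d = discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2])
fooled_d = discriminator_loss(d_real=[0.5, 0.5], d_fake=[0.5, 0.5])
print("D loss when it separates real from fake:", confident_d)
print("D loss when it cannot tell them apart:  ", fooled_d)

print("G loss when D rejects its samples:", generator_loss([0.1, 0.2]))
print("G loss when D accepts its samples:", generator_loss([0.9, 0.8]))
```

In actual training, D and G are neural networks and these losses are minimized in alternation by gradient descent; the sketch only evaluates the losses for fixed outputs.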

Imagination

(Merriam Webster)

What is in this image?

(Yeh et al., 2016)

“not present to the senses”

Generative modeling reveals a face

(Yeh et al., 2016)

“not present to the senses”

Celebrities who have never existed

(Karras et al., 2017)

“never before wholly perceived in reality”

Is imperfect mimicry originality?

(Karras et al., 2017)

Creative Adversarial Networks

(Elgammal et al., 2017)

See this afternoon’s keynote

GANs for design

• A lower bar than “true creativity”

• A tool that assists a human designer

GANs for simulated training data

(Shrivastava et al., 2016)

Introspective Adversarial Networks

youtube

(Brock et al., 2016)

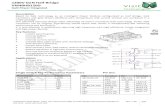

Image to Image Translation

Figure 13: Example results of our method on day→night, compared to ground truth (columns: Input, Ground truth, Output).

Figure 14: Example results of our method on automatically detected edges→handbags, compared to ground truth (columns: Input, Ground truth, Output).

(Isola et al., 2016)

Image-to-Image Translation with Conditional Adversarial Networks

Phillip Isola Jun-Yan Zhu Tinghui Zhou Alexei A. Efros

Berkeley AI Research (BAIR) Laboratory, University of California, Berkeley

{isola,junyanz,tinghuiz,efros}@eecs.berkeley.edu

[Figure 1 panels, each shown as an input/output pair: Labels to Facade, BW to Color, Aerial to Map, Labels to Street Scene, Edges to Photo, Day to Night]

Figure 1: Many problems in image processing, graphics, and vision involve translating an input image into a corresponding output image. These problems are often treated with application-specific algorithms, even though the setting is always the same: map pixels to pixels. Conditional adversarial nets are a general-purpose solution that appears to work well on a wide variety of these problems. Here we show results of the method on several. In each case we use the same architecture and objective, and simply train on different data.

Abstract

We investigate conditional adversarial networks as a general-purpose solution to image-to-image translation problems. These networks not only learn the mapping from input image to output image, but also learn a loss function to train this mapping. This makes it possible to apply the same generic approach to problems that traditionally would require very different loss formulations. We demonstrate that this approach is effective at synthesizing photos from label maps, reconstructing objects from edge maps, and colorizing images, among other tasks. As a community, we no longer hand-engineer our mapping functions, and this work suggests we can achieve reasonable results without hand-engineering our loss functions either.

Many problems in image processing, computer graphics, and computer vision can be posed as “translating” an input image into a corresponding output image. Just as a concept may be expressed in either English or French, a scene may be rendered as an RGB image, a gradient field, an edge map, a semantic label map, etc. In analogy to automatic language translation, we define automatic image-to-image translation as the problem of translating one possible representation of a scene into another, given sufficient training data (see Figure 1). One reason language translation is difficult is because the mapping between languages is rarely one-to-one – any given concept is easier to express in one language than another. Similarly, most image-to-image translation problems are either many-to-one (computer vision) – mapping photographs to edges, segments, or semantic labels, or one-to-many (computer graphics) – mapping labels or sparse user inputs to realistic images. Traditionally, each of these tasks has been tackled with separate, special-purpose machinery (e.g., [7, 15, 11, 1, 3, 37, 21, 26, 9, 42, 46]), despite the fact that the setting is always the same: predict pixels from pixels. Our goal in this paper is to develop a common framework for all these problems.
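The idea that the network learns "a loss function to train this mapping" can be sketched as follows: the discriminator scores an (input image, output image) pair, and that score serves as the generator's loss. This is a rough illustration under stated assumptions, not the authors' implementation. The excerpt above does not describe the exact objective; the added L1 reconstruction term follows the full paper as best understood here, and the function names, the weight `lam`, and the toy pixel values are all hypothetical.

```python
import math

def l1_distance(output, target):
    """Mean absolute pixel difference between two flattened images."""
    return sum(abs(o - t) for o, t in zip(output, target)) / len(output)

def conditional_discriminator_loss(d_real_pair, d_fake_pair):
    """D sees (input, output) pairs: it should score real pairs near 1
    and generated pairs near 0."""
    return -(math.log(d_real_pair) + math.log(1.0 - d_fake_pair))

def conditional_generator_loss(d_fake_pair, output, target, lam):
    """The learned (adversarial) loss plus an assumed L1 term weighted by lam."""
    adversarial = -math.log(d_fake_pair)
    return adversarial + lam * l1_distance(output, target)

# Toy flattened "images"; d_fake_pair is a hypothetical discriminator score
# for the pair (input image, generated output).
target = [0.0, 0.5, 1.0, 0.5]
generated = [0.1, 0.4, 0.9, 0.5]
loss = conditional_generator_loss(d_fake_pair=0.3, output=generated,
                                  target=target, lam=10.0)
print("generator loss:", loss)
```

Because the adversarial term comes from a trained discriminator and is not hand-written, the same code path applies to labels-to-facade, edges-to-photo, or day-to-night by changing only the training data.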

arXiv:1611.07004v1 [cs.CV] 21 Nov 2016

Unsupervised Image-to-Image Translation

(Liu et al., 2017)

Day to night

CycleGAN

(Zhu et al., 2017)

vue.ai

vue.ai

✓

✓

Future directions

• Beyond realism: train the discriminator to estimate how appealing an artifact is, in addition to or instead of modeling whether the design is statistically similar to past designs

• Extreme personalization: highly automate design to generate artifacts to fit each customer or appeal to each customer’s tastes

• GAN-based simulators to help test artifacts being designed (vue.ai is a first step in this direction)

Conclusion

• GANs are useful tools for design

• GANs have a form of imagination

• It is debatable whether GANs are “original” enough to count as truly creative. Though designed to mimic a pattern perfectly, they can be used to do more than that.
