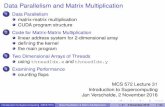


Partially Reconfigurable Matrix Multiplication for Area and Time Efficiency on FPGAs

Luo Jianwen and Jong Ching Chuen
School of Electrical and Electronic Engineering, Nanyang Technological University
Nanyang Avenue, Singapore

Abstract

This paper presents a novel architecture for matrix multiplication implemented on reconfigurable hardware with a partially reconfigurable feature. The proposed design significantly reduces the area and achieves the minimum number of computation cycles for $n \times n$ matrix multiplication. Compared with the linear array design [1], the area of our design is reduced by 72% to 81%, while the AT metric (the product of area and latency) is reduced by 40% to 58% for matrix sizes between $3 \times 3$ and $48 \times 48$. The versatility of our design is demonstrated by different parameterisable instantiations that cater for implementations with various time and area requirements. Partial reconfiguration allows us to reload the design contents with minimal configuration overhead. The performance of our design is even better for larger matrices.

1. Introduction

Matrix multiplication is an essential operation in a wide range of applications, such as graphics, video, image, robotics and signal processing. These applications need high performance as well as cost-efficient designs. Reconfigurable systems offer potential for computation acceleration owing to the software-like programmability of their parallel processing units. Run-time reconfiguration opens a novel research area in which reconfigurable hardware further speeds up processing by overlapping the configuration overhead with execution time. This provides uninterrupted processing even while the circuits are being changed, and greatly improves logic density through time-shared logic.

Many existing schemes for matrix multiplication on FPGAs address area-time tradeoffs to achieve the maximum processing speed. Partially reconfigurable devices offer the ability to change the design implementation without stopping the whole execution process. To the best of our knowledge, none of the existing matrix multiplication designs is run-time configurable. In this paper we present a novel matrix multiplier with a partially reconfigurable feature, which greatly improves the area-latency tradeoff compared with existing designs.

The linear array design by Jang et al. [1] implemented matrix multiplication on the Xilinx Virtex-II device. Their design adopted a systolic architecture and focused on minimizing the area-latency tradeoff, achieving great improvement over the state-of-the-art FPGA-based designs [2] and [3]. For a $4 \times 4$ matrix, it achieved 52% and 69% lower area/speed metrics, respectively, and saved up to 46% of the silicon compared with the design in [4], while reaching a maximum frequency of 166 MHz.

The Xilinx reference design [5] for $3 \times 3$ matrix multiplication maximizes the pipelined data flow by multi-pumping the embedded multipliers at nine times the clock frequency of the surrounding logic, running at up to 154 MHz. We use this design as our benchmark for matrix multiplication implemented on the Xilinx Virtex-II device.

The Xilinx CORE Generator tool [5] provides many parameterisable library cores for fast design realization, with guaranteed high performance and density. We implemented a uniprocessor for matrix multiplication with this tool and compared it with our proposed design. The uniprocessor runs at 113 MHz when using the MAC v3.0 core [5].

The rest of this paper is organized as follows. Section 2 describes the proposed matrix multiplier architecture for AT efficiency. Section 3 presents the FPGA implementation, the comparison with existing designs, and the content partial reconfiguration used in our design. Section 4 concludes the paper.

2. Design architecture

Since Virtex-II devices incorporate large amounts of 18 Kbit Block SelectRAM with versatile configuration options, we can initialize the memory cells with an operand matrix in the same way as we parameterize registers. The proposed matrix multiplier uses two chunks of memory. Figure 1 shows the architecture of the proposed processing element (PE). Memory B stores column $j$ of matrix $B$, and memory C stores the partial and final products of column $j$. Compared with previous techniques, our design significantly reduces the number of registers needed for data movement: the Linear Array design [1] requires $4n$ registers, while our design uses only $n$. The Linear Array design needs $n^2 + 2n$ cycles for an $n \times n$ matrix multiplication. With run-time configurable parameters and parallel processors, we save $n$ cycles in our systolic mode and $2n$ cycles in the parallel mode.

Proceedings of the EUROMICRO Systems on Digital System Design (DSD'04), 0-7695-2203-3/04 \$20.00 IEEE

Figure 1. Architecture of $\mathrm{PE}_j$ in the Proposed Design

Based on the proposed PE architecture, a number of lemmas are derived to show the performance of the proposed multiplier. Lemma 1 gives the minimum latency of $n \times n$ matrix multiplication with $n$ MACs (multiplier-accumulators) and with a uniprocessor. Lemma 2 improves the Linear Array algorithm for matrix multiplication with respect to both the number of registers and the number of computation cycles. Corollaries 1 and 2 extend Lemma 2 to demonstrate that the proposed design meets the latency bound with $n$ MACs and with one MAC, respectively. Lemma 3 addresses matrix decomposition when the matrix size is larger than the number of available PEs, and gives a quantitative analysis of the tradeoff between area and latency.

Lemma 1: An $n \times n$ matrix multiplication can never be performed in fewer than $n^2$ cycles with $n$ multipliers, or in fewer than $n^3$ cycles with one multiplier.

Proof: The complexity of $n \times n$ matrix multiplication is $O(n^3)$. Any element of the product $C = AB$ is computed as

$$c_{ij} = \sum_{k=1}^{n} a_{ik} b_{kj},$$

where $a_{ik}$, $b_{kj}$ and $c_{ij}$ are the elements of the $n \times n$ matrices $A$, $B$ and $C$, respectively. Producing each element of $C$ therefore requires $n$ multiplications, and computing all $n^2$ elements of $C$ requires $n \cdot n^2 = n^3$ multiplications. With $n$ multipliers working in parallel, the multiplications alone take $n^3 / n = n^2$ cycles. Note that this count ignores the latency of the pipelined additions and any cycles spent on data movement. Hence the minimum time for an $n \times n$ matrix multiplication is $n^2$ cycles with $n$ multipliers and, by the same argument, $n^3$ cycles with one multiplier. These are the lower bounds on the latency of $n \times n$ matrix multiplication.
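The counting argument can be checked mechanically. The sketch below is written in Python for illustration, and its function names are hypothetical. It counts the products in the definition of $c_{ij}$ and divides by the number of multipliers, assuming each multiplier completes one product per cycle and ignoring additions and data movement, as the proof does.

```python
import math


def count_multiplications(n: int) -> int:
    """Count the scalar products a_ik * b_kj needed by c_ij = sum_k a_ik * b_kj."""
    count = 0
    for _i in range(n):          # every row of C
        for _j in range(n):      # every column of C
            for _k in range(n):  # n products per element c_ij
                count += 1
    return count


def min_multiply_cycles(n: int, multipliers: int) -> int:
    """Lower bound on cycles if each multiplier performs one product per cycle.

    Additions and data movement are ignored, as in the proof of Lemma 1.
    """
    return math.ceil(count_multiplications(n) / multipliers)


for n in (3, 4, 8):
    # n multipliers give n^2 cycles; one multiplier gives n^3 cycles
    print(n, min_multiply_cycles(n, n), min_multiply_cycles(n, 1))
```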

Lemma 2: An $n \times n$ matrix multiplication can be performed in $n^2 + n$ cycles using $n$ PEs, each with one MAC, one register, a Block SelectRAM of $2n$ words and one I/O port.

Proof: The PE in Figure 1 is devised to compute $c_{ij} = \sum_{k=1}^{n} a_{ik} b_{kj}$ for all $i, j$, where $a_{ik}$, $b_{kj}$ and $c_{ij}$ are the elements of the $n \times n$ matrices $A$, $B$ and $C$, and $\mathrm{PE}_j$ denotes the $j$-th PE in the structure. $\mathrm{PE}_j$ computes column $j$ of matrix $C$, i.e. $c_{1j}, c_{2j}, \ldots, c_{nj}$, which is stored in Block SelectRAM part C. The input of $\mathrm{PE}_j$ is connected to the output of $\mathrm{PE}_{j-1}$, and the output of $\mathrm{PE}_j$ is the input of the next array element $\mathrm{PE}_{j+1}$. In phase $k$, row $k$ of matrix $A$ ($a_{ik}$, $1 \le i \le n$) traverses $\mathrm{PE}_1, \mathrm{PE}_2, \mathrm{PE}_3, \ldots, \mathrm{PE}_n$ in order. Column $j$ of matrix $B$ resides in the Block SelectRAM of $\mathrm{PE}_j$, whose contents can be partially reconfigured. This scheme allows $\mathrm{PE}_j$ to update $c'_{ij} \leftarrow c'_{ij} + a_{ik} b_{kj}$ every clock cycle, where $c'_{ij}$ denotes the intermediate value of $c_{ij}$, so it takes $n$ cycles to calculate each element of matrix $C$. The MAC in $\mathrm{PE}_j$ does not start until the first element of matrix $A$, $a_{11}$, arrives. Thus $\mathrm{PE}_j$ starts computing $j$ cycles after the ready signal is asserted and completes at cycle $j + n^2$. The result is therefore available once the last element $c_{nn}$ in $\mathrm{PE}_n$ is ready, i.e. after the $(n^2 + n)$-th cycle.
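A minimal behavioural sketch of the Lemma 2 data flow is shown below. It is not the authors' RTL. The timing model is an assumption chosen to match the proof: element $t$ (0-based) of $A$ reaches $\mathrm{PE}_j$ (1-based) in cycle $j + t$ because each PE forwards its operand to the next PE through one register, and a MAC result is ready one cycle after its operand arrives. $A$ is streamed in row-major order, which is also the order the Lemma 3 pseudo code uses within a sub-matrix. Since partial sums are kept in memory C, the cycle count does not depend on that choice. The name `systolic_matmul` is hypothetical.

```python
def systolic_matmul(A, B):
    """Cycle-level sketch of the linear (systolic) mode of Lemma 2.

    Returns (C, done), where done is the cycle at which the last result is ready
    under the assumed one-register-per-hop, one-cycle-MAC timing model.
    """
    n = len(A)
    mem_b = [[B[k][j] for k in range(n)] for j in range(n)]  # memory B of PE_j: column j of B
    mem_c = [[0] * n for _ in range(n)]                      # memory C of PE_j: column j of C
    stream = [(i, k) for i in range(n) for k in range(n)]    # row-major order of A
    done = 0
    for cycle in range(1, n * n + n):
        for j in range(1, n + 1):
            t = cycle - j                  # index of the element of A now in PE_j
            if 0 <= t < n * n:
                i, k = stream[t]
                mem_c[j - 1][i] += A[i][k] * mem_b[j - 1][k]  # c'_ij += a_ik * b_kj
                done = max(done, cycle + 1)                   # result ready one cycle later
    C = [[mem_c[j][i] for j in range(n)] for i in range(n)]
    return C, done


A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
print(systolic_matmul(A, B))  # ([[19, 22], [43, 50]], 6)
```

For $n = 2$ the last result is ready at cycle $n^2 + n = 6$. The final write happens in $\mathrm{PE}_n$, which receives the last element of $A$ last.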

Corollary 1: An $n \times n$ matrix multiplication can be performed in $n^2$ cycles using $n$ PEs, each with one MAC, one register, one Block SelectRAM of $2n$ words and one I/O port.

Proof: The only change from Lemma 2 is the way matrix $A$ traverses the array. Instead of passing through $\mathrm{PE}_1, \mathrm{PE}_2, \mathrm{PE}_3, \ldots, \mathrm{PE}_n$ in turn, the elements of matrix $A$ travel over the data bus and are fed into every PE simultaneously. We instantiate all the PEs with the parameters of $\mathrm{PE}_1$, but with a different column of matrix $B$ in Block SelectRAM part B: $\mathrm{PE}_j$ holds the $j$-th column of matrix $B$. This allows all the PEs to start at the same time and to finish with the latency of $\mathrm{PE}_1$ in Lemma 2.
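The broadcast variant can be sketched the same way. This is a behavioural model under an assumed timing, not the authors' implementation. One element of $A$ (row-major) is on the shared bus per cycle, starting at cycle 0. Every PE consumes it in that same cycle, and results are ready one cycle later. Because no PE waits for a neighbour, the last result is ready after $n^2$ cycles. The name `broadcast_matmul` is hypothetical.

```python
def broadcast_matmul(A, B):
    """Cycle-level sketch of Corollary 1: A is broadcast to all n PEs at once.

    Returns (C, done) under the assumed model: element t of A is on the bus in
    cycle t and the MAC results are ready one cycle later.
    """
    n = len(A)
    C = [[0] * n for _ in range(n)]
    done = 0
    for cycle in range(n * n):
        i, k = divmod(cycle, n)       # row-major position of the element on the bus
        a_ik = A[i][k]
        for j in range(n):            # all PEs consume a_ik in the same cycle
            C[i][j] += a_ik * B[k][j]  # PE_j holds column j of B
        done = cycle + 1
    return C, done


print(broadcast_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # ([[19, 22], [43, 50]], 4)
```

In the sketch, the inner loop over `j` stands for hardware that operates in parallel. It takes one cycle, not $n$.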

Corollary 2: An $n \times n$ matrix multiplication can be performed in $n^3$ cycles using one PE with one MAC, one register, a Block SelectRAM of $2n^2$ words and one I/O port.


Proof: This is the same as the uniprocessor case: an $n \times n$ matrix multiplication can also be performed using only $\mathrm{PE}_1$. We parameterize the contents of Block SelectRAM part B with matrix $B$ in column order. Matrix $A$ is fed into $\mathrm{PE}_1$ $n$ times, producing one column of $C$ per pass. Hence $n^3$ cycles are needed in this case.
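A short sketch of this single-PE schedule follows. It is a behavioural model, and the name `uniprocessor_matmul` is hypothetical. Memory B holds all of $B$ in column order and memory C holds all of $C$, giving $2n^2$ words. Each pass streams $A$ once, at one MAC per cycle, and produces one column of $C$.

```python
def uniprocessor_matmul(A, B):
    """Sketch of Corollary 2: one PE, B stored column by column, A streamed n times.

    Returns (C, cycles, words), where cycles counts one MAC per cycle and
    words is the Block SelectRAM size used for memories B and C.
    """
    n = len(A)
    mem_b = [B[k][j] for j in range(n) for k in range(n)]  # column order: n^2 words
    mem_c = [0] * (n * n)                                  # all of C: n^2 words
    cycles = 0
    for j in range(n):                  # pass j produces column j of C
        for i in range(n):
            for k in range(n):          # row-major stream of A
                mem_c[j * n + i] += A[i][k] * mem_b[j * n + k]
                cycles += 1             # one MAC per cycle
    C = [[mem_c[j * n + i] for j in range(n)] for i in range(n)]
    return C, cycles, len(mem_b) + len(mem_c)


print(uniprocessor_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # ([[19, 22], [43, 50]], 8, 8)
```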

[Figure 2(b): the $8 \times 8$ matrix $B$ ($b_{11}$ to $b_{88}$) as configured in the Block SelectRAMs of $\mathrm{PE}_1$ to $\mathrm{PE}_4$]

Lemma 3: An $n \times n$ matrix multiplication can be performed in $rn^2$ cycles using $n/r$ PEs, each with one MAC, one register, one Block SelectRAM of $2n$ words and one I/O port, where $n$ is divisible by $r$.

Proof: An $n \times n$ matrix multiplication can be decomposed into $r^3$ multiplications of $(n/r) \times (n/r)$ matrices. Applying Corollary 1 with $n$ replaced by $n/r$, each sub-multiplication takes $(n/r)^2$ cycles on $n/r$ PEs, so the total is $r^3 (n/r)^2 = rn^2$ cycles. The matrix operands are managed as follows. Matrix $A$ is fed in with the rows of sub-matrices as the major sequence and row order within each sub-matrix as the minor sequence. Matrix $B$ resides in the Block SelectRAM, with the columns of sub-matrices as the major order and column order within each sub-matrix as the minor order. For example, if we decompose an $8 \times 8$ matrix multiplication with a factor of $r = 2$, the matrices are processed in the arrow sequence depicted in Figure 2.

Figure 2. Decomposition of Matrix Multiplication in the Proposed Scheme

The Block SelectRAMs of the PEs are configured in the order shown in Figure 2(b). The way matrix $A$ is fed in is illustrated by the following pseudo code:

    For major-row_count = 1 to r do
        For major-row = 1 to r do
            For major-column = 1 to r do
                For minor-row = 1 to n/r do
                    For minor-column = 1 to n/r do
                        a_ik = A_ij
                    End for   // minor-column wraps to 1 after reaching n/r
                End for       // minor-row wraps to 1 after reaching n/r
            End for           // major-column wraps to 1 after reaching r
        End for               // major-row wraps to 1 after reaching r
    End for                   // stop after major-row_count reaches r

where a_ik is the register of $a_{ik}$ in Figure 1 and A_ij is the current element of matrix $A$ ready to be fed in.
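A runnable rendering of the pseudo code is sketched below, together with a check of the $rn^2$ cycle count. Two points are our own interpretation, and the paper does not state them. First, each outer `major_row_count` pass is taken to serve one block-column $J$ of $B$ and $C$. Second, the $n/r$ PEs are taken to work in broadcast mode (Corollary 1), so that $\mathrm{PE}_p$ holds column $Jm + p$ of $B$ ($n$ words) and the same column of $C$ ($n$ words), matching the $2n$-word Block SelectRAM of Lemma 3. The names `feed_order` and `blocked_matmul` are hypothetical.

```python
def feed_order(n, r):
    """Yield (pass_J, i, k): the order in which elements a_ik of A are fed (0-based)."""
    m = n // r                                   # sub-matrix size n/r
    for major_row_count in range(r):             # assumed: one pass per block-column of B
        for major_row in range(r):               # block-row of A
            for major_col in range(r):           # block-column of A
                for minor_row in range(m):       # row order inside the sub-matrix
                    for minor_col in range(m):
                        yield (major_row_count,
                               major_row * m + minor_row,
                               major_col * m + minor_col)


def blocked_matmul(A, B, r):
    """Sketch of Lemma 3: n/r PEs in broadcast mode, one element of A per cycle."""
    n = len(A)
    assert n % r == 0, "Lemma 3 assumes n is divisible by r"
    m = n // r
    C = [[0] * n for _ in range(n)]
    cycles = 0
    for J, i, k in feed_order(n, r):
        for p in range(m):                       # the m PEs update in parallel
            j = J * m + p                        # PE_p holds column j of B and of C
            C[i][j] += A[i][k] * B[k][j]
        cycles += 1                              # one bus element per cycle
    return C, cycles
```

For the $8 \times 8$, $r = 2$ example of Figure 2, `blocked_matmul` uses 4 PEs and reports $2 \cdot 8^2 = 128$ cycles.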

Lemma 3 caters for the area-latency tradeoff. A smaller value of $n/r$ reduces the number of PEs, resulting in less area. However, it increases the number of cycles needed to complete the matrix multiplication.

[Figure 2(a): the $8 \times 8$ matrix $A$ ($a_{11}$ to $a_{88}$), with arrows labelled First and Next showing the order in which its elements are fed]

3. FPGA implementation

3.1 Performance Comparison

The matrix multiplier described above was implemented on a Xilinx Virtex-II device, and its performance in terms of area and latency was evaluated.

We define the performance metric as

$$\mathrm{Perf} = \frac{n^3}{\text{Slices} \times \text{Latency}},$$

where $n$ is the matrix size, and Slices and Latency are the area consumption and the computing time, respectively. By using the Slices × Latency (AT) metric for evaluation, we take into account the effect of an increasing number of processing elements and the area differences between various types of memory. This is especially relevant in an era of deep pipelines and huge caches, where small performance improvements are bought at the cost of dramatic increases in area.

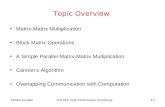

Figure 3 shows the performance of the three existing designs against our proposed design for various sizes of matrix multiplication. Under the AT metric, the proposed design shows a significant improvement over the existing modules. The comparable linear array design runs almost twice as fast as the proposed module, but its performance deteriorates beyond $n = 15$ because of its significant slice consumption beyond that point.

Table 1 compares the area and latency of the different matrix multiplication modules: the Xilinx reference design runs at 154 MHz, the module generated by CORE Generator at 113 MHz, the linear array at 166 MHz, and the proposed module at 74 MHz. Our design runs at a relatively low clock rate because of its non-pipelined architecture, and this part needs further optimization. Note that the modules by CORE Generator and by Xilinx use a single multiplier, so their area is the same for all matrix sizes.

[Figure 3 plot: $\mathrm{Perf} = n^3/(\text{Slices} \times \text{Latency})$ versus matrix size $n$ for the Xilinx, CoreGen, Linear Array and Proposed designs]

Table 1. Comparison of 3 existing designs against the proposed design for various sizes of matrix multiplication

(a) Area Comparison

| Matrix size | A_Xilinx (slices) | A_CoreGen (slices) | A_LinearArray (slices) | A_Proposed (slices) |
|---|---|---|---|---|
| 3 × 3 | 207 | 158 | 393 | 110 |
| 6 × 6 | 207 | 158 | 786 | 219 |
| 9 × 9 | 207 | 158 | 1179 | 334 |
| 12 × 12 | 207 | 158 | 1572 | 446 |
| 15 × 15 | 207 | 158 | 1965 | 558 |
| 24 × 24 | 207 | 158 | 3912 | 893 |
| 48 × 48 | 207 | 158 | 9360 | 1798 |

Figure 3. Performance Evaluation of Matrix Multiplication with Various Sizes

Lemma 2 and its corollaries show that our design can be configured as different types of processor according to specific requirements. By instantiating all PEs with the column parameter of the first PE, we obtain a cluster of vector multipliers that compute in parallel and achieve the maximum speedup of any $n$-processor mode, with $n^2$ computation cycles. Each vector multiplier can also be used individually as a uniprocessor for matrix-vector multiplication. Table 2 shows the latency of the proposed module when configured as a uniprocessor, a systolic array and an optimal parallel module.

(b) Latency Comparison

| Matrix size | L_Xilinx (cycles / µs) | L_CoreGen (cycles / µs) | L_LinearArray (cycles / µs) | L_Proposed (cycles / µs) |
|---|---|---|---|---|
| 3 × 3 | 45 / 0.292 | 45 / 0.398 | 16 / 0.096 | 13 / 0.175 |
| 6 × 6 | 360 / 2.337 | 288 / 2.549 | 49 / 0.295 | 43 / 0.581 |
| 9 × 9 | 1215 / 7.890 | 891 / 7.885 | 100 / 0.602 | 91 / 1.229 |
| 12 × 12 | 2280 / 14.805 | 2016 / 17.841 | 169 / 1.018 | 157 / 2.121 |
| 15 × 15 | 5625 / 36.526 | 3825 / 33.850 | 256 / 1.542 | 241 / 3.256 |
| 24 × 24 | 23040 / 149.610 | 14976 / 132.531 | 625 / 3.765 | 601 / 8.121 |
| 48 × 48 | 184320 / 1196.883 | 115200 / 1019.469 | 2401 / 14.464 | 2353 / 31.797 |
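The percentages quoted in the abstract can be recomputed from the linear array and proposed columns of Table 1. In the sketch below, AT is taken as slices × latency in µs. The µs values equal the cycle counts divided by the quoted clock frequencies (for example, 13 cycles at 74 MHz is about 0.175 µs). The data are copied from the table, and the helper names are hypothetical.

```python
# Linear Array and Proposed columns of Table 1 (area in slices, latency in microseconds).
SIZES = [3, 6, 9, 12, 15, 24, 48]
AREA = {
    "LinearArray": [393, 786, 1179, 1572, 1965, 3912, 9360],
    "Proposed": [110, 219, 334, 446, 558, 893, 1798],
}
LATENCY_US = {
    "LinearArray": [0.096, 0.295, 0.602, 1.018, 1.542, 3.765, 14.464],
    "Proposed": [0.175, 0.581, 1.229, 2.121, 3.256, 8.121, 31.797],
}


def perf(n: int, slices: float, latency_us: float) -> float:
    """Perf = n^3 / (Slices x Latency), the metric plotted in Figure 3."""
    return n ** 3 / (slices * latency_us)


def reduction_pct(new: float, old: float) -> float:
    """Percentage by which `new` is smaller than `old`."""
    return 100.0 * (1.0 - new / old)


area_red = [reduction_pct(p, l) for p, l in zip(AREA["Proposed"], AREA["LinearArray"])]
at_red = [
    reduction_pct(pa * pt, la * lt)  # AT = slices x latency
    for pa, pt, la, lt in zip(AREA["Proposed"], LATENCY_US["Proposed"],
                              AREA["LinearArray"], LATENCY_US["LinearArray"])
]

for n, a, t, pa, pt in zip(SIZES, area_red, at_red, AREA["Proposed"], LATENCY_US["Proposed"]):
    print(f"n={n:2d}  area -{a:4.1f}%  AT -{t:4.1f}%  Perf(proposed)={perf(n, pa, pt):.2f}")
print(f"area: {min(area_red):.0f}%-{max(area_red):.0f}%, AT: {min(at_red):.0f}%-{max(at_red):.0f}%")
# prints (last line): area: 72%-81%, AT: 40%-58%
```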

Table 2. Latency for Various Sizes of Matrix Multiplication Using the Versatile Configurations of the Proposed Module

| Matrix size | Uniprocessor (cycles) | Systolic array (cycles) | Parallel (cycles) |
|---|---|---|---|
| 3 × 3 | 28 | 13 | 10 |
| 6 × 6 | 217 | 43 | 37 |
| 9 × 9 | 730 | 91 | 82 |
| 12 × 12 | 1729 | 157 | 145 |
| 15 × 15 | 3376 | 241 | 226 |
| 24 × 24 | 13825 | 601 | 577 |
| 48 × 48 | 110593 | 2353 | 2305 |
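The entries of Table 2 follow a simple pattern. Each is one cycle more than the count of Corollary 2 ($n^3$), Lemma 2 ($n^2 + n$) or Corollary 1 ($n^2$), respectively. The extra cycle is our reading of the data, possibly a start-up cycle, and is not stated in the paper. The check below encodes that assumption.

```python
# Table 2: n -> (uniprocessor, systolic array, parallel) latency in cycles.
TABLE2 = {
    3: (28, 13, 10),
    6: (217, 43, 37),
    9: (730, 91, 82),
    12: (1729, 157, 145),
    15: (3376, 241, 226),
    24: (13825, 601, 577),
    48: (110593, 2353, 2305),
}


def bound_plus_one(n: int) -> tuple:
    """(n^3, n^2 + n, n^2), each plus one cycle.

    The +1 is an assumption read off the table, not a statement from the paper.
    """
    return (n ** 3 + 1, n ** 2 + n + 1, n ** 2 + 1)


mismatches = {n: row for n, row in TABLE2.items() if bound_plus_one(n) != row}
print(mismatches or "all Table 2 entries match")
```

The systolic-array column equals the L_Proposed cycle counts in Table 1(b), which is consistent with that evaluation using the systolic mode.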


Note that the performance evaluation in Table 1 and Figure 3 was carried out by instantiating the proposed module in the systolic array mode; the parallel mode can achieve an even greater improvement.


linearly distributed along the Block Ram columns which

Figure 4 shows the area-latency tradeoffs stated in Lemma 3 for $n = 48$. For matrices of other sizes, the trend remains the same. The chart shows that area and latency are inversely proportional.
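The inverse proportionality follows from Lemma 3. With $n/r$ PEs and $rn^2$ cycles, the product is $(n/r) \cdot rn^2 = n^3$, independent of $r$. If area grows roughly linearly with the number of PEs (an assumption; fixed overheads make it only approximate), area × latency stays roughly constant. The sketch below enumerates the design points for $n = 48$. The name `lemma3_points` is hypothetical.

```python
def lemma3_points(n: int):
    """(r, PEs, cycles) for every factor r that divides n, per Lemma 3."""
    return [(r, n // r, r * n * n) for r in range(1, n + 1) if n % r == 0]


for r, pes, cycles in lemma3_points(48):
    # PEs * cycles is always n^3 = 110592 for n = 48
    print(f"r={r:2d}  PEs={pes:2d}  cycles={cycles:6d}  PEs*cycles={pes * cycles}")
```

The extreme points are $r = 1$ (48 PEs, 2304 cycles, the Corollary 1 case) and $r = 48$ (1 PE, 110592 cycles, the Corollary 2 case).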

Figure 4. Area-Latency Tradeoffs for Matrix Multiplication ($n = 48$): area (slices) versus latency (cycles)

3.2 Partial Reconfiguration

One of the novel features of our architecture is partial reconfigurability. As the target Virtex-II FPGA supports partial reconfiguration, we can change parts of our design through independent configuration bitstreams, modifying only the desired regions of the silicon without stopping processing or reprogramming the whole device. This opens a design space in which the cost of reconfiguration is alleviated by the reduced size of the bitstream.

The contents of our matrix multiplier can be changed dynamically by partially reconfiguring the memory cells of the Block RAM embedded in the Virtex-II device. In this way, the matrix $B$ multiplicand can be modified at run time without rerunning synthesis and the whole design flow.
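At the behavioural level, content-only reconfiguration means the PE array and its datapath stay fixed while the words in each PE's memory B are replaced. The sketch below models only that effect. It does not model bitstreams, configuration frames or any Xilinx tool flow, and the class and method names are hypothetical.

```python
class PEArray:
    """Hypothetical model: n PEs with a fixed datapath and rewritable memory B."""

    def __init__(self, B):
        self.n = len(B)                 # number of PEs is fixed at build time
        self.reload_multiplicand(B)

    def reload_multiplicand(self, B):
        # Stand-in for partially reconfiguring the Block RAM contents only:
        # PE_j's memory B receives column j of the new multiplicand.
        assert len(B) == self.n, "changing the matrix size would need a new PE array"
        self.mem_b = [[B[k][j] for k in range(self.n)] for j in range(self.n)]

    def multiply(self, A):
        n = self.n
        return [[sum(A[i][k] * self.mem_b[j][k] for k in range(n)) for j in range(n)]
                for i in range(n)]


array = PEArray([[1, 0], [0, 1]])
print(array.multiply([[1, 2], [3, 4]]))    # [[1, 2], [3, 4]]
array.reload_multiplicand([[5, 6], [7, 8]])  # new B, same array
print(array.multiply([[1, 2], [3, 4]]))    # [[19, 22], [43, 50]]
```

The assertion reflects the limitation stated next: in the current design only the multiplicand contents change, not the number of PEs.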

At this point, only the contents of the matrix multiplicand can be partially reconfigured. We will extend partial reconfigurability to other components, such as the clock templates, the data width, and the number of processing elements for different matrix sizes.

Figure 5 shows the complete implementation of the $48 \times 48$ matrix multiplication. The rectangles designate the PEs, each of which contains a Block RAM whose contents can be partially reconfigured.

Figure 5. $48 \times 48$ Matrix product implementation on Virtex-II 4000, PEs distribution

4. Conclusion

A computation- and area-efficient architecture for matrix multiplication is proposed, featuring instantiation versatility and content partial reconfiguration. We demonstrate its improved area-latency tradeoff by comparing its performance with existing designs.

References

[1] Ju-Wook Jang, Seonil Choi, and Viktor K. Prasanna, "Area and Time Efficient Implementation of Matrix Multiplication on FPGAs", The First IEEE International Conference on Field Programmable Technology (FPT), December 2002.

[2] A. Amira, A. Bouridane, and P. Milligan, "Accelerating Matrix Product on Reconfigurable Hardware for Signal Processing", Field-Programmable Logic and Applications (FPL), pp. 101-111, 2001.

[3] O. Mencer, M. Morf, and M. Flynn, "PAM-Blox: High Performance FPGA Design for Adaptive Computing", IEEE Symposium on FPGAs for Custom Computing Machines, pp. 167-174, 1998.

[4] V. K. Prasanna Kumar and Y. Tsai, "On Synthesizing Optimal Family of Linear Systolic Arrays for Matrix Multiplication", IEEE Transactions on Computers, vol. 40, no. 6, 1991.

[5] Xilinx Application Note XAPP284, Virtex-II Series, http://www.xilinx.com, 2003.