Marc Strickert and Barbara Hammer- Merge SOM for temporal data

Transcript of Marc Strickert and Barbara Hammer- Merge SOM for temporal data

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

1/37

Merge SOM for temporal data

Marc Strickert

Pattern Recognition Group,Institute of Plant Genetics and Crop Plant Research Gatersleben

Barbara Hammer

Institute of Computer Science,Technical University of Clausthal

Abstract

The recent merging self-organizing map (MSOM) for unsupervised sequence process-ing constitutes a fast, intuitive, and powerful unsupervised learning model. In thispaper, we investigate its theoretical and practical properties. Particular focus is puton the context established by the self-organizing MSOM, and theoretic results onthe representation capabilities and the MSOM training dynamic are presented. Forpractical studies, the context model is combined with the neural gas vector quan-tizer to obtain merging neural gas (MNG) for temporal data. The suitability ofMNGis demonstrated by experiments with artificial and real-world sequences with one-

and multi-dimensional inputs from discrete and continuous domains.

Key words: Self-organizing map, temporal Kohonen map, sequence processing,recurrent models, neural gas, fractal encoding, finite automata, speakeridentification, DNA splice site detection.

1 Introduction

The design of recursive data models is a challenging task for dealing withstructured data. Sequences, as a special case, are given by series of tempo-rally or spatially connected observations DNA chains and articulatory timeseries are two interesting examples of sequential data. Sequential data play amajor role in human processing of biological signals since virtually all visual,

Email addresses: [email protected] (Marc Strickert),

[email protected] (Barbara Hammer).

Preprint submitted to Elsevier Science 19 October 2004

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

2/37

auditory, or sensorimotor data are given as sequences of time-varying stimuli.Thus, biologically plausible and powerful neural sequence processing modelsare interesting from a practical as well as from a cognitive point of view.

Unsupervised self-organization is a particularly suitable paradigm for biologi-

cal learning because it does not rely on explicit teacher information and as ituses intuitive primitives like neural competition and cooperation. Kohonensself-organizing map (SOM) is a well-known method for projecting vectors of afixed, usually high dimension onto a low-dimensional grid, this way enablinganalysis and visualization [11]. In contrast to the SOM, the neural gas (NG)algorithm yields representations based on prototypes in the data space, whichcooperate in a data-driven topology and yield small quantization errors [13].However, in their original formulations, SOM and NG have been proposed forprocessing real-valued vectors, not for sequences.

Given sequential data, vector representations usually exist or can be found forsingle sequence entries. For vector quantizer models, such as the mentionedSOM and NG, temporal or spatial contexts within data series are often real-ized by means of data windows. These windows are constructed as serializedconcatenations of a fixed number of entries from the input stream; problemsrelated to this are a loss of information, the curse of dimensionality, and usu-ally inappropriate metrics. The last issue, inappropriateness, can be tackledonly partially by using adaptive metrics [17,19].

Recently, increasing interest can be observed in unsupervised recurrent self-

organizing networks for straight sequence processing. Prominent methods arethe temporal Kohonen map (TKM), the recurrent self-organizing map (RSOM),recursive SOM (RecSOM), and SOM for structured data (SOMSD) [1,7,24,26].These are unsupervised neural sequence processors with recurrent connectionsfor recursive element comparisons. Thereby, similarity structures of entire se-quences emerge as result of recursive operations. TKM and RSOM are based onbiologically plausible leaky integration of activations, and they can be used toexplain biological phenomena such as the development of direction selectivityin the visual cortex [4]. However, these two models use only a local form ofrecurrence. RecSOM and SOMSD use a richer recurrence as demonstrated inseveral experiments [7,26].

First experimental comparisons of the methods and steps towards an inves-tigation of their theoretical properties have been presented recently in thearticles [8,9]. It turns out that all models obey the same recurrent winner dy-namic, but they differ in the notion of context; internally, they store sequencesin different ways. This has consequences on their efficiency, their representa-tion capabilities, the induced metrics, and the possibility to combine theircontexts with different lattice structure. The focus of this work is an investi-gation of the recent merge SOM (MSOM) approach [20]. MSOM is based upon

2

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

3/37

a very effective context model which can be combined with arbitrary latticestructures: the temporal context of MSOM combines the currently presentedpattern with the sequence history in an intuitive way by referring to a mergedform of the winner neurons properties.

Especially for unsupervised approaches, a thorough understanding of the emerg-ing representation is needed for a valid interpretation. In this article, we willinvestigate the representation model and the capacity ofMSOM in theory andpractice. We demonstrate that MSOM develops a kind of fractal sequence en-coding which makes it comparable to the context representation of TKM andRSOM. However, unlike TKM, the encoding is a stable fixed point of the learn-ing dynamic, and it has got a larger flexibility than RSOM, because the internalrepresentation and the influence of the context can be controlled separately.We prove that MSOM, unlike TKM and RSOM, can simulate finite automatawith constant delay. Moreover, we demonstrate in several experiments the

appropriateness of the model for unsupervised and supervised learning tasks.

2 Related Work

Before the MSOM context model is discussed in detail, a quick reminder ofthe original self-organizing map and alternative vector quantization modelsis given. Then the focus is put on the short revision of existing SOM-basedmodels for sequences before introducing MSOM.

2.1 Models for plain vector processing

The original self-organizing map for plain vector processing is given by a setof neurons N = {1, . . . , m} equipped with weights wi Rn, and a topologyof the neuron configuration with a function dN : N N R. In the standardSOM, neurons are usually connected in a two-dimensional grid, and dN denotesthe distance of two neurons in that grid. A similarity measure on the weight

space Rn is fixed; often the standard squared Euclidean metric 2 is taken.The goal of training is to find prototype weights which represent given datapoints well in Rn; simultaneously, the topology of the neural grid should matchthe topology of the data. For training, data vectors are iteratively presented,and the weights of the closest neuron and its neighbors in the grid are adaptedtowards the currently processed data point. The update formula for neuron igiven pattern xj is given by the formula

wi = h(dN(i, I)) (xj wi) ,

3

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

4/37

where I is the winner, i.e. the neuron with smallest distance from xj, and h()is a decreasing function of increasing neighborhood distance, e.g. a Gaussianbell-shaped curve or a derivative of the Gaussian.

Popular alternatives to the standard SOM with different target topologies are

the neural gas (NG) and the hyperbolic SOM (HSOM). The HSOM model op-erates on a target lattice with exponentially increasing neighborhood size [16];NG adapts all neurons according to their rank with respect to the distancefrom the current data point, i.e. according to a data-driven optimal latticestructure without topology constraints [13].

2.2 Models for sequence processing

A number of recursive modifications of the SOM have been proposed in liter-ature, and the most prominent models are briefly revisited for a motivation ofthe MSOM model.

TKM, the temporal Kohonen map, is an early approach to SOM-basedsequence processing which has been proposed by Chappell and Taylor [1].The TKM neurons implement recurrence in terms of leaky signal integration.For a sequence (x1, . . . , xt) with entries xi Rn, the integrated distance ofneuron i with weight vector wi is computed by

di(t) =t

j=1

(1 )(tj) xj wi2 .

This distance expresses the neurons quality of fit, not only to the currentinput pattern, but also to the exponentially weighted past. The parameter (0; 1), kept constant, controls the rate of signal decay during summation.This way, the balance between the currently processed and the historic inputsaffects winner selection for getting the best matching compromise between thepresent and the past.

An equivalent recursive formulation for the integrated distance di(t) of neu-ron i after the t th time step is di(t) = xt wi2 + (1 ) di(t 1)

with di(0) := 0. TKM training is realized by adapting the weights wi at eachtime step towards the unintegrated current input xt using the standard SOMupdate rule wi = h(dN(i, I)) (xt wi) [1]. This way, by making theprototype weights more similar to the input vector, the correlation of eitheris increased which is known as Hebbian learning. Temporal context for TKMis represented by the activation of the winner neuron in the previous timestep. Evidently, this context choice does not depend on the particular choiceof the neuron target grid architecture and it can be combined with hyperboliclattices or the data-optimum topology of neural gas.

4

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

5/37

RSOM, the recurrent SOM proposed by Koskela et al. is similar to TKM;it takes the integrated direction of change into account, not only for distancecalculation but also for weight update [12]. Both RSOM and TKM refer tocontext by signal integration. Only RSOM stores context information in theweight vectors for further comparison. Due to vector summation, the distinc-

tion between the currently processed pattern and the history is impossible,which makes the interpretation and analysis of trained RSOMs a difficult task.

RecSOM uses a richer context representation [26]: in addition to a weightwi Rn, each neuron i possesses a vector ci R|N | representing the temporalcontext as the activation of the entire map in the previous time step. Thedistance is

di(t) = xt wi2 + C t ci2 ,

whereby , > 0 are fixed values to control the influence of the context com-pared to the presented sequence element. The current context describing the

previous time step is an exponentially weighted vector of neuron activationsin the last time step, Ct := (exp(d1(t 1)), .. . , exp(d|N |(t 1))). Trainingadjusts both weight and context of the winner neuron and its neighborhoodtowards the current values xt and Ct. This context type does not depend on aregular neuron grid and it can be used also with hyperbolic lattices or withNG-topology. However, this context model is computationally costly becauseextra dimensions related to the number |N | of neurons in the map are addedto each neuron.

SOMSD has been proposed for general tree structures [7], but we focus on

the special case of sequences. Like for RecSOM, an additional context vector ci

is used for each neuron, but only the last winner index is stored as lightweightinformation about the previous map state. Thus, ci is element of a real vectorspace which includes the grid of neurons, e.g. ci R2, if a two-dimensionalrectangular lattice is used. SOMSD distances are

di(t) = xt wi2 + dG(It1, c

i) ,

whereby It1 denotes the index of the winner in step t 1. dG measuresdistances in the grid, usually in terms of the squared Euclidean distance ofthe neurons low-dimensional lattice coordinates. During training, the winner

and its neighborhood adapt weights and contexts ofxt and It1, respectively.Thereby, grid topology and the indices of neurons are expressed as elementsof a real-vector space. Obviously, the context of SOMSD relies on ordered ad-dressing in terms of the topology of the neural map; consequently, it cannotbe combined with alternative models such as neural gas. If complex sequencesare mapped to low-dimensional standard Euclidean lattices, topological mis-matches can be expected. Thus, since the distance between context vectors isdefined by the lattice-dependent measure dG, this approach must be carefullyrevised to handle alternative target lattices.

5

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

6/37

HSOM-S transfers the idea of SOMSD to more general grids expressed bya triangulation of a two-dimensional manifold, such as a hyperbolic plane, inorder to better match the data topology of sequences, as discussed in [21].The previous winner, adjusted through Hebbian learning, is referred to by acoordinate within a triangle of adjacent neurons. For a stable learning, the

context influence starts at = 0, for getting an initially weight-driven SOMordering, and it is then increased to a desired influence value. Such an approachconstitutes a step towards more general lattice structures which are bettersuited for the presentation of input sequences but it is still limited to a priorlyfixed topology.

Obviously, RecSOM offers the richest notion of history because temporal con-text is represented by the activation of the entire map in the previous timestep; therefore, however, RecSOM also constitutes the computationally mostdemanding model. SOMSD and HSOM-S still use global map information, but

in a compressed form; their storage of only the location of the winner is muchmore efficient and noise-tolerant, albeit somehow lossy, in comparison to thewhole activity profile. In both models, context similarity, expressed by thecomparison of distances between grid locations, depends on the priorly chosenstatic grid topology. For this reason, this back-referring context model cannotbe combined with the dynamic rank-based topology of NG for data-optimalrepresentations. Context ofTKM and RSOM is just implicitly represented bythe weights of neurons, i.e. without explicit back-reference to previous mapactivity; hence, both methods sequence representation domains are restrictedto superpositions of values from the domain of the processed sequence entries.

A brief overview of context complexity representable by the models is givenin [20], a more detailed discussion is found in [9]. With reference to the prop-erties of the above-mentioned models, a wish list for a new type of contextmodel is derived: The lesson learned from Voegtlins RecSOM is the beneficialconsideration of the map activity in the previous time step as independent partof the neurons. SOMSD and HSOM-S compress the costly RecSOM activationprofile to only the topological index of the last winner in the neuron grid. NGyields better quantization performance than the SOM. TKM context is givenby signal integration; this element superposition would yield efficient fractalcontext encodings, if these were stable fixed points of the TKM training dy-namic, but usually they are not. In the following, we define and investigate indetail another model, the merge SOM (MSOM), which allows to integrate ideasfrom the listed methods and to also tackle some of the mentioned deficiencies.

The MSOM model has been proposed in the article [20] and first theory andexperiments have been presented in [9,22]. MSOM combines a noise-tolerantlearning architecture which implements a compact back-reference to the pre-vious winner with separately controllable contribution of the current inputand the past with arbitrary lattice topologies such as NG. It converges to

6

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

7/37

an efficient fractal encoding of given sequences with high temporal quantiza-tion accuracy. With respect to representation, MSOM can be interpreted asan alternative implementation of the encoding scheme of TKM, but MSOMpossesses larger flexibility and capacity due to the explicit representation ofcontext: In MSOM neurons specialize on both input data and previous winners

in such a way that neuron activation orders become established; thus, order iscoded by a recursive self-superposition of already trained neurons and the cur-rent input. Consequently, this yields a successive refinement of temporal spe-cialization and branching with fractal characteristics similar to LindenmayersL-systems. Unlike RecSOM, this context representation is space-efficient andunlike SOMSD it can be combined with arbitrary lattices.

3 The Merge SOM Context (MSOM)

In general, the merge SOM context refers to a fusion of two properties char-acterizing the previous winner: the weight and the context of the last winnerneuron are merged by a weighted linear combination. During MSOM training,this context descriptor is kept up-to-date and it is the target for the contextvector cj of the winner neuron j and its neighborhood. Target means that thevector tuple (wi, ci) R2n of neuron i is adapted into the direction of thecurrent pattern and context according to Hebbian learning.

3.1 Definition of the Merge Context

A MSOM network is given by neurons N = {1, . . . , m} which are equippedwith a weight wi Rd and context ci Rd. Given a sequence entry xt, thewinner It is the best matching neuron i, for which its recursive distance

di(t) = (1 ) xt wi2 + C t ci2

to the current sequence entry xt and the context descriptor C t is minimum.Both contributions are balanced by the parameter . The context descriptor

Ct = (1 ) wIt1 + cIt1

is the linear combination of the properties of winner It1 in the last time step,setting C1 to a fixed vector, e.g. 0. A typical merging value for 0 1 is = 0.5.

Training takes place by adapting both weight and context vector towards thecurrent input and context descriptor according to the distance of the neuron

7

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

8/37

from the winner given by a neighborhood function dN : N N R thatdefines the topology of MSOM neurons:

wi = 1 h(dN(i, It)) (xt wi)

ci = 2 h(dN(i, It)) (Ct ci)

1 and 2 are the learning rates and, as beforehand, h is usually a Gaussianshaped function.

3.2 Merge Context for Neural Gas (MNG)

Since the context does not depend on the lattice topology, a combination of thedynamic with alternative lattice structures like the neural gas model [13] orthe learning vector quantization model [11] is easily possible. Here, we combinethe context model with neural gas to obtain merging neural gas (MNG).

The recursive winner computation is the same as for MSOM. The adaptationscheme is customized appropriately to incorporate the data-driven neighbor-hood. After presenting a sequence element xt, the rank

k = rnk(i) =

{i | di(t) < di(t)}

of each neuron i is computed. This accounts for the topological informationthat a number of k neurons is closer to xt than neuron i is. Upon that, rigidneuron competition is established, because the update amount is an exponen-tial function of the rank:

wi = 1 exp(rnk(i)/) (xt wi)

ci = 2 exp(rnk(i)/) (Ct ci)

The context descriptor Ct has to be updated during training to keep trackof the respective last winner. In experiments, the learning rates were set toidentical values 1 = 2 = . The neighborhood influence decreases expo-nentially during training to get neuron specialization. The two update rulesminimize separately the average distortion error between the internal proto-type states and the target points in terms of a stochastic gradient descent [13].In combination, these separate minima need not lead to a global minimum;however, the minimization target of either can be controlled via the distancemeasure di(t).

8

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

9/37

Choice of the weight/context balance parameter

A crucial parameter of the recursive distance di(t) is which is consideredin this paragraph. The initial contribution of the context term to the dis-

tance computation and thus to the ranking order is chosen low by settingthe weight/context balance parameter to a small positive value. Since theweight representations become more reliable during training, gradually moreattention can be paid to the specific contexts that refer to them. Thus, afteran initial weight specialization phase, can be released and directed to a valuethat maximizes the neuron activation entropy.

In computer implementations, such an entropy-controlled adaptation has beenrealized: is increased, if the entropy is decreasing, i.e. the representationspace for the winner calculation is widened by allowing more context influence

to counteract the specialization of the neurons on only the input patterns;otherwise, if the entropy is increasing, is slightly decreased in order to allowa fine tuning of the context influence and of the ordering. As a result, thehighest possible number of neurons should on average be equally activeby the end of training. This entropy-driven -control strategy has proved tobe very suitable for obtaining good results in the experiments.

3.3 Properties of the merge context

For MNG and MSOM learning, the optimum choice for the weight vector andthe context vector is obtained for their minimum distance to the currentlypresented pattern and the context descriptor. It has been shown for vanish-ing neighborhoods, i.e. for the finalizing training phase, that the optimumweight vector converges towards the pattern which is responsible for winnerselection and that the optimum context vector converges to a fractal encodingof the previously presented sequence elements [9]. The term fractal is usedto express that ordered temporal element superposition leads to a more orless uniform filling of the state space, this way relating location to meaning.

Importantly, the optimum vectors for fractal encoding result as stable fixedpoints from the MSOM or MNG dynamic.

Theorem 1 Assume thatMSOMwith recursive winner computation

di(t) = (1 ) wi xt2 + C i

(1 ) wIt1 + cIt1

2

is given. Assume the initial context is C1 := 0. Assume a sequence with entriesxj for j 1 is presented. Then the winners optimum weight and context

9

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

10/37

vectors at time t

wopt(t) = xt, copt(t) =t1j=1

(1 )j1 xtj ,

emerge from the learning dynamic as stable fixed points, provided that enoughneurons are available, that neighborhood cooperation is neglected, and that thevectors

tj=1 (1 )

j1 xtj are pairwise different for each t.

For convenience, a proof of Theorem 1 is provided in the Appendix A; thisproof is a simplified version of the one given in [9]. One can see from theappended proof that, starting from random positions, weights and contextsconverge to the postulated settings in the late training phase, when neighbor-hood cooperation vanishes. In addition, the proof supports the experimentalfindings that convergence takes place consecutively, first for the weights, thenfor the context representations further back in time. This fact may explainthe success of entropy-based choices of , which guarantees the successiveconvergence of weights and contexts over time.

In subsequent experiments, the emergence of a space-filling set resembling thefractal Cantor set with non-overlapping context can be observed for a mergingparameter of = 0.5. The spreading dynamic of the zero-initialized context inthe weight space is self-organizing with respect to the density of the contextualinput, which is a benefit over encoding ab initio. Since the context is a functionof the previous winners weight and context, the adaptation is a moving targetproblem; therefore, it is generally a good policy to update weights faster than

contexts, or to put more influence on the pattern matching than on the contextmatching by choosing < 0.5.

The theoretical result of the Theorem 1 neglects the neighborhood structureof the neural map which is responsible for topological ordering of the weightsand contexts. Theoretical investigations of neighborhood cooperation for MNGand MSOM face severe problems: unlike NG, the MNG update dynamic is, dueto context integration, only an approximate gradient descent of an appropriateerror function as shown in [8]. Therefore, a potential function is not availablefor this case. For MSOM, the investigation of both correlated components,the weight and context representation, would be necessary. Also for standardSOM, results are available only for the one-dimensional case and results forhigher dimensions are restricted to factorizing distributions [3,10]. Thus, thesetechniques cannot be transferred to our setting. However, in the experimentsection of this paper, we will provide experimental evidence that a reasonableordering of both, weights and contexts, can be observed for MNG, the MSOMmodel combined with neural gas.

We would like to mention that TKM and RSOM are also based on a fractal en-coding of sequences, as pointed out in the articles [12,24,25]. TKM uses weight

10

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

11/37

vectors only for which the standard Hebbian learning does not yield the op-timum codes as fixed points of the training dynamic. RSOM uses a differentupdate scheme which is based on integrated distances and which yields opti-mum codes during training. Unlike MSOM, no explicit context model is used.Therefore, a parameter which controls the influence of the context for winner

determination and a parameter which controls the internal representation ofsequences cannot be optimized independently. Instead, the single context pa-rameter determines both the context influence on the winner selection andthe adaptation of the sequence representation space. MSOM allows an inde-pendent optimization of these parameters. In particular, as explained above,it allows a very efficient adaptation scheme for the parameter based on themap entropy. We will see in experiments that values = are beneficial inpractical applications.

An additional advantage of MSOM is that, by dint of the winner compu-

tation, information of longer time spans than for TKM and RSOM can bestored, and MSOM is less affected by noise. This claim can be accompaniedby an exact mathematical result: the dynamic of MSOM allows to simulatefinite automata with constant delay whereas the dynamic of TKM does not.We now address this fundamental issue, the representation capacity of MSOMin principle. This refers to the question whether one can explicitly characterizeall computations possible within the dynamic which can be implemented bythe recursive winner computation defined above. Thereby, aspects of learningor topological ordering are neglected and, as beforehand, we disregard neigh-borhood cooperation. In particular, the lattice topology is not relevant. Forsimplicity, we assume a finite input alphabet.

We will show that it is in fact possible to find an equivalent characterizationof the capacity in these terms: the recursive winner dynamic based on themerge context is equivalent to finite automata, as specified and proved below.We will also show that TKM cannot simulate automata with constant delay.This provides a mathematical proof that the more general recursion of MNGis strictly more powerful than TKM.

We first recall the definition of automata and then we define the notion ofsimulation of an automaton by a recursive self-organizing map model with

constant delay. Afterwards, we turn towards the results describing the capacityof MSOM and TKM.

Definition 1 A finite automaton consists of a triple

A = (, S , )

whereby = {1, . . . , ||} is a finite alphabet of input symbols, S = {sta1,. . . , sta|S|} is a set of states, and : S S is the transition function. denotes the set of finite sequences over . The automaton A accepts a

11

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

12/37

sequence s = (i1, . . . , it) iff it(s) = sta|S|, whereby

it is defined asthe recursive application of the transition function to elements of s, startingat the initial state sta1:

it

(s) =

sta1 if s is the empty sequence, i.e. t = 0,

(it(i1, . . . , it1), it) if t > 0 .

The dynamic equation ofMSOM and TKM has been defined for all entries ofone given sequence. In a natural way, we extend this notation to the case ofdifferent input sequences : given a sequence s = (x1, . . . ,xt) with elements inR

d, the distance ofs from neuron i is defined as

di(s) = di(xt) ,

i.e. it yields the distance of the last element xt of the sequence s which isprocessed within the context given by the previous entries of s. This extensionallows to introduce the notation of simulation of an automaton with constantdelay by a recursive neural map.

Definition 2 Assume a given finite automaton A = (, S , ). A recursiveself-organizing map with input sequences in (Rd) simulates A with constantdelay delay if the following holds: there exists a mapping enc : (Rd)delay

and a specified neuron with indexI0 such that for every sequence s in holds:

s is accepted by A I0 = argminj{dj(enc(s))} ,

wherebyenc(s) denotes the component-wise application ofenc to s = (i1 , . . . , it):(enc(i1), . . . , enc(it)).

The dynamic provided by the merge context can simulate finite automata withconstant delay. More precisely, the following holds:

Theorem 2 Assume that A = (, S , ) is a finite automaton with alphabet = {1, . . . , ||} and states S = {sta1, . . . , sta|S|}. Then a neural map withmerge context andL1-norm can be found with input dimensiond = |S|||+4,

m = O(|S| ||) neurons, and initial contextC0

, and an encoding functionenc : (Rd)4 exists which depends only on the quantities |S| and ||, suchthat the neural map simulates A with delay 4.

The proof can be found in the Appendix B.

Since, when the merge context is used, a fixed map only yields a finite numberof different internal states, it is obvious that a recursive map provided withthe merge context can simulate at most finite automata. Thus, equivalenceholds.

12

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

13/37

In contrast, the TKM cannot simulate every automaton with a constant de-lay which is independent of the automaton because of its restricted contextrepresentation. More precisely, one can show the following result:

Theorem 3 Assume that the alphabet consists of only one element . As-

sume a fixed constant delay. Assume a finite automaton with alphabet beingsimulated by a givenTKMand an encoding function with constant delay. Thenthe automaton is trivial, i.e. it accepts all sequences or it rejects all sequences.

The proof is provided in the Appendix C.

4 Experiments

In the following, experiments are presented to shed light on different aspects ofthe MNG model, such as the quality of the obtained context representation, thestorage lengths of learned histories, the reconstruction of temporal informationfrom trained networks, and the potential for using posterior neuron labelingfor classification tasks.

Six data sets have been used for training: three discrete and three contin-uous sets. In the discrete domain, 1 a stream of elements from binary au-tomata, 2 words generated with the Reber grammar, and 3 DNA sequencesare processed; in the continuous domain, 4 the continuous one-dimensional

Mackey-Glass time series, 5 a medical three-variate series with physiolog-ical observations, and 6 speaker articulation data containing sequences of12-dimensional cepstrum vectors.

Thereby, datasets 3 and 6 also contain class information which can be usedto evaluate the map formation with respect to this auxiliary data. The DNAsequences will be considered for splice site detection, and the articulation datawill be used for speaker identification; the classifications are obtained from themap by calculating the activation histograms of neurons for different data toget a probability-based class assignment to the neurons.

4.1 Binary automata

In the first learning task, the focus is put on the representation of the mostlikely sub-words in binary sequences generated by automata with given transi-tion probabilities. Two questions concerning the MNG context are addressed:Can the fractal encoding stated in theory be observed in experiments? Whatis the empirical representation capability?

13

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

14/37

Context development

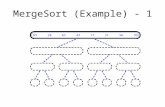

Figure 1 displays the experimental context space resulting from MNG trainingof 128 neurons for a random binary sequence that contains 106 symbols of0and 1 independently drawn with P(0) = P(1) = 1/2. Parameters are a learn-

ing rate of = 0.03, a fair combination of context and weight by = 0.5, andan initial context influence of = 0.001 that has reached by the entropy-based parameter control a final value of = 0.34 after training. Duringadaptation, two of the 128 neurons have fallen idle, because the specializa-tion on context, being subject to further updates, is a moving target problemwhich can cause prototypes to surrender at fluctuating context boundaries.The other 126 neurons represent meaningful sequences. Plot 1 is for reasonsof almost perfect symmetry with specialization to symbol 1 reduced to the 63active neurons that represent the current symbol 0. These neurons refer topositions in the context space located on the lower horizontal line (). The

depicted columns of

,

refer to the longest {0

,1

}-sequences which can beuniquely tracked back in time from the respective neurons. Thus, from bot-tom to top, the stacked symbols point into the further past of the neuronstemporal receptive fields. Furthermore, it can be observed that the emergedcontexts fill the input space in the interval [0; 1] with an almost equidistantspacing. In good agreement with the theory given in the Appendix A, thelearned sequences are arranged in a Cantor-like way in the space covered bythe neurons context specializations.

Representation capability

The average length of the binary words corresponding to Figure 1 is 5.36;this is quite a good result in comparison to the optimum context represen-tation of random strings with a temporal scope of log2(128) = 7 time stepsper neuron. More structure can be obtained from binary automata exhibitinga bias as a result of choosing asymmetric transition probabilities. We con-duct an experiment which has been proposed by Voegtlin in the article [26].The transition probabilities for a two-state automaton with symbols 0 and1 are chosen as P(0|1) = 0.4 and P(1|0) = 0.3. The other probabilities arethen fixed to P(0|0) = 1 P(1|0) = 0.7 and P(1|1) = 0.6. The temporally

stationary solution for the frequency of0

is given by the Master equation

0 0.1 0.2 0.3 0.4 0.5 0.6 0.7 0.8 0.9 1

temp.rec.f

ield

location in context space

Fig. 1. MNG context associated with the current symbol 0 of a binary sequence.The temporal receptive fields are specialized to sequences of for 0 and for 1.

14

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

15/37

P(0)/t = P(1) P(0|1) P(0) P(1|0) = 0 for P(0) = 4/7, inducingP(1) = 3/7.

Figure 2 shows neuron histograms that illustrate the effect of the entropy-controlled context influence parameter for 64 neurons trained on a biased

automaton. The first training phase yields two specialized neurons shown inthe inset reflecting the symbol probabilities P(0) = 4/7 and P(1) = 3/7. Aftertraining, a value of = 0.28 is obtained, for which the neuron activations aredisplayed by the large histogram in the figure. As a matter of fact, the entropy-based control scheme is suitable for involving all available neurons by allowingthe dynamic to account for the unfolding of neurons in the context space.

Another training run has been carried out for the same automaton for 100neurons with = 0.025, = 0.45, and the initial value of = 0 was steeredto a final value of 0.37 after 106 pattern presentations; this experimental setup

resembles much the one given by Voegtlin [26].

Figure 3 shows, in tree form, the resulting 100 MNG neurons receptive fieldswhich correspond to the longest words for which neurons are still unique win-ners. According to the higher frequency of symbol 0 that belongs to left-handbranches, the tree has got a bias towards long sequences for the left. The aver-age word length for this biased automaton is 6.16. The context representationtree is compared to the overall 100 most likely sequences produced by thatautomaton. As shown by the leaf branches in the figure, many neurons havedeveloped disjoint receptive fields, with the exception of transient neurons,indicated by bullets on the interior tree nodes, for which the descendants andleaves represent still longer sequences. After all, a total number of 63 longestwords can be discriminated by MNG, and they are in good correspondence tothe most frequent 100 sequences generated by the automaton.

0

0.01

0.02

0.03

0.04

0.05

0 10 20 30 40 50 60

act

ivationfrequency

neuron index

neuron activation

0

0.1

0.2

0.3

0.4

0.5

0.6

0 10 20 30 40 50 60

Neuron activities before context adaptation

Fig. 2. Sorted neuron activation frequencies for the -entropy control strategy for abiased binary automaton. Inset: before context consideration, two neurons special-ize, reflecting the sequence element frequencies P(0) = 3/7 and P(1) = 4/7. Largeplot: after entropy maximization, all 64 neurons are involved.

15

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

16/37

time

stepsintopast

100 most likely sequences

MNG (2 idle neurons)

Fig. 3. Tree representation of MNG temporal receptive fields for the biased binaryautomaton. From bottom to top further past is addressed; the horizontal line marksthe (0/1)-dichotomy of the present instant. Left branching denotes 0-context, rightbranching 1. For comparison, the 100 most likely words of the automaton are dis-played in dashed style.

4.2 Reber grammar

So far, the automata experiments deal with sequence elements that can beeasily represented as one-dimensional real values. Now, the context represen-tation capabilities of MNG are studied for strings over a 7-letter alphabet.These sequences are generated by the automaton depicted in Figure 4 relatedto the Reber grammar [15]. The seven symbols have been encoded with pair-wise unit distance in a 6-dimensional Euclidean space, analogous to the fourcorners of a tetrahedron in 3-dimensional space. The concatenation of ran-domly generated Reber words led to sequences with 3 106 input vectors for

training and 10

6

vectors for testing.

For training, 617 MNG neurons are taken for the Reber grammar, a size thatcan be compared with the HSOM-S architecture [21]. The MNG merge para-meter is = 0.5, the context influence is initialized to = 0, the startingneighborhood size is = 617, and the context vector is initialized to 0 R6

which is the center of gravity of the embedded symbols. The learning rate is = 0.03; after training, the adjusted parameters are = 0.5 and = 0.43.

The context information stored by the ensemble of neurons has been analyzedin two ways: 1 externally, by using the test sequence to observe characteristic

neuron activation cascades, 2 internally, by backtracking typical activation

B

V

V

PX

X

P

ST

S

T

E

Fig. 4. Reber grammar state graph.

16

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

17/37

orders of each neuron based on the best matching predecessor context. Thelatter results rely exclusively on the trained map and not on the training data.

For the externally driven context characterization, the average length of Re-ber strings from the test sequence leading to unambiguous winner selection

is 8.902. Of the 617 available neurons, 428 neurons develop a specialization ondistinct Reber words. This is a first indication that the transition propertiesof the Reber grammar have been faithfully learned. The remaining 189 neu-rons (30%) represent Reber words as well, but they fall into idle states becausetheir representations are superseded by the better specialized competitors.

Further internal analysis, a one-step context backtracking, has been carriedout as follows: for each neuron i, all other neurons are visited and the mergingvector of their context vector and weight vector is computed; these mergingvectors are compared to the context expected by neuron i and sorted according

to the distances; a symbol is associated to each neuron in this list by means ofits weight vector. On average the first 67.9 neurons in this list correspondingto the best matching contexts represent the same symbol, before neurons fora different symbol are encountered. This large number strongly supports thefindings of the external statistics for both high context consistency and properprecedence learning.

Therefore, a three-step backtracking has been performed subsequently in orderto collect the 3-letter strings associated with each neuron, strings which arecomposed of symbols represented by the recursively visited best matchingpredecessors. The occurrence of these backtracked triplets have been counted.

Only valid Reber strings were found, of which the frequencies are given in

frequency

Reber triplet

Fig. 5. MSOM frequency reconstruction of trigrams of the Reber grammar. The darkbars show exact Reber triplet frequencies, the light bars show frequencies obtainedfor MNG. The correlation between both distributions is r2 = 0.77.

17

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

18/37

Figure 5. In general, the substring frequencies stored in the trained MSOMnetworks correspond well to the original Reber triplet frequencies, also shownin Figure 5. However, looking closer reveals an underrepresentation of thecyclic TTT and the TTV transitions, whereas SSS is over-represented, and theEndBeginT-state EBT switching is only weakly expressed; a systematical

bias cannot be derived easily. Still, the overall correlation of the probabilitiesof Reber trigrams and the reconstructed frequencies from the map is r2 = 0.77which is another indication of an adequate neural representation of the Rebertransitions.

In a final step, the backtracking and the string assembly has not stopped untilthe first revisitation of the neuron that started the recursion. This way, wordswith an average length of 13.78 are produced, most of them validated by theReber grammar. The longest word

TVPXTTVVEBTSXXTVPSEBPVPXTVVEBPVVEB

corresponds almost perfectly to the test data-driven specialization

TVPXTTVVEBTSXXTVPSEBPVPXTVVE

that has been determined by keeping track of the winners for the training set.A similarly high correspondence of the shorter strings could be observed forthe other neurons. These promising results underline that for a small numberof discrete input states a distinctive and consistent representation emerges inthe context space.

4.3 DNA sequences

The following real-life example provides clustering of DNA sequences. Thereby,labeling information that is excluded from training will be used to perform aposterior evaluation of the representation capability of the trained network. Inother words, classification will be obtained by labeling the neurons subsequentto training, and the classification accuracy on test data will be a rough mea-sure for the generalization ability of the MNG model. DNA data is taken fromSonnenburg who has used and prepared the genome from the Caenorhabdi-tis elegans nematode for studies on the recognition of splice sites [18]. Thesesites mark transitions from expressed to unexpressed gene regions and viceversa, and they are thus important for the assembly of structural and regula-tory proteins that constitute and control biochemical metabolisms. Splice siterecognition is therefore a topic of interest for the understanding of genotype-phenotype relationships.

Specifically, five pairs of data sets for training and testing of acceptor sitedetection have been used. Each pair contains a number of 210,000 strings

18

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

19/37

over the {C,G,T,A}-alphabet with 50 nucleotides centered around potentialcanonic (A,G) splicing boundaries. These strings are labeled by true splicesite and decoy (no splice site); after training, these labels will be used forneuron assignments.

For training, the four nucleotides A, G, T, and C are encoded as tetrahedroncorner points centered around the origin of the 3-dimensional Euclidean space.Furthermore, the DNA strings have been split into a pre-splicing substring ofthe non-coding intron region and a post-splicing coding exon string to enablethe semantic differentiation of both cases. The pre-string has been reversedto first serve, during training, the important nucleotides close to the poten-tial splice location. Independent DNA samples are separated in the modelby activating a special neuron that represents the literal null context withundetermined zero weight vector, important for reseting the initial contextdescriptor of the next DNA string. In each run, a number of 25 cycles has

been trained, corresponding to 6 106 iterations of 10,000 strings with 24nucleotides each. Ten runs are calculated with identical training parameterson the five training sets separated into their pre- and post-substrings parts.The MNG networks have 512 neurons and the parameters are learning rate of = 0.075 and an initial context consideration value of = 0.001. The mixingfactor for the context descriptor has been set to = 0.75 in order to rate thecontext diversification higher than the specialization to one of only four rootsymbols.

After training, the resulting context values adjusted by entropy-based con-

trol are located in the large interval (0.3; 0.6). This observation is ex-plained by the fact that, in the final training phase, high specialization withsmall neighborhoods makes the winner selection insensitive to the weightmatching, because weights almost fit perfectly for one of the four symbols;what only matters is a context contribution sufficiently greater than zero. Ifthe splice site detection accuracy is taken for network comparison, the ob-tained models corresponding to either pre-splice or post-splice DNA stringsare mutually very similar in their respective case.

Classification is carried out after unsupervised training in an easy manner:

training sequences are presented element by element; thereby the winner neu-rons count, in associated histograms, the two cases of seeing a sequence markedas splice site or as decoy string. This soft histogram-based labeling is normal-ized by scaling the bins with the corresponding inverse class frequencies andby scaling with the inverse neuron activation frequency. Then, a test string ispresented and a majority vote over the histograms for the winners of the pre-sented symbols determines the class membership. The classes obtained by thisvoting for all strings in the test set are finally compared against the correctclass labels known for the test set.

19

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

20/37

contextaxis2(orth.proj.)

context axis 1 (orth. proj.)

splice site

unknown

decoy

Fig. 6. Prototypes (2048) in MNG context space for DNA intron data with = 0.5.The orthogonal 2D-projection of the trained context tetrahedron shows the uti-lization of the space spanned by the four C,G,T,A-nucleotide vectors. Also, localcontext specialization can be observed in dependence of splice site and non-splicesite strings.

The default guessing classification error is 38.0%, because the overall classfrequencies are P(splice site) = 0.38 and P(decoy) = 0.62. For classifica-tion based on the post-splice site information, only this baseline value isreached: the average training error is 38.71%0.60%, the average testing error

is 39.43%0.56%. In contrast to that, for the pre-splice site strings, classifi-cation errors of 14.06%0.66% and 14.26%0.39% have been obtained forthe training and test sets, respectively. Although for supervised classifiers likeSVM with the sophisticated TOP kernel the error drops to only 2.2%0.2%,the result for unsupervised MNG training is still remarkable: the used archi-tecture only takes 512 neurons with 6 dimensions, which is much smaller thanthe TOP-SVM with 1,000 support vectors and 5,700 dimensions [18].

The observed differences between the classification results for the pre-splicingintron strings and the post-splicing exon strings are in good correspondencewith the biological knowledge about the splicing process: if a (A,G) pair de-notes a true splicing boundary, then it is typically preceded by a pyrimidine-rich region described by approximately 15 nucleotides [14]. MNG is able tocapture these consensus strings typical of true splice sites. For the exon stringsno such prominent regularity is known, they just re-initiate the coding of thenucleotide to amino-acid transcription without indicating that there has re-cently been a splice site. These post-splicing nucleotides thus cannot be usedfor splice site identification by MNG.

Finally, the focus has been put on the context development of the 3D-codes.

20

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

21/37

Figure 6 displays the 3-dimensional context, obtained by the training of 2,048neurons on pre-splice site strings with a descriptor mixing parameter of =0.5. For visualization, the context tetrahedron has been rotated to a frontview and orthogonally projected to two dimensions: the vertical center lineof neurons corresponds to the backside edge. A fractal structure resembling

the Sierpinsky gasket becomes clearly visible. At the same time, the neuronlabels indicate that this symmetric mixing parameter of = 0.5 cannot beused to obtain a clear separation of the splicing versus decoy states. Figure 7shows the difference for a projection pursuit snapshot of the 2 3-D neuronsmaximizing the linear discrimination (LD) of the 2D-projection; 512 neuronsare plotted, but now for a mixing parameter of = 0.75. Projection pursuitlinearly projects the data from high-dimensional space into a low-dimensionalvisualization space, thereby optimizing the projection matrix parameters ac-cording to given separation conditions like LD; for details and references see [2].

For biologically plausible dynamic, it would be desirable to process nucleotidetriplets as a whole, because they atomically represent a certain amino acid ora control codon. Related experiments with the required 43 = 64-dimensionalencoding are in preparation.

LDAproj.axis2

LDA proj. axis 1

splice siteunknowndecoy

Fig. 7. Prototypes (512) in projected LDmax-space for DNA intron data with = 0.75. The 2D-projection of the 23D internal states of the neurons isalmost linearly separable into splice site vs. non-splice site; due to the linearLDmax-projection, good separability and clusterization also applies to the origi-nal weight/context space.

21

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

22/37

0.7

0.8

0.9

1

1.1

0.4 0.5 0.6 0.7 0.8 0.9 1 1.1 1.2 1.3

context

weight

Fig. 8. Prototypes in the MNG context vs. weight space for the Mackey-Glass series.Neuron context has spread well from an initial average value into a large subintervalof the time series domain. A good distribution in the representation space is shown.

4.4 Mackey-Glass time series

An introductory example for processing continuous sequences is the Mackey-Glass time series described by the differential equation dx

d= bx()+ ax(d)

1+x(d)10,

using a = 0.2, b = 0.1, d = 17; a plot of this well-known benchmark seriesand a histogram is given in Figure 9. The MNG training parameters are acontext-preferring mixing value = 0.75 and the entropy-controlled forbalancing weight and context in the distance computations; its initialization

at = 0 is driven to a final value of = 0.18 after training, which will bedetailed below. As shown in Figure 8, the trained MNG context for 100 neuronscovers the subinterval [0.7; 1.15] of the input domain [0.4; 1.3]. All neurons arewell spread in the context vs. weight plane; the vertical line at a weight valueof 0.93 marks the average of 106 Mackey-Glass values which serves as commoninitialization value for the prototype context.

0.01

0.02

0.03

0.04

0.05

0.06

0.07

0.515 0.644 0.773 0.902 1.031 1.160 1.289

P(x)

Histogram bin for Mackey-Glass process value x

0.3

0.6

0.9

1.2

1.5

0 20 40 60 80 100 120 140 160

x(t)

t

Fig. 9. Histogram for the Mackey-Glass series. The inset shows a part of the series.

22

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

23/37

3.75

4

4.25

4.5

4.75

0 100 200 300 400 500 600 700 800 900 1000

entropy

training iterations [*1000]

upper bound

entropy value

Fig. 10. MNG entropy during Mackey-Glass training. Iteration 0 refers to the pointwhere, after a first context-free specialization phase, the balance parameter hasbeen released. Entropy saturation, close to the theoretical upper bound of 4 .605,corresponds to a final value of = 0.18; see text.

In the first training phase the context influence has been ignored and theprototype weight distribution is by a magnification functional closelyrelated to the data distribution shown in the histogram Plot 9. By virtue of thesubsequently activated context dynamic, an additional dimension is utilized inorder to represent also the history of the currently processed element. Thereby,the bimodal density structure of the Mackey-Glass series is beneficial for theMNG context model to emerge and to unfold in the context space spannedby the data modes. As reported in the article [20], the resulting specializationleads to very small quantization errors for the historic context components.

Here, the focus is put on the neuron activation entropy during training, which

shall be maximized by adapting the context metric influence to an appro-priate value. Figure 10 shows the entropy starting from highly specializedneurons without context: small numbers of active neurons yield skewed activ-ity histograms which, as a consequence, correspond to small entropy values.By gradually augmenting the context influence , a richer state space for thedistance computation is compelled. This triggers a more detailed winner selec-tion, and the entropy of neuron activations grows. A theoretical upper boundis H =

100i=1 pi log(pi) = 100 1/100 log(1/100) = 4.605 with equal ac-

tivation probabilities of pi = 1/100 for all neurons. The control strategy for is a dynamical adaptation at run time, aiming at entropy maximization: isincreased in case of an entropy decay trend and it is decreased otherwise. As

shown in Figure 10, entropy saturation takes place at about 300 training cycleswith released after prior context-free weight specialization; however, differ-ent learning tasks yield different entropy curves, depending on the relationshipof the number of neurons and the number of data modes. After the first weightspecialization, the entropy maximization by smooth -adaptation is generallyconducive to the context development. Here, spurious entropy fluctuations andinstabilities during training are damped by means of a momentum term for .In all experiments presented here, a sampling of about 1,000 entropy values istaken for a training task.

23

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

24/37

0

0.05

0.1

0.15

0.2

0.25

0.3

-4 -2 0 2 4

p(x1)

x1

0

0.05

0.1

0.15

0.2

0.25

0.3

-4 -2 0 2 4

p(x2)

x2

0

0.05

0.1

0.15

0.2

0.25

0.3

-4 -2 0 2 4

p(x3)

x3

Fig. 11. Histograms of preprocessed B-1991 data columns.

4.5 Physiological 3-variate series (B-1991)

A task that illustrates the limitations of the MNG context for roughly space-filling data has been taken from physionet.org for recordings of a patient suf-fering from apnea during sleep. The first column contains the heart rate, thesecond the chest volume, and the third is the blood oxygen concentration.

Linear trends, caused by parameter drifts in the gage, have been removedfrom the data by calculating the first derivative of a smooth order 4 splineinterpolation. Mean subtraction has been carried out, and a logarithmic trans-form x = sgn(x) log(|x| + 1) has been computed for each column to accountfor the sharp peaks in the otherwise moderate physiological dynamic. Datapreprocessing has been concluded by a z-score transform. The final set con-tains 64,701 samples of temporally dependent 3D-vectors. Although the dis-tributions of each single attribute exhibit a small number of modes, in phasespace, the compound temporal 3D data vectors form a curve of concatenatedspatial loops around the origin 0 with almost unimodal space filling density

distribution.

For the obtained series, training has been conducted for 20 cycles. It is as-sumed that the singular wrap-around for events at the end of the sequenceto its beginning do not significantly disturb the training. Figure 12 shows thequantization errors of the training data for three methods, each of them pos-

0.1

0.2

0.3

0.4

0.5

0 5 10 15

QuantizationError

Index of past inputs (index 0: present)

NGHSOM-S

MNG

Fig. 12. Temporal quantization errors for the preprocessed B-1991 data. Left:present instant at t = 0, right: 15 steps in the past. An error increase over time isapparent for all three models. MNG is best for the recent past 1 < t 4.

24

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

25/37

sessing a number of 617 neurons: original neural gas (NG), hyperbolic SOM forstructured data (HSOM-S), and MNG. The error refers to the quality of repre-sentation for sequence elements located t steps back in time, i.e. the temporalquantization error as defined in [9]. It is expressed by the average standarddeviation of the given sequence and the winner units receptive field, both

considered at the temporal position t; thereby, a neurons receptive field is theaverage sequence that has occurred just before the winner selection. As ex-pected, NG without context is the worst temporal quantizer, MNG works prettywell up to four steps in the past, but it is then outperformed by HSOM-S.

A particular difficulty of the data set for MNG is caused by the fact that long-term context integration falls into the indistinguishable center of gravity ofthe data trajectory. Therefore, distinct context clusters cannot easily emergefor histories longer than four steps, and MNG asymptotically results in thestandard NG quantization performance. HSOM-S does not suffer from this

problem, and it remains on a smaller error level; this is because the HSOM-Scontext representation does not implement a folding of historic informationinto the weight domain, but it uses the independent space of explicit back-references to the index of the last winner neuron instead.

A main characteristic of the B-1991 data set is a noisy trajectory which entersand revisits regions of the phase space from different directions after only a fewtime steps. Since already many neuron weights are required to represent thedensely filled phase space, no storage capacity is left for capturing the temporalcontext appropriately. However, higher quantization accuracy can be obtainedby MNG at higher computational costs, if only a sufficient number of neuronsis provided.

4.6 Speaker identification by hindsightMNG labeling

The final experiment processes real-valued speaker data from the UCI reposi-tory 1 . In addition to unsupervised clustering, the soft labeling scheme intro-duced for the DNA strings is used again to obtain a classification model. Dataare given as recordings of the Japanese vowel ae from 9 speakers, consti-

tuting sequences of 12-dimensional vectors with frequency information. Eachutterance is described by a stream of a length between 7 and 29 of tempo-rally connected vectors. In the training set, 30 articulations are available foreach speaker, adding up to 270 patterns; in the test set, a variable numberof articulations per speaker yields a total of 370 utterances. Each articulationhas its own temporal structure; since between different utterances there is notemporal connection, as in the DNA experiment, a special neuron is added to

1 http://kdd.ics.uci.edu/databases/JapaneseVowels/JapaneseVowels.html

25

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

26/37

LDAproj.axis2

LDA proj. axis 1

123456789

Fig. 13. LDA-projection of the weight context-space of speaker data.

represent the default state w = c = E(X) when no context is available at anutterance start.

Training has been realized for 150 neurons without taking into account thespeaker identity. The initial neuron context is set to the center of the trainingdata, the context descriptors mixing is a fair value of = 0.5, and a largelearning rate of = 0.3 could be used. The initial context influence parameteris set to = 0.01 and driven to a final value of = 0.37 during entropymaximization.

Figure 13 supports a good clusterability of the (weight context)productspace R12 R12: the according 24-dimensional prototypes have been projected

to two dimensions by means of the linear discriminant analysis (LDA) tech-nique [6]; the parameters of the 242-projection matrix have been adaptedby projection pursuit to yield a maximum Gaussian separation of the pro-totypes in the two-dimensional target space [5]. Hence in the unprojectedoriginal space, a good separability can be expected, which is confirmed by theexperiment.

Analogous to the DNA experiment, after training, each neuron is assigned a9-bin histogram containing activation frequencies for the speakers from thetraining set. For each articulation sequence in the test set, the accumulatedmajority vote over the bins of activated neurons is calculated to identify the

speaker. In contrast to the DNA splice site detection task, the resulting his-tograms are very specific for the speakers. When, finally, the obtained posteriorlabels are checked against the data, there is no error on the training set and anerror of only 2.7% on the test set. This accuracy is much better than the ref-erence errors of 5.9% and 3.8% accompanying the original data set. For MNGtraining with 1,000 neurons, a number of 21 neurons fall into idle state dur-ing context formation, and the error decreases to only 1.6%. This interestingresult illustrates that good-natured interpolation emerges from the dynamic,if more neurons than training examples are given.

26

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

27/37

5 Conclusions

We have investigated the MSOM merge context model for temporally or spa-

tially connected sequential data. Self-organizing context is obtained by a back-reference to the winner in the previous time step, compactly described by aweighted linear combination of the last winners weight and context. MSOMcontext can be combined with other self-organizing architectures such as NGor LVQ. For unsupervised sequence processing with accurate temporal quan-tization, MSOM has been integrated into NG in this work.

In the first part of this article, theoretical properties of the model have beendiscussed. In the limit of vanishing neighborhood cooperation, the proposedrecursive context definition leads to an efficient fractal encoding as the fixedpoint of the training dynamic. This fact brings MSOM into line with TKM andRSOM which are also based on fractal encoding of sequences in weight space.In contrast to that, SOMSD and RecSOM extend the context representationsto the separate space of topological previous winner indices. However, unlikeTKM and RSOM, MSOM models context explicitly and this gained adaptationflexibility allows MSOM to simulate finite automata.

From the supplied experiments it becomes clear that sophisticated data pre-processing is not mandatory in order to obtain good temporal data models

with MSOM; for discrete symbols just a canonic real-value vector encodingshould be provided, and for initialization convenience, data should be zero-centered. As a consequence of self-organization, the capacity of the contextrepresentation grows with the number of neurons. After initial specializationwithout context contribution, a maximum utilization of neurons is obtained bymaximizing the network entropy via the context influence parameter . Sincerecurrent neural networks cover the supervised learning tasks pretty well, mostapplications of the merge context model are found for unlabeled data. Never-theless, the DNA splice site identification and especially the speaker recogni-tion task, both realized by means of soft a posteriori neuron labeling, indicatethat we can trust in the found context representations and that there is somepotential for processing labeled data too.

Further work should consider metrics more general than the squared Euclideandistance. Also systematic studies on the interplay of the context influence andthe context update strength are of interest. Another even more challengingquestion, if there is a way to generalize the presented robust MSOM modelfrom sequences to more complex graph structures, remains to be answered infuture.

27

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

28/37

Acknowledgements

Many thanks to the anonymous reviewers for helping to improve the qualityof this manuscript by their valuable comments.

A Proof of Theorem 1

The properties of the merge context is studied in two steps: 1 the optimumchoices for the prototype weight and the context are investigated; 2 it isproved that these optima result from the training dynamic as stable fixedpoints. We assume that neighborhood cooperation is neglected, i.e. only thewinner is adapted according to the given learning rule. For the winner, this

assumption applies to both MSOM and MNG.

1 The best choice of neuron i is the one for which weight wi and context ci

yield di(xt) = 0 for the current pattern xt:

di(xt) = ( 1 ) xt wi2 + Ct ci2

= (1 ) xt wi2 + (1 ) wIt1 + cIt1 ci2 .

It1 is the index of the winner in the previous time step. Both squared

summands can be considered separately. The left one trivially becomes theminimum of 0, if the weight vector represents the input pattern, i.e. forwi = wopt(t) = xt. Then, by induction, the right one expands to

ci = copt(t) = (1 ) xt1 + cIt1

= (1 ) xt1 + copt(t1)

= (1 ) xt1 +

(1 ) xt2 + (. . . + (1 ) x1) . . .

=t1

j=1

(1 ) j1 xtj (note: j 1 is exponent, t j is index)

with the assumption of zero context at the sequence start x1.

2 Now, focusing on the convergence of a neuron that is specialized on aparticular sequence element within its unique context, asymptotically stablefixed points of the training update dynamic are obtained. Still, we neglectneighborhood cooperation, and we assume that enough neurons are availablefor generating disjoint optimum weight settings for each presented pattern.

28

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

29/37

The analysis of iterative weight adaptation in presence of the target vectoryields:

wIt + (xt wIt) xt = (1 ) wIt xt wIt xt .

This describes an exponential convergence because of (0; 1). Analogously,

cIt +

(1 ) wIt1 + cIt1 cIt

copt(t) cIt copt(t)

describes the context convergence, if

(1 ) wIt1 + cIt1 copt(t)

can be shown. With wIt1 xt1 and by induction ofcIt1 copt(t1) withcI1 C1 = 0 = copt(1):

(1 ) xt1 + copt(t1) = (1 ) xt1 + t1j=2

(1 ) j2 xtj

=t1j=1

(1 ) j1xtj = copt(t) 2

This sum for copt denotes a fractal encoding of the context vector in the weightspace, which is known to be a very compact and efficient representation [23].

B Proof of Theorem 2

Assume that a finite automaton A = (, S , ) is given. We construct a net-work with merge context which simulates A. We assume that distances arecomputed using the L1-norm x1 x21 =

i |x

1i x

2i | and the parameters

are set to = = 0.5. We refer to the weight and context of a neuron bythe symbols w and c. The recursive network dynamic for an input sequences = (x1, . . . , xt) is given by the equation

dj(xt

) = 0.5 xt

wj

1 + 0.5 Ct

cj

1 ,

where C1 is a fixed vector and

Ct = 0.5 wIt1 + 0.5 cIt1 .

It1 is the index of the winner in the previous time step.

We now show that the transition function of the automaton can be imple-mented by this dynamics. The transition function can be interpreted as a

29

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

30/37

boolean function based on the primitives which test whether the current sym-bol is a specific symbol i and the current state is a specific state staj. Wecan state this formula in the following form: assume I is a variable for thecurrent input state and S a variable for the current input symbol. O is a vari-able the output state. Then we can formulate the function as a disjunction of

implications

((I = stai1 S = j1) . . . (I = stail S = jl)) (O = stak))

corresponding to all possible output states of . Thus, to implement , wehave to test a disjunction of conjunctions for the precondition of the respec-tive implication. This will be done in two steps, one for the computation ofthe disjunction, one for the conjunction. However, within a MSOM, no vari-ables, states, and symbols are explicitly available and we need to interpret

appropriate activation patterns of MSOM as a distribution of truth valuesamong the variables of . For this purpose, we identify the neurons with an|S||| array, and we identify the rows (i.e. all neurons in a row are on) withinput symbols and the columns with states. This is the main idea of the con-struction. For the concrete implementation, some minor technical issues areto be added, such that the activation of rows, columns, and the computationof the above boolean formulas can be implemented by a recursive iteration ofdistance computations in MSOM.

Weights and contexts are elements of Rd = R|S|||+4. We refer to the com-

ponents of a vector x in this space by tuples xij for the first |S| || entries,1 i |S| and 1 j ||, and xi for the last 4 entries, 1 i 4.

First, we define the neurons of the map and their weight and context vectors.The idea behind this construction is as follows: the last four positions act asa counter; they ensure that only a neuron of a specified group can becomewinner. For the other positions, we identify the state stai with the vectorx Rd with xij = 1 for all j, and all remaining entries are 0; we identifyj with the vector x with xij = 1 for all i, all remaining entries are 0. Weconstruct neurons which simulate the transition function of the automaton

in two steps. The first step identifies input tuples (stai, j) of to ensure thatthe internal context C of the map is 1 for position cij and 0 for any otherposition. The second step collects, for a fixed state stak, all tuples (stai, j)which are in the inverse image of stak under . The output of this step is aninternal context which corresponds to the state stak. These two steps are bothdivided into two sub-steps, one which actually performs the computation andanother one which is responsible for a cleaning of the result.

For every pair we define (stai, j) S two neurons of the map,

30

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

31/37

n(stai, j) with weight vector

wkl =

1 if l = j

0 otherwiseand wk =

2 d if k = 1

0 otherwise

and context vector

ckl =

1 if k = i

0 otherwiseand ck = 0 for all k .

nclean(stai, j) with weight vector

wkl = 0 for all k and l and wk =

2 d if k = 2

0 otherwise

and context vector

ckl =

1 if k = i and l = j

0 otherwiseand ck = 0 for all k .

For every state stai S we choose two neurons of the map

n(stai) with weight vector

wkl =

4 if k = i

0 otherwiseand wk =

2 d if k = 3

0 otherwise

and context vector

ckj =

0.5 if (stak, j) = si

0 otherwiseand ck =

GAPi if k = 3

0 otherwise,

whereby GAPi = 0.5 MAX

{(stal, j) S | (stal, j) = stai}

. MAX

is defined as maxk{(stal, j) S | (stal, j) = stak}

nclean(stai) with weight vector

wkl = 0 for all k and l and wk =

2 d if k = 4

0 otherwise

31

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

32/37

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

33/37

Neuron n(stai, j) yields the distance 0.5 d and the context vector C with

cml =

1 if m = i and l = j

0.5 if m = i and l = j

0.5 if m = i and l = j

0 otherwise

and cm =

d if m = 1

0 otherwise

Neuron n(stai, m) with m = j yields the distance 0.5 d + |S|.

input context winner weight winner context

1 1 1 1 1 1

1 1

1 1

20 10 20

1 0.5 1

0.5

0.5

20 10 20

0.5 0.5

4 4 0.5 0.5

20 10 20 0.5

0.25

2.25 2.25 2 2

20 10 20

Table B.1An example of the computation steps for |S| = 3 and || = 2, i.e. d = 6 + 4.In every block of the table, the input, the internal context, and the weight andcontext vector of the winner are depicted. Thereby, the components of the vectorsare denoted using the convention introduced above, i.e. using |S| = 3 rows with|| = 2 columns, and an additional row with 4 entries. The input for is 1 andthe context is sta1. Assume (sta1, 1) = sta2. In addition, assume that the pairs(sta2, 1) and (sta2, 2) yield sta2. Assume MAX = 3. All entries which are not shownyield 0.

33

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

34/37

Neuron n(stam, j) with m = i yields the distance 0.5 d + ||. Neuron n(stam, l) with m = i and l = j yields the distance 0.5 d + |S| +

|| 1. Neurons nclean(stam, l), n(stam), and nclean(stam) yield a distance of at

least 2 d.

Step 2: the context C of Step 1 and input 02: Neuron nclean(stai, j) yields a distance 0.5d+0.25(|S|1)+0.25(||1).

The resulting context vector C is of the form

cml =

0.5 if m = i and l = j

0 otherwiseand cm =

d if m = 2

0 otherwise

Neuron nclean(stai, m) with m = j and neuron nclean(stam, j) with m = iyield a distance of 0.5 d + 0.25 (|S| 1) + 0.25 (|| 1) + 0.5.

Neuron nclean(stam, l) with m = i and l = j has got the distance 0.5 d +0.25 |S| + 0.25 || + 0.5.

Neurons n(stam, l), n(stam), and nclean(stam) yield distances of at least2 d.

Step 3: the context C of Step 2 and input 03 (remember that (stai, j) = stak): Neuron n(stak) yields a distance 2 || + 0.5 d + 0.25 MAX 0.25 and the

context vector C with

cml = +

2 if m = k

0 otherwiseand cm =

d + 0.5 GAPi if m = 3

0 otherwise

whereby R|S||| is a vector with entries 0.25 for indices (m, l) with(stam, l) = stak and 0 for any other entry.

Neuron n(stam) with m = k yields the distance 2 || + 0.5 d + 0.25 MAX + 0.25.

Neurons n(stam, l), nclean(stam, l), and nclean(stam) yield distances of atleast 2 || 0.5 + 2 d.

Step 4: the context C of Step 3 and input 04: Neuron nclean(stak) yields distance 0.5d+0.25GAPi+0.125(MAX2GAPi) =

0.5 d + 0.125 MAX. The context vector C with

cml =

1 if m = k

0 otherwiseand cm =

d if m = 4

0 otherwise

arises. This is C(stak). Neuron nclean(stam) for m = k yields a distance of at least 0.5 d + 0.25 GAPi+2|S|0.125|S|+0.125(MAX2GAPi) = 0.5d+0.125MAX+1.75|S|.

For all other neurons, a distance of at least 2 d arises. Note that MAX < d.

34

-

8/3/2019 Marc Strickert and Barbara Hammer- Merge SOM for temporal data

35/37

This concludes the proof.

C Proof of Theorem 3

Given a finite automaton A = ( = {}, S , ) and a delay delay. Assumeenc : Rd is an encoding function and a TKM is given, such that the TKMsimulates A with this delay. Input sequences for A just differ with respect totheir length, since the alphabet is unary. Assume enc() = (x1, . . . , xdelay).Then an input sequence s of length t yields the activation

tj=1

(1 )delay(tj)

(1 )delay1 x1 wi2 + . . . +

(1 ) xdelay1

wi

2

+ xdelay

wi

2

of neuron i with weight vector wi. The term Wi := delay

j=1 (1 )delayj

xj wi2 is independent of t, thus the activation can be rewritten as

Wi t

j=1

(1 )delay(tj) .

Because the termt

j=1(1)delay(tj) is identical and positive for all neurons i,