Machine Minds


-

8/14/2019 Machine Minds

1/79

The Emerging Mind of the Machine

John Cameron Glasgow II


ABSTRACT

A philosophy of emerging systems based on general systems theory concepts is propounded and

applied to the problem of creating intelligence in a machine. It is proposed that entropy, a

principle that provides a framework for understanding the organization of physical systems

throughout the universe, serves also as a basis for understanding the creation of an intelligence.

That position is defended by the argument that the universe is a statistically described place in which the entropic processes of information-bearing physical systems (e.g. RNA, DNA, neural networks, etc.) describe the same thing whether interpreted as information or as physical structure.

As a consequence, the observations concerning hierarchical stratification and rule creation described in general systems theory, and so obvious in physical systems, are assumed to apply to information systems. The mind and its intelligence are identified as such an information system

that emerges into the human environment as a system of hierarchically structured mental objects

and associated rules. It is inferred that those rules/objects that emerge at a level in a mind are not

reducible to the functions and interactions of constituent parts at lower levels. All of this implies

that any implementation of humanlike intelligence in a machine will have to include lower levels,

and that the mechanism of that implementation will have to be emergence rather than

construction. It is not concluded that this precludes the implementation of intelligence in a

machine. On the contrary, these ideas are developed in the context of an attempt to implement an undeniably intelligent machine, that is, a machine that will be considered intelligent in the same

manner that one person normally and implicitly considers another person intelligent.


INTRODUCTION

Emergence is a controversial philosophical viewpoint that maintains that the universe exhibits an

ongoing creativity. It claims that creativity is manifest in the orderly systems that emerge at a

level from constituent parts at lower levels. More explicitly, such systems are seen to exhibit

behavior that is coherent and deterministic but not predictable and not reducible to the laws that

apply at the lower levels. That is, new levels exhibit new laws. Thus a person who accepts the

concept of emergence might claim that, while the laws of physics and chemistry can explain the

internal bonds and the physical attributes of any and all molecules, those laws cannot predict

which molecules will be produced or the subsequent behavior of a particular molecule or a group

of molecules, even though the nature of those activities can be codified. As a more concrete

example, while the structure and chemical proclivities of a molecule of deoxyribonucleic acid (DNA) can be explained by the laws of chemistry and its behavior in the world as it encounters

other objects can be explained by laws of physics, the spectacular behavior exhibited over time

by groups of these molecules (as codified in synthetic theories of evolution) is not implicit in

those laws. As an example at a higher level, while demographers might determine that the world

human population will reach a particular level in fifty years, they cannot predict the nature of the

social, governmental, or economic institutions that will arise any more than they can predict that

some specific set of people will produce offspring who will in turn produce offspring who will

produce a great leader who will materially affect those institutions (although it is reasonable to

assert that such will be the case). The concept of a universe in a state of ongoing creative activity

lies somewhere between the two most popular world models: the idea that the universe is in

some manner controlled or directed from without and the idea that the universe consists of a set

of unchanging rules and objects to which all observed phenomena can be reduced.
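The claim that emergent behavior can be deterministic and yet not predictable can be illustrated, in part, by a standard toy example that is not taken from this paper: the logistic map, in which each state x is replaced by r·x·(1 − x). With r = 4 the rule is exact and fixed, yet two starting values that differ by one part in ten billion soon follow unrelated paths, so long-range prediction would require impossibly precise knowledge of the initial state. The sketch below (the function name `logistic_orbit` is our own) shows only this sensitivity to initial conditions; it says nothing about irreducibility across levels, which is the further claim made above.

```python
def logistic_orbit(x0: float, r: float = 4.0, steps: int = 100) -> list[float]:
    """Iterate the logistic map x -> r*x*(1 - x); return x0 followed by each later state."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


if __name__ == "__main__":
    a = logistic_orbit(0.2)
    b = logistic_orbit(0.2 + 1e-10)  # a second start differing by one part in ten billion
    # Determinism: the same rule and the same start always reproduce the same orbit.
    assert a == logistic_orbit(0.2)
    # Sensitivity: for r = 4 the gap grows roughly twofold per step on average,
    # until it is as large as the states themselves.
    for n in range(0, 101, 10):
        print(f"step {n:3d}: |a - b| = {abs(a[n] - b[n]):.2e}")
```

Printing the gap every ten steps shows it growing from about 10^-10 to the size of the states themselves within a few dozen steps, even though nothing random is involved.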

The philosophical concept of reductionism maintains that the nature and activity of everything is

implicit in some basic finite set of rules. According to reductionism, given those rules and an

initial state of the universe all of the past and future states of the universe can be calculated (an

assertion made by Pierre-Simon Laplace in the early nineteenth century at a time when it seemed

that the clockwork mechanism of the universe would soon be completely revealed). This


philosophy is easily accepted by scientists, for whom it can serve both as the justification for a continued search for underlying laws and as the assurance that such laws exist. Philosophically,

reductionism has come under attack from many quarters. The implied existence of a regress of

explanation of phenomena in terms of their constituent phenomena requires (for any practical

application of the concept) the hypothesis of some particular set of low level, basic and

fundamental objects and rules whose validity is assumed. In a macroscopic sense the job of

science can be taken to be the reduction of phenomena at high levels to some hierarchical

combination of the basic set, thus revealing why things are as they are. But the selection of a

basic set of rules and objects has often proven problematical. In practice, an assumed truth often

turns out to be just a particular case in a larger scheme. In geometry, Euclid's parallel lines axiom is famous as an assumed truth that holds only in flat, or uncurved, space. Newton's laws are true only in the context of a restricted portion of an Einsteinian universe, albeit the portion of which we happen to be most aware. At the quantum level of existence some of the laws of logic that seem so evident at the human level of existence break down. Even some of Einstein's assumptions seem to be challenged at the quantum level (e.g. the assumption that the speed of light is a fundamental limit). A reductionist would take this to mean that the basic set of fundamental

truths had not yet been discovered.

In the early part of the twentieth century the school of philosophical thought that exemplified the reductionist attitude was logical positivism. Its analytical foundations drew heavily on the work of Alfred North Whitehead and Bertrand Russell, whose attempt to give mathematics, and with it the philosophy, a sound logical basis (Principia Mathematica) was brought into question in 1931 by the

famous incompleteness theorem of Kurt Goedel. Goedel proved that in any formal system based

on a set of axioms and that is sufficiently complex to be able to do arithmetic, either there will be

assertions that can be made within the framework of the system that are true but that cannot be

proven true (i.e. the system is incomplete), or there will be contradictory assertions that can be

proven true (i.e. the system is inconsistent). That is, there are inherent weaknesses in any such

system that tend to invalidate the system as a whole. Still, scientists can work within a

reductionist framework if their work is restricted to the phenomena at some particular level or

some small closely associated group of levels. In the normal conduct of their research they do not

come up against an infinite regress of explanations. Problems of incompleteness or inconsistency are rarely encountered. When they are encountered, the necessary assumptions concerning the effects of phenomena at other levels can usually be revised to remove the specific problem. Indeed,

the very process of revision of assumptions can be considered an integral part of scientific

process (see Kuhn 1962). Consequently scientists have not found it necessary to seriously alter

the methodological framework within which they have worked since the development and

adoption of that methodology in the eighteenth century. It is natural, then, that scientifically oriented researchers, when faced with the problem of creating intelligence in a machine, feel that such intelligence can be achieved by an appropriate basic set of rules and objects installed in the proper hierarchically structured control system. Assuming that reductionism holds across the

range of levels required for the implementation of a mind, and that humans have the perception,

tools, and time to determine the content of those levels together with the skill to emulate and

implement those contents in a machine, then certainly machines can be built to be intelligent.

Reductionism is unpopular with philosophers defending concepts of ethics and morality as

formulated in Judeo-Christian religions. Most of the doctrines of western religious institutions as

they apply to ethical and moral considerations turn upon the human intuition of free choice. If

the universe is predetermined as reductionism implies, then free choice is an illusion. In

particular a choice to commit or not commit an immoral act, no matter how real the choice seems

to the chooser, is a charade. Further, any supposed imposition of reward or punishment for such

behavior becomes a compounded charade. The inclusion of a Deity in a reductionist framework results in a religious concept called Deism. It maintains that a Deity created and set the universe

in motion after which the universe did not require (and does not require) any further

intervention. Deism enjoyed a brief popularity among intellectuals about the time of the

American Revolution, a time at which the consequences of the Newtonian revolution dominated

most of the thought of the times. But such a belief does not provide a sufficient base upon which

to build the requisite ethical and moral dogma of a western style religion. Persons worried about a

philosophical basis for ethical and moral behavior, or those who insist simply that the decisions

they make are meaningful in that the future course of events is not fixed and does respond to

those decisions, normally prefer some concept of a supervised, managed, or directed universe

(we'll use the term 'spiritualism' to refer to any such concept of a directed universe).


Spiritualists will almost certainly disagree with the reductionist conclusion concerning machine

intelligence. Inherent in spiritualism is the belief in the existence of a non-corporeal motivating

and controlling force or forces that in some (inexplicable) manner interact with corporeal objects

causing behavior that does not yield to reductionist explanation. This force or forces must be

an eternal feature of the universe, for if man is the result of a lengthy metamorphosis from simple

molecular structures to his present state (a theory that has achieved the status of accepted fact

for all but a tiny minority of people) then the motivating force must have been there all along,

directing, maintaining, and imbuing each and every object or process with its unique non-

reducible characteristics. To maintain otherwise requires either that evolution be rejected in favor

of an instantaneous creation replete with all of the evidence that argues against it, or that it be

accepted that unenlightened matter in some way can give rise to, or create, inexplicable forces

that subsequently vitalize it. Thus an eternal and all-pervasive life force or élan vital is

hypothesized to account for the obvious but not easily described difference between living and

non-living matter, and the human essence, mind, or soul, is considered an entity, separate from,

but associated with the body, yet not subject to the body's limitations. The believer is free to

assert that the forces are responsible for any perceived phenomenon or state of nature. If the

human mind is perceived as a unique, non-reducible phenomenon, then that is the intent of the

forces of which we can have no knowledge. Certainly we cannot reproduce in a machine that of

which we can have no knowledge.

Phenomena for which no reductionist explanations exist serve as the only support for belief in

spiritualism (e.g. inexplicable human mental activity such as intuition, a flash of insight, or

creativity may be cited as evidence of the non-reducible quality of the human mind). In fact the term 'belief' applies only to assertions about such phenomena. At the other end of the spectrum the term 'fact' applies only to those phenomena that are reducible (we use the term in its sense as an

objective measure of reality rather than its use in referring to data or in making assertions of

truth). The axioms to which facts may be reduced might be as informal as the (reproducible)

evidence of the senses or as formal as an abstract mathematical system. While adherents of facts

and beliefs both claim to have a corner on the market in truth, both are subject to challenge.

Spiritualists are stuck with the problem of trying to support their beliefs by citing inexplicable

phenomena that might at any moment be explained, while reductionists must fear (or they should fear) that their systems might yield contradictory facts, or that facts will be discovered that are

not explainable in their system. It might appear that there are no alternatives to these two

opposed ways of conceptualizing the world. Such is not the case. The concept of emergence

provides another 1 explanation of the way things are, one that allows for the existence of non-reducible phenomena and yet one that is not spiritualistic. We stress that concepts

associated with theories of emergence should not be confused with spiritualism. Certainly

spiritual forces could be invoked to explain creative processes but that would simply be another

version of a directed universe. Many real, non-spiritual mechanisms can be proposed to explain

the process of ongoing creation (and some are detailed below). Nor are the concepts regarding

emergence philosophically compatible with reductionism. Theories concerned with emerging systems hypothesize a hierarchically structured universe in which the levels of the hierarchy exhibit a semi-permeability with respect to the effects of the rules and objects at one level on adjacent levels. The further removed two levels are from each other, the less the phenomena in the one can be reduced

to the phenomena in the other. Since the levels are assumed to extend throughout the universe,

laws can be at once both universal and restricted in scope. In effect, the laws of the universe

never die, they just fade away (across levels). This concept has many implications for what it

means to exist and be intelligent at one level in such a universe. In the context of this paper it has

implications for the implementation of machine intelligence. To sum up, reductionism easily

admits of the possibility of building a machine intelligence while spiritualism argues against it.

Emergence lies somewhere between these two extremes. As might be expected then, it implies

that the creation of a machine intelligence lies somewhere between impossible and possible. First

we develop support for the concept of emerging systems by considering the concept of a

universe organized into hierarchical levels through the interaction of two seemingly opposed

facts of nature, chaos and order. We follow that by stating the ideas more clearly as a hypothesis.

Finally we make inferences from the hypothesis concerning the possibility of creating a machine

intelligence (which we deem possible) and the conditions necessary to accomplish that feat in the

context of an emergent universe.

1 But not necessarily the only other one.


EMERGENT SYSTEMS

Evolution

The philosophical-religious climate of Europe in the eighteenth and nineteenth centuries was favorable for investigations into the nature of biological organisms. This was due to two aspects of the Judeo-Christian thought of that time. First, time was considered linear, measured from creation to eternity with (for the Christians) memorable deterministic events occurring at the advent and second coming of Christ. This was different from the cyclical conception of time held by the Greek philosophers (and by most other cultures).

Second, it was assumed that God had created the world in a methodical, ordered manner. One perception of the order of things was the "scale of being," the "ladder of perfection" or the "ladder of life."

Man was perceived to occupy the highest earthly rung of the ladder with all of the other various

life forms occupying lower positions depending upon their perfection or complexity. The ladder

did not stop at man but continued on with Angels, Saints and other heavenly creatures that

occupied successively higher rungs until finally God was reached. Man thus had a dual nature: a

spiritual nature that he shared with the Angels above him on the ladder and an animal nature that

he shared with the creatures below him on the ladder.

Scientific thought and religious thought often mixed during the Renaissance and even into the nineteenth century. Investigations into biological complexity were encouraged so that the order of succession of creatures on the ladder of life could be more accurately ascertained. That, coupled with the perception that time moved inexorably from the past into the future, set the stage for the discovery of evolutionary processes. All that was needed was the concept of vast periods of geologic 2 time, the idea that the ladder of life might have a dynamic character, and a non-supernatural mechanism by which movement on it could occur. These ideas, or various forms of

them, had already gained widespread acknowledgement when Charles Darwin wrote his Origin of

Species (published in 1859). Origin united the idea of the transmutation of species over geologic

2 The idea of geologic time was provided by James Hutton, the father of geology.


time with the mechanism of natural selection 3 to yield the theory of biological evolution. The

theory required strong champions, for it made formal and respectable heretical ideas that had previously only been the object of speculation. Even in 1866 (seven years after publication of Origin of Species) the ladder was still being defended (Eiseley, 1958). From a scientific point of view these

were the last serious challenges to evolution. The evidence from biology, geology, and

paleontology was too strong to allow that particular form of theologically generated order to stand. At

about the same time (1866) an Austrian monk named Gregor Mendel published a report on his

research into what are now called genes, the units of heredity. In it he outlined the rules that govern the passing of biological form and attribute from one generation to the next. The report was to lie unappreciated for the next thirty-odd years, until around the turn of the century, when the rules were

independently rediscovered. The Mendelian theory provided the Darwinian mechanism of natural

selection with an explanation for the necessary diversification and mutation upon which it relied.

The Mendelian laws were incorporated into Darwinism, which became known as neo-Darwinism.

In 1953 J. D. Watson and F. H. C. Crick proposed the double helix molecular structure of deoxyribonucleic acid (DNA), from which genes are constructed and which contains the actual

coded information needed to produce an organism. This and various loosely related theories such

as population genetics, speciation, systematics, paleontology and developmental genetics

complement neo-Darwinism and combine with it to form what is termed the synthetic theory of

evolution. The synthetic theory of evolution represents the state of the theory today (Salthe

1985).
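The rules Mendel outlined for passing form and attribute from one generation to the next can be made concrete with a monohybrid cross. The sketch below assumes a single gene with two alleles, 'A' (dominant) and 'a' (recessive), and that each parent transmits either of its two alleles with equal probability; the helper names `cross` and `phenotype` are illustrative. Crossing two Aa hybrids reproduces the familiar 1:2:1 ratio of genotypes and 3:1 ratio of dominant to recessive appearance.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def cross(parent1: str, parent2: str) -> dict[str, Fraction]:
    """Return the probability of each offspring genotype for a one-gene cross.

    Each parent is a two-letter genotype such as "Aa"; uppercase marks the
    dominant allele. Each allele of a parent is assumed equally likely to be
    transmitted (segregation).
    """
    # Every pairing of one allele from each parent; sorting puts "A" before "a".
    counts = Counter("".join(sorted(pair)) for pair in product(parent1, parent2))
    total = sum(counts.values())
    return {genotype: Fraction(n, total) for genotype, n in counts.items()}


def phenotype(genotype: str) -> str:
    """The dominant trait appears if at least one dominant allele is present."""
    return "dominant" if any(allele.isupper() for allele in genotype) else "recessive"


if __name__ == "__main__":
    offspring = cross("Aa", "Aa")
    print(offspring)
    shown = Counter()
    for genotype, p in offspring.items():
        shown[phenotype(genotype)] += p
    print(dict(shown))
```

Exact fractions are used instead of floats so that the ratios print as 1/4, 1/2 and 3/4 rather than as rounded decimals.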

This new wave of scientific discovery about the earth, and particularly the biology of the earth, worked a new revolution in philosophical thought in which man had to be perceived as

playing a smaller role in the scheme of things if for no other reason than the true grandeur of the

scheme of things was becoming apparent. The first philosopher to embrace the new biological

theories was Herbert Spencer. He was a philosopher of the latter half of the nineteenth century

who seized upon the theory of evolution as presented by Darwin and generalized it to a

philosophical principle that applied to everything, as Will Durant (Durant 1961) demonstrates

3 The result of the uneven reproduction of the genotype-phenotype in a group of members of a species that can mate with each other. The mechanism of selection is that of the interaction of the phenotype with its environment. The concept was popularized as 'the survival of the fittest' by Herbert Spencer.


by partial enumeration, to:

"The growth of the planets out of the nebulae; the formation of oceans and mountains on the earth; the metabolism of elements by plants, and of animal tissues by men; the development of the heart in the embryo, and the fusion of the bones after birth; the unification of sensations and memories into knowledge and thought, and of knowledge into science and philosophy; the development of families into clans and gentes and cities and states and alliances and the 'federation of the world'."

the last, of course, being a prediction. In everything Spencer saw the differentiation of the

homogeneous into parts and the integration of those parts into wholes. The process from

simplicity to complexity or evolution is balanced by the opposite process from complexity to

simplicity or dissolution. He attempted to show that this followed from mechanical principles by

hypothesizing a basic instability of the homogeneous, the similar parts being driven by external

forces to separated areas in which the different environments produce different results.

Equilibration follows; the parts form alliances in a differentiated whole. But all things run down

and equilibration turns into dissolution. Inevitably, the fate of everything is dissolution. This

philosophy was rather gloomy but it was in accord with the second law of thermodynamics 4 or

the law of entropy, which states that all natural processes run down. The law was formulated by Rudolf Clausius and William Thomson (Lord Kelvin) in the 1850s (Clausius introduced the term entropy in 1865) and was derived from the evidence of

experiments with mechanical systems, in particular heat engines (see the section on order and

entropy below). That scientific fact tended to support a philosophy that the universe and

everything in it was bound for inevitable dissolution. This was shocking and depressing to philosophers and to the educated populace at the turn of the twentieth century. However, the

universal application of the Darwinian theory of evolution is not so simple as Spencer would

have it.
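The sense in which natural processes 'run down' can be shown with the textbook case of heat passing between two bodies. In the sketch below (the function name and the sample temperatures are illustrative, not from this paper), heat Q leaves a large reservoir at absolute temperature T_hot and enters one at T_cold, so the total entropy changes by ΔS = Q/T_cold − Q/T_hot. That quantity is positive whenever T_hot exceeds T_cold, which is why heat is never observed to flow unaided from a colder body to a hotter one.

```python
def entropy_change(q_joules: float, t_hot_k: float, t_cold_k: float) -> float:
    """Total entropy change (J/K) when heat q flows from the hot to the cold reservoir.

    Both reservoirs are assumed large enough that their temperatures (in kelvin)
    stay fixed. A negative q means the heat flows the other way, cold to hot.
    """
    if t_hot_k <= 0 or t_cold_k <= 0:
        raise ValueError("absolute temperatures must be positive")
    return q_joules / t_cold_k - q_joules / t_hot_k


if __name__ == "__main__":
    # 1000 J flowing from a body at 400 K to one at 300 K: about +0.833 J/K.
    print(entropy_change(1000.0, 400.0, 300.0))
    # The same heat flowing "uphill" from 300 K to 400 K would lower the total entropy.
    print(entropy_change(-1000.0, 400.0, 300.0))
```

Equal temperatures give a change of exactly zero, the equilibrium case in which nothing further runs down.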

In response to the overwhelming wealth of detail, the biological sciences began to turn inward.

The detail allowed for tremendous diversification without leaving the confines of the life sciences.

The subject matter of biology was, and is, perceived as so different from the subject matter of

4 The first law of thermodynamics deals with the conservation of energy, stating that the rate of change of a system's energy equals the rate at which heat flows into it plus the rate at which work is done on it. There is a zeroth law (that, as you may suspect, was proposed after the first and second laws, but that logically precedes them). It states that two objects that are each in thermal equilibrium with a third object will be in thermal equilibrium with each other.


other areas of science that it is considered unique and to some extent a closed discipline. But the

isolationist tendency is widespread in all of the sciences and, as has been shown on many

occasions, uniqueness is an illusion. In recent years there has been a move on the part of some

biologists to find a more general framework within which biology fits together with the other

disciplines of science. Such a move toward integration should, of course, be of interest to all of

the sciences. One such approach is espoused by Stanley N. Salthe 5.

Salthe notes (Salthe 1985) that there are at least seven levels of biological activity that must be

considered for the investigation of what are considered common biological processes. These

include, but are not limited to, the molecular, cellular, organismic, population, local ecosystem,

biotic regional, and the surface of the earth levels. The systems at these levels are usually studied

as autonomous systems largely because of the difficulty in attributing cause to other levels. There

is, inherent in biological phenomena, a hierarchical structure differentiated mainly by spatial and

temporal scaling. The hierarchy is not limited to biological systems, but extends upward to the

cosmos and downward to quantum particles. Nor is it limited to any particular enumeration such

as the seven levels mentioned above. Interactions between levels are largely constrained to those

that occur between adjacent levels. At a particular level (focal level) and for a particular entity the

surrounding environment (or next higher level) and the material substance of the entity (next

lower level) are seen as providing initial conditions and constraints for the activities or evolution

of the entity. Salthe proposes that a triadic system of three levels (the focal level and the immediately adjacent levels) is the appropriate context in which to investigate and describe

systemic, in particular, biologic phenomena. So the theory of evolution that depends upon

natural selection applies at the population level and may or may not apply at other levels. Salthe

distinguishes several factors that provide differentiation into levels. These include 1) scale in both

size and time that prohibits any dynamic interaction between entities at the different levels, 2)

polarity, that causes the phenomena at higher and lower levels to be radically different in a

5 The specific approach presented by Salthe has its roots in general system theory. That theory's inception was in Norbert Wiener's cybernetics. It was given its strong foundations and extended far beyond the original content by such researchers as Ludwig von Bertalanffy (1968), Ervin Laszlo (1972), Ilya Prigogine (1980), Erich Jantsch (1980), David Layzer (1990) and many others (the cited works are included in the bibliography, and are not intended to represent the principal work of the cited researcher). General systems science is now a firmly established discipline. It provides the context in which the discussion concerning 'levels' on the ensuing pages should be considered.


homogeneous classes. There is then a 'secondary' type of order or regularity which arises only through the (usually cumulative) effect of individualities in inhomogeneous systems and classes. Note that such order need not violate the laws of physics"

Salthe and others who attempt to distinguish levels as more than mere handy abstractions to locate or specify objects provide a means by which mechanists and vitalists can find some common ground. The processes and objects that occupy a level are not expected to be completely

explainable in terms of any other level, in fact any complete explanation is precluded by the

increasing impermeability that characterizes the correspondence between increasingly distant

levels. Only the most immediate levels have any appreciable impact on each other's activities.

This saves the mechanists from the infinite regress of explanations but leaves the explanation of

élan vital for further investigation. As Elsasser points out, these explanations need not be mystical, spiritualistic, or nonscientific. One such possible explanation for the observed propensity of systems to self-organize is given below in the section on entropy and order. It is an unexpected

explanation, advocating that organization results not because of any positive motivating force

but rather because of the inability of some open systems to pursue their entropic imperative at a

rate commensurate with the expanding horizons of the local state space. It will be argued that the

nature of the system at a level in an environment, together with the existence of chaotic tendencies,

dictates the form that the resulting organization takes. These ideas have applicability to virtually

any system at any level, not just biological systems at the levels identified above. We shall

comment and expand upon these ideas in later sections, but first we will review the development of knowledge about the nature of the physical world beyond (and below) the lowest levels

mentioned above. We skip over the molecular level, whose rules and objects are detailed in

chemistry, the atomic level described by atomic physics, and the nuclear level described by

nuclear physics, to the quantum level whose rules and objects are expressed in particle physics

by quantum mechanics. We do this because the quantum level vividly illustrates the increasing

inability to relate the actions and objects at different levels far removed from the human level of

existence.
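Salthe's triadic scheme, in which a focal level takes its initial conditions and constraints from the levels immediately above and below it, can be pictured as a window over an ordered list of levels. The sketch below is a hypothetical illustration rather than Salthe's own formalism: it uses the seven biological levels listed earlier, lowest first, and the names `triad` and `coupling`, along with the `decay` factor of 0.1, are our own assumptions. The geometric decay is just one simple way to express the claim that influence fades as levels grow further apart; nothing in the text fixes its form.

```python
# Salthe's seven biological levels, ordered from lowest to highest.
# The hierarchy continues beyond both ends; this list is only a local window.
LEVELS = [
    "molecular",
    "cellular",
    "organismic",
    "population",
    "local ecosystem",
    "biotic regional",
    "surface of the earth",
]


def triad(focal: str) -> tuple:
    """Return (lower, focal, higher) for a focal level.

    None marks a neighbor that lies outside this window, not an absence of levels.
    """
    i = LEVELS.index(focal)
    lower = LEVELS[i - 1] if i > 0 else None
    higher = LEVELS[i + 1] if i + 1 < len(LEVELS) else None
    return lower, focal, higher


def coupling(level_a: str, level_b: str, decay: float = 0.1) -> float:
    """Hypothetical strength of direct influence between two levels.

    Assumes influence shrinks geometrically with the number of levels between them.
    """
    distance = abs(LEVELS.index(level_a) - LEVELS.index(level_b))
    return decay ** distance


if __name__ == "__main__":
    print(triad("organismic"))
    for other in LEVELS:
        print(f"organismic <-> {other}: {coupling('organismic', other):g}")
```

Only the adjacent levels receive a coupling within one order of magnitude of the focal level's own, which is the sense in which the triad is treated as the appropriate context of description.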

Quantum Theory

At the end of the nineteenth century physicists were perplexed by the fact that objects heated to


the glowing point gave off light of various colors (the spectra). Calculations based on the classical physics of the time predicted that an intensely hot object should radiate ever more energy toward the blue and ultraviolet end of the spectrum, without limit. To solve the puzzle, the German scientist Max Planck proposed that light was emitted from atoms in discrete quanta, or packets of energy, according to the formula $E = hf$, where $f$ was the frequency of the observed light in hertz and $h$ was a constant unit of action (of dimensions energy/frequency). Planck calculated $h = 6.63 \times 10^{-27}$ ergs/hertz, and the value became known as Planck's constant. In 1905 Albert Einstein suggested that radiation might have a corpuscular nature. Noting that $mc^2 = E = hf$, or more generally $mv^2 = hf$ (where $v$ stands for any velocity), provides a relation between mass and frequency, he suggested that waves should have particle characteristics. In particular, since the wavelength $\lambda$ of any wave is related to the frequency by $f = v/\lambda$, then $\lambda = h/mv$, or, setting the momentum $mv = p$, $p = h/\lambda$. That is, the momentum of a wave is Planck's constant divided by the wavelength. Years later (in 1923), Prince Louis de Broglie, a French scientist, noted that the inverse relation should also hold, $\lambda = h/p$: particles have the characteristics of waves. This hypothesis was quickly verified by observing the interference patterns formed when an electron beam from an electron gun was projected through a diffraction grating onto a photosensitive screen. An interference pattern forms from which the wavelength of the electrons can be calculated, and the individual electron impact points can be observed. This, in itself, is not proof of the wave nature of particles, since waves in media made up of many particles (for instance sound waves or water waves) will produce the same effect when projected through a grating. However, even when the electrons are propagated at widely separated intervals (say one a day), the same pattern is observed. This can only occur if the particles themselves, and not merely their collective interactions, have the characteristics of waves. In other words, all of the objects of physics (hence all of the objects in the universe) have a wave-particle dual nature. This idea presented something of a problem to the physicists of the early part of the twentieth century. It can be seen that the wave nature of objects on the human scale of existence can be ignored: the wavelength of such objects will generally be less than $10^{-27}$ meters, so, for instance, if you send a stream of billiard balls through an (appropriately large) grating, the interference pattern will be too small to measure, or at least small enough to safely ignore. Classical physics, for most practical purposes, remained valid, but a new physics was necessary

for investigating phenomena on a small scale. That physics became known as particle physics.⁶
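As a rough numerical check of the claim that human-scale objects have negligible wavelengths, the sketch below evaluates $\lambda = h/mv$ in SI units. The particular masses and speeds (an electron at about 1% of the speed of light, a 0.17 kg billiard ball rolling at 1 m/s) are illustrative assumptions, not values taken from the text.

```python
H = 6.62607015e-34             # Planck's constant in J*s (= 6.63e-27 erg*s)
ELECTRON_MASS = 9.1093837e-31  # kg

def de_broglie_wavelength(mass, speed):
    """Wavelength lambda = h / (m v), in meters, for a slow (non-relativistic) particle."""
    return H / (mass * speed)

electron = de_broglie_wavelength(ELECTRON_MASS, 3.0e6)  # electron at ~1% of light speed
billiard_ball = de_broglie_wavelength(0.17, 1.0)        # 0.17 kg ball at 1 m/s

print(f"electron:      {electron:.2e} m")       # comparable to atomic spacings
print(f"billiard ball: {billiard_ball:.2e} m")  # far below 1e-27 m
```

The electron's wavelength is comparable to the spacing of atoms in a crystal, which is why diffraction is observable for electrons and hopeless for billiard balls.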

The Austrian physicist Erwin Schrödinger drew on the classical mathematics of waves (familiar, for example, from James Clerk Maxwell's theory of electromagnetic waves) to develop an appropriate wave equation, whose solution, the wave function $\psi$, is used to calculate the probability of the occurrence of the attributes of quantum particles. For example, evaluating $\psi$ at a specified position of a particle (say, the position of an electron near an atom) yields a value whose squared magnitude, $|\psi|^2$, gives the probability (strictly, the probability density) of finding the particle at that position. Then, to investigate the trajectory of an electron in relation to its atom, the equation can be solved for a multitude of positions. The locus of points of highest probability can be thought of as a most likely trajectory for the electron. There are many attributes that a quantum particle might have, among which are mass, position, charge, momentum, spin, and polarization. The probability of the occurrence of particular values for all of these attributes can be calculated from Schrödinger's wave function.
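The rule that the squared magnitude of $\psi$ gives a probability can be illustrated with the textbook "particle in a box", a system swapped in here purely for illustration (the text does not discuss it). The sketch integrates $|\psi|^2$ numerically over an interval; the function names, the box length of 1, and the midpoint-rule integration are assumptions of the sketch.

```python
import math

def psi_box(n, x, length=1.0):
    """Normalized wave function of energy level n for a particle confined to [0, length]."""
    return math.sqrt(2.0 / length) * math.sin(n * math.pi * x / length)

def probability(n, a, b, length=1.0, steps=100_000):
    """Probability of finding the particle in [a, b]: the integral of |psi|^2,
    approximated here with the midpoint rule."""
    dx = (b - a) / steps
    return sum(psi_box(n, a + (k + 0.5) * dx, length) ** 2 for k in range(steps)) * dx

whole_box = probability(1, 0.0, 1.0)        # ~1: the particle is certainly somewhere
middle_third = probability(1, 1 / 3, 2 / 3)  # the lowest level favors the middle
exact = 1 / 3 + math.sqrt(3) / (2 * math.pi)  # closed form for the middle third
print(f"{whole_box:.6f} {middle_third:.6f} {exact:.6f}")
```

Repeating the calculation over many small intervals traces out where the particle is most likely to be found, in the same spirit as the "locus of points of highest probability" described above.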

Some of these attributes (for example, mass and charge) are considered static and may be thought of as fixed and always belonging to the particle. They provide, in effect, immutable evidence for the existence of the particle. But other attributes of a particle are complementary, in that the determination of one affects the ability to measure the other. These other attributes are termed dynamic attributes and come in pairs. Foremost among the dynamic complementary attributes are position, $q$, and momentum, $p$. In 1927 Werner Heisenberg introduced his famous uncertainty principle, in which he asserted that the product of the uncertainty with which the position is measured, $\Delta q$, and the uncertainty with which the momentum is measured, $\Delta p$, can never be smaller than about Planck's constant. That is, $\Delta q \, \Delta p \gtrsim h$ (in its modern, precise form, $\Delta q \, \Delta p \ge \hbar/2$, where $\hbar = h/2\pi$). In other words, if you measure one attribute to great precision (i.e., $\Delta q$ is very small), then the complementary attribute must necessarily have a very large uncertainty ($\Delta p \gtrsim h/\Delta q$). A similar relation is true of any pair of

6 All things at the quantum level (even forces) are represented by particles. Some of these particles are familiar to most people (e.g., the electron and photon); others are less familiar (muons, gluons, neutrinos, etc.). Basically, quantum particles consist of fermions and bosons. The most important fermions are quarks and leptons. Quarks are the components of protons and neutrons, while leptons include electrons. The fermions, then, contain all the particles necessary to make atoms. The bosons carry the forces between the fermions (and their constructs). For example, the electromagnetic force is intermediated by photons, the strong nuclear force by gluons (and, between protons and neutrons, effectively by mesons), and the weak nuclear force by intermediate vector bosons. Quantum physicists have found it convenient to speak of all of the phenomena at the quantum level in terms of particles. This helps avoid confusion that might arise because of the wave/particle duality of quantum phenomena. It also gives nonphysicists a comfortable but false image of a quantum level populated by tiny billiard balls.

dynamic attributes. In the early days of quantum mechanics it was believed that this fact could be attributed to the disturbance, by the measuring process, of the attribute not being measured. This view was motivated by the desire to view quantum particles as objects that conform to the normal human concept of objects with a real existence and tangible attributes (call such a concept normal reality). Obviously, if Heisenberg's principle were true and the quantum world possessed a normal reality, the determination of a particle's position must have altered the particle's momentum in a direct manner. Unfortunately, as will be discussed below, the assumption of normal reality at quantum levels leads to the necessity of assuming faster-than-light communication among the parts of the measured particle and the measuring device. The idea that quantum objects conform to a normal reality is now out of favor with physicists (though not discarded). In any case, the principle was not intended as a recognition of the clumsiness of measuring devices. Heisenberg's uncertainty principle is a consequence of the wave nature described by Schrödinger's equation and follows directly from it. Richard P. Feynman, in one of his famous lectures on quantum electrodynamics (QED), tried to put the principle in its historical perspective (Feynman, 1985):

When the revolutionary ideas of quantum physics were first coming out, people still tried to understand them in terms of old-fashioned ideas (such as, light goes in straight lines). But at a certain point the old-fashioned ideas would begin to fail, so a warning was devised that said, in effect, 'Your old-fashioned ideas are no damned good when...' If you get rid of the old-fashioned ideas and instead use the ideas I'm explaining in these lectures [...] there is no need for an uncertainty principle.

The old-fashioned ideas were, of course, the normal physics of normal reality, and, as Feynman readily admitted, the new ideas appeared nonsensical in that reality.
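To put numbers on the uncertainty relation, the sketch below uses its modern form, $\Delta q \, \Delta p \ge \hbar/2$, to find the smallest velocity spread allowed once a position is pinned down. Both scenarios (an electron confined to roughly an atom's width, a 0.17 kg billiard ball located to within a micrometer) are hypothetical values chosen for illustration.

```python
import math

H = 6.62607015e-34         # Planck's constant, J*s
HBAR = H / (2 * math.pi)   # reduced Planck constant

def min_momentum_uncertainty(delta_q):
    """Smallest momentum spread (kg*m/s) allowed by delta_q * delta_p >= hbar / 2."""
    return HBAR / (2 * delta_q)

ELECTRON_MASS = 9.1093837e-31  # kg

# Hypothetical case 1: an electron confined to roughly the width of an atom.
dv_electron = min_momentum_uncertainty(1e-10) / ELECTRON_MASS
# Hypothetical case 2: a 0.17 kg billiard ball located to within a micrometer.
dv_ball = min_momentum_uncertainty(1e-6) / 0.17

print(f"electron velocity spread      >= {dv_electron:.1e} m/s")
print(f"billiard ball velocity spread >= {dv_ball:.1e} m/s")
```

The electron's velocity becomes uncertain by hundreds of kilometers per second, while the billiard ball's uncertainty is far too small ever to notice, which is why normal reality never seems to display the principle.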

But the predictions that quantum mechanics (which now consists of four different, mutually equivalent systems, one of which is Schrödinger's equations)⁷ makes about the results of quantum particle experiments have proven accurate for fifty years. They consistently and precisely predict the observations made by physicists of the effects produced by the interactions of single particles (from, perhaps, cosmic sources) and beams of particles produced in laboratories. The tools for the prediction of

7 Three other systems equivalent to the Schrödinger equations are Heisenberg's matrix mechanics, Dirac's transformation theory, and Feynman's sum-over-histories formulation.

experimental results are perfected and used daily by physicists. So, under the test that a

successful theory can be measured by its ability to predict, quantum theory is very successful. In

spite of all of their accuracy and predictive ability, the systems do not provide a picture of, or a feeling for, the nature of quantum reality, any more than the equation $F = ma$ provides a picture of normal reality. But quantum mechanics does provide enough information to indicate that quantum reality cannot be like normal reality. Unfortunately (or fortunately, if you are a philosopher), there are many different versions of quantum reality that are consistent with the facts, all of which are difficult to imagine. For instance, the concept of attribute, so strong and unequivocal in normal reality, is qualified at the quantum level, as indicated above. Worse, if the equations of quantum mechanics are to be interpreted as a model of the quantum level of existence, then the inhabitants of quantum reality consist of waves of probability, not objects with attributes (strange and quirky though they may be). As Werner Heisenberg said, "Atoms are not things." The best one can do is to conjure up some version of an Alice in Wonderland place inhabited by entities identifiable by their static attributes, in which all values of dynamic attributes are possible but none actually exist. Occasionally the quantum peace is

disturbed by an act that assigns an attribute and forces a value assignment (e.g., a measurement or observation occurs). Nick Herbert, in his book Quantum Reality (Herbert, 1989), has identified eight versions of quantum reality held by various physicists (the versions are not all mutually exclusive):

1) The Copenhagen interpretation (the reigning favorite), originated by Niels Bohr, Heisenberg, and Max Born, holds that there is no underlying reality. Quantum entities possess no dynamic attributes. Attributes arise as a joint product of the probability wave and the normal reality measuring device.

2) The Copenhagen interpretation part II maintains that the world is created by an act of observation made in the normal world. This has the effect of choosing an attribute and forcing the assignment of values.

3) The world is a connected whole arising from the history of phase entanglements of the quantum particles that make up the world. That is, when any two possibility waves (representative of some quantum particle) interact, their phases become entangled. Forever afterward, no matter their separation in space, whatever actuality may manifest itself in the particles, the particles share a common part. Originally the phase entanglement was thought of as just a mathematical fiction required by the form of the equations. Recent developments (Bell's theorem, see below) lend credence to the actuality of the entanglements, in the sense that those entanglements can explain, and in fact are needed to explain, experimental data. Phase entanglement was originally proposed by Erwin Schrödinger.

4) The "there is no normal reality" hypothesis (normal reality is a function of the mind). John Von Neumann felt that the problem with explaining the reality behind the experimental observation arose because the measurement instruments were treated as normally real while the particles being measured were considered as waveforms in a quantum reality. He undertook to treat the measurement devices as quantum waveforms too. The problem then became one of

determining when a quantum particle, as represented by a wave of all possible attributes and values, collapsed (took a quantum leap) to a particular set of attributes and values. He examined all of the possible steps along the path of the process of measurement and determined that there was no distinguished point on that path and that the waveform could collapse anywhere without violating the observed data. Since there was no compelling place to assume the measurement took place, Von Neumann placed it in the one place along the path that remained somewhat mysterious, the human consciousness.

5) The "there are an infinity of worlds" hypothesis. In 1957 Hugh Everett, then a Princeton University graduate student, made the startling proposal that the wave function does not collapse to one possibility but that it collapses to all possibilities. That is, upon a moment requiring the assignment of a value, every conceivable value is assigned, one for each of a multitude of universes that split off at that point. We observe a wave function collapse only because we are stuck in one branch of the split. Strange as it may seem, this hypothesis explains the experimental observations.

6) Logic at the quantum level is different from logic in normal reality. In particular, the distributive laws of logic do not apply to quantum-level entities; that is, A or (B and C) is not the same as (A or B) and (A or C). For example, suppose club Swell admits people who are rich or who come from a good family and have good connections, while club Upper Crust admits people who are rich or come from a good family, and who are also rich or have good connections. In normal reality we would find that, after one thousand people apply for membership to both clubs, the same group of people will have been accepted at both clubs. In the quantum world, not only will the memberships be different, but club Upper Crust will have members who are not rich and who do not both come from a good family and have good connections.

7) Neo-realism (the quantum world is populated with normal objects). Albert Einstein said that he did not believe that God played dice with the universe. This response was prompted by his distaste for the idea that there was no quantum reality expressible in terms of the normal reality. He didn't want to accept probability waves as in some sense real. He and de Broglie argued that atoms are indeed things and that the probabilistic nature of quantum mechanics is just the characteristic of ensembles of states of systems, as commonly presented in statistical mechanics. John Von Neumann proved a theorem stating that objects that displayed reasonable characteristics could not possibly explain the quantum facts as revealed by experimental data. This effectively destroyed the neo-realist argument until it was rescued by David Bohm, who developed a quantum reality populated by normal objects consistent with the facts of quantum mechanics. The loophole that allowed Bohm to develop his model was Von Neumann's assumption of reasonable behavior by quantum entities. To Von Neumann, reasonableness did not include faster-than-light phenomena. In order to explain the experimental data in a quantum reality populated by normal objects, Bohm found it necessary to hypothesize a pilot wave associated with each particle that was connected to distant objects and that was able to receive superluminal communications. The pilot wave was then able to guide the particle to the correct disposition to explain the experimental data. Unfortunately for the neo-realist argument, faster-than-light communication puts physicists off. As discussed below, the acceptance of a variation of that concept, at least for objects in the quantum world, may be required by recent findings.

8) Werner Heisenberg championed a combination of 1 and 2 above. He saw the quantum world as populated by potentia, or "tendencies for being." Measurement is the promotion of potentia to real status. The universe is observer-created (which is not the same as Von Neumann's consciousness-created universe).
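The failure of the distributive law in version 6 can be reproduced in a toy model, assuming the standard reading of quantum logic due to Birkhoff and von Neumann, in which propositions are subspaces of a vector space, "and" is intersection, "or" is the span of two subspaces, and "not" is the orthogonal complement. The three lines in the plane used below are an illustrative choice, not something taken from the text, and all function names are hypothetical.

```python
import itertools
import numpy as np

TOL = 1e-10  # singular values below this count as zero

def span(m):
    """Orthonormal basis (as columns) of the column space of matrix m."""
    if m.shape[1] == 0:
        return np.zeros((m.shape[0], 0))
    u, s, _ = np.linalg.svd(m, full_matrices=False)
    return u[:, : int(np.sum(s > TOL))]

def complement(u):
    """'not': the orthogonal complement of the subspace spanned by u."""
    return span(np.eye(u.shape[0]) - u @ u.T)

def join(u, v):
    """'or': the smallest subspace containing both (the span of their union)."""
    return span(np.hstack([u, v]))

def meet(u, v):
    """'and': the intersection, computed as not(not u or not v)."""
    return complement(join(complement(u), complement(v)))

def same(u, v):
    """Two subspaces are equal exactly when their orthogonal projectors agree."""
    return np.allclose(u @ u.T, v @ v.T, atol=1e-8)

def line(x, y):
    """The line through the origin in direction (x, y)."""
    return span(np.array([[x], [y]], dtype=float))

# Classical (Boolean) logic: A or (B and C) == (A or B) and (A or C), always.
classical_ok = all(
    (a or (b and c)) == ((a or b) and (a or c))
    for a, b, c in itertools.product((False, True), repeat=3)
)

# Subspace logic in the plane: A = diagonal, B = x-axis, C = y-axis.
A, B, C = line(1, 1), line(1, 0), line(0, 1)
lhs = join(A, meet(B, C))           # A or (B and C): "B and C" is just the origin
rhs = meet(join(A, B), join(A, C))  # (A or B) and (A or C): both joins are the plane

print("classical distributive law holds:", classical_ok)
print("dimension of lhs:", lhs.shape[1], "| dimension of rhs:", rhs.shape[1])
print("lhs equals rhs:", same(lhs, rhs))
```

The left side is the diagonal line alone while the right side is the whole plane, so the right-hand "club" admits members the left-hand one does not, which is the asymmetry described in the club example.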

Albert Einstein did not like the concept of a particle consisting of a wave of probabilities. In 1935, in collaboration with Boris Podolsky and Nathan Rosen, he proposed a thought experiment that would show that, even if quantum mechanics could not be proven wrong, it was an incomplete theory. The idea was to create a hypothetical situation in which it would have to be concluded that there existed quantum attributes/features that were not predictable by quantum mechanics. The thought experiment is known as the EPR experiment.

The EPR experiment consists of the emission, in opposite directions from some source, of two momentum-correlated quantum particles. Correlated particles (correlated in all attributes, not just in momentum) may be produced, for example, by the simultaneous emission of two particles from a given energy level of an atom. Call the paths that the first and second particles take the right hand and left hand paths, respectively. At some point along the right hand path there is a measuring device that can measure the momentum of the first particle. On the left hand path there is an identical measuring device, twice as far from the source as the first device. When the particles are simultaneously emitted along their respective paths, according

to quantum theory, they both consist of a wave of probability that will not be converted into an

actuality until they are measured. At the point of being measured their probability wave is

collapsed, or the momentum attribute is forced to take a value, or the consciousness of the

observer assigns a momentum value (e.g. a human is monitoring the measuring device), or the

universe splits, or some other such event takes place to fix the momentum. Now consider the

events of the experiment. The particles are emitted at the same time in opposite directions. Soon

the first particle (on the right hand path) is measured and its momentum is fixed to a particular

value. What is the status of the second particle at this point in time? According to quantum

mechanics it is still a wave of probability that won't become actualized until it encounters the

measuring device (still off in the distance). And yet it is known that the left hand measuring device will produce the momentum corresponding to (equal in magnitude and opposite in direction to) the one the right hand device has already produced, and when the second particle finally gets to that measuring device it does show the expected momentum. Two possibilities present themselves: either the result of the measurement of the first particle is somehow communicated to the second particle in time for it to assign the correct value to its momentum attribute, or the second particle already 'knows', in some sense, which actual momentum to exhibit when it gets to the measuring device. The particles are moving at or near the speed of light, so the former possibility requires superluminal communication and must be rejected. But then the quantum particle must contain knowledge that is not accounted for by quantum theory. In fact it must contain a whole list of values to assign to attributes upon measurement, because it can't possibly know which attribute will be measured by the measuring device it encounters. So, Einstein concluded, quantum theory is incomplete, since it says nothing about such a list.

Einstein's rejection of superluminal communication is equivalent to an assumption of locality. That is, any communication that takes place must happen through a chain of mediated connections and may not exceed the speed of light. Electric, magnetic, and gravitational fields may provide such mediation, but the communication must occur through distortions of those fields, which propagate no faster than light. In 1964 John Stewart Bell, an Irish physicist attached to CERN, devised a means of using a real version of the EPR thought experiment to test the assumption of locality. He substituted two beams of polarization-correlated (but randomly polarized) photons for the momentum-correlated particles of the EPR experiment.

By 1972 John Clauser at the University of California at Berkeley, using a mercury source for the beams, obtained results indicating that the quantum mechanics predictions were correct. In 1982 Alain Aspect of the University of Paris performed a more rigorous version of the experiment. The validity of quantum theory was upheld and the assumption of locality in quantum events shown to be wrong. That quantum reality must be nonlocal is known as Bell's theorem. It is a startling theorem that says that unmediated and superluminal or instantaneous communication occurs between quantum entities no matter their separation in space (although these influences cannot be used to send a usable signal faster than light). It is so startling that many physicists do not accept it. It does little to change the eight pictures of quantum reality given above, except to extend the scope of what might be considered a measurement situation from the normal reality description of components to include virtually everything in the universe. The concept of phase entanglement is promoted from mathematical device to active feature of quantum reality.
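Bell's argument can be made concrete with the CHSH form of his inequality (due to Clauser, Horne, Shimony, and Holt), the version commonly tested with photons; the text does not name it, so it is swapped in here for illustration. Any local "list of values" of the kind Einstein's argument requires keeps the combination $|S|$ at or below 2, while quantum mechanics predicts $2\sqrt{2}$ at suitable analyzer angles. The correlation formula assumes the entangled photon state $(|HH\rangle + |VV\rangle)/\sqrt{2}$, and the angles are the standard illustrative choice.

```python
import itertools
import math

def quantum_correlation(a_deg, b_deg):
    """Predicted average product of the two photons' results (+1 / -1) for
    polarization analyzers at angles a and b, for the state (|HH> + |VV>) / sqrt(2)."""
    return math.cos(2 * math.radians(a_deg - b_deg))

def chsh(E, a, a2, b, b2):
    """CHSH combination S = E(a,b) - E(a,b2) + E(a2,b) + E(a2,b2)."""
    return E(a, b) - E(a, b2) + E(a2, b) + E(a2, b2)

s_quantum = chsh(quantum_correlation, 0.0, 45.0, 22.5, 67.5)

def local_max():
    """Best |S| for a local 'list of values' model: each pair carries fixed
    outcomes A1, A2 (one side's two settings) and B1, B2 (the other side's).
    Random mixtures of such lists are averages, so they cannot exceed this maximum."""
    best = 0
    for A1, A2, B1, B2 in itertools.product((1, -1), repeat=4):
        best = max(best, abs(A1 * B1 - A1 * B2 + A2 * B1 + A2 * B2))
    return best

print(f"quantum prediction:   S = {s_quantum:.4f}")
print(f"local list of values: |S| <= {local_max()}")
```

Experiments of the kind performed by Clauser and Aspect measure values of $S$ above 2, which no list of predetermined values carried by the particles can produce.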

Of course, we see none of this in our comfortable, normal reality. Objects are still constrained to

travel below the speed of light. Normal objects communicate at subluminal rates. Attributes are

infinitely measurable and their permanence is assured even though they may go for long periods

of time unobserved and uncontemplated. We can count on our logic for solving our problems; it will always give the same correct answer, completely in keeping with the context of our normal reality. But we are constructed from quantum stuff. How can we not share the attributes of that level of existence? And we are among the constituents of the Milky Way (although we never think of ourselves as such); what role do we play in the nature of its existence? Does such a

question even make sense? It might help to look more closely at the nature of levels of existence.

Levels

Biologists, physicists, astronomers, and other scientists, when describing their work, often

qualify their statements by specifying the scale at which their work occurs. Thus it's common to

hear the 'quantum level', the 'atomic level', the 'molecular level', the 'cellular level', the 'planetary

level' or the 'galactic level' mentioned in their work. And they might further qualify things by

mentioning the time frame in which the events of their discipline occur, e.g. picoseconds,

microseconds, seconds, days, years, millions of years, or billions of years.

The fact that such specifications must be made is not often noted; it's just taken as the nature of

things that, for example, objects and rules at the quantum level don't have much to do with the

objects and rules at the cellular level, and the objects and rules at the cellular level don't have

much to do with objects and rules at the galactic level, and so on. A bit of reflection, however, reveals that it's really quite an extraordinary fact! All of these people are studying exactly the

same universe, and yet the objects and rules being studied are so different at the different levels,

that an ignorant observer would guess that they belonged to different universes. Further, when

the level at which humankind exists is placed on a scale representing the levels that are easily

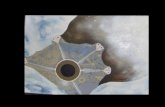

identified, it is very nearly in the center (see figure 1).

Three reasons why that might be come immediately to mind: 1) we're in the center by pure chance, 2) the universe was created around us (and therefore probably for us), or 3) it only looks

like we're in the center because we can 'see' about the same distance in both directions. Is our

perception of these levels, embedded in the scale of being, just an artifact of our failure to see the

underlying scheme (i.e. the universe can be explained by a few immutable object types together

with their rules of interaction, so that the different objects and rules that we perceive at different

levels are simply a complex combination of those primitives), or were these levels set up in some way for our support, or do these levels (and their objects and rules) have a real and, in some sense, independent existence? The answer we give is part of the hypothesis that will be made below: levels exist and contain rules not derived from surrounding levels of existence, and

the further removed any two levels are, the less the one can be interpreted or explained in terms of the other.

If accepted, this answers the question of why we seem to be at the center of the scale of being;

the further removed a level from the human level of existence, the less that level has in common

with the human level and the less humans are able to interpret what occurs at that level in familiar

terms. For sufficiently remote levels, no interpretation can be made. We cannot 'see' beyond what

our minds can comprehend. So, for example, we don't understand quantum reality because it is on

the very edge of that to which we as humans can relate. For the same reason, at the other end of

the scale, we can't conceive of all that is as the universe, and at the same time, the universe as all

that is, nor do our minds comfortably deal with infinity and eternity. If we were a quantum

entity, we would undoubtedly express the same wonder at the impossible nature of the strange subluminal world populated by unconnected, virtually uncommunicative objects with frozen attributes that exist at the very edge of the ability to comprehend. But we shall pull back from such tempting speculations. Our purpose is not to investigate the concept of levels in general. We will leave many questions unanswered. We are mainly interested in the fact of the existence of levels and the effect of that on what we call intelligence. We take up that problem in subsequent sections of this paper. It would be good, however, to have a firmer grasp of how and why levels occur. Why should the universe be hierarchically structured, and what process or processes lead to the semi-permeability of objects and rules across levels?

Figure 1: New scale of being (a logarithmic length scale from about $10^{-25}$ to $10^{25}$ meters, locating structures from the ranges of the nuclear forces and atomic nuclei, through atoms, molecules, cells, and human-sized animals, to towns, the Earth, the Sun, the Solar System, the Milky Way, and clusters of galaxies).

Chaos

Mathematics was invented as an aid to reasoning about objects and processes in the world. Its

main feature is abstraction and its main implementation mechanism is deduction from a set of

axioms. In the beginning the axioms were restricted to self-evident, non-reducible truths about the world. Tools of proof were restricted to real-world artifacts such as the compass and straightedge.

Eventually it was discovered that the restriction to self-evident truths was not fundamental to the

process of deduction, and finite tools were not sufficient for the proof of all theorems that could

otherwise be shown to be true. It was found that different axiomatic systems could give rise to

diverse and interesting mathematical structures, some of which could be applied to real world

problems. Throughout all of its history, the axiomatic method remained the unquestioned

approach of mathematics. The cracks in that foundation, evidenced by competing yet seemingly valid axiomatic systems, spurred the attempt to find an encompassing set of axioms upon which to base all of mathematics. That search came to an abrupt end with the publication of Goedel's theorem (1931), which showed that no such system could exist: any consistent axiomatic system rich enough to express elementary arithmetic contains statements that can be neither proved nor disproved within it. Mathematics had moved from an aid to reckoning to acceptance as a main revealer of truth about the world; it was considered the handmaiden or even the queen of the sciences. But like a skyrocket that rises from a bottle to the sky and then explodes into a thousand beautiful whirligigs, mathematics, while a beautiful sight to behold, no longer had, nor does it have, a discernible direction. The importance of Goedel's theorem should not be overlooked; it does not just apply to abstract mathematics. It applies to any codified (formal) system powerful enough to express arithmetic, and so denies the completeness of highly mathematical world models and loosely argued cosmologies alike.

When a system is determined to be incomplete or inconsistent for some specific reason, the incompleteness can often be corrected by enlarging the system to include appropriate axioms (and an inconsistency by revising them). This is reminiscent of the way in which one scientific theory supplants another. Unfortunately, any such measure simply creates another system to which Goedel's theorem applies as strongly as the first. To avoid an infinite regress it becomes

necessary at some point to accept a world system and the fact that, in it, there will be

unexplainable (non-reducible) or contradictory phenomena. We will argue below that this is not

simply a mathematical conundrum but a feature inherent in a creative hierarchically structured

universe. In preparation for that argument we first discuss an obvious manifestation of the inexplicable in the world system that most humans find comfortable, namely the phenomena subject to description (not explanation) in the mathematics of chaos theory.

Geometry, algebra, calculus, logic, computability theory, probability theory, and the various

other areas and subdivisions of mathematics that have been developed over the years serve to

describe and aid in the development of the various hard and soft sciences. As the sciences have

come to treat more complex systems (e.g. global weather systems, the oceans, economic systems

and biological systems to name just a few) the traditional mathematical tools have proved, in

practice, to be inadequate. With the advent of computers a new tactic emerged. The systems

could be broken down into manageable portions. A prediction would be calculated for a small

step ahead in time for each piece, taking into account the effects of the nearby pieces. In this

manner the whole system could be stepped ahead as far as desired. A shortcoming of the

approach is the propagation of error inherent in the immense amount of calculation, in the

approximation represented by the granularity of the pieces, and in the necessary approximation

of nonlocal effects. To circumvent these problems, elaborate precautions consisting of

redundancy and calculations of great accuracy have been employed. To some extent such tactics are successful, but they always break down at some point in the projection. The hope has been that with faster, more accurate computers the projections could be extended as far as desired.
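The piece-by-piece stepping approach described above can be sketched with a one-dimensional heat-diffusion model, chosen here purely for illustration (the text does not specify a model): the system is cut into cells, and each time step updates every cell from itself and its two neighbors. The grid size, the step parameter `alpha`, and the function name `step` are assumptions of the sketch.

```python
import numpy as np

def step(u, alpha):
    """One explicit time step of 1-D diffusion: each cell is updated from itself
    and its two neighbors (periodic boundaries). Stable only for alpha <= 0.5."""
    return u + alpha * (np.roll(u, 1) - 2 * u + np.roll(u, -1))

u = np.zeros(100)      # the "system", cut into 100 pieces
u[50] = 1.0            # all the heat starts in one cell
for _ in range(500):   # step the whole system ahead, one small step at a time
    u = step(u, alpha=0.25)

print(f"total heat after 500 steps: {u.sum():.12f}")
print(f"peak temperature:           {u.max():.4f}")
```

Raising `alpha` above 0.5 (taking larger time steps on the same grid) makes this explicit scheme blow up, one concrete way in which step size and granularity limit how far such projections can be pushed.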

In 1980 Mitchell J. Feigenbaum published a paper (Feigenbaum, 1980) in which he presented

simple functionally described systems whose stepwise iterations (i.e. the output of one iteration

serves as the input to the next iteration in a manner similar to the mechanism of the models

mentioned above) produced erratic, unpredictable trajectories. Beyond a certain point in the iteration, the points take on a verifiably random nature. That is, the points

produced satisfy the condition for a truly random sequence of numbers.⁸ The resulting unpredictability of such systems arises not as an artifact of the accumulation of computational inaccuracies but rather as a fundamental sensitivity of the process to the initial conditions of the system.⁹ Typically these systems display behavior that depends upon the value of parameters in

that system. For example, the iteration $x_{i+1} = a x_i (1 - x_i)$, where $0 < x_0 < 1$, has a single solution for each $a < 3$ (that is, the iteration settles down so that, above some value of $i$, $x_i$ remains the same). At $a = 3$ there is an onset of doubling of solutions (i.e., beyond a certain $i$, $x_i$ alternates between a finite set of values; the number of values in that set doubles at successive values of $a$ as $a$ increases). Above $a = 3.5699\ldots$ the system quits doubling and begins to produce random values (apart from occasional windows of periodic behavior).
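The period-doubling behavior just described can be observed numerically. The sketch below iterates the map, discards a long transient, and counts how many distinct values (rounded to six decimals) the orbit keeps visiting. It also forms the ratio of successive doubling intervals that the text goes on to describe, using commonly tabulated approximate bifurcation values rather than computing them. The starting point, transient length, rounding, and parameter values are assumptions chosen for illustration.

```python
def logistic(a, x):
    """One step of the iteration x -> a * x * (1 - x)."""
    return a * x * (1.0 - x)

def attractor_size(a, x0=0.2, transient=10_000, sample=64):
    """Iterate past the transient, then count the distinct values (rounded to
    6 decimals) the orbit visits: 1 for a fixed point, 2 after one doubling, ..."""
    x = x0
    for _ in range(transient):
        x = logistic(a, x)
    seen = set()
    for _ in range(sample):
        x = logistic(a, x)
        seen.add(round(x, 6))
    return len(seen)

for a in (2.8, 3.2, 3.5, 3.9):
    print(f"a = {a}: {attractor_size(a)} distinct value(s)")

# Approximate values of a at which the period doubles to 2, 4, 8, 16, 32, 64
# (commonly tabulated figures, assumed here rather than computed).
BIFURCATIONS = [3.0, 3.449490, 3.544090, 3.564407, 3.568759, 3.569692]

# Ratio of each doubling interval to the next one.
ratios = [
    (BIFURCATIONS[n] - BIFURCATIONS[n - 1]) / (BIFURCATIONS[n + 1] - BIFURCATIONS[n])
    for n in range(1, len(BIFURCATIONS) - 1)
]
print([round(r, 3) for r in ratios])
```

The counts go 1, 2, 4 and then jump to dozens of distinct values in the chaotic regime, while the interval ratios settle near 4.67, close to the universal constant 4.6692.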

If you denote by $\lambda_n$ the value of $a$ at which the solutions double for the $n$th time, then the ratio $(\lambda_n - \lambda_{n-1})/(\lambda_{n+1} - \lambda_n)$ approaches $4.6692\ldots$ as $n$ grows. The value 4.6692 and another value, 2.5029, are universal constants that have been shown to be associated with a broad class of such doubling systems. The points at which

doubling occurs are called points of bifurcation. They are of considerable interest because, for real systems that behave according to such an iterative procedure,¹⁰ there is an implication that the system might move arbitrarily to one of two or more solutions. The above example has one dimension, but the same activity has been observed for many-dimensional systems and apparently occurs for systems of any dimension. When the points generated by a two-dimensional system are plotted on a coordinate system (for various initial conditions), they form constrained but unpredictable, and often beautiful, trajectories. The space to which they are constrained often exhibits coordinates to which some trajectories are attracted, sometimes

8 For example a random sequence on an interval is random if the probability that the process will generate avalue in any given subinterval is the same as the probability of it generating a number in any other subintervalof the same length.

9 Consider the following simple system: x 0 = pi 3, x i+1 = 10*x i trunc(10*x i), where trunc means todrop the decimal part of the argument. The sequence of numbers generated by the indicated iteration arenumbers between 0 and 1 where the nth number is the decimal portion of pi with the first n digits deleted.This is a random sequence of numbers arising from a deterministic equation. Changing the value of x 0,however slightly, inevitably leads to a different sequence of numbers.

10 For example, the equation $x_{i+1} = a x_i (1 - x_i)$ is called the logistic function (or logistic map) and can serve as a model for the growth of populations.


providing a simple stable point to which the points gravitate or around which the points orbit. At

other times trajectories orbit around multiple coordinates in varying patterns.
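The period doubling and the shrinking gaps between doubling points can be checked numerically. The Python sketch below is an illustration under our own assumptions, not the author's code: it uses the logistic map of footnote 10, and the names `attractor_size` and `feigenbaum_ratios`, the sample values of a and the rounding tolerance are hypothetical choices. The second function substitutes a standard shortcut for locating the bifurcation points directly: Newton's method finds the "superstable" parameter values, where the cycle passes through the map's maximum. These interleave the bifurcation points, and their gaps shrink by the same limiting ratio.

```python
import math


def attractor_size(a, x0=0.2, transient=100_000, sample=256, digits=6):
    """Count the distinct values that x -> a*x*(1 - x) settles into.

    A long transient is discarded, then `sample` further iterates are rounded
    to `digits` decimal places. A small count is the period of a stable
    cycle; a count near `sample` indicates chaotic, non-repeating behavior.
    """
    x = x0
    for _ in range(transient):
        x = a * x * (1 - x)
    seen = set()
    for _ in range(sample):
        x = a * x * (1 - x)
        seen.add(round(x, digits))
    return len(seen)


def feigenbaum_ratios(max_doublings=12):
    """Estimate (lambda_n - lambda_{n-1}) / (lambda_{n+1} - lambda_n).

    Works with the conjugate map x -> c - x*x, where c = (a*a - 2*a) / 4,
    and uses Newton's method to find the c at which the critical point 0
    lies on a cycle of period 2**i (a superstable cycle). Each c is mapped
    back to the logistic parameter a = 1 + sqrt(1 + 4*c) before the ratios
    of successive gaps are formed.
    """
    c_older, c_old, delta = 0.0, 1.0, 3.2   # periods 1 and 2; crude first ratio
    a_values = [1 + math.sqrt(1 + 4 * c) for c in (c_older, c_old)]
    for i in range(2, max_doublings + 1):
        c = c_old + (c_old - c_older) / delta   # extrapolated starting guess
        for _ in range(10):                     # Newton refinement
            x, dx = 0.0, 0.0                    # f^k(0) and its derivative in c
            for _ in range(2 ** i):
                dx = 1 - 2 * x * dx
                x = c - x * x
            c -= x / dx
        delta = (c_old - c_older) / (c - c_old)
        c_older, c_old = c_old, c
        a_values.append(1 + math.sqrt(1 + 4 * c))
    return [(a_values[n] - a_values[n - 1]) / (a_values[n + 1] - a_values[n])
            for n in range(1, len(a_values) - 1)]


for a in (2.8, 3.2, 3.5, 3.55, 3.9):
    print(f"a = {a}: {attractor_size(a)} distinct values")
print("ratio estimate:", feigenbaum_ratios()[-1])
```

With these settings the counts for a = 2.8, 3.2, 3.5 and 3.55 should be 1, 2, 4 and 8, a = 3.9 should give a count near the sample size, and the last ratio should agree with 4.6692 to several decimal places. The conjugate map is used only because its critical point sits at 0, which keeps the Newton iteration simple.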

More spectacularly, some trajectories gravitate toward what have been called strange attractors. Strange attractors are objects with fractional dimensionality. Such objects have been termed fractals by Benoit B. Mandelbrot, who developed a geometry based on the concept of fractional dimensionality (Mandelbrot, 1977). A fractal of dimension 0 < d < 1 consists of a set of points that might best be described as a set of points trying to be a line. An example of such a set is the Cantor set, which is constructed by taking a line segment, removing its middle third, and repeating that operation recursively on all the line segments generated (stipulating that the remaining sections retain their endpoints). In the limit an infinite set of points remains that exhibits a fractal dimensionality of log 2 / log 3 ≈ 0.6309. Objects of varying dimensionality in the range [0,1] may be constructed by varying the size of the chunk removed from the middle of each segment. An object with dimensionality 1 < d < 2 can be described as a line trying to become a plane. It consists of a line so contorted that it can have infinite length yet still be confined to a finite area of a plane. An example of such an object is the Koch snowflake. The Koch snowflake is generated by taking an equilateral triangle and deforming the middle third of each side outward into two sides of another equilateral triangle. After the first deformation a Star of David is formed. Each of the twelve sides of the Star of David is deformed in the same manner, the sides of the resulting figure are deformed in turn, and so on. In the limit a snowflake-shaped figure with an infinitely long perimeter and a fractal dimensionality of log 4 / log 3 ≈ 1.2619 results. Beyond a few iterations the snowflake does not change much in appearance.
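Both constructions, and the dimensions quoted for them, can be reproduced in a few lines. In this sketch (our own illustration; all function names are hypothetical) the dimension is the similarity dimension D = log N / log(1/r) of a figure built from N copies of itself scaled by r: the Cantor set has N = 2 and r = 1/3, and each side of the Koch snowflake has N = 4 and r = 1/3. Removing a middle fraction f instead of one third leaves two pieces scaled by (1 - f)/2, which is how dimensions anywhere in (0, 1) arise.

```python
import math
from fractions import Fraction


def cantor_intervals(depth, removed=Fraction(1, 3)):
    """Closed intervals left after `depth` rounds of deleting the open middle
    fraction `removed` from every remaining segment of [0, 1]."""
    intervals = [(Fraction(0), Fraction(1))]
    for _ in range(depth):
        next_level = []
        for lo, hi in intervals:
            keep = (hi - lo) * (1 - removed) / 2  # length of each surviving piece
            next_level.append((lo, lo + keep))
            next_level.append((hi - keep, hi))
        intervals = next_level
    return intervals


def similarity_dimension(pieces, scale):
    """D = log N / log(1/r) for a figure made of N copies scaled by r."""
    return math.log(pieces) / math.log(1 / float(scale))


def generalized_cantor_dimension(removed):
    """Dimension of the Cantor-like set obtained by removing the middle
    fraction `removed` (0 < removed < 1) of every segment at every step."""
    return similarity_dimension(2, (1 - removed) / 2)


def koch_perimeter(depth, side=1.0):
    """Perimeter of the Koch snowflake after `depth` deformations: every
    segment becomes 4 segments one third as long, so it grows by 4/3."""
    return 3 * side * (4 / 3) ** depth


level5 = cantor_intervals(5)
print(len(level5), sum(hi - lo for lo, hi in level5))  # 32 pieces, total length (2/3)**5
print(similarity_dimension(2, Fraction(1, 3)))          # Cantor set
print(similarity_dimension(4, Fraction(1, 3)))          # Koch curve
print(generalized_cantor_dimension(0.5))                # removing the middle half
print(koch_perimeter(20))                               # perimeter keeps growing
```

Exact rational arithmetic is used for the Cantor intervals so that the total remaining length, (2/3) to the power of the depth, can be checked without rounding error; it shrinks toward zero even though the number of pieces doubles at every step.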

Many processes in the real world display the kind of abrupt change from stability to chaos that Feigenbaum's systems display: turbulence, weather, population growth and decline, material phase changes (e.g. ice to water), the dispersal of chemicals in solution, and so forth. Many objects of the real world display fractal-like dimensionality. Coastlines consist of convolutions within convolutions within convolutions, and so on. Mountains consist of randomly shaped lumps on randomly shaped lumps on randomly shaped lumps, and so on. Cartographers have long noted that coastlines and mountains appear much the same no matter the scale. This happens because at large scales detail is lost and context is gained, while at small scales detail is gained but context is lost. In other words, at every scale shorelines look convoluted and mountains bumpy. Given that the chaotic processes described above give rise to points, lines, orbits, and fractal patterns, it might be expected that real-world systems could be described in terms of their chaotic tendencies, and indeed that is the case. The new area of research in mathematics and physics arising from these discoveries has come to be known as chaos theory. Chaos theory holds both promise and peril for model builders. Mathematical models whose iterations display the erratic behavior that natural systems display capture to some extent the nature of the bounds, constraints and attractors to which those systems are subject. On the other hand, the fact that any change, however slight (quite literally), in the initial conditions from which the system starts results in totally different trajectories implies that such models are of little use as predictors of a future state of the system. Further, it may be very difficult, perhaps even impossible, to extract the equations of chaos (however simple they may be) from observation of the patterns to which those equations give rise. Whatever the case, the nature of the activity of these systems has profound implications for the sciences.
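The sensitivity to initial conditions can be seen directly in the shift map of footnote 9. In the sketch below (an illustration under stated assumptions: the first 50 decimals of π are hard-coded, and the divergence threshold of 0.01 is an arbitrary choice) one orbit is computed exactly with rational arithmetic, while the other uses ordinary floating point, whose starting value differs from π - 3 only around the sixteenth decimal place.

```python
import math
from fractions import Fraction

# The first 50 decimals of pi; x0 = pi - 3 truncated to 50 places.
PI_DECIMALS = "14159265358979323846264338327950288419716939937510"


def shift_map(x0, steps):
    """Orbit of x -> 10*x - trunc(10*x), the system of footnote 9."""
    orbit = [x0]
    x = x0
    for _ in range(steps):
        x = 10 * x - math.trunc(10 * x)
        orbit.append(x)
    return orbit


exact = shift_map(Fraction(int(PI_DECIMALS), 10 ** len(PI_DECIMALS)), 40)
approx = shift_map(math.pi - 3, 40)  # float start: error of roughly 1e-16

# The leading digit of each exact iterate reproduces the decimals of pi.
digits = "".join(str(math.trunc(10 * x)) for x in exact[:40])

# First step at which the floating-point orbit is visibly wrong.
first_divergence = next(n for n, (e, f) in enumerate(zip(exact, approx))
                        if abs(float(e) - f) > 0.01)
print(digits)
print("orbits diverge at step", first_divergence)
```

The floating-point orbit tracks the exact one for only a dozen or so steps and is unrelated to it thereafter, because each step multiplies the initial error by ten. The exact orbit is not immune either: its 50-digit starting value is itself a slight change to π - 3, and after 50 steps that orbit collapses to 0 while the true sequence continues indefinitely.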

If Pierre-Simon Laplace turns out to have been correct in assuming that the universe is deterministic (which the tenets of chaos theory do not preclude, but which they do argue against 11), he was wrong when he conjectured that all he needed was enough calculating ability and an initial state to determine the entire past and future history of the universe. His major error was in assuming that initial conditions could ever be known to the degree necessary for such calculations. As an example, imagine a frictionless game of billiards played on a perfect billiard table. The player, who has absolutely perfect control over his shot and can

11 We reject determinism mainly because of the butterfly effect, which states that the flapping of the wings of a butterfly in Tokyo might affect the weather at a later date in New York City. This is because weather systems at the latitudes of New York City and Tokyo travel from west to east, so that the weather in New York can be thought of as arising from the initial conditions of the atmosphere over Tokyo. As another example, consider the old saying, "for want of a nail the shoe was lost, for want of a shoe the horse was lost, for want of a horse the battle was lost, and for want of the battle the kingdom was lost." Consider the nail in the horse's shoe. For some coefficient of friction between the nail and the hoof, the shoe would have been retained. The exact value of that coefficient might have depended on the state of a few molecules at the nail/hoof interface. Suppose those molecules were behaving in a manner that had brought them to a point of bifurcation that would result in either an increase or a decrease in the coefficient of friction. In the one case the shoe would have been lost and in the other it would have been retained. The loss of the nail would then have been a truly random event that propagated its way up to the level of events in human civilization. If the battle in question had been the battle of Hastings, the strong French influence in the language you are reading might well have been missing.


calculate all of the resulting collisions, sends all of the balls careening around the table. If he neglects to take into account the effects of a single electron at the edge of the galaxy, his calculations will begin to diverge from reality within one minute (Crutchfield et al, 1986). Recognizing that prediction is impossible even in a theoretically deterministic universe decouples the concepts of determinism and predictability, and of describability and randomness. Further, chaotic systems have an arrow of time built into them. The observer of such a system at some given point in time cannot simply extrapolate backward to the initial conditions, nor can he (so far as we know) divine the underlying equations from the character and relationships of the events the system generates. The laws that underlie those events are obscured and can be determined only by simulated trial and error, the judicious use of analogy, description in terms of probability, or blind luck. There is a strong sense that something of Goedel's theorem is revealing itself here. Just as in any consistent formal system rich enough to express arithmetic there are true statements that cannot be proved within the system, there are real deterministic systems in which observations can be made that could not have been predicted. Perhaps the most important result of the discoveries in chaotic processes is the growing realization among scientists that the linear, closed-system mathematics that has served so well for describing the universe during the last two hundred and fifty years, and which gave rise to the metaphor of a clockwork universe, describes only exceptionally well-behaved and relatively rare (but very visible) phenomena of that universe. It is becoming apparent that it may not be possible to derive a neat linear, closed-system model for everything that exhibits a pattern. It is likely that new nonlinear, open-system approaches that rely heavily on computers will be required to proceed beyond the current state of knowledge, and that in explaining phenomena in terms of laws, those laws may have to be constrained to the levels of existence of the systems they describe rather than reduced to more fundamental laws at more fundamental levels.
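The billiard argument can be given a rough quantitative form. A standard measure not used in the text is the Lyapunov exponent Λ, the average exponential rate at which neighboring trajectories separate: an initial uncertainty $\epsilon_0$ grows roughly as $\epsilon_0 e^{\Lambda t}$, so the usable prediction horizon is about $\ln(\Delta/\epsilon_0)/\Lambda$ steps for a tolerance Δ. The sketch below assumes the logistic map of footnote 10 with a = 3.9 (chaotic) and a = 3.2 (periodic); the function names and parameter values are hypothetical. Because the horizon grows only logarithmically, each tenfold improvement in knowledge of the initial state buys the same small, fixed number of extra steps.

```python
import math


def logistic(a, x):
    return a * x * (1 - x)


def lyapunov_exponent(a, x0=0.3, transient=1_000, steps=100_000):
    """Average of ln|f'(x)| = ln|a*(1 - 2x)| along an orbit of the logistic map.

    Positive values mean nearby orbits separate exponentially (chaos);
    negative values mean they converge onto a stable cycle.
    """
    x = x0
    for _ in range(transient):
        x = logistic(a, x)
    total = 0.0
    for _ in range(steps):
        total += math.log(max(abs(a * (1 - 2 * x)), 1e-300))  # guard log(0)
        x = logistic(a, x)
    return total / steps


def prediction_horizon(exponent, initial_error, tolerance):
    """Steps until an error growing like initial_error*exp(exponent*t)
    reaches `tolerance` (meaningful only for a positive exponent)."""
    return math.log(tolerance / initial_error) / exponent


def steps_until_divergence(a, x0, offset, tolerance=0.1, max_steps=10_000):
    """Iterate two orbits that start `offset` apart; count the steps until
    they differ by more than `tolerance` (None if they never do)."""
    x, y = x0, x0 + offset
    for n in range(max_steps):
        if abs(x - y) > tolerance:
            return n
        x, y = logistic(a, x), logistic(a, y)
    return None


lam = lyapunov_exponent(3.9)
for error in (1e-6, 1e-9, 1e-12):
    print(error, round(prediction_horizon(lam, error, 0.1), 1),
          steps_until_divergence(3.9, 0.3, error))
```

The estimated horizon and the directly measured divergence step need not agree closely for any single pair of orbits, since local stretching varies along the attractor; the estimate describes the average behavior.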

It is not surprising that the investigation of chaotic activity waited on the development of fast computers and high-resolution graphics display devices. The amount of calculation required to get a sense of chaos is staggering, and the structures revealed can only be appreciated graphically with the use of computers. But chaos theory has implications for computing as well. Computers have been around for a long time. The first machine that can truly be said to have been a computer was the Analytical Engine, designed in England in the mid-nineteenth century by Charles Babbage. The machine was never finished, but Ada Augusta Byron, daughter of Lord Byron, who held the title Countess of Lovelace, wrote programs for it, making her the first computer programmer. The Analytical Engine, though incomplete, was a showpiece that impressed and intimidated people. The countess is said to have stated (McCorduck, 1979):

"The Analytical Engine has no pretensions whatever to originate anything. It can do whatever we know how to order it to perform."

Since then, those sentiments have often been repeated to reassure novice programmers and others whose lack of understanding causes them to see the computer as an intimidating, intelligent, and perhaps malevolent entity. More experienced programmers, however, find that the machine is truly an ignorant brute, incapable of doing anything on its own. And so the myth is propagated across generations. Chaos theory refutes the myth. In pursuing chaotic processes the machine can truly create, though in a mechanical manner that seems to lack purpose. We will argue that the seeming lack of purpose is a human prejudice based on our concept of what constitutes purpose, and that chaotic processes may very well be the mark of a creative universe.

The structure revealed in chaotic processes is statistically accessible. Although the sequence and positions of the points generated within the constraints of the system are not predictable, the system can be treated probabilistically. Taking a two-dimensional space as an example, a grid can be imposed upon the shape/form generated by the system and the points treated as forming a histogram over the cells of that grid. In terms of levels, the points may be seen as occupying one level and the form defined by the points as occupying the next higher level. The theory then has potential for the probabilistic prediction of system activities. This appeal to statistical description is not frivolous but is in fact essential to any description of the universe, as David Layzer indicates in the statement of his proposed cosmological principle.
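The histogram idea can be sketched with any two-dimensional chaotic system. The example below assumes the Hénon map, (x, y) -> (1 - 1.4x² + y, 0.3x), a standard textbook system that the text itself does not mention; the grid bounds, bin count and sample sizes are arbitrary choices, and the function names are hypothetical. Two orbits started from different initial conditions pass through entirely different sequences of points, yet their histograms nearly coincide: the individual points are unpredictable, while the cell frequencies, read as the probability that the next point lands in a given cell, are stable.

```python
def henon_orbit(x, y, n, transient=1_000, a=1.4, b=0.3):
    """Points of the Henon map after discarding a transient."""
    points = []
    for i in range(transient + n):
        x, y = 1 - a * x * x + y, b * x
        if i >= transient:
            points.append((x, y))
    return points


def histogram(points, bins=20, x_range=(-1.5, 1.5), y_range=(-0.5, 0.5)):
    """Fraction of points falling in each cell of a bins-by-bins grid."""
    counts = [[0] * bins for _ in range(bins)]
    (x_lo, x_hi), (y_lo, y_hi) = x_range, y_range
    for x, y in points:
        i = min(max(int((x - x_lo) / (x_hi - x_lo) * bins), 0), bins - 1)
        j = min(max(int((y - y_lo) / (y_hi - y_lo) * bins), 0), bins - 1)
        counts[i][j] += 1
    total = len(points)
    return [[c / total for c in row] for row in counts]


def total_variation(p, q):
    """Half the summed absolute difference between two histograms."""
    return 0.5 * sum(abs(a - b) for row_p, row_q in zip(p, q)
                     for a, b in zip(row_p, row_q))


orbit_1 = henon_orbit(0.0, 0.0, 200_000)
orbit_2 = henon_orbit(0.1, 0.1, 200_000)
print("first points:", orbit_1[0], orbit_2[0])
print("histogram distance:",
      total_variation(histogram(orbit_1), histogram(orbit_2)))
```

The total-variation distance printed at the end summarizes in one number how closely the two histograms agree; a value near zero means the higher-level form is the same even though the lower-level points are not.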

According to Layzer (Layzer, 1990) (and as indicated above), the world/universe at its fundamental smallest scale (the quantum level) consists of permanent and indistinguishable objects that occupy discrete (but, in principle, unpredictable) states, as opposed to the continuous,