
7/31/2019 LU Dec Sparse


A grid-based multilevel incomplete LU factorization preconditioning technique for general sparse matrices

Jun Zhang

Department of Computer Science, University of Kentucky, 773 Anderson Hall, Lexington, KY 40506-0046, USA

Abstract

We design a grid-based multilevel incomplete LU preconditioner (GILUM) for solving linear systems with general sparse matrices. This preconditioner combines a high-accuracy ILU factorization with an algebraic multilevel recursive reduction. The GILUM preconditioner is a complement to the domain-based multilevel block ILUT preconditioner. A major difference between these two preconditioners is the way in which the coarse level nodes are chosen. The approach of GILUM is analogous to that of the algebraic multigrid method. The GILUM approach avoids some controversial issues in the algebraic multigrid method, such as how to construct the interlevel transfer operators and how to compute the coarse level operator. Numerical experiments are conducted to compare GILUM with other ILU preconditioners. © 2001 Elsevier Science Inc. All rights reserved.

Keywords: Incomplete LU factorization; Multilevel ILU preconditioner; Algebraic multigrid method; Sparse matrices

Applied Mathematics and Computation 124 (2001) 95–115

www.elsevier.com/locate/amc

E-mail address: [email protected] (J. Zhang).

http://www.cs.uky.edu/~jzhang

0096-3003/01/$ - see front matter © 2001 Elsevier Science Inc. All rights reserved.

PII: S0096-3003(00)00081-3

1. Introduction

We propose a new preconditioning technique that is based on a multilevel recursive incomplete LU factorization of general sparse matrices. Linear systems with unstructured sparse matrices are often solved by Krylov subspace methods coupled with suitable preconditioners [34]. The development of robust preconditioners has received much attention in recent years because of their critical role in preconditioned iterative schemes.

Although originally proposed for structured matrices, the standard incomplete LU factorization (ILU(0)) has been used as a general purpose preconditioner for general sparse matrices for more than two decades [27]. For many realistic problems, however, this rather simple preconditioner is inefficient and may fail completely. More robust preconditioners, many of which are based on different extensions of ILU(0), have since been proposed. We refer to [34] for a partial account of the literature along this line.

The multi-elimination ILU preconditioner (ILUM), introduced in [33], is based on exploiting the idea of successive independent set orderings. It has a multilevel structure and offers a good degree of parallelism without sacrificing overall effectiveness. Similar preconditioners developed in [6,36] show nearly grid-independent convergence for certain types of problems. Block versions of ILUM have recently been designed using small dense blocks (BILUM) or large domains (BILUTM) as pivots instead of scalars [36,37,40]. For some hard-to-solve problems, BILUM and BILUTM may perform much better than ILUM. Various strategies have been proposed to invert or factor the blocks or domains efficiently. We remark that extracting parallelism from ILU factorizations was the initial motivation behind the development of these multilevel ILU preconditioners [33,36,37]. In a recent paper [45], BILUM was tested with several popular Krylov subspace accelerators for solving a few nonsymmetric matrices from applications in computational fluid dynamics. The test results show that the quality of the preconditioner determines the convergence rates of preconditioned iterative schemes.

Alternative multilevel approaches have been developed by other researchers. Examples include nested recursive two-level factorization and repeated red–black orderings [1], generalized cyclic reduction [24], and parallel point- and domain-oriented multilevel methods [26]. Some recently developed multilevel methods require only the adjacency graph of the coefficient matrices [33,36,37]. Other generalized multigrid techniques are the algebraic multigrid method [8,12,31] and certain types of multigrid methods employing matrix-dependent interlevel transfer operators [19]. Equally interesting are multilevel preconditioning techniques based on hierarchical basis [5], multigraph [3], approximate cyclic reduction [30], Schur complement [43], ILU decomposition [4], and other approaches associated with finite difference or finite element matrices [10,41].

One major difference between multilevel preconditioning techniques and the algebraic multigrid method is the choice of the coarse level nodes (the rows of the coarse level matrix). The coarse level nodes in multilevel preconditioning techniques [36] are the fine level nodes in the algebraic multigrid method [31], and vice versa. There are also differences in how multilevel preconditioning matrices and algebraic multigrid matrices are constructed. However, a series of recently proposed methods [3,7,30,43], which we refer to as algebraic multigrid preconditioning methods, have brought the two classes of methods close to each other.

In this paper we propose a fully algebraic multilevel ILU preconditioning technique. This grid-based multilevel ILU preconditioning technique (GILUM) takes the approach of the algebraic multigrid method in choosing coarse level nodes, in contrast to the approach of BILUM. However, GILUM is fully algebraic with respect to general sparse matrices. Such generality does not seem to have been achieved by other algebraic multigrid methods or algebraic multigrid preconditioning methods.

This paper is organized as follows. Section 2 gives an overview of and background on the algebraic multigrid method and multilevel preconditioning techniques. Section 3 illustrates a partial Gaussian elimination process for constructing the coarse level systems. Section 4 introduces a diagonal threshold strategy. Section 5 discusses the grid-based multilevel ILU preconditioner (GILUM). Section 6 contains numerical experiments, and Section 7 gives concluding remarks.

2. Multilevel preconditioners and multigrid methods

Multilevel preconditioning techniques and the algebraic multigrid method take advantage of the fact that different parts of the error spectrum can be treated independently on different levels. In their construction, multilevel preconditioners also exploit, explicitly or implicitly, the property that a set of unknowns that are not coupled to each other can be eliminated simultaneously in a Gaussian elimination type process. Such a set is usually called an independent set. The concept of independence can easily be generalized to a block version. Thus a block independent set is a set of groups (blocks) of unknowns such that there is no coupling between unknowns of any two different groups (blocks) [36]. Unknowns within the same group (block) may be coupled.

Various heuristic strategies may be used to find an independent set with different properties [33,36]. A maximal independent set is an independent set that cannot be augmented by other nodes and still remain independent. Independent sets are often constructed under constraints, for example to guarantee a certain diagonal dominance for the nodes of the independent set or of the vertex cover, which is defined as the complement of the independent set. Thus, in practice, the maximality of an independent set is rarely guaranteed, especially when dropping strategies or diagonal threshold strategies are applied [40].
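The definitions of independent, maximal independent and block independent sets can be checked mechanically. The following is a minimal illustrative sketch in Python. It assumes a symmetric sparsity pattern stored as a dict that maps each node to the set of its neighbours. The function names are hypothetical and are not part of GILUM or of the cited references.

```python
from typing import Dict, Iterable, List, Set

Graph = Dict[int, Set[int]]  # node -> neighbours (symmetric pattern)


def is_independent(graph: Graph, nodes: Iterable[int]) -> bool:
    """True if no two nodes of the set are coupled (self-loops are ignored)."""
    s = set(nodes)
    return all(not ((graph[v] - {v}) & s) for v in s)


def is_maximal_independent(graph: Graph, nodes: Iterable[int]) -> bool:
    """Independent, and every outside node is coupled to some node in the set,
    so no further node can be added while staying independent."""
    s = set(nodes)
    if not is_independent(graph, s):
        return False
    return all(graph[v] & s for v in graph if v not in s)


def is_block_independent(graph: Graph, blocks: List[Set[int]]) -> bool:
    """No coupling between unknowns of two different blocks; couplings
    inside a block, or to nodes outside every block, are allowed."""
    owner = {v: b for b, block in enumerate(blocks) for v in block}
    return all(owner.get(w, b) == b
               for b, block in enumerate(blocks)
               for v in block
               for w in graph[v])


# A 1D chain 0 - 1 - 2 - 3 - 4 (tridiagonal pattern).
chain: Graph = {0: {1}, 1: {0, 2}, 2: {1, 3}, 3: {2, 4}, 4: {3}}
print(is_maximal_independent(chain, {0, 2, 4}))       # True
print(is_maximal_independent(chain, {0}))             # False: node 2 could be added
print(is_block_independent(chain, [{0, 1}, {3, 4}]))  # True: no edge joins the blocks
```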

Algebraic and black box multigrid methods attempt to mimic the geometric multigrid method by choosing the coarse level nodes as those in the independent set [8,31]. These methods usually define a prolongation operator $I^{a}_{a+1}$ based on some heuristic arguments, here $0 \le a$

inverse technique is used to compute $D_a^{-1}$ by inverting each small block independently (in parallel). In [40], a regularized inverse technique based on singular value decomposition is used to invert the (potentially near-singular) blocks approximately. The domain-based BILUTM preconditioner uses an ILUT factorization procedure similar to the one used in this paper and avoids the sparsity problems associated with inverting large domains [37].

Although multilevel preconditioning techniques and the algebraic multigrid method originated from different sources, success has been reported in using multigrid methods as preconditioners for Krylov subspace methods [28,29,42]. Further, a series of papers recently published by several multigrid practitioners advocate algebraic multigrid preconditioning methods for discretized partial differential equations or sparse matrices. These methods include the multigraph algorithm of Bank and Smith [3], the multilevel ILU decomposition of Bank and Wagner [4], the algebraic multigrid method of Braess [7], the approximate cyclic reduction preconditioning method of Reusken [30], and the Schur complement multigrid of Wagner, Kinzelbach and Wittum [43]. These methods began to adopt the concepts of (incomplete) matrix factorization and preconditioning in algebraic multigrid type approaches. However, most of these methods are not fully algebraic and do not aim at solving general sparse matrices.

The grid-based multilevel ILU preconditioning technique (GILUM) of this paper is a fully algebraic multilevel method that targets general sparse matrices. The GILUM preconditioner can be considered a converging point of the algebraic multigrid method and multilevel preconditioning techniques. It adopts the coarse level choice of the algebraic multigrid method, employs the concept of preconditioning, and takes the approach of ILU factorization to construct the coarse level operator and the interlevel transfer operators.

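In fact, we can use the algebraic multigrid ordering, as in the right part of (2), to write another block LU factorization analogous to (3):

$$
\begin{pmatrix} C_a & E_a \\ F_a & D_a \end{pmatrix}
=
\begin{pmatrix} I_a & 0 \\ F_a C_a^{-1} & I_a \end{pmatrix}
\begin{pmatrix} C_a & E_a \\ 0 & A_{a+1} \end{pmatrix},
\qquad (4)
$$

where $A_{a+1}$ is the Schur complement with respect to $D_a$. Since $C_a^{-1}$ is not easy to compute exactly, we use an ILU factorization of $C_a$ instead.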
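Multiplying out the right-hand side of (4) makes the Schur complement explicit. The following worked step is added for illustration only. The (1,1), (1,2) and (2,1) blocks reproduce $C_a$, $E_a$ and $F_a C_a^{-1} C_a = F_a$, while the (2,2) block gives

$$
F_a C_a^{-1} E_a + A_{a+1} = D_a
\quad\Longrightarrow\quad
A_{a+1} = D_a - F_a C_a^{-1} E_a .
$$

Every entry of $A_{a+1}$ therefore involves the action of $C_a^{-1}$. This is why an incomplete factorization $C_a \approx L_a U_a$ is used in its place.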

The initial motivation for developing a grid-based multilevel preconditioner was to exploit a favorable property of the algebraic multigrid independent set for partial differential equations discretized on regular grids. For example, suppose the standard central difference scheme is used to discretize the Poisson equation with Dirichlet boundary conditions on a square domain. The greedy algorithm [34] will then find an independent set and a vertex cover of roughly equal size, and ILUM and GILUM will yield similar preconditioners. However, if a fourth-order 9-point compact scheme is employed, the greedy algorithm will find an independent set that is only one-third as large as its vertex cover; see Fig. 1. This causes a slow reduction of the system size in ILUM, which chooses the independent set as the fine level system. The algebraic multigrid method, on the other hand, uses the vertex cover as the fine level system, so a faster reduction of the system size can be expected. Of course, the difficulty of slow reduction of the system size in ILUM can be alleviated in BILUM and BILUTM, which use block independent sets.

For unstructured general sparse matrices with many nonzero elements in each row, the standard greedy algorithm will yield a very small independent set with a very large vertex cover. It is not uncommon for the vertex cover to be more than 10 times larger than the independent set. Such a partitioning of the nodes is suitable for neither ILUM nor the algebraic multigrid method. Hence, a strategy must be employed to restrict (balance) the sizes of both the independent set and the vertex cover (see Section 4).

Furthermore, there are controversies in the algebraic multigrid method as to how to define the interlevel transfer operators and how to compute the coarse level operator [11,12]. For general sparse matrices, the concept of conventional relaxation may not be reliable, as there is no guarantee that a given relaxation method will converge or even have a smoothing effect. Multilevel preconditioning techniques such as ILUM and BILUTM avoid these problems of the algebraic multigrid method because they use neither conventional relaxation methods nor heuristic formulas to define interlevel transfer operators.

The preceding discussion shows that neither multilevel preconditioning techniques nor the algebraic multigrid method is ideal for all types of problems. It may be beneficial to combine the advantages of both approaches while avoiding their disadvantages. GILUM is designed as a hybrid of multilevel preconditioning techniques and the algebraic multigrid method.

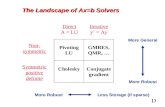

Fig. 1. Results of greedy algorithm search on 5-point (left) and 9-point (right) stencils. The empty circles are independent set nodes and the solid circles are vertex cover nodes.
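The relative set sizes in Fig. 1 can be reproduced with a plain greedy search in natural (row-by-row) ordering. This search omits the diagonal threshold and coupling constraints introduced in Section 4. The Python sketch below is illustrative; the 8 × 8 grid and the function names are hypothetical choices.

```python
from typing import Dict, List, Set, Tuple


def grid_graph(k: int, nine_point: bool) -> Dict[int, Set[int]]:
    """Adjacency of a k-by-k grid with a 5-point or 9-point stencil,
    nodes numbered row by row (natural ordering)."""
    offsets = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    if nine_point:
        offsets += [(-1, -1), (-1, 1), (1, -1), (1, 1)]
    return {
        r * k + c: {(r + dr) * k + (c + dc)
                    for dr, dc in offsets
                    if 0 <= r + dr < k and 0 <= c + dc < k}
        for r in range(k) for c in range(k)
    }


def greedy_independent_set(graph: Dict[int, Set[int]]) -> Tuple[List[int], List[int]]:
    """Visit nodes in natural order; an unmarked node joins the independent
    set and its unmarked neighbours join the vertex cover."""
    marked: Set[int] = set()
    indep: List[int] = []
    cover: List[int] = []
    for i in sorted(graph):
        if i in marked:
            continue
        indep.append(i)
        marked.add(i)
        for j in sorted(graph[i]):
            if j not in marked:
                cover.append(j)
                marked.add(j)
    return indep, cover


for name, nine in (("5-point", False), ("9-point", True)):
    s, vc = greedy_independent_set(grid_graph(8, nine))
    print(name, len(s), len(vc))
# 5-point 32 32
# 9-point 16 48
```

With the 9-point stencil the independent set is one-third the size of the vertex cover (16 versus 48), which matches the ratio stated in the text.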


3. Partial ILUT factorization

The partial ILUT factorization for a reordered sparse matrix is similar to that described in [37], except that the matrix is ordered differently. For clarity, we highlight a few key parts.

ILUT is a high-accuracy preconditioner, and its implementation is based on the IKJ variant of Gaussian elimination [32,34,37]. ILUT attempts to limit the number of fill-in elements by applying a dual dropping strategy during the construction. The accuracy of ILUT(s, p) is controlled by two dropping parameters, s and p. Elements whose magnitude is small relative to s are dropped as soon as they are computed. After an incomplete row is computed, a sorting operation is performed so that only the p largest elements in absolute value are kept. After the dual dropping strategy, at most p elements are kept in each row of the L and U factors [34].
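The dual dropping rule can be sketched on a single dense row. This Python sketch is illustrative only. It takes the relative threshold from Line 5 of Algorithm 3.1 (s times the average absolute nonzero value of the row) and, as in standard ILUT, keeps the p largest entries separately in the L and U parts of the row. The function name `dual_drop` and the dense-row representation are assumptions.

```python
import numpy as np


def dual_drop(row: np.ndarray, diag: int, s: float, p: int) -> np.ndarray:
    """ILUT-style dual dropping on one computed row (dense sketch).

    Rule 1: zero entries with |w_j| < s * nzavg(row), the average absolute
            value of the row's nonzeros (cf. Line 5 of Algorithm 3.1).
    Rule 2: keep only the p largest remaining entries of the L part
            (columns < diag) and of the U part (columns > diag).
    The diagonal entry is always kept.
    """
    w = np.asarray(row, dtype=float).copy()
    nz = np.abs(w[w != 0.0])
    if nz.size == 0:
        return w
    pivot = w[diag]
    w[np.abs(w) < s * nz.mean()] = 0.0                # rule 1
    for lo, hi in ((0, diag), (diag + 1, w.size)):    # rule 2, per part
        part = w[lo:hi]                               # a view: edits change w
        idx = np.flatnonzero(part)
        if idx.size > p:
            smallest_first = idx[np.argsort(np.abs(part[idx]))]
            part[smallest_first[: idx.size - p]] = 0.0
    w[diag] = pivot
    return w


row = np.array([0.001, -3.0, 0.5, 4.0, 0.02, 2.0, -1.0])
print(dual_drop(row, diag=3, s=0.1, p=1))
# keeps only -3.0 (L part), the diagonal 4.0 and 2.0 (U part)
```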

Assume that the first m equations are associated with the vertex cover, as in (4) without the subscript a. Suppose we perform an LU factorization (Gaussian elimination) on the upper part (the first m rows) of the matrix, i.e., on the submatrix $(C \;\; E)$. We then have

$$
\begin{pmatrix} C & E \end{pmatrix} = L \begin{pmatrix} U & L^{-1}E \end{pmatrix}.
$$

We then continue the Gaussian elimination on the lower part, but the elimination is performed only with respect to the submatrix F. In other words, we only eliminate those elements $a_{i,k}$ for which $m < i \le n$ and $1 \le k \le m$. As in the usual Gaussian elimination, the corresponding linear combinations are also applied to the D submatrix. Note that, when doing these operations on the lower part, the upper part of the matrix is only accessed, not modified [37]. The processed rows of the lower part are never accessed again. Note also that the nodes in the lower part are processed independently, since they only need the nodes in the upper part to eliminate nonzero elements in the F submatrix; see [37]. This partial (restricted) Gaussian elimination is equivalent to a block LU factorization of the form

$$
\begin{pmatrix} C & E \\ F & D \end{pmatrix}
=
\begin{pmatrix} L & 0 \\ F U^{-1} & I \end{pmatrix}
\begin{pmatrix} U & L^{-1}E \\ 0 & A_1 \end{pmatrix}
\equiv \mathcal{L}\,\mathcal{U}.
$$

The $a_{i,k}$'s (of the lower part) for $k \le m$ are the elements of $FU^{-1}$, and the other elements are those of $A_1$.

It has been proved in [37] that the matrix $A_1$ computed by the partial Gaussian elimination is the Schur complement of A with respect to D. Note that the submatrices $FU^{-1}$ and $L^{-1}E$ are formed automatically, and the Schur complement is formed implicitly, during the partial Gaussian elimination with respect to the lower part of A.
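The equivalence between the restricted elimination and the block LU factorization can be checked numerically. The following Python sketch uses a small dense matrix with no dropping and no pivoting. It assumes that C admits an LU factorization, which strict diagonal dominance guarantees for the test matrix. The function name and the test matrix are illustrative assumptions. The sweep should recover $FU^{-1}$, $L^{-1}E$ and the Schur complement $A_1 = D - FC^{-1}E$ in place.

```python
import numpy as np


def partial_elimination(A: np.ndarray, m: int) -> np.ndarray:
    """Dense sketch of the restricted Gaussian elimination of Section 3.

    Rows 0..m-1 (the block (C E)) are factored in IKJ order. Rows m..n-1
    eliminate only their first m columns (block F), reading the finished
    upper rows but never modifying them, so each lower row is independent
    of the other lower rows. No pivoting and no dropping.

    On return W holds: strict lower / upper part of W[:m, :m] -> L / U
    (unit diagonal of L implied), W[:m, m:] -> L^{-1}E,
    W[m:, :m] -> F U^{-1}, W[m:, m:] -> A_1.
    """
    W = np.array(A, dtype=float)
    n = W.shape[0]
    for i in range(1, n):
        for k in range(min(i, m)):          # columns of the C / F block only
            if W[i, k] != 0.0:
                W[i, k] /= W[k, k]          # multiplier l_{ik}
                W[i, k + 1:] -= W[i, k] * W[k, k + 1:]
    return W


rng = np.random.default_rng(0)
n, m = 8, 5
A = rng.uniform(-1.0, 1.0, (n, n)) + 2 * n * np.eye(n)   # strictly diagonally dominant
C, E, F, D = A[:m, :m], A[:m, m:], A[m:, :m], A[m:, m:]
W = partial_elimination(A, m)
print(np.allclose(W[m:, m:], D - F @ np.linalg.solve(C, E)))  # True
```

Adding the ILUT dropping rules to the inner loop turns the same sweep into an approximate factorization with an approximate Schur complement.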


Dropping strategies similar to those used in ILUT can be applied to the partial Gaussian elimination, resulting in an ILU factorization with an approximate Schur complement $A_1$. We formally describe the partial ILUT factorization in Algorithm 3.1, where w is a work array of length n.

Algorithm 3.1 (Partial ILUT(s, p) factorization).

1. For $i = 2, \ldots, n$, Do
2. $w := a_{i,*}$
3. For $k = 1, \ldots, \min(i-1, m)$ and when $w_k \ne 0$, Do
4. $w_k := w_k / a_{k,k}$
5. Set $w_k := 0$ if $|w_k| < s \cdot \mathrm{nzavg}(a_{i,*})$
6. If $w_k \ne 0$, then
7. $w := w - w_k \cdot u_{k,*}$
8. End If
9. End Do
10. Apply a dropping strategy to row $w$
11. Set $l_{i,j} := w_j$ for $j = 1, \ldots, \min(i-1, m)$ whenever $w_j \ne 0$
12. Set $u_{i,j} := w_j$ for $j = \min(i, m+1), \ldots, n$ whenever $w_j \ne 0$
13. Set $w := 0$
14. End Do

In Line 5 the function $\mathrm{nzavg}(a_{i,*})$ returns the average absolute value of the nonzero elements of a given sparse row. We mention that in Algorithm 3.1 the diagonals of the approximate Schur complement ($A_1$) are not dropped, regardless of their values. It may be profitable to use different dropping parameter sets $(s, p)$ for the upper and lower parts of the ILU factorization. However, the issue of adjusting parameters within the GILUM construction process is not discussed in this paper.

4. Independent set and diagonal thresholding

Algorithm 3.1 will clearly fail when a zero pivot is encountered in the upper part ILU factorization. Even a small pivot may cause stability problems by producing large elements in the L and U factors [23]. In Gaussian elimination, such a problem may be avoided by employing a partial or full pivoting strategy. In multilevel preconditioning techniques, a diagonal threshold strategy may be used instead to force the nodes (rows) with small diagonal elements into the coarse level system [38,39].

Thus, all rows in the vertex cover should have large absolute diagonal values. Moreover, there is a concept of strong coupling (connection) among a group of nodes: a node j is said to be strongly connected to a node i if $|a_{i,j}|$ is large. In multilevel block preconditioning techniques (BILUM and BILUTM), the nodes that are strongly connected to each other are solved together (within one block of the block independent set) in order to preserve the physical couplings among them [36]. However, the algebraic multigrid method requires that each node on the fine level be strongly connected to some nodes on the coarse level [31]. Since GILUM takes the approach of the algebraic multigrid method, we require that a node in the vertex cover be strongly connected to at least one node in the independent set. Hence, a node j in the vertex cover (fine level) must satisfy two conditions: $|a_{j,j}|$ is greater than a certain threshold tolerance, and $|a_{i,j}|$ is large for at least one node i in the independent set.

It is important to design an efficient implementation of the multilevel ILU preconditioner with a diagonal threshold strategy. A preconditioner with a diagonal threshold strategy certainly incurs an additional cost over one that does not use the matrix values in constructing the independent sets. With a carefully designed implementation, the additional cost of the diagonal threshold strategy can be kept to a minimum. A diagonal threshold strategy may be implemented with respect to a certain norm of the rows of the matrix; this is called a diagonal threshold strategy with a relative tolerance. It is not efficient to compute the norm of a given row of the matrix during an independent set search. Thus, before the search for an independent set begins, we use Algorithm 4.1 to compute a measure of each row of the matrix based on the diagonal value and the sum of the absolute nonzero values of the row.

Algorithm 4.1 (Computing a measure for each row of the matrix).

1. For $i = 1, \ldots, n$, Do
2. $r_i = \sum_{j \in Nz(i)} |a_{i,j}|$
3. If $r_i \ne 0$, then
4. $t_i = |a_{i,i}| / r_i$
5. End If
6. End Do
7. $T = \max_i \{t_i\}$
8. For $i = 1, \ldots, n$, Do
9. $t_i = t_i / T$
10. End Do

In Line 2 of Algorithm 4.1, $Nz(i)$ is the set of the indices j for which $a_{i,j} \ne 0$, i.e., the nonzero pattern of row i. A row with a small diagonal value will have a small measure $t_i$, and a row with a zero diagonal value will have a measure of exactly zero. The real array $\{t_i\}$ of length n is used in the independent set algorithm. Note that $0 \le t_i \le 1$. The diagonal threshold strategy is enforced by forcing a node i into the independent set if $t_i < \epsilon$, where $\epsilon$ is the diagonal threshold tolerance. Such an implementation uses the matrix values only once, to compute the measure $\{t_i\}$. The graph of the matrix is then used, along with the array $\{t_i\}$, to build an independent set.
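Algorithm 4.1 maps directly onto compressed sparse row (CSR) storage. The following Python sketch is illustrative. The function name, the plain `indptr`/`indices`/`data` arrays, and the handling of empty rows and of an all-zero measure are assumptions; the text does not specify these details.

```python
import numpy as np


def row_measures(indptr, indices, data):
    """Algorithm 4.1 on a CSR matrix: t_i = |a_ii| / sum_j |a_ij|, then t /= max t.

    Assumptions: rows with r_i = 0 keep t_i = 0, and if every t_i is zero
    no scaling is done (the listing leaves both cases unspecified).
    """
    n = len(indptr) - 1
    t = np.zeros(n)
    for i in range(n):
        cols = indices[indptr[i]:indptr[i + 1]]
        vals = data[indptr[i]:indptr[i + 1]]
        r = np.abs(vals).sum()                          # Line 2
        if r != 0.0:                                    # Lines 3-5
            t[i] = np.abs(vals[cols == i]).sum() / r    # 0 if a_ii is not stored
    T = t.max()                                         # Line 7
    if T > 0.0:
        t /= T                                          # Lines 8-10
    return t


# CSR arrays of [[4, 1, 0], [1, 0, 2], [0, 1, 1]] (zero diagonal in row 1)
indptr = np.array([0, 2, 4, 6])
indices = np.array([0, 1, 0, 2, 1, 2])
data = np.array([4.0, 1.0, 1.0, 2.0, 1.0, 1.0])
print(row_measures(indptr, indices, data))  # values 1.0, 0.0, 0.625
```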

Algorithm 4.2 is an implementation of the greedy algorithm for constructing an independent set with a diagonal threshold strategy and a strong coupling constraint. In it, $S$ and $S_{vc}$ denote the independent set and the vertex cover, respectively.

Algorithm 4.2 (Greedy algorithm for independent set with constraints).

1. Set $S = S_{vc} = \emptyset$ and select a threshold tolerance $\epsilon > 0$
2. For $i = 1, \ldots, n$, Do
3. If the node i is not marked, then
4. $S = S \cup \{i\}$ and mark the node i
5. For each neighbor j of the node i, Do
6. If the node j is not marked, then
7. If $t_j \le \epsilon$, then
8. $S = S \cup \{j\}$ and mark the node j
9. Else If $t_j > \epsilon$ and $|a_{i,j}| > 0.001\, t_i$, then
10. $S_{vc} = S_{vc} \cup \{j\}$ and mark the node j
11. End If
12. End If
13. End Do
14. End If
15. End Do
16. Put all unmarked nodes in S

The number 0.001 in Line 9 is used to determine strong connection between the node j and the node i and was chosen based on a few numerical experiments. It could also be made an input parameter, but we kept it fixed for the numerical results reported in this paper. Suppose the node i is put in the D submatrix because of its small diagonal value and has no link to any node in the C submatrix. Then the same row in the F submatrix contains only zero elements. In our partial Gaussian elimination, elimination is applied only to nonzero elements in the F submatrix, so the ith row is modified in neither the F nor the D submatrix. Hence, the row of a node with a small diagonal value passes unchanged into the Schur complement. However, since we use a relative threshold tolerance to compute the measure of the rows, a node with a small diagonal value may have different measures on different levels. It may therefore be included in the vertex cover on a coarse level, even if it was excluded from the vertex cover on finer levels.

In Algorithm 4.2 the parameter $\epsilon$ plays two roles. The first is to control the diagonal values of the nodes in the vertex cover so that a stable ILU factorization may be obtained. The second, which is implicit in Line 9, is to balance the sizes of the independent set and the vertex cover and to make sure that neither of them is too small or too large. Based on our experience, we prefer a vertex cover that is slightly larger than the independent set. With these constraints, the independent set and the vertex cover found by Algorithm 4.2 have only a symbolic meaning: because Line 8 may place a neighbor of i into S, the set S need not be independent.
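Algorithm 4.2 can be sketched as follows. This is an illustrative Python version on a small dense matrix. The function name, the dense storage, and the test matrix are assumptions, and the measures $t_i$ are computed inline as in Algorithm 4.1.

```python
import numpy as np


def constrained_greedy(A: np.ndarray, t: np.ndarray, eps: float, strong: float = 1e-3):
    """Dense sketch of Algorithm 4.2.

    S collects the (symbolic) independent set, i.e. the coarse-level nodes;
    S_vc collects the vertex cover, i.e. the fine-level nodes. `strong`
    stands for the fixed constant 0.001 of Line 9.
    """
    n = A.shape[0]
    marked = np.zeros(n, dtype=bool)
    S, S_vc = [], []
    for i in range(n):                                # Line 2
        if marked[i]:
            continue
        S.append(i)                                   # Line 4
        marked[i] = True
        for j in map(int, np.flatnonzero(A[i])):      # neighbours of i
            if marked[j]:
                continue
            if t[j] <= eps:                           # Lines 7-8: small diagonal
                S.append(j)
                marked[j] = True
            elif abs(A[i, j]) > strong * t[i]:        # Lines 9-10: strong coupling
                S_vc.append(j)
                marked[j] = True
    # Line 16: every node is visited by the outer loop, so this adds nothing
    # here; it is kept to mirror the listing.
    S.extend(int(k) for k in np.flatnonzero(~marked))
    return S, S_vc


A = np.array([[4.0, -1.0, 0.0, 0.0, 0.0],
              [-1.0, 0.01, -1.0, 0.0, 0.0],    # row 1 has a tiny diagonal
              [0.0, -1.0, 4.0, -1.0, 0.0],
              [0.0, 0.0, -1.0, 4.0, -1.0],
              [0.0, 0.0, 0.0, -1.0, 4.0]])
t = np.abs(np.diag(A)) / np.abs(A).sum(axis=1)    # Algorithm 4.1, Lines 1-6
t /= t.max()                                      # Algorithm 4.1, Lines 7-10
print(constrained_greedy(A, t, eps=0.3))          # ([0, 1, 2, 4], [3])
```

Line 8 forces node 1, which has a tiny diagonal, into S even though it is coupled to node 0. This shows why the resulting sets have only a symbolic meaning.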

4.1. Minimum degree ordering

After the independent set is found, we may reorder the nodes in the vertex cover using a minimum degree algorithm [25]. (Note that the minimum degree algorithm is applied before the ILU factorization, not during it.) An explicit permutation is then performed. This variant of GILUM is denoted by GILUMm. Such a reordering strategy can usually reduce the number of fill-in elements during the ILU factorization. We compare GILUM and GILUMm through numerical experiments.

There are other graph reordering algorithms [18,21,22] that may be used to reorder the nodes in the vertex cover. However, the overall effect of these reordering algorithms on the convergence of preconditioned iterative methods is not clear. For this reason, we did not experiment with other reordering algorithms.

We remark that there is no need to reorder the nodes in the independent set, even if they are not truly independent. This is because they are processed independently, as discussed in Section 3.
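For illustration, a textbook minimum degree ordering can be sketched in a few lines of Python. This is a naive version and not necessarily the implementation of [25]; production codes use far more efficient data structures. The function names are hypothetical. In GILUMm the ordering would be applied to the subgraph induced by the vertex cover nodes (the $C_a$ block), which `induced_subgraph` extracts.

```python
from typing import Dict, List, Set


def minimum_degree_order(graph: Dict[int, Set[int]]) -> List[int]:
    """Naive minimum degree ordering of a symmetric graph.

    Repeatedly eliminates a node of smallest current degree (ties broken by
    the smaller label) and joins its remaining neighbours pairwise, which
    models the fill-in that eliminating that node would create.
    """
    g = {v: set(nbrs) - {v} for v, nbrs in graph.items()}
    order: List[int] = []
    while g:
        v = min(g, key=lambda u: (len(g[u]), u))
        nbrs = g.pop(v)
        for u in nbrs:
            g[u].discard(v)
            g[u] |= nbrs - {u}      # fill edges among v's neighbours
        order.append(v)
    return order


def induced_subgraph(graph: Dict[int, Set[int]], nodes: Set[int]) -> Dict[int, Set[int]]:
    """Restrict the graph to the given nodes (e.g. the vertex cover, block C)."""
    return {v: graph[v] & nodes for v in nodes}


# Star: hub 0 coupled to 1, 2, 3. Eliminating the hub first would couple
# 1, 2, 3 pairwise (three fill edges); minimum degree avoids that.
star = {0: {1, 2, 3}, 1: {0}, 2: {0}, 3: {0}}
print(minimum_degree_order(star))   # [1, 2, 0, 3]
```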

5. Multilevel ILU preconditioning

The grid-based multilevel ILU preconditioner (GILUM) is based on the partial ILUT factorization of Algorithm 3.1. On each level a, a partial ILUT factorization is performed and an approximate coarse level system $A_{a+1}$ is formed. Formally, we have

$$
\begin{pmatrix} C_a & E_a \\ F_a & D_a \end{pmatrix}
\approx
\begin{pmatrix} L_a & 0 \\ F_a U_a^{-1} & I_a \end{pmatrix}
\begin{pmatrix} U_a & L_a^{-1} E_a \\ 0 & A_{a+1} \end{pmatrix}
= \mathcal{L}_a \mathcal{U}_a. \qquad (5)
$$

The whole process of finding an independent set, permuting the matrix, and performing the partial ILUT factorization is repeated recursively on the matrix $A_{a+1}$. The recursion is stopped when the coarsest level system $A_L$ is small. A standard ILUT factorization $L_L U_L$ is then performed on $A_L$. However, we do not store the coarse level systems on any level, including the last one. Instead, we store two sparse matrices on each level,

$$
\begin{pmatrix} L_a & 0 \\ F_a U_a^{-1} & I_a \end{pmatrix}
\quad\text{and}\quad
\begin{pmatrix} U_a & L_a^{-1} E_a \\ 0 & 0 \end{pmatrix},
\qquad 0 \le a < L,
$$

along with the factors $L_L$ and $U_L$. All such matrices are stored one after another, level by level, in one long vector. The preconditioning matrix has a multilevel structure of the form

$$
\begin{pmatrix}
L_0 U_0 & L_0^{-1} E_0 \\
F_0 U_0^{-1} &
\begin{pmatrix}
L_1 U_1 & L_1^{-1} E_1 \\
F_1 U_1^{-1} &
\begin{pmatrix}
\ddots & & \\
& L_{L-1} U_{L-1} & L_{L-1}^{-1} E_{L-1} \\
& F_{L-1} U_{L-1}^{-1} & L_L U_L
\end{pmatrix}
\end{pmatrix}
\end{pmatrix}.
$$

The preconditioning process consists of a level-by-level forward elimination, an approximate solution on the coarsest level, and a level-by-level backward substitution. Vector permutations and reverse permutations with respect to the independent set orderings are performed on each level. Structurally, the preconditioned iteration resembles a multigrid V-cycle algorithm [37]. A Krylov subspace iteration is performed on the finest level, acting as a smoother. The residual is then transferred level by level to the coarsest level, where one sweep of ILUT is used to yield an approximate solution. In the current situation, the coarsest level ILUT is actually a direct solver with limited accuracy, comparable to the accuracy of the whole preconditioning process.
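The level-by-level forward elimination, coarsest solve and backward substitution can be sketched with dense matrices. This Python sketch is illustrative only and rests on four simplifications:

- It uses exact factors, with no dropping.
- It omits the per-level permutations by assuming each level is already ordered with the vertex cover first.
- It uses a hypothetical split (the first half of the nodes) in place of Algorithm 4.2.
- It replaces the coarsest ILUT by a dense solve.

With exact factors the sweep reproduces $A^{-1}b$. With ILUT dropping, the same sweep applies the preconditioner.

```python
import numpy as np
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Level:
    m: int              # size of the vertex cover (fine-level block C_a)
    L: np.ndarray       # unit lower factor of C_a
    U: np.ndarray       # upper factor of C_a
    FUinv: np.ndarray   # F_a U_a^{-1}
    LinvE: np.ndarray   # L_a^{-1} E_a


def lu_nopivot(C: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Doolittle LU without pivoting (assumes nonzero pivots)."""
    m = C.shape[0]
    L, U = np.eye(m), np.array(C, dtype=float)
    for k in range(m - 1):
        L[k + 1:, k] = U[k + 1:, k] / U[k, k]
        U[k + 1:, k:] -= np.outer(L[k + 1:, k], U[k, k:])
    return L, np.triu(U)


def build_levels(A: np.ndarray, n_levels: int) -> Tuple[List[Level], np.ndarray]:
    """Exact multilevel block factorization (no dropping, no permutation).
    Hypothetical split: the first half of each level's nodes is the vertex cover."""
    levels = []
    for _ in range(n_levels):
        m = A.shape[0] // 2
        C, E, F, D = A[:m, :m], A[:m, m:], A[m:, :m], A[m:, m:]
        L, U = lu_nopivot(C)
        FUinv = np.linalg.solve(U.T, F.T).T
        LinvE = np.linalg.solve(L, E)
        levels.append(Level(m, L, U, FUinv, LinvE))
        A = D - FUinv @ LinvE                # coarse level system A_{a+1}
    return levels, A                         # A is now the coarsest A_L


def apply_multilevel(levels: List[Level], A_coarse: np.ndarray, b: np.ndarray) -> np.ndarray:
    ys, r = [], np.asarray(b, dtype=float)
    for lev in levels:                       # forward elimination, fine -> coarse
        y1 = np.linalg.solve(lev.L, r[:lev.m])
        ys.append(y1)
        r = r[lev.m:] - lev.FUinv @ y1
    x = np.linalg.solve(A_coarse, r)         # coarsest level (stands in for L_L U_L)
    for lev, y1 in zip(reversed(levels), reversed(ys)):   # backward substitution
        x = np.concatenate([np.linalg.solve(lev.U, y1 - lev.LinvE @ x), x])
    return x


rng = np.random.default_rng(2)
n = 16
A = rng.uniform(-1.0, 1.0, (n, n)) + 2 * n * np.eye(n)   # strictly diagonally dominant
b = rng.standard_normal(n)
levels, A_L = build_levels(A, n_levels=2)
print(np.allclose(apply_multilevel(levels, A_L, b), np.linalg.solve(A, b)))  # True
```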

Let us rewrite Eq. (5) as

$$
\mathcal{L}_a \mathcal{U}_a =
\begin{pmatrix} I_a & 0 \\ F_a U_a^{-1} L_a^{-1} & I_a \end{pmatrix}
\begin{pmatrix} L_a U_a & 0 \\ 0 & A_{a+1} \end{pmatrix}
\begin{pmatrix} I_a & U_a^{-1} L_a^{-1} E_a \\ 0 & I_a \end{pmatrix}
\qquad (6)
$$

and examine a few interesting properties. The central factor of (6) is clearly an operator acting on the full vector on level a. $L_aU_a$ may also be viewed as an ILU smoother on the fine grid nodes of level a. In a two-level analysis, we may define

$$
I_a^{a+1} = \begin{pmatrix} -F_a U_a^{-1} L_a^{-1} & I_a \end{pmatrix}
\quad\text{and}\quad
I_{a+1}^{a} = \begin{pmatrix} -U_a^{-1} L_a^{-1} E_a \\ I_a \end{pmatrix}
$$

as the restriction and interpolation operators, respectively. The following results, which link GILUM with the algebraic multigrid method, can then be verified directly.

Proposition 5.1. Suppose factorization (6) exists and is exact. Then

1. the coarse level system $A_{a+1}$ satisfies the Galerkin condition (1), and
2. if, in addition, $A_a$ is symmetric, then $I_a^{a+1} = (I_{a+1}^{a})^T$.
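Both statements of Proposition 5.1 can be checked numerically for an exact factorization ($C_a = L_aU_a$, so $U_a^{-1}L_a^{-1} = C_a^{-1}$). The following Python sketch builds the restriction and interpolation operators (note the minus signs in both) for a random symmetric, strictly diagonally dominant test matrix. The matrix and block sizes are arbitrary illustrative choices.

```python
import numpy as np

rng = np.random.default_rng(1)
n, m = 10, 6                                    # level size and vertex cover size
A = rng.uniform(-1.0, 1.0, (n, n))
A = A + A.T                                     # symmetric test matrix
A += np.diag(2 * n + np.abs(A).sum(axis=1))     # strictly diagonally dominant
C, E, F, D = A[:m, :m], A[:m, m:], A[m:, :m], A[m:, m:]

Cinv_E = np.linalg.solve(C, E)                  # U^{-1} L^{-1} E when C = L U exactly
F_Cinv = np.linalg.solve(C.T, F.T).T            # F U^{-1} L^{-1}
R = np.hstack([-F_Cinv, np.eye(n - m)])         # restriction   I_a^{a+1}
P = np.vstack([-Cinv_E, np.eye(n - m)])         # interpolation I_{a+1}^a
A_coarse = D - F @ Cinv_E                       # Schur complement A_{a+1}

print(np.allclose(R @ A @ P, A_coarse))         # Galerkin condition: True
print(np.allclose(R, P.T))                      # symmetric case: True
```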

One advantage of ILUT-type factorizations is that the memory cost of the resulting preconditioner can be predicted in advance. The sparsity of GILUM depends primarily on the parameter p, which controls the amount of fill-in allowed, and on the sizes of the vertex covers. The following proposition is analogous to the one for BILUTM in [37], and the proof is exactly the same.


Proposition 5.2. Let $m_a$ be the size of the vertex cover on level a. The number of nonzero elements of GILUM with L levels of reduction is bounded by

$$
p\Bigl(2n + \sum_{a=1}^{L} a\, m_a\Bigr).
$$

In this bound, the term $2pn$ is the bound for the number of nonzero elements of standard ILUT, and the term $p\sum_{a=1}^{L} a\, m_a$ represents the extra nonzeros of the multilevel implementation. Since $m_0$ does not appear in the second term and the factor a grows with the level, it is advantageous to have large vertex covers.
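The bound of Proposition 5.2 is easy to evaluate for given vertex cover sizes. The following Python sketch does the arithmetic. The matrix size, the value of p, and the vertex cover sizes are hypothetical numbers, chosen only to show how a large $m_0$ keeps the extra term small.

```python
from typing import Sequence


def gilum_nnz_bound(n: int, p: int, m: Sequence[int]) -> int:
    """Proposition 5.2: nnz(GILUM) <= p * (2n + sum_{a=1}^{L} a * m_a).

    m[a] is the vertex cover size on level a (a = 0, ..., L); m[0] does not
    enter the bound, and deeper levels are weighted by their level number a.
    """
    return p * (2 * n + sum(a * m_a for a, m_a in enumerate(m) if a >= 1))


n, p = 10_000, 10
print(2 * p * n)                                   # 200000: standard ILUT bound
print(gilum_nnz_bound(n, p, [5000, 2500, 1250]))   # 250000
print(gilum_nnz_bound(n, p, [8000, 1500, 300]))    # 221000: larger m_0 leaves less for coarse levels
```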

6. Numerical experiments

Implementations of multilevel preconditioning techniques have been described in detail in [33,36,37]. We also added the diagonal threshold strategy described in Section 4, and a local reordering of the blocks by the reverse Cuthill–McKee algorithm, to the BILUTM preconditioner [37,39]. Unless otherwise indicated, we used the following default parameters for our preconditioned iterative solver: GMRES(100) without restart was used as the accelerator; the maximum number of levels allowed was 10, i.e., L = 10; and the diagonal threshold parameter was $\epsilon = 0.3$. For BILUTM, the block size was chosen to equal p.

A set of unstructured sparse matrices from realistic applications was tested. Most of these matrices have been used in other tests [36,37], and none of them is easy to solve with standard ILU preconditioning techniques. The right-hand side was generated by assuming that the solution was a vector of all ones, and the initial guess was a vector of random numbers. The computations were terminated when the 2-norm of the residual was reduced by a factor of $10^7$. The numerical experiments were conducted on an SGI Power Challenge workstation. The codes were written in Fortran 77 and were run in 64-bit arithmetic.
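The test setup above can be sketched as follows: a right-hand side from an all-ones solution, a random initial guess, unrestarted GMRES capped at 100 iterations, and a stop at a residual reduction of $10^7$. This is an illustrative dense Python version, not the Fortran 77 code used for the experiments. The function name, the right-preconditioning choice, the test matrix, and the Jacobi (diagonal) preconditioner standing in for an ILU-type preconditioner are all assumptions.

```python
import numpy as np


def gmres_norestart(A, b, x0, apply_prec, max_iter=100, reduction=1e-7):
    """Right-preconditioned GMRES without restart (dense sketch).

    Stops when ||b - A x||_2 <= reduction * ||b - A x0||_2 or after max_iter
    iterations. Returns (x, iterations, converged).
    """
    r0 = b - A @ x0
    beta = np.linalg.norm(r0)
    if beta == 0.0:
        return x0, 0, True
    n = b.shape[0]
    V = np.zeros((n, max_iter + 1))         # Arnoldi basis
    Z = np.zeros((n, max_iter))             # preconditioned directions M^{-1} v_k
    H = np.zeros((max_iter + 1, max_iter))  # Hessenberg matrix
    V[:, 0] = r0 / beta
    y = np.zeros(0)
    for k in range(max_iter):
        Z[:, k] = apply_prec(V[:, k])
        w = A @ Z[:, k]
        for j in range(k + 1):              # modified Gram-Schmidt
            H[j, k] = V[:, j] @ w
            w = w - H[j, k] * V[:, j]
        H[k + 1, k] = np.linalg.norm(w)
        g = np.zeros(k + 2)
        g[0] = beta
        y = np.linalg.lstsq(H[:k + 2, :k + 1], g, rcond=None)[0]
        res = np.linalg.norm(g - H[:k + 2, :k + 1] @ y)
        if res <= reduction * beta or H[k + 1, k] == 0.0:
            return x0 + Z[:, :k + 1] @ y, k + 1, bool(res <= reduction * beta)
        V[:, k + 1] = w / H[k + 1, k]
    return x0 + Z @ y, max_iter, False


rng = np.random.default_rng(0)
n = 200
A = np.diag(np.linspace(1.0, 10.0, n)) + 0.1 * rng.standard_normal((n, n)) / np.sqrt(n)
b = A @ np.ones(n)                          # exact solution: all ones
x0 = rng.random(n)                          # random initial guess
d = np.diag(A).copy()
x, its, ok = gmres_norestart(A, b, x0, lambda v: v / d)   # Jacobi stand-in
print(ok, its, np.abs(x - 1.0).max() < 1e-4)
```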

In all tables with numerical results, "iter" is the number of GMRES iterations; p and s are the parameters used to control fill-in elements; and "spar" is the sparsity ratio, i.e., the ratio of the number of nonzeros of the preconditioner to that of the original matrix.¹ GILUMm denotes GILUM with a minimum degree reordering of the $C_a$ submatrix. The symbol "–" indicates that more than 100 iterations were required. Since these ILU preconditioners approach direct solvers as $p \to n$ and $s \to 0$, we compare their robustness with respect to memory cost (sparsity ratio). We remark that our codes have not been optimized and the chosen parameters were not meant to be optimal.

¹ The definition of the sparsity ratio differs from that of operator complexity in the algebraic multigrid method [31]. Operator complexity does not count the storage cost of the interlevel transfer operators, which may account for more than half of the total storage cost in multilevel ILU preconditioners.

6.1. RAEFSKY4 matrix

The RAEFSKY4 matrix² has 19,779 unknowns and 1,328,611 nonzeros. It comes from a buckling problem for a container model and was supplied by H. Simon of Lawrence Berkeley National Laboratory (originally created by A. Raefsky of Centric Engineering). Table 1 lists a set of test results obtained by varying the parameters p and s.

The results in Table 1 support several observations about solving the RAEFSKY4 matrix. GILUMm is the most robust preconditioner and used the least memory to achieve fast convergence. ILUT is the least robust of the four preconditioners compared. BILUTM is more robust than GILUM but less efficient than GILUMm. The number of iterations is directly related to the sparsity ratios: high-accuracy preconditioners (with large sparsity ratios) have fast convergence rates.

Table 1
Solving the RAEFSKY4 matrix by different preconditioners with different choices of the fill-in parameters

| p | s | BILUTM iter | BILUTM spar | GILUM iter | GILUM spar | GILUMm iter | GILUMm spar | ILUT iter | ILUT spar |
|---|---|---|---|---|---|---|---|---|---|
| 70 | $10^{-4}$ | 23 | 2.44 | – | 2.93 | 44 | 1.97 | – | 1.95 |
| 90 | $10^{-4}$ | 41 | 2.95 | 98 | 3.40 | 40 | 2.25 | – | 2.45 |
| 70 | $10^{-5}$ | 26 | 2.58 | – | 3.21 | 21 | 2.05 | – | 1.96 |
| 100 | $10^{-5}$ | 22 | 3.44 | 86 | 3.25 | 20 | 2.84 | – | 2.71 |
| 70 | $10^{-6}$ | 22 | 2.58 | – | 2.68 | 9 | 2.10 | – | 1.95 |
| 100 | $10^{-6}$ | 11 | 3.53 | 72 | 3.38 | 4 | 2.91 | – | 2.72 |
| 120 | $10^{-6}$ | 4 | 4.01 | 13 | 4.36 | 5 | 3.42 | – | 3.22 |
| 110 | $10^{-7}$ | 2 | 3.79 | 78 | 3.71 | 1 | 3.22 | – | 2.98 |
| 130 | $10^{-7}$ | 43 | 4.45 | 2 | 3.91 | 1 | 3.72 | 73 | 3.47 |

² The RAEFSKY matrices are available online from the University of Florida sparse matrix collection [17] at http://www.cise.ufl.edu/~davis/sparse.

6.2. WIGTO966 matrix

The WIGTO966 matrix³ has 3864 unknowns and 238,252 nonzeros. It comes from an Euler equation model and was supplied by L. Wigton of Boeing. It is solvable by ILUT with large values of p [13]. This matrix was also used to compare BILUM with ILUT in [35] and to test point and block preconditioning techniques in [14,15].

³ The WIGTO966 matrix is available from the author.

The test results in Table 2 show that BILUTM is the most robust preconditioner and ILUT the least robust in solving the WIGTO966 matrix. In fact, ILUT did not converge for any of the parameters tested, even in cases where it used more storage space than the other preconditioners. Interestingly, GILUMm used even less storage space than ILUT. This and other tests show that the minimum degree reordering of the vertex cover nodes does reduce the number of fill-in elements significantly.

Table 2
Solving the WIGTO966 matrix by different preconditioners with different choices of the fill-in parameters (a dash marks a run that did not converge)

Parameters     BILUTM        GILUM         GILUMm        ILUT
p     s        iter  spar    iter  spar    iter  spar    iter  spar
60    10^-4    27    2.29    -     2.32    -     1.75    -     1.86
90    10^-4    29    3.21    52    3.20    -     2.53    -     2.78
140   10^-4    8     4.47    25    4.62    36    3.73    -     4.28
80    10^-5    22    3.00    -     3.02    67    2.31    -     2.48
140   10^-5    11    4.64    25    4.85    30    3.84    -     4.28
80    10^-6    22    3.01    -     3.06    74    2.33    -     2.48
140   10^-6    10    4.67    26    4.98    29    3.92    -     4.29
90    10^-7    22    3.25    53    3.39    -     2.63    -     2.78
140   10^-7    10    4.70    25    5.08    29    3.97    -     4.29

6.3. BARTHS1A matrix

The BARTHS1A matrix⁴ has 15,735 rows and 539,225 nonzeros and was supplied by T. Barth of NASA Ames. It comes from a 2D high Reynolds number airfoil problem with a one-equation turbulence model. For this set of tests the diagonal threshold parameter was set to 0.05. The results are given in Table 3.

Once again, ILUT did not converge under our test conditions. BILUTM seems to perform slightly better than GILUM and GILUMm and used less storage space. GILUM is the only preconditioner that converged for all the parameters tested, and it used the most storage space.

⁴ The BARTHS1A matrix is available from the author.

6.4. OLAFU matrix

The OLAFU matrix⁵ has 16,146 unknowns and 1,015,156 nonzeros. It is a structural modeling problem from NASA Langley. The diagonal threshold parameter was 0.2. Table 4 lists test results for a few sets of parameters (p, s).

Both GILUM and GILUMm did much better than BILUTM in this set of tests. GILUM generally converged in fewer iterations but used more storage space than GILUMm. ILUT did very poorly and did not converge for any of the test parameters chosen; in fact, we observed little residual reduction in 100 iterations.

⁵ The OLAFU matrix is available online from the University of Florida sparse matrix collection [17] at http://www.cise.ufl.edu/~davis/sparse.

6.5. Diagonal threshold parameter

The choice of the diagonal threshold parameter plays an important role in determining the convergence rate of the grid-based multilevel preconditioner. A good choice can result in a stable and accurate preconditioner, while a bad choice can lead to a useless one. Fig. 2 shows the convergence history of GILUMm with different values of the threshold parameter for solving the BARTHS1A matrix, with the other parameters fixed at p = 120 and s = 10^-5. The best choice in this case is 0.15; both larger and smaller values hampered the convergence of the preconditioner. In particular, choosing 0, which is equivalent to using no diagonal threshold strategy, yielded a preconditioner that provided almost no preconditioning effect at all.

Table 3
Solving the BARTHS1A matrix by different preconditioners with different choices of the fill-in parameters (a dash marks a run that did not converge)

Parameters     BILUTM        GILUM          GILUMm        ILUT
p     s        iter  spar    iter  spar     iter  spar    iter  spar
110   10^-5    90    6.74    92    8.49     -     7.37    -     6.00
140   10^-5    -     8.24    87    10.85    82    8.86    -     7.58
110   10^-6    86    7.16    86    8.79     -     7.77    -     6.06
140   10^-6    50    8.54    78    10.99    77    9.58    -     7.65
140   10^-7    45    8.75    77    11.26    77    9.99    -     7.68

Table 4
Solving the OLAFU matrix by different preconditioners with different choices of the fill-in parameters (a dash marks a run that did not converge)

Parameters     BILUTM        GILUM         GILUMm        ILUT
p     s        iter  spar    iter  spar    iter  spar    iter  spar
120   10^-5    -     4.10    77    3.86    96    3.61    -     3.42
150   10^-5    -     4.78    52    4.63    69    4.39    -     4.05
130   10^-6    98    4.58    52    4.28    69    4.02    -     3.70
150   10^-6    89    5.16    68    4.85    73    4.55    -     4.14
110   10^-7    -     4.14    84    3.74    95    3.51    -     3.23
150   10^-7    47    5.21    40    4.96    39    4.66    -     4.17
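The sketch below shows how a diagonal threshold test of the kind discussed in this section might split the rows of a matrix. Two things in it are assumptions made for illustration: the relative measure t_i = |a_ii| / sum_j |a_ij| and the function name. The criterion GILUM actually uses is the one defined earlier in the paper and in [38]. Rows whose measure falls below the threshold are not used as fine-level pivots and are deferred to the coarse level.

```python
def diagonal_threshold_split(rows, threshold):
    """Split rows into fine-level candidates and rows deferred to the coarse level.

    rows      -- dict i -> dict j -> a_ij (sparse rows of A)
    threshold -- rows whose relative diagonal measure is below it are deferred
    Assumed (hypothetical) measure: t_i = |a_ii| / sum_j |a_ij|, in [0, 1].
    """
    fine_candidates, deferred = [], []
    for i in sorted(rows):
        row = rows[i]
        total = sum(abs(v) for v in row.values())
        t_i = abs(row.get(i, 0.0)) / total if total > 0 else 0.0
        if t_i >= threshold:
            fine_candidates.append(i)
        else:
            deferred.append(i)
    return fine_candidates, deferred


rows = {
    0: {0: 4.0, 1: -1.0},              # t_0 = 0.8
    1: {0: -1.0, 1: 0.1, 2: -1.0},     # t_1 = 0.1 / 2.1, about 0.048
    2: {1: -1.0, 2: 3.0},              # t_2 = 0.75
}
for threshold in (0.0, 0.15, 0.78):
    print(threshold, diagonal_threshold_split(rows, threshold))
# 0.0 ([0, 1, 2], [])
# 0.15 ([0, 2], [1])
# 0.78 ([0], [1, 2])
```

A larger threshold defers more rows, so the coarse systems shrink more slowly. A threshold of 0 accepts every row, even one with a tiny or zero diagonal. This is one way to see why disabling the strategy can give an unstable factorization.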

Corresponding to the test results in Fig. 2, Fig. 3 shows the dimensions of the original matrix and of the coarse level systems for different values of the threshold parameter. A small threshold leads to a fast reduction of the system size as the number of reduction levels increases. As depicted in Fig. 2, a faster reduction of the system size usually yields a faster convergence rate. This observation, however, tells only half of the story. The other half is that too small a threshold weakens the effect of diagonal thresholding. Choosing a threshold of 0 leads to a very fast reduction of the system size; in fact, only three reductions are needed. However, as Fig. 2 shows and as remarked in Section 4, a preconditioner constructed without a diagonal threshold strategy may be unstable. (We note that the BARTHS1A matrix can be solved by BILUTM without a diagonal threshold strategy, but the required parameters were p = 250 and s = 10^-6; see [37].)

Fig. 2. Convergence history of GILUMm with different values of the diagonal threshold parameter for solving the BARTHS1A matrix with p = 120, s = 10^-5.

Fig. 3. Dimension of the original and coarse level systems of GILUMm with different values of the diagonal threshold parameter for solving the BARTHS1A matrix with p = 120, s = 10^-5.

7. Concluding remarks

We have presented a grid-based multilevel ILU preconditioning technique (GILUM) with a dual dropping strategy for solving general sparse matrices. The method offers flexibility in controlling the amount of fill-in during the ILU factorization and a cost-effective construction of the coarse level operator. We also implemented a diagonal threshold strategy in both the grid- and domain-based multilevel preconditioning techniques. GILUM combines ideas and concepts from multilevel preconditioning techniques and algebraic multigrid methods, and shows that two of the most promising classes of iterative techniques can be brought together.
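The following sketch illustrates how a coarse level operator arises in multilevel ILU methods of this family (ILUM [33], BILUM [36], BILUTM [37]), under the assumption that GILUM follows the same framework. In these methods the next-level system is an approximate Schur complement produced during the factorization. It is not a product with separately constructed transfer operators. The sketch computes the exact two-level version on a tiny dense matrix. The unknowns are split into a fine set and a coarse set, A = [[B, F], [E, C]], and the coarse operator is S = C - E B^{-1} F. The system A x = b is then solved in three steps: eliminate the fine unknowns, solve with S, and back-substitute. GILUM would replace the exact B^{-1} by an incomplete factorization with dropping and recurse on S; that part is not shown. The function names are hypothetical.

```python
from fractions import Fraction


def solve(M, rhs):
    """Gaussian elimination with partial pivoting; exact arithmetic via Fraction."""
    n = len(M)
    a = [[Fraction(x) for x in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for k in range(n):
        piv = max(range(k, n), key=lambda i: abs(a[i][k]))
        a[k], a[piv] = a[piv], a[k]
        for i in range(k + 1, n):
            f = a[i][k] / a[k][k]
            for j in range(k, n + 1):
                a[i][j] -= f * a[k][j]
    x = [Fraction(0)] * n
    for i in reversed(range(n)):
        s = sum((a[i][j] * x[j] for j in range(i + 1, n)), Fraction(0))
        x[i] = (a[i][n] - s) / a[i][i]
    return x


def two_level_solve(A, b, nf):
    """Solve A x = b, treating unknowns 0..nf-1 as the fine set.

    Returns the solution and the coarse operator S = C - E B^{-1} F.
    """
    n = len(A)
    nc = n - nf
    B = [row[:nf] for row in A[:nf]]
    F = [row[nf:] for row in A[:nf]]
    E = [row[:nf] for row in A[nf:]]
    C = [row[nf:] for row in A[nf:]]
    # Column j of B^{-1} F, obtained by solving with B.
    binv_f = [solve(B, [F[i][j] for i in range(nf)]) for j in range(nc)]
    S = [[C[i][j] - sum(E[i][k] * binv_f[j][k] for k in range(nf))
          for j in range(nc)] for i in range(nc)]
    # Eliminate the fine unknowns from the coarse equations.
    y = solve(B, b[:nf])
    g = [b[nf + i] - sum(E[i][k] * y[k] for k in range(nf)) for i in range(nc)]
    x_c = solve(S, g)
    # Back substitution for the fine unknowns.
    x_f = solve(B, [b[i] - sum(F[i][j] * x_c[j] for j in range(nc))
                    for i in range(nf)])
    return x_f + x_c, S


A = [[4, -1, 0, -1],
     [-1, 4, -1, 0],
     [0, -1, 4, -1],
     [-1, 0, -1, 4]]
b = [-2, 4, 6, 12]                  # b = A @ [1, 2, 3, 4]
x, S = two_level_solve(A, b, nf=2)
print([str(v) for v in x])          # ['1', '2', '3', '4']
print(S[0][0], S[0][1])             # 56/15 -16/15
```

Exact rational arithmetic makes the two-level solve reproduce the direct solution exactly. Any difference in a practical multilevel ILU comes from the dropping used to approximate B^{-1} and S.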

Our numerical experiments with several realistic unstructured sparse matrices show that the proposed preconditioning technique indeed demonstrates the anticipated robustness and effectiveness. Both GILUM and BILUTM are more robust and more efficient than standard ILUT. We also showed that it is sometimes useful to reorder the fine level nodes with a minimum degree ordering before the ILU factorization is applied; such a reordering can at least reduce the number of fill-in elements during the ILUT factorization. Our numerical experiments suggest that the grid- and domain-based multilevel ILU preconditioning techniques have comparable robustness. One implication of the results of this paper is that multilevel ILU preconditioning techniques and algebraic multigrid preconditioning approaches should have comparable robustness when they are fully algebraic with respect to general sparse matrices. Thus, future research on either multilevel preconditioning techniques or algebraic multigrid methods should take both approaches into consideration and combine the strengths of both.
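The fill reduction attributed to minimum degree reordering can be illustrated with a small sketch of the textbook greedy minimum degree algorithm [25], run on the elimination graph. At each step it eliminates a node of smallest current degree and joins that node's remaining neighbours into a clique. That clique is exactly the fill that Gaussian elimination would create. This is a sketch under simplifying assumptions, not the ordering code used for GILUMm; production implementations use quotient graphs and approximate degrees. The helper names are hypothetical.

```python
def minimum_degree_order(adj):
    """Greedy minimum degree ordering on the elimination graph.

    adj maps each node to the set of its neighbours (symmetric, no self loops).
    At each step a node of smallest current degree is eliminated and its
    remaining neighbours are joined into a clique, i.e. the fill edges that
    Gaussian elimination would create.
    """
    graph = {v: set(nbrs) for v, nbrs in adj.items()}
    order = []
    while graph:
        # Ties are broken by the smallest node label so the result is deterministic.
        v = min(graph, key=lambda u: (len(graph[u]), u))
        nbrs = graph.pop(v)
        for u in nbrs:
            graph[u].discard(v)
            graph[u] |= nbrs - {u}
        order.append(v)
    return order


def count_fill(adj, order):
    """Number of fill edges created by eliminating the nodes in `order`."""
    graph = {v: set(nbrs) for v, nbrs in adj.items()}
    fill = 0
    for v in order:
        nbrs = sorted(graph.pop(v))
        for u in nbrs:
            graph[u].discard(v)
        for i, a in enumerate(nbrs):
            for b in nbrs[i + 1:]:
                if b not in graph[a]:
                    graph[a].add(b)
                    graph[b].add(a)
                    fill += 1
    return fill


# Star graph: node 0 is coupled to nodes 1-4, which are not coupled to each other.
star = {0: {1, 2, 3, 4}, 1: {0}, 2: {0}, 3: {0}, 4: {0}}
order = minimum_degree_order(star)
print(order)                                                        # [1, 2, 3, 0, 4]
print(count_fill(star, [0, 1, 2, 3, 4]), count_fill(star, order))  # 6 0
```

On the star graph, eliminating the centre first creates a clique on the four leaves (six fill edges). The minimum degree order eliminates the leaves first and creates no fill.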

Unlike BILUTM, the current version of GILUM does not seem to possess inherent parallelism. However, parallelism may be introduced by replacing ILUT with a sparse approximate inverse strategy [46,44]. The construction process would then have to be modified. We will extend our research along this line.


Acknowledgements

This work was supported by the US National Science Foundation under grants CCR-9902022 and CCR-9988165, and in part by the University of Kentucky Center for Computational Sciences.

References

[1] O. Axelsson, P.S. Vassilevski, Algebraic multilevel preconditioning methods, SIAM J. Numer. Anal. 27 (6) (1990) 1569–1590.

[2] V.A. Bandy, Black box multigrid for convection–diffusion equations on advanced computers, Ph.D. Thesis, University of Colorado, Denver, CO, 1996.

[3] R.E. Bank, R.K. Smith, The incomplete factorization multigraph algorithm, SIAM J. Sci. Comput. 20 (4) (1999) 1349–1364.

[4] R.E. Bank, C. Wagner, Multilevel ILU decomposition, Numer. Math. 82 (4) (1999) 543–576.

[5] R.E. Bank, J. Xu, The hierarchical basis multigrid method and incomplete LU decomposition, in: D. Keyes, J. Xu (Eds.), Proceedings of the Seventh International Symposium on Domain Decomposition Methods for Partial Differential Equations, AMS, Providence, RI, 1994, pp. 163–173.

[6] E.F.F. Botta, F.W. Wubs, Matrix renumbering ILU: an effective algebraic multilevel ILU preconditioner for sparse matrices, SIAM J. Matrix Anal. Appl. 20 (4) (1999) 1007–1026.

[7] D. Braess, Towards algebraic multigrid for elliptic problems of second order, Computing 55 (4) (1995) 379–393.

[8] A. Brandt, S. McCormick, J. Ruge, Algebraic multigrid (AMG) for sparse equations, in: D.J. Evans (Ed.), Sparsity and its Applications (Loughborough 1983), Cambridge University Press, Cambridge, MA, 1985, pp. 257–284.

[9] M. Brezina, A.J. Cleary, R.D. Falgout, V.E. Henson, J.E. Jones, T.A. Manteuffel, S.F. McCormick, J.W. Ruge, Algebraic multigrid based on element interpolation (AMGe), SIAM J. Sci. Comput. 22 (5) (2000) 1570–1592.

[10] T.F. Chan, S. Go, J. Zou, Multilevel domain decomposition and multigrid methods for unstructured meshes: algorithms and theory, Technical Report CAM 95-24, Department of Mathematics, UCLA, Los Angeles, CA, 1995.

[11] Q.S. Chang, Y.S. Wong, L.Z. Feng, New interpolation formulas of using geometric assumptions in the algebraic multigrid method, Appl. Math. Comput. 50 (2–3) (1992) 223–254.

[12] Q.S. Chang, Y.S. Wong, H.Q. Fu, On the algebraic multigrid method, J. Comput. Phys. 125 (1996) 279–292.

[13] A. Chapman, Y. Saad, L. Wigton, High-order ILU preconditioners for CFD problems, Int. J. Numer. Meth. Fluids 33 (6) (2000) 767–788.

[14] E. Chow, M.A. Heroux, An object-oriented framework for block preconditioning, ACM Trans. Math. Software 24 (2) (1998) 159–183.

[15] E. Chow, Y. Saad, Experimental study of ILU preconditioners for indefinite matrices, J. Comput. Appl. Math. 86 (2) (1997) 387–414.

[16] A.J. Cleary, R.D. Falgout, V.E. Henson, J.E. Jones, T.A. Manteuffel, S.F. McCormick, G.N. Miranda, J.W. Ruge, Robustness and scalability of algebraic multigrid, SIAM J. Sci. Comput. 21 (5) (2000) 1886–1908.

[17] T. Davis, University of Florida sparse matrix collection, NA Digest 97 (23) (1997).

[18] E.F. D'Azevedo, F.A. Forsyth, W.P. Tang, Ordering methods for preconditioned conjugate gradient methods applied to unstructured grid problems, SIAM J. Matrix Anal. Appl. 13 (1992) 944–961.


[19] P.M. de Zeeuw, Matrix-dependent prolongations and restrictions in a blackbox multigrid solver, J. Comput. Appl. Math. 33 (1990) 1–25.

[20] J.E. Dendy Jr., Black box multigrid, J. Comput. Phys. 48 (3) (1982) 366–386.

[21] I.S. Duff, G.A. Meurant, The effect of reordering on preconditioned conjugate gradients, BIT 29 (1989) 635–657.

[22] L.C. Dutto, The effect of reordering on the preconditioned GMRES algorithm for solving the compressible Navier–Stokes equations, Int. J. Numer. Meth. Engrg. 36 (3) (1993) 457–497.

[23] H.C. Elman, A stability analysis of incomplete LU factorization, Math. Comput. 47 (175) (1986) 191–217.

[24] H.C. Elman, Approximate Schur complement preconditioners on serial and parallel computers, SIAM J. Sci. Statist. Comput. 10 (3) (1989) 581–605.

[25] J.A. George, J.W.H. Liu, The evolution of the minimum degree ordering algorithm, SIAM Rev. 31 (1989) 1–19.

[26] M. Griebel, T. Neunhoeffer, Parallel point- and domain-oriented multilevel methods for elliptic PDEs on workstation networks, J. Comput. Appl. Math. 66 (1996) 267–278.

[27] J.A. Meijerink, H.A. van der Vorst, An iterative solution method for linear systems of which the coefficient matrix is a symmetric M-matrix, Math. Comput. 31 (1977) 148–162.

[28] C.W. Oosterlee, T. Washio, An evaluation of parallel multigrid as a solver and a preconditioner for singularly perturbed problems, SIAM J. Sci. Comput. 19 (1) (1998) 87–110.

[29] A. Ramage, A multigrid preconditioner for stabilised discretizations of advection–diffusion problems, Technical Report 33, Department of Mathematics, University of Strathclyde, Glasgow, UK, 1998.

[30] A.A. Reusken, Approximate cyclic reduction preconditioning, Technical Report RANA 97-02, Department of Mathematics and Computing Science, Eindhoven University of Technology, The Netherlands, 1997.

[31] J.W. Ruge, K. Stüben, Algebraic multigrid, in: S. McCormick (Ed.), Multigrid Methods, Frontiers in Appl. Math., SIAM, Philadelphia, PA, 1987, pp. 73–130 (Chapter 4).

[32] Y. Saad, ILUT: a dual threshold incomplete LU preconditioner, Numer. Linear Algebra Appl. 1 (4) (1994) 387–402.

[33] Y. Saad, ILUM: a multi-elimination ILU preconditioner for general sparse matrices, SIAM J. Sci. Comput. 17 (4) (1996) 830–847.

[34] Y. Saad, Iterative Methods for Sparse Linear Systems, PWS Publishing, New York, 1996.

[35] Y. Saad, M. Sosonkina, J. Zhang, Domain decomposition and multi-level type techniques for general sparse linear systems, in: J. Mandel, C. Farhat, X.-C. Cai (Eds.), Domain Decomposition Methods 10, Contemporary Mathematics, vol. 218, AMS, Providence, RI, 1998, pp. 174–190.

[36] Y. Saad, J. Zhang, BILUM: block versions of multielimination and multilevel ILU preconditioner for general sparse linear systems, SIAM J. Sci. Comput. 20 (6) (1999) 2103–2121.

[37] Y. Saad, J. Zhang, BILUTM: a domain-based multilevel block ILUT preconditioner for general sparse matrices, SIAM J. Matrix Anal. Appl. 21 (1) (1999) 279–299.

[38] Y. Saad, J. Zhang, Diagonal threshold techniques in robust multi-level ILU preconditioners for general sparse linear systems, Numer. Linear Algebra Appl. 6 (4) (1999) 257–280.

[39] Y. Saad, J. Zhang, A multi-level preconditioner with applications to the numerical simulation of coating problems, in: D.R. Kincaid, A.C. Elster (Eds.), Iterative Methods in Scientific Computing II, IMACS, New Brunswick, NJ, 1999, pp. 437–449.

[40] Y. Saad, J. Zhang, Enhanced multilevel block ILU preconditioning strategies for general sparse linear systems, J. Comput. Appl. Math. 130 (2001) 99–118.

[41] G. Starke, Multilevel minimal residual methods for nonsymmetric elliptic problems, Numer. Linear Algebra Appl. 3 (5) (1996) 351–367.


[42] O. Tatebe, The multigrid preconditioned conjugate gradient method, in: N.D. Melson, T.A. Manteuffel, S.F. McCormick (Eds.), Proceedings of the Sixth Copper Mountain Conference on Multigrid Methods, Copper Mountain, CO, 1993, pp. 621–634.

[43] C. Wagner, W. Kinzelbach, G. Wittum, Schur-complement multigrid: a robust method for groundwater flow and transport problems, Numer. Math. 75 (1997) 523–545.

[44] J. Zhang, Two-grid analysis of minimal residual smoothing as a multigrid acceleration technique, Appl. Math. Comput. 96 (1) (1998) 27–45.

[45] J. Zhang, Preconditioned Krylov subspace methods for solving nonsymmetric matrices from CFD applications, Comput. Meth. Appl. Mech. Engrg. 189 (3) (2000) 825–840.

[46] J. Zhang, Sparse approximate inverse and multilevel block ILU preconditioning techniques for general sparse matrices, Appl. Numer. Math. 35 (1) (2000) 89–108.
