Log everything!

Log everything! Dr. Stefan Schadwinkel and Mike Lohmann

2 2

Who we are.

Log everything

Mike Lohmann, Architecture

Author (PHP Magazin, iX, heise.de)

Dr. Stefan Schadwinkel, Analytics

Author (heise.de, Cereb.Cortex, EJN, J.Neurophysiol.)

3 3

Agenda.

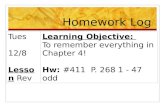

§ What we do. What we need to do. What we are doing.

§ Requirement: Log everything!

§ Infrastructure and technologies.

§ We want happy business users.

4 4

Icans GmbH

5 5

PokerStrategy.com in numbers

6,000,000 registered users

PokerStrategy.com: education since 2005

19 languages

2,800,000 page impressions (PI) per day

700,000 posts per day

7,600,000 requests per day

6 6

Topics of this talk

- How to use existing technologies and standards.

- Scalability and simplicity of the solution.

- "Good enough" for now!

- Showing the way from requirement to solution.

- Open-source Symfony2 (Sf2) bundles for logging.

- Live demo.

- Out-of-the-box solution.

- Ready-to-use scripts.

7 7

What we do.

§ We teach poker.

§ We create web applications.

§ We serve millions of users in different countries, respecting a multitude of market rules.

§ We make business decisions driven by complex data analytics.

8 8

What we need to do.

§ We need to try out other teaching topics, fast.

§ We need to gather and accumulate data from all of these "try-outs" and base business decisions on its analysis.

§ We need a bigger infrastructure to gather more data.

§ We need to hire more (good) people! :)

9 9

What we are doing.

§ We build ECF (Education Community Framework).

§ We (can) log everything!

§ We (now) use Amazon S3 and Amazon EMR as a scalable storage and MapReduce solution.

§ We hire (good) people! :)

10 10

Requirement: Log everything.

§ „Are you mad?!“

§ „Be more specific, please!“

§ „But what about the user‘s data?!“

11 11

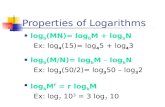

Logging Tools / Technologies

15.10.12

§ Producer: Symfony2 Application Server and Databases

§ Transport

- Now: RabbitMQ, Erlang consumer

- Was: Flume

§ Storage

- Now: S3 storage, Hadoop via Amazon EMR

- Was: virtualized in-house Hadoop

§ Analytics: MapReduce, Hive, BI via QlikView

12 12

Logging Infrastructure

[Diagram: the four stages Producer, Transport, Storage and Analytics. Components shown: LB, Reverse Proxy, App 1-x, Databases, RabbitMQ, Consumer, S3, Hadoop cluster, QlikView, Graylog, Zabbix.]

13 13

Producer

[Diagram: path of a PageHit event for /Home. Labels: Page Controller, PageHit Event, Listener, Logger::log(), Monolog-Logger (Processor, Handler, Formatter), LogMessage (JSON), Local RabbitMQ, Shovel.]

14 14

Producer

§ LoggingComponent: provides interfaces, filters and handlers

§ LoggingBundle: glues everything together with Symfony2

https://github.com/ICANS/IcansLoggingComponent

https://github.com/ICANS/IcansLoggingBundle
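
The producer chain named on these slides (PageHit event, listener, processor, handler, formatter, JSON log message) can be illustrated outside of PHP. What follows is a minimal sketch that uses Python's standard `logging` module as a stand-in for Monolog. It is not the API of the ICANS bundles; the class names `PageHitProcessor`, `JsonFormatter` and `ListHandler`, the logger name and the JSON field names are all assumptions made for illustration.

```python
import json
import logging


class PageHitProcessor(logging.Filter):
    """Plays the role of a processor: enriches every record with context."""

    def __init__(self, app_name):
        super().__init__()
        self.app_name = app_name

    def filter(self, record):
        record.app = self.app_name  # hypothetical extra field
        return True  # never drop the record


class JsonFormatter(logging.Formatter):
    """Plays the role of the formatter: one JSON document per message."""

    def format(self, record):
        return json.dumps({
            "channel": record.name,
            "level": record.levelname,
            "message": record.getMessage(),
            "app": getattr(record, "app", None),
            "path": getattr(record, "path", None),
        }, sort_keys=True)


class ListHandler(logging.Handler):
    """Plays the role of the handler; a real one would publish to the local RabbitMQ."""

    def __init__(self):
        super().__init__()
        self.messages = []

    def emit(self, record):
        self.messages.append(self.format(record))


def on_page_hit(logger, path):
    """Plays the role of the listener that reacts to a PageHit event."""
    logger.info("page hit", extra={"path": path})


handler = ListHandler()
handler.setFormatter(JsonFormatter())

logger = logging.getLogger("pagehit")  # hypothetical channel name
logger.setLevel(logging.INFO)
logger.propagate = False
logger.addFilter(PageHitProcessor("app-1"))
logger.addHandler(handler)

on_page_hit(logger, "/Home")
print(handler.messages[0])
```

The point of the split is that the listener only knows about the event, while enrichment, serialization and transport can each be swapped independently.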

15 15

Transport – First Try

§ Hey, if we use Hadoop, why not use Flume?

- Part of the Ecosystem

- Central config

- Extensible via Plugins

- Flexible Flow Configuration

- How? Flume Nodes → Flume Sinks

16 16

Transport – First Try

§ But, .. wait!

- Ecosystem? Just like Hadoop version numbers…

- Admins say: Central config woes!

- issues: multi-master, logical vs. physical nodes, Java heap space, etc.

- Will my plugin run with flume-ng?

- Ever tried to keep your complex flow and switch reliability levels?

Read: Our admins still hate me …

17 17

Transport – Second Try

§ RabbitMQ vs. Flume Nodes

- Each app server has its own local RabbitMQ

- The local RabbitMQ shovels its data to a central RabbitMQ cluster

- Similar to the Flume Node concept

- Decentralized config: Producers and consumers simply connect
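
The local-broker-plus-shovel idea can be pictured as store and forward: the application only talks to the broker on its own machine, and messages stay buffered there while the central side is unreachable. The following is a small in-memory simulation of that behaviour, not RabbitMQ code; `LocalBroker`, `CentralBroker` and their methods are hypothetical names.

```python
from collections import deque


class CentralBroker:
    """Stand-in for the central RabbitMQ cluster."""

    def __init__(self):
        self.available = True
        self.messages = []

    def publish(self, message):
        if not self.available:
            raise ConnectionError("central broker unreachable")
        self.messages.append(message)


class LocalBroker:
    """Stand-in for the RabbitMQ instance on one app server."""

    def __init__(self):
        self.queue = deque()

    def publish(self, message):
        # The application only ever talks to its local broker.
        self.queue.append(message)

    def shovel(self, central):
        """Forward queued messages; keep them locally if the central side is down."""
        moved = 0
        while self.queue:
            try:
                central.publish(self.queue[0])
            except ConnectionError:
                break
            self.queue.popleft()  # remove only after a successful publish
            moved += 1
        return moved


central = CentralBroker()
local = LocalBroker()

central.available = False
local.publish('{"path": "/Home"}')
local.publish('{"path": "/Forum"}')
print(local.shovel(central), len(local.queue))  # nothing moved, both still buffered

central.available = True
print(local.shovel(central), len(local.queue))  # both forwarded, buffer empty
```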

18 18

Transport – Second Try

§ But, .. wait! We still need Sinks.

- Custom crafted RabbitMQ consumers

- We could write them in PHP, but ..

- Erlang, teh awesome!

- Battle-hardened OTP framework.

- „Let it crash!“ .. and recover.

- Hot code change. If you want.

Read: Runs forever.
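
For readers who have not used OTP, the "let it crash .. and recover" idea is roughly this: the worker contains no defensive error handling, and a separate supervisor restarts it when it dies. The following is a loose Python analogy, not Erlang and not the talk's consumer; `supervise` and `FlakyConsumer` are hypothetical names, and a real OTP supervisor offers far more (restart strategies, supervision trees).

```python
class FlakyConsumer:
    """A worker that crashes a fixed number of times before succeeding."""

    def __init__(self, failures):
        self.failures = failures
        self.attempts = 0

    def __call__(self):
        self.attempts += 1
        if self.attempts <= self.failures:
            raise RuntimeError("consumer crashed")
        return "consumed after %d attempts" % self.attempts


def supervise(worker, max_restarts=3):
    """Run the worker and restart it on a crash, up to max_restarts times."""
    restarts = 0
    while True:
        try:
            return worker()
        except Exception:
            restarts += 1
            if restarts > max_restarts:
                raise  # give up and escalate, as a supervisor eventually would


print(supervise(FlakyConsumer(failures=2)))
```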

19 19

Storage – First Try

§ Use out-of-the-box Hadoop (Cloudera)

§ But:

- Virtualized Infrastructure

- Unknown usage patterns

- Must be cost effective

- Major Hadoop version upgrades

20 20

Storage – Second Try

§ Use Amazon Web Services

§ Provides flexible virtualized infrastructure

§ Cost-effective storage: S3

§ Hadoop on demand: EMR

21 21

Storage – Amazon S3

§ The Erlang RabbitMQ consumer simply copies the incoming data to S3

- Easy: replace the "hadoop" command with "s3cmd"
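
What such a consumer does can be sketched as: collect incoming messages, write them out as small compressed chunks, and hand each chunk to an uploader. The sketch below is Python rather than Erlang and is only an illustration under assumptions: `ChunkingConsumer`, the chunk size and the `incoming/chunk-NNNNN.gz` key scheme are hypothetical, and the `upload` callable stands in for whatever performs the actual copy (for example a call to `s3cmd put`).

```python
import gzip


class ChunkingConsumer:
    """Buffers log messages and emits them as small gzip-compressed chunks."""

    def __init__(self, upload, max_messages=3):
        self.upload = upload  # callable(key, data); stands in for the S3 copy
        self.max_messages = max_messages
        self.buffer = []
        self.chunk_no = 0

    def handle(self, message):
        """Called once per message taken from the queue."""
        self.buffer.append(message)
        if len(self.buffer) >= self.max_messages:
            self.flush()

    def flush(self):
        """Compress the buffered messages and upload them as one chunk."""
        if not self.buffer:
            return
        payload = "\n".join(self.buffer).encode("utf-8") + b"\n"
        key = "incoming/chunk-%05d.gz" % self.chunk_no  # hypothetical key scheme
        self.upload(key, gzip.compress(payload))
        self.buffer = []
        self.chunk_no += 1


store = {}  # stand-in for the S3 bucket
consumer = ChunkingConsumer(store.__setitem__, max_messages=2)
for line in ['{"n": 1}', '{"n": 2}', '{"n": 3}']:
    consumer.handle(line)
consumer.flush()  # write the last, partially filled chunk
print(sorted(store))
```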

22 22

Storage – Amazon S3

§ S3 bucket receives many small, compressed log file chunks

§ Amazon provides S3DistCp, which performs distributed data copies:

- Aggregate many small files into partitioned large chunks

- Change compression
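
The aggregation step can be pictured as grouping the small chunks by a pattern in their key and concatenating each group into one larger object. The sketch below shows only that idea in plain, single-process Python; it is not S3DistCp, it ignores compression, and the function name `aggregate_chunks` and the `incoming/<year>/<month>/<day>/` key layout are assumptions.

```python
import re
from collections import defaultdict


def aggregate_chunks(chunks, group_by):
    """Concatenate small chunks whose keys share the same captured group.

    chunks:   dict mapping object key -> bytes
    group_by: regex with one capture group that names the target partition
    """
    pattern = re.compile(group_by)
    grouped = defaultdict(list)
    for key in sorted(chunks):  # sorted for a deterministic order
        match = pattern.search(key)
        if match is None:
            continue  # in this sketch, non-matching keys are skipped
        grouped[match.group(1)].append(chunks[key])
    return {name: b"".join(parts) for name, parts in grouped.items()}


# Hypothetical key layout: incoming/<year>/<month>/<day>/<chunk>
small_chunks = {
    "incoming/2012/10/01/chunk-00000": b"a\n",
    "incoming/2012/10/01/chunk-00001": b"b\n",
    "incoming/2012/10/02/chunk-00000": b"c\n",
}
large = aggregate_chunks(small_chunks, r"incoming/(\d{4}/\d{2}/\d{2})/")
for partition, data in sorted(large.items()):
    print(partition, len(data))
```

Fewer, larger files matter because MapReduce jobs pay a per-file overhead, so many tiny inputs slow processing down.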

23 23

Analytics

§ We want happy business users.

§ We want to answer questions.

- People want answers to questions they have. Now.

- No, they couldn't tell you that question yesterday. If they had known, they would have already asked for the answer. Yesterday.

§ We also want data-driven applications.

- Production system analysis.

- Fraud prevention.

- Recommendations.

- Social metrics for our users.

24 24

Analytics

§ Remember MapReduce.

- Custom jobs.

  - Streaming: use your favorite language.

  - Java API: Cascading. Use your favorite JVM language: Java, Groovy, Clojure, Scala.

- Data queries.

  - Hive: similar to SQL.

  - Pig: data flow.

  - Cascalog: Datalog-like query language using Clojure and Cascading.

25 25

Analytics

§ Cascalog is Clojure, Clojure is Lisp

(?<- (stdout) [?person] (age ?person ?age) … (< ?age 30))

- ?<- : query operator

- (stdout) : Cascading output tap

- [?person] : columns of the dataset generated by the query

- (age ?person ?age) : "generator"

- (< ?age 30) : "predicate"

§ Use as many generators and predicates as you want.

§ Both can be any Clojure function.

§ Clojure can call anything that is available within a JVM.
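
For readers who do not read Lisp, the visible parts of the query say: take the `age` dataset, keep the rows whose age is below 30, and output the person column. A rough Python equivalent is sketched below. The sample rows and the function name `young_people` are made up for illustration, and whatever the "…" in the original query elides is not represented.

```python
# Hypothetical contents of the "age" generator: (person, age) tuples.
AGE = [("alice", 28), ("bob", 33), ("chris", 25)]


def young_people(age_rows, limit=30):
    """Return the ?person column for all rows that satisfy (< ?age limit)."""
    return [person for person, age in age_rows if age < limit]


# Printing plays the role of the (stdout) output tap.
for person in young_people(AGE):
    print(person)
```

The difference in practice is that Cascalog compiles such a query into MapReduce jobs, whereas the list comprehension runs in a single process.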

26 26

Analytics

§ We use Cascalog to preprocess and organize the incoming flow of log messages:

27 27

Analytics

§ Let‘s run the Cascalog processing on Amazon EMR:

./elastic-mapreduce --create --name "Log Message Compaction" \
  --bootstrap-action s3://[BUCKET]/mapreduce/configure-daemons \
  --num-instances $NUM \
  --slave-instance-type m1.large \
  --master-instance-type m1.large \
  --jar s3://[BUCKET]/mapreduce/compaction/icans-cascalog.jar \
  --step-action TERMINATE_JOB_FLOW \
  --step-name "Cascalog" \
  --main-class icans.cascalogjobs.processing.compaction \
  --args "s3://[BUCKET]/incoming/*/*/*/","s3://[BUCKET]/icanslog","s3://[BUCKET]/icanslog-error"

28 28

Analytics

§ After the Cascalog Query we have:

s3://[BUCKET]/icanslog/[WEBSITE]/icans.content/year=2012/month=10/day=01/part-00000.lzo

Hive Partitioning!
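
The `year=2012/month=10/day=01` segments follow the `key=value` directory convention that Hive uses for partitioned tables, so each path segment can serve as a partition column. A small sketch of how such a key could be built and read back is shown below. The function names are hypothetical and the layout is simply copied from the example path above; this is not the authors' Cascalog job.

```python
from datetime import date


def partition_key(bucket, website, log_type, day, part=0):
    """Build an object key in the layout shown on the slide."""
    return (
        "s3://%s/icanslog/%s/%s/year=%04d/month=%02d/day=%02d/part-%05d.lzo"
        % (bucket, website, log_type, day.year, day.month, day.day, part)
    )


def partition_values(key):
    """Extract the key=value path segments as a dict of partition columns."""
    pairs = (segment.split("=", 1) for segment in key.split("/") if "=" in segment)
    return {name: int(value) for name, value in pairs}


key = partition_key("[BUCKET]", "[WEBSITE]", "icans.content", date(2012, 10, 1))
print(key)
print(partition_values(key))
```

A query that filters on these columns only needs to read the matching directories, which is what makes this layout worthwhile for date-based log analysis.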

29 29

Analytics

§ Now we can access the log data within Hive:

30 30

Analytics

§ Now we can run Hive queries on the [WEBSITE]_icanslog_content table!

§ But we also want to store the result to S3.

31 31

Analytics

§ Now, get the stats:

32 32

Analytics

§ We can now simply copy the data from S3 and import it into any local analytics tool, such as:

- Excel (It must really make business people happy…)

- QlikView (Anyone can be happy with it…)

- R (If I want an answer…)

33 33

Merci.

Questions?

34 34

Contacts.

Dr. Stefan Schadwinkel

ICANS_StScha

Mike Lohmann

mikelohmann

35 35

Tools/Technologies

36

ICANS GmbH Valentinskamp 18 20354 Hamburg Germany Phone: +49 40 22 63 82 9-0 Fax: +49 40 38 67 15 92 Web: www.icans-gmbh.com