
Learning sensorimotor navigation using synchrony-based partner selection

Marwen Belkaid
ETIS laboratory, CNRS/ENSEA/UCP
95000 Cergy Cedex, France.

Caroline Lesueur-Grand
ETIS laboratory, CNRS/ENSEA/UCP
95000 Cergy Cedex, [email protected]

Ghilès Mostafaoui
ETIS laboratory, CNRS/ENSEA/UCP
95000 Cergy Cedex, France.

[email protected] Cuperlier
ETIS laboratory, CNRS/ENSEA/UCP
95000 Cergy Cedex, [email protected]

Philippe Gaussier
ETIS laboratory, CNRS/ENSEA/UCP
95000 Cergy Cedex, France.

ABSTRACT

Future robots are expected to become our partners and share the environments in which we live our daily lives. Since they will have to coexist with “non-expert” people (elders, impaired people, children, etc.), we must rethink the way we design human/robot interactions. In this paper, we take a radical simplification route, taking advantage of recent discoveries in low-level human interactions and dynamical motor control. We argue for the need to take the dynamics of the interactions into account. We therefore propose a bio-inspired neuronal architecture that mimics adult/infant interactions, which: (1) are initiated thanks to synchrony-based partner selection, (2) are maintained and re-engaged thanks to partner recognition and focus of attention, and (3) allow for learning sensorimotor navigation based on place/action associations. Our experiment shows good results for the learning of a navigation area and suggests that this approach is promising for more complex tasks and interactions.

CCS Concepts

• Computer systems organization → Embedded systems; Redundancy; Robotics; • Networks → Network reliability;

Keywords

Human-Robot interaction, synchrony, partner selection, sensorimotor navigation, neural networks

1. INTRODUCTION


ICAIR and CACRE ’16, July 13-15, 2016, Kitakyushu, Japan. © 2016 ACM. ISBN 978-1-4503-4235-3/16/07... $15.00

DOI: http://dx.doi.org/10.1145/2952744.2952754

Future robots are expected to become partners and facilitate our daily life. This trend is confirmed by several emerging applications of Human-Robot Interaction (HRI), such as companion robots for helping elders and impaired people, robots as pedagogical tools, monitoring robots, guiding robots (museums, hotels), etc.

Hence, considering the possible presence of these artificial agents in our physical environment, the acceptability of HRI becomes a central issue [1]. We must think or rethink future robots so that they behave in a more “user-friendly” way and are able to interact with “non-expert” persons (including elders, impaired people, children, etc.). An observed fact is the difficulty that current complex computer systems (monitoring systems, drones, robots, etc.) have in sensing the human world and interacting with it in ways that emulate collaborative human-human work. Current HRI has two major drawbacks: (i) Human-Machine Interfaces (HMI) are highly demanding, and (ii) humans have to adapt their way of thinking to the potential and limitations of the system. A major and difficult issue is understanding and mastering the development of “pleasant” but still efficient HRI.

In this race for more user-friendly HRI, much research has been conducted on the technical features of HRI. For instance, a major focus has been put on the expressiveness and the appearance of robots and avatars [2]. These numerous and interesting studies contribute to partially solving the above questions. Yet, these approaches have neglected the importance of understanding the dynamics of the interactions.

In fact, studies in developmental psychology have acknowledged synchrony as a prime requirement for interaction between a mother and her infant. An infant stops interacting with its mother when she stops synchronizing with it [3]. The synchrony detection mechanism in young infants plays a pervasive role in learning and cognitive development (word learning, self-awareness and control, learning related to self, etc. [4][5]).

In this paper, instead of elaborating on existing compli-cated solutions, we take a radical simplification route takingadvantages of recent discoveries in low-level human interac-tions and dynamical motor control. We argue that exploit-ing natural stability and adaptability properties of synchro-nizations and rhythmic activities can solve several of the

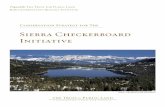

(a) Robotic platform (b) Indoor environment

Figure 1: Experimental setup and environment. LEFT:

Robotic platform: Two panning cameras – respectively

simulating head motion during interactions (1) and eye

saccades during navigation (2) –, an oscillating arm (3)

and a laser sensor (4). RIGHT: Experimental environ-

ment: in a 8 m long by 6.5 m wide lab room, the toler-

ated navigation zone (stripes) is a 3.40 m diameter area

around a safe zone (checkerboard) in the center of the

room.

acceptability problems of HRIs, and allow rethinking thecurrent approaches to design them.

We discuss the use of synchrony and rhythmic adaptation in the applicative framework of Human/Robot Interaction in the context of navigation tasks. We propose a bio-inspired adult/infant-like interaction permitting a mobile robot to: (1) initiate an interaction with a selected human partner, (2) maintain the interaction by learning to recognize the partner and to focus its visual attention on him, and (3) learn, by imitating the human, to autonomously navigate in a “tolerated” area shown by the selected partner (through the interaction).

2. EXPERIMENTAL SETUP AND POSITIONING

As stated above, our objective here is to build a bio-inspired model for HRI that takes into account the human sensitivity to synchronous interactions. In addition to the importance of synchrony in human development, it is worth noting that studies on interpersonal motor coordination point out unintentional synchronizations among people. Issartel et al. studied interpersonal motor coordination between two participants who were instructed not to coordinate their movements. The results showed that participants could not avoid unintentional coordination with each other [6]. This indicates that when visual information is shared between two people in an interpersonal situation, they coordinate (unintentionally) with each other.

Inspired by these studies, we argue that to obtain “intuitive” HRI we must build bidirectional interactions: the robot must not only be able to adapt its dynamics to those of the human partner, but also “entrain” the human rhythm unintentionally (without cognitive load) by giving feedback (stimuli) representing its own dynamics (head movement, arm movement, auditory stimuli, etc.).

For our experimental setup we use a 40 cm-wide, 48 cm-long, 140 cm-high, 4-wheel mobile robot (Robulab), shown in Figure 1a. This platform is equipped with a small added arm controlled by an oscillator representing its interaction dynamics. Two panning cameras are also used in this experiment: one for landmark recognition (simulating eye saccades during navigation) and the other for optical flow detection, partner recognition and visual tracking (simulating head motion during interactions). They have rotation fields of approximately 300 degrees (the whole panorama minus the pole position) and 200 degrees, respectively. The robot additionally has a laser sensor for the detection of obstacles and human presence. The experiments take place in the indoor environment illustrated in Figure 1b.

Figure 2: Synoptic schema illustrating the human/robot interaction dynamics. In order to learn a navigation task, we establish a bidirectional action-based interaction. When the human imitates the robot arm motion, synchrony detection allows for partner selection. Deviation detection according to the orientation shown by the selected partner triggers the sensorimotor learning of associations between visual places and directions (VPC). The robot interacts with the environment by performing place/action navigation.

The typical scenario of our application (summarized in Figure 2) is as follows:

• The robot moves autonomously according to its own dynamics (physically expressed by the rhythmic oscillations of its arm) and visually scans the environment with its first panning camera.

• If a human stands in front of it and starts moving, the induced optical flow influences the robot dynamics and consequently the arm oscillations; the human partner can thus be rhythmically and (possibly) unintentionally “entrained”.

• If this mutual (bidirectional) influence converges to common motion dynamics, synchrony is detected; the robot stops scanning the environment and focuses its visual attention on synchronous regions, which allows it to learn the partner's shape.

• When the selected partner starts moving, the robot tracks and follows the human using the second camera; the partner thereby shows the desired direction for the current robot location (place).

• If the difference between the robot body and head orientations (resp. current and desired orientations) is sufficient, a new place/action learning is triggered.

• After the human intervention, whether a new place is learned or not, the robot starts scanning the environment again to perform place/action navigation.

Figure 3: Neuronal architecture for interactive learning of sensorimotor navigation. Catego: Categorization of input patterns. STM: Short Term Memory (temporal integration). W-W map: What-Where map storing landmark/azimuth couples (max-pi is basically a tensorial product between the two input vectors). LMS: Least Mean Square conditioning.
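The interaction scenario listed above can be read as a small state machine. The sketch below is only an illustration of that reading: the state names, the event names and the transition table are hypothetical labels chosen here, not part of the neural architecture, and the direct `partner_recognized` shortcut is an assumption based on the later statement that a known partner is tracked without a new synchronization phase.

```python
from enum import Enum, auto


class State(Enum):
    NAVIGATE = auto()       # scan the environment, place/action navigation
    ENTRAIN = auto()        # optical flow influences the arm oscillator
    LEARN_PARTNER = auto()  # synchrony detected, partner shape is learned
    TRACK = auto()          # head camera follows the selected partner
    LEARN_PLACE = auto()    # a new place/action association is stored


# Hypothetical event names; unknown (state, event) pairs leave the state unchanged.
TRANSITIONS = {
    (State.NAVIGATE, "human_motion"): State.ENTRAIN,
    (State.NAVIGATE, "partner_recognized"): State.TRACK,
    (State.ENTRAIN, "synchrony_detected"): State.LEARN_PARTNER,
    (State.ENTRAIN, "human_left"): State.NAVIGATE,
    (State.LEARN_PARTNER, "partner_moves"): State.TRACK,
    (State.TRACK, "deviation_sufficient"): State.LEARN_PLACE,
    (State.TRACK, "intervention_over"): State.NAVIGATE,
    (State.LEARN_PLACE, "place_learned"): State.NAVIGATE,
}


def step(state, event):
    """Return the next state for one event."""
    return TRANSITIONS.get((state, event), state)


def run(events, state=State.NAVIGATE):
    """Apply a sequence of events starting from `state`."""
    for event in events:
        state = step(state, event)
    return state
```

In this reading, every human intervention ends in `NAVIGATE`, whether or not a place was learned, which matches the last item of the scenario.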

3. SYNCHRONY-BASED PARTNER SELECTION

In this section, we focus on the interaction mechanisms that will later be used to learn the navigation task. As stated earlier, interaction is initiated through synchrony-based partner selection. Initially, the robot arm oscillates at its own frequency and amplitude, reflecting the robot's internal dynamics. When a person interacts with the robot by moving her arm, the motion in the visual field is estimated by an optical flow algorithm. The resulting motion energy is used to entrain and adapt the robot's arm oscillations to the human movement dynamics using a simple neural model presented in [7] and [8]. Synchrony detection then consists in extracting the maxima from the human and the robot oscillators and calculating their correlation. Fig 4 shows images from phases of interaction engagement by two partners.
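To make the synchrony detection step more concrete, here is a minimal non-neural sketch of the idea of correlating the maxima of two oscillating signals. It is not the model of [7] and [8]: the function names, the tolerance `width` and the normalization are assumptions made for illustration only.

```python
import numpy as np


def local_maxima(signal):
    """Return the indices of the strict local maxima of a 1D signal."""
    s = np.asarray(signal, dtype=float)
    inner = (s[1:-1] > s[:-2]) & (s[1:-1] > s[2:])
    return np.flatnonzero(inner) + 1


def peak_train(signal, width=2):
    """Binary train marking each maximum, widened by `width` samples of tolerance."""
    train = np.zeros(len(signal))
    for i in local_maxima(signal):
        train[max(0, i - width): i + width + 1] = 1.0
    return train


def synchrony_level(human, robot, width=2):
    """Normalized correlation (between 0 and 1) of the two peak trains."""
    h = peak_train(human, width)
    r = peak_train(robot, width)
    denom = np.sqrt(h.sum() * r.sum())
    return float((h * r).sum() / denom) if denom > 0 else 0.0
```

With this measure, two oscillations whose maxima coincide give a level close to 1, while oscillations in phase opposition give a level of 0; a threshold on the level would then play the role of the partner selection criterion.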

Synchronized interactants are selected as partners. In order to facilitate future interaction initiations, the robot learns to visually recognize those partners. To do so, points of interest are detected and local views around them are encoded by a log-polar transformation. In the dedicated neural network, a first layer categorizes those local views. Then, a short term memory allows a second layer to capture a set of local views in order to recognize a partner (see Fig. 3).
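As an illustration of the log-polar encoding of a local view, the sketch below samples a grayscale image on a log-polar grid centered on a point of interest. The grid sizes, the radius range and the nearest-neighbour sampling are assumptions chosen for the example; the text does not specify the parameters actually used.

```python
import numpy as np


def log_polar_patch(image, center, n_rho=16, n_theta=32, r_min=1.0, r_max=20.0):
    """Sample a grayscale image on a log-polar grid around `center` (row, col).

    Rows of the result index log-spaced radii and columns index angles.
    Sampling is nearest-neighbour; coordinates are clipped to the image bounds.
    """
    img = np.asarray(image, dtype=float)
    radii = np.exp(np.linspace(np.log(r_min), np.log(r_max), n_rho))
    angles = np.linspace(0.0, 2.0 * np.pi, n_theta, endpoint=False)
    rr, aa = np.meshgrid(radii, angles, indexing="ij")
    rows = np.rint(center[0] + rr * np.sin(aa)).astype(int)
    cols = np.rint(center[1] + rr * np.cos(aa)).astype(int)
    rows = np.clip(rows, 0, img.shape[0] - 1)
    cols = np.clip(cols, 0, img.shape[1] - 1)
    return img[rows, cols]
```

In such an encoding, a rotation of the view around the point of interest becomes a shift along the angle axis and a change of scale becomes a shift along the radius axis, which is one common motivation for using it to describe local views.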

Recognized partners are then visually tracked. Because the stability of points of interest cannot be guaranteed when the partner starts moving, visual tracking relies on low-resolution shape recognition. More precisely, the original 320×240 image is subsampled by a factor of 2 and the central 65×45 subimage is captured while facing the human partner. The tracking method then consists in finding the maximum of correlation in an 11-pixel neighbourhood around the center of the image. We use a 1D correlation to reduce computational cost [9].
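The tracking step can be sketched as follows, under several assumptions that are not stated in the text: the 1D signal is taken to be the mean of each column of the central window, the search is horizontal only, and the 11-pixel neighbourhood is read as shifts from -5 to +5 pixels in the subsampled image. All function names are hypothetical.

```python
import numpy as np

TEMPLATE_W, TEMPLATE_H = 65, 45  # central subimage size given in the text
HALF_SEARCH = 5                  # assumption: 11 pixels read as shifts -5..+5


def subsample(image, factor=2):
    """Keep one pixel out of `factor` along each axis."""
    return np.asarray(image, dtype=float)[::factor, ::factor]


def column_profile(image, shift=0):
    """1D profile (mean over rows) of the central window, shifted horizontally."""
    h, w = image.shape
    top = (h - TEMPLATE_H) // 2
    left = (w - TEMPLATE_W) // 2 + shift
    window = image[top:top + TEMPLATE_H, left:left + TEMPLATE_W]
    return window.mean(axis=0)


def normalized_correlation(a, b):
    """Zero-mean normalized correlation of two 1D profiles."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0


def track_shift(template_profile, frame):
    """Return the horizontal shift (in subsampled pixels) that best matches the template."""
    small = subsample(frame)
    shifts = list(range(-HALF_SEARCH, HALF_SEARCH + 1))
    scores = [normalized_correlation(template_profile, column_profile(small, s))
              for s in shifts]
    return shifts[int(np.argmax(scores))]
```

A typical use would be to compute `column_profile(subsample(frame))` once while facing the partner, then call `track_shift` on each new frame and turn the camera in the direction of the returned shift.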

Fig 4 shows results regarding partner selection and visual tracking. Here, the robot is static while three different people try to engage an interaction. Two of them had already synchronized their arm movements with the robot but the third one had not. Consequently, the robot only learned the shapes of the two synchronized partners. Therefore, visual tracking is performed only when one of them is recognized (the recognition level has to be higher than a threshold).

Figure 4: TOP: Interaction initiation with the robot. Two partners move their arms in front of the camera. BOTTOM: Sequence of human/robot interactions with three partners. The two selected partners are recognized and visual tracking is launched after each of their interventions. However, a third (unknown) person is not able to engage the interaction without the synchronization phase.

4. NAVIGATION AREA LEARNING

The purpose of this experiment is to teach the robot to stay inside an area of the environment (see Fig 1b). Given the obstacle detection distance, we define a tolerated navigation zone with a diameter of 3.40 m in the center. Taking into account the linear and rotational speeds, the safe zone where the robot can freely move is a 1.80 m diameter concentric area. The 0.80 m-wide ring between these two circles is the area where a human intervention is required in order to correctly build the attraction basin inside the tolerated zone.
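The geometry of the zones can be checked with a few lines of code: the ring width is half the difference between the two diameters, (3.40 - 1.80) / 2 = 0.80 m. The sketch below also classifies a position, assuming (for illustration only) coordinates in meters with the origin at the center of the zones; the function and label names are hypothetical.

```python
import math

TOLERATED_DIAMETER_M = 3.40  # tolerated navigation zone (from the text)
SAFE_DIAMETER_M = 1.80       # safe zone (from the text)


def ring_width(outer_diameter, inner_diameter):
    """Width of the ring between two concentric circles."""
    return (outer_diameter - inner_diameter) / 2.0


def classify_zone(x, y, center=(0.0, 0.0)):
    """Label a position relative to the two concentric zones."""
    d = math.hypot(x - center[0], y - center[1])
    if d <= SAFE_DIAMETER_M / 2.0:
        return "safe"
    if d <= TOLERATED_DIAMETER_M / 2.0:
        return "intervention ring"
    return "outside"
```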

At the beginning of the experiment, the robot is inside the safe zone. Before any learning, it navigates randomly due to noise activities. Whenever it gets close to the limits of the area, the human interactor intervenes to correct its trajectory. As explained earlier, if the robot does not recognize the human interactor, synchrony detection is necessary to engage the interaction. In the case of a known partner, the robot visually tracks the person in front of it. If a sufficient deviation (20 degrees in this experiment) is detected between its current direction and the one suggested by the partner, the robot visually scans the environment with the second camera.
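The deviation test can be sketched as a wrapped angular difference between the body (current) and head (desired) orientations. The 20-degree threshold comes from the text; the function names and the choice of an inclusive comparison are assumptions.

```python
DEVIATION_THRESHOLD_DEG = 20.0  # value reported for this experiment


def signed_deviation_deg(body_deg, head_deg):
    """Smallest signed angle, in [-180, 180), from body to head orientation."""
    return (head_deg - body_deg + 180.0) % 360.0 - 180.0


def should_learn(body_deg, head_deg, threshold_deg=DEVIATION_THRESHOLD_DEG):
    """True when the deviation is large enough to trigger place/action learning."""
    return abs(signed_deviation_deg(body_deg, head_deg)) >= threshold_deg
```

Wrapping the difference matters near the 0/360 boundary: a body orientation of 350 degrees and a head orientation of 10 degrees differ by 20 degrees, not 340.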

As for partner recognition, points of interest are detected and local views around them are encoded by a log-polar transformation. Then, a short term memory stores the local views encoding landmarks as well as the azimuths where they are observed (what-where information). Thereby, a new place is categorized as a set of landmark/azimuth couples. To each place, the robot associates the direction suggested by the partner during the interaction. Such sensorimotor learning of place/action couples builds a basin of attraction that guides the robot navigation [10].
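The place/action principle can be illustrated with a deliberately simplified, non-neural sketch: a place is stored as a set of landmark/azimuth couples together with a direction, and the direction of the best-recognized place is selected. The class name, the linear matching score, the 30-degree tolerance and the landmark labels are all assumptions for illustration; the architecture described here relies on neural categorization and LMS conditioning instead.

```python
def angle_diff_deg(a, b):
    """Absolute angular difference in degrees, in [0, 180]."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


class PlaceActionMemory:
    """Toy store of places (landmark -> azimuth) and their associated directions."""

    def __init__(self, azimuth_tolerance_deg=30.0):
        self.tolerance = azimuth_tolerance_deg
        self.places = []  # list of (landmark/azimuth dict, direction in degrees)

    def learn(self, landmarks, direction_deg):
        """Associate the current set of landmark/azimuth couples with a direction."""
        self.places.append((dict(landmarks), direction_deg))

    def recognition(self, stored, observed):
        """Mean match, between 0 and 1, of the stored couples with the observed ones."""
        if not stored:
            return 0.0
        total = 0.0
        for landmark, azimuth in stored.items():
            if landmark in observed:
                diff = angle_diff_deg(azimuth, observed[landmark])
                total += max(0.0, 1.0 - diff / self.tolerance)
        return total / len(stored)

    def select_action(self, observed):
        """Return the direction of the best-recognized place, or None if empty."""
        if not self.places:
            return None
        scores = [self.recognition(stored, observed) for stored, _ in self.places]
        best = max(range(len(scores)), key=scores.__getitem__)
        return self.places[best][1]
```

For example, after `learn({"door": 10, "poster": 120}, 180)`, observing the same landmarks at nearby azimuths selects the direction 180. When each learned direction points back toward the center, following the winning place at every location produces the attraction basin described above.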

Note that the initial condition does not determine the resulting behavior. If the robot starts the experiment in the tolerated zone, only a couple of additional place/action associations are required to guide it toward the safe zone.

We show an example of area learning in Fig 5. This experiment lasted 14 minutes. The x axis represents time in seconds and the y axis the activities of neurons. Some neural activities are represented more than once for clarity. If so, they have the same color in (A), (B), (C), (D) or (E).

(A) shows the evolution of synchrony detection during human intervention. When the synchrony detection signal is higher than a threshold (dotted black curve) and a human (or object) presence is detected (based on the laser sensor), partner learning is launched (green curve). There is only one exception, after t = 500: partner learning is not launched because no human (or object) presence is detected.

(B) shows the partner recognition level (blue curve) during the experiment. When it is higher than a recognition threshold, partner tracking is enabled. We can see that when the robot camera is moving, the high-resolution recognition (based on points of interest) is not possible. This highlights the value of low-resolution shape tracking.

(C) shows the robot head orientation (black curve). In some cases, the partner checks whether the robot is really tracking him. This can be observed when the head is turned in the opposite direction at the beginning of some tracking phases. The deviation is calculated from the difference between the orientations of the robot head and body.

(D) shows the place/action learning signals following each tracking phase that induces a sufficient deviation from the robot's initial orientation.

(E) shows the place recognition levels. We can see that only a few place/action couples are required for this task. Fig 6 illustrates the robot trajectory and the spots where the learning occurred. During task performance, one can note that places are not very clearly discriminated. This is due to the environment size (learned places are very close to each other) and to some technical limitations (the limited fields of the laser and of the camera servomotor force the human partner to decompose a 180-degree deviation into two successive place/action couples in order to teach the robot to go back to the center of the navigation area). Despite these shortcomings, the resulting behavior is satisfactory, since the robot stays inside the desired area after only a few human interventions.

5. DISCUSSION AND CONCLUSION

In this paper, we argue for the need to take the dynamics of the interactions into account in the development of the robots that will share our environment. More specifically, we give an example of the use of a previously developed partner selection model [7] [8] in the context of a navigation task [10]. Thereby, we show how synchrony and rhythmic adaptation can serve as a catalyst for sensorimotor learning.

Figure 5: Results of the experiment on interactive learning of place/action navigation. The same signals have the same color in (A), (B), (C), (D) and (E). (A) During human intervention, phases where synchrony detection activity is higher than the threshold trigger partner learning. (B) When the partner is recognized, the tracking phase is launched. (C) When the robot head orientation changes, a deviation is detected. (D) The tracking phase induces a change of orientation which triggers place/action learning. (E) Visual places (VPC) activities.

[Figure 6 plot labels: axes x and y; scale 3.4 m; legend: Time, Place, Action, Place/Action]

Figure 6: Robot trajectory and spots where place/action learning occurred (same colors as Fig 5). The gray gradient illustrates the time course. The robot learned to stay inside the 170 cm radius tolerated zone.

A major advantage of our approach is that it allows non-experts to efficiently and intuitively make the robot learn the behavior they expect from it. Initiating, re-engaging and maintaining the interaction using a simple low-level visuomotor mutual rhythmic adaptation (optical flow) can be achieved by ordinary, untrained people in different ways, such as arm movements or hand/head/object shaking. This facilitates intuitive non-verbal human/robot communication without any additional costly or invasive device that would require training to be used.

Several examples of interactive learning architectures can be found in the literature. For instance, [11] [12] obtain interesting results in terms of learning performance. However, the situatedness of the interaction is questionable since the teacher does not directly communicate with the system. Besides, in [12], the learning is based on reward-like feedback, which is different from our demonstration/imitation approach. In this regard, [13] rely on ecological learning by demonstration based on studies of primate social behavior. Yet, the authors separate the learning mode from the execution mode. As pointed out above, and since our initial work on interactive learning of navigation tasks [10], we think these two phases have to be merged in order to get natural and efficient interactions. Thereby, the teacher directly observes the effect of his/her interventions and the robot can more easily communicate its inner states.

Our model still has a few shortcomings in terms of HRI. No turn taking is established in the interaction and the robot never plays the role of the teacher. Thus, the human partner does not benefit from the interaction, apart from the fact that the robot performs the desired task. These are some of the issues that we aim to address in future studies. In future work, we also plan to add dynamical gait synchronization. The robot should be able to detect the human rhythm from his or her leg motion during locomotion. New learning would then be possible without stopping the robot. For example, we could use this method to learn a round navigation task: the partner could show the trajectory by walking side by side with the robot.

6. ACKNOWLEDGMENT

This work was supported by DGA (Direction Générale de l’Armement), the DIRAC ANR project and the Centre National de la Recherche Scientifique (CNRS).

7. REFERENCES

[1] M A Goodrich and A C Schultz. Human-Robot Interaction: A Survey. Foundations and Trends in Human-Computer Interaction, 1(3):203–275, 2007.

[2] Cynthia Breazeal. Experimental Robotics VII, chapter Regulation and Entrainment in Human-Robot Interaction, pages 61–70. Springer Berlin Heidelberg, Berlin, Heidelberg, 2001.

[3] Jacqueline Nadel, Isabelle Carchon, Claude Kervella, Daniel Marcelli, and Denis Reserbat-Plantey. Expectancies for social contingency in 2-month-olds. Developmental Science, 2(2):164–173, 1999.

[4] Lakshmi J Gogate and Lorraine E Bahrick. Intersensory redundancy facilitates learning of arbitrary relations between vowel sounds and objects in seven-month-old infants. Journal of Experimental Child Psychology, 69(2):133–149, 1998.

[5] Philippe Rochat and Tricia Striano. Perceived self in infancy. Infant Behavior and Development, 23(3–4):513–530, 2000.

[6] Johann Issartel, Ludovic Marin, and Marielle Cadopi. Unintended interpersonal co-ordination: “can we march to the beat of our own drum?”. Neuroscience Letters, 411(3):174–179, 2007.

[7] S K Hasnain, P Gaussier, and G Mostafaoui. “Synchrony” as a way to choose an interacting partner. In Proceedings of the 2nd Joint IEEE International Conference on Development and Learning and on Epigenetic Robotics (ICDL-EpiRob), pages 1–6, 2012.

[8] C Grand, G Mostafaoui, S K Hasnain, and P Gaussier. Synchrony detection as a reinforcement signal for learning: Application to human robot interaction. In International Conference on Timing and Time Perception, 2014.

[9] Nicola Ancona and Tomaso Poggio. Optical flow from 1-d correlation: Application to a simple time-to-crash detector. International Journal of Computer Vision, 14(2):131–146, 1995.

[10] C Giovannangeli and P Gaussier. Interactive Teaching for Vision-Based Mobile Robots: A Sensory-Motor Approach. IEEE Transactions on Systems, Man, and Cybernetics - Part A: Systems and Humans, 40(1), 2010.

[11] J A Fails and D R Olsen. Interactive machine learning. In Proceedings of the 8th International Conference on Intelligent User Interfaces, IUI ’03, pages 39–45. ACM Press, 2003.

[12] W B Knox, P Stone, and C Breazeal. Training a Robot via Human Feedback: A Case Study. In Proceedings of the 5th International Conference on Social Robotics, pages 460–470. Springer, 2013.

[13] J Saunders, C L Nehaniv, and K Dautenhahn. Teaching Robots by Moulding Behavior and Scaffolding the Environment. In Proceedings of the 1st ACM Conference on Human-robot Interaction, pages 118–125, 2006.