JOURNAL OF XXX, VOL. XX, NO. X, JULY 2021 1

Risk Adversarial Learning System for Connected and Autonomous Vehicle Charging

Md. Shirajum Munir, Graduate Student Member, IEEE, Ki Tae Kim, Student Member, IEEE, Kyi Thar, Member, IEEE, Dusit Niyato, Fellow, IEEE, and Choong Seon Hong, Senior Member, IEEE

Abstract—In this paper, the design of a rational decision support system (RDSS) for a connected and autonomous vehicle charging infrastructure (CAV-CI) is studied. In the considered CAV-CI, the distribution system operator (DSO) deploys electric vehicle supply equipment (EVSE) to provide an EV charging facility for human-driven connected vehicles (CVs) and autonomous vehicles (AVs). A charging request by a human-driven EV becomes irrational when it demands more energy and a longer charging period than it actually needs. Therefore, the scheduling policy of each EVSE must adaptively accommodate irrational charging requests to satisfy the charging demand of both CVs and AVs. To tackle this, we formulate an RDSS problem for the DSO, where the objective is to maximize the charging capacity utilization while satisfying the laxity risk of the DSO. Thus, we devise a rational reward maximization problem to adapt to the irrational behavior of CVs in a data-informed manner. We propose a novel risk adversarial multi-agent learning system (RAMALS) for CAV-CI to solve the formulated RDSS problem. In RAMALS, the DSO acts as a centralized risk adversarial agent (RAA) that informs each EVSE of the laxity risk. Subsequently, each EVSE plays the role of a self-learner agent that adaptively schedules its own EV sessions by coping with the advice from the RAA. Experiment results show that the proposed RAMALS affords around 46.6% improvement in charging rate, about 28.6% improvement in the EVSE's active charging time, and at least 33.3% more energy utilization, as compared to a currently deployed ACN EVSE system and other baselines.

Index Terms—Connected and autonomous vehicle, rational decision support system, electric vehicle supply equipment, multi-agent system, intelligent transportation systems.

I. INTRODUCTION

A. Motivation and Challenges

In the era of intelligent transportation systems (ITS), connected and autonomous vehicle (CAV) technologies have gained considerable potential to achieve an indispensable goal of smart citizens [1], [2]. One such intrinsic requirement is to install both human-driven connected vehicles (CVs) and autonomous vehicles (AVs) into the CAV system [3]–[9]. CVs and AVs are being electrified to reduce CO2 emissions, a shift that is transforming the vehicle market from internal combustion engine (ICE) vehicles to battery electric vehicles (BEVs) and plug-in hybrid electric vehicles (PHEVs) [10], [11]. In particular, 55% of the ICE vehicles can be replaced by electric vehicles (EVs) by the end of 2040 [10], while around 350 TWh of electric power is required to fulfill the energy demand of the EVs [12]. To successfully supply such energy demand to CVs and AVs in connected and autonomous vehicle charging systems, electric vehicle supply equipment (EVSE) energy management is one of the most critical design challenges. In particular, it is imperative to equip the CAV charging system with renewable energy sources as well as to ensure rational EV charging scheduling for CVs and AVs, in order to achieve an extremely reliable energy supply with maximum utilization of charging time.

Md. Shirajum Munir, Ki Tae Kim, Kyi Thar, and Choong Seon Hong are with the Department of Computer Science and Engineering, Kyung Hee University, Yongin-si 17104, Republic of Korea (e-mail: [email protected]; [email protected]; [email protected]; [email protected]).

Dusit Niyato is with the School of Computer Science and Engineering, Nanyang Technological University, Nanyang Avenue 639798, Singapore, and also with the Department of Computer Science and Engineering, Kyung Hee University, Yongin-si 17104, Republic of Korea (e-mail: [email protected]).

Corresponding author: Choong Seon Hong (e-mail: [email protected]).

The rational decision-making process focused on choos-ing decisions that provide the maximum amount of benefitor utility to an individual or system [13]–[15]. Thus, theassumption of rational behavior implies that individuals orsystems would take actions that benefit them; actions thatare misleading to reach the goal of maximizing the benefitbecome irrational behavior [13]–[15]. The goal of this work isto maximize the overall electricity utilization by the EVSE fora distribution system operator (DSO). Therefore, the decisionsof electric vehicle (EV) charging scheduling must be rationalso that DSO can maximize the usage of available electricity. Inthis work, rational EV charging scheduling entails allocatingenergy, charging time, energy delivery rate to EVSE, andother factors in order to maximize the overall electricity useof all EVSEs. However, the challenges of such rational EVcharging scheduling are introduced by the CV (i.e., human-driven) that impose irrational energy demand and chargingtime to each EVSE. Since the connected and autonomousvehicle (CAV) system [3], [5], [6], [16]–[18] deals with twotypes of electric vehicles, human-driven connected vehicle(CV), and autonomous vehicle (AV) [see Appendix B]. TheAV can estimate and request an exact amount of energy andcharging duration to EVSE by its own analysis. Therefore, theallocated energy and charging time for an AV can fulfill theexact demand since the gap between the amount of demandedenergy and actual charged energy becomes minimal. Further,the difference between requested charging time and actualcharging time is also minimal. Thus, the decision of chargingschedule to AV become rational since EVSE can maximizethe utilization of energy resources. On the other hand, thehuman-driven connected EVs have no guarantee to requestan exact amount of energy and charging time to the EVSEdue to manual interaction by an individual. The empiricalanalysis using ACN-Data [19] of currently deployed two

arX

iv:2

108.

0146

6v1

[cs

.AI]

2 A

ug 2

021

JOURNAL OF XXX, VOL. XX, NO. X, JULY 2021 2

TABLE I: List of abbreviations

Abbreviation ElaborationAV Autonomous VehicleCV Human-driven Connected VehicleCAV Connected and Autonomous VehicleCAV-CI Connected and Autonomous Vehicle Charging

InfrastructureDDPG Deep Deterministic Policy GradientDSO Distribution System OperatorEV Electric VehicleEVSE Electric Vehicle Supply EquipmentEVSE-LA EVSE Learning AgentLSTM Long Short-Term MemoryMDP Markov Decision Processnon-CAVSystem

Non-connected and Autonomous Vehicle Sys-tem

RAA Risk Adversarial AgentRAMALS Risk Adversarial Multi-agent Learning SystemRDSS Rational Decision Support SystemRNN Recurrent Neural Network

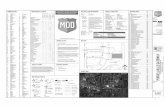

EVSE sites (i.e., JPL and Caltech) have shown the strongevidence of such behavior for CVs (as shown in Figure 1).For instance, the requested energy by a CV can be almostdouble that of the actual charged energy. Additionally, therequested charging time can be more than three times theactual charging time. Thus, each EVSE faces challenges tomaximize the use of energy due to allocate more chargingtime and energy than the actual based on the request ofthe human-driven connected vehicle. Therefore, the chargingbehavior of each CV is considered irrational [13], [14] sincethe charging request by the CVs misleads them to reach thegoal of maximizing the benefit of the DSO. Meanwhile, theEVSE executes the irrational energy supply and allocates thecharging time to CV. Thus, a rational EV charging systemrefers to maximizing the charging capacity utilization of theDSO by taking into account the irrational charging behaviorof the CVs.

To ensure rational EV charging for connected and autonomous vehicle systems, a rational decision support system (RDSS) can be incorporated into the connected and autonomous vehicle charging infrastructure (CAV-CI). In particular, the RDSS relies not only on the energy demand behavior of each EV (i.e., CV and AV), but also on the energy supply response of each EVSE. Thus, the irrational behaviors of energy demand and supply can be characterized by discretizing a tail-risk for the laxity of the CAV charging system. In particular, a strong correlation can be established among the energy demand-supply behaviors of the CAV charging system, while the decision of each EV charging session can be quantified from the tail-risk distribution of a Conditional Value at Risk (CVaR) [20], [21] of the laxity [22]. Thus, the laxity risk can guide each EVSE toward efficient EV charging scheduling for the CAV-CI. Therefore, it is imperative to develop an RDSS such that the rationality of EV charging scheduling is taken into account in CAV-CI. To do this, a data-informed mechanism is a suitable approach, as it not only quantifies the behavior of energy data but also assists in making efficient EV charging session decisions for the EVSE.

B. Contributions

The main goal of this paper is to develop a novel risk adversarial multi-agent learning system (RAMALS) that analyzes the risk of irrational energy demand and supply of CAV-CI for efficient energy management. The proposed multi-agent system captures the behavioral features from the energy demand-supply parameters of CAV-CI, as these behavioral features have direct effects on EVs (i.e., CVs and AVs) and indirect effects on EVSEs. Such behavioral effects lead each EVSE to determine rational EV charging session scheduling by coping with the risk of irrational energy demand-supply in CAV-CI. To capture this behavior, a data-informed approach is suitable, as it not only captures the characteristics of both CV and AV energy charging data but is also informed by the contextual (i.e., rational/irrational) behavior of those data. In particular, we make the following key contributions:

• We formulate a rational decision support system for EV charging session scheduling in CAV-CI that can capture the irrational tail-risk between CVs, AVs, and EVSEs. In particular, we analyze the laxity risk by capturing the CVaR-tail for each EV in CAV-CI. We then discretize a rational reward maximization decision problem for CAV-CI by coping with the laxity risk. Thus, we solve the formulated problem in a data-informed manner, where the EV charging session schedule decision for each EVSE is obtained by using both the intuition and the behavior of the data.

• Decomposing this RDSS, we derive a novel risk adversarial multi-agent learning system for CAV-CI that is composed of two types of agents: a centralized risk adversarial agent (RAA) that can analyze the laxity risk for CAV-CI, and a local agent that acts as a self-learning agent for each EVSE. In particular, each EVSE learning agent (EVSE-LA) can learn and discretize its own EV session scheduling policy by coping with the irrational risk provided by the risk adversarial agent. To do this, we model each EVSE-LA as a Markov decision process (MDP), in which the decisions (e.g., actual deliverable energy, charging rate, and session time) of each EV session schedule are affected by the laxity risk from the CVaR-tail distribution.

• To solve RAMALS, we model the interaction between each EVSE-LA and the RAA of CAV-CI by employing a shared recurrent neural architecture. In particular, the RAA acts as a centralized training node, while each EVSE-LA works as an individual learner that determines its own EV session scheduling policy. Accordingly, both the RAA and each EVSE-LA consist of an identically configured recurrent neural network (RNN) to capture the time-dependent features of the uncertain behavior of CAV-CI. Each EVSE-LA receives charging session scheduling requests from both CVs and AVs and estimates its imitated experiences by coping with the risk adversarial information of the RAA. Subsequently, the RAA receives only the posterior experiences from all of the EVSE-LAs and determines its updated risk adversarial policy for each EVSE-LA. In particular, exploration in RAMALS is carried out at each EVSE-LA, while exploitation is done by the RAA to avoid the irrational laxity risk of each EVSE's EV session scheduling policy.

• Through experiments using a real dataset (i.e., ACN EVSE sites [19]), we show that the proposed RAMALS outperforms other baselines such as the currently deployed ACN EVSE system, an A3C-based framework, and an A2C-based model. In particular, the proposed RAMALS achieves significant performance gains for the DSO in CAV-CI, increasing the charging rate by at least 46.6%, the active charging time by about 28.6%, the energy utilization by around 33.3%, and EV charging by about 34%, compared to the baselines.

The rest of this paper is organized as follows. Related works are discussed in Section II. The system model and problem formulation of the rational decision support system for the DSO in CAV-CI are presented in Section III. In Section IV, the proposed risk adversarial multi-agent learning framework is described. Experimental results and their analysis are discussed in Section V. Finally, concluding remarks are given in Section VI. A list of abbreviations is given in Table I.

II. RELATED WORK

The challenges of EVSE energy management have gained considerable attention from academia and industry for utilizing the maximum vehicle charging capacity. However, the integration of both CV and AV electric vehicle charging does not seem to be taken into account in CAV systems [3]–[6], [8], [9], [23]. Instead, the problem of EVSE energy management has been widely studied for non-CAV systems in [24]–[38].

Recently, some of the EV charging energy management challenges for non-CAV systems have been studied using game-theoretical approaches in [24]–[27]. In [24], the authors proposed a two-stage non-cooperative game among EV aggregators, in which the aggregators decide EV charging energy profiles. In particular, the objective of this game model is to minimize the EV charging energy cost for the EVs. Thus, the authors considered ideal and non-ideal decisions for the aggregators to analyze the impact of EV charging decisions. In [25], the authors proposed an incentive-based EV charging scheduling mechanism by considering the uncertain energy load of each charging station (CS) and the EV charging time constraint. In particular, the authors designed a distributed online EV charging scheduling algorithm by combining 𝜖-Nash equilibrium and Lyapunov optimization to maximize the payoff of each CS. Furthermore, a game-based decentralized EV charging schedule was proposed in [26]. Particularly, the authors in [26] developed a Nash Folk regret-based strategy mechanism that minimizes the EV user payment and maximizes the power grid efficiency. The work in [27] developed a competitive pricing mechanism for electric vehicle charging stations (EVCSs) by considering both large-sized EVCSs (L-EVCSs) and small-sized EVCSs (S-EVCSs) with heterogeneous capacities and charging operation behavior of CSs. A Stackelberg game-based solution approach was proposed to analyze the price competition among the CSs in the form of a multiplicatively-weighted Voronoi diagram. However, the works in [24]–[27] neither investigate the problem of long charging times and the minimization of idle charging time for the CS (i.e., EVSE), nor account for the irrational energy demand and charging time among the EVs (i.e., CVs and AVs). Dealing with irrational energy demand and charging time among EVs as well as EVSEs is challenging, since the intrinsic behaviors of each EV and EVSE introduce a risk of uncertainty. To overcome this unique EV charging scheduling challenge, we propose a risk adversarial multi-agent learning framework that can adapt to new uncertain risk toward a rational decision support system for connected and autonomous vehicle charging.

Fig. 1: Example of irrational EV charging time and energy requests (the x-axis represents the EV ID for each charging session) at EVSE 1-1-194-821 based on ACN-Data [19] of the JPL site. (a) Irrational session time requests by each EV. (b) Irrational energy requests by each EV.


Machine learning and multi-agent learning have thus been the focus of many recent works [28]–[33]. For example, the authors in [28] proposed a fuzzy multi-criteria decision making (FMCDM) model to solve the problem of charging station selection for EVs. To model the FMCDM, the CS selection behavior of EV drivers and the reaction of the CS are considered. The work in [29] studied a joint mechanism for plug-in electric vehicle (PEV) charging scheduling and power control with the goal of minimizing the PEV charging cost and the energy generation cost for both residence and PEV energy demands. Further, the authors in [30] introduced a hybrid kernel density estimator (HKDE) scheme to optimize the EV charging schedule. In particular, HKDE uses both Gaussian- and Diffusion-based KDE to predict EV stay duration and energy demand from historical data. Thus, the objective is to reduce energy cost by utilizing the characteristics of EV user behavior and energy charging load in the CS. The authors in [31] studied a model-free control mechanism to solve the problem of jointly coordinating EV charging scheduling. Particularly, a Markov decision process (MDP) was considered to aggregate the energy load decisions of the CS and individual EVs. A centralized solution was proposed using a batch reinforcement learning (RL) algorithm in a fitted Q-iteration manner. A multi-agent reinforcement learning-based dynamic pricing scheme was proposed in [32] to maximize the long-term profits of charging station operators. Particularly, the work in [32] considered a Markov game to analyze a competitive market strategy for ensuring a dynamic pricing policy. The authors in [33] proposed a demand response scheme for PEVs that can reduce the long-term charging cost of each PEV. In particular, a decision problem was designed as an MDP with unknown transition probabilities to estimate an optimum cost-reducing charging policy. Then a Bayesian neural network was designed to predict the energy prices for the PEV. However, all of these existing studies [28]–[33] assume that the EV energy demand and charging time are available from the EV users. Since these approaches mostly focus on pricing-based scheduling and EVSE selection for EVs, the maximum charging capacity utilization for the utility provider and the reduction of charging time are not taken into account. Indeed, it is necessary to maximize the EV charging fulfillment rate of each EVSE to incorporate a fair charging policy in the CAV infrastructure.

Nowadays, individual infrastructure-based EV charging energy management has gained a lot of attention due to the necessity of EV charging in EVSE-supported areas [34]–[38]. In [34], the authors proposed an anti-aging vehicle-to-grid (V2G) scheduling approach that can quantify battery degradation for EVs using a rain-flow cycle counting (RCC) algorithm. Particularly, the authors modeled a multi-objective optimization problem to anticipate a collaborative EV charging scheduling decision between EVs and the grid. The work in [35] studied a dynamic pricing model to solve the problem of overlapping energy load between residential loads and the CS. To do this, the authors formulated a constrained optimization problem and solved it by proposing a rule-based price model. A day-ahead EV charging scheduling scheme was proposed in [36] for integrated photovoltaic systems and EV charging systems in office buildings. In particular, a two-stage energy management mechanism was considered, in which day-ahead scheduling and real-time operation were solved by stochastic programming and sample average approximation-based optimization, respectively. Further, in [37], the authors studied a dual-mode CS operation process by analyzing a multi-server queuing theory. In particular, a customer attrition minimization problem was formulated for the CS and solved to achieve an optimal EV charging pricing scheme that minimizes the EV service dropping rate. The work in [38] proposed a smart charging scheme for EV charging stations. The authors in [38] considered a multi-objective optimization that can take into account the dynamic energy pricing among multi-option charging stations. A meta-heuristic mechanism was proposed to solve this problem. However, the works in [34]–[38] do not consider the case of CAV infrastructure, in which both human-driven CVs and AVs request charging sessions from the EVSE. In particular, Figure 1 illustrates an example of irrational EV charging time and energy requests at EVSE 1-1-194-821 based on ACN-Data [19] of the JPL site. This figure provides empirical evidence of the irrational [13]–[15] charging behavior of CVs. In other words, the EV charging session request becomes irrational among the CVs and AVs due to the considerable gap between the requested and charged energy of the human-driven EV. Moreover, EV charging requirements (i.e., demand-supply) also vary among the EVSEs due to the different energy receiving and supply capacities of each EV and EVSE. Therefore, it is essential to develop a system that not only overcomes the risk of irrational EV charging session selection but also increases the utilization rate of each EVSE.

TABLE II: Summary of notations

| Notation | Description |
|---|---|
| $\mathcal{N}$ | Set of electric vehicle supply equipment |
| $\mathcal{V}_n$ | Set of EV charging sessions in EVSE $n \in \mathcal{N}$ |
| $\epsilon^{\mathrm{cap}}_{n}(t)$ | Energy supply capacity at each EVSE $n \in \mathcal{N}$ |
| $\epsilon^{\mathrm{req}}_{n,v}$ | Energy demand requested by EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$ |
| $\epsilon^{\mathrm{act}}_{n,v}$ | Actual energy delivered by EVSE $n \in \mathcal{N}$ to EV $v \in \mathcal{V}_n$ |
| $\tau^{\mathrm{req}}_{n,v}$ | Session duration requested by EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$ |
| $\tau^{\mathrm{act}}_{n,v}$ | Actual charging time by EVSE $n \in \mathcal{N}$ for EV $v \in \mathcal{V}_n$ |
| $\tau^{\mathrm{plug}}_{n,v}$ | Total plugged-in time by EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$ |
| $\tau^{\mathrm{strt}}_{n,v}$ | Charging session starting time at EVSE $n \in \mathcal{N}$ of EV $v \in \mathcal{V}_n$ |
| $\tau^{\mathrm{end}}_{n,v}$ | Charging end time at EVSE $n \in \mathcal{N}$ to EV $v \in \mathcal{V}_n$ |
| $\tau^{\mathrm{uplg}}_{n,v}$ | Charging unplugged time by EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$ |
| $\lambda^{\mathrm{req}}_{n}(t)$ | Average energy demand rate at EVSE $n \in \mathcal{N}$ |
| $\lambda^{\mathrm{act}}_{n}(t)$ | Energy delivery rate at EVSE $n \in \mathcal{N}$ |
| $\epsilon^{\mathrm{rec}}_{v}(t)$ | Energy receiving capability by EV $v \in \mathcal{V}_n$ |
| $\zeta_{n,v}(t)$ | Ratio between actual and requested energy rate of an EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$ |
| $\rho_{n,v}$ | Ratio between actual charging time and total plugged-in time of EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$ |
| $\Upsilon_{n,v}$ | Ratio between delivered and requested energy of EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$ |

III. SYSTEM MODEL AND PROBLEM FORMULATION

In this section, we first present a detailed system model description for the proposed risk adversarial multi-agent learning system of CAV-CI. We then formulate a maximum capacity utilization decision problem for the DSO of CAV-CI, which we call the Max DSO capacity problem. Table II summarizes the notations.

Fig. 2: Risk adversarial multi-agent learning system for autonomous and connected electric vehicle charging infrastructure. (Labels in the figure: EVSE Agent 1 to EVSE Agent N, each with EV User Energy Request, EV User Choice, and Feedback to User; Imitated Knowledge and Adversarial Risk links to the DSO Controller, which hosts the Risk Adversarial Agent. Legend: Energy Flow, Electric Vehicle Supply Equipment (EVSE), Autonomous Vehicle (AV), Human-Driven Connected Vehicle (CV), and X2V, V2X, and X2X Communications.)

A. CAV-CI System Model

Consider a connected and autonomous vehicle (CAV) system that is attached to a distribution system operator (DSO) controller, as shown in Figure 2. The CAV system provides an electricity charging facility for electric vehicles (EVs), which include both human-driven and autonomous vehicles. We consider a set $\mathcal{N} = \{1, 2, \dots, N\}$ of $N$ EVSEs that encompass a DSO coverage area. The DSO controller is connected with energy sources in order to supply energy to each EVSE $n \in \mathcal{N}$ with a capacity $\epsilon^{\mathrm{cap}}_{n}(t)$. We consider a time slot $t$ having a fixed duration (i.e., 1 hour) and a finite time horizon $\mathcal{T} = \{1, 2, \dots, T\}$ of $T$ time slots [27], [39]. A set $\mathcal{V}_n = \{1, 2, \dots, V_n\}$ of $V_n$ EV charging session requests arrives from EVs at EVSE $n \in \mathcal{N}$ through V2X communication [40] with an average charging rate $\lambda^{\mathrm{req}}_{n}(t)$ (kWh) at time slot $t$. Each EV requests a certain amount of energy $\epsilon^{\mathrm{req}}_{n,v}$ and an available charging time $\tau^{\mathrm{req}}_{n,v}$ from an EVSE $n \in \mathcal{N}$. In particular, we consider the first come first serve (FCFS) queuing approach for prioritizing EV requests. The properties of a charging session request $v \in \mathcal{V}_n$ are denoted by a tuple $(\epsilon^{\mathrm{req}}_{n,v}, \tau^{\mathrm{req}}_{n,v})$. Thus, the average energy demand rate [kWh] of EVSE $n \in \mathcal{N}$ is

$$\lambda^{\mathrm{req}}_{n}(t) = \frac{\sum_{v \in \mathcal{V}_n} \epsilon^{\mathrm{req}}_{n,v}}{\sum_{v \in \mathcal{V}_n} \tau^{\mathrm{req}}_{n,v}} \ast 60. \tag{1}$$

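The duration of EV charging at EVSE $n \in \mathcal{N}$ depends on its capacity $\epsilon^{\mathrm{cap}}_{n}(t)$ along with the receiving capability $\epsilon^{\mathrm{rec}}_{v}(t)$ of each EV [19]. We consider a 50 kW (125 A) DC fast charger that uses either the CHAdeMO or CCS charging standards [41]. Meanwhile, the energy delivery rate $\lambda^{\mathrm{act}}_{n}(t)$ of EVSE $n \in \mathcal{N}$ relies on the actual amount of energy charged during the EV charging session $v \in \mathcal{V}_n$. Consider a tuple $(\tau^{\mathrm{strt}}_{n,v}, \tau^{\mathrm{end}}_{n,v}, \tau^{\mathrm{uplg}}_{n,v}, \epsilon^{\mathrm{act}}_{n,v})$, whose elements $\tau^{\mathrm{strt}}_{n,v}$, $\tau^{\mathrm{end}}_{n,v}$, $\tau^{\mathrm{uplg}}_{n,v}$, and $\epsilon^{\mathrm{act}}_{n,v}$ denote the session start time, session end time, unplugged time, and amount of delivered energy, respectively, of an EV charging session $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$. Therefore, we can determine the actual charging rate $\lambda^{\mathrm{act}}_{n}(t)$ (kWh) of EVSE $n$ at time slot $t$ as follows:

$$\lambda^{\mathrm{act}}_{n}(t) = \frac{\sum_{v \in \mathcal{V}_n} \epsilon^{\mathrm{act}}_{n,v}}{\sum_{v \in \mathcal{V}_n} \tau^{\mathrm{act}}_{n,v}} \ast 60, \tag{2}$$

where $\tau^{\mathrm{act}}_{n,v} = \tau^{\mathrm{end}}_{n,v} - \tau^{\mathrm{strt}}_{n,v}$ determines the actual charging duration of a charging session $v \in \mathcal{V}_n$ and $\lambda^{\mathrm{act}}_{n}(t) \leq \epsilon^{\mathrm{cap}}_{n}(t)$.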
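The two rates in (1) and (2) can be illustrated with a minimal Python sketch. The `Session` class and its field names are hypothetical, durations are assumed to be expressed in minutes, and the factor 60 is read here as multiplying the energy-to-time ratio; none of these choices is confirmed by the text.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One EV charging session at an EVSE (hypothetical field names)."""
    energy_req: float  # requested energy
    tau_req: float     # requested session duration (assumed minutes)
    energy_act: float  # delivered energy
    tau_start: float   # session start time (assumed minutes)
    tau_end: float     # charging end time (assumed minutes)


def demand_rate(sessions):
    """Eq. (1): total requested energy over total requested time, times 60."""
    total_energy = sum(s.energy_req for s in sessions)
    total_time = sum(s.tau_req for s in sessions)
    return total_energy / total_time * 60


def delivery_rate(sessions):
    """Eq. (2): total delivered energy over total actual charging time, times 60."""
    total_energy = sum(s.energy_act for s in sessions)
    total_time = sum(s.tau_end - s.tau_start for s in sessions)
    return total_energy / total_time * 60


# Illustrative numbers only, not taken from ACN-Data.
sessions = [
    Session(energy_req=20, tau_req=240, energy_act=10, tau_start=0, tau_end=120),
    Session(energy_req=10, tau_req=120, energy_act=8, tau_start=0, tau_end=60),
]
print(demand_rate(sessions), delivery_rate(sessions))
```

In this toy input the sessions request more time than they use, so the delivery rate comes out higher than the demand rate.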

In the considered CAV charging system, we can employ an efficient scheduling of EV charging sessions $\forall v \in \mathcal{V}_n$ at each EVSE $n \in \mathcal{N}$ such that rationality between human-driven and autonomous EV charging is preserved. In particular, a tail-risk for that schedule is taken into account as a form of rationality for both human-driven and autonomous EV charging. Thus, the laxity of an actual EV charging session depends on the request properties $(\epsilon^{\mathrm{req}}_{n,v}, \tau^{\mathrm{req}}_{n,v})$ of each EV session and the operational properties $(\tau^{\mathrm{strt}}_{n,v}, \tau^{\mathrm{end}}_{n,v}, \tau^{\mathrm{uplg}}_{n,v}, \epsilon^{\mathrm{act}}_{n,v})$ of each EVSE $n \in \mathcal{N}$. Therefore, we first discuss a laxity risk adversarial model of the DSO controller. We will then describe a rational reward model of each EVSE. Table II illustrates a summary of notations.

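1) Laxity Risk Adversarial Model of DSO Controller: We denote the laxity of the DSO controller as $L(\boldsymbol{x}, \boldsymbol{y})$, which is associated with a $p = 3$ dimensional decision vector $\boldsymbol{x} := (\epsilon^{\mathrm{act}}_{n,v}, \lambda^{\mathrm{act}}_{n}(t), \tau^{\mathrm{act}}_{n,v}) \in \mathbb{R}^{p}$ at each EVSE $n \in \mathcal{N}$. We consider a set $\boldsymbol{X}$ of decision vectors that contains the available decisions $\forall \boldsymbol{x} \in \boldsymbol{X}$ at time slot $t$. The decision $\boldsymbol{x} \in \boldsymbol{X}$ of an EV charging session $v \in \mathcal{V}_n$ is affected by a $q = 2$ dimensional vector $\boldsymbol{y} := (\epsilon^{\mathrm{req}}_{n,v}, \tau^{\mathrm{req}}_{n,v}) \in \mathbb{R}^{q}$ that is requested by each EV $v \in \mathcal{V}_n$. Further, a set $\boldsymbol{Y}$ of random vectors (i.e., $\forall \boldsymbol{y} \in \boldsymbol{Y}$) holds the properties of EV session requests at each EVSE $n \in \mathcal{N}$. Therefore, in the context of EV charging, the laxity [22] of the DSO controller at time slot $t$ is defined as follows: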

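Definition 1. Laxity of the DSO controller: Consider that each EV $v \in \mathcal{V}_n$ requests an energy demand $\epsilon^{\mathrm{req}}_{n,v}$ with charging rate $\lambda^{\mathrm{req}}_{n}(t)$ from an EVSE $n \in \mathcal{N}$, while the actual energy $\epsilon^{\mathrm{act}}_{n,v}$ is delivered to EV $v \in \mathcal{V}_n$ by EVSE $n \in \mathcal{N}$ with a delivery rate $\lambda^{\mathrm{act}}_{n}(t)$. Then the laxity of the DSO controller (i.e., $\forall v \in \mathcal{V}_n$ and $\forall n \in \mathcal{N}$) at time slot $t$ is defined as follows:

$$L(\boldsymbol{x}, \boldsymbol{y}) = \min_{\boldsymbol{x} \in \boldsymbol{X}} \mathbb{E}_{\boldsymbol{x} \sim \boldsymbol{X}} \left[ \sum_{n \in \mathcal{N}} \sum_{v \in \mathcal{V}_n} \left| \left( \frac{\epsilon^{\mathrm{req}}_{n,v}}{\lambda^{\mathrm{req}}_{n}(t)} - \frac{\epsilon^{\mathrm{act}}_{n,v}}{\lambda^{\mathrm{act}}_{n}(t)} \right) \right| \right], \tag{3}$$

where $\lambda^{\mathrm{req}}_{n}(t)$ and $\lambda^{\mathrm{act}}_{n}(t)$ are determined by (1) and (2), respectively.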
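As a rough illustration of the term inside the expectation in (3), the following Python sketch evaluates the summed absolute gap for a single EVSE and a fixed set of sessions. It deliberately omits the minimization and the expectation over decisions, and the function name and inputs are hypothetical.

```python
def laxity_term(energy_req, energy_act, rate_req, rate_act):
    """Sum over sessions of |e_req / lambda_req - e_act / lambda_act| for one EVSE.

    This is only the inner sum of Eq. (3); the min and the expectation
    over decision vectors are not modeled here.
    """
    return sum(
        abs(e_req / rate_req - e_act / rate_act)
        for e_req, e_act in zip(energy_req, energy_act)
    )


# Illustrative numbers only: two sessions at one EVSE.
gap = laxity_term(energy_req=[20, 10], energy_act=[10, 8], rate_req=5.0, rate_act=6.0)
print(gap)
```

Each summand compares the time implied by the request with the time implied by the actual delivery, so a larger value indicates a wider mismatch between what was asked for and what was served.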

We consider a cumulative distribution function $\psi(\boldsymbol{x}, \xi) := P(L(\boldsymbol{x}, \boldsymbol{y}) \leq \xi)$ of the laxity (3), where $\psi(\boldsymbol{x}, \xi)$ is the probability that the laxity does not exceed an upper bound $\xi$ [20], [21], [42]. Therefore, a risk of laxity (3) is associated with the random variable $\boldsymbol{x} \in \boldsymbol{X}$. As a result, the risk of laxity is related to a decision $\boldsymbol{x}$ that belongs to the distribution of $\psi(\boldsymbol{x}, \xi)$ for a significance level $\alpha \in (0, 1)$. Thus, the Value-at-Risk (VaR) $R^{\mathrm{VaR}}_{\alpha}(L(\boldsymbol{x}, \boldsymbol{y}))$ of laxity (3) is determined as follows [20], [21], [43]:

$$R^{\mathrm{VaR}}_{\alpha}(L(\boldsymbol{x}, \boldsymbol{y})) = \min_{\xi \in \mathbb{R}} \{\xi \in \mathbb{R} : \psi(\boldsymbol{x}, \xi) \geq \alpha\}. \tag{4}$$
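For intuition, (4) can be evaluated on a finite sample of laxity values by replacing $\psi$ with the empirical distribution. The sketch below is an assumption-laden illustration, not the estimator used in the paper; `value_at_risk` is a hypothetical helper name.

```python
def value_at_risk(samples, alpha):
    """Smallest sample value xi whose empirical CDF is at least alpha."""
    ordered = sorted(samples)
    n = len(ordered)
    for i, xi in enumerate(ordered, start=1):
        # i / n is the fraction of samples that are <= xi (up to ties).
        if i / n >= alpha:
            return xi
    return ordered[-1]


# Illustrative laxity samples only.
laxities = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
print(value_at_risk(laxities, alpha=0.9))
```

With ties, the loop may stop at a later copy of the same value, which does not change the returned threshold.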

Our goal is to capture the extreme tail risk of the laxity for EV charging session scheduling, that is, the risk beyond the VaR cutoff point. In particular, we characterize the volatile risk of EV requests $\boldsymbol{y} \in \boldsymbol{Y}$, which is random. Thus, we define a CVaR $R^{\mathrm{CVaR}}_{\alpha}(L(\boldsymbol{x}, \boldsymbol{y}))$ of laxity $L(\boldsymbol{x}, \boldsymbol{y})$ (i.e., (3)) as follows:

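Definition 2. CVaR of laxity for the DSO controller: Consider the CVaR of laxity $R^{\mathrm{CVaR}}_{\alpha}(L(\boldsymbol{x}, \boldsymbol{y}))$ as an expectation $\mathbb{E}_{\boldsymbol{y} \sim \boldsymbol{Y}}\left[L(\boldsymbol{x}, \boldsymbol{y}) \mid L(\boldsymbol{x}, \boldsymbol{y}) \geq R^{\mathrm{VaR}}_{\alpha}(L(\boldsymbol{x}, \boldsymbol{y}))\right]$, where the tail-risk of the laxity for the DSO controller exceeds a cutoff point from the distribution of the VaR $R^{\mathrm{VaR}}_{\alpha}(L(\boldsymbol{x}, \boldsymbol{y}))$ (i.e., (4)). Thus, the CVaR $R^{\mathrm{CVaR}}_{\alpha}(L(\boldsymbol{x}, \boldsymbol{y}))$ of EV scheduling laxity for the DSO controller is defined as a function of $\xi$, and the CVaR of laxity for the DSO controller becomes $\phi_{\alpha}(\boldsymbol{x}, \xi) := \xi + \frac{1}{1-\alpha} \mathbb{E}_{\xi \sim \psi(\boldsymbol{x}, \xi) \geq \alpha}\left[(L(\boldsymbol{x}, \boldsymbol{y}) - \xi)^{+}\right]$, in which $\boldsymbol{x}$ represents a decision vector $\boldsymbol{x} := (\epsilon^{\mathrm{act}}_{n,v}, \lambda^{\mathrm{act}}_{n}(t), \tau^{\mathrm{act}}_{n,v}) \in \mathbb{R}^{p}$ at each EVSE $n \in \mathcal{N}$.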

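Therefore, we can determine the CVaR of laxity for the DSO controller as follows:

$$R^{\mathrm{CVaR}}_{\alpha}(L(\boldsymbol{x}, \boldsymbol{y})) = \min_{\boldsymbol{x} \in \boldsymbol{X},\, \xi \in \mathbb{R}} \phi_{\alpha}(\boldsymbol{x}, \xi), \tag{5}$$

where the CVaR of the laxity is associated with $\boldsymbol{x} \in \boldsymbol{X}$. Therefore, the risk adversarial model of laxity is determined as follows:

$$R^{\mathrm{CVaR}}_{\alpha}(L(\boldsymbol{x}, \boldsymbol{y})) = \min_{\boldsymbol{x} \in \boldsymbol{X},\, \xi \in \mathbb{R}} \left( \xi + \frac{1}{1-\alpha} \mathbb{E}_{\xi \sim \psi(\boldsymbol{x}, \xi) \geq \alpha}\left[(L(\boldsymbol{x}, \boldsymbol{y}) - \xi)^{+}\right] \right). \tag{6}$$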

In (6), we determine a CVaR-based risk adversarial model for the DSO controller, which can capture a tail-risk of the laxity (3) for the considered CAV system. Therefore, it is imperative to model a rational reward for each EVSE $n \in \mathcal{N}$ such that the reward can cope with the laxity tail-risk in future EV scheduling decisions. A rational reward model of each EVSE is defined in the following Section III-A2.
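The objective in (6) can be illustrated on a finite sample of laxity values for a fixed decision. The Python sketch below minimizes only over the threshold (the variable `xi`), using the empirical mean in place of the expectation; the outer minimization over decision vectors is not modeled, and both function names are hypothetical.

```python
def cvar_objective(samples, alpha, xi):
    """phi_alpha(xi) = xi + mean((L - xi)^+) / (1 - alpha) on empirical samples."""
    mean_excess = sum(max(value - xi, 0.0) for value in samples) / len(samples)
    return xi + mean_excess / (1.0 - alpha)


def conditional_value_at_risk(samples, alpha):
    """Minimize phi_alpha over xi for a fixed decision.

    On an empirical sample phi_alpha is convex and piecewise linear in xi,
    with breakpoints at the sample values, so scanning the samples suffices.
    """
    return min(cvar_objective(samples, alpha, xi) for xi in samples)


# Illustrative laxity samples only.
laxities = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
print(conditional_value_at_risk(laxities, alpha=0.8))
```

For these ten equally weighted samples and a level of 0.8, the result is close to the average of the two largest values, which matches the reading of CVaR as the expected laxity in the worst tail.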

2) Rational Reward Model of Each EVSE: A rational EV charging session scheduling decision relates to the ratio between the amount of energy delivered by an EVSE and the energy requested by an EV, as well as the ratio of the actual charging time to the total plugged-in time [30], [35], [36], [44]. In particular, the ratio between the actual energy delivery rate $\lambda^{\mathrm{act}}_{n}(t)$ (in (2)) and the requested demand rate $\lambda^{\mathrm{req}}_{n}(t)$ (in (1)) at EVSE $n \in \mathcal{N}$ can characterize a rational EV charging session scheduling decision. Thus, we define the ratio between actual and requested energy rate for each EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$ as follows:

$$\zeta_{n,v}(t) = \frac{\lambda^{\mathrm{act}}_{n}(t)}{\lambda^{\mathrm{req}}_{n}(t)}. \tag{7}$$

In (7), we can discretize the behavior of the energy delivery rate of EVSE $n \in \mathcal{N}$ during the charging period. However, (7) alone cannot fully capture a rational decision, since the EVSE will not be free for the next scheduled EV charging until the current EV is physically unplugged. Therefore, to deal with a rational decision, the total plugged-in time $\tau^{\mathrm{plug}}_{n,v} = \tau^{\mathrm{uplg}}_{n,v} - \tau^{\mathrm{strt}}_{n,v}$ of EV $v \in \mathcal{V}_n$ is taken into account, where $\tau^{\mathrm{uplg}}_{n,v}$ and $\tau^{\mathrm{strt}}_{n,v}$ represent the unplugged and starting (i.e., plugged) time, respectively. The ratio between the actual charging time and the total plugged-in time of EV $v \in \mathcal{V}_n$ is defined as follows:

$$\rho_{n,v} = \frac{\tau^{\mathrm{act}}_{n,v}}{\tau^{\mathrm{plug}}_{n,v}}, \tag{8}$$

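where $\tau^{\mathrm{act}}_{n,v}$ represents the actual charging duration. In EVSE $n$, we represent the ratio $\Upsilon_{n,v}$ of delivered energy $\epsilon^{\mathrm{act}}_{n,v}$ to requested energy $\epsilon^{\mathrm{req}}_{n,v}$ for EV $v \in \mathcal{V}_n$ as follows:

$$\Upsilon_{n,v} = \frac{\epsilon^{\mathrm{act}}_{n,v}}{\epsilon^{\mathrm{req}}_{n,v}}. \tag{9}$$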
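The three ratios in (7), (8), and (9) are simple quotients, as the following Python sketch shows. The function names are hypothetical and the numbers are illustrative only.

```python
def rate_ratio(rate_act, rate_req):
    """Eq. (7): actual delivery rate over requested demand rate."""
    return rate_act / rate_req


def time_ratio(tau_start, tau_end, tau_unplug):
    """Eq. (8): actual charging time over total plugged-in time."""
    tau_act = tau_end - tau_start      # actual charging duration
    tau_plug = tau_unplug - tau_start  # total plugged-in duration
    return tau_act / tau_plug


def energy_ratio(energy_act, energy_req):
    """Eq. (9): delivered energy over requested energy."""
    return energy_act / energy_req


# A session that charges for 120 of its 300 plugged-in minutes
# and receives half of the requested energy.
print(rate_ratio(6.0, 5.0), time_ratio(0, 120, 300), energy_ratio(10, 20))
```

A time ratio well below one means the EV occupies the EVSE long after charging has ended, which is the idle time the scheduling policy aims to reduce.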

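We consider a set $\mathcal{A} = \{\mathcal{A}_1, \mathcal{A}_2, \dots, \mathcal{A}_N\}$ of $N$ sets that can store EV scheduling indicators for all EVSEs over the DSO coverage area. We define a scheduling indicator as a vector $\boldsymbol{a}_{n,v} := (P(\Upsilon_{n,v}), 1 - P(\Upsilon_{n,v})) \in \mathbb{R}^{2}$, $\boldsymbol{a}_{n,v} \in \mathcal{A}_n \in \mathbb{R}^{|\mathcal{V}_n| \times 2}$ for EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$, where $P(\Upsilon_{n,v})$ represents the probability of a rational EV scheduling decision, which belongs to a continuous space. Thus, we consider a binary decision variable $a_{n,v}$ for the charging scheduling of each EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$ as

$$a_{n,v} = \begin{cases} 1, & \text{if } \arg\max(\boldsymbol{a}_{n,v}) = 0, \\ 0, & \text{otherwise}, \end{cases} \tag{10}$$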

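where $a_{n,v} = 1$ if EVSE $n \in \mathcal{N}$ is capable of scheduling EV $v \in \mathcal{V}_n$ for charging, and 0 otherwise (i.e., the EV is placed in the queue). We define a vector $\boldsymbol{\eta}_{n,v} := (\Upsilon_{n,v}, 1 - \Upsilon_{n,v}) \in \mathbb{R}^{|\boldsymbol{a}_{n,v}|}$ that holds the demand-supply ratio of EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$, where $\eta_{n,v}(a_{n,v}) = \arg_{a_{n,v} \in \boldsymbol{a}_{n,v}}(\boldsymbol{\eta}_{n,v})$ represents an index of $\boldsymbol{\eta}_{n,v}$ (i.e., 0 or 1). Thus, the value of $\eta_{n,v}$ relies on the decision $a_{n,v} \in \boldsymbol{a}_{n,v}$, and we can determine the demand-supply ratios of the current EV $v \in \mathcal{V}_n$ and the next EV $v' \in \mathcal{V}_n$ by $\eta_{n,v}(a_{n,v})$ and $\eta_{n,v'}(a_{n,v})$, respectively. We then consider a set $\mathcal{S} = \{\mathcal{S}_1, \mathcal{S}_2, \ldots, \mathcal{S}_N\}$ of $N$ EVSE state sets, where each EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$ has a state vector $\boldsymbol{s}_{n,v} := (\epsilon_{n,v}^{\text{req}}, \tau_{n,v}^{\text{req}}, \tau_{n,v}^{\text{strt}}, \tau_{n,v}^{\text{end}}, \tau_{n,v}^{\text{uplg}}, \epsilon_{n,v}^{\text{act}}) \in \mathbb{R}^6$ and $\boldsymbol{s}_{n,v} \in \mathcal{S}_n \in \mathbb{R}^{|\mathcal{V}_n| \times 6}$. Thus, the reward of charging EV $v \in \mathcal{V}_n$ for EVSE $n \in \mathcal{N}$ is defined as follows: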

$$r(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}) = \begin{cases} 1 + \zeta_{n,v}(t)\,\rho_{n,v}\left(1 - R_{\alpha}^{\text{CVaR}}(L(\boldsymbol{x}, \boldsymbol{y}))\right), & \text{if } \zeta_{n,v}(t) = 1,\ \eta_{n,v}(a_{n,v}) \geq \eta_{n,v'}(a_{n,v}), \\ \zeta_{n,v}(t)\,\rho_{n,v}\left(1 - R_{\alpha}^{\text{CVaR}}(L(\boldsymbol{x}, \boldsymbol{y}))\right), & \text{if } \zeta_{n,v}(t) \notin \{0, 1\},\ \eta_{n,v}(a_{n,v}) \geq \eta_{n,v'}(a_{n,v}), \\ 0, & \text{otherwise}, \end{cases} \tag{11}$$

where $R_{\alpha}^{\text{CVaR}}(L(\boldsymbol{x}, \boldsymbol{y}))$, $\zeta_{n,v}(t)$, and $\rho_{n,v}$ are determined by (6), (7), and (8), respectively. In (11), the reward of charging each EV $v \in \mathcal{V}_n$ for EVSE $n \in \mathcal{N}$ is characterized such that it copes with the tail-risk of the laxity and discretizes the rational demand-supply behavior between each EV and EVSE. The goal of the proposed Max DSO capacity problem is to maximize the overall electricity utilization of all EVSEs. In particular, EV charging scheduling is performed under a risk adversarial so that the laxity of EV charging scheduling is reduced and the electricity utilization of each EVSE is increased. A detailed discussion of the CAV charging system problem formulation is given in the next section.
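The three cases of the reward (11) can be sketched as a small Python function. The function name and argument names are illustrative assumptions; `cvar` stands for an already-computed value of the laxity tail-risk from (6), and the exact floating-point comparison with 1.0 is a simplification of the case condition.

```python
def reward(zeta: float, rho: float, cvar: float,
           eta_cur: float, eta_next: float) -> float:
    """Piecewise reward following the three cases of Eq. (11).

    zeta: rate ratio (7); rho: time ratio (8); cvar: laxity tail-risk (6);
    eta_cur / eta_next: demand-supply ratios of the current and next EV.
    """
    if eta_cur < eta_next:
        return 0.0                      # "otherwise" case
    base = zeta * rho * (1.0 - cvar)    # risk-discounted term shared by cases 1 and 2
    if zeta == 1.0:
        return 1.0 + base               # requested rate fully met
    if zeta != 0.0:
        return base                     # partially met
    return 0.0                          # nothing delivered


# Illustrative values only.
print(reward(1.0, 0.5, 0.2, 0.6, 0.4))
print(reward(0.5, 0.5, 0.2, 0.6, 0.4))
print(reward(0.5, 0.5, 0.2, 0.3, 0.4))
```

A higher tail-risk value shrinks the factor `1 - cvar`, so the same delivery behavior earns a smaller reward when the laxity risk reported by the DSO is larger.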

B. Problem Formulation for CAV-CI

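The goal of the Max DSO capacity problem is to maximize the utilization of the charging capacity for the DSO, where a tail-risk of the laxity is imposed that incorporates the irrational energy and charging time demands of the EVs. Thus, the Max DSO capacity problem is formulated as follows:

$$\max_{\boldsymbol{a}_{n,v} \in \mathcal{A}_n,\ \boldsymbol{x} \in \boldsymbol{X},\ \xi}\ \sum_{\forall t \in \mathcal{T}} \sum_{\forall n \in \mathcal{N}} \sum_{\forall v \in \mathcal{V}_n} r(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}), \tag{12}$$

$$\text{s.t.} \quad P(L(\boldsymbol{x}, \boldsymbol{y}) \leq \xi) \geq \alpha, \tag{12a}$$

$$a_{n,v}\lambda_n^{\text{act}} + (1 - a_{n,v})\lambda_n^{\text{req}}(t) \leq \epsilon_n^{\text{cap}}(t), \tag{12b}$$

$$a_{n,v}\Upsilon_{n,v} + (1 - a_{n,v})\Upsilon_{n,v} \leq \epsilon_v^{\text{rec}}(t), \tag{12c}$$

$$(\tau_{n,v}^{\text{end}} - \tau_{n,v}^{\text{strt}})\,a_{n,v} \leq \tau_{n,v}^{\text{req}}, \tag{12d}$$

$$a_{n,v}(\tau_{n,v}^{\text{uplg}} - \tau_{n,v}^{\text{strt}}) \geq \tau_{n,v}^{\text{act}}, \tag{12e}$$

$$\sum_{n \in \mathcal{N}} \sum_{v \in \mathcal{V}_n} a_{n,v} \leq |\mathcal{V}_n|,\ \forall n \in \mathcal{N}, \tag{12f}$$

$$a_{n,v} \in \{0, 1\},\ \forall t \in \mathcal{T},\ \forall n \in \mathcal{N},\ \forall v \in \mathcal{V}_n. \tag{12g}$$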

In problem (12), $a_{n,v}$, $\boldsymbol{x} := (\epsilon_{n,v}^{\text{act}}, \lambda_n^{\text{act}}(t), \tau_{n,v}^{\text{act}}) \in \boldsymbol{X}$, and $\xi$ are the decision variables, where $a_{n,v}$ decides whether EV $v \in \mathcal{V}_n$ is charged or queued at EVSE $n$. Meanwhile, $\epsilon_{n,v}^{\text{act}}$, $\lambda_n^{\text{act}}(t)$, and $\tau_{n,v}^{\text{act}}$ represent the actual deliverable energy, the charging rate, and the actual charging time, respectively, for EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$. An upper bound of the cumulative distribution function of the laxity is determined by $\xi$. Constraint (12a) ensures the tail-risk of the laxity (3), while $\alpha$ represents the significance level of the considered tolerable tail-risk (e.g., $\alpha \in \{0.90, 0.95, 0.99\}$ are used empirically [20], [21], [42]). In essence, this constraint plays the role of the risk adversarial in problem (12). Constraint (12b) defines the coordination between the deliverable charging rate (2) of each EVSE $n \in \mathcal{N}$ and the rate (1) requested by the EVs. Additionally, this constraint restricts the energy delivery rate $\lambda_n^{\text{act}}$ to the capacity $\epsilon_n^{\text{cap}}(t)$ of each EVSE $n \in \mathcal{N}$. Constraint (12c) assures a rational energy supply to each EV $v \in \mathcal{V}_n$ within its receiving capacity $\epsilon_v^{\text{rec}}(t)$. Constraint (12d) guarantees that each EV $v \in \mathcal{V}_n$ is charged within the requested time duration $\tau_{n,v}^{\text{req}}$. Constraint (12e) ensures that EVs are plugged in during the charging period. Finally, constraints (12f) and (12g) assure the charging scheduling/queuing decision for all EV requests $\forall v \in \mathcal{V}_n$ at each EVSE $n \in \mathcal{N}$ and that the decision variable $a_{n,v}$ is binary, respectively. The formulated Max DSO capacity problem in (12) leads to a mixed-integer decision problem with its corresponding variables and constraints. Therefore, the formulated problem (12) is NP-hard and intractable for a deterministic solver; at best, it can be solved in nondeterministic polynomial time, but not efficiently. Hence, we solve the formulated problem (12) by decomposing it into a rational decision support system with a data-informed approach. This approach not only discretizes the behavior of the energy charging data of both CVs and AVs but can also take an action by analyzing the contextual (i.e., rational/irrational) behavior of those data.

In particular, we propose a novel risk adversarial multi-agent learning system that utilizes a recurrent neural network in a data-informed manner. Further, the proposed solution approach provides a rational decision-making model for the DSO by fulfilling the following criteria:

• We define a goal of maximizing the overall electricity utilization of each EVSE, which cumulatively maximizes the utilization of the DSO.

• Then, we discretize an alternative EV charging session schedule by capturing the CVaR risk of the DSO. In particular, this schedule can meet the uncertainty of EV charging session requests and assists each EVSE in reaching an alternative charging session policy by coping with the adversarial risk of the DSO.

• The DSO can cope with imitated knowledge from each EVSE to evaluate the alternative charging session policy and is capable of updating the policies of each EVSE.

• Each EVSE has its own admission control capabilities for executing the EV charging session.

Therefore, in the following section, we provide a detailed discussion of the proposed RAMALS, in which each EVSE acts as a local agent and the DSO controller works as a risk adversarial agent for the CAV-CI.

IV. RAMALS FRAMEWORK FOR CONNECTED AND AUTONOMOUS VEHICLE CHARGING

In this section, we develop our proposed RAMALS framework (as shown in Figure 3) for EV charging session scheduling in the CAV-CI. In this work, we have designed a risk adversarial multi-agent learning system, where the behaviors of the data (i.e., EV charging behavior) are handled in a data-informed manner [45], [46]. In particular, a centralized risk adversarial agent (RAA) analyzes the laxity risk for the CAV-CI by applying Conditional Value at Risk (CVaR) [20], [21] to the charging behavior of EVs (i.e., both CVs and AVs). Subsequently, each EVSE learning agent (EVSE-LA) can learn and discretize its own EV session scheduling policy by taking into account the adversarial risk from the RAA. Thus, this work designs each reward function (11) by considering the adversarial risk from the RAA. To this end, each EVSE-LA can explore its own environment while systematic perturbations of EV charging requests are analyzed by the RAA to avoid the laxity risk of the EVSE scheduling policy. Therefore, the proposed risk adversarial learning system belongs to the family of adversarial learning (as shown in Figure 4) [47]–[49]. First, we present a laxity risk estimation procedure for the DSO. Second, we discuss the learning procedure of the proposed RAMALS. Finally, we discuss the execution mechanism of the proposed RAMALS towards efficient EV charging session scheduling for each EVSE.

A. Laxity Risk Estimation for DSO

Fig. 3: Proposed framework of risk adversarial multi-agent learning system (RAMALS) for connected and autonomous vehicle charging.

Fig. 4: An overview of the proposed risk adversarial multi-agent learning system.

To calculate the laxity risk for the DSO, the RAA executes a Student's t-distribution-based linear model. The laxity $L(\boldsymbol{x}, \boldsymbol{y})$ in (3) is itself a random variable, which we denote as $D := L(\boldsymbol{x}, \boldsymbol{y})$, where a sample of the laxity is represented by $d$. We consider $\omega$, $\mu$, and $\sigma$ as the degrees of freedom, mean, and standard deviation, respectively. Thus, the probability density function (pdf) of the Student's t-distribution is defined as follows [50]:

$$P(d, \omega, \mu, \sigma) = \frac{\Gamma\left(\frac{\omega+1}{2}\right)}{\Gamma\left(\frac{\omega}{2}\right)\sqrt{\pi\omega}\,\sigma}\left(1 + \frac{1}{\omega}\left(\frac{d - \mu}{\sigma}\right)^2\right)^{-\left(\frac{\omega+1}{2}\right)}, \tag{13}$$

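where $\Gamma(\cdot)$ represents the gamma function. We fit the t-distribution to the observed laxity samples $d_1, d_2, \ldots, d_J \in D = L(\boldsymbol{x}, \boldsymbol{y})$, indexed by $j$, with $J = |\mathcal{V}_n|, \forall n \in \mathcal{N}$. This fit is computed by maximizing a log-likelihood function $l(D; \omega, \mu, \sigma)$ that is defined as follows [50], [51]:

$$l(D; \omega, \mu, \sigma) = D \log\Gamma\left(\frac{\omega+1}{2}\right) + \frac{D\omega}{2}\log(\omega) - D\,\Gamma\left(\frac{\omega}{2}\right) - \frac{D}{2}\log\sigma - \frac{\omega+1}{2}\sum_{j=1}^{J}\log\left(\omega + \frac{(d_j - \mu)^2}{\sigma^2}\right), \tag{14}$$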

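where we can obtain the parameters $\omega$, $\mu$, and $\sigma$ that represent the degrees of freedom, mean, and standard deviation, respectively. Therefore, we can determine the upper bound $\xi$ of the laxity risk by the percent point function (PPF) of the considered probability distribution.

Algorithm 1 Laxity Risk Estimation based on Linear Model

Input: $(\epsilon_{n,v}^{\text{req}}, \tau_{n,v}^{\text{req}}, \tau_{n,v}^{\text{strt}}, \tau_{n,v}^{\text{end}}, \tau_{n,v}^{\text{uplg}}, \epsilon_{n,v}^{\text{act}})\ \forall v \in \mathcal{V}_n, \forall n \in \mathcal{N}$
Output: $R_{\alpha}^{\text{CVaR}}(L(\boldsymbol{x}, \boldsymbol{y})), \forall v \in \mathcal{V}_n, \forall n \in \mathcal{N}$
Initialization: $\omega, \mu, \sigma, \alpha$
1: for Until: $t \leq T$ do
2:   for Until: $\forall n \in \mathcal{N}$ do
3:     for $\forall v \in \mathcal{V}_n$ do
4:       Calculate: $\lambda_n^{\text{req}}(t)$ using (1) {Average energy demand rate by EVs}
5:       Calculate: $\lambda_n^{\text{act}}(t)$ using (2) {Average charging rate by EVSE}
6:       Calculate: $L(\boldsymbol{x}, \boldsymbol{y})$ using (3), where $\boldsymbol{x} := (\epsilon_{n,v}^{\text{act}}, \lambda_n^{\text{act}}(t), \tau_{n,v}^{\text{act}})$ and $\boldsymbol{y} := (\epsilon_{n,v}^{\text{req}}, \tau_{n,v}^{\text{req}})$, $\forall \boldsymbol{x} \in \boldsymbol{X}$, $\forall \boldsymbol{y} \in \boldsymbol{Y}$ {Laxity of the DSO $D := L(\boldsymbol{x}, \boldsymbol{y})$, $d \in D$}
7:     end for
8:   end for
9:   Calculate: $P(d, \omega, \mu, \sigma)$ using (13) {PDF of observational laxity $d \in D$}
10:  Estimate: $l(D; \omega, \mu, \sigma)$ using (14) {Maximizing log-likelihood of $d \in D$}
11:  if $\alpha \leq F_D(\xi)$ then
12:    Calculate: $P_\omega(\xi)$ using (15)
13:    Estimate: $R_{\alpha}^{\text{CVaR}}(L(\boldsymbol{x}, \boldsymbol{y}))$ using (16)
14:  end if
15: end for
16: return $R_{\alpha}^{\text{CVaR}}(L(\boldsymbol{x}, \boldsymbol{y}))$, $\lambda_n^{\text{req}}(t)$, $\lambda_n^{\text{act}}(t)$

This PPF is the inverse of the cumulative distribution function (CDF) $F_D(\xi) := P(D \leq \xi)$. Hence, for a given significance level $\alpha$ of the laxity tail-risk, the PPF is defined as $F_D^{-1}(\alpha) = \inf\{\xi \in \mathbb{R} : \alpha \leq F_D(\xi)\}$, where $F_D^{-1}(\alpha) \leq \xi$ if and only if $\alpha \leq F_D(\xi)$. Then, we can rewrite the probability density function (13) for the standardized Student's t-distribution as follows: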

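$$P_\omega(\xi) = \frac{\Gamma\left(\frac{\omega+1}{2}\right)}{\Gamma\left(\frac{\omega}{2}\right)\sqrt{\pi\omega}}\left(1 + \frac{\xi^2}{\omega}\right)^{-\left(\frac{\omega+1}{2}\right)}, \tag{15}$$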

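where $\xi$ is the cut-off point of the laxity tail-risk. The laxity risk for the risk adversarial is calculated as follows:

$$R_{\alpha}^{\text{CVaR}}(L(\boldsymbol{x}, \boldsymbol{y})) = -\frac{1}{\alpha(1 - \omega)}\,(\omega + \xi^2)\,P_\omega(\xi)\,\sigma\mu. \tag{16}$$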
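As a rough illustration of the quantities used in this subsection, the following Python sketch evaluates the Student's t density of (13), a log-likelihood computed directly as the sum of log-densities (rather than through the closed form (14)), and a purely empirical tail average. The empirical estimator is an assumption introduced here as a sanity check; it is not the closed-form expression (16), and treating the upper tail as the risky side is also an assumption. All function names are illustrative.

```python
import math


def t_pdf(d: float, omega: float, mu: float, sigma: float) -> float:
    """Location-scale Student's t density, following Eq. (13)."""
    z = (d - mu) / sigma
    coef = math.gamma((omega + 1) / 2) / (
        math.gamma(omega / 2) * math.sqrt(math.pi * omega) * sigma)
    return coef * (1 + z * z / omega) ** (-(omega + 1) / 2)


def log_likelihood(samples, omega: float, mu: float, sigma: float) -> float:
    """Sum of log-densities of the laxity samples under the t model."""
    return sum(math.log(t_pdf(d, omega, mu, sigma)) for d in samples)


def empirical_cvar(samples, alpha: float) -> float:
    """Mean of the samples at or above the empirical alpha-quantile.

    Assumption: the upper tail of the laxity is the risky side.
    """
    ordered = sorted(samples)
    k = min(math.ceil(alpha * len(ordered)), len(ordered) - 1)
    tail = ordered[k:]
    return sum(tail) / len(tail)


# Illustrative laxity samples only.
laxity = [0.1, 0.4, 0.2, 0.9, 0.3, 0.7, 0.5, 0.6]
print(log_likelihood(laxity, omega=5.0, mu=0.45, sigma=0.25))
print(empirical_cvar(laxity, alpha=0.75))
```

Maximizing `log_likelihood` over `omega`, `mu`, and `sigma` (for example with a generic numerical optimizer) would give fitted parameters in the spirit of lines 9 and 10 of Algorithm 1.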

The laxity risk for a DSO can be estimated by executing a linear model based on the Student's $t$-distribution [See Appendix A]. Hence, we estimate the laxity risk for a DSO in Algorithm 1. Algorithm 1 receives the input $(\epsilon_{n,v}^{\text{req}}, \tau_{n,v}^{\text{req}}, \tau_{n,v}^{\text{strt}}, \tau_{n,v}^{\text{end}}, \tau_{n,v}^{\text{uplg}}, \epsilon_{n,v}^{\text{act}})$ of all EV charging sessions $\forall v \in \mathcal{V}_n$ for all of the EVSE agents $\forall n \in \mathcal{N}$. The steps in lines 2 to 8 calculate the average energy demand rate of the EVs, the average charging rate of the EVSE, and the laxity of the DSO using (1), (2), and (3), respectively. Then, we determine the parameters $\omega$, $\mu$, and $\sigma$ in line 9, while line 10 estimates the maximum log-likelihood. Finally, we estimate the laxity tail-risk for the DSO in each time slot $t$ in lines 11 to 14. Algorithm 1 is executed iteratively for a finite time $T$. The outcome of Algorithm 1 is used to decide a risk-adversarial reward for each EVSE.

B. Learning Procedure of RAMALS

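We consider each EVSE $n \in \mathcal{N}$ to be a learning agent that can independently explore the dynamics of the Markov Decision Process (MDP) for each EV $v \in \mathcal{V}_n$ charging request and delivered energy scheduling. Thus, using the reward (11) of each EV $v \in \mathcal{V}_n$, the expectation of the discounted reward [52] at each EVSE $n \in \mathcal{N}$ is defined as follows:

$$R_n(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}) = \max_{\boldsymbol{a}_{n,v} \in \mathcal{A}_n} \mathbb{E}_{\boldsymbol{a}_{n,v} \sim \boldsymbol{s}_{n,v}}\left[\sum_{v'=v}^{v \in \mathcal{V}_n} \gamma^{v'-v}\, r(\boldsymbol{a}_{n,v'}, \boldsymbol{s}_{n,v'})\right], \tag{17}$$

where $\gamma \in (0, 1)$ represents a discount factor that ensures convergence of the discounted reward at EVSE $n \in \mathcal{N}$. We consider a set $\pi = \{\boldsymbol{\pi}_{\boldsymbol{\theta}_1}, \boldsymbol{\pi}_{\boldsymbol{\theta}_2}, \ldots, \boldsymbol{\pi}_{\boldsymbol{\theta}_N}\}$ of $N$ EVSE scheduling policies with the set of parameters $\theta = \{\boldsymbol{\theta}_1, \boldsymbol{\theta}_2, \ldots, \boldsymbol{\theta}_N\}$. EVSE learning agent (EVSE-LA) $n \in \mathcal{N}$ makes a charging scheduling decision $\boldsymbol{a}_{n,v}$ for each EV $v \in \mathcal{V}_n$ with the parameters $\boldsymbol{\theta}_n$, which are determined by a stochastic policy $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}$. In particular, this policy $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}$ is estimated by a deep deterministic policy gradient (DDPG) method [53] that can effectively map the continuous decision space to a discrete space for an EV charging scheduling decision at EVSE $n \in \mathcal{N}$. Moreover, the policy $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}$ can directly map $\boldsymbol{\pi}_{\boldsymbol{\theta}_n} : \boldsymbol{s}_{n,v} \mapsto \boldsymbol{a}_{n,v}$ from state $\boldsymbol{s}_{n,v}$ to EV charging decision $\boldsymbol{a}_{n,v}$ instead of producing a probability distribution over a discrete decision space. Thus, the expectation of the decision value function for EVSE-LA $n \in \mathcal{N}$ can be expressed as follows:

$$Q^{\boldsymbol{\pi}_{\boldsymbol{\theta}_n}}(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}) = \mathbb{E}_{\boldsymbol{\pi}_{\boldsymbol{\theta}_n}}\left[\sum_{v'=v}^{v \in \mathcal{V}_n} \gamma^{v'-v}\, r(\boldsymbol{a}_{n,v'}, \boldsymbol{s}_{n,v'}) \,\middle|\, \boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}\right]. \tag{18}$$

As a result, the expectation of the state value function for EVSE-LA $n \in \mathcal{N}$ is determined by

$$V^{\boldsymbol{\pi}^*_{\boldsymbol{\theta}_n}}(\boldsymbol{s}_{n,v}) = \mathbb{E}_{\boldsymbol{a}_{n,v} \sim \boldsymbol{\pi}_{\boldsymbol{\theta}_n}(\boldsymbol{a}_{n,v} | \boldsymbol{s}_{n,v}; \boldsymbol{\theta}_n)}\left[Q^{\boldsymbol{\pi}_{\boldsymbol{\theta}_n}}(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v})\right], \tag{19}$$

where $\boldsymbol{\pi}^*_{\boldsymbol{\theta}_n}$ represents the best EV charging scheduling policy. In essence, (18) determines a policy $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}$ for each EV $v \in \mathcal{V}_n$ at EVSE-LA $n \in \mathcal{N}$. Meanwhile, based on the EV scheduling policy $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}$, (19) estimates an optimal value for a given current state $\boldsymbol{s}_{n,v}$ of EV $v \in \mathcal{V}_n$. Therefore, the temporal difference (TD) error [54], [55] of each EVSE-LA $n \in \mathcal{N}$ can be calculated as

$$\Lambda^{\boldsymbol{\pi}_{\boldsymbol{\theta}_n}}(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}) = Q^{\boldsymbol{\pi}_{\boldsymbol{\theta}_n}}(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}) - V^{\boldsymbol{\pi}^*_{\boldsymbol{\theta}_n}}(\boldsymbol{s}_{n,v}). \tag{20}$$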
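The discounted sum inside (17) and (18) and the difference in (20) can be sketched in a few lines of Python. This is a minimal illustration on a fixed list of per-session rewards and given value estimates; the expectation and the maximization over decisions are omitted, and the function names are assumptions.

```python
def discounted_returns(rewards, gamma: float):
    """For each position v, return sum over v' >= v of gamma^(v'-v) * r_{v'}."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backwards so each return reuses the one that follows it.
    for i in range(len(rewards) - 1, -1, -1):
        running = rewards[i] + gamma * running
        returns[i] = running
    return returns


def advantages(q_values, state_values):
    """Eq. (20): element-wise difference between Q and V estimates."""
    return [q - v for q, v in zip(q_values, state_values)]


# Illustrative values only.
print(discounted_returns([1.0, 1.0, 1.0], gamma=0.5))
print(advantages([2.0, 1.0], [1.5, 1.5]))
```

A positive entry of `advantages` indicates that the chosen scheduling decision is estimated to do better than the average behavior of the policy in that state.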

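In order to estimate the EV charging schedule policy $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}$ for each EVSE-LA $n \in \mathcal{N}$, we design a shared neural network model. In particular, we model a long short-term memory (LSTM)-based recurrent neural network (RNN) [55]–[57] that can share the learning weights among the other EVSE-LAs. As a result, each EVSE-LA $n \in \mathcal{N}$ can capture the time-dependent features from the RNN states to make its own scheduling decision, where the RNN states are denoted by $\boldsymbol{\Psi}_n$. We consider an observational memory vector $\boldsymbol{w}_{n,v}$ for each EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$. The memory $\boldsymbol{w}_{n,v} : (\boldsymbol{a}_{n,v}, R_n(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}), v, \boldsymbol{\Psi}_n) \in \mathbb{R}^4$ comprises the action $\boldsymbol{a}_{n,v}$, the discounted reward $R_n(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v})$, the EV index $v$, and the RNN states $\boldsymbol{\Psi}_n$ of EV $v \in \mathcal{V}_n$. Therefore, a set $\mathcal{W}_n = \{\boldsymbol{w}_{n,1}, \boldsymbol{w}_{n,2}, \ldots, \boldsymbol{w}_{n,V_n}\}$ of $V_n$ observations is collected at EVSE-LA $n \in \mathcal{N}$ during the observational period. In particular, a set $\mathcal{W} = \{\mathcal{W}_1, \mathcal{W}_2, \ldots, \mathcal{W}_N\}$ of $N$ observational sets covers all EVSE-LAs in the considered DSO coverage area.

Algorithm 2 RAMALS Training Procedure Based on Recurrent Neural Network

Input: $\boldsymbol{s}_{n,v} := (\epsilon_{n,v}^{\text{req}}, \tau_{n,v}^{\text{req}}, \tau_{n,v}^{\text{strt}}, \tau_{n,v}^{\text{end}}, \tau_{n,v}^{\text{uplg}}, \epsilon_{n,v}^{\text{act}})$, $\lambda_n^{\text{req}}(t)$, $\lambda_n^{\text{act}}(t)$, $R_{\alpha}^{\text{CVaR}}(L(\boldsymbol{x}, \boldsymbol{y}))$
Output: RAMALS_model
Initialization: $\boldsymbol{w}_{n,v}$, $\boldsymbol{\Psi}_n$, $\forall \mathcal{V}_n$, $\forall \mathcal{N}$, $\gamma \in (0, 1)$, $\pi$, $\theta$, $\beta$
1: for Until: $t \leq T$ {No. of episodes} do
2:   Calculate: $\zeta_{n,v}(t)$ using (7)
3:   Calculate: $\rho_{n,v}$ using (8)
4:   Calculate: $\Upsilon_{n,v}$ using (9)
5:   for Until: $\forall n \in \mathcal{N}$ do
6:     for $\forall v \in \mathcal{V}_n$ do
7:       if $\zeta_{n,v}(t) = 1$ && $\eta_{n,v}(a_{n,v}) \geq \eta_{n,v'}(a_{n,v})$ then
8:         $r(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}) = 1 + \zeta_{n,v}(t)\rho_{n,v}(1 - R_{\alpha}^{\text{CVaR}}(L(\boldsymbol{x}, \boldsymbol{y})))$
9:       else if $\zeta_{n,v}(t) \notin \{0, 1\}$ && $\eta_{n,v}(a_{n,v}) \geq \eta_{n,v'}(a_{n,v})$ then
10:        $r(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}) = \zeta_{n,v}(t)\rho_{n,v}(1 - R_{\alpha}^{\text{CVaR}}(L(\boldsymbol{x}, \boldsymbol{y})))$
11:      else
12:        $r(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}) = 0$
13:      end if
14:      Calculate: $R_n(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v})$, $V^{\boldsymbol{\pi}^*_{\boldsymbol{\theta}_n}}(\boldsymbol{s}_{n,v})$, and $\Lambda^{\boldsymbol{\pi}_{\boldsymbol{\theta}_n}}(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v})$ using (17), (19), and (20), respectively. {Discounted reward, optimal value, and advantage}
15:      Append: $\boldsymbol{w}_{n,v} : (\boldsymbol{a}_{n,v}, R_n(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}), v, \boldsymbol{\Psi}_n)$, $\forall \boldsymbol{w}_{n,v} \in \mathcal{W}_n$
16:      Estimate losses using LSTM for each EVSE-LA: $\mathcal{L}_{\text{value}}(\boldsymbol{\theta}_n)$, $\mathcal{L}_{\text{policy}}(\boldsymbol{\pi}_{\boldsymbol{\theta}_n}(\boldsymbol{s}_{n,v'} | \boldsymbol{a}_{n,v}))$, $H(\boldsymbol{\pi}_{\boldsymbol{\theta}_n})$, and $\mathcal{L}_{\text{loss}}(\boldsymbol{\theta}_n)$ using (21), (22), (23), and (24), respectively. {Value loss, policy loss, entropy loss, and overall loss}
17:      Update: $\boldsymbol{\theta}_n$ and $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}$
18:    end for
19:    Append: $\boldsymbol{\theta}_n \in \theta, \forall n \in \mathcal{N}$; $\boldsymbol{\pi}_{\boldsymbol{\theta}_n} \in \pi, \forall n \in \mathcal{N}$
20:    Update: $\boldsymbol{\Psi}_n, \forall n \in \mathcal{N}$
21:    Gradient for each EVSE-LA: $\nabla_{\boldsymbol{\theta}_n}\mathcal{L}_{\text{loss}}(\boldsymbol{\theta}_n)$ using (25)
22:    Find: $\|\boldsymbol{\theta}_{\boldsymbol{n}}\|_2$ using (26) {Estimating learning parameters $\boldsymbol{\theta}_n$ of each EVSE-LA}
23:    Calculate: $\Delta\boldsymbol{\theta}_n$ using (27) {Changes of gradient}
24:    $\theta_n = \theta_n + \Delta\theta_n$ {Updating learning parameters for shared LSTM}
25:  end for
26:  $\boldsymbol{\pi}_{\boldsymbol{\theta}_n} = (\boldsymbol{\pi}_{\boldsymbol{\theta}_1} \cdot \boldsymbol{\pi}_{\boldsymbol{\theta}_{n-1}} \cdot \boldsymbol{\pi}_{\boldsymbol{\theta}_{n+1}} \ldots \boldsymbol{\pi}_{\boldsymbol{\theta}_N}), \forall n \in \mathcal{N}$ {EVSE-LA policy}
27: end for
28: return RAMALS_model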

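In order to learn an optimal charging scheduling policy for each EVSE-LA $n \in \mathcal{N}$, we have to find the optimal learning parameters that minimize the learning error between the target and learned values. Thus, we consider an ideal target value function $r(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}) + \gamma \max_{\boldsymbol{a}_{n,v} \in \mathcal{A}_n} \hat{Q}^{\boldsymbol{\pi}_{\boldsymbol{\theta}_n}}(\boldsymbol{a}_{n,v'}, \boldsymbol{s}_{n,v'})$ of EVSE-LA $n \in \mathcal{N}$, where $\hat{Q}^{\boldsymbol{\pi}_{\boldsymbol{\theta}_n}}(\boldsymbol{a}_{n,v'}, \boldsymbol{s}_{n,v'})$ denotes the value for the next EV $v' \in \mathcal{V}_n$ charging request with state $\boldsymbol{s}_{n,v'}$ and decision $\boldsymbol{a}_{n,v'}$ at EVSE $n \in \mathcal{N}$. Therefore, for parameters $\boldsymbol{\theta}_n$, the value loss function for the EV charging scheduling policy is given as follows:

$$\mathcal{L}_{\text{value}}(\boldsymbol{\theta}_n) = \min_{\boldsymbol{\theta}_n} \mathbb{E}_{\mathcal{W}_n \sim \mathcal{W}}\ \frac{1}{2}\left[\left(r(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}) + \gamma \max_{\boldsymbol{a}_{n,v} \in \mathcal{A}_n} \hat{Q}^{\boldsymbol{\pi}_{\boldsymbol{\theta}_n}}(\boldsymbol{a}_{n,v'}, \boldsymbol{s}_{n,v'}) - Q^{\boldsymbol{\pi}_{\boldsymbol{\theta}_n}}(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v} | \boldsymbol{\theta}_{n,v})\right)^2\right]. \tag{21}$$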

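In (21), EVSE-LA $n \in \mathcal{N}$ can determine the best scheduling decision by coping with the decisions of the other EVSE-LAs through the shared observational memory $\mathcal{W}$. In particular, EVSE-LA $n \in \mathcal{N}$ can estimate the policy $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}$ for the next state $\boldsymbol{s}_{n,v'}$ by taking into account the EV scheduling decision $\boldsymbol{a}_{n,v'}$ as well as by inferring policies from the observational memories of the other EVSE-LAs, $\mathcal{W}_{n'} \in \mathcal{W}$, $n \neq n'$. Meanwhile, we determine the policy loss of (20) at EVSE-LA $n \in \mathcal{N}$ as

$$\mathcal{L}_{\text{policy}}(\boldsymbol{\pi}_{\boldsymbol{\theta}_n}(\boldsymbol{s}_{n,v'} | \boldsymbol{a}_{n,v})) = -\mathbb{E}_{\mathcal{W}_n \sim \mathcal{W},\, \boldsymbol{a}_{n,v} \sim \boldsymbol{\pi}_{\boldsymbol{\theta}_n}}\left[\Lambda^{\boldsymbol{\pi}_{\boldsymbol{\theta}_n}}(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}) \log(\boldsymbol{\pi}_{\boldsymbol{\theta}_n}(\boldsymbol{s}_{n,v'} | \boldsymbol{a}_{n,v}))\right]. \tag{22}$$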

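When estimating the EV charging scheduling policy, a bias can appear at EVSE learning agent $n \in \mathcal{N}$ due to the influence of the policies of the other EVSE-LAs in the considered system. Therefore, to overcome this challenge, we consider an entropy $H(\boldsymbol{\pi}_{\boldsymbol{\theta}_n})$ for the stochastic policy of each EVSE-LA $n \in \mathcal{N}$. Thus, the entropy of an EV scheduling policy $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}$ at EVSE-LA $n \in \mathcal{N}$ is defined as follows:

$$H(\boldsymbol{\pi}_{\boldsymbol{\theta}_n}) = -\mathbb{E}_{\boldsymbol{a}_{n,v} \sim \boldsymbol{\pi}_{\boldsymbol{\theta}_n}}\left[\boldsymbol{\pi}_{\boldsymbol{\theta}_n}(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}) \log(\boldsymbol{\pi}_{\boldsymbol{\theta}_n}(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}))\right]. \tag{23}$$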

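Then, we define the overall self-learning loss at EVSE-LA $n \in \mathcal{N}$ using (21), (22), and (23) as follows:

$$\mathcal{L}_{\text{loss}}(\boldsymbol{\theta}_n) = \mathbb{E}_{\mathcal{W}_n \sim \mathcal{W},\, \boldsymbol{a}_{n,v} \sim \boldsymbol{\pi}_{\boldsymbol{\theta}_n}}\left[\mathcal{L}_{\text{value}}(\boldsymbol{\theta}_n) + \mathcal{L}_{\text{policy}}(\boldsymbol{\pi}_{\boldsymbol{\theta}_n}(\boldsymbol{s}_{n,v'} | \boldsymbol{a}_{n,v})) - \beta H(\boldsymbol{\pi}_{\boldsymbol{\theta}_n})\right], \tag{24}$$

where $\beta$ represents an entropy regularization parameter and $\mathcal{L}_{\text{policy}}(\boldsymbol{\pi}_{\boldsymbol{\theta}_n}(\boldsymbol{s}_{n,v'} | \boldsymbol{a}_{n,v})) - \beta H(\boldsymbol{\pi}_{\boldsymbol{\theta}_n})$ denotes the policy loss. Thus, the gradient of the loss (24) of each self-learning EVSE-LA $n \in \mathcal{N}$ is as follows:

$$\nabla_{\boldsymbol{\theta}_n}\mathcal{L}_{\text{loss}}(\boldsymbol{\theta}_n) = \mathbb{E}_{\boldsymbol{s}_{n,v} \sim \mathcal{W}_n,\, \boldsymbol{a}_{n,v} \sim \pi_{\boldsymbol{\theta}_n}(\boldsymbol{s}_{n,v})}\left[\nabla_{\boldsymbol{\theta}_n} \log \boldsymbol{\pi}_{\boldsymbol{\theta}_n}(\boldsymbol{s}_{n,v'} | \boldsymbol{a}_{n,v})\, \Lambda^{\boldsymbol{\pi}_{\boldsymbol{\theta}_n}}\left(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v} \,|\, (\mathcal{W}_1, \mathcal{W}_{n-1}, \mathcal{W}_{n+1}, \ldots, \mathcal{W}_N)\right) + \beta H(\boldsymbol{\pi}_{\boldsymbol{\theta}_n})\right]. \tag{25}$$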
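The way the loss terms (21) to (24) combine can be sketched for a single sample with plain Python. The sketch assumes a discrete policy given as a list of probabilities and precomputed target, Q, and advantage values; it omits the expectations, the neural network, and the gradient step, and all function names are illustrative.

```python
import math


def entropy(probs) -> float:
    """Shannon entropy of a discrete policy distribution (cf. Eq. (23))."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def value_loss(target: float, q: float) -> float:
    """Half squared error between target and learned value (cf. Eq. (21))."""
    return 0.5 * (target - q) ** 2


def policy_loss(advantage: float, prob_action: float) -> float:
    """Negative advantage-weighted log-probability (cf. Eq. (22))."""
    return -advantage * math.log(prob_action)


def total_loss(target, q, advantage, probs, action, beta) -> float:
    """Value loss plus policy loss minus beta times entropy (cf. Eq. (24))."""
    return (value_loss(target, q)
            + policy_loss(advantage, probs[action])
            - beta * entropy(probs))


# Illustrative values only; beta = 0.05 matches the setting listed in Table III.
print(total_loss(target=1.2, q=1.0, advantage=0.3,
                 probs=[0.7, 0.3], action=0, beta=0.05))
```

Because the entropy enters with a negative sign, a more spread-out policy lowers the total loss, which discourages an agent from collapsing too early onto a single scheduling decision.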

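In order to update the gradient of the shared neural network, we define the norm of the learning parameters for each EVSE-LA $n \in \mathcal{N}$ as

$$\|\boldsymbol{\theta}_{\boldsymbol{n}}\|_2 = \sqrt{\sum_{v=1}^{v \in \mathcal{V}} \left(\nabla_{\boldsymbol{\theta}_n}\mathcal{L}_{\text{loss}}(\boldsymbol{\theta}_n)\right)^2}, \tag{26}$$

where each EVSE-LA $n \in \mathcal{N}$ can determine its own learning parameters $\boldsymbol{\theta}_n$. Therefore, we can obtain the changes of the gradient as follows:

$$\Delta\boldsymbol{\theta}_n = \frac{\boldsymbol{\theta}_{\boldsymbol{n}}\,\boldsymbol{\theta}_n}{\max_{\boldsymbol{a}_{n,v} \sim \boldsymbol{\pi}_{\boldsymbol{\theta}_n}(\boldsymbol{s}_{n,v})}\left(\|\boldsymbol{\theta}_{\boldsymbol{n}}\|_2, \boldsymbol{\theta}_n\right)}, \tag{27}$$

and update the learning parameters of the considered shared neural network by $\theta_n = \theta_n + \Delta\theta_n$. Thus, the EV session scheduling policy $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}$ of EVSE-LA $n \in \mathcal{N}$ is determined as a product of the policies of all other agents, $\boldsymbol{\pi}_{\boldsymbol{\theta}_n} = (\boldsymbol{\pi}_{\boldsymbol{\theta}_1} \cdot \boldsymbol{\pi}_{\boldsymbol{\theta}_{n-1}} \cdot \boldsymbol{\pi}_{\boldsymbol{\theta}_{n+1}} \ldots \boldsymbol{\pi}_{\boldsymbol{\theta}_N})$.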

In summary, the expectation of the discounted reward of each EVSE learning agent $n \in \mathcal{N}$ [52] is estimated by (17). An interaction between the RAA (i.e., DSO controller) and each EVSE-LA takes place to calculate each reward $r(\boldsymbol{a}_{n,v'}, \boldsymbol{s}_{n,v'})$ of EVSE learning agent $n \in \mathcal{N}$. In particular, the expected discounted reward (17) is estimated by taking into account the adversarial risk (11) of the DSO controller. Further, during training and execution, the proposed framework minimizes a loss function (24) that combines the value (21), policy (22), and entropy (23) losses. EVSE-LA $n \in \mathcal{N}$ can determine the best scheduling decision by coping with the decisions of the other EVSE-LAs through the shared observational memory $\mathcal{W}$. In other words, each EVSE-LA $n \in \mathcal{N}$ can estimate the policy $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}$ for the next state $\boldsymbol{s}_{n,v'}$ by considering its own EV scheduling decision $\boldsymbol{a}_{n,v'}$ as well as by inferring policies from the observations of the others (i.e., $\mathcal{W}_{n'} \in \mathcal{W}$, $n \neq n'$). Moreover, we deploy a long short-term memory (LSTM)-based shared recurrent neural network (RNN) [55]–[57] that can share the learning weights (i.e., RNN states) among the other EVSE-LAs. As a result, each EVSE-LA $n \in \mathcal{N}$ can capture the time-dependent features from those RNN states to make its own scheduling decision. Here, the gradient of the loss for each self-learning EVSE-LA $n \in \mathcal{N}$ is estimated in (25) by taking into account the observations of the other EVSE agents (i.e., $\Lambda^{\boldsymbol{\pi}_{\boldsymbol{\theta}_n}}(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v} | (\mathcal{W}_1, \mathcal{W}_{n-1}, \mathcal{W}_{n+1}, \ldots, \mathcal{W}_N))$). In particular, each EVSE agent determines its own learning parameters $\boldsymbol{\theta}_n$ in (26). Then, each EVSE agent obtains the changes of the gradient in (27) by utilizing its own parameters (i.e., $\boldsymbol{\theta}_n$) along with the shared neural network parameters $\boldsymbol{\theta}_{\forall n \in \mathcal{N}}$. These changes are reflected in the shared RNN states for estimating the scheduling policies of the other EVSE agents. To this end, the proposed learning mechanism jointly optimizes the loss during training and execution while estimating each reward from a centralized risk adversarial agent (RAA) and its own states. Therefore, the proposed learning framework for the connected and autonomous vehicle charging infrastructure becomes a multi-agent learning system (as shown in Figure 4). The training procedure of the proposed RAMALS is presented in Algorithm 2.

Algorithm 2 receives the output of Algorithm 1, i.e., $\lambda_n^{\text{act}}(t)$, $\lambda_n^{\text{req}}(t)$, and $R_{\alpha}^{\text{CVaR}}(L(\boldsymbol{x}, \boldsymbol{y}))$, as well as the state information of all charging sessions $\forall \boldsymbol{s}_{n,v} \in \mathcal{S}$ of the $N$ EVSEs as input. Lines 2 to 4 calculate the ratio between the actual and requested EV charging rates, the ratio between the actual charging time and the plugged-in time, and the ratio between the actual and requested energy using (7), (8), and (9), respectively. The reward for each charging session is determined in lines 7 to 13 of Algorithm 2. Then, the cumulative discounted reward, optimal value function, and advantage function for the training procedure of the RAMALS are estimated in line 14 using (17), (19), and (20), respectively. Meanwhile, line 15 of Algorithm 2 appends an observational memory that is used as an input of the RNN (i.e., LSTM) to estimate the training loss in line 16. The learning parameters $\boldsymbol{\theta}_n$ and the EV charging policy $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}$ of each EVSE-LA are updated in line 17. In lines 21 to 24 of Algorithm 2, the gradient and parameters of each EVSE-LA are updated by neural network training using the Adam optimizer. Finally, the policy of each EVSE-LA is calculated in line 26. As a result, Algorithm 2 returns a trained model for each EVSE-LA of the considered DSO. In particular, this pre-trained model is executed by each EVSE-LA to determine its own EV charging scheduling policy for the CAV-CI, while the RAMALS model is updated periodically by exchanging RNN parameters between each EVSE-LA and the RAA.

TABLE III: Summary of experimental setup

Description                                                          Value
No. of DSO sites                                                     2
No. of EVSEs for training                                            52
No. of EVSEs for testing                                             92
No. of EV sessions for training                                      3387
No. of EV sessions for execution                                     5246
CVaR significance level                                              [0.9, 0.95, 0.99]
Energy supply capacity at each EVSE $\epsilon_n^{\text{cap}}(t)$     50 kW (125 A) [41]
DSO capacities [Caltech, JPL]                                        [150, 195] kW [19]
No. of episodes for training                                         2000
Learning rate                                                        0.001
Learning optimizer                                                   Adam
Initial discount factor $\gamma$                                     0.9
Initial entropy regularization parameter $\beta$                     0.05
No. of LSTM units in one LSTM cell                                   [48, 64, 128]
Output layer activation                                              Softmax

Note that, in a deep deterministic policy gradient (DDPG) [53] setting, the initial exploration of actions is driven by a random process to receive an initial observation state. In this work, we train the model on the ACN-Data dataset [19], which is collected from a currently deployed system. To do this, we design Algorithm 2 in such a way that the initial exploration of actions comes from data by receiving the observation state from that dataset. Therefore, the state of Algorithm 2 contains all of the variables, including the actual energy $\epsilon_{n,v}^{\text{act}}$ delivered by EVSE $n \in \mathcal{N}$ to EV $v \in \mathcal{V}_n$. Further, during the loss estimation for recurrent neural network training in Algorithm 2 (line 16), the action $\boldsymbol{a}_{n,v}$ is generated by the current policy $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}$ and determines the next state $\boldsymbol{s}_{n,v'}$. In particular, the state $\boldsymbol{s}_{n,v'}$ contains all necessary variables that are obtained from the policy $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}$. We discuss the execution procedure of RAMALS for each EVSE $n \in \mathcal{N}$ in the following section.

C. Execution Procedure of RAMALS for Each EVSE $n \in \mathcal{N}$

Each EVSE $n \in \mathcal{N}$ runs Algorithm 3 individually to decide its own EV (i.e., AV and CV) charging session scheduling policy in the CAV-CI. Algorithm 3 takes the pre-trained RAMALS model as well as the request from the current EV as input. Here, a data-informed laxity CVaR risk assists in determining a rational EV session schedule for AVs and CVs. Line 1 of Algorithm 3 loads the RAMALS model, which initializes the recurrent neural network parameters along with the current charging session policy. Lines 3 to 16 determine the scheduling decision in a control-flow manner. In particular, scheduling decisions are made in line 5, while line 13 determines the new state that results from a scheduled decision. Line 15 prepares the memory of each EV session scheduling decision for further use in Algorithm 3. Each EV session scheduling policy is executed by EVSE $n \in \mathcal{N}$ in line 17. Additionally, the allocated charging time of each EV is calculated by considering the switching time $switching$ of the EVSE. Each EVSE-LA is updated periodically by exchanging information with the RAA, as represented in line 19 of Algorithm 3.

Algorithm 3 RAMALS Execution Procedure for Each EVSE $n \in \mathcal{N}$ in CAV-CI

Input: $\boldsymbol{s}_{n,v} : (\epsilon_{n,v}^{\text{req}}, \tau_{n,v}^{\text{req}}, \tau_{n,v}^{\text{strt}}, \tau_{n,v}^{\text{end}}, \tau_{n,v}^{\text{uplg}}, \epsilon_{n,v}^{\text{act}})$, RAMALS_model, $R_{\alpha}^{\text{CVaR}}(L(\boldsymbol{x}, \boldsymbol{y}))$
Output: $a_{n,v}$, $\epsilon_{n,v}^{\text{act}}$, $\lambda_n^{\text{act}}(t)$, $\tau_{n,v}^{\text{act}}$
Initialization: $\boldsymbol{w}_{n,v}$, $\boldsymbol{\Psi}_n$, $\boldsymbol{\eta}_{n,v} : (\Upsilon_{n,v}, 1 - \Upsilon_{n,v})$, $\gamma \in (0, 1)$, $\pi$, $\theta$, $\beta$, $switching$
1: $\boldsymbol{\pi}_{\boldsymbol{\theta}_n}, \boldsymbol{\theta}_n, \boldsymbol{\Psi}_n$ = run.Session(RAMALS_model)
2: for Until: $t \leq T$ do
3:   for $\forall v \in \mathcal{V}_n$ do
4:     $\boldsymbol{a}_{n,v} = \arg\max(\boldsymbol{\pi}_{\boldsymbol{\theta}_n})$
5:     Find: $a_{n,v}$ using (10)
6:     $\eta_{n,v}(a_{n,v}) = \arg_{a_{n,v} \in \boldsymbol{a}_{n,v}}(\boldsymbol{\eta}_{n,v})$
7:     if $\eta_{n,v}(a_{n,v}) \geq \eta_{n,v'}(a_{n,v})$ then
8:       $a_{n,v'} = 1$ {Scheduled}
9:     else
10:      $a_{n,v'} = 0$ {Queue}
11:    end if
12:    if $a_{n,v'} == 1$ then
13:      $\boldsymbol{s}_{n,v'}$ = findNewState($a_{n,v'}$)
14:    end if
15:    Append: $\boldsymbol{w}_{n,v} : (\boldsymbol{a}_{n,v}, R_n(\boldsymbol{s}_{n,v}, \boldsymbol{a}_{n,v}), v, \boldsymbol{\Psi}_n)$, $\forall \boldsymbol{w}_{n,v} \in \mathcal{W}_n$, $a_{n,v'} \in \boldsymbol{a}_{n,v}$
16:  end for
17:  Scheduling: $a_{n,v'}$, $\epsilon_{n,v'}^{\text{act}}$, $\lambda_n^{\text{act}}(t)$, $\tau_{n,v'}^{\text{act}} + switching$
18: end for
19: Periodic Update: RAMALS_model using Algorithm 2
20: return $a_{n,v'}$, $\epsilon_{n,v'}^{\text{act}}$, $\lambda_n^{\text{act}}(t)$, $\tau_{n,v'}^{\text{act}}$, $\forall v \in \mathcal{V}_n$
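The binary decision of (10), which line 5 of Algorithm 3 relies on, can be illustrated with a short Python sketch. It assumes that the probability of a rational scheduling decision is already available for every session (in the proposed system it would come from the trained policy); the function names and the tie-breaking rule toward scheduling are assumptions, and the comparison of demand-supply ratios in lines 6 to 11 is not reproduced.

```python
def binary_decision(indicator) -> int:
    """Eq. (10): return 1 when index 0 of the indicator vector is the arg max."""
    p_sched, p_queue = indicator
    # Ties resolve to index 0, mirroring a first-maximum arg max.
    return 1 if p_sched >= p_queue else 0


def split_sessions(p_rational):
    """Split session indices into scheduled and queued lists."""
    scheduled, queued = [], []
    for v, p in enumerate(p_rational):
        decision = binary_decision((p, 1.0 - p))
        (scheduled if decision == 1 else queued).append(v)
    return scheduled, queued


# Illustrative probabilities only.
print(split_sessions([0.8, 0.3, 0.5]))
```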

In this work, our aim is to solve the formulated problem (12) in a data-informed manner. In particular, the EV charging session scheduling decision of each EVSE is obtained by taking into account both the intuition and the behavior of the data. Therefore, to handle the constraints of the formulated rational decision support system (RDSS) (i.e., (12)) in the data-informed approach, we design a centralized risk adversarial agent for estimating the laxity risk of the DSO and a dedicated reward calculation function for the EVSE agents. Thus, all of the constraints of problem (12) are taken into account before policy learning. In particular, constraint (12a) is executed in Algorithm 1 during the laxity risk estimation based on the linear model. Constraints (12b) to (12e) are reflected in (11) during the reward calculation of the EVSE agents. To this extent, constraint (12b) is related to the average energy demand rate (1), the actual charging rate (2), and the ratio between the actual and requested energy rates (7), where (1) and (2) are executed in Algorithm 1 while (7) is determined by Algorithm 2 to calculate each reward (i.e., (11)). Constraint (12c) is associated with the delivered-to-requested energy ratio $\Upsilon_{n,v}$ for each EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$ (i.e., (9)). This ratio is used to determine the scheduling indicator vector $\boldsymbol{a}_{n,v} := (P(\Upsilon_{n,v}), 1 - P(\Upsilon_{n,v})) \in \mathbb{R}^2$, $\boldsymbol{a}_{n,v} \in \mathcal{A}_n \in \mathbb{R}^{|\mathcal{V}_n| \times 2}$, for EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$, where $P(\Upsilon_{n,v})$ represents the probability of a rational EV scheduling decision, and each EV charging decision is then obtained by (10). Thus, constraint (12c) is fulfilled by Algorithm 2 during the reward calculation. Further, constraints (12d) and (12e) of the formulated problem (12) are directly reflected in the reward calculation (11) of EV $v \in \mathcal{V}_n$ at EVSE $n \in \mathcal{N}$ through the ratio between the actual charging time and the total plugged-in time in (8). These constraints are addressed in Algorithm 2 during the reward calculation of EV $v \in \mathcal{V}_n$, before the policy learning procedure starts. Finally, we consider each EV charging session as a single state during training and execution in Algorithms 2 and 3, respectively. Therefore, constraints (12f) and (12g) are satisfied since each EV charging session is either scheduled or put in a queue during the learning and execution of Algorithms 2 and 3. As a result, the proposed risk adversarial multi-agent learning framework takes all of the constraints into account during the laxity risk estimation by the risk adversarial agent and the reward estimation by each EVSE learning agent. In particular, these constraints are satisfied before the policy learning procedure starts.

V. EXPERIMENTAL RESULTS AND ANALYSIS

For our experiment, we consider the ACN-Data dataset [19] for the period from October 16, 2019 to December 31, 2019 to evaluate the significance of the proposed RAMALS. In particular, we train the RAMALS using the JPL site's EVSE data of ACN-Data [19] and test it on both the JPL and Caltech sites to establish the effectiveness of the proposed scheme. We divide our experiment into two parts: 1) training, and 2) execution performance analysis. We consider the ACN-Data dataset [19] as the first baseline since this dataset is collected from a currently deployed real EV charging system. Further, a neural advantage actor-critic (A2C) [58] framework is taken as a centralized baseline method to effectively compare the proposed RAMALS under the same reward structure (11) and neural network settings. Additionally, an asynchronous advantage actor-critic (A3C) [52], [54]-based multi-agent cooperative model is considered to compare the proposed data-informed RAMALS with a decentralized scheme. We present a summary of the experimental setup in Table III. We implement the proposed risk adversarial multi-agent learning system and the other baselines using Python 3.5.3 on a desktop computer (i.e., a Core i3-7100 CPU with a speed of 3.9 GHz along with 16 GB of main memory). In particular, we design an asynchronous mechanism for all EVSE learning agents (i.e., $\forall n \in \mathcal{N}$) and a centralized risk adversarial agent to manage the training and execution procedures in a realistic and effective manner. Besides, we use the SciPy [59], scikit-learn [60], and TensorFlow [61] APIs for scientific computing, predictive data analysis, and recurrent neural network design in the implementation.

Fig. 5: Risk adversarial cumulated rewards during the training using JPL EVSE data from October 16, 2019 to December 31, 2019.

A. RAMALS Training Procedure Evaluation for DSO

Risk adversarial cumulated rewards during the training are presented in Figure 5. Figure 5 shows that the proposed method converges faster than the A3C framework, reaching convergence at around 600 episodes. In contrast, the A3C framework does not converge within the entire 2000 episodes, and there is a large gap (i.e., more than 34%) between the cumulated rewards of the proposed RAMALS and A3C. Meanwhile, the proposed RAMALS achieves almost the same cumulated rewards as the centralized neural advantage actor-critic (A2C) model. Thus, the training performance (i.e., convergence and cumulated rewards) of the proposed RAMALS is almost the same as that of a centralized approach. However, the proposed RAMALS needs only a small amount of information exchange through the communication network, while the centralized approach requires a huge amount of data to train the model.

In Figure 6, we illustrate the training performance withrespect to training losses. In particular, Figures 6a, 6b, and 6crepresent value loss, policy loss, and entropy loss during thetraining process of the proposed RAMALS along with base-lines. In Figure 6a, the value loss of A3C-based frameworkless than the proposed RAMALS and centralized approachdue to less reward gain during the training. However, valueloss of A3C-based framework does not converge upto 2000episodes during the training. On the other hand, the valueloss of the proposed RAMALS converges after around 150episodes while centralized A2C converges after 550 episodesduring the training in Figure 6a. Thus, the proposed RAMALSperforms significantly better than other baselines in terms ofpolicy loss. In case of the policy loss (in Figure 6b), theperformance of the proposed RAMALS is almost identical asthe value loss. To capture the uncertainty, we model the pro-posed RAMALS along with other baselines by incorporating

(a) Value loss analysis during the training with JPL EVSE data.

(b) Policy loss analysis during the training with JPL EVSE data.

(c) Entropy loss analysis during the training with JPL EVSE data.

Fig. 6: RAMALS training loss analysis with baselines using the ACN-Data dataset, JPL site [19].

entropy regularization. Figure 6c describes the entropy loss analysis during the training and shows that the proposed RAMALS performs better than the other baselines. In particular, RAMALS has less variation in entropy loss across training episodes than the other baselines. Figure 6c also shows many spikes for all three methods because of the uncertain charging scheduling requests by the electric CVs and AVs over time. Therefore, this variation in entropy loss is expected and acceptable when charging demand is random over time. Overall, the proposed RAMALS performs better during training than the other baselines in terms of losses and convergence.
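
The three curves in Figure 6 correspond to the usual terms of an entropy-regularized actor-critic objective. The following is a minimal sketch of how such terms are commonly computed for a single transition. The function name `actor_critic_losses`, the coefficient `beta`, and the single-step form are illustrative assumptions, not the exact loss used in RAMALS.

```python
import math

def actor_critic_losses(action_probs, action, ret, value, beta=0.01):
    """Loss terms for one transition of a generic advantage actor-critic.

    action_probs: policy probabilities over the discrete actions (hypothetical)
    action:       index of the action actually taken
    ret:          observed (discounted) return
    value:        critic's value estimate for the state
    beta:         entropy-regularization weight (assumed value)
    """
    advantage = ret - value
    value_loss = 0.5 * advantage ** 2
    # The advantage is treated as a constant when differentiating this term.
    policy_loss = -math.log(action_probs[action]) * advantage
    entropy = -sum(p * math.log(p) for p in action_probs if p > 0.0)
    # Subtracting the entropy bonus rewards policies that keep exploring.
    total = policy_loss + value_loss - beta * entropy
    return value_loss, policy_loss, entropy, total

# Example: a uniform policy over four actions, return 1.0, value estimate 0.5.
v_loss, p_loss, ent, total = actor_critic_losses([0.25, 0.25, 0.25, 0.25], 2, 1.0, 0.5)
```

With a uniform policy the entropy term is at its maximum, so noisy charging requests that keep the policy spread out would be expected to show up as spikes in an entropy curve of this kind.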

In Figure 7, we present the impact of the number of recurrent neural network units (i.e., LSTM units) in each LSTM cell on the risk adversarial cumulated rewards during the proposed RAMALS training. In particular, we choose 48, 64, and 128 LSTM units for each LSTM cell to examine the achieved risk adversarial cumulated rewards during the training. In


Fig. 7: Risk adversarial cumulated rewards variation analysis based on number of LSTM units in each LSTM cell during the training using JPL EVSE data.

Fig. 8: EV charging rate comparison of JPL and Caltech EVSE sites.

Figure 7, the changes in risk adversarial cumulated rewards are minimal across the different numbers of LSTM units (i.e., 48, 64, and 128) in each LSTM cell. Moreover, an LSTM cell that consists of 64 LSTM units performs better than one with 48 or 128 LSTM units with respect to convergence, as seen in Figure 7. Thus, in the proposed RAMALS neural architecture, we select 64 LSTM units in each LSTM cell, which reduces computational complexity and ensures smooth convergence during training.
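
To make the computational-complexity argument concrete, the sketch below counts trainable weights for the three candidate sizes, assuming the standard LSTM formulation with four gates and one bias vector per gate. The input dimension `INPUT_DIM` is a hypothetical value chosen only for illustration, since the per-step feature size is not restated here.

```python
def lstm_param_count(units, input_dim):
    """Trainable weights in one standard LSTM layer (4 gates, one bias per gate)."""
    return 4 * (units * (units + input_dim) + units)

INPUT_DIM = 8  # hypothetical number of input features per time step

for units in (48, 64, 128):
    print(units, lstm_param_count(units, INPUT_DIM))
```

Because the recurrent weights grow quadratically with the number of units, moving from 64 to 128 units roughly quadruples the parameter count under these assumptions.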

Next, we analyze the execution performance of the proposed RAMALS for EVSEs with respect to charging rate, charging efficiency, active charging time, and energy utilization during charging scheduling in the following section.

B. RAMALS Execution Performance Evaluation for EVSEs

We illustrate an analysis of the EV charging rate improvement to validate the execution performance of the proposed RAMALS in Figure 8. In particular, we have executed the trained RAMALS model on the JPL and Caltech EVSE sites and compared it with the other baselines. For the JPL site, the charging rate increases by around 46.6%, 39.6%, and 33.3% compared to the A3C-based framework (i.e., decentralized), the A2C-based model (i.e., centralized), and ACN-data (i.e., currently

Fig. 9: An illustration of active EV charging time improvement for JPL EVSE site from October 16, 2019 to December 31, 2019.

Fig. 10: EV assignment efficiency comparison of JPL and Caltech EVSE sites.

operating), respectively. Similarly, the proposed RAMALS improves the EV charging rate for the Caltech EVSE site by about 71.4%, 40%, and 46.6% as compared with the A3C-based framework, the A2C-based model, and ACN-data, respectively, as seen in Figure 8. This significant improvement in the charging rate is due to rational scheduling among the CV-EVs and AV-EVs in the CAV-CI by the RAMALS. Further, the RAMALS can ensure a rational decision support system for improving the charging capacity of the EVSE sites through data-informed scheduling that significantly reduces the idle charging time of each EVSE.

We present a comparison based on the total active EV charging time of the JPL EVSE site from October 16, 2019 to December 31, 2019 in Figure 9. In particular, Figure 9 illustrates the total active charging time of the 52 EVSEs at the JPL EVSE site. Figure 9 shows that the active charging time can be improved by 8 to 40 hours over the currently deployed scheme for the JPL EVSE site. The execution performance of the A3C-based framework and the A2C-based scheme is lower than that of the proposed RAMALS, since the RAMALS can handle uncertain EV charging demand during the scheduling of each EVSE by employing data-informed rational decisions from its


Fig. 11: Energy usage improvement of EVSEs (x-axis represents each EVSE) at the JPL site from October 16, 2019 to December 31, 2019.

Fig. 12: Energy usage improvement of EVSEs (x-axis represents each EVSE) at the Caltech site from October 16, 2019 to December 31, 2019.

prior knowledge. Overall, the proposed RAMALS can significantly improve (i.e., by around 28.6%) the active charging time of each EVSE in CAV-CI. This performance gain of each EVSE directly reflects the scheduling efficiency of the EVSE sites in CAV-CI.

Therefore, in Figure 10, we demonstrate the EV assignment efficiency by scheduling 5246 EV charging session requests, where 3387 and 1859 sessions are considered for the JPL and Caltech sites, respectively. Figure 10 shows that the proposed RAMALS can achieve a significant performance gain


for fulfilling the EV charging requests from both human-driven and autonomous connected vehicles in CAV-CI. In particular, the RAMALS can serve around 95% of EV charging session requests and fulfill the EV charging demand for both CVs and AVs in the considered CAV-CI. In contrast, the currently deployed charging system of the ACN infrastructure can fulfill only 70% and 61% of the charging demand by the EVs (as seen in Figure 10) due to irrational scheduling among the human-driven and autonomous EVs. Specifically, the charging session duration and the amount of energy are allocated under the influence of human-driven EVs' requests. The performance of the other baselines does not match the performance of the current ACN system. Moreover, the performance gap is almost half as compared to the proposed RAMALS due to a lack of adaptivity when the environment is unknown and random over time.
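
The percentages above can be turned into approximate session counts with simple arithmetic. The sketch below does so under two stated assumptions: that the 70% and 61% figures refer to the JPL and Caltech sites in that order, and that the "around 95%" figure applies to the combined request set. The resulting counts are therefore rounded illustrations rather than reported results.

```python
JPL_SESSIONS = 3387
CALTECH_SESSIONS = 1859
TOTAL_SESSIONS = JPL_SESSIONS + CALTECH_SESSIONS  # 5246, as stated in the text

def served(sessions, percent):
    """Approximate number of sessions served at a given fulfillment percentage."""
    return round(sessions * percent / 100)

ramals_served = served(TOTAL_SESSIONS, 95)
# Assumption: 70% refers to the JPL site and 61% to the Caltech site.
acn_served = served(JPL_SESSIONS, 70) + served(CALTECH_SESSIONS, 61)
# Percentage-point gaps between RAMALS and the deployed system at each site.
gap_points = (95 - 70, 95 - 61)
```

Under these assumptions the larger of the two gaps is 34 percentage points, which is consistent with the fulfillment improvement quoted in the conclusion.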

Finally, we show the efficacy of the proposed RAMALS in Figures 11 and 12 in terms of the total amount of energy utilization by the JPL and Caltech EVSE sites, respectively. In Figures 11 and 12, the x-axis represents each EVSE of the JPL and Caltech EVSE sites, respectively, while the y-axis shows the total amount of energy used by each EVSE for scheduled EV charging from October 16, 2019 to December 31, 2019. The proposed RAMALS can utilize 28.2% more energy for the JPL site (Figure 11), while the Caltech site achieves a performance gain of around 33.3% on average, since a data-informed decision support system can provide rational scheduling for the CVs and AVs. To this end, the DSO can capture imitated knowledge from each EVSE based on the charging session request behavior of each EV and provide an adaptive charging session policy to each EVSE (i.e., each agent). Therefore, the charging session policy of each EVSE is enhanced by its own self-learning capabilities during the execution of RAMALS. As a result, the proposed RAMALS can provide an efficient way of supporting connected and autonomous vehicle charging infrastructure in the era of intelligent transportation systems.

VI. CONCLUSION

In this paper, we have studied a challenging rational EV charging scheduling problem of EVSEs for the DSO in CAV-CI. We have formulated a rational decision support optimization problem that attempts to maximize the utilization of EV charging capacity in CAV-CI. To solve this problem, we have developed a novel risk adversarial multi-agent learning system for connected and autonomous vehicle charging that enables each EVSE to adopt a charging session policy based on the adversarial risk parameters from the DSO. In particular, the proposed RAMALS handles the irrational charging session requests by human-driven connected electric vehicles in CAV-CI by coping with a laxity risk analysis in a data-informed manner. Experiment results have shown that the proposed RAMALS can efficiently overcome the problem of irrational EV charging scheduling of EVSEs for the DSO in CAV-CI by utilizing 28.6% more active charging time. Additionally, the EV charging rate has improved by around 46.6%, while EV charging session fulfillment has increased by 34% compared with the currently deployed system and other baselines.

APPENDIX A
LAXITY RISK DERIVATION BASED ON STUDENT'S 𝑡-DISTRIBUTION

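We recall the probability density function $P_{\omega}(b)$ in (15) of the standardized Student's $t$-distribution and let $M = \frac{\Gamma\left(\frac{\omega+1}{2}\right)}{\Gamma\left(\frac{\omega}{2}\right)\sqrt{\pi\omega}}$, $m = \frac{1}{\omega}$, and $c = -\left(\frac{\omega+1}{2}\right)$. Therefore, we can reduce the PDF (15) as follows:

$$P_{\omega}(b) = M\left(1 + m b^{2}\right)^{c}. \tag{28}$$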

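Since we can derive (6) as $R^{\mathrm{CVaR}}_{\alpha}(L(\boldsymbol{x},\boldsymbol{y})) = R^{\mathrm{CVaR}}_{\alpha}(D) = -\mathbb{E}[(D \mid D < b)] = -\frac{1}{\alpha}\int_{-\infty}^{b_{\alpha}} b\,P_{\omega}(b)\,db$, the laxity risk is defined as follows:

$$\begin{aligned}
R^{\mathrm{CVaR}}_{\alpha}(L(\boldsymbol{x},\boldsymbol{y})) &= -\frac{1}{\alpha}\int_{-\infty}^{b} b\,P_{\omega}(b)\,db \\
&= -\frac{1}{\alpha}\int_{-\infty}^{b} b\,M\left(1 + m b^{2}\right)^{c}\,db \\
&= -\frac{M}{\alpha}\int_{-\infty}^{b} b\left(1 + m b^{2}\right)^{c}\,db.
\end{aligned} \tag{29}$$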

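Let us consider $z = 1 + m b^{2}$, where $dz = 2mb\,db$ and hence $db = dz/(2mb)$. Since $z \to \infty$ as $b \to -\infty$, (29) can be written as,

$$R^{\mathrm{CVaR}}_{\alpha}(L(\boldsymbol{x},\boldsymbol{y})) = -\frac{M}{\alpha}\int_{\infty}^{C} \frac{b\left(1 + m b^{2}\right)^{c}}{2mb}\,dz, \tag{30}$$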

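where $z = 1 + m b^{2}$ and $m$ are known, while we denote $C = 1 + \frac{1}{\omega}b^{2}$. Then we can redefine (30) as,

$$\begin{aligned}
R^{\mathrm{CVaR}}_{\alpha}(L(\boldsymbol{x},\boldsymbol{y})) &= -\frac{M}{2m\alpha}\int_{\infty}^{C} z^{c}\,dz \\
&= -\frac{M}{2m\alpha}\,\frac{C^{c+1}}{(c+1)} \\
&= -\frac{M}{2\alpha\frac{1}{\omega}}\,\frac{2C^{\frac{(1-\omega)}{2}}}{(1-\omega)},
\end{aligned} \tag{31}$$

where $c + 1 = -\frac{(\omega+1)}{2} + 1 = \frac{(1-\omega)}{2}$, and the contribution of the lower limit vanishes because $c + 1 < 0$ for $\omega > 1$.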

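Now we apply $C = 1 + \frac{1}{\omega}b^{2}$ and we get,

$$\begin{aligned}
P_{\omega}(b) &= M\left(1 + \frac{1}{\omega}b^{2}\right)^{c} = M C^{c}, \\
M &= P_{\omega}(b)\,C^{-c} = P_{\omega}(b)\,C^{\frac{(1+\omega)}{2}}.
\end{aligned} \tag{32}$$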

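We can rewrite (31) by applying (32) as follows:

$$\begin{aligned}
R^{\mathrm{CVaR}}_{\alpha}(L(\boldsymbol{x},\boldsymbol{y})) &= -\frac{1}{\alpha}\,\frac{P_{\omega}(b)\,C^{\frac{(1+\omega)}{2}}}{2\frac{1}{\omega}}\,\frac{2C^{\frac{(1-\omega)}{2}}}{(1-\omega)} \\
&= -\frac{1}{\alpha}\,P_{\omega}(b)\,\omega\,\frac{1}{(1-\omega)}\,C \\
&= -\frac{1}{\alpha}\,\omega\,\frac{1}{(1-\omega)}\left(1 + \frac{1}{\omega}b^{2}\right)P_{\omega}(b) \\
&= -\frac{1}{\alpha}\,\frac{1}{(1-\omega)}\left(\omega + b^{2}\right)P_{\omega}(b) \\
&= \frac{-1}{\alpha(1-\omega)}\left(\omega + b^{2}\right)P_{\omega}(b)\,\sigma`.
\end{aligned} \tag{33}$$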
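
As a numerical sanity check of the closed form in (33), the sketch below compares it against direct quadrature of the tail expectation for the standardized Student's $t$ density. It uses only the Python standard library. The values $\omega = 5$ and $b = -2$, the helper names, and the choice of treating the trailing scale factor in (33) as 1 are assumptions made for illustration.

```python
import math

def t_pdf(b, omega):
    """Standardized Student's t density, written as M * (1 + m*b^2)^c as in (28)."""
    M = math.gamma((omega + 1) / 2) / (math.gamma(omega / 2) * math.sqrt(math.pi * omega))
    m = 1.0 / omega
    c = -(omega + 1) / 2
    return M * (1 + m * b * b) ** c

def simpson(f, lo, hi, n=20000):
    """Composite Simpson rule on [lo, hi] with an even number n of subintervals."""
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(lo + i * h)
    return total * h / 3

def left_tail_integral(g, b):
    """Integrate g over (-inf, b] using the substitution x = b - (1 - u) / u."""
    def mapped(u):
        if u == 0.0:
            return 0.0  # the integrands used here vanish as x -> -inf
        x = b - (1 - u) / u
        return g(x) / (u * u)
    return simpson(mapped, 0.0, 1.0)

def cvar_closed_form(b, omega, alpha):
    """Closed form of (33) with the trailing scale factor taken as 1 (assumption)."""
    return -1.0 / (alpha * (1 - omega)) * (omega + b * b) * t_pdf(b, omega)

omega, b = 5.0, -2.0  # hypothetical degrees of freedom and tail threshold
alpha = left_tail_integral(lambda x: t_pdf(x, omega), b)  # tail probability at b
cvar_numeric = -left_tail_integral(lambda x: x * t_pdf(x, omega), b) / alpha
cvar_closed = cvar_closed_form(b, omega, alpha)
```

Here `alpha` is obtained from the chosen threshold `b` rather than the other way around, which avoids needing an inverse CDF. The two values `cvar_numeric` and `cvar_closed` should agree to within quadrature error when $\omega > 1$.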


APPENDIX B
TYPES OF THE VEHICLE IN THE CONTEXT OF THE EV CHARGING SYSTEM

Autonomous Vehicle (AV): An electric vehicle that can sense its surroundings and move safely with little or no human intervention is considered an autonomous vehicle [3], [5], [6], [16]–[18]. An AV is equipped with high computational power and large data storage to make autonomous decisions by itself. An AV can estimate and request an exact amount of energy from the electric vehicle supply equipment (EVSE) based on its own analysis and can maneuver to the DSO-selected EVSE for charging.

Human-driven Connected Vehicle (CV): A connected vehicle is a human-driven electric vehicle that uses wireless connectivity to communicate with other vehicles and infrastructure [17], [18]. Thus, human interaction is required for requesting energy demand. Therefore, a CV is not guaranteed to request an exact amount of energy and charging time for vehicle charging.

Connected and Autonomous Vehicle (CAV): The connected and autonomous vehicle (CAV) [3], [5], [6], [16]–[18] category includes both types of electric vehicles: 1) connected vehicle (CV) (i.e., human-driven), and 2) autonomous vehicle (AV). Both AVs and CVs are connected wirelessly (e.g., via LTE and 5G) with the connected and autonomous vehicle infrastructure. However, in CAV-CI, the charging behaviors of CV and AV electric vehicles are distinct from each other [17], [18].

REFERENCES

[1] W. Saad, M. Bennis, and M. Chen, “A vision of 6G wireless systems: Applications, trends, technologies, and open research problems,” IEEE Network, vol. 34, no. 3, pp. 134–142, 2020.

[2] A. Ferdowsi, U. Challita, and W. Saad, “Deep learning for reliable mobile edge analytics in intelligent transportation systems: An overview,” IEEE Vehicular Technology Magazine, vol. 14, no. 1, pp. 62–70, 2019.

[3] H. Qi, R. Dai, Q. Tang, and X. Hu, “Coordinated intersection signal design for mixed traffic flow of human-driven and connected and autonomous vehicles,” IEEE Access, vol. 8, pp. 26067–26084, 2020.

[4] N. Lu, N. Cheng, N. Zhang, X. Shen, and J. W. Mark, “Connected vehicles: Solutions and challenges,” IEEE Internet of Things Journal, vol. 1, no. 4, pp. 289–299, August 2014.