Joint Optimization of Cascaded Classifiers for Computer Aided Detection by M.Dundar and J.Bi Andrey...

-

Upload

scot-jefferson -

Category

Documents

-

view

220 -

download

0

description

Transcript of Joint Optimization of Cascaded Classifiers for Computer Aided Detection by M.Dundar and J.Bi Andrey...

“Joint Optimization of Cascaded Classifiers for Computer Aided

Detection” by M.Dundar and J.Bi

Andrey KolobovBrandon Lucia

Talk OutlinePaper summaryProblem statementOverview of cascade classifiers and current

approaches for training themHigh-level idea of AND-OR framework Gory math behind AND-OR frameworkExperimental resultsDiscussion

Paper summary

• Proposes procedure for offline joint learning of cascade classifiers

• Resulting classifier is tested on polyp detection from computed tomography images

• Resulting cascade classifier is more accurate than cascade AdaBoost, on par with SVM, and faster than either

Problem statement• Polyp detection in a CT

image• Methodology: Identify candidate

structures (subwindows)

Compute features of candidate structures

Classify candidates

Cascade classifiers

A digression to previous work...

Why use cascades in the first place?

We motivate their use with Paul Viola and Michael Jones' 2004 work on detecting faces.

(Also, this is more vision-related)

Viola & Jones Face Detection Work

• Used cascaded classifiers to detect faces• To show why cascades are useful, evaluated

one big (200 feature) classifier vs. 10 20-feature classifiers– 5000 faces, 10000 non-faces– Stage n trained on faces + FP of stage n-1– Monolithic trained on union of all sets used to train

each stage in cascade

Viola & Jones Face Detection Work

• Monolithic vs. Cascade similar in accuracy

• Cascade is ~10 times faster– Eliminate FPs early –

later stages don't think about them.

Viola & Jones Face Detection Work

• In a small experiment, even the big classifier works– This paper claims that the big classifier only works

w/ ~10,000 (“maybe ~100,000”) negative examples– Cascaded version sifts through hundreds of

millions of negatives, since many are pared off at each stage.

Viola & Jones Face Detection Work• In a bigger experiment, they show that their 38

classifier cascade approach is 600 times faster than previous work. – Take that, previous work. (Schneiderman & Kanade,

2000)• The Point: Cascades eliminate candidates early on, so

later stages with higher complexity have to evaluate fewer candidates.

Cascade classifiers• Advantages over monolithic classifiers: faster

learning and smaller computation time• Key insight: a small number of features can reject a

big number of false candidates• To train Ci use set Ti of examples that passed

previous stages• Low false negative rate is critical at all stages

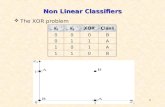

Example: cascade training with AdaBoost

• Given: set H = {h1, …, hM} of single-feature classifiers• Goal: construct cascade C1, …, CN s.t. Ci is a weighted

sum of an mi-subset of H, mi << M• Train Ci with AdaBoost to select an mi-subset using

examples that passed through previous cascade stages.• Optimal mi and N are very hard to find. They are set

empirically. Each stage is trained to achieve a some false positive rate FPi and/or false negative rate FNi

Drawbacks of modern cascade training

• Greedy; classifier at stage i is optimal for this stage but not globally.

• Drawback of AdaBoost cascade training: feature computational complexity is ignored, leading to inefficiency in early stages.

Proposition: AND-OR training• Each stage is trained for optimal system, not stage,

performance• All examples are used for training every stage• The stage classifier complexity increases down the

cascade• Parameters of different stage classifiers may be

adjusted throughout the training process, depending on how other classifiers are doing

• Motivation: a negative example is classified correctly iff it’s rejected by at least one stage (OR); a positive one must pass all stages (AND)

Proposition: AND-OR training

Review of Hyperplane Classifiers w/ Hinge Loss

Hinge Loss

If hinge loss is 0, correct classification.

If hinge loss > 0, incorrect classification.

Goal: Minimize Hinge Loss.

J (α) = Φ(α) + ∑Li=1

wi (1 − αT yi xi)+*

Min: J (α) = Φ(α) + ∑Li=1

wi E

E ≥ (1 − αT yi xi), E ≥ 0 *there are L pairs {xi,yi} of training data

Sequential Cascaded Classifiers So all that pertains to one classifier on its own.

Cascaded Version, w/ k classifiers. (xi gets split into k non-overlapping

subvectors)

– Subvectors ordered by computational complexity

For αk, J(αk) = Φ(αk) + ∑Li=1

wi (1 − αkT yi xik)+ if i is in

Tk-1

T is set of “yes”'s from previous classifiers.

Cut as many “no”'s as possible, leave the rest for the rest of the α's

AND-OR Cascaded Classifiers Everything at once – same starting pt. for each

J(α1, ... ,αK) = ∑Kk=1Φk(α) + ∑

i in F (1 − αkT yi xik)+ + ∑i in Tmax(0,(1

− α1T yi xi1), ... , (1 − αk

T yi xik))k=1

K

AND-OR Cascaded Classifiers Everything at once – same starting pt. for each

J(α1, ... ,αK) = ∑Kk=1Φk(α) + ∑

i in F (1 − αkT yi xik)+ + ∑i in Tmax(0,(1

− α1T yi xi1), ... , (1 − αk

T yi xik))k=1

K

“AND” “OR”Regularization Terms

This is the training cost function.

Optimizing Cascaded Classifiers New minimization (similar to the first one)

For each k in K, fix all (αj) | j ≠ k

Minimize Φk(α) +(∑ i in F wiEi) + (∑

i in T Ei)

Ei ≥ 1 − αkT yi xik, Ei ≥ 0

Ei ≥ max(0,(1 − α1T yi xi1),...,(1 − αk-1

T yi xik-1),

(1 − αk+1T yi xik+1),...,(1 − αK

T yi xiK))

all but αk are not variable, so this is easy (linear prog./quadratic prog.

solver)

This subproblem is convex.

Cyclic Optimization Algorithm 0.Initialize all αk to αk

0 using init. training dataset.

1.for all k: fix all αi, i != k, minimize eq. on prev. slide.

2.Compute J(α1, ... αk ... αK) using αkc instead of αk

c-1

3.If Jc has improved enough, or we've run long enough, stop, otherwise, go back to step 1.

Convergence Analysis They show that they are globally convergent to the set of

sub-optimal solutions

Using magic, and a theorem by two people named Fiotot, and Huard.

• Idea behind proof: Since we fix all vars but one, and minimize, the sequence of solutions is a decreasing sequence, with a lower bound of zero – so we converge to local min. or 0. We threshold and limit iterations, so we could hit a flat spot or just fall short.

Evaluation

• Application: polyp detection in computed tomography images. Important because polyps are an early stage of cancer

• Training set: 338 volumes (images), 88 polyps, 137.3 false positives per volume

• Test set: 396 volumes, 106 polyps, 139.4 false positives per volume

• Goal: reduce false positives per volume (FP/vol) to 0-5

Evaluation (cont’d)

• 46 features per candidate object• Comparison of AdaBoost-trained cascade, single-

stage SVM, and AND-OR trained cascade.• Features were split into 3 sets, in the increasing

order of complexity• 3-stage AND-OR classifier was built.• AdaBoost classifier was built in 3 phases, where

phase n used feature sets 1 through n for training.

Evaluation (cont’d)

• Sensitivity thresholds for AdaBoost were 0 missed polyps for phase 1, 2 for phase 2, and 1 for phase 3

• Number` of stages in each phase was picked to satisfy these thresholds.

Results

Results (cont’d)

• Cascade AdaBoost - 118 CPU secs • Cascade AND-OR – 81 CPU secs• SVM – 286 CPU secs• Cascade AND-OR is as accurate as SVM and

30% better than cascade AdaBoost

Discussion• Other methods of solving the optimization• How to assign cascade parameters

– How are feature sub-vectors sorted– heuristic? What is “ordered by computational complexity”?

• Speed increase was more prominent result, but hardly mentioned next to discussion of false positives.

• Generally better to use parallel cascades?