JLargeMFE MT Notes Wks1-4

Transcript of JLargeMFE MT Notes Wks1-4

-

MFE Financial Econometrics

Michaelmas Term 2011

Weeks 1 - 4

Jeremy Large and Neil Shephard

Oxford-Man Institute of Quantitative Finance, University of Oxford

September 27, 2011

-

2

-

Contents

1 Probability 5

1.1 Basic probability . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.1.1 Reading . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

1.1.2 Sample spaces, events and axioms . . . . . . . . . . . . . . . . . . . . . . 6

1.1.3 Independence . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

1.1.4 Conditional Probability . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

1.2 Random variables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.2.1 Basics . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.2.2 Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

1.2.3 Random walk . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

1.2.4 Distribution functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

1.2.5 Quantile functions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

1.2.6 Some common random variables . . . . . . . . . . . . . . . . . . . . . . . 15

1.2.7 Multivariate random variables . . . . . . . . . . . . . . . . . . . . . . . . . 19

1.2.8 Moments . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

1.2.9 Covariance matrices . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

1.2.10 Back to distributions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 33

1.2.11 Conditional distributions . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

1.2.12 Bayes theorem . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

1.3 Estimators . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

1.3.1 Introduction . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

1.3.2 Bias and mean square error of estimators . . . . . . . . . . . . . . . . . . 45

1.3.3 Histogram . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

1.3.4 Bayes theorem* . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

1.4 Simulating random variables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 46

1.4.1 Pseudo random numbers . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

1.4.2 Discrete random variables . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

1.4.3 Inverting distribution functions . . . . . . . . . . . . . . . . . . . . . . . . 48

1.5 Asymptotic approximation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

1.5.1 Motivation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

1.5.2 Definitions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

1.5.3 Some payback . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

1.5.4 Some more theory (first part examinable) . . . . . . . . . . . . . . . . . . 54

1.6 Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

1.7 Solutions to some exercises . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

1.8 Addition and multiplication of matrices . . . . . . . . . . . . . . . . . . . . . . . 69

3

-

4 CONTENTS

2 Estimating models and testing hypotheses 752.1 Generic inference . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 762.2 Motivation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

2.2.1 Notation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 762.2.2 Choice of model . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 782.2.3 Nuisance parameters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

2.3 Likelihood based estimation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 792.3.1 ML estimator . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 792.3.2 The model and DGP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 842.3.3 Properties of ML estimator . . . . . . . . . . . . . . . . . . . . . . . . . . 852.3.4 Properties of ML estimator if model is true . . . . . . . . . . . . . . . . . 892.3.5 Confidence intervals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 92

2.4 Moment based estimation * . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 932.4.1 Method of moment estimator * . . . . . . . . . . . . . . . . . . . . . . . . 932.4.2 Behaviour of MM estimator * . . . . . . . . . . . . . . . . . . . . . . . . . 942.4.3 MLE as a MM estimator * . . . . . . . . . . . . . . . . . . . . . . . . . . 952.4.4 Moments for dynamic models * . . . . . . . . . . . . . . . . . . . . . . . . 962.4.5 Generalised method of moments (GMM) * . . . . . . . . . . . . . . . . . 97

3 Appendix 993.1 Appendix: the Newton-Raphson method . . . . . . . . . . . . . . . . . . . . . . 993.2 Simple matrix inverses and determinants . . . . . . . . . . . . . . . . . . . . . . . 100

NOTE: some sections are marked * to indicate that they describe non-essential (andnon-examinable) material for this part of the lecture course (i.e. for the first four weeksof Michaelmas Term).

-

Chapter 1

Probability

5

-

6 CHAPTER 1. PROBABILITY

1.1 Basic probability

1.1.1 Reading

Probability theory is the basis of all modern econometrics. The treatment I will developwill focus on results we will need in order to progress in the econometrics courses, howeveryou will be aware that much of other core courses will discuss events using probabilitytheory.

Remark 1.1 Textbook treatments include Grimmett and Stirzaker (2001). Discussionsof probability theory can be given at a number of different levels. The most formal is basedon measure theory see Billingsley (1995). An econometric text which uses this in itsintroduction to probability is Gallant (1997). I would recommend this book to studentswho have a strong maths/stats background. We will not use this material very formally,although we will discuss sigma algebras briefly. Our treatment will be based at the samelevel as Casella and Berger (2001), which is an excellent book. Alternative solid textson this subject include Hendry (1995, pp. 639-676). All of Gallant (1997), Hendry(1995) and Casella and Berger (2001) will be useful for our later treatment of inference.A modern book on statistical inference is Davison (2003).

In this rest of these notes we will discuss the initial construction of the probabilitycalculus. The emphasis will be on sample spaces, events and probability functions.

1.1.2 Sample spaces, events and axioms

Basic probability is built around set theory. We need some definitions before we start.To illustrate these ideas we will use a simple example throughout.

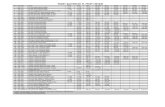

Write Yi as the price of a very simple asset at time i. e.g. normal Vodafone tradesare only priced to the nearest 0.25p, so here 0.25p represents a tick. Figure 1.1 shows anexample of this.

We suppose the price starts at zero and it can move 1 tick up or down each timeperiod, or stay the same! Then the following table shows the possible prices an abstractasset could have at different times

time Yii = 0 0i = 1 1, 0, 1i = 2 2,1, 0, 1, 2i = 3 3,2,1, 0, 1, 2, 3i = 4 4,3,2,1, 0, 1, 2, 3, 4

Thus, for example, Y4 can take on 9 different values.Sample space. The set , is called the sample space, if it contains all possible

(primitive) outcomes that we are considering, e.g. if we think about Y4 then its samplespace is

= {4,3,2, 1, 0, 1, 2, 3, 4} .Event. An event is a subset of (including itself), e.g. Let

A = {1}

-

1.1. BASIC PROBABILITY 7

8 9 10 11 12 13 14 15 16

138.75

139.00

139.25

139.50

139.75

140.00

140.25

Figure 1.1: Sample path of the best bid price (best available marginal price to a seller) forVodafone on the LSEs electronic order book SETS for the first working day in 2004.

i.e. Y4 = 1. Further let B be the event that Y4 is strictly positive, so

B = {1, 2, 3, 4}.

Example 1.1 Value at risk. An important concept is downside risk how much youcan lose, how quickly and how often. In this case the event of a large loss might bedefined as the event

{4,3} .A rapid fall of 3 ticks or more. In practice value at risk tends to be computed over a dayor more, rather than over tiny time periods.

We now recall some notation for A and B which are events in .

Union. A B, e.g. A B = {1, 2, 3, 4}. Intersection. A B, e.g. A B = {1}. Complementation. Ac, e.g. Ac = {4,3,2,1, 0, 2, 3, 4}.

Modern probability theory is built on an axiomatic development based upon the theoryof sets.Probability axioms based on the triple (,F ,Pr)

-

8 CHAPTER 1. PROBABILITY

For a sample space , a probability function is a function Pr that satisfies, on allpossible events F which are subsets of ,

1. Pr() 0, for all F ( is an element of F)2. Pr() = 1.

3. If { = Aj F} are disjoint then

Pr

( i=1

Ai

)=

i=1

Pr(Ai).

e.g.

Pr(Y4 > 0) =

4i=1

Pr(Y4 = i).

Comments:

Only events have probabilities. Events, E, are subsets of , not elements. So E or, equivalently, E F . Probabilities are always zero. A realization is when a single is picked (happens). However, strictly speaking this realization has no probability (giving it a probabilitymakes no sense).

If events are disjoint (non-overlapping) then the probability of exactly one of themoccurring is the sum of the probabilities that each one occurs. An example is of twodisjoint events {A1, A2}. Then

Pr(A1 A2) = Pr(A1) + Pr(A2).

Example 1.2 Divide any into {A,Ac}. (e.g. Y4 is strictly positive A = {1, 2, 3, 4} ,Ac = {4,3,2,1, 0}). We know = {A} {Ac} and that these events are disjoint.Consequently

Pr (Ac) = 1 Pr (A) .As Pr (Ac) 0 we have that

Pr (A) 1.Finally, the sigma algebra allows us to write = and so

Pr() = 0.

Example 1.3 Consider two events A,B which are in F . These events are not necessarilydisjoint and so we cannot immediately use axiom 3. e.g. for Y4

A = {1, 2, 3} , B = {1, 1} .

-

1.1. BASIC PROBABILITY 9

The result is thatPr (A B) = Pr(A) + Pr(B) Pr {A B} . (1.1)

In our example this is

Pr(Y4 = {1, 1, 2, 3}) = Pr(Y4 = {1, 2, 3}) + Pr(Y4 = {1, 1}) Pr(Y4 = 1).That is the probability of at least one ofA andB occurring is the probability ofA occurringplus the probability of B occurring minus the probability that they both happen.

1.1.3 Independence

Consider two events A,B which are in F . We are interested in the concept that theoccurrence of one event does not affect the probability of another even also happening.When this is true we say that the two events are independent. Mathematically we writethe events A,B are independent (in F) iff

Pr {A B} = Pr(A) Pr(B),Note that these events cannot be independent if the events are disjoint, for then Pr {A B} =0.

It is often helpful to have a shorthand for independence. We write

A B.Example 1.4 Let S and T be any subsets of {1, 0, 1} (e.g. suppose that S = {1, 1}and T = {1} ). Define A and B by:

A is [ (Y4 Y3) S]and

B is [ (Y3 Y2) T ].Many models assume that for any S and T ,1

A B.

1.1.4 Conditional Probability

Basics

Sometimes we wish to change the sample space on which we compute probabilities. e.g.suppose we are at time 3, then we know the value of Y3 = 3, say. Then

Y4 {2, 3, 4} ,which is a new sample space. On this sample space we can define new events and newprobabilities, e.g.

Pr(Y4 > 2) = 1 Pr(Y4 = 2).1Empirically this is rejected, for although A, B are typically almost uncorrelated they are not independent.

High levels of volatility tend to follow high levels of volatility.

-

10 CHAPTER 1. PROBABILITY

but it confusing to have two meanings for Pr, so it is better to be explicit that we knowY3 = 3. We are thus conditioning on this and write

Pr(Y4 > 2|Y3 = 3) = 1 Pr(Y4 = 2|Y3 = 3).This is called a conditional probability conditional probabilities are normal probabilitiesbut where we are changing the sample space.

More abstractly lets use condition on some event B (e.g. Y2 = 3) then the probabilityaxioms transfer across to become

Pr ( A| B) 0Pr ( B| B) = 1Pr ( i=1Ai| B) =i=1 Pr ( Ai| B)

if {(Ai B)} are disjoint.Example 1.5 Maybe interested in interested in the forecast distributions:

Pr(Y4|Y3),Pr(Y4|Y2)Pr(Y4|Y1)Pr(Y4|Y0, Y1, Y2, Y3)

the distribution of Y4 given we know the price at time 3, 2 or 1. The last conditionalprobability is a one-step ahead forecast distribution given the path of the process.

Definition

It is often useful to deduce conditional probability statements from the behaviour ofthe joint distribution. This is we know the joint distribution of employment and wagesit would be nice to automatically deduce the conditional probability statements aboutwages given employment. We can do this in the following way.

Consider a world with two outcomes, A and B. We might be interested in Pr(A) orPr(B) or Pr(AB). Alternatively, we could want to know Pr(A|B), assuming Pr(B) > 0.This means I constrain my world so that B happens and I ask if A then happens. Thiscan only happen if both A and B occurs, so we define

Pr(A|B) = Pr(A B)Pr(B)

.

It is easy to show that this obeys the probability axioms. This is a vital concept ineconometrics.

We are often interested in joint conditional probabilities

Pr(A B|C).If

Pr(A B|C) = Pr(A|C) Pr(B|C),we say that conditionally on C, A and B are independent. This is often written as

(A B) |C.

-

1.2. RANDOM VARIABLES 11

Example 1.6 In many models in financial econometrics

Pr(Yi|Yi1, Yi2, ..., Y0) = Pr(Yi|Yi1),

which is called the Markov assumption. This means the probability distribution at time ionly depends upon the value of the process one period earlier, not on any further historyof the process. This means that

(Y2 Y4) |Y3,the price of the asset at time 4 is independent of its value at time 2, given we know itsvalue at time 3.

Bayes theorem

Of course we have the corresponding result that

Pr(B) Pr(A|B) = Pr(A B),

and

Pr(A) Pr(B|A) = Pr(A B).Rearranging we get one of the two (the other being the central limit theorem) most famousresults in probability theory

Pr(B|A) = Pr(A B)Pr(A)

=Pr(B) Pr(A|B)

Pr(A), (1.2)

Bayes theorem. This says that I can go from knowing Pr(A|B) into knowing Pr(B|A) bysimply computing the ratio Pr(B)/Pr(A). We will return to it later.

1.2 Random variables

1.2.1 Basics

We have written to denote each of the events associated with the triple (,F ,Pr). Thatis F is generated from , F and Pr is the function which associates a probability.

The events do not need to refer to numerical events. However, if we take a function ofthese events X() which leads to a (possibly a vector or matrix valued) numerical valuethen X() is called a random variable. Most of econometrics is about random variables.In most probability theory when we refer to random variables we drop reference to , sowe will write X as the random variable.

1.2.2 Examples

A classic model for asset price models is the so-called binomial tree. This simplifies theabove model to allow prices to go up or down one tick, but not stay the same. The

-

12 CHAPTER 1. PROBABILITY

corresponding sample space for the price at time i is given below

time Yii = 0 0 = {0}i = 1 1 = {1, 1}i = 2 2 = {2, 0, 2}i = 3 3 = {3,1, 1, 3}i = 4 4 = {4,2, 0, 2, 4}

Bernoulli random variable. Write the event of an up tick at time 1 ( = U) or downtick ( = D). Let X( = U) = 1 and X( = D) = 0. Then X is a binary randomvariable with two points of support, 0, 1 and is called a Bernoulli random variable.Clearly we can think of

Y1 = 2X 1 {1, 1} .Write Pr(X = 1) = p and Pr(X = 0) = 1 p. Then

Pr(Y1 = 1) = p, Pr(Y1 = 1) = 1 p.More generally write Xi as the same as above but for time i and assume that Xi are

independent and identically distributed (i.i.d.). We model prices as

Yi = Yi1 + 2Xi 1, i = 1, 2, 3, ..., Y0 = 0, (1.3)which is called the binomial tree process and is one of the most famous in asset pricing.The definition of a process Y0, Y1, Y2, ... is that we record the value of an item at a sequenceof times.

Binomial. Suppose we carry out n independent Bernoulli trials with Pr (Xi = 1) = pthen

X =

ni=1

Xi,

is called a binomial random variable. Simple combinational arguments give

Pr (X = x) =n!

x! (n x)!px(1 p)nx, x = 0, 1, ..., n. (1.4)

The permissible range of X, that is 0,1,2,...,n is called the support of X.

1.2.3 Random walk

The binomial tree (1.3) can be written as

Yi = 2i

j=1

Xj i, i = 0, 1, 2, ....

using the fact that Y0 = 0. This is a rather nice process for we know that

Pr(Yi = x) = Pr

(X =

x+ i

2

),

where X has the form (1.4).

-

1.2. RANDOM VARIABLES 13

Exercise 1.1 Suppose Yi obeys a binomial tree process. Derive the form of

Pr(Yi+3 = x|Yi = y).The binomial tree model is the special case of the random walk process, which can be

written asYi = Yi1 +Xi,

where the shocks Xi are i.i.d.. This is a much discussed process in asset pricing, althoughwe will see later that the i.i.d. assumption in the model is overly strong. Clearly if wethink of Yi as log-prices then

Xi = Yi Yi1,are returns. Hence the price process can be transformed into an i.i.d. sample by takingfirst differences.

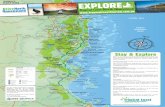

Example 1.7 Figure 1.2 shows 8 random sample paths of Yi for i = 0, 1, ..., 100 froma binomial tree process using p = 0.5. The way we carried out this simulation will bediscussed in Section 1.4. In the lower right hand corner of the Figure we also show ahistogram of 10, 000 draws from Y100. The histogram will be defined in Section 1.3.3, fornow it surfeits that it approximates Pr(Y100 = x) for a range of x.

0 50 100

0

5

10 1st sample path of Yi

0 50 100

10

0

0 50 100

0

5

10

0 50 100

0

10

20 4th sample path of Yi

0 50 100

20

10

0

0 50 100

0

5

10

0 50 100

0

10

7th sample path of Yi

0 50 100

0

5

25 0 25

0.02

0.04

Histogram of Y100. Binomial density

Figure 1.2: 8 sample paths from a binomial tree with p = 0 .5 . Also shown is the histogram ofthe process at time 100, that is Y100. It is based on drawing 10,000 sample paths of the process.code: bintree

-

14 CHAPTER 1. PROBABILITY

1.2.4 Distribution functions

Distribution function of a random variable X is

FX(x) = Pr(X x).Here x is an indicator. Density function for continuous X,

fX(x) =FX(x)

x.

Note that for continuous variables

Pr(X = x) = 0,

for every x. One can go from the density function to the distribution function via

FX(x) =

x

fX(y)dy.

For X with atoms of support we often write fX(x) = Pr(X = x).

1.2.5 Quantile functions

An important function in econometrics is obtained by inverting the distribution function.i.e. we ask: for a given u [0, 1], find x such that

u = FX(x).

We callx = F1X (u),

the quantile function of X. The 0.1 quantile tells us the value of X such that only 10%of the population fall below that value. The most well known quantile is

x = F1X (0.5),

which is called the median.

Example 1.8 Quantiles are central in simple value at risk (VaR) calculations, whichmeasure the degree of risk taken by banks. In simple VaR calculations one looks at themarginal distribution of the returns over a day, written Yi Yi1, and calculate

F1YiYi1(0.05),

the 5% percentile of the return distribution.

If X has atoms of non-zero probability then the quantile function is not unique.

Example 1.9 An exponential random variable X Exp () has densityfX(x) =

1

exp(x/), x, R+. (1.5)

The corresponding distribution and function is

FX(x) = 1 exp(x/),while the quantile function is

F1X (u) = log(1 u).

-

1.2. RANDOM VARIABLES 15

1.2.6 Some common random variables

Normal

The normal or Gaussian distribution is probably the most important we will come across.It naturally arises out of the distribution of an average (mean) and has convenient prop-erties under linear translations. Its form does not look immediately attractive. Thedensity is

fX (x) =12pi2

exp

{(x )

2

22

}, x, R, 2 R+.

We can see this density peaks at and is symmetric around .Mathematically we can think of this density in the following way:

log fX (x) = c 122

(x )2.

Thus the log-density is quadratic in x. The constant c is determined to make the densityintegrate to one. Its exact form is not very interesting, it just turns out to be rathersimple in this case.

Example 1.10 Think of the distribution of daily changes in UK Sterling against USDollar. We will work with the rate which is recorded daily by Datastream from 26 July1985 to 28th July 2000. Throughout, for simplicity of exposition, we report 100 times thereturns (daily changes in the log of the exchange rate, which are very close to percentagechanges). The Gaussian fit to the density is given in Figure 1.3. The Gaussian fit is in linewith the Brownian motion model behind the Black-Scholes option pricing model. Thex-axis is marked off in terms of returns, not standard deviations which are 0.623. Figure1.4 shows the log of the densities, which shows the characteristic quadratic decay of theGaussian density. We can see that for exchange rate data a better density is where thedecay is linear. This suggests we might become interested in densities of the type

log fX (x) = c 1|x | .

When we calculate the normalisation2 this delivers the Laplace density

fX (x) =1

2exp

{1|x |

}, x, R, 2 R+,

which is exponentially distributed to the left and right of the location .

2This looks non-trivial, but set = 0, then

1 = d

exp

{ 1|y|}dy

= 2d

0

exp

{ 1y

}dy

= 2d,

which determines d. Allowing 6= 0 just shifts the density so it makes no difference to the solution.

-

16 CHAPTER 1. PROBABILITY

2.5 2.0 1.5 1.0 0.5 0.0 0.5 1.0 1.5 2.0 2.5

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8Flexible estimator Fitted normal

Figure 1.3: Estimates of the unconditional density of the 100 times returns for Sterling/Dollar.Also density for the ML fit of the normal distribution.

A normal density has support on the real line. Centred at , 2 determines its scale(spread). The notation for a normal is X N (, 2) . This will be discussed at morelength later. FX (x) is only available via numerical methods.

A vital property of the normal distribution is that if X N (, 2) and and arenon-random then

+ X N( + , 22).That is under linear (affine) transformations a normal variable is normal. This result,as well as the others given in this paragraph, are most easily proved using cumulantfunctions, which will be introduced in a moment.

Notice also that we can write

Xlaw= + u,

where u N(0, 1). Equality in law, means the left and right hand side quantities havethe same law or distribution. Finally, if X and Y are independent normal variables withmeans x and y and variances

2x and

2y, then

X + Y N(x + y, 2x + 2y).

That is the means and variances sum while normality is maintained. This is a veryconvenient result for asset pricing, as we will see later.

-

1.2. RANDOM VARIABLES 17

2.5 2.0 1.5 1.0 0.5 0.0 0.5 1.0 1.5 2.0 2.5

10

8

6

4

2

0Flexible estimator Fitted normal

Figure 1.4: Estimates of the unconditional log-density of 100 times the returns for Ster-ling/Dollar. Also the log-density for the ML fit of the normal distribution.

Example 1.11 Suppose that Xi are i.i.d. N(, 2) then the random walk

Yi = Yi1 +Xi, Y0 = 0,

has the feature thatYi N(i, i2),

orYi+s|Yi N(Yi + s, s2).

Figure 1.5 replicates Figure 1.2 but replaces the scaled and recentred Bernoulli variablewith a normal draw. We selected = 0 and 2 = 4 0.5 0.5 so that it matches themean and variance of the previous binomial tree.

Gamma

Normal densities have support on R. Often it is convenient to have a density on R+. Acommonly used density is the gamma

fX(x) =x1

()exp (x/) , x, , R+,

which we write as G(, ). An important special case of the gamma density is the expo-nential where we set = 1. This is written as Exp () .

-

18 CHAPTER 1. PROBABILITY

0 50 1002.5

0.0

2.5

5.0

7.5 1st sample path of Yi

0 50 100

5

0

5

0 50 100

10

5

0

0 50 100

0

5

10

4th sample path of Yi

0 50 100

0

5

10

15

0 50 100

20

10

0

0 50 100

10

5

0

5 7th sample path of Yi

0 50 100

5

0

5

10

25 0 25

0.02

0.04Histogram of Y100. Gaussian density

Figure 1.5: 8 sample paths from a Gaussian random walk with = 0 and 2 = 4 0.5 0.5.Also shows the histogram of the process at time 100, that is Y100. It is based on drawing 10,000sample paths of the process. code: bintree

Chi-squared

Suppose Xii.i.d. N(0, 1) (often written NID(0, 1), which means we have independent and

identically distributed copies of the random variables) then

Y =

i=1

X2i 2 ,

a chi-squared random variable with degrees of freedom . It can be shown that 2 =Ga(/2, 1/2).

Uniform

Sometimes variables are constrained to live on small intervals. The leading example ofthis is the standard uniform

fX(x) = 1, x [0, 1].Hence this variables only has support on [0, 1]. This random variable is often used ineconomic theory as a stylised way of introducing uncertainty into a model. It also playsa crucial role in the theory and practice of simulation.

-

1.2. RANDOM VARIABLES 19

A more general uniform is defined by

fX(x) =1

2 1 , x [1, 2] .

Poisson

Counts are often modelled in economics, e.g. the number of trades in a fixed interval oftime. The standard model for this is the Poisson

fX(x) =ex

x!, x = 0, 1, 2, ..., (1.6)

which lives on the non-negative integers. This is written as X Po(). It can be shownthat if Xi Po(i) are independent over i, then

ni=1

Xi Po(

ni=1

i

).

Student t

Student t random variable is generated by a ratio of random variables

t =N(0, 1)2/

,

where N(0, 1) 2 . This is symmetrically distributed about 0. When is not an integerit can be defined by

t =N(0, 1)

Ga(/2, 1/2)/, > 0.

Its density is quite complicated and is uninteresting from our viewpoint. An importantfeature of Student t random variables is that

E |t |r r.

The special case of = 1 is called a Cauchy random variable and it has the featurethat even the mean does not exist!

1.2.7 Multivariate random variables

All of the above holds for multivariate p 1 vectorX = (X1, ..., Xp)

.

The elements of this vector need not be independent, hence they could for example rep-resent a vector of returns on a collection of assets. Hence multivariate random variablesplay a central role in portfolio allocation and risk assessments, as well as really all aspectsof econometrics.

-

20 CHAPTER 1. PROBABILITY

Sustained example: returns from a portfolio

Conside the bivariate case where p = 2. We might think of

X =

(X1X2

)=

(YZ

),

where X1 = Y is the return over the next day on IBM and X2 = Z is the return over thenext day on the S&P composite index.

Consider the case of measuring the outperformance of the index by IBM. This is

Y Z.We can write this as

(1,1)(YZ

)= bX

where

b =

(11

), so b = (1,1) .

Thus the outperformance can be measured using linear algebra. This outperformance canbe thought of as a simple portfolio, buying IBM and selling the index.

Consider, slightly more abstractly, a portfolio made up of c shares in Y and d in Z.Then the portfolio returns

cY + dZ.

This can be written in terms of vectors as

(c, d)

(YZ

)= f X, f =

(cd

).

More generally, we might write p portfolios, each with different portfolio weights asB11Y +B12ZB21Y +B22ZB31Y +B32Z

...Bp1Y +Bp2Z

= BX,where

B =

B11 B12B21 B22B31 B32...

...Bp1 Bp2

.This is quite a powerful way of compactly writing out portfolios. Here depending upontwo assets. But if one was to extend it to q assets

X =

X1X2X3...Xq

,

-

1.2. RANDOM VARIABLES 21

and a weight matrix

B =

B11 B12 B13 B1qB21 B22 B23 B2qB31 B32 B33 B3q...

......

. . ....

Bp1 Bp2 Bp3 Bpq

.Now the p portfolios, depending upon q assets, have returns

BX =

qj=1B1jXjqj=1B2jXjqj=1B3jXj

...qj=1BpjXj

.Again this is quite a simple representation of quite a complicated situation.

Back on track

In particular if X is a 2 1 vector

X =

(X1X2

)and x =

(x1x2

),

thenFX(x) = Pr(X1 x1, X2 x2),

which in the continuous case becomes

FX(x) =

x2

x1

fX(y1, y2)dy1dy2.

Likewise

fX(x1, x2) =2FX(x1, x2)

x1x2.

When X1 X2 then this simplifies to

fX(x1, x2) =2FX1(x1)FX2(x2)

x1x2=FX1(x1)

x1

FX2(x2)

x2= fX1(x1)fX2(x2).

An important point is that

fX(y1, x2)dy1 =FX(, x2)

x2

= Pr(X1 , X2 x2)

x2

=FX2(x2)

x2= fX2(x2).

-

22 CHAPTER 1. PROBABILITY

(a) Standard normal density

2.5 0

.0 2.5

2.50.0

2.5

0.05

0.10

0.15

(b) NIG(1,0,0,1) density

2.5 0

.0 2.5

2.50.0

2.5

0.1

0.2

0.3

(c) Standard logdensity

2.5 0

.0 2.5

2.50.0

2.5

10

.0

7.5

5.

0

2.5

(d) NIG(1,0,0,1) logdensity

2.5 0

.0 2.5

2.50.0

2.5

7.

5

5.0

2.

50.

0

Figure 1.6: Graph of the densities and log-densities of N(0,I) and NIG(1,0,0,1,I) variables. (a)Density of N(0,I). (b) Density of NIG variables. (c) Log-density of N(0,I). (d) Log-density ofbivariate NIG variables. Code: levy graphs

Hence if we integrate out a variable from a density function we produce the marginaldensity of the random variable.

The conditional distribution function takes on the form

FX1|X2=x2(x1) = Pr(X1 x1|X2 = x2),while, we define

fX1|X2=x2(x1) = Pr(X1 x1|X2 = x2)

x1,

which must have the properties of a density. When X is continuous then it can be shownthat

fX1|X2=x2(x1) =fX(x1, x2)

fX2(x2).

Although intuitively obvious the proof of this last result is not straightforward, to ourknowledge.

The following example is taken from WRDS and thinks of the daily returns fromIBM and the S&P composite index from 2000 until the end of 2004 as bivariate randomvariables. These are shown, as a time series, in Figure 2.3.1. We ignore the time seriesstructure of these returns for now and regard them as being bivariate i.i.d. through time.

Figure 2.3.1 shows a plot of the IBM returns graphed against the S&P. It shows thatthe S&P variable has much less variability than the IBM one, and that when the S&Pindex goes up then so tends IBM.

-

1.2. RANDOM VARIABLES 23

2000 2001 2002 2003 2004 2005

0.15

0.10

0.05

0.00

0.05

0.10

(PtPt1)/Pt1IBM returns S&P composite returns

1.2.8 Moments

General case

Suppose X is a random variable. We often will use an expectation of a function of randomvariable. We define3, for a continuous X, if it exists4

E {g(X)} =g(x)fX(x)dx. (1.7)

The expectation obeys some important rules. For example if a, b are constants thenE {a+ bg(X)} = a + bE {g(X)}. This follows from the definition of expectations assolutions to integrals (1.7).

Exercise 1.2 Prove that E{a + bg (X)} = a+ bE{g (X)}.3If X is discrete then

E {g(X)} =

g (xi) fX(xi).

In the more theoretical literature we often use a notation which allows us to deal with both the discrete and thecontinuous cases. There we write

E {g(X)} =g(x)dFX(x).

4The expectation of g(X) is said to exist iff|g(X)| fX(x)dx

-

24 CHAPTER 1. PROBABILITY

0.05 0.04 0.03 0.02 0.01 0.00 0.01 0.02 0.03 0.04 0.05

0.15

0.10

0.05

0.00

0.05

0.10IBM

S&P

IBM S&P composite

Mean

The most important special case of the expectation operator is the mean. This is definedas (when it exists)

1 = E(X) =

xfX(x)dx.

It is often used as a measure of the average value of a random variable (alternatives includemode and median).

Example 1.12 Suppose X is a Bernoulli trial with Pr(X = 1) = p and Pr(X = 0) =1 p. Then

E(X) = 1 Pr(X = 1) + 0 Pr(X = 0)= p. (1.8)

Likewise if X Bin(n, p) thenE(X) = np.

Likewise if Yi follows a binomial tree process (1.3) then

E(Yi) = 2i

j=1

E (Xj) i = i(2p 1).

Example 1.13 If X N(, 2), then

E(X) =

x12pi2

exp

{(x )

2

22

}dx

= +

(x ) 12pi2

exp

{(x )

2

22

}dx

= ,

-

1.2. RANDOM VARIABLES 25

using the fact that a density integrates to one.

Multivariate mean

Recall we write

X =

X1X2X3...Xq

.Now each Xj has a mean, E(Xj), so it would be nice to collect these together. Thefollowing notation does this. We define

E(X) =

E(X1)E(X2)E(X3)

...E(Xq)

.This is the mean of the vector.

Recall from Section 1.2.7 that we wrote the return on p portfolios as

BX,

where B is a p q weight matrix. An interesting question is what is the mean of theportfolio that is what is the mean of each element of this vector or each portfolio? Itturns out this has a simple answer

E(BX) = BE(X).

Why? Recall a mean of a vector is the mean of all the elements of the vector

E(BX) =

E(q

j=1B1jXj

)E(q

j=1B2jXj

)E(q

j=1B3jXj

)...

E(q

j=1BpjXj

)

.

But, for i = 1, 2, ..., p,

E

(qj=1

BijXj

)=

qj=1

E(BijXj) =

qj=1

BijE(Xj).

Hence

E(BX) =

qj=1B1jE(Xj)qj=1B2jE(Xj)qj=1B3jE(Xj)

...qj=1BpjE(Xj)

= BE(X),

-

26 CHAPTER 1. PROBABILITY

as stated. This is an important result for econometrics.

r th momentMore generally we introduce the notation

r = E(Xr) =

xrfX(x)dx,

to denote the r th moment (about the origin).Example 1.14 Suppose X is exponentially distributed (1.5) then

r =

0

xr1

exp(x/)dx.

Recall that the gamma function is defined as

(r) =

0

xr1 exp(x)dx, r > 0,

noting that if r is an integer then (r) = (r 1)! So

r = r1 0

(x/)r exp(x/)dx

= r 0

xr exp(x)dx= r(r + 1)

= rr!.

Variance

Likewise if X is univariate, the variance is defined as

2 = Var(X)= E {X E(X)}2

=

{x E(X)}2 fX(x)dx

= E(X2) {E(X)}2 .It is the second moment of the variable X E(X). The standard deviation is defined asVar(X).The variance is the basic measure used in asset pricing of the risk of holding an asset.

It is often assumed that investors try to avoid being exposed to such risk and are onlywilling to be exposed to it by being given a higher mean.

Example 1.15 Suppose X is a Bernoulli trial with Pr(X = 1) = p then

E(X2) = 1 Pr(X = 1) + 0 Pr(X = 0)= p,

-

1.2. RANDOM VARIABLES 27

so using (1.8)

Var(X) = p p2= p(1 p).

Likewise if X Bin(n, p) thenVar(X) = np(1 p).

Notice this variance is maximised at p = 1/2, when it is n/4.

Example 1.16 Suppose X N(0, 1), then

Var(X) =

x212pi

exp

(x

2

2

)dx

= 2

0

x212pi

exp

(x

2

2

)dx.

Letting y = x2, then dy = 2xdx, which means

Var(X) = 2

0

y

2x

12pi

exp (y) dy

=

0

y1/212pi

exp (y) dy

=12pi

(3/2)

=12pi

(1/2)

= 1,

using the well known result that (1/2) =2pi. This implies if X N(, 2) then

Var(X) = 2.

Example 1.17 Suppose X is exponentially distributed (1.5) then

1 = , 2 = 22.

This impliesVar(X) = 2.

Exercise 1.3 Prove that Var(a+ bX) = b2Var(X).

Example 1.18 Consider holding $1 of an asset giving a return of r (and interest rate)with no risk, and $b of an asset with return X which is random (ie risky). Then the returnwill obviously your portfolio would return

r + bX.

-

28 CHAPTER 1. PROBABILITY

The mean of this would ber + bE(X).

Exercise 1.3 implies the variance of the portfolio is

b2Var(X).

So we get a key feature for asset pricing the mean and standard deviation of the portfolioincreases with proportionally with the weight.

Exercise 1.4 Show the mean and variance of the Laplace random variable

fX (x) =1

2exp

{1|x |

}is and 22, respectively. HINT: the Laplace density is a two sided exponential variable.

Exercise 1.5 What is the mean and variance of a standard uniform?

Exercise 1.6 Prove that the mean and variance of a Poisson random variable (1.6) is.

Covariance

The covariance of X and Y is defined (when it exists) as

Cov(X, Y ) = E {X E(X)} {Y E(Y )}=

{x E(X)} {y E(Y )} fX,Y (x, y)dxdy

= E(XY ) E(X)E(Y ).Exercise 1.7 Prove that Cov(a + bX, c + dY ) = bdCov(X, Y ). Hence covariances arelocation invariant.

Exercise 1.8 Prove that Var(X + Y ) = Var(X) + Var(Y ) + 2Cov(X, Y ).

Exercise 1.9 Prove that

Var(aX + bY ) = a2Var(X) + b2Var(Y ) + 2abCov(X, Y ).

Exercise 1.10 Prove that if X1,...,Xn are independent then

Var

(1

n

ni=1

Xi

)=

1

n2

ni=1

Var(Xi).

If the random variables are identically distributed, then show this simplifies to

Var

(1

n

ni=1

Xi

)=

1

nVar(X1).

-

1.2. RANDOM VARIABLES 29

Independence implies uncorrelatedness (nearly)

Recall

Cov(X, Y ) = E(XY ) E(X)E(Y ).So if X Y then

Cov(X, Y ) = E(X)E(Y ) E(X)E(Y ) = 0.Hence independence implies uncorrelatedness so long as the covariance exists. If thecovariance does not exist then this result obviously does not hold. e.g. two independentCauchy random variables do not have zero covariance as the covariance does not exist.

If the covariance between X and Y is zero we say they are uncorrelated. We write thisas X Y . So

(X Y ) = (X Y ) .The reverse is not true, uncorrelatedness does not imply independence (in Gaussian caseit does!).

Example 1.19 Suppose

X N(0, 1), Y = X2.Then

Cov(X, Y ) = E(XY ) E(X)E(Y ) = E(X3) = 0.

Cumulant function

The cumulant function (which, subject to some mild and relaxable regularity assump-tions5, uniquely characterises a distribution) of X is

K() = log E(eX). (1.9)

The exponent of this function is the moment generating function (mgf)

E(eX).

It has the property that so long as all the moments exist that

r =rE(eX)

r

=0

.

Example 1.20 In the case where X N(, 2) then it can be show that

K() = +1

222.

5Formally, the characteristic function

E exp(1X)

uniquely characterises the distribution. It always exists while cumulant functions do not. Here I am being moreinformal, ignoring the complex arguments.

-

30 CHAPTER 1. PROBABILITY

Exercise 1.11 Use the cumulant function to show that when X N(, 2) so1 = , 2 =

2, 3 = 0.

In the case where = 0 show that4 = 3

4.

Use these result to show that

E(21) = 1 and Var(21) = 2,

whileE(2) = and Var(

2) = 2.

Exercise 1.12 Use the property that densities integrate to one to derive the mgf of theinverse Gaussian variable (??). Use this result to show that the sum of two i.i.d. inverseGaussian variables are distributed as inverse Gaussian.

Correlation

The correlation of X and Y is defined (when it exists) as

Cor(X, Y ) =Cov(X, Y )Var(X)Var(Y )

.

NowCor(X, Y ) [1, 1],

which follows from the Cauchy-Schwarz inequality6.

Exercise 1.13 Prove that Cor(a + bX, c + dY ) = Cor(X, Y ). Hence correlations arescale and location invariant.

Example 1.21 In the IBM and S&P example then Mean IBM: 0.000206, Mean S P:-0.0000721, S.D. IBM: 0.0225, S.D. S P: 0.0127, Cor: 0.625. If we scale all returns by 100,then Mean IBM: 0.0206, Mean S P: -0.00721, S.D. IBM: 2.25, S.D. S P: 1.27, Cor: 0.625.

1.2.9 Covariance matrices

Think of X as a p dimensional7 vector of random variables. Thus

X =

X1X2X3...Xp

.6Which says that for all reals x1, x2, y1, y2 then(

x21 + x22

)(y21 + y

22) (x1y1 + x2y2)2.

7It is somewhat inelegant that I have change the dimension of the X vector from q to p. But it is of noconsequence and it is more familiar to work with p dimensional objects.

-

1.2. RANDOM VARIABLES 31

Then we define the covariance matrix of X as

Cov(X) =

Var(X1) Cov(X1, X2) Cov(X1, Xp)Cov(X2, X1) Var(X2) Cov(X2, Xp)...

.... . .

...

Cov(Xp, X1) Cov(Xp, X2) Var(Xp)

.This is a symmetric p p matrix. Notice this notation is slightly confusing, for we writeCov(X) of a vector X as a matrix and Cov(A,B) of scalars A and B as scalars. Hopefullyit will always be clear as to the meaning due to the context.

The covariance matrix can be calculated as

Cov(X) = E {X E(X)} {X E(X)} .If you do not see this reult immediately think of the p = 2 case. Then

{X E(X)} {X E(X)} =(X1 E(X1)X2 E(X2)

)(X1 E(X1)X2 E(X2)

)=

((X1 E(X1))2 (X1 E(X1)) (X2 E(X2))(X2 E(X2)) (X1 E(X1)) (X2 E(X2))2

).

Taking expectations of this delivers the required result, for

E {X E(X)} {X E(X)}

=

(E{(X1 E(X1))2

}E {(X1 E(X1)) (X2 E(X2))}

E {(X2 E(X2)) (X1 E(X1))} E{(X2 E(X2))2

} )=

(Var(X1) Cov(X1, X2)Cov(X2, X1) Var(X2)

).

Example 1.22 In the IBM and S&P example then we have approximately that

E(X) =

(0.02060.00721

)

Cov(X) =

(5.07 1.791.79 1.62

).

A very important result is that if B is a q p matrix of constants, then

E (a +BX) = a+BE(X) Cov(a+BX) = BCov(X)B.

Example 1.23 Suppose X is a return vector on p assets. Then if we construct a portfoliowith weights b = (b1, b2, ..., bp)

, then the return on portfolio will be bX. Thus the expectedreturn will be

E(bX) = bE(X) and Var(bX) = bCov(X)b.

-

32 CHAPTER 1. PROBABILITY

Example 1.24 Think of IBM returns - S&P returns.

Y = X1 X2.

E(Y ) = (1 1)(

0.02060.00721

)= 0.0278

Var(Y ) = (1 1)(

5.07 1.791.79 1.62

)(11

)= (1 1)

(3.280.17

)= 3.11.

The second of these results implies that Cov(X) > 0, for if we think of an arbitrarynon-stochastic z vector, then

zCov(X)z = Cov(zX) = Var(zX) 0.

Exercise 1.14 Show that

Var

(1

n

ni=1

Yi

)= E

{1

n

ni=1

Yi 1n

ni=1

E(Yi)

}2

= E

[1

n

ni=1

{Yi E(Yi)}]2

=1

n2

ni=1

nj=1

Cov(Yi, Yj).

What happens to this result if the Yi are independent? Further, what happens to it if the Yiare i.i.d. (independent and identically distributed) with variance 2? On the other handwhat happens when

Cov(Yi, Yi+s) =

2 s = 0 s = 10 s 6= 0.

Comment on this.

We also introduce the notation

r = E [{X E(X)}r] , r = 2, 3, ...

to denote the r-th central moment (about the mean).

-

1.2. RANDOM VARIABLES 33

Block covariance matrices

Think about the block structure

X =

(YZ

),

where Y and Z are vectors. Then often the following block covariance is defined

Cov(Y, Z) = E{(Y E(Y )) (Z E(Z))} .

The dimension of this matrix is dim(Y ) dim(Z). ClearlyCov(Y, Z) = Cov(Z, Y ).

Confusingly this gives us yet another meaning for the Cov notation! You just have to usethe context to work out which you mean.

This structure means that

Cov(X) = E

{(YZ

) E

(YZ

)}{(YZ

) E

(YZ

)}= E

((Y E(Y )) (Y E(Y )) (Y E(Y )) (Z E(Z))(Z E(Z)) (Y E(Y )) (Z E(Z)) (Z E(Z))

)=

{Cov(Y, Y ) Cov(Y, Z)Cov(Z, Y ) Cov(Z,Z)

}=

{Cov(Y ) Cov(Y, Z)

Cov(Z, Y ) Cov(Z)

}.

Then we say Y Z, that is the vectors are uncorrelated, if Cov(Y, Z) is a matrix of zeros.

Correlation matrices

Corresponding to the covariance matrix is the correlation matrix, which is (when it exists)

Cor(X) =

1 Cor(X1, X2) Cor(X1, Xp)Cor(X2, X1) 1 Cor(X2, Xp)...

.... . .

...

Cor(Xp, X1) Cor(Xp, X2) 1

= Cor(X).

This matrix is invariant to location and scale changes, but obviously not in general lineartransformations. Clearly Cor(X) has to be positive semi-definite.

1.2.10 Back to distributions

General discussion

Figure 1.6 gives, in the upper plate, two examples of bivariate densities. In fact here thetwo variables are independent and so

fX(x1, x2) = fX1(x1)fX2(x2),

-

34 CHAPTER 1. PROBABILITY

the product of the two marginal densities. The left graph is the normal case, the seconda non-normal case with heavier tails. It is interesting to look at the log-densities. Thenormal one is quadratic. The non-normal is more like being linear in the tails.

Figure 1.7 is an example of the above in practice. It displays the estimated jointdensity of daily changes in the DM and FF against the US Dollar in the last 6 yearsbefore the advent of the Euro. The left plates are the normal fit, the right plates are amore flexible non-normal model. The picture shows a tight correlation between these twocurrency movements (expected given they were about to be merged), while the flexibledistribution suggests the tails of the fit are much more linear than the quadratic assumedin the normal model.

FF

N L

ogd

ensit

y

(a): DM/FF Normal density

2

02

20

2

0.5

1.0

FF

GH

Log

den

sity

(b): DM/FF GH density

2

02

20

22.

55.

07.

5

FF

N L

ogd

ensit

y

(c): DM/FF Normal logdensity

2

02

20

2

10

5

0

FF

GH

Log

den

sity

(d): DM/FF GH logdensity

2

02

20

2

5

0

Figure 1.7: Fit of bivariate Gaussian and GH models for the DM and FF against the Dollar.(a) ML fit of bivariate Gaussian density, (c) gives the log of this density. (b) ML fit of bivariateGH density, (d) gives the log of this density. Code: em gh mult.

Multivariate normal

The p-dimensional X N(,). E(X) = , Cov(X) = . Assume > 0 (positivedefinite8). is always symmetric of course. Then

fX(x) = |2pi|1/2 exp{12(x )1 (x )

}, x Rp.

8Recall > 0 iff for all z 6= 0 we have thatzz > 0.

-

1.2. RANDOM VARIABLES 35

Here 1 is a matrix inverse, which exists due to the > 0 assumption. Further

|2pi|1/2 = (2pi)p/2 ||1/2 .Example 1.25 Suppose

= 2Ip,

so the elements of X are independent and homoskedastic. Then

fX(x) =(2pi2

)p/2exp

{ 122

(x ) (x )}

=(2pi2

)p/2exp

{ 122

pi=1

(xi i)2}

Density has a single peak at . The cumulant function of a multivariate normal is

K() = log E(eX) = +

1

2. (1.10)

Here is a vector.If a is q 1 and B is q p and both are constants, then

Y = a +BX N(a+B,BB), (1.11)a q-dimensional normal. That is linear combinations of normals are normal.

Exercise 1.15 Prove this result using (1.10).

Example 1.26 In particular if p = 2, then for X = (X1, X2)

= Cov(X) =

{Var (X1) Cov (X1, X2)Cov (X1, X2) Var (X2)

}=

(21 1212

22

),

where = Cor(X1, X2). This is an important model. Often written as(XY

) N

{(xy

),

(2x xyxy

2y

)}.

Quadratic forms in normals

SupposeX N(0, Ip),

where Ip is a p-dimensional identity matrix, then

X X =pi=1

X2i 2p,

a chi-squared variable with p degrees of freedom.Suppose

X N(0, Ip),

-

36 CHAPTER 1. PROBABILITY

where Ip is a p-dimensional identity matrix. Suppose is a p p idempotent matrix,which means that

= ,

then9

X X 2tr().

1.2.11 Conditional distributions

Basics

Consider two (multivariate) random variables X, Y , then

FX|Y=y(x) = Pr(X x|Y = y) = Pr(X x, Y = y)Pr (Y = y)

.

This is somewhat tricky for continuous random variables as then Pr (Y = y) = 0. Likewisethe conditional density is

fX|Y=y(x) =FX|Y=y(x)

x=fX,Y (x, y)

fY (y).

Remark 1.2 I do not know of a trivial proof of this result. The following informal

9Recall that for any matrix the eigenvalue decomposition means we can write

= PP ,

where is a diagonal matrix of eigenvalues of and the matrix of eigenvector P is such that P P = I . Theidempotency of means

= PP PP = PP = .

This can only hold if is a matrix of one and zeros.Now

Ylaw= P X N(0, P P ) = N(0, Ip),

so

X Xlaw= Y Y =

pj=1

21I(jj = 1) 2tr(),

as required.

-

1.2. RANDOM VARIABLES 37

argument is relatively easy to follow though

fX|Y=y(x) =FX|Y=y(x)

x

= limy0

xPr(X x|y Y y +y)

= limy0

x

Pr(X x, y Y y +y)Pr (y Y y +y)

= limy0

x

FX,Y (x, y +y) FX,Y (x, y)FY (y +y) FY (y)

=

x

yFX,Y (x, y)yFY (y)

=

2

yxFX,Y (x, y)

fY (y)

=fX,Y (x, y)

fY (y).

If X, Y are independent then

Pr(X x, Y y) = Pr (X x) Pr (Y y)and

fX,Y (x, y) = fX(x)fY (y).

Independence implies Cov(X, Y ) = 0, as E(XY ) = E(X)E(Y ), but Cov(X, Y ) = 0 doesnot imply independence (e.g. stock returns are basically uncorrelated through time, butnot independent big movements usually follow big movements).

Example 1.27 Bivariate normal. In this case(XY

) N

{(XY

),

(2X XYXY

2Y

)},

then

Y |X = x N{Y +

Y X2X

(x X), 2Y (Y X)

2

2X

}

= N

{Y +

YX

(x X), 2Y(1 2)} .

An illustration of this is where Y is the return on an asset, X is the return on the marketportfolio (i.e. a widely based market index). Then

Y |X =YX

is often called the beta of Y and is a measure of how Y moves with the market. So ifY |X is bigger than one, then Y is regarded as being sensitive to overall market moves,while when Y |X is small then Y behaves pretty differently from the market index.

-

38 CHAPTER 1. PROBABILITY

BIG picture.

Conditional variance does not depend upon x.

Change in the conditional mean isYX

(x X),

so is linear in x. Effect is compared to mean, i.e. xX . Divide by X removes thescale of x, times by Y puts the variables onto the y scale.

Obviously

X|Y = y N{X +

XY

(y Y ), 2X(1 2)} .

Notice the symmetry of this. We can condition on X or Y . Just because we write downX|Y or Y |X does not mean thatX causes Y or vice versa. It says nothing about causality.

More generally, consider two (multivariate) random variables X, Y . Suppose(XY

) N

{(XY

),

(XX XYY X Y Y

)}, XX > 0,Y Y > 0,XY =

Y X .

Then

X N (X ,XX) , Y N (Y ,Y Y ) ,and

X|Y = y N {X + XY1Y Y (y Y ),XX XY1Y YY X}= N

{X|Y ,X|Y

}.

That is if variables are jointly normal, then marginally and conditionally they are normal.

Exercise 1.16 Prove this result using the fact that

fX|Y=y(x) =fX,Y (x, y)

fY (y),

and(XX XYY X Y Y

)1=

(XX XY

Y X Y Y

)=

( (XX XY1Y YY X

)1 XXXY (Y Y )1 (XX)1Y YY X (Y Y )1 Y X

(XX

)1XY

).

Exercise 1.17 Prove that X Y if and only if XY is a matrix of zeros.

-

1.2. RANDOM VARIABLES 39

Regression and condition densities

Later we will discuss in some detail so-called regression methods, which relates the varia-tion of one variance, Y , to the values of other variables, written X. In the case were X, Yare jointly Gaussian this amounts to considering the conditional density of

Y |X = x N{Y +

Y X2X

(x X), 2Y (Y X)

2

2X

}.

If our scientific interest centres on this conditional density, to see how Y may respond tovariation in X, then it is often useful to use a different parameterisation. In particular,typically one writes

Y |X = x N {Y |X + Y |Xx, 2Y |X} ,or for simplicity

Y |X = x N (+ x, 2) .Here is usually referred to as the intercept, while is the slope. In the case where X ismultivariate, it takes on the more abstract form of the linear regression model

Y |X = x N ( x, 2) .Of course if the X, Y variables are not Gaussian then the above analysis looks rather

inappropriate. In later lectures we will see some aspects of this modelling framework isrobust to departures from Gaussianity.

In corporate finance there is some considerable interest in conditional regression modelsfor binary variables, with

Pr(Yi = 1|X = x) = g(x).The most well model of this type is the logistic regression where

Pr(Yi = 1|X = x) = exp(x)

1 + exp (x).

Notice in this case, no direct attempt to model the joint distribution of X, Y is made,instead we have just decided that our interest focuses on the parameters which index theconditional probability. More details of this analysis will be given in the third part ofthese lectures.

Conditional moments

Recall

fX|Y=y(x) =fX,Y (x, y)

fY (y).

ImportantlyfX,Y (x, y) = fX|Y=y(x)fY (y).

The definition of a conditional expectation is

EX|Y=yg(X) =g(x)fX|Y=y(x)dx.

-

40 CHAPTER 1. PROBABILITY

Exercise 1.18 Prove that EX|Y=y{a+ bg (X)} = a + bEX|Y=y{g (X)}.Now recall

fX,Y (x, y) = fX|Y=y(x)fY (y).

As a result we have that

EX(g(X)) = EY{EX|Y=y (g(X))

},

the law of iterated expectationsProof:

EX(g(X)) =

g(x)fX(x)dx

=

g(x)fX,Y (x, y)dxdy

=

g(x)fX|Y=y(x)fY (y)dxdy

=

{g(x)fX|Y=y(x)dx

}fY (y)dy

=

{EX|Y=y (g(X))

}fY (y)dy

= EY{EX|Y (g(X)|Y )

}Exercise 1.19 Suppose E (X|Y = y) = a + by and E(Y ) = c, then EX = EY EX|Y =EY (a+ bY ) = a+ bc.

LikewiseVarX(X) = EY

(VarX|YX|Y

)+VarY (EX|YX|Y ).

Proof:

VarX(X) = EXX2 (EXX)2

= EY EX|Y(X2) (EY EX|YX)2

=[EY EX|Y

(X2) EY (EX|YX)2] + [EY (EX|YX)2 {(EY EX|YX)2}]

= EY

[EX|Y

(X2) (EX|YX)2]+ [EY (EX|YX)2 {(EY EX|YX)2}]

= EY(VarX|YX|Y

)+VarY (EX|YX|Y )

Exercise 1.20 Suppose V ar(X|Y = y) = a+ by2 and E(X|Y = y) = c+ dy. ThenVarX(X) = EY

(a+ bY 2

)+VarY (c+ dY )

= a+ bEY(Y 2)+ d2VarY (Y ).

Example 1.28 The simple value at risk (VaR) calculation based on the quantile

V aR = F1X (),

-

1.2. RANDOM VARIABLES 41

where X is the return, has been heavily criticised in the literature as being incoherent. Awidely used alternative is to calculate the so-called expected short-fall measure

ES = E{X|X < F1X ()

},

which calculates the expected loss given the move is in the bottom quantile. A relativelysimple introduction to this is given in Acerbi and Tasche (2002).

Martingale

In modelling dynamics in financial economics martingales play a large role. They formthe mathematical formulation of a risk-neutral fair game in financial economics. They arecentral to stochastic analysis and allow us to generalise central limit theories away fromthe i.i.d. case.

Consider a sequence asset prices recorded through time

Y1, Y2, Y3, ...

where the subscript reflects time. A natural object to study is

E(Yi|Y1, ..., Yi1),the conditional expectation (which we assume exists) of the future given the past. Thenif

E(Yi|Y1, ..., Yi1) = Yi1,then the sequence is said to be a martingale with respect to its own past history10.

Example 1.29 A special case of the martingale is a random walk

Yi = Yi1 + i,

where i is i.i.d. with zero mean.

Example 1.30 Suppose

Yi = Yi1 + i, i|Y1, ..., Yi1 N(0, 2i )where

2i = + 12i1 + ...+ p

2ip.

Clearly, so long as E |Yi|

-

42 CHAPTER 1. PROBABILITY

0 50 100 150 200 250 300 350 400 450 5007.5

5.0

2.5

0.0

2.5

5.0

7.5

10.0

Copula

Consider a bivariate density for X, Y . Write, for all permissible points of support11

GX,Y (x, y) =FX,Y (x, y)

FX(x)FY (y).

Thus we can always write

FX,Y (x, y) = FX(x)FY (y)GX,Y (x, y).

Then G solely controls the stochastic dependence between X and Y .If we assume that X and Y are continuous random variables, then knowing x gives

me FX(x) and vice versa. Thus we can find a H function

HX,Y (FX(x), FY (y)) = GX,Y (x, y),

so

FX,Y (x, y) = FX(x)FY (y)HX,Y (FX(x), FY (y))

= C(FX(x), FY (y)),

where C(., .) is a copula function. Notice that FX(x) and FY (y) live on 0,1. A copula issimply a bivariate distribution function for variables defined on the unit interval!

This looks really boring and contrived. However, what is has achieved is the separationof the modelling of the marginal distribution of X and Y , given through FX(x) and FY (y),and their dependence, given by C(., .).

11That is we only work with x and y for which FX(x) > 0 and FY (y) > 0.

-

1.2. RANDOM VARIABLES 43

A nice feature of the above setup is that by the chain rule

FX,Y (x, y)

x=FX(x)

x

C(FX(x), FY (y))

FX(x),

so2FX,Y (x, y)

xy=FX(x)

x

FY (y)

y

2C(FX(x), FY (y))

FX(x)FY (y),

or in other wordsfX,Y (x, y) = fX(x)fY (y)c (FX(x), FY (y)) ,

the product of marginal densities times the density of two unit interval variables. Ourfavourite paper on this topic is Patton (2004).

1.2.12 Bayes theorem

Bayes theorem (1.2) is important for random variables. It plays a large role in economictheory and some parts of econometrics. Hence we spell it out in some details.

Clearly

fX|Y=y(x) =fX,Y (x, y)

fY (y)=fX(x)fY |X=x(y)

fY (y).

However, it is often easier to think of this in a more cut down form as

fX|Y=y(x) = cfX(x)fY |X=x(y),

where c is a constant such thatcfX(x)fY |X=x(y)dx = 1.

This allows one to do some calculations in cases where f(y) is not known. This is aconvenient trick.

Often fX(x) is called a PRIOR and fY |X=x(y) the LIKELIHOOD. In such cases it isstandard to call fX|Y=y(x) the POSTERIOR density. Thus we have

posterior prior likelihood.

Example 1.31 SupposeX N(X , 2X)

andY |X = x N(x, 2),

thenX|Y = y N(p, 2p),

where

p = 2p

(1

2XX +

1

2y

)where 2p =

(1

2X+

1

2

)1. (1.12)

-

44 CHAPTER 1. PROBABILITY

To show this, write

log fX|Y=y(x) = log c+ log fX(x) + log fY |X(y|x) (1.13)= log c 1

22X(x X)2

1

22(y x)2 . (1.14)

This is quadratic in x so X|Y must be Gaussian. The only task is to compute p and 2p.Of course, as it is a Gaussian density

log fX|Y=y(x) = log c 1

22p

(x p

)2. (1.15)

The quadratic term in x in (1.15) and (1.14) are, respectively,

122p

and 122X

122

.

This means that

2p =

(1

2X+

1

2

)1. (1.16)

Likewise, the linear term in x in (1.15) and (1.14) are, respectively,

1

2pp and

1

2XX +

1

2y.

This implies that

p = 2p

(1

2XX +

1

2y

). (1.17)

This means that the posterior mean weights the prior mean and the new observationy according to their variability. Special cases are where 2X , (the prior tells usnothing) in which case p y and where 2X 0, (the prior tells us everything) inwhich case p X .

1.3 Estimators

1.3.1 Introduction

A statistic S(X) is a function of a (vector) random variable X. When we use the statisticto learn about a feature of the probability model then we say we are estimating themodel. We call the random version of this function S(X) an estimator, while if we takethis function of an observed vector then we call it an estimate S(x).

Example 1.32 A simple example of this is where

S(X) =1

n

ni=1

Xi.

-

1.3. ESTIMATORS 45

If Xi NID(, 2) then using the fact that S(X) is a linear combination of normals wehave that

S(X) N(,2

n

).

If n is very large the estimator is very close to , the average value of the normal distri-bution.

1.3.2 Bias and mean square error of estimators

Suppose we design an estimator to estimate some quantity . Then we might wish forS(X) to be close to on average. One way of thinking about this is to record the

bias: E {S(X)} .

Example 1.33 If Xi NID(, 2) then

X =1

n

ni=1

Xi,

the sample mean (sample average), has a zero bias.

When the bias is zero, the estimator is said to be unbiased. Unbiased estimators maybe very imprecise for they can have a very large dispersion. One way of measuring theimprecision of estimator is through the mean square error criteria

mse:

E {S(X) }2 = Var {S(X)}+ [E {S(X)} ]2 .A more precise estimator may in fact be biased.

Exercise 1.21 Estimate 2 using a random sample from NID (, 2) by

S(X) =1

n kni=1

(Xi X

)2.

Show this has minimum mse when k = 1 while it is unbiased when k = 1. HINT: Notethat

ni=1

(Xi )2 =ni=1

(Xi X

)2+ n

(X )2 ,

and thatn

i=1 (Xi )2 /2 2n whilen(X ) / N(0, 1).

1.3.3 Histogram

Histograms are popular ways of getting a simple impression of the density of a randomsample of some random variable X. This is based on constructing bins of the support ofX:

c0 < c1 < c2 < ... < cJ

-

46 CHAPTER 1. PROBABILITY

The histogram tries to estimate

fi =1

ci ci1 Pr(X (ci1, ci])

=1

ci ci1E {I(X (ci1, ci])} ,

which for bins of short width we approximates the density of X. The histogram is anestimator of this based on the random variables X1, X2, ..., Xn

fi =1

ci ci1nj=1

I(Xj (ci1, ci]).

Examples of histograms are given in Figures 1.2 and 1.5.

1.3.4 Bayes theorem*

We saw that when Xi NID(, 2) then X estimates , withX N(, 2).

Thus the statistic is centred around a fixed, unknown constant .An alternative approach to carrying out statistical calculations is to regard as un-

known with uncertainty expressed via a prior N(, 2). For simplicity of exposition

suppose 2 is known. Then we can calculate

f(|X1, X2, ..., Xn) f()f(X1,X2,...,Xn|).The the calculations in 1.2.12 show that

|X1, X2, ..., Xn N(p, 2p),with

p = 2p

(1

2 +

1

2

ni=1

Xi

)where 2p =

(1

2+

n

2

)1.

Here the statisticn

i=1Xi appears in the posterior mean.

1.4 Simulating random variables

Cameron and Trivedi (2005, Ch. 12.7)A crucial feature of modern econometrics is that much of it depends upon simulation

that is to produce random variables from known distribution functions. Further,simulation is increasingly used in asset pricing theory to study analytically intractablemodels, e.g. to study complicated options. The advantage of simulation is that it allowsus to easily understand quite complicated transformations of random variables, which aresometimes extremely hard to carry out using the change of variable method.

In this section we will discuss the basic probability theory associated with simulation.Textbook expositions of this material include Ripley (1987) and Devroye (1986).

-

1.4. SIMULATING RANDOM VARIABLES 47

1.4.1 Pseudo random numbers

All of the simulation methods we will discuss here will be built out of draws based on asequence of independent and identically distributed (standard) uniform (pseudo12) ran-dom numbers {Ui [0, 1]}. The problem of producing such numbers will be regarded assolved in these notes. An example is given below

Ui.734.452.234.123.987

1.4.2 Discrete random variables

Suppose we wish to simulate a Bernoulli trial Xi on a computer with Pr(Xi = 1) = p.We set Xi = 0 if Ui 1p, and Xi = 1 tail if Ui > 1p. Then Pr(Xi = 0) = Pr(Ui

1 p) = 1 p and Pr(Xi = 1) = Pr(Ui > 1 p) = p.Another way of writing this is through the distribution function. Let

FX(x) = Pr(X x) = 0 x < 01 p 0 x < 11 1 x.

It would be useful if we could invert FX(x), i.e. for a given u [0, 1] find an x such thatu = FX(x).

But it has jumps in the function and so there does not exist a straightforward inverse.We can define a unique inverse (smallest value of x [0, 1] such that u FX(x)) of this

FX (u) ={

0 u 1 p1 u > 1 p.

ThenXi = F

X (Ui),

produces the Bernoulli trial as required. Using the same underlying uniforms as in theprevious subsection produces, when p = 1/2,

Ui Xi.734 1.452 0.234 0.093 0.987 1

When p = 0.9 the same underling uniforms produce12In the simulation literature these uniforms are sometimes generated using natural random numbers (e.g.

depending upon atomic decay), but mostly the uniforms are based on approximations (see Ripley (1987)). Theseapproximations are typically deterministic sequences which are sufficiently chaotic that it is very hard to discernpatterns in these sequences even with massively large samples of draws.

-

48 CHAPTER 1. PROBABILITY

Ui Xi.734 1.452 1.234 1.093 0.987 1

Example 1.34 Suppose that the support of a random variable X, with density fX(x) =Pr(X = x), is finite. For simplicity suppose it is on the points 0, 1, 2. Then

FX (u) =

0 u [0,Pr(Xi = 0)]1 u (Pr(Xi = 0),Pr(Xi 1)]2 u (Pr(Xi 1), 1].

So we can sample from this using Xi = FX (Ui). Such an algorithm will be very fast. e.g.

Pr(Xi = 0) = 0.2, Pr(Xi = 1) = 0.4, Pr(Xi = 2) = 0.4 then

Ui Xi.734 2.452 1.234 1.093 0.987 2

When the support of X has a lot of points then this algorithm may become quiteexpensive and more involved methods may become necessary.

1.4.3 Inverting distribution functions

Given a sequence of i.i.d. uniforms we can produce i.i.d. draws from any continuousdistribution FX(x). The argument goes like this:

As Ui is uniform, soPr(Ui FX(x)) = FX(x).

Thus

Pr(Ui FX(x)) = Pr(F1X (Ui) x)= Pr(Xi x),

if we takeXi = F

1X (Ui). (1.18)

Thus we can produce random numbers from any continuous distribution by plugging theuniforms into the quantile function (1.18).

Example 1.35 The exponential distribution. Recall FX(x) = 1 exp( 1x), and so

the quantile function isF1X (p) = log (1 p) .

Hence log (1 Ui)

are i.i.d. exponential draws. e.g. = 1

-

1.5. ASYMPTOTIC APPROXIMATION 49

Ui Xi.734 1.324.452 0.601.234 0.266.093 0.097.987 4.343

This method is convenient for a large numbers of problems where we can evaluateinexpensively the quantile function. However, for many problems this is not true. An ex-ample of this is the normal distribution, where the distribution function is very expensiveto evaluate (and the quantile is even more so).

1.5 Asymptotic approximation

Cameron and Trivedi (2005, Ch. A)

1.5.1 Motivation

Example 1.36 Classical convergence

Xn = 3 +1

n 3

as n.

Example 1.37 A little more fuzzy when we think of

Xn = 3 +Y

n? 3,

where Y is a random variable. Different measures of convergence. Some need moments,others dont.

Distribution theory can become very complicated and sometimes impossible. As aresult we often employ approximations. Many different types of approximations arepossible. The dominant way of constructing approximations is to think of the errorinduced by using the approximation as the sample size gets large such approximationsare called asymptotic approximations (asymptotic in n). This is a particularly attractiveidea if we are estimating a parameter and we wish that our estimator becomes moreprecise as we increase the sample size. Two basic results are used in this literature: thelaw of large numbers and the central limit theorem. Such approximations are examples ofthe more general concepts convergence in probability and convergence in distributionwhich will be presented in some detail. Additional references are Gallant (1997, Ch. 4),Grimmett and Stirzaker (2001), Newey and McFadden (1994) and McCabe and Tremayne(1993).

Formally we will think of a sequence of random variables X1, X2, . . . , Xn which, as ngets large, will be such that Xn will behave like some other random variable or constantX.

-

50 CHAPTER 1. PROBABILITY

Example 1.38 We are interested in

Xn =1

n

nj=1

Yj.

Then it forms a sequence

X1 = Y1, X2 =1

2(Y1 + Y2) , X3 =

1

3(Y1 + Y2 + Y3).

What does 1n

nj=1 Yj behave like for large n? What does Xn converge to for large n?

1.5.2 Definitions

We think of a sequence of random variables {Xn} and we ask if the distance between {Xn}and some other variable X (in this context X is almost always a constant in econometrics,guide your thinking by making that assumption) gets small as n goes to infinity. We canmeasure smallness in many ways and so there are lots of different notions of convergence.We discuss three, the second of which will be the most important for us.

Definition 1.1 (Convergence in mean square) Let X and X1, X2, . . . be random vari-ables. If

limn

E (Xn X)2 = 0,then the sequence X1, X2, . . . is said to converge in mean square to the random variableX. A shorthand notation is

Xnm.s. X. (1.19)

Exercise 1.22 Show that necessary and sufficient conditions for Xnm.s. X are that

limn

E(Xn X) = 0, [asymptotic unbiased] limn

Var(Xn X) = 0.

Example 1.39 Suppose Y1, ..., Yn are i.i.d. with mean and variance 2. Then define

Xn =1

n

ni=1

Yi,

which has

E (Xn) =1

n

ni=1

E(Yi) = ,

and

Var (Xn) =1

n2Var

ni=1

Yi =1

n2

ni=1

Var(Yi)

=1

n2.

Hence Xn is unbiased and the variance goes to zero. Hence

Xnm.s. .

-

1.5. ASYMPTOTIC APPROXIMATION 51

Definition 1.2 (Convergence in probability) If for all , > 0 no s.t.Pr(|Xn X| < ) > 1 , n > n0,

then the sequence X1, X2, . . . is said to converge in probability to the random variable X.A shorthand notation is

Xnp X. (1.20)

Exercise 1.23 Prove that mean square convergence is stronger than convergence in prob-ability by using Chebychevs inequality:

Pr (|Xn X| > ) 1rE |Xn X|r , for all > 0.

Definition 1.3 (Convergence almost surely) Let X and X1, X2, . . . be random variables.If, for all , > 0, there exists a n0 s.t.

Pr(|Xn X| < , n > n0) > 1 ,

then we say that {Xn} almost surely converges to X, which we write as Xn a.s. X.This almost sure convergence is about ensuring that the joint behaviour of all events

n > n0 is well behaved. Convergence in probability just looks at the marginal probabilitiesfor each n.

Exercise 1.24 Prove that

Xna.s. X Xn p X.

Further note that Xna.s. X does not imply or is not implied by Xn m.s. X.

Theorem 1.1 Weak Law Large Numbers (WLLN). Let Xi iid,E(Xi),Var (Xi) exist,then

1

n

ni=1

Xip E(Xi),

as n.Proof. Straightforward using Chebyshevs inequality or using the generic result that

m.s p . Figure 1.8 gives some examples of this. The experiment has varying

sample sizes and uses normal and student t Xi. As we go down the sample size increasesand the scatter of the points becomes narrower. Indicates convergence. Expected resultas mean and variance exist.

Sometimes it will be helpful to strengthen this to a result which is much harder toprove!

Theorem 1.2 (Kolmogorovs) Strong Law of Large Numbers (SLLN). Let Xi iid,E(Xi)exist, then

Xa.s. E(Xi).

-

52 CHAPTER 1. PROBABILITY

2 0 2 4 6 8

0.1

0.2

0.3Average of N() draws

N(s=1.17)

15 10 5 0 5 10 15

0.1

0.2

0.3 Average of t drawsN(s=1.62)

1.0 1.5 2.0 2.5 3.0 3.5 4.0 4.5

0.5

1.0Average of N() draws

N(s=0.399)

1 2 3 4 5 6 7

0.25

0.50

0.75Average of t draws

N(s=0.573)

2.50 2.75 3.00 3.25 3.50

1

2

3Average of N() draws

N(s=0.125)

2.00 2.25 2.50 2.75 3.00 3.25 3.50 3.75

1

2

Average of t drawsN(s=0.181)

Figure 1.8: Histogram of 10,000 simulations of a sample average of n shifted normal (left panel)or student t with 4 degrees of freedom (right panel) together with fitted normal curve. Top hasn = 3, n = 25 and n = 250. Code: sim mean.

Proof. Difficult. See, for example, Gallant (1997, p. 132).

The above theorem is remarkable, even if the variance does not exist the sample averageconverges to the expected value. Notice it implies

1

n

ni=1

g(Xi)p E(g(Xi)),

so long as E(g(Xi))

-

1.5. ASYMPTOTIC APPROXIMATION 53

15 10 5 0 5 10 15 20

0.05

0.10

0.15 n = 10

2.5 0.0 2.5 5.0 7.5 10.0

0.2

0.4n = 100

0 1 2 3 4 5 6

0.25

0.50

0.75n = 1,000

1.5 2.0 2.5 3.0 3.5 4.0 4.5

0.5

1.0n = 10,000

2.00 2.25 2.50 2.75 3.00 3.25 3.50 3.75

1

2

3

n = 100,000

2.8 3.0 3.2 3.4 3.6

2.5

5.0

7.5n = 1,000,000

Figure 1.9: Estimated density, using 5,000 simulations, of the sample average of n shiftedand scaled student t with 1.5 degrees of freedom. Sample size is given on the graphs. Code:sim var infinity.

1.5.3 Some payback

Then the most important rules are

If A p a , then g(A) p g (a) where g(.) is a continuous function.Example 1.40 Suppose Xi iid,E(Xi) 6= 0, Var (Xi) exist, then

1

n

ni=1

Xip E(Xi),

which implies1

1n

ni=1Xi

p 1E(Xi)

.

IfA

p a, B p b,then

g(A)h(B)p g(a)h(b)

Example 1.41 Suppose Yi iid,E(Yi),Var (Yi) exist. then(1

n

ni=1

Xi

)(1

n

ni=1

Yi

)p E(Xi)E(Yi).

-

54 CHAPTER 1. PROBABILITY

1.5.4 Some more theory (first part examinable)

Refined measure of convergence (examinable)

Convergence almost surely or in probability is quite a rough measure for it says thatXn X implodes to zero with large values of n. It does not indicate at what rate thisconvergence will be achieved nor give any distributional shape to Xn X. In order toimprove our understanding we need to have a concept called convergence in distribution.

Definition 1.4 (Convergence in distribution) The sequence X1, X2, . . . of random vari-ables is said to converge in distribution to the random variable X if

FXn(x) FX (x) . (1.21)A shorthand notation is

Xnd X. (1.22)

Important result in this context is Slutskys results:

Suppose Xn d X and Yn P . Then XnYn d X and Xn/Yn d X/ if 6= 0.

Suppose Xn d X and Yn P . Let be a continuous mapping. Then (Xn, Yn) d (X,) .

A proof of this result can be found in Gallant.

Example 1.42 Suppose X1, ..., Xn are i.i.d. normal with mean and variance 2. Then

n(X )

N(0, 1).

Likewise

2 =1

n

ni=1

(Xi X

)2=

1

n

ni=1

X2i X2 a.s. 2.

Then by Slutsky theorem n(X )

d N (0, 1) .

In order to use asymptotic theory we have some generic tools central limit theorems.The most famous of these is the Lindeberg-Levy central limit theorem. This is a veryimportant result.

Theorem 1.3 (Lindeberg-Levy) Let X1, X2, . . . be independent, identically distributedrandom variables, so that EXi = , Var (Xi) =

2. Set

X = (X1 + +Xn)/n.Then

n(X ) d N (0, 2) .

-

1.5. ASYMPTOTIC APPROXIMATION 55

2.5 0.0 2.5 5.0 7.5 10.0

0.2

0.4Average of 21 draws

N(s=1.4)

15 10 5 0 5

0.1

0.2

0.3 Log of average of 2

1 drawsN(s=1.68)

2.5 0.0 2.5 5.0 7.5 10.0

0.1

0.2

0.3Average of 21 draws

N(s=1.42)

10.0 7.5 5.0 2.5 0.0 2.5 5.0

0.1

0.2

0.3 Log of average of 2

1 drawsN(s=1.49)

5.0 2.5 0.0 2.5 5.0 7.5

0.1

0.2

0.3 Average of 2

1 drawsN(s=1.41)

7.5 5.0 2.5 0.0 2.5 5.0

0.1