Item analysis

Uploaded by melanio-florino


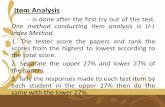

Item Analysis

How do we evaluate our test?

A glimpse of our practices

When do we evaluate our test?

What is a test?

An objective measure of a sample of behavior or psychological object (Anastasi & Urbina, 1997).

A systematic procedure for measuring a sample of behavior by posing a set of questions in a uniform manner (Gronlund & Linn, 2000).

Tests are only tools…

QUALITIES OF A GOOD TEST

To be a “GOOD” test, a test ought to have validity, reliability, and accuracy.

The Error Components of a Test

Random Error
◦ Sources: Fatigue, Cheating, Guessing, etc.

Systematic Error
◦ Sources: Item bias, Technical errors, Contextual clues, etc.

True Score = Observed Score − Error

Important Notes from the Classical Test Theory

Systematic errors lead to poor test reliability and validity.

Interpretations of test results may be distorted by too many errors.

Random errors are more difficult to control than systematic errors.

Systematic errors can be controlled by systematic assessment (Item Analysis).

How do we evaluate our tests?

◦ QUALITATIVE EVALUATION: Systematic inspection of test plan, tasks, and format

◦ QUANTITATIVE EVALUATION: Psychometric techniques in item analysis

A Systematic Inspection of Tests

Adequacy of Assessment or Test Plan

Adequacy of Assessment Task

Adequacy of Test Format and Directions

QUANTITATIVE EVALUATION

- determining the psychometric characteristics of the test using ITEM ANALYSIS

Psychometric Techniques for Item Analysis

Test / Item Statistics - These are psychometric techniques generally based on a norm-referenced perspective (Method of extreme groups).
◦ Item Difficulty
◦ Item Discrimination Power
◦ Effectiveness of Distracters

The Method of Extreme Groups

Selecting criterion groups (Upper & Lower Criterion Groups)

◦ If the sample has fewer than 50 examinees: Use 50% groupings

◦ If the sample has more than 50 examinees, and for a more refined analysis: Use upper & lower 27% groupings

◦ Set aside the papers which will not be used in the analysis.
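The grouping rule above can be sketched in Python. This is only an illustration: the function name `criterion_groups`, its parameters, and the decision to treat a sample of exactly 50 like a larger sample are assumptions, since the slides do not cover that boundary case.

```python
def criterion_groups(total_scores, fraction=0.27, small_sample=50):
    """Split examinees into upper and lower criterion groups.

    total_scores maps an examinee id to that examinee's total test score.
    Assumption: samples of exactly `small_sample` use the 27% rule.
    """
    # Rank examinees from highest to lowest total score.
    ranked = sorted(total_scores, key=total_scores.get, reverse=True)
    n = len(ranked)
    # Small samples: halves (50% groupings). Larger samples: top/bottom 27%.
    size = n // 2 if n < small_sample else round(n * fraction)
    upper = ranked[:size]
    lower = ranked[n - size:]
    # Papers in neither group are set aside and not used in the analysis.
    return upper, lower


# Hypothetical data: 100 examinees with scores 0..99.
scores = {f"s{i}": i for i in range(100)}
upper, lower = criterion_groups(scores)
print(len(upper), len(lower))
```

With 100 examinees, each group holds 27 papers and the middle 46 are set aside.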

The Method of Extreme Groups

Determine item statistics
◦ Item Difficulty
◦ Item Discrimination
◦ Effectiveness of distracters

Determine test statistics
◦ Solve for the mean (average) of the difficulty and discrimination indices of all the items in the test.

What is item difficulty?

Item difficulty is simply the percentage of students taking the test who answered the item correctly. It is represented by the p index.

Range: 0.0 to 1.0 or 0% to 100%

It can be computed using the formula below

$$p = \frac{U_R + L_R}{N}$$

◦ Some important notations

U_R = No. of students from the UPPER criterion group who answered the item correctly or chose the particular option under analysis.

L_R = No. of students from the LOWER criterion group who answered the item correctly or chose the particular option under analysis.

N = No. of students who tried to answer the item
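A minimal sketch of the p index under the notation above. The function and argument names are illustrative, and the counts in the example are made up.

```python
def item_difficulty(upper_correct, lower_correct, n_attempted):
    """p index: proportion of the criterion groups answering correctly.

    upper_correct: correct answers in the upper group (UR)
    lower_correct: correct answers in the lower group (LR)
    n_attempted:   students in both groups who tried the item (N)
    """
    if n_attempted <= 0:
        raise ValueError("n_attempted must be positive")
    return (upper_correct + lower_correct) / n_attempted


# Hypothetical item: 20 of 27 upper and 10 of 27 lower students correct.
p = item_difficulty(20, 10, 54)
print(f"p = {p:.2f}")
```

For these counts p is 30/54, or about 0.56, an item of moderate difficulty.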

Rule of Thumb: p-index

The nearer the p value is to 0.0 (0%), the more DIFFICULT the item becomes.

The nearer the p value is to 1.0 (100%), the EASIER the item becomes.

How difficult should our test/item be?

For an objective item test, the ideal difficulty would be halfway between the percentage of pure guess and 100% (Thompson & Levitov, 1985).
◦ Ex. p = 0.63 for a multiple choice test with 4 options.

Eclectic distribution of difficult, average and easy items, with extremely limited use of items having p = 0.9 or more (Frary, 1995)
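The "halfway between pure guess and 100%" rule can be worked through in a few lines. The function name `ideal_difficulty` is an assumption, as is treating pure guessing as a 1-in-k chance for an item with k options.

```python
def ideal_difficulty(n_options):
    """Midpoint between the chance-level score and a perfect score."""
    chance = 1 / n_options  # probability of a correct pure guess
    return (chance + 1.0) / 2


# Four options: chance is 0.25, so the midpoint is 0.625.
print(ideal_difficulty(4))
```

For four options this gives 0.625, which the example above reports as 0.63. A true/false item (two options) would give 0.75 under the same rule.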

How important is item difficulty?

An item with p = 0.0 or p = 1.0 does not in any way contribute to measuring individual differences, and seriously affects test validity.

Item difficulty has a profound effect on the variability of test scores and the precision with which the test discriminates between achievement groups (Thorndike, et al., 1991).

What is item discrimination?

It is the ability of an item to discriminate between students with high and low achievement. It is represented by Di.

Range: -1.0 to 1.0 or -100% to 100%

It can be computed using the formula below

$$D_i = \frac{U_R - L_R}{0.5N}$$
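A short sketch of the discrimination index, using the same UR, LR and N notation as the difficulty formula. The function name and the counts are illustrative only.

```python
def item_discrimination(upper_correct, lower_correct, n_attempted):
    """Di: difference between upper and lower group success,
    scaled by the size of one criterion group (0.5 * N)."""
    if n_attempted <= 0:
        raise ValueError("n_attempted must be positive")
    return (upper_correct - lower_correct) / (0.5 * n_attempted)


# Hypothetical item: 20 of 27 upper and 10 of 27 lower students correct.
d = item_discrimination(20, 10, 54)
print(f"Di = {d:.2f}")
```

Here Di is 10/27, or about 0.37. Swapping the two counts gives about -0.37, a negatively discriminating item.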

Rule of Thumb: Di-index

The higher the discrimination index, the better the item becomes. A high value indicates that the item favors the upper achievement group, whom we expect to answer more of the test items correctly.

Why should our items discriminate?

Items that do not discriminate can seriously affect the validity of the test.

Negatively discriminating items are useless and tend to decrease the validity of the test. (Wood, 1960)

How do we evaluate the effectiveness of distracters?

Solve for Di of each wrong option (applicable only for multiple choice items)

Rule of thumb: The more negative the Di index, the more effective the distracter
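The distracter check can be sketched by applying the Di formula to each wrong option. The function name, the dictionary layout, and the response counts below are all hypothetical.

```python
def distracter_indices(upper_counts, lower_counts, key, n_attempted):
    """Di for every wrong option of a multiple choice item.

    upper_counts / lower_counts map an option label to how many students
    in that criterion group chose it; `key` is the correct option.
    """
    half = 0.5 * n_attempted
    options = sorted(set(upper_counts) | set(lower_counts))
    return {
        opt: (upper_counts.get(opt, 0) - lower_counts.get(opt, 0)) / half
        for opt in options
        if opt != key
    }


# Hypothetical item keyed "A", with 27 students in each group.
upper = {"A": 20, "B": 3, "C": 4, "D": 0}
lower = {"A": 10, "B": 9, "C": 4, "D": 4}
for option, d in distracter_indices(upper, lower, "A", 54).items():
    print(option, round(d, 2))
```

In this made-up item, option B draws mostly lower-group students (negative Di), while option C attracts both groups equally (Di of 0) and would be a candidate for revision.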

Workshop 2

Systematic inspection of test items, tasks and format

Item analysis using Microsoft Excel® and STATISTICA 6.0 or SPSS

Some points of caution in interpreting item/test statistics

A low index of discriminating power does NOT necessarily indicate a defective item.

◦ Non-technical factors which contribute to item discriminating power

Emphasis given to a domain or content covered by a test.

Homogeneity and characteristics of student groups

Difficulty of an item

Some points of caution in interpreting item/test statistics

Item analysis data from small samples are highly tentative and tend to fluctuate because of their norm-referenced perspective.

A test that has undergone psychometric analysis is NOT necessarily a STANDARDIZED test.

What should we do after item analysis?

Further analysis with other psychometric methods
◦ Ex. Item bias analysis, Point-biserial and biserial correlations

Item Calibration
◦ IRT and Rasch Scaling

Item banking
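As one illustration of the follow-up methods listed above, the point-biserial correlation between a single item (scored 1 or 0) and the total score can be sketched as follows. This uses the standard textbook formula with the population standard deviation. It is not taken from the slides, and the function name and data are hypothetical.

```python
from math import sqrt
from statistics import mean, pstdev


def point_biserial(item_correct, total_scores):
    """Point-biserial correlation between a 0/1 item and total scores."""
    right = [t for c, t in zip(item_correct, total_scores) if c]
    wrong = [t for c, t in zip(item_correct, total_scores) if not c]
    if not right or not wrong:
        raise ValueError("item must have both correct and incorrect answers")
    sd = pstdev(total_scores)  # population standard deviation
    if sd == 0:
        raise ValueError("total scores must vary")
    p = len(right) / len(total_scores)  # proportion answering correctly
    return (mean(right) - mean(wrong)) / sd * sqrt(p * (1 - p))


# Hypothetical data: four students, the two highest scorers got the item right.
r = point_biserial([1, 1, 0, 0], [4, 3, 2, 1])
print(round(r, 3))
```

Unlike the extreme-groups Di, this statistic uses every examinee's paper, not only the upper and lower criterion groups.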

Practical Implications of Evaluating Tests

It helps prevent wastage of the time and effort that went into test and assessment preparation.

It provides a basis for the general improvement of classroom instruction.

It provides a venue for teachers to develop their test construction skills.