IQ - Innovation Quest Big Data Issue Fall 2015


IQ INNOVATION QUEST

McMaster University’s Research Newsmagazine, Fall 2015

Big Data

IN THIS ISSUE: Cracking the code for predictive analytics


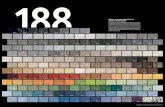

On the cover: Abigail Payne, director of MacDATA and professor of economics


Data for the stars – Christine Wilson

Gene machine – Guillaume Paré

Battling bacteria with big data – Andrew McArthur

McMaster launches MacDATA – Abigail Payne

Global reach, lasting impact – Salim Yusuf

Innovative infrastructure – Ranil Sonnadara

The A-Team – Paul McNicholas

Brain buzz – Allison Sekuler

Quality counts – Fei Chiang

Driving with data – Mark Lawford

Supply chain success – Elkafi Hassini

McMaster’s Research Data Centre – Byron Spencer

Mining for data – Victor Kuperman

INQUIRE

IQ is published by the Office of the Vice-President, Research

Editor Lori Dillon

Contributors Andrew Baulcomb, Sherry Cecil, Danelle D’Alvise, Maria Lee Fook, Meredith MacLeod

Design Nadia DiTraglia

Photography Ron Scheffler

Please forward inquiries to:

IQ, Office of the Vice-President, Research, Gilmour Hall 208, McMaster University, 1280 Main Street West, Hamilton ON CANADA L8S 4L8

(905) 525-9140 ext. 27002 | [email protected] | research.mcmaster.ca

Printed in Canada 10/2015

Collecting, analyzing, anonymizing, and sharing big data in its most secure state requires big thinkers. Trust me when I tell you, at McMaster, there is no shortage of big thinkers.

This issue of IQ is focused on ‘Big Data’ and, more precisely, the incredibly talented researchers at McMaster who, along with their research teams – including undergraduate students, graduate students, and postdoctoral fellows – are making amazing strides to ensure that the right data are reaching the right people at the right time.

You see, at McMaster, researchers in every discipline, in every corner of our campus, are working with enormous sets of data to improve the lives of Canadians – whether through combatting infectious disease, performing clinical trials, imaging brains, interpreting cultures, discovering stars, or ensuring the right infrastructure is in place to support our research community.

Although their work varies, all of our researchers share a common bond. They know the true value of their work is measured not by output, but by outcomes – how their work is adding to Canada’s, indeed the world’s, economic, social and cultural prosperity. With the creation of our new MacDATA Institute, and our existing support for research computing led by the Research and High-Performance Computing team, previously disparate groups will be working together to achieve even greater goals.

Take some time away from the seemingly never-ending deluge of data that overwhelms each and every one of us, day after day. Instead, sit back, relax, and discover how McMaster researchers are working with big data to improve the way we all live, work and play.

Enjoy the read.

Allison Sekuler

Acting Vice-President, Research

(and a big fan of big data)

HOT TOPICS


Data for the stars

CHRISTINE WILSON

Christine Wilson, Fellow of the Royal Society of Canada, with ALMA antenna dishes in background

The vast amount of astronomical data being collected by high-powered telescopes presents both a challenge and an opportunity for scientists studying our universe.

The challenge is that the data is outpacing the ability of high-end desktop computers to download and process it. But the opportunities to answer questions about how stars are formed are boundless, says Christine Wilson, professor of physics and astronomy.

From 1999 to 2014, Wilson was the Canadian Project Scientist for the massive Atacama Large Millimeter/submillimeter Array (ALMA), the world’s most sophisticated ground-based radio telescope, perched high in the Andes in Chile.

While getting viewing time on the telescope is difficult because of the large demand, Wilson is beginning to crunch the data retrieved by other scientists to aid her own research.

“One of the things that is very cool about the new telescope is that archives of the data will be publicly available after just one year… We’ll be able to synthesize the data gathered by others and look at it in new ways.”

There is about a year’s worth of ALMA data in the public domain now, which Wilson expects will triple over the next year. She spent a 2013–2014 sabbatical beginning her archival project at ALMA’s international headquarters in Santiago and the North American headquarters in Charlottesville, Virginia.

Though the launch of the telescope was hampered by power system troubles in 2013, Wilson was able to help with some of ALMA’s commissioning and calibration. She has also done research at the Submillimeter Array operated by the Smithsonian Observatory in Hilo, Hawaii, and was part of the team that built SPIRE, one of three instruments of the Herschel Space Observatory, which operated from 2009 to 2013.

Wilson, who discovered Comet Wilson while she was a grad student at Caltech, is primarily interested in the formation of stars in nearby galaxies. (Galaxies that are 500 million light years away qualify as “nearby.”)

She looks at the properties of gas in the interstellar medium and giant molecular clouds to understand what is necessary to create stars.

“Why are some galaxies forming stars much faster than others? What are the properties of the gas that mean star formation catches fire? ALMA gives us a bird’s eye view of it.”

ALMA produces much sharper images than were possible before, allowing scientists to see previously unseen detail.

“Putting all the datasets together from the archival data has got to lead us someplace, but I’m just starting out, so I don’t know where that is yet.”

While in grad school, Wilson made only the second sighting of a giant molecular cloud in one local galaxy and has been hooked on her branch of inquiry, radio astronomy, ever since.

Using radio frequencies allows scientists to probe the dark regions of space where galaxy collisions trigger intense bursts of star formation, as well as to explore the quieter star formation process that is the norm in galaxies like our own Milky Way.

Astronomers use information gathered from high-resolution images of nearby galaxies to deduce what is happening in galaxies much farther away.

Understanding the formation of stars is a crucial underpinning to understanding everything else in the universe, says Wilson. There is plenty to learn, with about 96 per cent of the universe unknown.

Wilson, who grew up in Toronto, came to McMaster by way of a Women’s Faculty Award from the Natural Sciences and Engineering Research Council in 1992. She also received a Premier’s Research Excellence Award from the Ontario government in 1999 and was elected to the Royal Society of Canada in 2014.

The large amounts of data being produced by modern, high-powered telescopes mean Wilson and her students may have to take advantage of SHARCNET, the network of high-performance computers shared by 18 universities and colleges, including McMaster.

The Research and High-Performance Computing Support group has also been invaluable to her work, she says.

“Having that kind of support means that I don’t hit roadblocks in my work. The computer support at McMaster is the best I’ve ever seen anywhere.”

Gene machine

GUILLAUME PARÉ

Dr. Guillaume Paré, Canada Research Chair in Genetic and Molecular Epidemiology

Guillaume Paré would be the first to admit that he wears many hats.

A leading genomics scientist and epidemiologist – his genetic and molecular epidemiology lab is one of only a few such labs in Canada – he is also a clinician who is using cutting-edge genomic techniques in the war against stroke.

“Stroke used to be a disease of the elderly,” says the associate professor of pathology and molecular medicine, who holds a joint appointment in clinical epidemiology and biostatistics. “Today we’re seeing a growing number of Canadians having strokes in their 50s and 60s.”

Armed with a Canada Research Chair in Genetic and Molecular Epidemiology, Paré is working to decipher the genetic architecture of strokes and better identify people who are at risk.

By analyzing biomarkers (biological molecules found in blood), he discovered that some people carry a gene that makes their blood more likely to clot and interrupt blood flow to the brain.

“Our findings show that young stroke victims – or about 60,000 Canadians – have genetic mutations that are related to cardiovascular disease. If we can identify those who have such mutations, we can start targeting them with drug therapies to reduce their risk.”

Developing predictive and therapeutic technologies is not easy. It requires integrating vast amounts of data from biomedical research, clinical trials and patient feedback. Paré, who is also an associate director of MacDATA, is one of the few physician-scientists in Canada with the expertise to tackle this new and evolving area of research, called pharmacogenetics. And he’s getting some help.

In a move that could transform the way academic research is conducted in Canada and throughout the world, Cisco Canada has committed $1.6 million to McMaster to establish a university-wide research cloud computing environment and infrastructure.

Paré was named Cisco Professor in Integrated Health Biosystems to oversee the project, which will capture the value of the exponentially increasing volumes of data generated by McMaster researchers.

The simple cloud-based infrastructure will replace the silo approach that typically exists in universities, where data is created and accessed only by the original research team and seldom shared across campus. It will allow researchers at McMaster to share data and collaborate more effectively across the university, with other institutions and with industry, to ensure novel approaches and applications make their way to the wider world.

And, says Paré, to the bedside. “We have accelerated the pace of discovery in genetics. DNA sequencing is becoming more accessible and affordable every day. Tackling big data problems is the last hurdle that stands in the way of us using genomics tools in the lab to inform patient care.”

He has already made significant progress, discovering new genetic markers for an anticoagulant that can decrease bleeds by 30 per cent, and another genetic variant that boosts the effects of aspirin in decreasing the risk of stroke.

These breakthroughs are just the tip of the iceberg, says Paré. “Discovery of a gene linked to familial high cholesterol in the blood has led to the development of new drugs that could prevent heart disease within 10 years. If we can repeat this feat through the discovery of yet unknown genes, we might well make cardiovascular disease a thing of the past.”

Battling bacteria with big data

ANDREW McARTHUR

Andrew McArthur holds the inaugural Cisco Research Chair in Bioinformatics

It’s possible that one day patients with a bacterial infection will be able to walk into a hospital or clinic, have the bacterium’s genome sequenced on site and walk out the same day with a drug custom-designed to fight that bacterial strain.

That’s the kind of world Andrew McArthur is working towards. He’s the inaugural Cisco Research Chair in Bioinformatics and an associate professor of biochemistry and biomedical sciences.

His work focuses on building a world of big data to battle antibiotic-resistant infection. “It’s a global crisis and it’s getting worse by the week.”

The World Health Organization has identified resistance to antibiotics as one of the top three challenges to human health. The U.S. Centers for Disease Control and Prevention estimates that more than 2 million people in the United States are sickened every year with antibiotic-resistant infections and at least 23,000 die. Britain estimates that by 2050, there will be a global drop in GDP of three to four per cent because of drug resistance.

The emergence of “super-bugs” that are resistant to most, if not all, available antibiotics has resulted in a growing health crisis. All of this has happened much more quickly than anyone anticipated, says McArthur. Decades of poor antibiotic use accelerated the development of resistance.

“We are at risk of re-entering a pre-antibiotic world where we can’t fight many infections.”

Some strains of gonorrhea are now 100 per cent untreatable, fears are rising about drug-resistant tuberculosis, and some hospitals worry about losing the ability to perform hip replacements because they can’t contain secondary infections, says McArthur. Physicians have to play a guessing game about what drugs to prescribe and, ultimately, may have nothing that will work.

Yet DNA sequencing can now be done faster and more cheaply than ever before. Ultimately, that testing could be done at hospitals and clinics, rather than at centralized labs.

What is lacking is the routine, continuous and complete collection of data around drug resistance: to help clinicians choose treatment plans, to give public health officials the ability to monitor the emergence of resistance and to detect and track outbreaks, and to guide the development of new drugs to fight them.

“The tools are there but we’ve been slow to create a big data environment.”

So McArthur and his team are writing software and algorithms to collect, analyze and aggregate potentially millions of data points a day from front-line DNA sequencers.

That work is critical to the development of new, targeted antibiotics, an expensive and risky venture but a crucial one, because the ability of pathogens to mutate has destroyed the effectiveness of broad-spectrum drugs.

McArthur says governments worldwide have made tackling antibiotic resistance a priority. The British government has even put the threat on the same scale as global terrorism. That means more funding is being channelled into the problem than ever before.

Creating the big data environment to take on the crisis will require academics, health-care professionals, governments, public health authorities, pharmaceutical companies and the information technology sector to work together.

Before coming to McMaster, McArthur owned a bioinformatics company and consulted and collaborated with researchers in academia and government, including several investigators at McMaster. That work included a four-year project with Gerry Wright, Canada Research Chair in Antibiotic Biochemistry at the Michael G. DeGroote Institute for Infectious Disease Research, to build the Comprehensive Antibiotic Resistance Database (CARD) with a consortium of academic and government researchers in Canada and the United Kingdom.

McArthur says he was enticed to take the Cisco chair a year ago because McMaster has a long and pioneering history of evidence-based medicine.

“The best data means making the best decisions. We need a big data solution to this crisis.”

These are early days in the big data revolution, especially in the medical field, and there is much to learn, says McArthur. Then there are the ethical and legal challenges of collecting and sharing health-care information; and to be effective, infection data must be shared globally.

It’s no easy task, says McArthur, but the consequences of failure are grave. “Bacteria are tricky little things that can outsmart us. That’s why we have resistance. …But there is real hope we have a chance now.”

IQ feature story

MacDATA

ABIGAIL PAYNE

Abigail Payne already had big ideas about big data when she was appointed director of MacDATA – McMaster’s newly created big data institute.

The one-time lawyer-turned-economist has been in the data business for more than two decades, working with local educators, governments and charities, analyzing their administrative and proprietary data to understand how the services they provide affect the communities in which they operate.

And now she’s keen to put that experience to work as the founding director of the multidisciplinary institute.

“Most universities take a narrow view of big data, confining their research initiatives and training programs to a single faculty, typically business, science or engineering,” says Payne, who for the past 13 years has headed PEDAL – the Public Economics Data Analysis Laboratory – a secure data laboratory based at McMaster that transforms administrative and proprietary data to study policy-relevant issues.

“But researchers in every faculty touch data, technology, and/or the tools needed to work with data. Each discipline brings an insight and an expertise, yet they face the same issues around creation, collection, processing, storage and analysis. We need to find synergies that allow us to work together and learn from each other.”

The result is a big data initiative that is uniquely McMaster: a collaborative, cross-disciplinary approach that values innovation and is focused on outcomes. There will be no big data facility, and leadership of the institute will be shared with two associate directors, currently one from engineering and one from health sciences.

“I like to describe MacDATA as an institute that hovers,” says Payne. “We still want to encourage individuals to do their own thing but, at the same time, we want to get them talking to each other, sharing information and feeding off each other’s work in ways that will elevate everyone’s research.”

Moreover, she notes, while so many researchers embrace data in their work, MacDATA offers an opportunity to see the bigger picture. “MacDATA will showcase the insights that are being generated through the development and analysis of data, as well as our technological advances that permit the collection of data, to create a bigger picture of how things work in our society and how to better understand the issues individuals, organizations, and governments face.”

Having a social scientist lead the way is not a stretch, says Payne. “It’s not just about the data. It’s about how we’re using it. Is it just a lot of numbers or is it a meaningful indicator that can be used to improve decision making? Data scientists are really good on the tools, but their work can be complemented by researchers who want to answer questions that the tools will help address.” Similarly, she says, researchers who ask questions benefit from understanding what tools are available to support, and even speed up, their research.

For Payne, who specializes in developing high-quality research data for projects that address key public sector issues, that means improving educational outcomes, helping charities deliver better results, and strengthening communities. “But,” she acknowledges, “big data means many things across the university – it could be producing a safer car, delivering better medicine, improving transportation, or predicting the success of a business tool.”

It’s an approach that’s in lockstep, she says, with where universities should be heading. “Historically, universities were at the centre of the universe in data collection. Today, data are being created everywhere by everyone. Some of that data, with the right tools and using careful analysis, can be used to enhance our understanding of the world. So the question we, as a university, must ask is: what is our role and how do we give value?”

MacDATA researchers have several things in mind. One is to partner with businesses, nonprofits and governments to develop new tools for data cleaning, data linking and data analysis that fuel innovation and advances in industry, science, policy development and public services. Another is to provide McMaster students, researchers and practitioners with the skills they need to traverse the big data terrain, now and well into the future.

True to her background, Payne is determined to ensure students from every faculty and discipline have the opportunity to receive training in the acquisition, storage, processing, analysis and use of large data sets.

Just as data scientists can learn from social and health scientists and others across the disciplines, those researchers can learn from data scientists, says Payne, adding that equally important is “our collective understanding of the philosophical and ethical underpinnings about how we collect and use data.”

One of the institute’s challenges will be to engage the University in considering how we think about data security and privacy. It’s a challenge Payne has years of experience managing as director of PEDAL, which has just received funding from the Canada Foundation for Innovation to upgrade to a high-security facility with enhanced technology and safer administrative protocols.

In an era when pop-up ads for products you’ve just googled haunt your computer, credit cards record your purchase habits, and mobile phones track your every move, it’s not easy to remain anonymous.

Pregnant teens found out the hard way in 2002 when Target launched a coupon campaign based on a “pregnancy prediction algorithm” that tracked their purchases of baby products. And many a marriage is now on the rocks thanks to the poorly protected passwords of clients of Ashley Madison’s cheating website.

“Big data has had a lot of bad press,” confesses Payne. Does that mean we should not pursue the use of data for research and innovation? “Absolutely not,” she says. “Instead we should consider how best to provide a spectrum of security when working with sensitive or proprietary data, and how we ensure that everyone across the institution is engaging in best practices around the handling of data.”

One solution, which McMaster researchers are now working on, is to use machine learning algorithms that would raise a red flag whenever security is compromised. Another is to think more carefully about how we structure the data used in analyses. “Every minute, a billion bits of data are being created. But to answer certain research questions, we may need only a million bits,” says Payne.

More important than the amount of data may be the availability of real-time data that companies and governments can use to make important decisions that affect their customers and citizens – an area where McMaster can play a critical role.

Payne points to the successful interactive data portal developed by the City of Edmonton, which publishes data on the city’s performance across a wide range of services. It’s a model – a citizen’s dashboard, if you will – that she’d like to see Hamilton implement.

“There are natural synergies between our research teams and our local community that would benefit from a similar type of dashboard,” she says, using the example of improving living conditions through data gained by examining homeless shelters. “Working together and sharing our data means we’ll accomplish more. Looking at single measures of data only tells part of the story, but connecting those data points will put us on the fast track to putting a plan in place to find solutions.”

Payne is excited about MacDATA’s approach, which is already resonating with industry, government and other organizations, and is keen to have both sides of the research enterprise at the same table.

She recalls being the only social scientist at a 2014 IBM conference for data scientists, and how she jumped to her feet when organizers pronounced they had “all the people we need right here in this room” to solve the issues around big data. “I said, no, you don’t. You have only half the people.”

MacDATA, she promises, will always have all of the right people in the room.

Global reach, lasting impact

SALIM YUSUF

Dr. Salim Yusuf, founder and executive director of PHRI

Photo courtesy PHRI

There is no universal definition of big data, but if there were one, the work of the Population Health Research Institute (PHRI) would certainly qualify.

For 23 years, the PHRI, founded as a collaboration of McMaster University and Hamilton Health Sciences, has been conducting worldwide studies of hundreds of thousands of people, combining clinical trials with population studies, to determine the factors that lead to the world’s most devastating diseases.

“We collect data from 50 to 60 countries every day and generate 15 million to 20 million pages of data every year,” said Dr. Salim Yusuf, founder and executive director of the institute and a professor in the Department of Medicine.

Altogether, PHRI studies have included more than 1 million people worldwide. That requires an IT group of 40 people to set up and manage the software and hardware; 28 biostatisticians to write extensive programs that check the data are valid, clean and accurate, and to analyse them; and about 35 principal investigators to design the studies and manage them with a team of project managers and coordinators.

The PHRI works with more than 1,500 sites around the world, with 30 projects currently in progress.

“No one institute can have all the expertise,” said Yusuf. “Without collaboration, our work would not exist. And that’s collaboration across borders, across specialities and across fields. The most important questions cross multiple areas.”

The PHRI was formed with an initial focus on cardiovascular disease and diabetes, but its research areas have broadened to include population genomics, perioperative medicine, stroke, thrombosis, cardiovascular surgery, renal disease, obesity, childhood obesity, nutrition, trauma and implementation science.

The institute employs about 320 researchers and staff, making it one of the largest health research institutes in Canada.

But measured by its more than 2,250 papers in high-impact journals and by number of citations, the PHRI is the Canadian research institute with the greatest global impact, says Yusuf, who was the second-most cited researcher in the world in 2011. That same year, the institute was ranked Number 7 in the world for the collective impact of its work.

Yusuf didn’t always imagine the institute would grow to where it is today.

“We just wanted to do good research. That led to better research, and then to even better research. Things have certainly snowballed,” said Yusuf, who was inducted into the Canadian Medical Hall of Fame and awarded the Canada Gairdner Wightman Award for leadership in medicine and medical science last year. He is also an Officer of the Order of Canada, a member of the Royal Society of Canada and current president of the World Heart Federation.

“Collectively, we have some of the best investigators in the world here who work as a great team.”

The institute examines the biological and genetic determinants of health, along with social, environmental and policy factors.

“To get precise estimates of the effects of a risk factor or that of any treatment, we need several thousand of each type of event and then relate them to specific exposures, whether that’s diet, activity or pollution.”

One of the largest studies conducted by PHRI, the Prospective Urban and Rural Epidemiological Study, includes 225,000 subjects from 25 countries and will track them over 10 years to determine the impact of urbanization on disease risk factors.

“That is one of the biggest studies in the world. We are linking it with satellite image data on air pollution and are then able to relate air quality to lung function, and rates of heart attack, lung disease or asthma.”

The institute’s trials, such as the Heart Outcomes Prevention Evaluation (HOPE), RE-LY and CURE, among others, have also advanced treatments of cardiovascular diseases, and studies such as INTERHEART and INTERSTROKE have advanced knowledge of the causes of heart attacks and strokes.

These studies will also have lasting impact, says Yusuf.

Innovative infrastructure

RANIL SONNADARA

Ranil Sonnadara heads up McMaster’s Research and High-Performance Computing Support team

McMaster University was a pioneer when it launched a technology incubator dedicated to supporting, training and collaborating with researchers needing software and hardware support.

The Research and High-Performance Computing Support (RHPCS) group is now 14 years old and has become a key facilitator of the uptake of advanced computing by McMaster’s research community.

Think of it as a 15-member technology SWAT team that can partner with researchers, teams, labs and departments in every faculty at any point in their project.

“We help people interface with technology to answer the questions they are exploring,” said RHPCS director Ranil Sonnadara. He says that is critical to a research-intensive university such as McMaster. “RHPCS has enabled research at McMaster to thrive.”

The team was formed when researchers from different faculties, who were pushing the boundaries of what was possible with computers at the time, needed specialized support. Research-focused systems analysts and programmers from the central information technology group, and from individual research groups across the campus, were gathered into a core team.

Some campus researchers know how to design the technology they need and simply need help with the execution, says Sonnadara. Others need help untangling how technology can help them with research methodology, data extraction, number crunching, signal processing, simulation, modelling and visualization.

Some research, such as modelling of the universe, requires powerful servers, large amounts of storage and extensive involvement of RHPCS staff. Other research requires fewer resources and less intensive support.

In some cases, RHPCS staff work with local technology support staff in research labs; in others, they take on the role of research staff directly.

Whatever the needs, RHPCS is able to customize a solution.

“We can create the infrastructure that allows data collection and analysis in a timely manner,” said Sonnadara, who came to McMaster to earn his PhD in experimental psychology and now holds academic appointments in the Departments of Surgery, and Psychology, Neuroscience and Behaviour.

That means having staff members who understand the hardware and software but can also speak the language of research. Sonnadara says his team is highly skilled and works hard to enhance McMaster’s reputation as a research-intensive university.

Some members of RHPCS work for SHARCNET (the Shared Hierarchical Academic Research Computing Network), a consortium of 18 universities that share a network of high-performance computers, of which McMaster was a founding partner.

The university has made digital infrastructure for research a high priority, said Sonnadara, who is a special advisor to McMaster’s Vice-President of Research, and serves as Vice-Chair of Compute Ontario,

– continued on next page

“RHPCS has enabled research at McMaster to thrive.”


RANIL SONNADARA


Statistician Paul McNicholas heads up what he thinks of as a campus A-Team. His group, the Computational Statistics Research Group, solves problems and tackles data that others can’t.

“We try to be the A-Team of computational statistics problems. We tackle the big problems where the data sets are very large and complex. We are looking for subgroups.”

The 21-member group of four postdoctoral fellows, eight PhD students, seven master’s students and two undergraduates is the biggest of its kind in Canada, says McNicholas, a professor in Mathematics and Statistics.

The group has funding support from the Natural Sciences and Engineering Research Council of Canada, the Government of Ontario, the Canada Foundation for Innovation, and an industry partner, Compusense Inc.

McNicholas, who held a faculty position at the University of Guelph from 2007 and served as director of the Bioinformatics programs there for two years, was drawn to McMaster in July 2014 by its culture of inter-disciplinary research.

“Mac is such a great place to be for a statistician,” he said.

“When I came to think about Mac and what makes it really attractive, it’s that research cuts across disciplines. That inter-disciplinary research is precious to a statistician. It gives access to incredible data.”

His advice to those striking out on their own in research is to say yes as often as possible, because collaborations are crucial and lead to great things.

So how does a statistician see big data? McNicholas says there are three V’s that make up big data. Volume means many variables; variety means those variables are of different types; and velocity means the data keeps coming.

“Big data sometimes means constant updating because data can keep coming. People think of big data as a lot of data but it’s more than that.”
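McNicholas’s point about velocity, that data can keep coming and so the analysis needs constant updating, can be illustrated with a summary that is revised as each new observation arrives rather than recomputed from the full data set. The sketch below uses Welford’s online algorithm for a running mean and variance. It is a generic textbook technique chosen for illustration, not a description of the Computational Statistics Research Group’s methods, and the class name `RunningStats` is hypothetical.

```python
class RunningStats:
    """Mean and sample variance updated one observation at a time (Welford's algorithm)."""

    def __init__(self) -> None:
        self.n = 0         # number of observations seen so far
        self.mean = 0.0    # running mean
        self._m2 = 0.0     # running sum of squared deviations from the mean

    def update(self, x: float) -> None:
        # Fold a newly arrived value into the summary without revisiting old data.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def variance(self) -> float:
        # Sample variance; undefined (NaN) for fewer than two observations.
        return self._m2 / (self.n - 1) if self.n > 1 else float("nan")


stats = RunningStats()
for value in [4.0, 7.0, 13.0, 16.0]:  # stand-in for a stream of incoming data
    stats.update(value)
print(stats.n, stats.mean, stats.variance)  # 4 10.0 30.0
```

Because each update touches only three stored numbers, the cost of absorbing a new value does not grow with the amount of data already seen, which is the property a high-velocity stream calls for.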

McNicholas was an organizer of an industrial problem-solving workshop at the Fields Institute in Toronto earlier this year that led to a collaboration to study data collected at Rugby Canada Women’s Sevens games.

The data were collected by GPS sensors worn on players’ backs, which recorded 84,000 data points per player per game, and will be used to study game strategies and training approaches.

McNicholas is collaborating with Ayesha Khan in the Department of Psychology, Neuroscience and Behaviour to study the impact of community engagement on undergraduate learning, and with Nathan Magarvey in the Department of Biochemistry and Biomedical Sciences, who researches drugs from nature.

He’s also collaborating with Stuart Phillips from the Department of Kinesiology on the genetic impact of exercise.

McNicholas is impressed by the programs run by McMaster’s School of Computational Science and Engineering in training specialists in computing and big data.

“They’re great programs. I haven’t seen others like them.”

McNicholas says one of Mac’s great resources is the Research and High-Performance Computing Support group, which helped him source the equipment needed for his lab and helped secure research funding.

“They’re magnificent. Without their presence, we couldn’t do what we do.”

PAUL McNICHOLAS

The A-Team


a not-for-profit corporation that supports advanced computing across the province.

RHPCS was crucial in setting up the initial infrastructure needed for the Canadian Longitudinal Study on Aging, a national project headquartered at McMaster Innovation Park. It was also a key player in launching the LIVELab research concert hall at the McMaster Institute for Music and the Mind.

Staff at RHPCS worked on the infrastructure design in L.R. Wilson Hall and have provided research support to many research groups, including the McMaster Automotive Resource Centre, the Public Economics Data Analysis Laboratory, the Digital Music Lab, the Collaboratory for Research on Urban Neighbourhoods and the Stem Cell and Cancer Research Institute.

RHPCS has been successful in facilitating research across campus by linking faculty members and staff who are working on similar problems or using similar technology, and by helping to leverage research grants.

“We support big projects, but often the most value we can provide is in helping individual researchers who are getting their teams off the ground,” said Sonnadara.

“Quite often the most innovative ideas can have bigger payoffs, but they can be less likely to succeed. By having the kind of infrastructure RHPCS provides available, research projects can be spun up quickly without requiring a large initial investment. This gives researchers more freedom because their risk is lower, and can enable them to quickly collect data that can be leveraged in grant applications.”

RHPCS was recognized by Orion’s ACTION report in 2014 as the “gold standard” in academic research computing support in Canada.

– continued from previous page

Innovative infrastructure

Paul McNicholas


Imaging the 100 billion neurons of the brain produces a lot of data all by itself. But with access to the thousands of users of apps designed to improve brain function, researchers have more data than they ever dreamed of, says Allison Sekuler, co-director of the Vision and Cognitive Neuroscience Lab at McMaster.

When the lab uses an EEG test to collect brain wave information, each patient is set up with 256 electrodes that can measure brain activity every millisecond for an hour or more.
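As a rough, back-of-the-envelope sketch of what those figures imply, the snippet below multiplies them out for a single one-hour session. It assumes that “every millisecond” means 1,000 readings per second per electrode and that each reading is stored as a 4-byte value; the storage format and any compression are not described in the article, so the byte figure is illustrative only.

```python
ELECTRODES = 256              # from the article
SAMPLES_PER_SECOND = 1_000    # "every millisecond" read as a 1 kHz sampling rate
SECONDS = 60 * 60             # one hour, the minimum session length mentioned
BYTES_PER_SAMPLE = 4          # assumption: each reading stored as a 32-bit float

samples = ELECTRODES * SAMPLES_PER_SECOND * SECONDS
size_gb = samples * BYTES_PER_SAMPLE / 1e9  # decimal gigabytes

print(f"{samples:,} samples, about {size_gb:.1f} GB per participant-hour")
```

Under those assumptions a single hour works out to 921,600,000 readings, or roughly 3.7 GB of raw data per participant, before any longer sessions are counted.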

But there is a practical limit to how many subjects can be measured in a lab, so typical studies report results from just 10 to 20 individuals.

To overcome that limit, the lab is collaborating with InteraXon, the Toronto-based developer of Muse, an EEG headband that measures the electrical activity of the brain to help users achieve better focus and lower stress levels.

Muse sends data collected through sensors in the headband to a smartphone app. The app then guides the user to shift to a more focused, less stressed state of mind.

Purchasers are asked whether the data collected by the device and stored on cloud servers can be used for brain research. About 68 per cent of users consent, so a first data set included more than 6,000 users, with repeated EEG tests over time.

“That gives us access to an incredibly large amount of brain data we couldn’t have imagined before,” said Sekuler, a professor of Psychology, Neuroscience and Behaviour and Acting Vice-President of Research at McMaster.

The numbers, which are expected to approach 25,000 unique users when the lab gets access to data for its next analyses, bring more clarity to the variability of aging, says Sekuler, whose husband Patrick Bennett is co-director of the lab and chair of the Department of Psychology, Neuroscience and Behaviour.

“Some people age quickly and some age much more slowly. When we are looking at 6,000 people instead of 20, there are so many more data points to fill in the missing parts. We may have hundreds of people in each age group, which allows us to see the trends so much more clearly.”

That includes the differences between the aging brains of men and women.

The goal is to map the changes in the brain and then determine whether there are ways to train it to offset the effects of aging.

The lab presented its first findings from the data at the Society for Neuroscience annual meeting in mid-October. That round of research was funded by a Natural Sciences and Engineering Research Council Engage grant.

Future research will include documenting whether mindfulness and cognitive training have long-term effects beyond narrow tasks. “We’ll be looking at the neuro-markers to predict who is benefiting and who isn’t and assessing how much the brain has improved.”

Sekuler sees tremendous opportunities to piggyback scientific research on data generated by apps or devices. She points to an iPhone game in which users scan airport baggage for contraband, a task that becomes gradually more challenging.

A colleague studying attention and learning has access to the playing data of hundreds of thousands of users.

Smart cars will collect untold numbers of data points that can feed studies of distracted driving or the impacts of aging.

Graeme Moffat, director of scientific affairs at InteraXon, says collaboration with McMaster researchers is crucial to the company, which launched in 2009 and released its first iteration of Muse in August 2014.

“Neuroscience at McMaster is very highly regarded in Canada and around the world. It’s impossible for us to do this kind of work in-house,” he said.

InteraXon is seeking more research grants to continue its partnership with the neuroscience lab and other scientists at McMaster. Moffat predicts that the neuro-wearable space will expand rapidly and bring benefits in treating a range of disorders, including attention deficit, depression, dementia, post-traumatic stress and anxiety.

The collaboration has convinced the company to create the infrastructure to give researchers continuous access to ever-growing data streams.

“We can’t make the most of the data,” said Moffat. “No one knows the limits of what can be done with it.”

ALLISON SEKULER

Brain buzz

Research assistant Sarah Batiste instructs a senior research participant on using the Muse band

Allison Sekuler

Photo: Donna Waxman


How is it that your financial institution, cell phone company or local cable provider knows everything about you, from your postal code to your mother’s maiden name, but can’t figure out how to correct extra charges on your monthly statement without bouncing you from one department to another or putting you on hold for an interminable amount of time?

It’s an all-too-familiar scenario that underscores one of the challenges of big data that extends beyond volume, velocity and variety.

For Fei Chiang, the challenge is ensuring quality over quantity, focussing on the veracity and value of the data: whether it is accurate, consistent and timely.

“Many companies simply aren’t sharing the data they’ve collected on their customers,” says Chiang, associate director of the MacDATA Research Institute. “If updates aren’t done across departments, then the department that knows the details about your service plan won’t know that you’ve moved, or perhaps changed the PIN on your account.”

For the computing and software professor, an easy solution is a central repository that keeps everyone in sync with the client’s most current data. However, this is not always feasible in practice because of synchronization and concurrency issues.
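Chiang’s example, in which one department knows a customer has moved and another does not, is a classic consistency problem. The sketch below shows one simple check of the kind data-cleaning tools perform: it compares two departmental copies of the same customer records and flags every shared field whose values disagree. The function name, field names and records are hypothetical, and this is not the Data Science Research Lab’s algorithm, which the article does not describe.

```python
from typing import Dict, List, Tuple

Record = Dict[str, str]


def find_conflicts(
    billing: Dict[str, Record], service: Dict[str, Record]
) -> List[Tuple[str, str, str, str]]:
    """Return (customer_id, field, billing_value, service_value) for every field
    that both copies hold for the same customer but with different values."""
    conflicts = []
    for customer_id in sorted(billing.keys() & service.keys()):
        b, s = billing[customer_id], service[customer_id]
        for field in sorted(b.keys() & s.keys()):
            if b[field] != s[field]:
                conflicts.append((customer_id, field, b[field], s[field]))
    return conflicts


# Hypothetical records: billing was told about the move, the service desk was not.
billing = {"C001": {"postal_code": "L8P 1A1", "plan": "basic"}}
service = {"C001": {"postal_code": "M5V 1A1", "plan": "basic"}}

for conflict in find_conflicts(billing, service):
    print(conflict)  # ('C001', 'postal_code', 'L8P 1A1', 'M5V 1A1')
```

Flagging the conflict is only the first step; deciding which value is current (for example, by trusting the most recently updated copy) is the harder repair problem the article alludes to.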

Chiang and her research group focus on developing the techniques and tools that can repair, clean, validate and safeguard the massive datasets generated by telecommunications corporations, financial institutions, health care systems and industry. Their work ensures that high-quality data is produced, which in turn supports valuable insights and real-time decision making, right down to resolving the issue with your cell phone provider in one short, efficient phone call.

Chiang’s Data Science Research Lab is involved in a number of projects, one of which is a collaboration with IBM Canada. Her research team is tackling the challenge of developing efficient data cleaning algorithms that can be used in IBM’s product portfolio.

The software technology they’re developing would enable data cleaning tools that assess and predict a user’s intentions with the data. This would allow more customized software solutions that curate and clean the data according to the specific information a user is looking for.

“Take, for example, the sports industry: we would create a customized data profile view that could be used by the fan who wants the latest stats for their football pool, and another view for the sports station that requires in-depth analysis and predictions for each and every team in the NHL,” Chiang explains.

“Whether it’s a basketball scout, the wagering industry or a marketing firm trying to foresee the World Series MVP so they can lock up a promotions contract, we are building automated software tools to provide users with consistent and accurate data based on their specific information needs.”

The added bonus of working with IBM is that the prestigious global enterprise gives Chiang’s graduate students entrée to the latest ‘real-world’ technologies and tools, plus access to modern data sets and the opportunity to solve data quality challenges.

Yu Huang, one of Chiang’s PhD students, has also had the opportunity to work with IBM’s Centre for Advanced Studies as an intern. The internship recurs every year for three years and connects Huang with architects and software developers, giving him not only leading-edge technological knowledge but also industry-relevant experience.

“It’s graduate students like Yu who are going to solve the next-generation challenges of Big Data, to harness its potential and power,” says Chiang.

“It isn’t just the vast amounts of data that make Big Data ‘big’. The real value lies in extracting the value from this data to produce accurate, high-quality data, and to reap the information and insights this data provides.”

Quality counts

Fei Chiang and some of her graduate students from the Data Science Research Lab

FEI CHIANG


Accurate, timely and useful information is vitally important to industries and organizations across the board in today’s market. But nowhere is that information more important than in safety-critical domains, says Mark Lawford.

Lawford is a professor of Computing and Software and an expert in software certification, safety-critical real-time systems, and discrete event systems. He’s also the associate director of the McMaster Centre for Software Certification (McSCert), where he and his colleagues are building effective methods and efficient tools to demonstrate the safety and security of software-intensive systems. The next phase of the research will extend those methods and tools to store, retrieve and analyze vast amounts of data while ensuring that the data and analysis are safe and secure.

McSCert, says Lawford, is positioned to bring the big data revolution to systems safety analysis for the automotive and transportation industries, as well as the healthcare sector.

Lawford knows the importance of safety from both research and practice. Before joining McMaster nearly 18 years ago, he consulted on the Darlington Nuclear Generating Station Shutdown Systems Redesign project, work that earned him the Ontario Hydro New Technology Award for Automation of Systemic Design Verification of Safety Critical Software.

More recently, he was a co-recipient of a Chrysler Innovation Award for his work with Dr. Ali Emadi, Canada Excellence Research Chair in Hybrid Powertrain, on next-generation affordable electrified powertrains.

Lawford believes that in the future much of his time will be spent looking at how big data is affecting the auto industry.

He estimates that cars are currently instrumented with between 60 and 100 sensors, but cautions that’s just the tip of the iceberg.

“The number of sensors in any one vehicle is expected to climb to as many as 200,” he says, noting that with autonomous driving and other advanced features, this is a conservative estimate.

“With new vehicle sales topping 75 million a year, the amount of data generated by all of the sensors in all of these new vehicles is staggering,” he says.

“We want to make sure that automakers don’t miss a game-changing opportunity by leaving all that data on the road.”
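Multiplying out Lawford’s figures gives a sense of scale. The sketch below counts only the sensors added to the road each year by new vehicles, using the article’s numbers (75 million new vehicles, 60 to 100 sensors per vehicle today, up to 200 in future). It does not estimate data volume, because the article gives no sampling rates or message sizes.

```python
NEW_VEHICLES_PER_YEAR = 75_000_000   # "new vehicle sales topping 75 million a year"
SENSORS_TODAY = (60, 100)            # current range per vehicle, per Lawford
SENSORS_FUTURE = 200                 # projected figure per vehicle

# Sensors shipped in one year's worth of new vehicles.
low, high = (NEW_VEHICLES_PER_YEAR * n for n in SENSORS_TODAY)
future = NEW_VEHICLES_PER_YEAR * SENSORS_FUTURE

print(f"Today: {low / 1e9:.1f} to {high / 1e9:.1f} billion sensors per year of sales")
print(f"At 200 per vehicle: {future / 1e9:.1f} billion sensors per year of sales")
```

That is 4.5 to 7.5 billion sensors per year of sales today and 15 billion at 200 per vehicle, each of them a potential data stream.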

Lawford and his colleagues at McSCert want to ensure that data finds its way back to the dealers and manufacturers.

“We need to get this data into the right hands to enable them to perform big data analytics for fault diagnosis and, more importantly, fault prediction to improve system safety, as well as for fine-tuning performance and concomitant energy savings.”

Add to that the information flows required for the vehicle-to-vehicle and vehicle-to-infrastructure technologies of the future, he says, and data quality and security become even more crucial.

The same holds true for the health care systems of the near future, says Lawford, as interconnected medical devices work in conjunction to provide improved patient care, particularly as these systems are integrated with electronic health records.

McMaster is a recognized world leader in software engineering and software certification, working with industry, regulators and academics from around the world to improve the safety and reliability of software-intensive systems.

Lawford points to the work of his colleagues Tom Maibaum, Ridha Khedri, Rong Zheng, Doug Down, Fei Chiang and Alan Wassyng, the director of McSCert, who have set the standard for software engineering research and have helped build McMaster’s reputation in this field.

“It’s our collective ability to move our research out of the lab and into the marketplace,” he says, referencing research partnerships with industry leaders like IBM, Chrysler and GM, to name but a few. “It’s these industrial collaborations that will lead, quite naturally, to research into the use of big data analytics in these critical domains.”

It’s in these domains, says Lawford, that we’ll see a rapidly increasing number of sensors generating data that are being integrated and monitored. “We need to use this data to predict vehicle reliability and safety, and to predict and improve performance, be that for fuel economy or patient outcome.”

MARK LAWFORD

Driving with data

Mark Lawford is the associate director of the McMaster Centre for Software Certification (McSCert)



ELKAFI HASSINI

Supply chain success

The DeGroote School of Business has made digital analytics a strategic priority, and a key step toward making that a reality is a new big-data business analytics lab to collect consumer and supplier information for Hamilton and Burlington companies.

The Marketing and Supply Chain Analytics Lab (MiSCAN) is now under construction at the Ron Joyce campus in Burlington.

“Businesses don’t have the big-picture data. They may have pieces but they may not have the expertise or the equipment to analyze the data so they can use it,” said Elkafi Hassini, research director, supply chain analytics, for MiSCAN.

“There is a big need for people who can extract and analyze data and figure out how to use it to improve business operations. Most small and medium businesses don’t have that kind of capacity.”

Big companies are interested in the project, too, because they need their suppliers to manage their supply chains effectively and operate efficiently. “If they are strong, the big companies are strong, too.”

Funded by the Canada Foundation for Innovation, MiSCAN brings together a number of disciplines within the DeGroote School of Business. Marketing professor Sourav Ray is the lab’s research director of marketing analytics.

Hassini says that in addition to finding partners and customers, the team may look for campus research collaborators. He thinks the most likely collaborators will be found in engineering and health sciences.

One of the keys is reassuring potential customers that all data will be confidential, says Hassini, an associate professor of operations management.

Another challenge is developing graduates who have the necessary breadth of skills (a blend of computer science, statistics, optimization modelling and business) to answer the call for big data in business.

Another step coming out of the digital analytics priority (along with healthcare management) is an e-MBA in Digital Transformation, developed in collaboration with industry partners, which will launch with a beta version next year. This program will be the first e-MBA in the world in the big data space.

Among his research projects, Hassini is working with students in the E-health program who study the implications of big data for the health supply chain, covering both supplies and patient records.

He’s also looking at using big data to measure sustainability within supply chains. Accounting for sustainability across the three pillars (economic, environmental and social) requires looking at a broad spectrum of measures.

“Companies are good at measuring their own performance but to extend to suppliers, there is no good framework for that. We need to think about what types of data to collect and what measures would be fair, reliable and acceptable throughout the supply chain.”

Europe is far ahead in measuring sustainability and sharing that information with consumers so they can make big-picture buying decisions, says Hassini.

Big data provides the information to make supply chains more efficient. That includes customer experiences and feedback, quality and performance markers for suppliers, and efficiency measures for manufacturers. It’s often collected in real time to support important daily decisions.

That data can be collected through chips and sensors embedded in equipment or products, while predictive customer analytics are used to ensure, for instance, that the right quantity and mix of products is sent to the right stores.
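To make “predictive customer analytics” concrete, the sketch below uses the simplest possible forecaster, a moving average of recent weekly sales, to decide how many units to send to each store given its current stock. The store names, quantities, window length and function name are all hypothetical, and MiSCAN’s actual models are not described in the article; real systems typically use richer forecasts that account for seasonality and promotions.

```python
from statistics import mean
from typing import Dict, List, Tuple

Key = Tuple[str, str]  # (store, product)


def plan_shipments(
    weekly_sales: Dict[Key, List[int]],
    on_hand: Dict[Key, int],
    window: int = 3,
) -> Dict[Key, int]:
    """Forecast next week's demand as the mean of the last `window` weeks of sales,
    then ship enough units to cover the forecast given current stock."""
    plan = {}
    for key, history in weekly_sales.items():
        forecast = mean(history[-window:])        # simple moving-average forecast
        shortfall = forecast - on_hand.get(key, 0)
        plan[key] = max(0, round(shortfall))      # never ship a negative quantity
    return plan


# Hypothetical stores, product and sales history.
sales = {
    ("Hamilton", "widgets"): [120, 135, 150, 165],
    ("Burlington", "widgets"): [80, 75, 70, 72],
}
stock = {("Hamilton", "widgets"): 40, ("Burlington", "widgets"): 90}
print(plan_shipments(sales, stock))
```

Here the Hamilton store, whose sales are rising, receives 110 units, while the Burlington store already holds more than its forecast demand and receives none.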

“Apple is that successful because of its supply chain. They have manufacturing facilities around the world and they always have the product at the right place at the right time.”

Elkafi Hassini

Big Data is no stranger to McMaster University. It is, after all, home to Canada’s first Research Data Centre (RDC), which opened its doors in December 2000.

McMaster’s RDC is part of the Canadian Research Data Centre Network, which includes 27 centres across the country. In partnership with Statistics Canada’s Research Data Centre Program, these centres have “literally transformed quantitative social science research in Canada,” according to Byron Spencer, scientific director of McMaster’s RDC.

Spencer, who’s also the director of McMaster’s Research Institute for Quantitative Studies in Economics and Population, explains that these secure computer laboratories on university campuses across the country give university, government and other approved researchers the opportunity to analyse a vast array of social, economic and health data.

At the McMaster RDC, some 150 researchers have access to detailed, de-identified information at the individual and household levels, based on hundreds of Statistics Canada surveys, censuses and a number of administrative databases. Spencer estimates there are close to 75 projects currently underway.

Access to these files, says Spencer, allows researchers to investigate virtually all aspects of society, including the health, education and well-being of the population. Such research, he says, leads to a better understanding of our society and provides an evidence base on which to build policy.

The RDCs are supported by the Canada Foundation for Innovation, the Social Sciences and Humanities Research Council, the Canadian Institutes of Health Research and host institutions.

Byron Spencer

McMaster’s Research Data Centre


The world’s largest collection of free data is located right at our fingertips.

But what’s to be done when the desired sample size is so vast it could take weeks, months or even years to collect and process via conventional Internet browsing?

Enter McMaster’s Victor Kuperman, associate professor of Linguistics and Languages.

Over the past year, Kuperman and two McMaster PhD candidates have been reading and studying roughly 1.8 million blogs and webpages from 340,000 websites around the globe, using a fleet of high-powered computers in Togo Salmon Hall. The sites are personal, commercial and governmental in nature.

Their search has yielded valuable information from 20 countries and geographic regions where English is a primary language of communication, including Canada, Australia, New Zealand, India, Jamaica and the United Kingdom. The team is also collecting metadata that allows them to identify the source, genre and time of creation for each piece of text.

Their goal is to mine the web’s seemingly bottomless pit of “big data,” in the hope that their findings will impact everything from government policy to how we understand our global neighbours.

“Blogs and websites are active all the time,” says Kuperman, an expert in statistical and experimental methods in language research. “People exchange massive quantities of language and information every second of the day, and that big data is right there waiting to be analyzed.”

Most recently, Kuperman’s team has been focusing on how our perception of various countries and cultures is shaped by what we glean from websites and blogs. They’ve found that first-world nations in Scandinavia and elsewhere in Western Europe are often mentioned in posts alongside “positive” adjectives and “excited” sentiments.

By comparison, third-world countries and nations embroiled in armed conflicts and political crises are often associated with “negative” adjectives.

The United States is a unique case, says Kuperman. Posts involving the U.S. are often “more exciting, arousing and passionate,” whether leaning positive or negative.
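The article does not detail the team’s methods, but one common baseline for this kind of analysis is to count emotionally loaded words that appear in the same sentence as a country name. The sketch below does that with a tiny, hypothetical word list; real studies rely on large, validated lexicons of word ratings, handle multi-word names such as “United States,” and normalize for how often each country is mentioned. Everything here, including the function name `valence_by_country`, is illustrative.

```python
import re
from collections import Counter, defaultdict

# Hypothetical mini-lexicons; real studies use large, validated word-rating lists.
POSITIVE = {"beautiful", "friendly", "safe", "happy"}
NEGATIVE = {"dangerous", "poor", "violent", "corrupt"}
COUNTRIES = {"norway", "canada"}  # single-word names only, for simplicity


def valence_by_country(texts):
    """Tally positive and negative lexicon words in sentences that mention each country.

    Each distinct word is counted at most once per sentence.
    """
    counts = defaultdict(Counter)
    for text in texts:
        # Naive sentence split on terminal punctuation.
        for sentence in re.split(r"[.!?]+", text.lower()):
            words = set(re.findall(r"[a-z]+", sentence))
            positive = len(words & POSITIVE)
            negative = len(words & NEGATIVE)
            for country in words & COUNTRIES:
                counts[country]["positive"] += positive
                counts[country]["negative"] += negative
    return counts


posts = [
    "Norway was beautiful and the people were friendly. The roads felt dangerous.",
    "Canada was friendly, but the winter highways were dangerous!",
]
for country, tally in sorted(valence_by_country(posts).items()):
    print(country, dict(tally))
```

The second sentence of the first post contributes nothing because it names no country, a reminder that sentence-level co-occurrence is only a crude proxy for what a writer actually says about a place.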

Big data, the foundation of the team’s research, is a relatively new term used to describe pools of information so large and unwieldy that they’re often impossible to read or study in any meaningful way.

Kuperman’s team is not only succeeding in doing so; it is also being rewarded for its efforts.

The team recently received a $70,000 SSHRC Insight Development Grant and will also receive $290,000 from the Canada Foundation for Innovation’s John R. Evans Leaders Fund and the Ontario Research Fund to continue its work.

The real value in Kuperman’s research can be found in the forthright nature of the Internet and the willingness of its users to share honest, unfiltered commentary.

In other words, big data culled from the web provides a unique cross section of public opinion, without the added social filter that often comes with face-to-face interaction.

“Our methods are not unlike traditional phone surveys run by polling agencies, where they ask a resident, ‘what do you think about this or that?’ and record the data,” says Kuperman.

“The major difference is, people speak more freely online. They’re much more open, because it can be a one-way exchange. Over the phone, in a one-on-one conversation, you may hold something back and not express your true opinion.”

When McMaster’s new L.R. Wilson Hall is complete, Kuperman and his two PhD students, Bryor Snefjella and Daniel Schmidtke, both fellows of the Lewis & Ruth Sherman Centre for Digital Scholarship, will move across campus and continue their work using even faster computers and software in the University’s state-of-the-art home for the humanities and social sciences.

He’s confident that several more PhD candidates will be able to join once the lab relocates, and that the team will be able to expand its focus into popular social networks, including Facebook and Twitter.

The latter could prove to be an invaluable resource for collecting and studying big data. Roughly 288 million monthly active users post more than 500 million tweets per day, and more than 77 per cent of the network’s accounts are located outside the United States.

VICTOR KUPERMAN

Mining for data

Victor Kuperman is an expert in statistical and experimental methods in language research

Photo: Andrew Baulcomb

research.mcmaster.ca

So what’s the big deal on big data? Just ask Guillaume Paré and Andrew McArthur who, with Cisco’s support, are improving the way vast amounts of biomedical data are managed, analyzed, integrated and distributed. They want to make sure that the right data is working for the right people at the right time. Their work will transform the way research is conducted and how healthcare is delivered. The way we see it, that’s a big deal.

McMaster researchers Guillaume Paré, MD, Cisco Professor in Integrated Health Biosystems and Andrew McArthur, PhD, Cisco Research Chair in Bioinformatics

Big Ideas, Better Cities is a year-long series of events showcasing how McMaster research can help cities respond to 21st century challenges.

bigideasbettercities.mcmaster.ca