Introduction to Statistics, Probability and Econometrics

Happy New Year 2016

I am working very hard to cover the book Introduction to Econometrics. Please ignore the page numbers, as they are shown differently on LinkedIn. I have covered the sections related to linear regression, multiple regression and correlation. I have made an effort to explain all the calculations in detail. I have integrated the material vertically with Econometrics and I am working very hard to add new examples.

Please refer to the other document, Introduction to Econometrics. I have started to add examples. Please check the level of your understanding and let me know. Thanks.

I have added new sections related to discrete and continuous random variables. I have explained the characteristics of probability distributions in terms of expected value, variance and standard deviation. I have given an introduction to the multivariate probability density function in terms of bivariate variables. I have included an example of marginal probability functions. It is very important to fully understand four distributions: the normal distribution, the t-distribution, the chi-square distribution and the F-distribution. I mentioned the t and F distributions in the regression section. I have included a detailed example of the t-distribution in the probability distributions section.

I have included a detailed example related to the ADF unit root test. Unit root tests should be carried out on all variables, dependent and independent. I have added a detailed section related to cointegration, the error correction model (ECM) and causality. I have included a detailed example of the autocorrelation function (ACF), the partial autocorrelation function (PACF) and the Q statistic used in the correlogram. I have included an analytical illustration of the calculation of covariances in Excel. Please start with the Jarque-Bera test, which comes after the chi-square test, and continue until the end of the chapter. These sections are related to normality, stationarity, cointegration and ECM, causality and autocorrelation. They are important concepts used in Econometrics. I have included examples and screenshots of the related statistics and their interpretation. I wish you a pleasant and peaceful day with the Econometrics subject. I am adding new sections. It is very important to understand the basic concepts of statistics before starting Econometrics. Everything has to be done gradually and smoothly so that you stay relaxed and happy. This is the most important thing.

All the sections are under revision. I have added a section related to the weighted mean, quartiles and percentiles. I have added a new equation in the regression section. I have also added detailed explanations of how to use the Casio models fx-83MS and fx-83ES. Please check the section related to measures of dispersion. I have included two examples of Casio calculations: a list of numbers and grouped data. I have included detailed steps for creating a scatter diagram. Finally, I have included a chart of the Lorenz curve without bars on the curve.

I have added exponential smoothing to the time-series section. I am adding all missing graphs. I have added an example of a pie chart with actual numbers and degrees. These are first-term sections.

I am working very hard to add new examples in sections related to measures of location and dispersion. I am correcting the layout of the formulas. I am sorry to be sloppy in some sections. All the sections are under revision and additions.

Many problems that I have included in my handouts are from the book of Dr Jon Curwin and Roger Slater. This is the book that we were suggesting in the class. The title of the book is quantitative methods for business decisions. I have also included handouts of professor Philip Hardwick. I am in the process of adding new examples. There will be a delay as I am very busy with daily needs and wants.

I have added an Excel example that shows how to calculate skewness, kurtosis and the Jarque-Bera statistic. I have used the Excel formula. You will find skewness and kurtosis in the section on measures of dispersion. The Jarque-Bera test uses a chi-square distribution. Please be familiar with the calculation and the interpretation. They are used in Econometrics in the EViews software.

I have covered Bayes’ theorem and Chebyshev’s theorem. I have added an additional example of Bayes’ theorem.

E-mail me on [email protected]

If you have questions or difficulties, please feel free to e-mail. I have plenty of time to answer your questions.

Thanks once again for your patience and good luck with your future plans and your career.

Introduction to Statistics, Probability and Econometrics. A practical guide for first, second and third year undergraduate, postgraduate and research students.

Dr Michel Zaki Guirguis, 06/02/2016

Bournemouth University (see note 1)

Institute of Business and Law, Fern Barrow, Poole, BH12 5BB, UK. Tel: 0030-210-9841550. Mobile: 0030-6982044429. Email: [email protected]

Biographical notes

I hold a PhD in Finance from Bournemouth University in the UK. I have worked for several multinational companies, including JP Morgan Chase and Interamerican Insurance and Investment Company in Greece. Through seminars, I learned how to select and manage the right mutual funds according to various clients' needs. I supported and assisted the team on a six-sigma project and on accounts reconciliation. Applying six-sigma statistical analysis at JP Morgan Chase is important for improving the efficiency of the department. Professor Philip Hardwick and I have published a chapter in a book entitled “International Insurance and Financial Markets: Global Dynamics and Local Contingencies”, edited by Cummins and Venard at Wharton Business School (University of Pennsylvania in the US). I am working on several papers that focus on the Financial Services Sector.

1 I left Bournemouth University in 2006. The author's permanent address is 94 Terpsichoris Road, Palaio Faliro, Post Code 17562, Athens, Greece.

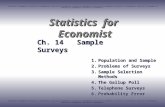

Table of contents

Introduction and definition of statistics
Presentation of data
Measures of location or central tendency
Measures of dispersion
Time-series
Regression analysis
Multiple regression analysis
Correlation
Probability, set theory and counting principles
Factorials, permutations and combinations
Probability distributions
Confirmatory data analysis or inferential statistics
Chi-square test and introduction to Econometrics
Introduction to matrix algebra
Revision and solutions

Introduction and definition of statistics

Definition of quantitative approach and aims of the units

It is more than just doing sums, subtraction and multiplication. It is about making sense of numbers within a strategic context.

Unit aims and learning outcomes

Develop knowledge of the fundamental techniques frequently used in the collection, presentation, analysis and interpretation of data.

An ability to calculate a range of descriptive statistics and interpret the results

An ability to analyse data using time – series methods, regression and correlation

Application of the principles of probability and hypothesis testing

Use quantitative methods to obtain accurate and reliable management information

Definition of statistics

The study of statistics is concerned with the collection, presentation, analysis and interpretation of numerical data.

Another definition of statistics is that it is a body of methods and theories that are applied when making decisions in the face of uncertainty.

Statistics is divided into two parts

A) Descriptive statistics or exploratory data analysis involves summarising, describing and analysing quantitative data.

B) Confirmatory data analysis or inferential statistics is a group of statistical techniques used to go beyond the data. It involves the analysis and interpretation of data to make generalizations. In other words, it draws conclusions about a population from quantitative data collected from a sample.

Importance of statistics

All of us have to make sense of the numbers presented to us as part of our everyday lives. From the simplest to the most complicated matters, you need to count and decide which solution is best.

For example, even buying a newspaper involves counting money and checking change. Making a larger purchase such as a car involves the comparison of prices, consideration of interest rates (percentages) and budgeting.

In more complex projects, businesses and politicians are supplied with the quantitative information derived from statistical inference in order to make decisions.

Statistics will be essential to investigate a problem in your final year and make the necessary forecasting based on past observations.

Important keywords used in statistics.

Fill in appropriate definitions

Data: The collection together of facts and opinions, typically in numerical form, provides data. So data is essentially sets of raw numbers.

Information:

Variable:

Frequency distribution: It shows the number of times each value occurs (frequency).

Random

Bias

Population

Sample

Solution

• Data: The collection together of facts and opinions, typically in numerical form. So data is essentially sets of raw numbers

• Information: Data arranged in a meaningful way become information.

• Variable: An attribute of an entity that can change and take different values, which are capable of being observed or measured.

• Frequency distribution: It shows the number of times each value occurs.

• Random number: Selected by chance to avoid bias.

• Bias: If the data collected misrepresent the population or sample of interest, then we have bias.

• Population: A body of people or any collection of items under consideration.

• Sample: A subset of a population

Types of data and how to collect them through the internet

Secondary data is existing information which has been published by the government or other researchers for some other purpose.

• Official statistics supplied by the Office for National Statistics (ONS) http://www.statistics.gov.uk

• Annual company reports through Financial Times http://www.ft.com

• Economic trends for all markets http://www.econstats.com

• Mintel market intelligence reports (monthly reports on consumer products). It can be found in the main library of Talbot Campus.

Time series data are data that vary across time and are used for forecasting based on past trends.

• Official statistics supplied by the Office for National Statistics (ONS) http://www.statistics.gov.uk

Other types of data

Cross-sectional data are data collected at a single point in time. For example, numerical values for the consumption expenditures and disposable income of each family at a particular point in time, say in 2000.

Primary data are collected by the researcher for a specific purpose. They are collected from samples using face-to-face interviews or questionnaires.

Discrete data can take only one of a range of distinct values, such as number of employees

Continuous data can take any value within a given range such as time or length

Pooled data could be mixed cross – sectional and time – series data.

Formulas for sums. They are very important and common in Econometrics
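As a minimal sketch, assuming this section refers to the usual summation rules, the identities below are the ones most often needed later for means, variances and regression. The notation is an assumption: c is a constant, and x_i and y_i are observations for i = 1, ..., n with mean x̄.

```latex
\sum_{i=1}^{n} c = nc
\qquad
\sum_{i=1}^{n} c\,x_i = c \sum_{i=1}^{n} x_i
\qquad
\sum_{i=1}^{n} (x_i + y_i) = \sum_{i=1}^{n} x_i + \sum_{i=1}^{n} y_i
\qquad
\sum_{i=1}^{n} (x_i - \bar{x}) = 0
```

The last identity follows from the definition of the mean, since the sum of the x_i equals n times x̄.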

Data presentation

1) Presenting data in an array

In an array the raw data are presented in ascending order.

Advantages of data array

• We can quickly notice the lowest and highest values in data.

• We can see whether any values appear more than once in the array.

• We can observe the distance between succeeding values in the data.

2) Frequency distribution

• It shows the number of times each value occurs. It presents data in a compact form and gives a good overall picture.

• For example, the distribution of the population of UK by age.

3) Relative frequency distribution

By expressing the frequency of each value as a fraction or a percentage of the total number of observations we get relative frequency as fraction or a percentage. A percentage is a statistic which summarizes the data by describing the proportion or part in every 100

$$\%\ \text{relative frequency} = \frac{f}{\sum f} \times 100$$

Where: f = the frequency; Σf = the sum of the individual frequencies

4) Cumulative frequency distribution

• It shows the total number of times that a value above or below a certain amount occurs.

Exercise

The following data represent the weekly revenues in £(m) of the bank over the last 50 weeks.

210 110 95 80 80 95 105 65 70 70
200 95 60 80 75 150 170 60 70 195
70 190 170 70 95 160 70 50 65 85
60 140 105 65 140 90 190 120 45 65
45 65 75 45 45 75 75 45 55 140

In an array the raw data are presented in order of magnitude:

45 45 45 45 45 50 55 60 60 60
65 65 65 65 65 70 70 70 70 70
70 75 75 75 75 80 80 80 85 90
95 95 95 95 105 105 110 120 140 140
140 150 160 170 170 190 190 195 200 210

Required:

Prepare a Frequency distribution table

Class £ (m) Frequencies

45 but less than 65

65 but less than 85

85 but less than 105

105 but less than 125

125 but less than 145

145 but less than 165

165 but less than 185

185 but less than 205

205 but less than 225

Total 50

Relative frequency distribution

Class £ (m) % Relative frequencies

45 but less than 65

65 but less than 85

85 but less than 105

105 but less than 125

125 but less than 145

145 but less than 165

165 but less than 185

185 but less than 205

205 but less than 225

Total

Cumulative and percentage cumulative frequency distribution

| Class: value of revenues £ (m) | Frequency | Cumulative frequencies | % Cumulative frequencies |
| --- | --- | --- | --- |
| Less than 65 | 10 | 10 | |
| Less than 85 | 18 | 28 = (10 + 18) | |
| Less than 105 | 6 | | |
| Less than 125 | 4 | | |
| Less than 145 | 3 | | |
| Less than 165 | 2 | | |
| Less than 185 | 2 | | |
| Less than 205 | 4 | | |
| Less than 225 | 1 | | |
| Total | 50 | | 100 |

Solution

Prepare a frequency distribution

Class £ (m) Frequencies

45 but less than 65 10

65 but less than 85 18

85 but less than 105 6

105 but less than 125 4

125 but less than 145 3

145 but less than 165 2

165 but less than 185 2

185 but less than 205 4

205 but less than 225 1

Total 50

Relative frequency distributions

When the frequencies are expressed as proportions or percentages, the frequency distribution is called a relative frequency distribution.

Class: value of revenues £ (m) Frequency % Relative frequencies

45 but less than 65 10 20

65 but less than 85 18 36

85 but less than 105 6 12

105 but less than 125 4 8

125 but less than 145 3 6

145 but less than 165 2 4

165 but less than 185 2 4

185 but less than 205 4 8

205 but less than 225 1 2

Total 50 100

Cumulative frequency distributions and % cumulative frequencies

| Class: value of revenues £ (m) | Frequency | Cumulative frequencies | % Cumulative frequencies |
| --- | --- | --- | --- |
| Less than 65 | 10 | 10 | 20 |
| Less than 85 | 18 | 28 (10 + 18) | 56 |
| Less than 105 | 6 | 34 (28 + 6) | 68 |
| Less than 125 | 4 | 38 | 76 |
| Less than 145 | 3 | 41 | 82 |
| Less than 165 | 2 | 43 | 86 |
| Less than 185 | 2 | 45 | 90 |
| Less than 205 | 4 | 49 | 98 |
| Less than 225 | 1 | 50 | 100 |
| Total | 50 | | |

So the cumulative frequency is the running total of the frequencies. For example, the 28 in the second row is obtained by adding 10 + 18, which are figures from the frequency table, and so on.
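The frequency, relative frequency and cumulative frequency tables for the bank revenues can be reproduced with a short script. This is a minimal sketch in plain Python; the function name `frequency_table` and its parameters are illustrative assumptions, and the classes follow the "45 but less than 65" convention used in the exercise.

```python
# Weekly revenues in £(m) for the last 50 weeks (data from the exercise).
revenues = [
    210, 110, 95, 80, 80, 95, 105, 65, 70, 70,
    200, 95, 60, 80, 75, 150, 170, 60, 70, 195,
    70, 190, 170, 70, 95, 160, 70, 50, 65, 85,
    60, 140, 105, 65, 140, 90, 190, 120, 45, 65,
    45, 65, 75, 45, 45, 75, 75, 45, 55, 140,
]


def frequency_table(values, start, width, n_classes):
    """Return one (lower, upper, f, rel_pct, cum_f, cum_pct) row per class."""
    total = len(values)
    rows = []
    running = 0  # running total of the frequencies
    for k in range(n_classes):
        lower = start + k * width
        upper = lower + width
        # "lower but less than upper": the upper boundary is excluded.
        f = sum(1 for v in values if lower <= v < upper)
        running += f
        rows.append((lower, upper, f, 100 * f / total,
                     running, 100 * running / total))
    return rows


table = frequency_table(revenues, start=45, width=20, n_classes=9)
for lower, upper, f, rel, cum, cum_pct in table:
    print(f"{lower} but less than {upper}: f={f}, rel={rel:.0f}%, "
          f"cum={cum}, cum%={cum_pct:.0f}%")
```

The printed rows should agree with the solution tables: the relative frequency is the frequency divided by 50 and multiplied by 100, and each cumulative frequency is the previous one plus the current frequency.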

Please arrange the following car values (in pounds) in a frequency distribution table. The class width is 5000.

5000, 5000, 6000, 10000, 11000, 12000, 13000, 15000, 17000, 20000, 21000, 25000, 26000, 30000, 32000, 35000, 37000, 40000, 41000, 43000, 45000, 47000, 50000

Solution

| Value of cars in pounds | Number of cars (frequency) |
| --- | --- |
| 5000 but less than 10000 | 3 |
| 10000 but less than 15000 | 4 |
| 15000 but less than 20000 | 2 |
| 20000 but less than 25000 | 2 |
| 25000 but less than 30000 | 2 |
| 30000 but less than 35000 | 2 |
| 35000 but less than 40000 | 2 |
| 40000 but less than 45000 | 3 |
| 45000 but less than 50000 | 2 |
| 50000 but less than 55000 | 1 |
| Total | 23 |

1) Answer the following questions by circling True (T) or False (F).

1. In comparison to a data array, the frequency distribution has the advantage of representing data in compressed form. T F

2. A population is a collection of all the elements we are studying. T F

3. One disadvantage of the data array is that it does not allow us to easily find the highest and lowest values in the data set. T F

4. A data array is formed by arranging raw data in order of time of observation. T F

5. As a general rule, statisticians regard a frequency distribution as incomplete if it has fewer than 20 classes. T F

6. Primary data is existing information which has been published by the government. T F

7. Discrete data can take any value within a given range, such as time or length. T F

8. Interval scale is a measure which only permits data to be classified into named categories. T F

9. A survey should be designed and administered in such a way as to minimize the chance of bias (an outcome which does not represent the population of interest). T F

10. When there are a large number of observations, it is often convenient to classify the raw data into a frequency distribution. T F

2) Multiple choice questions.

Please select the right answer by circling a, b, c, or d.

1. Which of the following represents the most accurate scheme of classifying data?

(a) Quantitative methods

(b) Qualitative methods

(c) A combination of quantitative and qualitative methods

(d) A scheme can be determined only with specific information about the situation.

2. Why is it true that classes in frequency distributions are all inclusive?

(a) No data point falls into more than 2 classes

(b) There are always more classes than data points

(c) All data fit into one class or another.

(d) All of these

3. Advantages of data array are the following:

(a) We can quickly notice the lowest and highest values in data

(b) We can easily divide the data into sections

(c) We can see whether any values appear more than once in the array

(d) All of the above

4. Graphs of frequency and relative frequency distributions are useful because

(a) They emphasize and clarify patterns that are not shown in tables

(b) Easy to Interpret

(c) They help you to draw conclusion quickly about your data

(d) All of the above

3) Fill the gap with the right word

1. A ___________ is a collection of all the elements in a group. A collection of some, but not all, of these elements is a ___________

2. Dividing data points into similar classes and counting the number of observations in each class will give a ___________ distribution.

3. If a collection of data is called a data set, a single observation would be called a ________________

4. Data which can take only one of a range of distinct values, such as number of employees, are known as __________, while data which can take any value within a given range, such as time or length, are known as ___________

5. Age is considered to be __________ data, but it is treated as _________ data, and the reason is that ______________

Solution

1) True or false

1: T
2: T
3: F
4: F
5: F
6: F
7: F
8: F
9: T
10: T

2) Multiple choice

1. d
2. c
3. d
4. d

3) Fill the gap

1. Population / sample
2. Frequency distribution
3. Data point
4. Discrete / continuous
5. Continuous / discrete / last birthday age

Essential Reading

Jon Curwin and Roger Slater (2002), Quantitative Methods for Business Decisions. Fifth Edition. Thomson Learning. Ch. 1-2, ch. 4 (pp. 67-73).

Further Reading

Stanley Letchford (1994), Statistics for Accountants. Chapman and Hall.

BPP Publishing (1997), Business Basics. A study guide for degree students. Quantitative methods.

Presentation of data

Definition of histogram

A histogram is a means of illustrating a frequency distribution and should give the reader an impression of the distribution of values among the various classes.

Steps to construct a histogram

(1) Plot the frequencies on the vertical axis and the classes (in this case value of revenue £ (m)) on the horizontal axis.

(2) Construct the bars of the histogram so that their heights represent frequencies and their widths represent the class intervals.

(3) The bars should be joined together at the class boundaries ( in this case 65, 85, 105, etc)

Frequency distribution table

| Class: value of revenues £(m) | Frequencies |
| --- | --- |
| 45 but less than 65 | 10 |
| 65 but less than 85 | 18 |
| 85 but less than 105 | 6 |
| 105 but less than 125 | 4 |
| 125 but less than 145 | 3 |
| 145 but less than 165 | 2 |
| 165 but less than 185 | 2 |
| 185 but less than 205 | 4 |
| 205 but less than 225 | 1 |
| Total | 50 |

Construct an appropriate histogram for the revenues £(m) data taken at the bank

Solution

[Figure: Histogram of the value of revenues £(m). Vertical axis: Frequencies (0 to 20); horizontal axis: Value of revenues, with one bar per class from "45 but less than 65" to "205 but less than 225".]

Histogram with unequal class intervals

When the class intervals are of unequal width (or size), the vertical axis, which represents the height of each bar (the frequency), must be adjusted. For example, if the width of a particular class interval doubles, then we must halve the height. We must do this to keep the areas of the bars proportional to the frequencies.

Steps to construct Histogram with unequal class intervals

1) The width of each bar on the chart must be proportionate to the corresponding class interval.

2) A standard width of bar must be selected. This should be the size of the smallest class interval.

3) Open – ended classes must be closed off in order to avoid gaps.

4) Each frequency is then multiplied by (standard class width / actual class width) to obtain the height of the bar in the histogram.
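The adjustment in step 4 can be sketched in a few lines of Python. This is an illustration only: the function name `adjusted_frequencies` and the (lower, upper, frequency) layout are assumptions, and the open-ended class "Less than 200" is assumed to be closed off at 0, as step 3 requires. The two data sets are the revenues outstanding and income group distributions used in this section.

```python
def adjusted_frequencies(classes):
    """classes: list of (lower, upper, frequency) tuples.

    Returns the bar heights: frequency * standard width / actual width,
    where the standard width is the smallest class interval.
    """
    standard = min(upper - lower for lower, upper, _ in classes)
    return [f * standard / (upper - lower) for lower, upper, f in classes]


# Revenues outstanding (£); the first class is closed off at 0 (assumption).
revenues_outstanding = [(0, 200, 30), (200, 400, 40), (400, 800, 30)]

# Income groups in pounds (000).
income_groups = [(10, 15, 80), (15, 20, 100), (20, 30, 80), (30, 50, 40)]

print(adjusted_frequencies(revenues_outstanding))
print(adjusted_frequencies(income_groups))
```

A class that is twice as wide as the standard width has its height halved, and one that is four times as wide has its height quartered, so the area of each bar stays proportional to its frequency.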

Consider a frequency distribution with unequal class intervals.

| Revenues outstanding (£) | Frequencies |
| --- | --- |
| Less than 200 | 30 |
| 200 less than 400 | 40 |
| 400 less than 800 | 30 |
| Total | 100 |

Construct an appropriate histogram

Solution

| Revenues outstanding | Frequencies (adjusted) |
| --- | --- |
| 0 less than 200 | 30 |
| 200 less than 400 | 40 |
| 400 less than 800 | 30 × (200 / 400) = 15 |

The histogram will be as follows:

[Figure: Histogram of revenues outstanding. Vertical axis: Frequencies (0 to 45); horizontal axis: Revenues outstanding (0 less than 200, 200 less than 400, 400 less than 800).]

Another exercise that shows the adjusted frequencies

| Income group in pounds (000) | Frequencies | Adjusted frequencies |
| --- | --- | --- |
| 10 but under 15 | 80 | 80 |
| 15 but under 20 | 100 | 100 |
| 20 but under 30 | 80 | 80 × (5 / 10) = 40 |
| 30 but under 50 | 40 | 40 × (5 / 20) = 10 |

The histogram will be as follows:

[Figure: Histogram of income groups. Vertical axis: Adjusted frequencies (0 to 120); horizontal axis: Income group (10 but under 15, 15 but under 20, 20 but under 30, 30 but under 50).]

Frequency polygon

To construct a frequency polygon, plot the frequencies on the vertical axis against the class mid – points on the horizontal axis.

Note that this is equivalent to joining together the mid – points of the tops of the bars in a histogram.

| Class: value of revenues £(m) | Class mid-points | Frequencies |
| --- | --- | --- |
| 45 but less than 65 | | 10 |
| 65 but less than 85 | | 18 |
| 85 but less than 105 | | 6 |
| 105 but less than 125 | | 4 |
| 125 but less than 145 | | 3 |
| 145 but less than 165 | | 2 |
| 165 but less than 185 | | 2 |
| 185 but less than 205 | | 4 |
| 205 but less than 225 | | 1 |
| Total | | 50 |

Plot an appropriate frequency polygon for the revenues £(m) data taken at the bank

Solution

| Class: value of revenues £(m) | Class mid-points of value of revenues | Frequencies |
| --- | --- | --- |
| 45 but less than 65 | 55 | 10 |
| 65 but less than 85 | 75 | 18 |
| 85 but less than 105 | 95 | 6 |
| 105 but less than 125 | 115 | 4 |
| 125 but less than 145 | 135 | 3 |
| 145 but less than 165 | 155 | 2 |
| 165 but less than 185 | 175 | 2 |
| 185 but less than 205 | 195 | 4 |
| 205 but less than 225 | 215 | 1 |
| Total | | 50 |

[Figure: Frequency polygon. Vertical axis: Frequencies (0 to 20); horizontal axis: Class midpoints of value of revenues (55, 75, 95, 115, 135, 155, 175, 195, 215).]

Cumulative frequency curve (or Ogive)

To construct a cumulative frequency curve, plot the cumulative frequencies (or percentage cumulative frequencies) on the vertical axis against the upper class boundaries on the horizontal axis:

Cumulative frequency distribution

| Class: value of revenues £ (m) | Cumulative frequencies |
| --- | --- |
| Less than 65 | 10 |
| Less than 85 | 28 |
| Less than 105 | 34 |
| Less than 125 | 38 |
| Less than 145 | 41 |
| Less than 165 | 43 |
| Less than 185 | 45 |
| Less than 205 | 49 |
| Less than 225 | 50 |

Plot an appropriate Ogive for the revenues £(m) data taken at the bank.

Solution

[Figure: Ogive. Vertical axis: Cumulative frequencies (0 to 60); horizontal axis: Value of revenues, plotted at the upper class boundaries from "Less than 65" to "Less than 225".]


Pie Chart

A pie chart is a useful tool as it shows the total amount of each category split across the 360 degrees of the circle. Each category is represented as a slice of the pie. I will show how to draw a pie chart using the numbers given and how to convert them into degrees.

For example, consider the delivery orders of Mexican foods in different parts of the United Kingdom.

| Different parts | Number of orders of Mexican foods |
| --- | --- |
| Bournemouth | 10 |
| Brighton | 20 |
| Southampton | 30 |
| Portsmouth | 40 |

To construct a pie chart in Excel, enter the data. Then select the Chart Wizard, choose Pie and press Next. Select Values if you want the numbers to be displayed on the slices.

[Figure: Pie chart titled "Number of orders of Mexican food in different parts", with slices labelled 10 (Bournemouth), 20 (Brighton), 30 (Southampton) and 40 (Portsmouth).]


I will convert the above table into degrees.

| Different parts | Number of orders of Mexican foods | Degrees |
| --- | --- | --- |
| Bournemouth | 10 | 36 |
| Brighton | 20 | 72 |
| Southampton | 30 | 108 |
| Portsmouth | 40 | 144 |
| Total | 100 | 360 |
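The conversion into degrees is each category's share of the total multiplied by 360. A minimal sketch in Python, using the orders from the table; the variable names are illustrative.

```python
# Number of orders of Mexican foods by town (from the table).
orders = {"Bournemouth": 10, "Brighton": 20, "Southampton": 30, "Portsmouth": 40}

total = sum(orders.values())  # 100 orders in total

# Each slice gets its share of the 360 degrees of the circle.
degrees = {town: 360 * n / total for town, n in orders.items()}

for town, angle in degrees.items():
    print(f"{town}: {angle:.0f} degrees")
```

The angles add up to 360, which is a quick check that no category has been missed.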

[Figure: Pie chart titled "Number of orders of Mexican food in different parts", with slices labelled in degrees: 36 (Bournemouth), 72 (Brighton), 108 (Southampton) and 144 (Portsmouth).]


Lorenz curve

It is often used with income data or with wealth data to show the distribution or more specifically, the extent to which the distribution is equal or unequal. Let us consider the percentage comparison of the population and wealth distribution.

| Group | Percentage of population | Cumulative percentage population | Percentage of wealth | Cumulative percentage wealth |
| --- | --- | --- | --- | --- |
| | 0 | 0 | 0 | 0 |
| Poorest A | 50 | 50 | 10 | 10 |
| B | 25 | 50 + 25 = 75 | 20 | 20 + 10 = 30 |
| C | 10 | 85 | 10 | 40 |
| D | 10 | 95 | 15 | 55 |
| E | 3 | 98 | 25 | 80 |
| Richest F | 2 | 100 | 20 | 100 |

To construct a Lorenz curve, plot cumulative percentage wealth on the vertical axis (y) against cumulative percentage population on the horizontal axis (x).

Plot a Lorenz curve for the wealth distribution data.
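The points of the Lorenz curve are the running totals of the two percentage columns, starting from the origin. A minimal sketch in Python using the wealth distribution data; the variable names are illustrative.

```python
from itertools import accumulate

# Percentage of population and of wealth for groups A (poorest) to F (richest).
population_pct = [50, 25, 10, 10, 3, 2]
wealth_pct = [10, 20, 10, 15, 25, 20]

# Running totals, with the origin (0, 0) added as the first point.
cum_population = [0] + list(accumulate(population_pct))
cum_wealth = [0] + list(accumulate(wealth_pct))

# (x, y) points: cumulative % population against cumulative % wealth.
points = list(zip(cum_population, cum_wealth))
for x, y in points:
    print(f"{x}% of the population holds {y}% of the wealth")
```

Under perfect equality every point would lie on the diagonal y = x; here the poorest 50% hold only 10% of the wealth, so the curve lies well below the diagonal.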

Solution

| Cumulative percentage wealth | Cumulative percentage population |
| --- | --- |
| 0 | 0 |
| 10 | 50 |
| 30 | 75 |
| 40 | 85 |
| 55 | 95 |
| 80 | 98 |
| 100 | 100 |

[Figure: Lorenz curve. Vertical axis: Cumulative % wealth (0 to 100); horizontal axis: Cumulative % population (0, 50, 75, 85, 95, 98, 100).]


Other commonly used diagrams

Bar charts

A bar chart is a chart in which quantities are shown in the form of bars.

Example: A company’s total sales for the years from 1991 to 1996 are as follows:

| Year | Sales £(000) |
| --- | --- |
| 1991 | 800 |
| 1992 | 1200 |
| 1993 | 1100 |
| 1994 | 1400 |
| 1995 | 1600 |
| 1996 | 1700 |

Plot a bar chart here for the total sales data in relation to years.

Solution

[Figure: Bar chart of Sales £(000) by year, 1991 to 1996. Vertical axis: Sales (0 to 1800); horizontal axis: Years.]


Measures of location or central tendency

Mean, median, and mode from untabulated data (list of numbers)

Mean

The arithmetic mean (usually shortened to mean) is the name given to the simple average that most people calculate.

For a sample of n values denoted by x, the mean is

$$\bar{x} = \frac{\sum x}{n}$$

Where: $\bar{x}$ = the mean; x = the individual observations; ∑ (sigma) = the symbol used to sum values; n = the total number of values

Example: An accountant wants to calculate the average wages of 5 employees, which are £250, £310, £280, £410 and £210.

$\bar{x}$ = --------------------------------------------- =

Complete the calculation

Solution

$$\bar{x} = \frac{250 + 310 + 280 + 410 + 210}{5} = \frac{1460}{5} = 292 \text{ pounds}$$


Median

The median is the middle value when the numbers are arranged in ascending order. For n values, it is the (n + 1) / 2 th item.

Example: the revenues transactions in an insurance company:

8 10 12 14 18 20

So the median will be equal to

In this case, we have 6 transactions. Applying the formula (n + 1) / 2 gives (6 + 1) / 2 = 3.5.

So the median lies halfway between the third and fourth numbers: (12 + 14) / 2 = 13

Another example: Bank expenses transactions are:

25 29 65 72 80

So the median will be equal to

We have five transactions. Applying the formula (n + 1) / 2 gives (5 + 1) / 2 = 6 / 2 = 3, so the median is the third value.

The median is 65.

Mode

The mode is the value that occurs most frequently and more than once

Example: 3 3 5 6 7 7 7 8

So the mode is

Mode = 7
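The three measures for a list of numbers can be checked with Python's standard `statistics` module. This is a minimal sketch using the examples from this section; the variable names are illustrative.

```python
import statistics

wages = [250, 310, 280, 410, 210]            # average wages example
revenue_transactions = [8, 10, 12, 14, 18, 20]  # even number of values
bank_expenses = [25, 29, 65, 72, 80]         # odd number of values
mode_data = [3, 3, 5, 6, 7, 7, 7, 8]

mean_wage = statistics.mean(wages)

# For an even number of values, median() averages the two middle values.
median_even = statistics.median(revenue_transactions)
median_odd = statistics.median(bank_expenses)

# mode() returns the most frequent value.
mode_value = statistics.mode(mode_data)

print(mean_wage, median_even, median_odd, mode_value)
```

The (n + 1) / 2 rule gives the position of the median in the sorted list; `statistics.median` applies the same idea, including the averaging step when the position falls between two items.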

Mean, median and mode from grouped data

Reconsider the frequency distribution of revenues £ (m) taken at the bank

| Class: value of revenues £(m) | Class mid-points x | Frequencies ƒ | Cumulative frequencies | ƒx |
| --- | --- | --- | --- | --- |
| 45 but less than 65 | 55 | 10 | 10 | 550 |
| 65 but less than 85 | 75 | 18 | 28 | 1350 |
| 85 but less than 105 | 95 | 6 | 34 | 570 |
| 105 but less than 125 | 115 | 4 | 38 | 460 |
| 125 but less than 145 | 135 | 3 | 41 | 405 |
| 145 but less than 165 | 155 | 2 | 43 | 310 |
| 165 but less than 185 | 175 | 2 | 45 | 350 |
| 185 but less than 205 | 195 | 4 | 49 | 780 |
| 205 but less than 225 | 215 | 1 | 50 | 215 |
| Total | | n = ∑ƒ = 50 | | ∑ƒx = 4990 |

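Solution

Mean

$$\bar{x} = \frac{\sum fx}{\sum f} = \frac{4990}{50} = 99.8 \text{ million pounds}$$

Median

$$\text{Median} = L + i \times \frac{\frac{n}{2} - F}{f_m}$$

Where: L: lower boundary of the median class; i: width of the median class (the difference between the class boundaries); n: sample size; F: cumulative frequency up to, but not including, the frequency of the median class; ƒm: frequency of the median class.

Mode

$$\text{Mode} = L + \frac{d_1}{d_1 + d_2} \times i$$

Where, for the mode, L is the lower boundary of the modal class and i is its width.

d1 = Difference between the frequency of the modal class and the frequency of the preceding class.

d2 = Difference between the frequency of the modal class and the frequency of the succeeding class.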
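The grouped-data calculations can be sketched in Python as follows. This is an illustration under stated assumptions: the median uses the usual interpolation L + i × (n/2 − F) / ƒm and the mode uses L + d1 / (d1 + d2) × i, which match the symbols defined above; the function names are hypothetical, and the figures printed are what these formulas give for the bank revenues table.

```python
# (lower boundary, upper boundary, frequency) for each class of revenues £(m).
classes = [
    (45, 65, 10), (65, 85, 18), (85, 105, 6), (105, 125, 4), (125, 145, 3),
    (145, 165, 2), (165, 185, 2), (185, 205, 4), (205, 225, 1),
]


def grouped_mean(classes):
    """Mean from class mid-points: sum(f * x) / sum(f)."""
    n = sum(f for _, _, f in classes)
    return sum(f * (lower + upper) / 2 for lower, upper, f in classes) / n


def grouped_median(classes):
    """Median by interpolation inside the median class."""
    n = sum(f for _, _, f in classes)
    cumulative = 0  # F: cumulative frequency before the current class
    for lower, upper, f in classes:
        if cumulative + f >= n / 2:
            return lower + (upper - lower) * (n / 2 - cumulative) / f
        cumulative += f


def grouped_mode(classes):
    """Mode by interpolation inside the modal (highest-frequency) class."""
    freqs = [f for _, _, f in classes]
    m = freqs.index(max(freqs))
    lower, upper, f_m = classes[m]
    before = freqs[m - 1] if m > 0 else 0
    after = freqs[m + 1] if m + 1 < len(freqs) else 0
    d1, d2 = f_m - before, f_m - after
    return lower + (upper - lower) * d1 / (d1 + d2)


print(round(grouped_mean(classes), 2))    # mean in £(m)
print(round(grouped_median(classes), 2))  # median in £(m)
print(round(grouped_mode(classes), 2))    # mode in £(m)
```

Both the median class and the modal class here are "65 but less than 85", so L = 65 and i = 20 in both formulas, with F = 10, ƒm = 18, d1 = 18 − 10 = 8 and d2 = 18 − 6 = 12.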

The geometric and harmonic means

The geometric mean

It is defined as the nth root of the product of n numbers. It is useful when we are trying to average percentages. It is also used with index numbers, as we will see in another session.

The formula is

$$GM = \sqrt[n]{x_1 \times x_2 \times \cdots \times x_n}$$

Example, given the percentage of time spent on a certain task we have the following data: 30% 20% 65%

A simple mean will be

Make a comparison between the two means.

Solution

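The simple mean will be as follows:

$$\bar{x} = \frac{30 + 20 + 65}{3} = \frac{115}{3} \approx 38.33\%$$

The geometric mean will be as follows:

$$GM = \sqrt[3]{30 \times 20 \times 65} = \sqrt[3]{39000} \approx 33.91\%$$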

Please comment on the two means? Which one you will select and why?
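The comparison can be checked with a short Python sketch. It uses `statistics.geometric_mean`, which is available in Python 3.8 and later; the variable names are illustrative.

```python
import statistics

# Percentage of time spent on a certain task.
percentages = [30, 20, 65]

simple_mean = statistics.mean(percentages)

# nth root of the product of the n values.
geometric = statistics.geometric_mean(percentages)

print(f"Simple mean:    {simple_mean:.2f}%")
print(f"Geometric mean: {geometric:.2f}%")
```

The geometric mean is never larger than the simple mean for positive values, and it is pulled less by the single large value of 65%.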

The harmonic mean

The harmonic mean is used when we are looking at ratio data.

Example: we have the following data set:

23 25 26 27 23

First of all, you need to find their reciprocals.

Then find their average.

Then take the reciprocal of the answer

Then, contrast with the simple mean.

Solution

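The reciprocals are found as follows:

1 / 23 ≈ 0.0435, 1 / 25 = 0.04, 1 / 26 ≈ 0.0385, 1 / 27 ≈ 0.0370, 1 / 23 ≈ 0.0435

Average of the reciprocals: (0.0435 + 0.04 + 0.0385 + 0.0370 + 0.0435) / 5 ≈ 0.0405

Reciprocal: 1 / 0.0405 ≈ 24.7 transactions (rounding the average to 0.04 gives approximately 25 transactions)

The simple mean is

(23 + 25 + 26 + 27 + 23) / 5 = 124 / 5 = 24.8

Contrast with the simple mean.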
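The three steps (reciprocals, their average, and the reciprocal of that average) can be followed literally in Python and compared with the built-in `statistics.harmonic_mean`. A minimal sketch; the variable names are illustrative.

```python
import statistics

data = [23, 25, 26, 27, 23]

# Step 1: find the reciprocals.
reciprocals = [1 / x for x in data]

# Step 2: find their average.
mean_reciprocal = sum(reciprocals) / len(reciprocals)

# Step 3: take the reciprocal of the answer.
harmonic = 1 / mean_reciprocal

simple_mean = statistics.mean(data)

print(f"Harmonic mean: {harmonic:.2f}")
print(f"Simple mean:   {simple_mean:.2f}")
```

Keeping full precision in step 2 matters: the harmonic mean cannot exceed the simple mean, so a result above 24.8 would signal a rounding problem.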

Weighted mean

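The mathematical formula for the weighted mean is as follows:

$$\bar{x}_w = \frac{\sum w x}{\sum w} = \frac{w_1 x_1 + w_2 x_2 + \cdots + w_n x_n}{w_1 + w_2 + \cdots + w_n}$$

Where: x are the individual numerical values and w1, w2, etc. are their individual weights.

Please consider the following table related to individual numerical values and their weights.

| Numerical values | Weights |
| --- | --- |
| 10 | 0.10 |
| 25 | 0.10 |
| 35 | 0.10 |
| 40 | 0.20 |
| 55 | 0.20 |
| 67 | 0.20 |
| 80 | 0.10 |
| Total | 1 |

The weighted mean is as follows:

$$\bar{x}_w = \frac{(0.10)(10) + (0.10)(25) + (0.10)(35) + (0.20)(40) + (0.20)(55) + (0.20)(67) + (0.10)(80)}{1} = 47.4$$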
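The weighted mean for the table can be computed in one line of Python. A minimal sketch; the variable names are illustrative, and the formula used is the sum of weight times value divided by the sum of the weights.

```python
values = [10, 25, 35, 40, 55, 67, 80]
weights = [0.10, 0.10, 0.10, 0.20, 0.20, 0.20, 0.10]

# Sum of (weight * value) divided by the sum of the weights.
weighted_mean = sum(w * x for w, x in zip(weights, values)) / sum(weights)

print(round(weighted_mean, 2))
```

Because the weights add up to 1, the denominator does not change the result here, but dividing by the sum of the weights keeps the calculation correct when they do not.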

Measures of dispersion

Standard deviation from untabulated data (list of numbers)

The formula for sample standard deviation is as follows:

$$s = \sqrt{\frac{\sum (x - \bar{x})^2}{n - 1}}$$

Where: x = individual observation; $\bar{x}$ = the mean; n = the total number of observations; √ = the square root; Σ = the sum of

An accountant wants to calculate the weekly profits of a business which are £ 250, £ 310, £ 280, £ 410, £ 210.

Please calculate the mean and the standard deviation.

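| x | $\bar{x}$ | $x - \bar{x}$ | $(x - \bar{x})^2$ |
| --- | --- | --- | --- |
| 250 | 292 | -42 | 1764 |
| 310 | 292 | 18 | 324 |
| 280 | 292 | -12 | 144 |
| 410 | 292 | 118 | 13924 |
| 210 | 292 | -82 | 6724 |
| ∑x = 1460 | | | ∑$(x - \bar{x})^2$ = 22880 |

Complete the calculation

Solution

The mean

$$\bar{x} = \frac{1460}{5} = 292 \text{ pounds}$$

The sample variance

$$s^2 = \frac{22880}{5 - 1} = 5720 \text{ (pounds squared)}$$

The sample standard deviation

$$s = \sqrt{5720} \approx 75.63 \text{ pounds (to 2 d.p.)}$$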

Additional example

Please consider the following dataset:

5, 7, 7, 8, 8

Calculate the variance and the standard deviation.

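The first thing is to calculate the mean as follows:

Mean = (5 + 7 + 7 + 8 + 8) / 5 = 35 / 5 = 7

The sample variance

$$s^2 = \frac{(5-7)^2 + (7-7)^2 + (7-7)^2 + (8-7)^2 + (8-7)^2}{5 - 1} = \frac{6}{4} = 1.5$$

The sample standard deviation

$$s = \sqrt{1.5} \approx 1.22$$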
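Both examples can be checked with Python's `statistics` module. This sketch assumes the sample versions of the formulas, which divide by n − 1: `statistics.variance` and `statistics.stdev` use that divisor, while `statistics.pvariance` and `statistics.pstdev` divide by n instead. The variable names are illustrative.

```python
import statistics

weekly_profits = [250, 310, 280, 410, 210]  # pounds
small_dataset = [5, 7, 7, 8, 8]

for name, data in [("Weekly profits", weekly_profits),
                   ("Additional example", small_dataset)]:
    mean = statistics.mean(data)
    variance = statistics.variance(data)  # divides by n - 1
    std_dev = statistics.stdev(data)      # square root of the variance
    print(f"{name}: mean={mean}, variance={variance}, sd={std_dev:.2f}")
```

A Casio calculator typically offers both versions as separate keys, so it is worth checking which divisor a given answer uses.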

Standard deviation from ungrouped data

Example: The number of transactions relating to foreign currency in a bank.

Number of transactions (x)   Frequency (ƒ)   ƒx     (x - x̄)   (x - x̄)²   ƒ(x - x̄)²
8                            3               24     -7.17      51.4089     154.2267
12                           7               84     -3.17      10.0489     70.3423
16                           12              192    0.83       0.6889      8.2668
17                           8               136    1.83       3.3489      26.7912
19                           5               95     3.83       14.6689     73.3445

n = ∑ƒ = 35   ∑ƒx = 531   ∑ƒ(x - x̄)² = 332.9715

$\bar{x} = \dfrac{\sum fx}{n} = \dfrac{531}{35} = 15.17$ transactions

Where n is the sum of frequencies ∑ƒ

Complete the calculation

Solution
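The calculation from a frequency table can be sketched in Python as follows. The function name `mean_and_sd_from_frequencies` is hypothetical, and the sketch assumes the same n - 1 divisor as the sample standard deviation formula used for a list of numbers, with n equal to the sum of the frequencies. For grouped data the class mid-points take the place of x.

```python
from math import sqrt

def mean_and_sd_from_frequencies(values, frequencies):
    """Return the mean and sample standard deviation of a frequency table."""
    n = sum(frequencies)                                   # n is the sum of frequencies
    x_bar = sum(f * x for x, f in zip(values, frequencies)) / n
    sum_sq = sum(f * (x - x_bar) ** 2 for x, f in zip(values, frequencies))
    return x_bar, sqrt(sum_sq / (n - 1))

# Number of transactions relating to foreign currency in a bank
transactions = [8, 12, 16, 17, 19]
freq = [3, 7, 12, 8, 5]
mean_t, sd_t = mean_and_sd_from_frequencies(transactions, freq)

# Revenues in millions of pounds, using class mid-points
midpoints = [55, 75, 95, 115, 135, 155, 175, 195, 215]
freq_rev = [10, 18, 6, 4, 3, 2, 2, 4, 1]
mean_r, sd_r = mean_and_sd_from_frequencies(midpoints, freq_rev)

print(round(mean_t, 2), round(sd_t, 2), round(mean_r, 2), round(sd_r, 2))
```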

Standard deviation from grouped data


Reconsider the frequency distribution of revenues £ (m) taken at the bank

Class: value of revenues £(m)   Class mid-points (x)   Frequencies (ƒ)   ƒx     (x - x̄)   (x - x̄)²   ƒ(x - x̄)²
45 but less than 65             55                     10                550    -44.8      2007.04     20070.4
65 but less than 85             75                     18                1350   -24.8      615.04      11070.72
85 but less than 105            95                     6                 570    -4.8       23.04       138.24
105 but less than 125           115                    4                 460    15.2       231.04      924.16
125 but less than 145           135                    3                 405    35.2       1239.04     3717.12
145 but less than 165           155                    2                 310    55.2       3047.04     6094.08
165 but less than 185           175                    2                 350    75.2       5655.04     11310.08
185 but less than 205           195                    4                 780    95.2       9063.04     36252.16
205 but less than 225           215                    1                 215    115.2      13271.04    13271.04
Total                                                  n = ∑ƒ = 50       ∑ƒx = 4990                    ∑ƒ(x - x̄)² = 102848

Where n is the sum of frequencies ∑ƒ

Complete the calculations

Solution

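The mean is $\bar{x} = 4990 / 50 = 99.8$ million pounds and the sample standard deviation is $s = \sqrt{102848 / 49} = \sqrt{2098.94} = 45.81$ million pounds (to 2 d.p.).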

Other measures of dispersion


The range

Pearson’s coefficient of skewness

Coefficient of Variation

The Range

2 4 6 6 8 9 10

R = highest value – lowest value

Calculate the range and mention its advantages and disadvantages

Solution

The range = highest value – lowest value

The range = 10 – 2 = 8.

Advantage

It is easy to calculate.

Disadvantage

Please complete the advantage and disadvantage.

Pearson’s coefficient of skewness

Pearson’s coefficient of skewness (Sk) is given by the following formula:

Skewness = $\dfrac{3(\bar{x} - \text{median})}{s}$

Where:
$\bar{x}$: is the sample mean.
median: is the sample median.
s: is the sample standard deviation.

The number of transactions relating to foreign currency in a bank

Number of transactions (x)   (x - x̄)   (x - x̄)²
8                            -6.4       40.96
12                           -2.4       5.76
16                           1.6        2.56
17                           2.6        6.76
19                           4.6        21.16

∑x = 72                                 ∑(x - x̄)² = 77.2

Complete the calculations and interpret the result

You need to calculate first the mean, the median and the standard deviation. Then, solve the skewness equation.

Solution

Mean: $\bar{x} = 72 / 5 = 14.4$.

Median = 16. The position of the median was calculated from the equation (n + 1) / 2 = (5 + 1) / 2 = 3. Thus, the third number from the table is 16. The numbers should be arranged in ascending order, from the smallest to the largest value.

Sample standard deviation: $s = \sqrt{77.2 / 4} = \sqrt{19.3} = 4.39$ (to 2 d.p.).

Skewness = $\dfrac{3(14.4 - 16)}{4.39} = -1.09$ (to 2 d.p.), so the data are negatively skewed.

In Excel, you will find a different formula. The formula is as follows:

$\text{SKEW} = \dfrac{n}{(n-1)(n-2)} \sum \left( \dfrac{x_i - \bar{x}}{s} \right)^3$

After entering your data, the Excel formula is =SKEW(range of data, for example, A1:A10). Skewness is based on the third moment around the mean. It helps us to understand the shape of the probability distribution. Skewness is a measure of asymmetry. For a random variable, the first moment of a probability distribution is the mean. The second moment around the mean is the variance. The third moment around the mean measures skewness. The distribution could be positively or negatively skewed.

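Asymmetry of the distribution

Positively skewed (skewed to the right)

[Figure: positively skewed distribution curve with the mode, median and mean marked]

The mean is bigger than the median, which is bigger than the mode.

Asymmetry of the distribution

Negatively skewed (skewed to the left)

[Figure: negatively skewed distribution curve with the mean, median and mode marked]

The mean is less than the median, which is less than the mode.

In a symmetrical distribution, the mean = median = mode.

[Figure: symmetrical distribution curve with mean = median = mode at the centre]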

I will illustrate based on the above example how to calculate skewness by using the Excel formula.

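Number of transactions (x), cells A2:A6   Average (x̄)   (x - x̄)   (x - x̄)³
8                                         14.4           -6.4       -262.144
12                                        14.4           -2.4       -13.824
16                                        14.4           1.6        4.096
17                                        14.4           2.6        17.576
19                                        14.4           4.6        97.336

∑(x - x̄)³ = -156.96

The sample standard deviation is 4.39318 and its cube is 4.39318^3 = 84.79. Excel multiplies the sum of the cubed deviations divided by s³ by n / ((n - 1)(n - 2)), which gives 5 / (4 x 3) x (-156.96 / 84.79) = -0.77.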

If you use the formula of skewness in Excel as follows:

=SKEW(A2:A6), then, you should get -0.77.

In this case the distribution of the data is negatively skewed to the left.
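Both skewness measures can be reproduced with a few lines of Python. This is a sketch for checking the figures, not a replacement for the Excel function: the second expression follows the adjusted sample skewness formula documented for Excel's SKEW, and the variable names are illustrative.

```python
from statistics import mean, median, stdev

data = [8, 12, 16, 17, 19]   # number of transactions
n = len(data)
x_bar = mean(data)
s = stdev(data)              # sample standard deviation, divisor n - 1

# Pearson's coefficient of skewness: 3 * (mean - median) / s
pearson_sk = 3 * (x_bar - median(data)) / s

# Adjusted sample skewness, as documented for Excel's SKEW
sum_cubed = sum(((x - x_bar) / s) ** 3 for x in data)
excel_skew = n / ((n - 1) * (n - 2)) * sum_cubed

print(round(pearson_sk, 2), round(excel_skew, 2))
```

Both values are negative, so the two measures agree on the direction of the skew even though their magnitudes differ.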

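Kurtosis is based on the fourth moment around the mean. It is a measure of the tallness or flatness of the probability distribution. If the kurtosis value is less than 3, then, the distribution is platykurtic. If the kurtosis value is greater than 3, then, the distribution is leptokurtic. A normal distribution has a kurtosis value of three and it is called mesokurtic.

The formula for kurtosis in Excel is as follows:

$\text{KURT} = \dfrac{n(n+1)}{(n-1)(n-2)(n-3)} \sum \left( \dfrac{x_i - \bar{x}}{s} \right)^4 - \dfrac{3(n-1)^2}{(n-2)(n-3)}$

After entering your data, the Excel formula is =KURT(range of data, for example, A1:A10). Excel's KURT reports excess kurtosis, that is kurtosis relative to the normal distribution, so a normal distribution gives a value close to 0, a negative value indicates a platykurtic distribution and a positive value indicates a leptokurtic distribution.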

I will illustrate based on the above example how to calculate kurtosis by using the Excel formula.

Number of transactions (x), column A   Average (x̄)   (x - x̄)   (x - x̄)⁴
8                                      14.4           -6.4       1677.7216
12                                     14.4           -2.4       33.1776
16                                     14.4           1.6        6.5536
17                                     14.4           2.6        45.6976
19                                     14.4           4.6        447.7456

∑(x - x̄)⁴ = 2210.896

The sample standard deviation is 4.39318 and its fourth power is 4.39318^4 = 372.49

Substituting into the Excel kurtosis formula with n = 5:

(5 x 6) / (4 x 3 x 2) x (2210.896 / 372.49) - (3 x 4²) / (3 x 2) = (1.25 x 5.9355) - 8 = -0.58

If you use the formula of kurtosis in Excel as follows:

=KURT (A2:A6), then, you should get -0.58.
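The kurtosis figure can be checked in the same way. The sketch below follows the sample excess kurtosis formula documented for Excel's KURT; the variable names are assumptions made for this illustration.

```python
from statistics import mean, stdev

data = [8, 12, 16, 17, 19]   # number of transactions
n = len(data)
x_bar = mean(data)
s = stdev(data)              # sample standard deviation, divisor n - 1

# Sum of standardised deviations raised to the fourth power
sum_fourth = sum(((x - x_bar) / s) ** 4 for x in data)

# Sample excess kurtosis, as documented for Excel's KURT
scale = n * (n + 1) / ((n - 1) * (n - 2) * (n - 3))
correction = 3 * (n - 1) ** 2 / ((n - 2) * (n - 3))
excel_kurt = scale * sum_fourth - correction

print(round(excel_kurt, 2))
```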

Is it platykurtic, leptokurtic or mesokurtic?

[Figure: leptokurtic, mesokurtic and platykurtic distribution curves]

Coefficient of Variation

The coefficient of variation measures the relative dispersion in the data. It is expressed as a pure number without any units. This is to be contrasted with standard deviation and other measures of absolute dispersion.

It is used to compare the relative dispersion of two data sets which are measured in different units or have different means. The standard deviation cannot be used directly to compare their variability.

$CV = \dfrac{s}{\bar{x}}$    (1)

Where:
s: is the standard deviation of the sample
$\bar{x}$: is the mean of the sample

Example: two data sets

A: 10 20 30 40 50

B: 5 10 15 2 4

Find the coefficient of variation for data sets A and B and compare the results.

To be able to solve equation (1), you need to find the mean and the standard deviation for each sample and substitute them in equation (1).

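Solution

Data set A (mean = 150 / 5 = 30)

x     (x - x̄)   (x - x̄)²
10    -20        400
20    -10        100
30    0          0
40    10         100
50    20         400

∑(x - x̄)² = 1000

Data set B (mean = 36 / 5 = 7.2)

x     (x - x̄)   (x - x̄)²
5     -2.2       4.84
10    2.8        7.84
15    7.8        60.84
2     -5.2       27.04
4     -3.2       10.24

∑(x - x̄)² = 110.8

For data set A, $s = \sqrt{1000 / 4} = 15.81$ and the coefficient of variation is 15.81 / 30 = 0.53. For data set B, $s = \sqrt{110.8 / 4} = 5.26$ and the coefficient of variation is 5.26 / 7.2 = 0.73. Data set B therefore has the greater relative dispersion, although its standard deviation is smaller.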
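The comparison of the two data sets can be sketched in Python as follows. The helper name `coefficient_of_variation` is hypothetical, and the sketch assumes the sample standard deviation (n - 1 divisor) divided by the sample mean.

```python
from statistics import mean, stdev

def coefficient_of_variation(values):
    """Sample standard deviation divided by the sample mean (a pure number)."""
    return stdev(values) / mean(values)

data_a = [10, 20, 30, 40, 50]
data_b = [5, 10, 15, 2, 4]

cv_a = coefficient_of_variation(data_a)
cv_b = coefficient_of_variation(data_b)

print(round(cv_a, 3), round(cv_b, 3))
```

Multiplying the result by 100 expresses the coefficient of variation as a percentage.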


Example of interquartile range

[Figure: ordered observations from the lowest to the highest, with the 1st quartile (Q1), the 2nd quartile (Q2) and the 3rd quartile (Q3) marked]

The first quartile, Q1, is defined as the median of the first half of the values. The third quartile, Q3, is defined as the median of the second half of the observations. The median, Q2, is the middle value of your dataset and its position is calculated from the equation (n+1)/2.

The quartiles divide the area under the distribution into four equal parts.

[Figure: distribution curve with the 1st quartile, the median and the 3rd quartile marked]

Interquartile range = Q3 – Q1

As an example, consider the following data set.

3 , 5 , 7 , 8 , 10

The position of the median is (n + 1) / 2 = (5 + 1) / 2 = 3. In the example mentioned, the third value is 7, so the median = 7.

Q1 is the median of the values below the calculated median. In this case, it is (3 + 5) / 2 = 4. The first quartile is also defined as a number for which 25% of the data is less than that number.

Q3 is the median of the values above the calculated median. In this case, it is (8 + 10) / 2 = 9. The third quartile is also defined as a number for which 75% of the data is less than that number. You could check the percentage figure using the relative frequency. Interquartile range = 9 - 4 = 5.

Data set   Relative frequency
3          0.09
5          0.15
7          0.21
8          0.24
10         0.30

Total 33

Another example. Please consider the following dataset of numerical values:

1, 2, 3, 4, 5, 6, 7, 8, 10, 9

The first step is to arrange the data in ascending order:

1, 2, 3, 4, 5, 6, 7, 8, 9, 10

The position of the median is (n + 1) / 2 = (10 + 1) / 2 = 5.5, so the median is the average of the fifth and sixth values: (5 + 6) / 2 = 5.5.

Q1 is the median of the values below the calculated median. The first five values are 1, 2, 3, 4, 5.

The position of their median is (5 + 1) / 2 = 3. Thus, the third value is 3. Q1 = 3.

Q3 is the median of the values above the calculated median. The last five values are 6, 7, 8, 9, 10. The position of their median is (5 + 1) / 2 = 3. Thus, the third value is 8. Q3 = 8.
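The median-of-halves method used in both examples can be written as a small Python function. This is a minimal sketch; the function name `quartiles` is chosen here, and the method differs from the interpolation used by some software, so results may not match other quartile functions exactly.

```python
from statistics import median

def quartiles(values):
    """Q1, median and Q3 using the median-of-halves method."""
    ordered = sorted(values)              # arrange the data in ascending order
    n = len(ordered)
    half = n // 2
    lower = ordered[:half]                # values below the median position
    upper = ordered[half + n % 2:]        # values above the median position
    return median(lower), median(ordered), median(upper)

q1_a, q2_a, q3_a = quartiles([3, 5, 7, 8, 10])
q1_b, q2_b, q3_b = quartiles([1, 2, 3, 4, 5, 6, 7, 8, 10, 9])

iqr_a = q3_a - q1_a   # interquartile range = Q3 - Q1
iqr_b = q3_b - q1_b

print(q1_a, q2_a, q3_a, iqr_a)
print(q1_b, q2_b, q3_b, iqr_b)
```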

Percentiles

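The position of the value that is related to the Pth percentile is found by the following equation:

$L_P = \dfrac{P}{100} \times n$

Where P is the required percentile and n is the number of observations arranged in ascending order.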

For example, let’s assume that we have n = 15 numbers arranged in ascending order.

2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16

It is required to calculate which value corresponds to the 8th percentile.

By applying the formula we have the following result:

Another example to consider is the following dataset:

5, 7, 8, 9, 10, 11, 12, 13, 14, 15

n = 10

Please calculate the 20th percentile.

Position of P20 = (20 / 100) x 10 = 2

The second number in the ordered list is 7.

P20 = 7

Consider the frequency distribution table which shows the beverage expenditure of 33 households.

Beverage expenditure in pounds   Frequency
0 under 1000                     10
1000 under 2000                  8
2000 under 3000                  6
3000 under 4000                  4
4000 under 5000                  3
5000 under 6000                  2

The following are required:

1) Construct a histogram and a cumulative frequency curve.
2) Calculate the mean, the median and the standard deviation.
3) Calculate the coefficient of skewness and comment on the result.

Solution

1) Histogram and a cumulative frequency curve.

[Figure: Histogram. Vertical axis: frequency (0 to 10). Horizontal axis: beverage expenditure in pounds, in classes from "0 under 1000" to "5000 under 6000".]

[Figure: Cumulative frequency curve. Vertical axis: cumulative frequency (0 to 35). Horizontal axis: beverage expenditure in pounds, from "less than 1000" to "less than 6000".]

2) Calculate the mean, the median and the standard deviation.

Solution

This is the layout of the table in Excel.

Beverage expenditure in pounds   Frequency   Midpoint   f * Midpoint
0 under 1000                     10          500        5000
1000 under 2000                  8           1500       12000
2000 under 3000                  6           2500       15000
3000 under 4000                  4           3500       14000
4000 under 5000                  3           4500       13500
5000 under 6000                  2           5500       11000
Total                            33                     70500

I have included the table for the standard deviation. Please complete the calculations and thanks for your effort.

Beverage expenditure in pounds   Class mid-points (x)   Frequencies (ƒ)   ƒx     (x - x̄)                    (x - x̄)²     ƒ(x - x̄)²
0 under 1000                     500                    10                5000   500 – 2136.36 = -1636.36   2677674.05   26776740.5
1000 under 2000                  1500                   8
2000 under 3000                  2500                   6
3000 under 4000                  3500                   4
4000 under 5000                  4500                   3
5000 under 6000                  5500                   2
Total                                                   n = ∑ƒ = 33       ∑ƒx =                                          ∑ƒ(x - x̄)² =

$s = \sqrt{\dfrac{\sum f(x - \bar{x})^2}{n - 1}}$

Where:
x = individual observation (here, the class mid-point)
$\bar{x}$ = the mean
n = the total number of observations
√ = the square root
Σ = the sum of

Once you have calculated the sample standard deviation, then, please, calculate the coefficient of skewness.

Skewness = $\dfrac{3(\bar{x} - \text{median})}{s}$

Where:
$\bar{x}$: is the sample mean.
median: is the sample median.
s: is the sample standard deviation.

Instructions of how to use your Casio calculator model fx – 83ES


Clear the Memory

Shift ------- 9 ------- 3------ = -------- AC Key

Measures of location and dispersion from a list of numbers

Clear the Memory

Shift ------- 9 ------- 3------ = -------- AC Key

Mode --------- 2 (STAT) ------- 1 (1-VAR) -------- Input the numbers. For example, 5 then =, 2 then =, etc.

AC ------- Shift ------------ 1 (STAT) --------- 5 (Var) -------- Then select any measure of location or dispersion according to its number. To select more than one statistic you have to repeat the whole procedure.

Measures of location and dispersion from grouped data

Clear the Memory

Shift ------- 9 ------- 3------ = -------- AC Key

Shift -------- Mode -------- press the arrow down --------- then select 3 (STAT) ------- then 1 (ON)

Mode ----- 2 (STAT) -------- 1 (1-VAR) ----- Then input your x values. For example, 3 then =, 4 then =. With the arrow keys, you can move from one column of the table to the other. Thus, input your x values first, then the frequencies.

Finally, AC ------ Shift ----- 1 (STAT) ------- 5 (VAR) ------- then select the appropriate measure of location or dispersion according to its number. Repeat the same procedure, namely from Shift ------ 1 (STAT) ------- 5 (VAR), to access other measures.

Instructions of how to use your Casio calculator model fx – 83MS


Clear the memory / Two options

Shift ------- mode/ CLR ------ 1 The first option clears the memory in the mode that you are in.

Or

Shift -------- mode ----- 3 = = The second option clears everything and you start from the beginning.

Measures of location and dispersion are calculated through the SD function. Press mode and then select 2, which corresponds to the SD function.

Please consider the data set 3, 4, 7, 8, 10, 15, 17, 20

Once you are in the SD mode, please start to input your data as follows:

3 M+      first observation, n = 1
4 M+      second observation, n = 2
7 M+      n = 3
8 M+      n = 4
10 M+     n = 5
15 M+     n = 6
17 M+     n = 7
20 M+     n = 8

Please make sure that you have inserted eight observations.

By selecting for example 1 and pressing =, you will get the mean. In this example, the mean = 10.5

By pressing 3 and =, you will get the sample standard deviation. In this example, it is 6.21 (to 2 d.p.).

How about this example? How are you going to calculate the mean and the sample standard deviation from grouped data on the Casio fx – 83MS?

Class: value of revenues £(m)   Class mid-points (x)   Frequencies (ƒ)   ƒx     (x - x̄)   (x - x̄)²   ƒ(x - x̄)²
45 but less than 65             55                     10                550    -44.8      2007.04     20070.4
65 but less than 85             75                     18                1350   -24.8      615.04      11070.72
85 but less than 105            95                     6                 570    -4.8       23.04       138.24
105 but less than 125           115                    4                 460    15.2       231.04      924.16
125 but less than 145           135                    3                 405    35.2       1239.04     3717.12
145 but less than 165           155                    2                 310    55.2       3047.04     6094.08
165 but less than 185           175                    2                 350    75.2       5655.04     11310.08
185 but less than 205           195                    4                 780    95.2       9063.04     36252.16
205 but less than 225           215                    1                 215    115.2      13271.04    13271.04
Total                                                  n = ∑ƒ = 50       ∑ƒx = 4990                    ∑ƒ(x - x̄)² = 102848

Where n is the sum of frequencies ∑ƒ

The steps are as follows:

Measures of location and dispersion are calculated through the SD function. Press mode and then select 2, which corresponds to the SD function.

The dataset that we are interested in is the midpoint (x) followed by the frequencies. I have attached the table for convenience.

Class mid-points (x)   Frequencies (ƒ)
55                     10
75                     18
95                     6
115                    4
135                    3
155                    2
175                    2
195                    4
215                    1

n = ∑ƒ = 50

Once you are in the SD mode, please start to input your data as follows. When you are dealing with grouped data, you will need to use a semicolon (;) between the midpoint and its frequency.

The data will be inserted as follows:

55; 10 M+     first class, n = 10
75; 18 M+     second class, n = 28
95; 6 M+      n = 34
115; 4 M+     n = 38
135; 3 M+     n = 41
155; 2 M+     n = 43
175; 2 M+     n = 45
195; 4 M+     n = 49
215; 1 M+     n = 50

If you get a syntax error, press delete and continue to input your data. You should get at the end n = 50, which is the total of the frequencies. If you get a different number, then you inserted a wrong number and you should make a new start.

By selecting for example 1 and pressing =, you will get the mean. In this example, the mean = 99.8

By pressing 3 and =, you will get the sample standard deviation. In this example, it is 45.81 (to 2 d.p.).

Time - series

Definition of time - series

A time - series is a statistical series which shows how a given set of data has been changing over time.


Components of a time - series

Time - series are often composed of four distinct types of movement:

(a) Trend (T): this is the general movement in the data, which represents the general direction in which the figures are moving.

(b) Seasonal Variation (S): these are regular fluctuations which take place within one complete period. If the data are quarterly, then they are fluctuations specifically associated with each quarter. If the data are daily, then the fluctuations are associated with each day.

(c) Cyclical Variation (C): this is a longer term regular fluctuation which may take several years to complete. To identify this factor we would need to have annual data.

(d) Random Variation (R): these are all the remaining factors that may make a difference at a particular point in time. From time to time they have a significant, but unpredictable, effect on the data. For example, the weather affects the data but cannot yet be predicted reliably.

In the following example, we ignore cyclical variation(C) and consider T, S and R only.

In this session, we will assume that the total variation in the time-series (denoted by y) is the sum of the trend (T), the seasonal variation (S) and the random variation (R).

This is called the additive model:

y = T + S + R

Since the random element is unpredictable, we shall make an assumption that its overall value, or average value, is 0. Thus, the equation becomes as follows:

y = T + S or S = y - T

In the alternative multiplicative model, it is assumed that:

y = T x S x R

The random element is again assumed to have no effect on average, but in the multiplicative model this means that its average value is 1 rather than 0. Thus, the equation becomes as follows:

y = T x S or S = y / T

The additive model is appropriate when the variations about the trend are of similar magnitude in the same period of each year.


The multiplicative model is preferred when the variations about the trend tend to increase or decrease proportionately with the trend.

Example of an additive model of time - series

Consider the following example in which y represents a company’s quarterly sales (£000).

Year   Quarter   y       4 quarter moving average (MA)   Centred MA (T)   Seasonal effect (y - T)
1      1         87.5
       2         73.2    78.5
       3         64.8    79.2                            78.85            -14.05
       4         88.5    79.9                            79.55
2      1         90.3
       2         76.0
       3         69.2
       4         94.7
3      1         93.9
       2         78.4
       3         72.0
       4         100.3

Complete the table

The four quarter moving average of the first four observations is displayed between quarters 2 and 3. The 78.5 figure was obtained by adding 87.5 + 73.2 + 64.8 + 88.5 and dividing by four. The 78.85 figure was obtained by adding 78.5 + 79.2 and dividing by two. The seasonal effect was obtained by subtracting the trend from sales (y - T).

Plot the y and T values on the vertical axis against time in years and quarters on the horizontal axis.

Estimating the seasonal variation

The seasonal variation can be estimated by averaging the values of y - T for each quarter.

Quarters   1   2   3   4
Year 1
Year 2
Year 3
Total
Average

Strictly, these seasonal factors should sum to zero. If the sum differs significantly from zero the seasonal factors should be adjusted to ensure a zero sum. To adjust, take the net value of the unadjusted averages, change its sign (for example, from – to +), divide it by 4 and add the result to each average.

Quarters                 1   2   3   4
Average (unadjusted S)
Adjusted S

Sum of all adjusted S = 0

Forecasting

To forecast the company’s sales in the first quarter of year 4:

(1) Calculate the average increase in the trend from the formula:

$\dfrac{T_n - T_1}{n - 1}$

Where Tn is the last trend estimate (85.5 in the example), T1 is the first trend estimate (78.9) and n is the number of trend estimates calculated.

In the example, the average increase in the trend is:

(85.5 – 78.9) / 7 = 0.94

(2) Forecast the trend for the first quarter of year 4 by taking the last trend estimate and adding on three average increases in the trend. This gives:

85.5 + (3 x 0.94) =

(3) Now adjust for the seasonal variation by adding on the appropriate seasonal factor for the first quarter.

Forecast = 88.32 + =

Complete the calculation

Now repeat the above for the second, third and fourth quarters of year 4

Forecasting the company sales year 4

Year   Quarter   Sales   Trend
4      1
       2
       3
       4

Solution of the additive model of the time - series problem

Consider the following example in which y represents a company’s quarterly sales (£000).

Year   Quarter   y       4 quarter moving average (MA)   Centred MA (T)   y - T
1      1         87.5
       2         73.2    78.5
       3         64.8    79.2                            78.85            -14.05
       4         88.5    79.9                            79.55            8.95
2      1         90.3    81.0                            80.45            9.85
       2         76.0    82.55                           81.775           -5.775
       3         69.2    83.45                           83               -13.8
       4         94.7    84.05                           83.75            10.95
3      1         93.9    84.75                           84.4             9.5
       2         78.4    86.15                           85.45            -7.05
       3         72.0
       4         100.3

Estimating the seasonal variation

The seasonal variation can be estimated by averaging the values of y - T for each quarter.

Quarters   1        2         3         4
Year 1                        -14.05    8.95
Year 2     9.85     -5.775    -13.8     10.95
Year 3     9.5      -7.05
Total      19.35    -12.825   -27.85    19.9
Average    9.675    -6.4125   -13.925   9.95

These results imply that quarters 1 and 4 are high sales quarters whereas quarter 2 and quarter 3 are low sales quarters.

Strictly, these seasonal factors should sum to zero. If the sum differs significantly from zero the seasonal factors should be adjusted to ensure a zero sum.

In this case, the net value of the unadjusted S is 9.675 - 6.4125 - 13.925 + 9.95 = -0.7125

The adjustment added to each average is + 0.7125 / 4 = 0.178125

Quarters                 1          2           3            4
Average (unadjusted S)   9.675      -6.4125     -13.925      9.95
Adjusted S               9.853125   -6.234375   -13.746875   10.128125

Sum of adjusted S = 0

I have included the layout of the table with the data that you will input in Excel to get the line chart. Sales and trend are plotted on the vertical axis and the quarters on the horizontal axis. To calculate a four quarter moving average, open Tools in Excel, then Data Analysis, then select Moving Average. In the input range, select all the sales figures. In the interval box, please write 4, as we use quarterly data. In the output range, select any cell and press OK. Adjust the data to start from the second quarter of year 1. Then, calculate the centred moving average by adding, for example, the first two figures of the four quarter moving average and dividing by two. Then, calculate the seasonal effect. It is the sales minus the centred moving average for each quarter.

Quarter   Sales   Trend
1         87.5
2         73.2
3         64.8    78.85
4         88.5    79.55
1         90.3    80.45
2         76      81.775
3         69.2    83
4         94.7    83.75
1         93.9    84.4
2         78.4    85.45
3         72
4         100.3

[Figure: line chart "Additive model of time series". Vertical axis: sales and trend (0 to 120). Horizontal axis: quarters 1 to 4 of each year. Series: Sales, Trend.]

I have also added the layout of the table and the graph in Excel that shows sales in different quarters.

Quarter   Sales
1         87.5
2         73.2
3         64.8
4         88.5
1         90.3
2         76
3         69.2
4         94.7
1         93.9
2         78.4
3         72
4         100.3

[Figure: line chart "Sales in different quarters". Vertical axis: sales (0 to 120). Horizontal axis: quarters 1 to 4 of each year. Series: Sales.]

Forecasting

To forecast the company’s sales in the first quarter of year 4:

(1) Calculate the average increase in the trend from the formula:

$\dfrac{T_n - T_1}{n - 1}$

Where Tn is the last trend estimate (85.45 in the example), T1 is the first trend estimate (78.85) and n is the number of trend estimates calculated. In our case, we have eight.

In the example, the average increase in the trend is:

(85.45 – 78.85) / 7 = 0.94

(2) Forecast the trend for the first quarter of year 4 by taking the last trend estimate and adding on three average increases in the trend. This gives:

85.45 + (3 x 0.94) = 88.27

(3) Now adjust for the seasonal variation by adding on the appropriate seasonal factor for the first quarter.

Forecast = 88.27 + 9.853125 = 98.12

Now repeat the above for the second, third and fourth quarters of year 4

Forecasting the company sales year 4

Year   Quarter   Trend (T)   Seasonal effect   Forecast (trend + seasonal effect)
4      1         88.27       9.853125          98.12 (to 2 d.p.)
       2         89.21       -6.234375         82.98 (to 2 d.p.)
       3         90.15       -13.746875        76.40 (to 2 d.p.)
       4         91.09       10.128125         101.22 (to 2 d.p.)
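The whole additive procedure (centred moving average, seasonal factors, adjustment and forecast) can be sketched in Python as follows. This is an illustration under stated assumptions: the function and variable names are chosen here, and the average increase in the trend is rounded to 2 d.p. so that the figures follow the hand calculation.

```python
def centred_moving_average(y, period=4):
    """Four quarter moving average, then centred by averaging adjacent pairs."""
    ma = [sum(y[i:i + period]) / period for i in range(len(y) - period + 1)]
    return [(ma[i] + ma[i + 1]) / 2 for i in range(len(ma) - 1)]

sales = [87.5, 73.2, 64.8, 88.5, 90.3, 76.0, 69.2, 94.7, 93.9, 78.4, 72.0, 100.3]
trend = centred_moving_average(sales)
offset = 2  # the first trend estimate belongs to year 1, quarter 3 (index 2)

# Seasonal effect y - T, collected by quarter (0 = quarter 1, ..., 3 = quarter 4)
effects = {q: [] for q in range(4)}
for i, t in enumerate(trend):
    effects[(i + offset) % 4].append(sales[i + offset] - t)
unadjusted = [sum(effects[q]) / len(effects[q]) for q in range(4)]

# Adjust so that the seasonal factors sum to zero
correction = -sum(unadjusted) / 4
adjusted = [s + correction for s in unadjusted]

# Average increase in the trend, rounded to 2 d.p. as in the hand calculation
avg_increase = round((trend[-1] - trend[0]) / (len(trend) - 1), 2)

# Forecast year 4: the last trend estimate is 3 quarters before year 4, quarter 1
last_index = offset + len(trend) - 1
forecasts = []
for q in range(4):
    steps = (len(sales) + q) - last_index
    forecasts.append(round(trend[-1] + steps * avg_increase + adjusted[q], 2))

print(forecasts)
```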

Example of a multiplicative model of time - series.

Consider the level of economic activity over three years expressed in millions pounds.

Year   Quarter   Economic activity
1      1         102
       2         110
       3         112
       4         115
2      1         101
       2         113
       3         114
       4         118
3      1         120
       2         121
       3         122
       4         123

It is required to calculate the following:

1) Calculate a moving average trend.

2) Calculate the seasonal factors for each quarter using the multiplicative model.

3) Forecast the economic activity for the four quarters of year 4.

Consider the following example in which y represents the economic activity expressed in millions pounds.

Year   Quarter   Economic activity (y)   4 quarter moving average   Centred moving average (T)   Seasonal effect, S = y / T
1      1         102
       2         110                     109.75
       3         112                     109.5                      109.625                      1.022
       4         115                     110.25                     109.875                      1.047
2      1         101                     110.75                     110.5                        0.914
       2         113                     111.5                      111.125                      1.017
       3         114                     116.25                     113.875                      1.001
       4         118                     118.25                     117.25                       1.006
3      1         120                     120.25                     119.25                       1.006
       2         121                     121.5                      120.875                      1.001
       3         122
       4         123

Estimating the seasonal variation

The seasonal variation can be estimated by averaging the values of y/T for each quarter.

Year      Quarter 1   Quarter 2   Quarter 3   Quarter 4
1                                 1.022       1.047
2         0.914       1.017       1.001       1.006
3         1.006       1.001
Total     1.92        2.018       2.023       2.053
Average   0.96        1.009       1.0115      1.0265

These results imply that quarter 1 recorded a low economic activity and quarters 2, 3 and 4 recorded high economic activity. The sum of the averages should equal 4. In our case, we have 0.96 + 1.009 + 1.0115 + 1.0265 = 4.007. The adjustments are made by multiplying each average by 4 / 4.007 = 0.998. The following table shows the adjustments.

Quarter   Average   Adjustment   Adjusted average   Seasonal effect (rounded)
1         0.96      0.998        0.958              0.96
2         1.009     0.998        1.007              1.01
3         1.0115    0.998        1.009              1.01
4         1.0265    0.998        1.024              1.02

Total                                               4

Forecasting

We have used the same steps as the additive models except that we multiplied the seasonal factor instead of adding it.

Calculate the average increase in the trend from the formula:

$\dfrac{T_n - T_1}{n - 1}$

Where Tn is the last trend estimate (120.875 in the example), T1 is the first trend estimate (109.625) and n is the number of trend estimates calculated. In our case, we have eight.

In the example, the average increase in the trend is:

(120.875 – 109.625) / 7 = 1.61

Forecast the trend for each quarter of year 4 by taking the last trend estimate, which belongs to the second quarter of year 3, and adding on three, four, five and six average increases in the trend respectively. This gives:

Quarter 1: 120.875 + (3 x 1.61) = 125.705
Quarter 2: 120.875 + (4 x 1.61) = 127.315
Quarter 3: 120.875 + (5 x 1.61) = 128.925
Quarter 4: 120.875 + (6 x 1.61) = 130.535

Now adjust for the seasonal variation by multiplying by the appropriate seasonal factor. For the first quarter:

Forecast = 125.705 x 0.96 = 120.6768 or 120.68 (to 2 d.p.).

Forecasting the economic activity for year 4

Year   Quarter   Trend (T)   Seasonal effect   Forecast (trend x seasonal effect)
4      1         125.705     0.96              120.68 (to 2 d.p.)
       2         127.315     1.01              128.59 (to 2 d.p.)
       3         128.925     1.01              130.21 (to 2 d.p.)
       4         130.535     1.02              133.15 (to 2 d.p.)
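The multiplicative procedure can be sketched in Python in the same way. The names are illustrative, and two rounding choices are assumptions made to follow the hand calculation: the average increase in the trend and the adjusted seasonal factors are both rounded to 2 d.p. before forecasting.

```python
def centred_moving_average(y, period=4):
    """Four quarter moving average, then centred by averaging adjacent pairs."""
    ma = [sum(y[i:i + period]) / period for i in range(len(y) - period + 1)]
    return [(ma[i] + ma[i + 1]) / 2 for i in range(len(ma) - 1)]

activity = [102, 110, 112, 115, 101, 113, 114, 118, 120, 121, 122, 123]
trend = centred_moving_average(activity)
offset = 2  # the first trend estimate belongs to year 1, quarter 3 (index 2)

# Seasonal effect S = y / T, collected by quarter (0 = quarter 1, ..., 3 = quarter 4)
ratios = {q: [] for q in range(4)}
for i, t in enumerate(trend):
    ratios[(i + offset) % 4].append(activity[i + offset] / t)
unadjusted = [sum(ratios[q]) / len(ratios[q]) for q in range(4)]

# Adjust so that the seasonal factors sum to 4, then round to 2 d.p.
factor = 4 / sum(unadjusted)
seasonal = [round(s * factor, 2) for s in unadjusted]

# Average increase in the trend, rounded to 2 d.p. as in the hand calculation
avg_increase = round((trend[-1] - trend[0]) / (len(trend) - 1), 2)

# Forecast year 4: trend forecast multiplied by the seasonal factor
last_index = offset + len(trend) - 1
forecasts = []
for q in range(4):
    steps = (len(activity) + q) - last_index
    forecasts.append(round((trend[-1] + steps * avg_increase) * seasonal[q], 2))

print(seasonal, forecasts)
```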

I have included the layout of the table with the data that you will input in Excel to get the line chart. Economic activity and trend are plotted on the vertical axis and the quarters on the horizontal axis. To calculate a four quarter moving average, open Tools in Excel, then Data Analysis, then select Moving Average. In the input range, select all the economic activity figures. In the interval box, please write 4, as we use quarterly data. In the output range, select any cell and press OK. Adjust the data to start from the second quarter of year 1. Then, calculate the centred moving average by adding, for example, the first two figures of the four quarter moving average and dividing by two. Then, calculate the seasonal effect. It is the economic activity divided by the centred moving average for each quarter.

Quarters   Economic activity   Trend
1          102
2          110
3          112                 109.625
4          115                 109.875
1          101                 110.5
2          113                 111.125
3          114                 113.875
4          118                 117.25
1          120                 119.25
2          121                 120.875
3          122
4          123

[Figure: line chart "Multiplicative time series model". Vertical axis: economic activity and trend (0 to 140). Horizontal axis: quarters 1 to 4 of each year. Series: Economic activity, Trend.]

I have also added the layout of the table and the graph in Excel that shows economic activity in different quarters.

Quarters   Economic activity
1          102
2          110
3          112
4          115
1          101
2          113
3          114
4          118
1          120
2          121
3          122
4          123

[Figure: line chart "Sales over quarters". Vertical axis: economic activity (0 to 140). Horizontal axis: quarters 1 to 4 of each year. Series: Economic activity.]

The exponential smoothing analysis tool predicts a value based on the forecast for the prior period, adjusted for the error in that prior forecast. The smoothing constant a determines how strongly forecasts respond to errors in the prior forecast.

$F_{t+1} = a A_t + (1 - a) F_t$

Where $A_t$ is the actual value in period t, $F_t$ is the forecast for period t and a = 1 – damping factor. For example, if the damping factor is 0.3, then,

a = 1 – 0.3 = 0.7

The alpha value a varies from 0 to 1: 0 ≤ a ≤ 1. Excel states that values of 0.2 to 0.3 are reasonable smoothing constants, which means that the current forecast should be adjusted 20 to 30 percent for error in the prior forecast.

As an example, please calculate the exponential smoothing of the price level for the first ten observations. In Excel, please go to Tools, then select Data Analysis and then Exponential Smoothing. In the input range, select all prices. In the damping factor box insert, for example, 0.3; the alpha will then be 0.7. The first forecast is set equal to the first price (C2 = B1), and the formula for calculating the remaining values will be as follows:

C3 = 0.7 * B2 + 0.3* C2

C4 = 0.7 * B3 + 0.3 * C3

C5 = 0.7 * B4 + 0.3 * C4

C6 = 0.7 * B5 + 0.3 * C5

C7 = 0.7 * B6 + 0.3 * C6

C8 = 0.7 * B7 + 0.3 * C7

C9 = 0.7 * B8 + 0.3 * C8

C10 = 0.7 * B9 + 0.3 * C9
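The cell formulas above follow one recurrence, which can be sketched in Python. The function name `exponential_smoothing` is hypothetical; the sketch assumes, as in the Excel output, that the first period has no forecast and that the second forecast equals the first actual price.

```python
def exponential_smoothing(values, damping=0.3):
    """Forecasts in the layout of the Excel tool: None first, then the recurrence."""
    alpha = 1 - damping
    forecasts = [None, values[0]]   # no forecast for period 1; period 2 uses the first actual
    for t in range(1, len(values) - 1):
        # next forecast = alpha * previous actual + damping * previous forecast
        forecasts.append(alpha * values[t] + damping * forecasts[t])
    return forecasts

prices = [150, 110, 105, 102, 90, 80, 70, 60, 50, 30]
smoothed = exponential_smoothing(prices, damping=0.3)

for period, (price, forecast) in enumerate(zip(prices, smoothed), start=1):
    shown = "N/A" if forecast is None else f"{forecast:.2f}"
    print(period, price, shown)
```

A larger damping factor gives more weight to the previous forecast, so the smoothed series reacts more slowly to changes in the prices.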

Observations (A)   Prices (B)   Exponential smoothing calculations in Excel (C)
1                  150          N/A
2                  110          150
3                  105          122
4                  102          110.10
5                  90           104.43
6                  80           94.33
7                  70           84.30
8                  60           74.29
9                  50           64.29
10                 30           54.29

The exponential smoothing chart will be as follows:

[Figure: line chart "Exponential Smoothing". Vertical axis: value (0 to 160). Horizontal axis: data point (1 to 10). Series: Actual, Forecast.]

Regression analysis

Definition of regression analysis

Regression analysis is concerned with examining the relation between two or more variables when it is believed that one of the variables (the dependent variable) is determined by the other variable or variables (the independent variables).

Variable: An attribute of an entity that can change and take different values which are capable of being observed / or measured. Sales for example.

Dependent variable: The variable whose values are predicted by the independent variable.

Independent variable: The variable that can be manipulated to predict the values of the dependent variable.

In this session, we focus on simple linear regression which has only one independent variable.

So the equation is:

$\hat{y} = a + bx$

Scatter diagram

• It is used to show the relationship between two variables. One variable is plotted against the other on a graph which thus displays a pattern of points.

• The pattern of points indicate the strength and direction of the two variables

A Scatter diagram

1 Input the dependent y values and the independent x values in two separate columns.
2 Highlight the columns containing the information.
3 Go to the chart wizard icon
4 Select the x,y scatter
5 Select the first scatter diagram i.e. the one with the points
6 Click on Next
7 Click on Series
   a) In Name write the name of the chart
   b) Make sure that the cells in the x values and the cells in the y values correspond to what is in the worksheet
8 Click on Next
   a) In the titles put in the titles for the (x) axis and the (y) axis
9 Click on Finish and the diagram will appear

Positive linear relation

• If the line around which the points tend to cluster runs from lower left to upper right, the relation is positive.

• It occurs when an increase in the value of one variable is associated with an increase in the value of other

Example

Dependent variable (y)   Independent variable (x)
Sales (£000)             Advertising (£000)
1                        1
2                        3
4                        4
4                        6
5                        8
7                        9
8                        11
9                        14

Sketch a scatter diagram of the data with y on the vertical axis and x on the horizontal axis

Solution

[Figure: scatter diagram. Vertical axis: Sales (Pounds, 000), 0 to 10. Horizontal axis: Advertising (Pounds, 000), 0 to 16.]

Negative linear relation

• If the line around which the points tend to cluster runs from upper left to lower right, the relation is negative.

• It occurs when an increase in the value of one variable is associated with a decrease in the value of other. Example, higher interest rates associated with lower house sales

Example

Dependent variable (y)   Independent variable (x)
Sales (£000)             Advertising (£000)
14                       1
11                       3
9                        4
8                        6
6                        8
4                        9
3                        10
1                        12

Sketch a scatter diagram in which x and y are negatively related

Solution

[Figure: scatter diagram. Vertical axis: Sales (Pounds, 000), 0 to 16. Horizontal axis: Advertising (Pounds, 000), 0 to 14.]

No relationship

• If the points are scattered randomly throughout the graph, there is no correlation relationship between the two variables

96

The method of least –squares and example

The least squares method of linear regression analysis provides a technique for estimating the equation of a line of best fit in such a way that ∑d=0. The vertical distance between each point and the line is denoted by d which represents the error.

Writing the regression equation as ŷ = a + bx, a is the intercept and b is the slope of the line. In Econometrics, the regression equation incorporates the error term and becomes y = a + bx + d.

I will show later, in an Excel example and in tabular form, how to calculate the error term. It is calculated as the difference between the individual actual values and the predicted ones: d = y − ŷ.

Another equation to calculate b is as follows:

Example

Consider the relationship between money spent on Research and Development (R&D) and the firm's annual profits over 6 years. The dependent variable (y) is annual profits and the independent variable (x) is expenditures on Research and Development.

Year    Annual profits (£000) (y)    Expenditures for R&D (£000) (x)
2003    31                           5
2002    40                           11
2001    30                           4
2000    34                           5
1999    25                           3
1998    20                           2

Calculate a and b

Year (n=6)    Annual profits (£000) (y)    Expenditures for R&D (£000) (x)    xy            x²
              Dependent variable           Independent variable
2003          31                           5                                  155           25
2002          40                           11                                 440           121
2001          30                           4                                  120           16
2000          34                           5                                  170           25
1999          25                           3                                  75            9
1998          20                           2                                  40            4
              ∑y = 180                     ∑x = 30                            ∑xy = 1,000   ∑x² = 200

Let’s try the following equation to calculate b.

A, which is the intercept of the regression equation.
B, which is the coefficient of the independent variable.
r, which is the correlation coefficient.

By following the above steps you should get the following numbers for the attached equation:

You get the same result as the equation that we have covered in the class, which is as follows:

a = 30 - (2 x 5) = 20
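The calculation can be reproduced from the column totals of the table. This is a minimal sketch, assuming the standard textbook formulas b = (∑xy − n x̄ ȳ) / (∑x² − n x̄²) and a = ȳ − b x̄; the formula for b is an assumption on my part, since the original equation is not visible in this text.

```python
# Column totals taken from the R&D example table
n = 6
sum_x = 30      # ∑x
sum_y = 180     # ∑y
sum_xy = 1000   # ∑xy
sum_x2 = 200    # ∑x²

mean_x = sum_x / n   # x̄ = 5
mean_y = sum_y / n   # ȳ = 30

# Slope: assumed standard least squares formula
b = (sum_xy - n * mean_x * mean_y) / (sum_x2 - n * mean_x ** 2)

# Intercept: a = ȳ − b x̄, as in the line above (30 − 2 x 5)
a = mean_y - b * mean_x

print(f"b = {b}, a = {a}")  # prints "b = 2.0, a = 20.0"
```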

The regression equation may now be written as:

ŷ = 20 + 2x

Plot the actual values on the scatter diagram

Then plot the regression equation on the same scatter diagram and check the accuracy of the estimating equation. The individual positive and negative errors must sum to zero.

y     ŷ = 20 + 2x             Error (d) = y − ŷ
31    [20 + (2 x 5)] = 30      1
40    [20 + (2 x 11)] = 42    -2
30    [20 + (2 x 4)] = 28      2
34    [20 + (2 x 5)] = 30      4
25    [20 + (2 x 3)] = 26     -1
20    [20 + (2 x 2)] = 24     -4

∑d = 0
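The table of errors can be checked with a few lines of code. This is a minimal sketch that takes a = 20 and b = 2 as given and recomputes the predicted values and the errors for the six observations.

```python
# Data from the R&D example (both variables in £000)
x = [5, 11, 4, 5, 3, 2]          # expenditures for R&D
y = [31, 40, 30, 34, 25, 20]     # annual profits

a, b = 20, 2                     # intercept and slope of the fitted line

# Predicted values: ŷ = a + bx
predicted = [a + b * xi for xi in x]

# Errors: d = y − ŷ
errors = [yi - pi for yi, pi in zip(y, predicted)]

print("Predicted:", predicted)          # [30, 42, 28, 30, 26, 24]
print("Errors:   ", errors)             # [1, -2, 2, 4, -1, -4]
print("Sum of errors:", sum(errors))    # 0
```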

It is now possible to predict what the annual profits will be from the amount budgeted for R&D. If the firm spends £8,000 on R&D in 2004, it can expect to earn:

ŷ = 20 + 2x = 20 + (2 x 8) = 20 + 16 = 36 thousands of Pounds

So the expected annual profits are £36,000.

This is the output that you will get in Excel. I will explain the calculation of the different numbers through mathematical formulas.

Based on the above calculated formulas, you can see from the table that the intercept is 20 and the b coefficient related to the x variable is 2. These numbers are at the bottom of the table. I will show how the regression statistics in terms of multiple R and R square were calculated from the ANOVA table. It is very important to understand the ANOVA table and the mathematical formulas that we use to calculate the different numbers. You will get similar tables in Econometrics. We deal with simple and multiple regression.

SUMMARY OUTPUT

Regression Statistics
Multiple R           0.909091
R Square             0.826446
Adjusted R Square    0.783058
Standard Error       3.24037
Observations         6

ANOVA

                                                      df    SS         MS      F           Significance F
Regression (Explained variation)                      1     RSS 200    200     19.04762    0.012021
Residual (It is the error or unexplained variation)   4     SSE 42     10.5
Total                                                 5     SST 242

              Coefficients   Standard Error   t Stat     P-value    Lower 95%   Upper 95%   Lower 95.0%   Upper 95.0%
Intercept     20             2.645751         7.559289   0.001641   12.6542     27.3458     12.6542       27.3458
X variable    2              0.458258         4.364358   0.012021   0.72767     3.27233     0.72767       3.27233
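Most of the numbers in the summary output can be reproduced by hand. The sketch below assumes the usual simple regression formulas (R² = RSS / SST, F = MS regression / MS residual, standard error = square root of MS residual, and the standard textbook expressions for the standard errors of a and b). It keeps the notation of the ANOVA table above, where RSS is the explained variation and SSE is the unexplained variation. The P-values and confidence limits need the t and F distributions and are not computed here.

```python
from math import sqrt

x = [5, 11, 4, 5, 3, 2]          # expenditures for R&D (£000)
y = [31, 40, 30, 34, 25, 20]     # annual profits (£000)
a, b = 20.0, 2.0                 # fitted intercept and slope
n = len(y)                       # number of observations
k = 1                            # number of independent variables

mean_x = sum(x) / n
mean_y = sum(y) / n
predicted = [a + b * xi for xi in x]

# Sums of squares (same labels as in the ANOVA table)
sst = sum((yi - mean_y) ** 2 for yi in y)                 # total variation
sse = sum((yi - pi) ** 2 for yi, pi in zip(y, predicted)) # unexplained
rss = sst - sse                                           # explained

# Regression statistics
r_square = rss / sst
multiple_r = sqrt(r_square)
adjusted_r_square = 1 - (1 - r_square) * (n - 1) / (n - k - 1)

# Mean squares, F statistic and standard error of the regression
ms_regression = rss / k
ms_residual = sse / (n - k - 1)
f_stat = ms_regression / ms_residual
standard_error = sqrt(ms_residual)

# Standard errors and t statistics of the coefficients
sxx = sum((xi - mean_x) ** 2 for xi in x)
se_b = standard_error / sqrt(sxx)
se_a = standard_error * sqrt(1 / n + mean_x ** 2 / sxx)
t_b = b / se_b
t_a = a / se_a

print(f"SST = {sst}, RSS = {rss}, SSE = {sse}")
print(f"Multiple R = {multiple_r:.6f}, R Square = {r_square:.6f}")
print(f"Adjusted R Square = {adjusted_r_square:.6f}")
print(f"Standard Error = {standard_error:.5f}, F = {f_stat:.5f}")
print(f"se(a) = {se_a:.6f}, t(a) = {t_a:.6f}")
print(f"se(b) = {se_b:.6f}, t(b) = {t_b:.6f}")
```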

Calculation of the regression

1 Go to Tools
2 Select Data Analysis
3 Select Regression
4 In the field Input y range highlight the y values
5 In the field Input x range highlight the x values

In output options

1 Make sure that the cursor is in the output range field
2 Highlight the cell where you want the data to appear
3 Make sure that the information in the field input ranges has not changed

Summary Output will appear on the screen

Multiple R = the correlation coefficient for the 2 variables
R square is the square of the correlation coefficient

Consider the equation

Y = a + bx

Look at the coefficients

The Intercept = a
The X variable 1 = the slope

The standard error gives an idea of the confidence in the coefficient

When you write the equation, write the standard error underneath each coefficient

The individual positive and negative errors must sum to zero.

y     ŷ = 20 + 2x             Error (d) = y − ŷ
31    [20 + (2 x 5)] = 30      1
40    [20 + (2 x 11)] = 42    -2
30    [20 + (2 x 4)] = 28      2
34    [20 + (2 x 5)] = 30      4
25    [20 + (2 x 3)] = 26     -1
20    [20 + (2 x 2)] = 24     -4

∑d = 0

In Excel, you will get the following residual output, which is exactly the same as the above table. Please pay particular attention to the residuals, or error term, as they are used in Econometrics to test for violations of the assumptions of the original model.

RESIDUAL OUTPUT

Observation    Predicted Y    Residuals
1              30              1
2              42             -2
3              28              2
4              30              4
5              26             -1
6              24             -4

If you add the actual y values to the above residual output table and subtract the predicted values from them, you will get the residuals as follows:

RESIDUAL OUTPUT

Observation    Actual Y values    Predicted Y    Residuals
1              31                 30              1
2              40                 42             -2
3              30                 28              2
4              34                 30              4
5              25                 26             -1
6              20                 24             -4

The following scatter graph shows the residual plot. You will find a similar plot when we use EViews, the software that we use to run econometric and statistical tests.

Residual Plot (vertical axis: Residuals)

You can see the deviations between the actual and predicted values of Y for the six observations.

Exercise

Find the least squares regression equation that describes the relationship between the age of a truck and its annual repair expense.

Truck number    Age of truck in years (x)    Repair expense during last year in hundreds of £ (y)
101             5                            7
102             3                            7
103             3                            6
104             1                            4

Writing the regression equation as

a =

Complete the calculations
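Once you have completed the calculations by hand, one way to check your answer is the short sketch below. It assumes the same standard formulas as in the R&D example, b = (∑xy − n x̄ ȳ) / (∑x² − n x̄²) and a = ȳ − b x̄. The function name `least_squares` is hypothetical and used only for illustration.

```python
def least_squares(x, y):
    """Return (a, b) for the line ŷ = a + bx fitted by least squares."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sum_xy = sum(xi * yi for xi, yi in zip(x, y))
    sum_x2 = sum(xi ** 2 for xi in x)
    b = (sum_xy - n * mean_x * mean_y) / (sum_x2 - n * mean_x ** 2)
    a = mean_y - b * mean_x
    return a, b


# Truck data from the exercise
age = [5, 3, 3, 1]       # age of truck in years (x)
repair = [7, 7, 6, 4]    # repair expense in hundreds of £ (y)

a, b = least_squares(age, repair)
print(f"a = {a}, b = {b}")

# The errors around the fitted line should sum to zero
errors = [yi - (a + b * xi) for xi, yi in zip(age, repair)]
print("Sum of errors:", sum(errors))
```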

Multiple regression analysis

Multiple regression is an extension of the simple regression model. The difference is that the model has more than one independent variable.

A multiple regression equation has the following format:

ŷ = a + b1x1 + b2x2 + ... + bkxk

For the sake of simplicity, we will use a model with two independent variables. Therefore, the equation will be as follows:

ŷ = a + b1x1 + b2x2

The required equations are as follows:

Standard errors of the coefficients b1 and b2 that will be used in t-tests are given by the following equations:

Please consider other formulas after covering the correlation section. Thus, other convenient formulas to consider after entering the numbers of the dependent and independent variables in Excel are as follows:

After entering the numbers of the dependent and independent variables in Excel, calculate the correlation coefficients and the sample standard deviations by using the statistical functions in Excel and the Data Analysis pack from Tools. After clicking on Data Analysis, select Correlation. In the input range, select the numbers of the dependent and independent variables, including the labels. Then select the cell where your output will be displayed. From the statistical functions, select STDEV. It is the sample standard deviation.

Input the values of each variable separately. The formula will be, for example:

=STDEV(Cell A: Cell E)
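For readers who want to check the Excel result outside the spreadsheet, here is a minimal sketch. It assumes that Python's `statistics.stdev` is an acceptable stand-in for Excel's STDEV, since both compute the sample standard deviation (dividing by n − 1). The data are the annual profits and R&D expenditures from the simple regression example.

```python
from statistics import stdev

profits = [31, 40, 30, 34, 25, 20]   # annual profits (£000)
rd = [5, 11, 4, 5, 3, 2]             # expenditures for R&D (£000)

# Sample standard deviation: sqrt( ∑(value − mean)² / (n − 1) )
sd_profits = stdev(profits)
sd_rd = stdev(rd)

print(f"Sample standard deviation of profits: {sd_profits:.4f}")
print(f"Sample standard deviation of R&D:     {sd_rd:.4f}")
```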

Good luck! Thanks for your patience and participation.

A numerical example will be very helpful to understand the calculations that are involved in a multiple regression analysis with two independent variables. Please consider the example that we have covered in the simple regression section, adding an additional independent variable. The dependent variable is annual profits and the independent variables are expenditures for R&D and marketing expenses.

Year    Annual profits (£000) (Y)    Expenditures for R&D (£000) (X1)    Marketing expenses (£000) (X2)
2003    31                           5                                   4
2002    40                           11                                  10
2001    30                           4                                   8
2000    34                           5                                   7
1999    25                           3                                   5
1998    20                           2                                   3

A convenient way to solve the multiple regression problem is to construct the following table. Please sum each variable. Then, find the mean of the dependent and independent variables. Then, subtract the mean from each value.

Year (n=6)    Annual profits (£000) (y)    Expenditures for R&D (£000) (x1)    Marketing expenses (£000) (x2)    y − ȳ    x1 − x̄1    x2 − x̄2
2003          31                           5                                   4                                   1        0         -2.166667
2002          40                           11                                  10                                 10        6          3.833333
2001          30                           4                                   8                                   0       -1          1.833333
2000          34                           5                                   7                                   4        0          0.833333
1999          25                           3                                   5                                  -5       -2         -1.166667
1998          20                           2                                   3                                 -10       -3         -3.166667
              ∑y = 180                     ∑x1 = 30                            ∑x2 = 37

In the products below, y, x1 and x2 denote the deviations from the means.

Year    x1y           x2y          x1x2          x1²          x2²
2003    0             -2.16667     0             0            4.69
2002    60            38.33333     23            36           14.69
2001    0             0            -1.83333      1            3.36
2000    0             3.333333     0             0            0.69
1999    10            5.833333     2.333333      4            1.36
1998    30            31.66667     9.5           9            10.03
        ∑x1y = 100    ∑x2y = 77    ∑x1x2 = 33    ∑x1² = 50    ∑x2² = 34.83

Please calculate a, b1 and b2 . I have included the required equations.

First of all, we calculate b1 and b2.

Then, we substitute the numerical values of b1 and b2 in the following equation to find the intercept.
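The two steps can be sketched in code as follows. This is a minimal sketch under an assumption: because the equations themselves are not visible in this text, it uses the standard deviation-form formulas for two independent variables, b1 = (∑x1y ∑x2² − ∑x2y ∑x1x2) / (∑x1² ∑x2² − (∑x1x2)²), b2 = (∑x2y ∑x1² − ∑x1y ∑x1x2) / (∑x1² ∑x2² − (∑x1x2)²) and a = ȳ − b1 x̄1 − b2 x̄2, where the sums are taken over deviations from the means. The helper names are hypothetical.

```python
y = [31, 40, 30, 34, 25, 20]    # annual profits (£000)
x1 = [5, 11, 4, 5, 3, 2]        # expenditures for R&D (£000)
x2 = [4, 10, 8, 7, 5, 3]        # marketing expenses (£000)


def mean(values):
    return sum(values) / len(values)


def deviations(values):
    """Subtract the mean from each value."""
    m = mean(values)
    return [v - m for v in values]


def sum_products(u, v):
    """Sum of the element-wise products of two lists."""
    return sum(ui * vi for ui, vi in zip(u, v))


dy, d1, d2 = deviations(y), deviations(x1), deviations(x2)

# Column totals of the table of products
s1y = sum_products(d1, dy)   # ∑x1y
s2y = sum_products(d2, dy)   # ∑x2y
s12 = sum_products(d1, d2)   # ∑x1x2
s11 = sum_products(d1, d1)   # ∑x1²
s22 = sum_products(d2, d2)   # ∑x2²

# Step 1: slope coefficients (assumed standard formulas)
denominator = s11 * s22 - s12 ** 2
b1 = (s1y * s22 - s2y * s12) / denominator
b2 = (s2y * s11 - s1y * s12) / denominator

# Step 2: intercept from the means
a = mean(y) - b1 * mean(x1) - b2 * mean(x2)

print(f"b1 = {b1:.4f}, b2 = {b2:.4f}, a = {a:.4f}")

# Check: the errors of the fitted equation should sum to zero
errors = [yi - (a + b1 * u + b2 * v) for yi, u, v in zip(y, x1, x2)]
print(f"Sum of errors = {sum(errors):.10f}")
```

As in simple regression, the errors of the fitted equation sum to zero, which gives a quick check on the arithmetic.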
