
Intel® Stratix® 10 Avalon-ST Interface for PCIe Solutions User Guide

UG-20032, 2017.05.08

Last updated for Intel® Quartus® Prime Design Suite: Quartus Prime Pro v17.1 Stratix 10 Editions


Contents

1 Introduction
1.1 Avalon-ST Interface for PCIe Introduction
1.2 Features
1.3 Release Information
1.4 Device Family Support
1.5 Recommended Speed Grades
1.6 Performance and Resource Utilization
1.7 Transceiver Tiles
1.8 PCI Express IP Core Package Layout
1.9 Channel Availability

2 Quick Start Guide
2.1 Design Components
2.2 Directory Structure
2.3 Generating the Design
2.4 Simulating the Design
2.5 Compiling the Design

3 Interface Overview

4 Parameters
4.1 Stratix 10 Avalon-ST Settings
4.2 Base Address Registers
4.3 Device Identification Registers
4.4 PCI Express and PCI Capabilities Parameters
4.4.1 Device Capabilities
4.4.2 Link Capabilities
4.4.3 MSI and MSI-X Capabilities
4.4.4 Slot Capabilities
4.4.5 Power Management
4.4.6 Vendor Specific Extended Capability (VSEC)
4.5 Configuration, Debug and Extension Options
4.6 PHY Characteristics
4.7 Stratix 10 Example Designs

5 Designing with the IP Core
5.1 Generation
5.2 Simulation
5.2.1 Selecting Serial or PIPE Simulation
5.2.2 Reducing Counter Values for Simulation
5.3 Files Generated for Intel FPGA IP Cores and Qsys Pro Systems
5.4 Integration and Implementation
5.4.1 Clock Requirements
5.4.2 Reset Requirements
5.5 Required Supporting IP Cores
5.5.1 Hard Reset Controller
5.5.2 TX PLL
5.6 Channel Layout and PLL Usage


5.7 Compiling the Full Design and Programming the FPGA

6 Block Descriptions
6.1 Interfaces
6.1.1 TLP Header and Data Alignment for the Avalon-ST RX and TX Interfaces
6.1.2 Avalon-ST RX Interface
6.1.3 Avalon-ST TX Interface
6.1.4 TX Credit Interface
6.1.5 Interpreting the TX Credit Interface
6.1.6 Clocks
6.1.7 Update Flow Control Timer and Credit Release
6.1.8 Resets
6.1.9 Interrupts
6.1.10 Transaction Layer Configuration Space Interface
6.1.11 Configuration Extension Bus Interface
6.1.12 Hard IP Status Interface
6.1.13 Serial Data Interface
6.1.14 PIPE Interface
6.1.15 Hard IP Reconfiguration
6.1.16 Power Management Interface
6.1.17 Test Interface
6.1.18 Message Handling
6.2 Errors reported by the Application Layer
6.2.1 Error Handling
6.3 Power Management
6.3.1 Endpoint D3 Entry
6.3.2 End Point D3 Exit
6.3.3 Exit from D3 hot
6.3.4 Exit from D3 cold
6.3.5 Active State Power Management
6.4 Transaction Ordering
6.4.1 TX TLP Ordering
6.4.2 RX TLP Ordering
6.5 RX Buffer
6.5.1 Retry Buffer
6.5.2 Configuration Retry Status

7 Interrupts
7.1 Interrupts for Endpoints
7.1.1 MSI Interrupts
7.1.2 MSI-X
7.1.3 Implementing MSI-X Interrupts
7.1.4 Legacy Interrupts

8 Registers
8.1 Configuration Space Registers
8.1.1 Type 0 Configuration Space Registers
8.1.2 PCI Express Capability Structures
8.1.3 JTAG Silicon ID
8.1.4 Intel Defined VSEC Capability Header
8.1.5 Intel Defined Vendor Specific Header
8.1.6 Intel Marker


8.1.7 User Device and Board Type ID
8.1.8 General Purpose Control and Status Register
8.1.9 Uncorrectable Internal Error Status Register
8.1.10 Uncorrectable Internal Error Mask Register
8.1.11 Correctable Internal Error Status Register
8.1.12 Correctable Internal Error Mask Register
8.2 Avalon-MM DMA Bridge Registers
8.2.1 PCI Express Avalon-MM Bridge Register Address Map

9 Testbench and Design Example
9.1 Endpoint Testbench
9.2 Chaining DMA Design Examples
9.2.1 BAR/Address Map
9.2.2 Chaining DMA Control and Status Registers
9.2.3 Chaining DMA Descriptor Tables
9.3 Test Driver Module
9.4 DMA Write Cycles
9.5 DMA Read Cycles
9.6 Root Port BFM
9.6.1 BFM Memory Map
9.6.2 Configuration Space Bus and Device Numbering
9.6.3 Configuration of Root Port and Endpoint
9.6.4 Issuing Read and Write Transactions to the Application Layer
9.7 BFM Procedures and Functions
9.7.1 ebfm_barwr Procedure
9.7.2 ebfm_barwr_imm Procedure
9.7.3 ebfm_barrd_wait Procedure
9.7.4 ebfm_barrd_nowt Procedure
9.7.5 ebfm_cfgwr_imm_wait Procedure
9.7.6 ebfm_cfgwr_imm_nowt Procedure
9.7.7 ebfm_cfgrd_wait Procedure
9.7.8 ebfm_cfgrd_nowt Procedure
9.7.9 BFM Configuration Procedures
9.7.10 BFM Shared Memory Access Procedures
9.7.11 BFM Log and Message Procedures
9.7.12 Verilog HDL Formatting Functions
9.7.13 Procedures and Functions Specific to the Chaining DMA Design Example
9.8 Setting Up Simulation
9.8.1 Changing Between Serial and PIPE Simulation
9.8.2 Using the PIPE Interface for Gen1 and Gen2 Variants
9.8.3 Viewing the Important PIPE Interface Signals
9.8.4 Disabling the Scrambler for Gen1 and Gen2 Simulations
9.8.5 Disabling 8B/10B Encoding and Decoding for Gen1 and Gen2 Simulations

A Troubleshooting and Observing the Link
A.1 Simulation Fails To Progress Beyond Polling.Active State
A.2 Hardware Bring-Up Issues
A.3 Link Training
A.4 Link Hangs in L0 State
A.5 Use Third-Party PCIe Analyzer
A.6 BIOS Enumeration Issues


B PCI Express Core Architecture
B.1 Transaction Layer
B.2 Data Link Layer
B.3 Physical Layer

C TX Credit Adjustment Sample Code

D Stratix 10 Avalon-ST Interface for PCIe Solutions User Guide Archive

E Document Revision History


1 Introduction

1.1 Avalon-ST Interface for PCIe Introduction

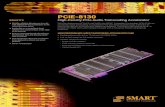

Intel® Stratix® 10 FPGAs include a configurable, hardened protocol stack for PCI Express® that is compliant with PCI Express Base Specification 3.0. The Stratix 10 Hard IP for PCI Express IP Core supports Gen1, Gen2, and Gen3 data rates and x1, x2, x4, x8, or x16 configurations.

Figure 1. Stratix 10 PCIe Variant with Avalon-ST Interface

In the following figure, EMIB abbreviates Embedded Multi-die Interconnect Bridge.

[Figure: the PCIe variant with Avalon-ST interface. In the FPGA fabric, the Stratix 10 Avalon-ST Hard IP for PCIe IP Core (altpcie_s10_hip_pipen1b.v) exposes Avalon-ST TX, Avalon-ST RX, header and data credits, MSI/MSI-X, clocks, and resets. It connects through the EMIB to the Gen3x16 PCI Express IP Core in the H-Tile or L-Tile, which contains the Transaction Layer, Data Link Layer, PCS and PMA, channel mapping, and PLL, and drives the PCIe link.]

Table 1. PCI Express Data Throughput

The following table shows the theoretical link bandwidth of a PCI Express link for Gen1, Gen2, and Gen3 for 1, 2, 4, 8, and 16 lanes. This table provides bandwidths for a single transmit (TX) or receive (RX) channel. The numbers double for duplex operation. The protocol specifies 2.5 giga-transfers per second (GT/s) for Gen1, 5.0 GT/s for Gen2, and 8.0 GT/s for Gen3. Gen1 and Gen2 use 8B/10B encoding, which introduces a 20% overhead. Gen3 uses 128b/130b encoding, which introduces about 1.6% overhead.

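| Link Width (bandwidth in Gbps) | ×1 | ×2 | ×4 | ×8 | ×16 |
|---|---|---|---|---|---|
| PCI Express Gen1 (2.5 GT/s) | 2 | 4 | 8 | 16 | 32 |
| PCI Express Gen2 (5.0 GT/s) | 4 | 8 | 16 | 32 | 64 |
| PCI Express Gen3 (8.0 GT/s) | 7.87 | 15.75 | 31.50 | 63 | 126 |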
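The values in Table 1 follow from a simple product: transfer rate per lane, times line-encoding efficiency (8/10 for 8B/10B, 128/130 for 128b/130b), times lane count. The Python sketch below reproduces them. The names `GENERATIONS` and `link_bandwidth_gbps` are illustrative only, and the calculation ignores TLP, DLLP, and other protocol overhead, so usable throughput on a real link is lower.

```python
# Theoretical per-direction PCIe link bandwidth, ignoring packet overhead.
# Each entry is (transfer rate in GT/s, encoding efficiency = payload bits / line bits).
GENERATIONS = {
    "Gen1": (2.5, 8 / 10),     # 8B/10B encoding
    "Gen2": (5.0, 8 / 10),     # 8B/10B encoding
    "Gen3": (8.0, 128 / 130),  # 128b/130b encoding
}


def link_bandwidth_gbps(gen: str, lanes: int) -> float:
    """Return the theoretical bandwidth of one direction (TX or RX) in Gbps."""
    rate_gts, efficiency = GENERATIONS[gen]
    return rate_gts * efficiency * lanes


if __name__ == "__main__":
    # Print one row per generation, one column per link width.
    for gen in GENERATIONS:
        row = [link_bandwidth_gbps(gen, n) for n in (1, 2, 4, 8, 16)]
        print(gen, " ".join(f"{bw:.2f}" for bw in row))
```

For example, Gen3 ×16 works out to 8 × 128/130 × 16, or about 126.03 Gbps, which the table lists as 126.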

Related Links

• Introduction to Intel FPGA IP Cores: Provides general information about all Intel FPGA IP cores, including parameterizing, generating, upgrading, and simulating IP cores.


• Generating a Combined Simulator Setup Script: Create simulation scripts that do not require manual updates for software or IP version upgrades.

• Project Management Best Practices: Guidelines for efficient management and portability of your project and IP files.

• Creating a System with Qsys: Provides information about the Qsys system integration tool that simplifies the tasks of defining and integrating customized IP cores.

1.2 Features

New features in the Quartus Prime Pro v17.1 Stratix 10 ES Editions Software:

• Support for the H-Tile transceiver for up to Gen3 x8 configurations. Refer to Transceiver Tiles for additional information.

• Design examples using the H-Tile, available in the Quartus® Prime Pro Edition installation directory.

• Support for a Gen3 x16 simulation model that you can use in an Avery testbench. The Avery testbench simulates all 16 lanes. For more information about the Avery testbench, refer to AN-811: Using the Avery BFM for PCI Express Gen3x16 Simulation on Intel Stratix 10 Devices. The Intel® simulation testbench supports Gen3 x16 variants by downtraining to Gen3 x8.

The Avalon-ST Stratix 10 Hard IP for PCI Express IP Core supports the following features:

• Complete protocol stack including the Transaction, Data Link, and Physical Layers implemented as hard IP.

• ×1, ×2, ×4, ×8, and ×16 configurations with Gen1, Gen2, or Gen3 lane rates for Native Endpoints.

• Avalon®-ST 256-bit interface to the Application Layer.

• Instantiation as a stand-alone IP core from the Quartus Prime Pro Edition IP Catalog or as part of a system design in Qsys.

• Dynamic design example generation.

• PHY interface for PCI Express (PIPE) or serial interface simulation using IEEE encrypted models.

• Testbench bus functional model (BFM) supporting x1, x2, x4, and x8 configurations. The x16 configuration downtrains to x8 in the Intel (internally created) testbench. Alternatively, the Avery testbench, which supports all configurations, is available for a licensing fee.

• Dedicated 69.5 kilobyte (KB) receive buffer.

• End-to-end cyclic redundancy check (ECRC).

Related Links

• Transceiver Tiles

• AN-811: Using the Avery BFM for PCI Express Gen3x16 Simulation on Intel Stratix 10 Devices


1.3 Release Information

Table 2. Hard IP for PCI Express Release Information

| Item | Description |
|---|---|
| Version | Quartus Prime Pro v17.1 Stratix 10 ES Editions Software |
| Release Date | May 2017 |
| Ordering Codes | No ordering code is required |

Related Links

Timing and Power Models: Reports the default device support levels in the current version of the Quartus Prime Pro Edition software.

1.4 Device Family Support

The following terms define device support levels for Intel FPGA IP cores:

• Advance support: the IP core is available for simulation and compilation for this device family. Timing models include initial engineering estimates of delays based on early post-layout information. The timing models are subject to change as silicon testing improves the correlation between the actual silicon and the timing models. You can use this IP core for system architecture and resource utilization studies, simulation, pinout, system latency assessments, basic timing assessments (pipeline budgeting), and I/O transfer strategy (data-path width, burst depth, I/O standards tradeoffs).

• Preliminary support: the IP core is verified with preliminary timing models for this device family. The IP core meets all functional requirements, but might still be undergoing timing analysis for the device family. It can be used in production designs with caution.

• Final support: the IP core is verified with final timing models for this device family. The IP core meets all functional and timing requirements for the device family and can be used in production designs.

Table 3. Device Family Support

| Device Family | Support Level |
|---|---|
| Stratix 10 | Advance support. The Quartus Prime Pro v17.1 Stratix 10 ES Editions Software supports simulation and compilation. Programmer Object File (.pof) support is available for up to Gen3 x8 with an *.ini file. |
| Other device families | No support. Refer to the Intel PCI Express Solutions web page on the Intel website for support information on other device families. |

Related Links

• Timing and Power Models: Reports the default device support levels in the current version of the Quartus Prime Pro Edition software.

• PCI Express Solutions Web Page


1.5 Recommended Speed Grades

Table 4. Stratix 10 Recommended Speed Grades for All Avalon-ST Widths and Frequencies

The recommended speed grades are for production parts.

| Lane Rate | Link Width | Interface Width | Application Clock Frequency (MHz) | Recommended Speed Grades |
|---|---|---|---|---|
| Gen1 | x1, x2, x4, x8, x16 | 256 bits | 125 | –1, –2, –3 |
| Gen2 | x1, x2, x4, x8 | 256 bits | 125 | –1, –2, –3 |
| Gen2 | x16 | 256 bits | 250 | –1, –2, –3 |
| Gen3 | x1, x2, x4 | 256 bits | 125 | –1, –2, –3 |
| Gen3 | x8 | 256 bits | 250 | –1, –2, –3 |
| Gen3 | x16 | 256 bits | 500 | –1, –2 |
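One way to read Table 4 (our interpretation, not a rule stated in this guide) is that the 256-bit Avalon-ST datapath, running at the listed application clock frequency, should move at least the theoretical per-direction link bandwidth from Table 1. The hypothetical Python helpers below check that for the widest link width in each row of Table 4.

```python
# Compare raw Avalon-ST datapath capacity with theoretical link bandwidth.
# Assumption: interface capacity = width (bits) x clock (MHz); link bandwidth
# uses the same rate x encoding-efficiency x lanes product as Table 1.
RATE_AND_EFFICIENCY = {"Gen1": (2.5, 8 / 10), "Gen2": (5.0, 8 / 10), "Gen3": (8.0, 128 / 130)}
INTERFACE_WIDTH_BITS = 256

# (lane rate, widest link width in the row, application clock in MHz) from Table 4.
TABLE4_ROWS = [
    ("Gen1", 16, 125),
    ("Gen2", 8, 125),
    ("Gen2", 16, 250),
    ("Gen3", 4, 125),
    ("Gen3", 8, 250),
    ("Gen3", 16, 500),
]


def interface_gbps(clock_mhz: float, width_bits: int = INTERFACE_WIDTH_BITS) -> float:
    """Bits per clock cycle times cycles per second, expressed in Gbps."""
    return width_bits * clock_mhz / 1000.0


def link_gbps(gen: str, lanes: int) -> float:
    """Theoretical per-direction link bandwidth in Gbps."""
    rate_gts, efficiency = RATE_AND_EFFICIENCY[gen]
    return rate_gts * efficiency * lanes


def headroom(gen: str, lanes: int, clock_mhz: float) -> float:
    """Zero or positive when the interface can keep up with the link."""
    return interface_gbps(clock_mhz) - link_gbps(gen, lanes)


if __name__ == "__main__":
    for gen, lanes, mhz in TABLE4_ROWS:
        print(f"{gen} x{lanes} @ {mhz} MHz: interface {interface_gbps(mhz):.1f} Gbps, "
              f"link {link_gbps(gen, lanes):.2f} Gbps, "
              f"headroom {headroom(gen, lanes, mhz):.2f} Gbps")
```

Every row comes out with zero or positive headroom. Running Gen3 ×16 at 250 MHz instead of 500 MHz would leave the interface about 62 Gbps short, which is consistent with the table listing 500 MHz for that configuration.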

1.6 Performance and Resource Utilization

Because the PCIe protocol stack is implemented in hardened logic, it uses no core device resources (no ALMs and no embedded memory).

Related Links

Running the Fitter: For information on Fitter constraints.


1.7 Transceiver Tiles

Stratix 10 introduces several transceiver tile variants to support a wide variety of protocols.

Figure 2. Stratix 10 Transceiver Tile Block Diagram

[Figure: an L-Tile or H-Tile (24 channels) contains four XCVR banks of six channels each, transceiver PLLs, reference clock and clock networks, and a PCIe Gen3 Hard IP block. An E-Tile (24 channels) contains 24 transceiver channels, transceiver PLLs, reference clock and clock networks, and 4 x 100GE (100G Ethernet Hard IP). Each tile connects to the core fabric through an EMIB on the package substrate.]

Table 5. Transceiver Tiles Channel Types

| Tile | Channel Type | Channel Capability: Chip-to-Chip | Channel Capability: Backplane | Channel Hard IP Access |
|---|---|---|---|---|
| L-Tile | GX | 17.4 Gbps (NRZ) | 12.5 Gbps (NRZ) | PCIe Gen3x16 |
| H-Tile | GX | 17.4 Gbps (NRZ) | 17.4 Gbps (NRZ) | PCIe Gen3x16 |
| H-Tile | GXT | 28.3 Gbps (NRZ) | 28.3 Gbps (NRZ) | N/A |
| E-Tile | GXE | 30 Gbps (NRZ), 56 Gbps (PAM-4) | 30 Gbps (NRZ), 56 Gbps (PAM-4) | 100G Ethernet |

L-Tile and H-Tile

Both L- and H-Tiles contain four transceiver banks with a total of 24 duplex channels, eight ATX PLLs, eight fPLLs, eight CMU PLLs, a PCIe Hard IP block, and associated input reference and transmitter clock networks. L- and H-Tiles also include a 10GBASE-KR/40GBASE-KR4 FEC block in each channel.

L-Tiles have transceiver channels that support up to 17.4 Gbps chip-to-chip or 12.5 Gbps backplane applications. H-Tiles have transceiver channels that support 28 Gbps applications. H-Tile channels support fast lock-time for Gigabit-capable passive optical networks (GPON).

Stratix 10 GX/SX devices incorporate L-Tiles or H-Tiles. Package migration is available with Stratix 10 GX/SX from L-Tile to L-Tile variants. In addition, device migration between an L-Tile equipped Stratix 10 GX device and an Arria 10 GX device is supported.


E-Tile

E-Tiles are designed to support 56 Gbps with PAM-4 signaling or up to 30 Gbps backplane with NRZ signaling. E-Tiles do not include any PCIe Hard IP blocks.

1.8 PCI Express IP Core Package Layout

Stratix 10 devices have high-speed transceivers that are implemented on separate transceiver tiles. The transceiver tiles are located on the left and right sides of the device.

Each 24-channel L-Tile or H-Tile includes one Gen3 x16 PCIe IP Core implemented in hardened logic. The following figures show the layout of PCIe IP cores in Stratix 10 devices. Both L- and H-tiles are orange. E-tiles are green.

Figure 3. Stratix 10 GX/SX Devices with 4 PCIe Hard IP Cores and 96 Transceiver Channels

[Figure: four transceiver tiles (24 channels each), each containing a PCIe Hard IP (HIP), connect to the core fabric through EMIBs on the package substrate. Devices: GX/SX 1650 UF50 (F2397B), GX/SX 2100 UF50 (F2397B), GX/SX 2500 UF50 (F2397B), GX/SX 2800 UF50 (F2397B).]

Figure 4. Stratix 10 GX/SX Devices with 2 PCIe Hard IP Cores and 48 Transceiver Channels

[Figure: two transceiver tiles (24 channels each), each containing a PCIe HIP, connect to the core fabric through EMIBs on the package substrate. Devices: GX/SX 850 NF43 (F1760A), GX/SX 1100 NF43 (F1760A), GX/SX 1650 NF43 (F1760A), GX/SX 2100 NF43 (F1760A), GX/SX 2500 NF43 (F1760A), GX/SX 2800 NF43 (F1760A), GX/SX 850 NF48 (F2112A), GX/SX 1100 NF48 (F2112A).]


Figure 5. Stratix 10 GX/SX Devices with 2 PCIe Hard IP Cores and 48 Transceiver Channels - Transceivers on Both Sides

[Figure: two transceiver tiles (24 channels each), one on each side of the core fabric, each containing a PCIe HIP and connected through an EMIB on the package substrate. Device: GX/SX 650 NF43 (F1760C).]

Figure 6. Stratix 10 Migration Device with 2 Transceiver Tiles and 48 Transceiver Channels

[Figure: two transceiver tiles (24 channels each), each containing a PCIe HIP, connect to the core fabric through EMIBs on the package substrate. Device: GX 2800 NF45 (F1932).]

Note: The Stratix 10 migration device contains two L-Tiles, which match the Arria 10 migration device.


Figure 7. Stratix 10 GX/SX Devices with 1 PCIe Hard IP Core and 24 Transceiver Channels

[Figure: one transceiver tile (24 channels) containing a PCIe HIP connects to the core fabric through an EMIB on the package substrate. Devices: GX/SX 400 HF35 (F1152), GX/SX 650 HF35 (F1152), GX/SX 2500 HF55 (F2912A), GX/SX 2800 HF55 (F2912A), GX/SX 4500 HF55 (F2912A), GX/SX 5500 HF55 (F2912A).]

Figure 8. Stratix 10 TX Devices with 1 PCIe Hard IP Core and 144 Transceiver Channels

[Figure: six transceiver tiles (24 channels each) connect to the core fabric through six EMIBs on the package substrate; only one tile contains a PCIe HIP. Devices: TX 1650 YF55 (F2912B), TX 2100 YF55 (F2912B).]

Note: 1. Stratix 10 TX Devices use a combination of E-Tiles and H-Tiles.

2. Five E-Tiles support 56G PAM-4 and 30G NRZ backplanes.

3. One H-Tile supports up to 28.3G backplanes.


Figure 9. Stratix 10 TX Devices with 1 PCIe Hard IP Core and 96 Transceiver Channels

[Figure: four transceiver tiles (24 channels each) connect to the core fabric through four EMIBs on the package substrate; only one tile contains a PCIe HIP. Devices: TX 1650 UF50 (F2397C), TX 2100 UF50 (F2397C), TX 2500 UF50 (F2397C), TX 2800 UF50 (F2397C).]

Note: 1. Stratix 10 TX Devices use a combination of E-Tiles and H-Tiles.

2. Three E-Tiles support 56G PAM-4 and 30G NRZ backplanes.

3. One H-Tile supports up to 28.3G backplanes.

Figure 10. Stratix 10 TX Devices with 2 PCIe Hard IP Cores and 72 Transceiver Channels

[Figure: three transceiver tiles (24 channels each) connect to the core fabric through three EMIBs on the package substrate; two tiles contain a PCIe HIP. Devices: TX 1650 SF48 (F2212B), TX 2100 SF48 (F2212B), TX 2500 SF48 (F2212B), TX 2800 SF48 (F2212B).]

Note: 1. Stratix 10 TX Devices use a combination of E-Tiles and H-Tiles.

2. One E-Tile supports 56G PAM-4 and 30G NRZ backplanes.

3. Two H-Tiles support up to 28.3G backplanes.
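The device layouts above follow one counting rule: each tile provides 24 transceiver channels, and only L-Tiles and H-Tiles contribute a PCIe Hard IP core, because E-Tiles contain none. The Python sketch below applies that rule to the tile mixes given in the notes for the Stratix 10 TX devices. The function name and the single-letter tile codes ("L", "H", "E") are hypothetical.

```python
# Count transceiver channels and PCIe Hard IP cores for a set of tiles.
# Assumption (from the text above): every tile has 24 channels, and only
# L-Tiles and H-Tiles contain a PCIe Hard IP block; E-Tiles contain none.
CHANNELS_PER_TILE = 24
TILES_WITH_PCIE_HIP = {"L", "H"}
KNOWN_TILES = {"L", "H", "E"}


def summarize_tiles(tiles: list[str]) -> tuple[int, int]:
    """Return (transceiver_channels, pcie_hard_ip_cores) for the given tile types."""
    unknown = set(tiles) - KNOWN_TILES
    if unknown:
        raise ValueError(f"unknown tile type(s): {sorted(unknown)}")
    channels = CHANNELS_PER_TILE * len(tiles)
    hip_cores = sum(1 for tile in tiles if tile in TILES_WITH_PCIE_HIP)
    return channels, hip_cores


if __name__ == "__main__":
    # Tile mixes taken from the notes under the Stratix 10 TX device figures.
    layouts = {
        "Figure 8": ["E"] * 5 + ["H"],
        "Figure 9": ["E"] * 3 + ["H"],
        "Figure 10": ["E"] + ["H"] * 2,
    }
    for name, tiles in layouts.items():
        channels, hips = summarize_tiles(tiles)
        print(f"{name}: {channels} channels, {hips} PCIe Hard IP core(s)")
```

For example, five E-Tiles plus one H-Tile give 144 channels but only one PCIe Hard IP core, which matches the Figure 8 caption.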

Related Links

Stratix 10 GX/SX Device Overview: For more information about Stratix 10 devices.


1.9 Channel Availability

PCIe Hard IP Channel Restrictions

Each transceiver tile contains a PCIe Hard IP block. The following table and figure show the possible PCIe Hard IP channel configurations, the number of unusable channels, and the number of channels available for other protocols. For example, a PCIe x4 variant uses 4 channels and 4 additional channels are unusable.

Table 6. Unusable Channels

PCIe Hard IP Configuration | Number of Unusable Channels | Number of Channels Available for Other Protocols
PCIe x1 | 7 | 16
PCIe x2 | 6 | 16
PCIe x4 | 4 | 16
PCIe x8 | 0 | 16
PCIe x16 | 0 | 8
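The arithmetic behind Table 6 can be checked with a short Python sketch. It assumes 24 channels per transceiver tile and reads the table as follows: x1 through x8 variants reserve an 8-channel group (lanes used plus unusable channels), while x16 reserves 16 channels; whatever remains is available to other protocols. Because every row must account for all 24 channels, an x16 variant leaves 0 channels unusable. The function name channel_budget is hypothetical and not part of any Intel tool.

```python
from typing import Tuple

CHANNELS_PER_TILE = 24  # each transceiver tile has 24 channels


def channel_budget(lanes: int) -> Tuple[int, int, int]:
    """Return (used, unusable, available) channels for one PCIe Hard IP width.

    Sketch of Table 6: x1-x8 reserve an 8-channel group, x16 reserves 16.
    """
    if lanes not in (1, 2, 4, 8, 16):
        raise ValueError(f"unsupported link width x{lanes}")
    reserved = 8 if lanes <= 8 else 16          # channels claimed by the Hard IP
    unusable = reserved - lanes                 # claimed but carrying no PCIe lane
    available = CHANNELS_PER_TILE - reserved    # left for other protocols
    return lanes, unusable, available


for width in (1, 2, 4, 8, 16):
    used, unusable, available = channel_budget(width)
    print(f"x{width}: used={used}, unusable={unusable}, available={available}")
```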

Figure 11. PCIe Hard IP Channel Configurations Per Transceiver Tile

[Figure: five transceiver tiles (channels 0 to 23), one per configuration. In each, the PCIe Hard IP occupies the lowest-numbered channels. x1, x2, and x4 leave 7, 6, and 4 channels unusable, respectively; x1 through x8 leave 16 channels usable; x16 leaves 8 channels usable.]

The table below maps all transceiver channels to PCIe Hard IP channels in available tiles.

Table 7. PCIe Hard IP channel mapping across all tiles

Tile Channel Sequence | PCIe Hard IP Channel | Index within I/O Bank | Bottom Left Tile Bank Number | Top Left Tile Bank Number | Bottom Right Tile Bank Number | Top Right Tile Bank Number
23 | N/A | 5 | 1F | 1N | 4F | 4N
22 | N/A | 4 | 1F | 1N | 4F | 4N
21 | N/A | 3 | 1F | 1N | 4F | 4N
20 | N/A | 2 | 1F | 1N | 4F | 4N
19 | N/A | 1 | 1F | 1N | 4F | 4N
18 | N/A | 0 | 1F | 1N | 4F | 4N
17 | N/A | 5 | 1E | 1M | 4E | 4M
16 | N/A | 4 | 1E | 1M | 4E | 4M
15 | 15 | 3 | 1E | 1M | 4E | 4M
14 | 14 | 2 | 1E | 1M | 4E | 4M
13 | 13 | 1 | 1E | 1M | 4E | 4M
12 | 12 | 0 | 1E | 1M | 4E | 4M
11 | 11 | 5 | 1D | 1L | 4D | 4L
10 | 10 | 4 | 1D | 1L | 4D | 4L
9 | 9 | 3 | 1D | 1L | 4D | 4L
8 | 8 | 2 | 1D | 1L | 4D | 4L
7 | 7 | 1 | 1D | 1L | 4D | 4L
6 | 6 | 0 | 1D | 1L | 4D | 4L
5 | 5 | 5 | 1C | 1K | 4C | 4K
4 | 4 | 4 | 1C | 1K | 4C | 4K
3 | 3 | 3 | 1C | 1K | 4C | 4K
2 | 2 | 2 | 1C | 1K | 4C | 4K
1 | 1 | 1 | 1C | 1K | 4C | 4K
0 | 0 | 0 | 1C | 1K | 4C | 4K
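The pattern in Table 7 is regular: each I/O bank holds six channels, tile channels 0 to 15 map one-to-one to PCIe Hard IP channels, and channels 16 to 23 have no Hard IP channel. The Python sketch below reproduces the table from that observation; map_channel and the tile keys are hypothetical names used only for illustration.

```python
from typing import Optional, Tuple

# Bank numbers for channel groups 0-5, 6-11, 12-17, and 18-23 (from Table 7).
BANKS = {
    "bottom_left": ("1C", "1D", "1E", "1F"),
    "top_left": ("1K", "1L", "1M", "1N"),
    "bottom_right": ("4C", "4D", "4E", "4F"),
    "top_right": ("4K", "4L", "4M", "4N"),
}


def map_channel(seq: int, tile: str) -> Tuple[Optional[int], int, str]:
    """Return (PCIe Hard IP channel or None, index within I/O bank, bank number)."""
    if not 0 <= seq <= 23:
        raise ValueError("tile channel sequence must be in the range 0-23")
    hip_channel = seq if seq <= 15 else None  # channels 16-23 show N/A
    index_in_bank = seq % 6                   # six channels per I/O bank
    bank = BANKS[tile][seq // 6]              # banks ascend from channel 0
    return hip_channel, index_in_bank, bank


print(map_channel(23, "bottom_left"))  # (None, 5, '1F')
print(map_channel(12, "top_right"))    # (12, 0, '4M')
```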

PCIe Soft IP Channel Usage

PCI Express soft IP PIPE-PHY cores available from third-party vendors are not subject to the channel usage restrictions described above. Refer to Intel FPGA ➤ Products ➤ Intellectual Property for more information about soft IP cores for PCI Express.


2 Quick Start Guide

Using Qsys Pro, you can generate a PIO design example for the Avalon-ST Stratix 10 Hard IP for PCI Express IP core. The generated design example reflects the parameters that you specify. The PIO example transfers data from a host processor to a target device. It is appropriate for low-bandwidth applications. This design example automatically creates the files necessary to simulate and compile in the Quartus Prime Pro software. You can download the compiled design to the Stratix 10 Development Board or your own custom hardware.

In addition to the dynamically generated design examples, you can download static design examples from the following directories:

• For the L-Tile transceiver: <install_dir>/ip/altera/altera_pcie/altera_pcie_s10_ed/example_design/s10

• For the H-Tile transceiver: <install_dir>/ip/altera/altera_pcie/altera_pcie_s10_ed/example_design/s10_htile

The static design examples are available for simulation and compilation.

Figure 12. Development Steps for the Design Example

[Figure: Design Example Generation is followed by two paths: Compilation (Simulator) then Functional Simulation, and Compilation (Quartus Prime) then Hardware Testing.]


Intel Corporation. All rights reserved. Intel, the Intel logo, Altera, Arria, Cyclone, Enpirion, MAX, Nios, Quartusand Stratix words and logos are trademarks of Intel Corporation or its subsidiaries in the U.S. and/or othercountries. Intel warrants performance of its FPGA and semiconductor products to current specifications inaccordance with Intel's standard warranty, but reserves the right to make changes to any products and servicesat any time without notice. Intel assumes no responsibility or liability arising out of the application or use of anyinformation, product, or service described herein except as expressly agreed to in writing by Intel. Intelcustomers are advised to obtain the latest version of device specifications before relying on any publishedinformation and before placing orders for products or services.*Other names and brands may be claimed as the property of others.

ISO9001:2008Registered

2.1 Design Components

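Figure 13. Block Diagram for the Qsys PIO Design Example Simulation Testbench

[Figure: the PCIe Example Design Simulation Testbench contains the PCIe Example Design and a Root Port BFM (RP_BFM). Inside the PCIe Example Design, the Generated PCIe Endpoint Variant (DUT) exchanges Avalon-ST data with the PIO Application (Memory for Gen3 x16), which exchanges Avalon-MM data with On-Chip Memory (MEM). The RP_BFM connects to the DUT through either hip_serial or hip_pipe.]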

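Figure 14. Important Interfaces and Signals in the Stratix 10 PCIe PIO Design Example

[Figure: the DUT (Stratix 10 Hard IP for PCI Express) exposes a Serial/PIPE interface, the inputs NPOR, PERST#, and REF_CLK, the outputs nreset_status and coreclkout_hip, and 256-bit tx_st and rx_st Avalon-ST interfaces. These connect to the APPS block (tx_st_hip, rx_st_hip, reset, clk), which connects to On-Chip Memory (RAM or ROM) over a 256-bit Avalon-MM interface (labels hprxms1, reset1, clk1).]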


2.2 Directory Structure

Figure 15. Directory Structure for the Generated Design Example

[Figure: directory tree rooted at pcie_s10_hip_0_example_design. Recoverable entries: pcie_example_design, with the top-level design files (pcie_example_design.qpf, pcie_example_design.qsf, pcie_example_design.sdc, and pcie_example_design.qsys) and ip, sim, and synth directories; pcie_example_design_tb, containing a second pcie_example_design_tb directory with the testbench component and per-simulator scripts under sim/<simulator>; and an ip/pcie_example_design directory holding each <design component>.ip file and a directory per design component (<design component 1> through <design component n>) whose internal components have sim and synth subdirectories.]


2.3 Generating the Design

Figure 16. Procedure

[Figure: Start Parameter Editor, then Specify IP Variation and Select Device, then Select Design Parameters, then Specify Example Design, then Initiate Design Generation.]

Follow these steps to generate your design:

1. In the Quartus Prime Pro software, create a new project (File ➤ New Project Wizard).

2. Specify the Directory, Name, and Top-Level Entity.

3. For Project Type, accept the default value, Empty project.

4. For Add Files, click Next.

5. For Family, Device & Board Settings, under Family, select Stratix 10 (GX/SX) and the Target Device for your design. The Stratix 10 Development Kit includes the 1SG280HU3F50E3VG device. The following devices are compatible with the 1SG280HU3F50E3VG device:

• 1SG280LU3F50E3VGS1

• 1SG280LU3F50E3VG

• 1SG280HU3F50E3VGS1

• 1SG280HU3F50E3VG

In the current release, dynamic design example generation overwrites your selection with the 1SG280HU3F50E3VG device. Consequently, you can download your design to the Stratix 10 Development Kit.

6. Click Finish.

7. Select Tools ➤ Qsys Pro.

8. For Qsys System or IP Variant, click + and specify a File name. Click Create. Click Create again.

9. On the Qsys System Contents tab, delete the clock_in and reset_in components that appear by default.

10. In the IP Catalog, locate and add the Avalon-ST Stratix 10 Hard IP for PCI Express.

11. On the IP Settings tabs, specify the parameters for your IP variation.

12. On the System Contents tab, connect refclk to coreclkout_hip.

13. On the Example Designs tab, select the PIO design.


Figure 19. Example Design Tab

[Figure: the Example Designs tab, with a callout on the button that initiates design generation.]

14. For Example Design Files, select the Simulation and Synthesis options.

15. For Generated HDL Format, only Verilog is available.

16. For Target Development Kit, select the NONE option. Although the Stratix 10 Development Kit is not listed, it is available in limited quantities to Beta customers. Contact Intel.

17. Click the Generate Example Design button. The software generates all files necessary to run simulations. Click Close when generation completes.

18. Click Finish.

19. Save your .ip file when prompted.

20. The prompt "Recent changes have not been generated. Generate now?" allows you to create files for simulation and synthesis. Click No to continue to simulate the design example you just generated.

2.4 Simulating the Design

Figure 20. Procedure

[Figure: Change to Testbench Directory, then Run <Simulation Script>, then Analyze Results.]

1. Change to the testbench simulation directory.

2. Run the simulation script for the simulator of your choice. Refer to the table below.

3. Analyze the results.


Table 8. Steps to Run Simulation

Simulator: ModelSim*
Working Directory: <example_design>/pcie_example_design_tb/pcie_example_design_tb/sim/mentor/
Instructions:
1. do msim_setup.tcl
2. ld_debug
3. run -all
4. A successful simulation ends with the following message: "Simulation stopped due to successful completion!"

Simulator: VCS*
Working Directory: <example_design>/pcie_example_design_tb/pcie_example_design_tb/sim/synopsys/vcs
Instructions:
1. sh vcs_setup.sh USER_DEFINED_SIM_OPTIONS=""
2. A successful simulation ends with the following message: "Simulation stopped due to successful completion!"

This testbench simulates up to x8 variants. It supports x16 variants by down-training to x8. To simulate all lanes of a x16 variant, you can create a simulation model in Qsys to use in an Avery testbench. For more information, refer to AN-811: Using the Avery BFM for PCI Express Gen3x16 Simulation on Intel Stratix 10 Devices.

The automatically generated testbench completes the following tasks:

• Allocates host memory

• Reads the DMA read status register

• Sets up two DMA write descriptors in host memory and performs DMA writes

• Checks the status for the DMA writes

• Verifies the data for the DMA writes

The simulation reports "Simulation stopped due to successful completion" if no errors occur.
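To automate the pass/fail check, a script can search the saved simulator output for the completion message. The Python sketch below assumes the transcript has been written to a text file (for example, a log you save or redirect from ModelSim or VCS); the script name check_sim.py is hypothetical.

```python
import sys
from pathlib import Path

# Message the generated testbench prints when it finishes without errors.
SUCCESS_MARKER = "Simulation stopped due to successful completion"


def simulation_passed(transcript: str) -> bool:
    """Return True if the transcript contains the testbench success message."""
    return SUCCESS_MARKER in transcript


def main(argv) -> None:
    if len(argv) != 1:
        print("usage: python check_sim.py <transcript-file>")
        return
    text = Path(argv[0]).read_text(errors="replace")
    print("PASS" if simulation_passed(text) else "FAIL")


if __name__ == "__main__":
    main(sys.argv[1:])
```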


Figure 21. Partial Transcript from Successful Simulation Testbench

Related Links

AN-811: Using the Avery BFM for PCI Express Gen3x16 Simulation on Intel Stratix 10 Devices

2.5 Compiling the Design

You need an Initialization File (.ini) to compile your design in the Quartus Prime Pro v17.1 Stratix 10 ES Editions software.

Be sure that the following assignments are included in your pcie_example_design.qsf before compiling:

set_instance_assignment -name VIRTUAL_PIN ON -to *pipe_sim_only*
set_instance_assignment -name IO_STANDARD "3.0-V LVTTL" -to pcie_rstn_pin_perst

Note: These commands are included in the Quartus Prime Settings Files (*.qsf) for the projects in the <install_dir>/ip/altera/altera_pcie/altera_pcie_s10_ed/example_design/s10 and <install_dir>/ip/altera/altera_pcie/altera_pcie_s10_ed/example_design/s10_htile directories.
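Before compiling, a quick script can confirm that both assignments are present in pcie_example_design.qsf. This Python sketch only matches the exact textual form shown above (same option order, no extra quoting); it is a convenience check under that assumption, not a Quartus Prime feature.

```python
import re
from typing import List

# (assignment name, value, target) exactly as written in the .qsf lines above.
REQUIRED = [
    ("VIRTUAL_PIN", "ON", "*pipe_sim_only*"),
    ("IO_STANDARD", '"3.0-V LVTTL"', "pcie_rstn_pin_perst"),
]


def missing_assignments(qsf_text: str) -> List[str]:
    """Return the required assignments that do not appear in qsf_text."""
    missing = []
    for name, value, target in REQUIRED:
        pattern = (
            r"set_instance_assignment\s+-name\s+" + re.escape(name)
            + r"\s+" + re.escape(value)
            + r"\s+-to\s+" + re.escape(target)
            + r"(?!\S)"  # target must end at whitespace or end of text
        )
        if not re.search(pattern, qsf_text):
            missing.append(f"{name} {value} -to {target}")
    return missing


# Usage: print(missing_assignments(open("pcie_example_design.qsf").read()))
```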


1. On the Processing menu, select Start Compilation.

2. When compilation completes, click Assembler (Generate programming files) to generate an SRAM Object File (.sof).

3. After successfully compiling your design, program the targeted device with the Programmer and verify the design in hardware.


3 Interface Overview

The PCI Express Base Specification 3.0 defines a packet interface for communication between a Root Port and an Endpoint. When you select the Avalon-ST interface, Transaction Layer Packets (TLPs) transfer data between the Root Port and an Endpoint using the Avalon-ST TX and RX interfaces. The interfaces are named from the point of view of the user logic.

Figure 22. Stratix 10 Top-Level Interfaces

[Figure: the PCIe Variant with Avalon-ST Interface is the Stratix 10 Avalon-ST Hard IP for PCIe IP Core (altpcie_s10_hip_pipen1b.v). In the H-Tile or L-Tile, the Gen3x16 PCI Express IP Core (Transaction Layer, Data Link Layer, PCS and PMA) and a PLL connect to the PCIe link. The Embedded Multi-die Interconnect Bridge and the channels-to-FPGA mapping carry the Avalon-ST interface to the FPGA: Avalon-ST TX, Avalon-ST RX, Hdr & Data Credits, MSI/MSI-X, Clocks, and Resets.]

The following figures show the PCIe Hard IP core top-level interfaces and the connections to the Application Layer and system.


Figure 23. Connections: User Application to Stratix 10 Avalon-ST Hard IP for PCIe IP Core

[Figure: signal groups between the Avalon-ST interface of the PCIe IP core and the Application and system interfaces:
• PCIe RX Port (Avalon-ST Master, rx_st_*) to the Application RX Interface (Avalon-ST Slave)
• PCIe TX Port (Avalon-ST Slave, tx_st_*) from the Application TX Interface (Avalon-ST Master), with the TX credit signals tx_ph_cdts[7:0], tx_pd_cdts[11:0], tx_nph_cdts[7:0], tx_npd_cdts[11:0], tx_cplh_cdts[7:0], tx_cpld_cdts[11:0], tx_hdr_cdts_consumed, tx_data_cdts_consumed, tx_cdts_type[1:0], and tx_cdts_data_value[1:0]
• PCIe Clocks and Resets (Application Clocks and Reset Interface): coreclkout_hip, refclk, npor, current_speed[1:0], pin_perst, pld_clk_inuse, pld_core_ready, reset_status, serdes_pll_locked
• PCIe MSI Interface (Application MSI Interface): app_msi_*, app_int_sts, int_status[3:0], int_status_common[2:0]
• PCIe Configuration Space Signals (Application Configuration Space Interface): tl_cfg_*, app_err_*
• PCIe Configuration Extension Bus (Application Configuration Extension Interface): ceb_*
• PCIe Hard IP Status Interface (Application HIP Status Interface): derr_*, int_status[3:0], int_status_common[2:0], lane_act[4:0], link_up, ltssmstate[5:0], rx_par_err, tx_par_err]


Figure 24. Connections: PCIe Link and Reconfiguration, Power and Test Interfaces

[Figure: PCIe IP core interfaces, the PCIe link, and the matching Application interfaces:
• Hard IP Reconfiguration (optional): Avalon-MM Slave on the IP core (hip_reconfig_*), driven by an Avalon-MM Master in the Application
• PCIe Power Management: pm_*, apps_pm_xmt_pme, apps_ready_entr_l23, apps_pm_xmt_turnoff, app_init_rst, app_xfer_pending, connected to the Application Power Management Interface
• PCIe Serial Data Interface: tx_out[<n>-1:0] (transmit data) and rx_in[<n>-1:0] (receive data) on the PCIe link
• PCIe PIPE (simulation only): tx* and rx* PIPE signals, including powerdown[1:0], rate[1:0], rxpolarity, currentrxpreset0[2:0], currentcoeff0[17:0], rxeqeval, rxeqinprogress, phystatus0, dirfeedback[5:0], simu_mode_pipe, sim_pipe_pclk_in, sim_pipe_mask_tx_pll, sim_pipe_rate[1:0], and sim_ltssmstate[5:0]
• PCIe Test Interface: test_in[66:0] and testin_zero, connected to the Application Test Interface]

The following sections introduce these interfaces. Refer to the Interfaces section in the Block Description chapter for detailed descriptions and timing diagrams.

Avalon-ST RX

The Transaction Layer transfers TLPs to the Application on this 256-bit interface. The Application must assert rx_st_ready before transfers can begin. The latency on this interface includes the three-cycle ready latency and the latency of the Embedded Multi-die Interconnect Bridge (EMIB), for a total of 17 cycles. Once rx_st_ready deasserts, rx_st_valid will deassert within 17 cycles. Once rx_st_ready reasserts, rx_st_valid will resume data transfer within 17 cycles. To achieve the best performance, the Application must include a receive buffer large enough to avoid the deassertion of rx_st_ready. Refer to Avalon-ST RX Interface for more information.
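As a worked example of the buffer guidance above: because rx_st_valid can stay asserted for up to 17 cycles after rx_st_ready deasserts, the receive buffer needs at least 17 beats of free space at the moment it deasserts ready. At 256 bits (32 bytes) per beat that is 544 bytes. The Python sketch below assumes a beat can arrive on every one of those cycles; add whatever extra margin your design needs.

```python
INTERFACE_BITS = 256        # Avalon-ST RX data width
READY_TO_IDLE_CYCLES = 17   # rx_st_ready deassertion to rx_st_valid deassertion


def min_rx_headroom_bytes(width_bits: int = INTERFACE_BITS,
                          latency_cycles: int = READY_TO_IDLE_CYCLES) -> int:
    """Worst-case bytes that may still arrive after rx_st_ready deasserts."""
    return latency_cycles * width_bits // 8


print(min_rx_headroom_bytes())  # 544
```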

Note: Throughout this user guide, the terms Application and Application Layer refer to the customer logic that interfaces with the PCIe Transaction Layer.


Avalon-ST TX

The Application transmits TLPs to the Transaction Layer of the IP core on this 256-bit interface. The Transaction Layer must assert tx_st_ready before transmission begins. Transmission must be continuous when tx_st_ready is asserted. The ready latency is three coreclkout_hip cycles. If tx_st_ready deasserts, the Application must stop transmitting data within three cycles. When tx_st_ready reasserts, the Application must wait for three clock cycles before resuming data transmission. For more detailed information about the Avalon-ST interface, refer to Avalon-ST TX Interface. The packet layout is shown in detail in the Block Description chapter.
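The three-cycle ready latency follows the Avalon-ST readyLatency rule: the Application may assert tx_st_valid in cycle n only if tx_st_ready was asserted in cycle n - 3. The Python sketch below turns a per-cycle tx_st_ready trace into the cycles in which a beat may be sent. It is a behavioral model for reasoning about timing, not RTL, and it treats cycles before the start of the trace as not ready.

```python
from typing import List, Sequence

READY_LATENCY = 3  # tx_st_ready to tx_st_valid latency, in coreclkout_hip cycles


def allowed_valid_cycles(ready_trace: Sequence[int]) -> List[bool]:
    """For each cycle, return whether tx_st_valid may be asserted.

    A beat may be sent in cycle n only if tx_st_ready was asserted in
    cycle n - READY_LATENCY. Cycles before the trace starts count as not ready.
    """
    return [
        n >= READY_LATENCY and bool(ready_trace[n - READY_LATENCY])
        for n in range(len(ready_trace))
    ]


ready = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1]
print([int(v) for v in allowed_valid_cycles(ready)])  # [0, 0, 0, 1, 1, 1, 1, 0, 0, 1]
```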

TX Credit

The Transaction Layer TX interface transmits TLPs in the same order as they were received from the Application. To optimize performance, the Application can perform credit-based checking before submitting requests for transmission, allowing the Application to reorder packets to improve throughput. Application reordering is optional. The Transaction Layer always performs its own credit check before transmitting any TLP.
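An Application-side credit check might look like the following Python sketch. It follows PCI Express flow-control accounting, in which a TLP consumes one header credit plus one data credit per 16 bytes (4 dwords) of payload, with a separate pool for each of posted, non-posted, and completion traffic. How the pools are loaded from tx_ph_cdts, tx_pd_cdts, and the other credit signals in Figure 23 is an assumption left out of the sketch, as are infinite credits and credit release.

```python
from dataclasses import dataclass

DATA_CREDIT_BYTES = 16  # one PCIe data credit covers 4 dwords (16 bytes) of payload


@dataclass
class CreditPool:
    """Available flow-control credits for one TLP type (P, NP, or CPL)."""
    header: int
    data: int

    @staticmethod
    def data_credits(payload_bytes: int) -> int:
        """Data credits needed for a payload, rounded up to whole credits."""
        return (payload_bytes + DATA_CREDIT_BYTES - 1) // DATA_CREDIT_BYTES

    def can_send(self, payload_bytes: int) -> bool:
        """True if one header credit and enough data credits are available."""
        return self.header >= 1 and self.data >= self.data_credits(payload_bytes)

    def consume(self, payload_bytes: int) -> None:
        """Deduct the credits for one TLP."""
        if not self.can_send(payload_bytes):
            raise RuntimeError("insufficient credits")
        self.header -= 1
        self.data -= self.data_credits(payload_bytes)


posted = CreditPool(header=4, data=32)
print(posted.can_send(256))        # True
posted.consume(256)                # one header credit, 16 data credits
print(posted.header, posted.data)  # 3 16
```

A scheduler built on this could hold back a large posted write while a non-posted read whose pool still has credits goes first, which is the reordering the paragraph above describes as optional.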

TX and RX Serial Data

This differential, serial interface is the physical link between a Root Port and an Endpoint. The PCIe IP Core supports 1, 2, 4, 8, or 16 lanes. Each lane includes a TX and RX differential pair. Data is striped across all available lanes.
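Lane striping can be illustrated with a simplified Python model in which byte i of a packet travels on lane i mod N. The sketch ignores framing, 8b/10b or 128b/130b encoding, scrambling, and ordered sets, so it shows only how bytes are distributed; stripe and unstripe are hypothetical helper names.

```python
from typing import List


def stripe(data: bytes, lanes: int) -> List[bytes]:
    """Distribute bytes round-robin: byte i goes to lane i % lanes."""
    if lanes not in (1, 2, 4, 8, 16):
        raise ValueError("PCIe link widths are 1, 2, 4, 8, or 16 lanes")
    return [data[lane::lanes] for lane in range(lanes)]


def unstripe(per_lane: List[bytes]) -> bytes:
    """Reassemble the original byte order from per-lane byte streams."""
    lanes = len(per_lane)
    out = bytearray(sum(len(chunk) for chunk in per_lane))
    for lane, chunk in enumerate(per_lane):
        out[lane::lanes] = chunk
    return bytes(out)


print(stripe(b"ABCDEFGH", 4))  # [b'AE', b'BF', b'CG', b'DH']
```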

PIPE

This is a 32-bit parallel interface between the PCIe IP Core and PHY. It carries the TLP data before it is serialized. It is available for simulation only and provides more visibility for debugging.

Clocks

The PCI Express Card Electromechanical Specification Revision 2.0 defines the input reference clock as 100 MHz ±300 ppm. The motherboard drives this clock to the PCIe card. The PCIe card is not required to use the reference clock received from the connector. However, the PCIe card must provide a 100 MHz ±300 ppm clock to the refclk input pin.
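For a sense of scale, ±300 ppm around 100 MHz allows 30 kHz of deviation in either direction. The small Python sketch below computes the bounds; it also implies that two independent clocks, each within ±300 ppm, can differ from each other by up to 600 ppm.

```python
from typing import Tuple


def ppm_bounds(nominal_hz: float, ppm: float) -> Tuple[float, float]:
    """Return the (min, max) frequency allowed by a +/- ppm tolerance."""
    delta = nominal_hz * ppm / 1e6
    return nominal_hz - delta, nominal_hz + delta


low, high = ppm_bounds(100e6, 300)
print(f"{low / 1e6:.2f} MHz to {high / 1e6:.2f} MHz")  # 99.97 MHz to 100.03 MHz
```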

Table 9. Application Clock Frequency

The interface to the Application is fixed at 256 bits; consequently, the Application clock frequency depends only on the data rate and link width.

Data Rate | Clock Frequency
Gen1 x1, x2, x4, x8, and x16 | 125 MHz
Gen2 x1, x2, x4, and x8 | 125 MHz
Gen2 x16 | 250 MHz
Gen3 x1, x2, and x4 | 125 MHz
Gen3 x8 | 250 MHz
Gen3 x16 | 500 MHz
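One way to read Table 9: at each frequency, 256 bits per clock cycle must at least match the raw bandwidth of the link after line encoding (8b/10b for Gen1 and Gen2, 128b/130b for Gen3). The Python sketch below checks this for every width. app_clock_mhz simply encodes Table 9, and the comparison ignores TLP and DLLP overhead.

```python
from fractions import Fraction

INTERFACE_BITS = 256
GT_PER_S = {1: Fraction(5, 2), 2: Fraction(5), 3: Fraction(8)}  # per-lane rate
ENCODING = {1: Fraction(8, 10), 2: Fraction(8, 10), 3: Fraction(128, 130)}


def app_clock_mhz(gen: int, lanes: int) -> int:
    """Application clock frequency from Table 9."""
    if (gen, lanes) == (3, 16):
        return 500
    if (gen, lanes) in ((3, 8), (2, 16)):
        return 250
    return 125


def link_gbps(gen: int, lanes: int) -> Fraction:
    """Raw link bandwidth after line encoding, in Gb/s."""
    return GT_PER_S[gen] * ENCODING[gen] * lanes


def interface_gbps(gen: int, lanes: int) -> Fraction:
    """Bandwidth of the 256-bit Application interface, in Gb/s."""
    return Fraction(INTERFACE_BITS * app_clock_mhz(gen, lanes), 1000)


for gen in (1, 2, 3):
    for lanes in (1, 2, 4, 8, 16):
        assert interface_gbps(gen, lanes) >= link_gbps(gen, lanes)

print(f"Gen3 x16: link {float(link_gbps(3, 16)):.2f} Gb/s, "
      f"interface {float(interface_gbps(3, 16)):.2f} Gb/s")
```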

Reset

The PCIe IP core receives two reset inputs:


• pin_perst is the active-low, edge-triggered reset driven from the PCIe motherboard. Logic on the motherboard autonomously generates this fundamental reset signal.

• npor is an active-low, edge-triggered reset signal. The Application drives this reset signal.

The PCIe IP core reset logic requires a free-running clock input. This free-running clock becomes stable after the secure device manager (SDM) block asserts iocsrrdy_dly, indicating that the I/O Control and Status registers programming is complete.

Reset Status

This interface indicates when the clocks are stable and FPGA configuration is complete.

Hard IP Status

This optional interface includes the following signals that are useful for debugging:

• Link status signals

• Interrupt status signals

• TX and RX parity error signals

• Correctable and uncorrectable error signals

Interrupts

The PCIe IP core supports Message Signaled Interrupts (MSI), MSI-X interrupts, and legacy interrupts. MSI, MSI-X, and legacy interrupts are mutually exclusive.

MSI uses single-dword memory write TLPs to implement interrupts. This interrupt mechanism conserves pins because it does not use separate wires for interrupts. In addition, the single dword provides flexibility for the data presented in the interrupt message. The MSI Capability structure is stored in the Configuration Space and is programmed using Configuration Space accesses.

The Application generates MSI-X messages, which are single-dword memory writes. The MSI-X Capability structure points to an MSI-X table structure and an MSI-X PBA structure, which are stored in memory. This scheme is different from the MSI Capability structure, which contains all the control and status information for the interrupts.

Enable legacy interrupts by programming the Interrupt Disable bit (bit[10]) of the Configuration Space Command register to 1'b0. When legacy interrupts are enabled, the IP core emulates INTx interrupts using virtual wires. The app_int_sts port controls legacy interrupt generation.
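The register write described above is a single bit operation. In the PCI Type 0 header, the Command register is the 16-bit register at offset 0x04, and bit 10 is Interrupt Disable. Host software normally performs this write, so the Python sketch below only illustrates the value involved; the function names are hypothetical.

```python
COMMAND_REGISTER_OFFSET = 0x04  # 16-bit Command register in the Type 0 header
INTERRUPT_DISABLE_BIT = 10


def enable_legacy_interrupts(command: int) -> int:
    """Return the Command value with Interrupt Disable (bit 10) cleared."""
    return command & ~(1 << INTERRUPT_DISABLE_BIT) & 0xFFFF


def legacy_interrupts_enabled(command: int) -> bool:
    """INTx emulation is allowed when Interrupt Disable reads as 0."""
    return not (command >> INTERRUPT_DISABLE_BIT) & 1


print(hex(enable_legacy_interrupts(0x0406)))  # 0x6
```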

Transaction Layer (TL) tl_cfg Configuration

This interface provides time-domain multiplexed (TD) access to a subset of the values stored in the Configuration Space registers.

Hard IP Reconfiguration

This optional Avalon Memory-Mapped (Avalon-MM) interface allows you to dynamically update the value of read-only Configuration Space registers at run-time. It is available when Enable dynamic reconfiguration of PCIe read-only registers is enabled in the component GUI.


Power Management

Software uses the power management interface to control the power management state machine. The power management output signals indicate the current power state. The IP core supports the two mandatory power states: D0 (full power) and D3 (preparation for loss of power). It does not support the optional D1 and D2 low-power states.

Related Links

• Avalon-ST RX Interface on page 54: The Application Layer receives data from the Transaction Layer of the PCIe IP core over the Avalon-ST RX interface. This is a 256-bit interface.

• Interfaces on page 52: This section describes the top-level interfaces in the Avalon-ST Stratix 10 Hard IP for PCIe IP core.

• PCI Express Base Specification 3.0


4 Parameters

This chapter provides a reference for all the parameters of the Stratix 10 Hard IP for PCI Express IP core.

Table 10. System Settings

Parameter | Value | Description
Application Interface Type | Avalon-ST | Selects the interface to the Application Layer.
Hard IP Mode | Gen3x16, 256-bit interface, 500 MHz; Gen3x8, 256-bit interface, 250 MHz; Gen3x4, 256-bit interface, 125 MHz; Gen3x2, 256-bit interface, 125 MHz; Gen3x1, 256-bit interface, 125 MHz; Gen2x16, 256-bit interface, 250 MHz; Gen2x8, 256-bit interface, 125 MHz; Gen2x4, 256-bit interface, 125 MHz; Gen2x2, 256-bit interface, 125 MHz; Gen2x1, 256-bit interface, 125 MHz; Gen1x16, 256-bit interface, 125 MHz; Gen1x8, 256-bit interface, 125 MHz; Gen1x4, 256-bit interface, 125 MHz; Gen1x2, 256-bit interface, 125 MHz; Gen1x1, 256-bit interface, 125 MHz | Selects the following elements: the lane data rate (Gen1, Gen2, and Gen3 are supported) and the Application Layer interface frequency. The width of the data interface between the hard IP Transaction Layer and the Application Layer implemented in the FPGA fabric is 256 bits. Note: If the selected mode is not available for the chosen configuration, an error message displays in the Message pane.
Port type | Native Endpoint | Specifies the port type. The Endpoint stores parameters in the Type 0 Configuration Space. Only Native Endpoints are supported in the Quartus Prime Pro v17.1 Stratix 10 ES Editions.

Related Links

PCI Express Base Specification 3.0

4.1 Stratix 10 Avalon-ST Settings

Table 11. System Settings for PCI Express

Parameter | Value | Description
Enable Avalon-ST reset output port | On/Off | When On, the generated reset output port clr_st has the same functionality as the hip_ready_n port included in the Hard IP Reset interface. This option is available for backwards compatibility with Arria® 10 devices.
Enable byte parity ports on Avalon-ST interface | On/Off | When On, the RX and TX datapaths are parity protected. Parity is odd. The Application Layer must provide valid byte parity in the Avalon-ST TX direction. This parameter is only available for the Avalon-ST Stratix 10 Hard IP for PCI Express.
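With odd parity, each parity bit is chosen so that the byte plus its parity bit contains an odd number of 1s. The Python sketch below computes one parity bit per byte of a 256-bit TX beat. It assumes parity bit i covers data bits [8i+7:8i]; confirm the exact parity port mapping in the Interfaces section before relying on it.

```python
def odd_byte_parity(data: int, width_bits: int = 256) -> int:
    """Return one odd-parity bit per byte of data.

    Bit i of the result covers data bits [8i+7:8i] (assumed mapping). A parity
    bit is 1 when its byte has an even number of 1s, so the byte plus its
    parity bit always holds an odd number of 1s.
    """
    parity = 0
    for i in range(width_bits // 8):
        byte = (data >> (8 * i)) & 0xFF
        if bin(byte).count("1") % 2 == 0:
            parity |= 1 << i
    return parity


print(bin(odd_byte_parity(0x0001, width_bits=16)))  # 0b10
```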

4.2 Base Address Registers

Table 12. BAR Registers

Parameter | Value | Description
Type | Disabled; 64-bit prefetchable memory; 32-bit non-prefetchable memory | If you select 64-bit prefetchable memory, 2 contiguous BARs are combined to form a 64-bit prefetchable BAR; you must set the higher numbered BAR to Disabled. A non-prefetchable 64-bit BAR is not supported because in a typical system, the Root Port Type 1 Configuration Space sets the maximum non-prefetchable memory window to 32 bits. The BARs can also be configured as separate 32-bit memories. Defining memory as prefetchable allows contiguous data to be fetched ahead. Prefetching memory is advantageous when the requestor may require more data from the same region than was originally requested. If you specify that a memory is prefetchable, it must have the following 2 attributes: reads do not have side effects such as changing the value of the data read, and write merging is allowed.
Size | 16 Bytes–8 EBytes | Specifies the size of the address space accessible to the BAR.
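Host software discovers the Size parameter with the standard PCI sizing procedure: it writes all 1s to the BAR, reads the value back, masks off the type bits [3:0], and takes the two's complement. For a 64-bit prefetchable BAR, the higher-numbered BAR supplies the upper 32 bits. A Python sketch of the decode, with bar_size as a hypothetical helper name:

```python
from typing import Optional


def bar_size(readback_lo: int, readback_hi: Optional[int] = None) -> int:
    """Decode a memory BAR size from the values read back after writing all 1s.

    readback_lo is the lower BAR; for a 64-bit BAR, readback_hi is the
    higher-numbered BAR that holds address bits [63:32].
    """
    if readback_hi is None:
        width_mask = 0xFFFF_FFFF
        value = readback_lo
    else:
        width_mask = 0xFFFF_FFFF_FFFF_FFFF
        value = (readback_hi << 32) | readback_lo
    address_bits = value & width_mask & ~0xF  # drop type/prefetchable bits [3:0]
    return ((~address_bits) & width_mask) + 1


print(bar_size(0xFFF0_0000))               # 1048576 (1 MB, 32-bit BAR)
print(bar_size(0x0000_000C, 0xFFFF_FFFF))  # 4294967296 (4 GB, 64-bit prefetchable)
```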

4.3 Device Identification Registers

Table 13. Device ID Registers

The following table lists the default values of the read-only Device ID registers. You can use the parameter editor to change the values of these registers. At run time, you can change the values of these registers using the optional Hard IP Reconfiguration block signals.

Register Name | Default Value | Description
Vendor ID | 0x00001172 | Sets the read-only value of the Vendor ID register. This parameter cannot be set to 0xFFFF per the PCI Express Base Specification. Address offset: 0x000.
Device ID | 0x00000000 | Sets the read-only value of the Device ID register. Address offset: 0x000.
Revision ID | 0x00000001 | Sets the read-only value of the Revision ID register. Address offset: 0x008.
Class code | 0x00000000 | Sets the read-only value of the Class Code register. Address offset: 0x008.
Subclass code | 0x00000000 | Sets the read-only value of the Subclass Code register. Address offset: 0x008.
Subsystem Vendor ID | 0x00000000 | Sets the read-only value of the Subsystem Vendor ID register in the PCI Type 0 Configuration Space. This parameter cannot be set to 0xFFFF per the PCI Express Base Specification. This value is assigned by PCI-SIG to the device manufacturer. Address offset: 0x02C.
Subsystem Device ID | 0x00000000 | Sets the read-only value of the Subsystem Device ID register in the PCI Type 0 Configuration Space. Address offset: 0x02C.
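The Python sketch below shows how the values in Table 13 land in the standard Type 0 header dwords: Vendor ID and Device ID share 0x000, Revision ID and the class code bytes share 0x008, and the two subsystem IDs share 0x02C. It assumes the Class code and Subclass code parameters map to the base class and subclass bytes of the 24-bit class code, with the programming interface byte passed separately; that mapping is an assumption, not taken from this guide. The example values are arbitrary.

```python
def header_id_dwords(vendor_id: int, device_id: int, revision_id: int,
                     base_class: int, subclass: int, prog_if: int,
                     subsys_vendor_id: int, subsys_id: int) -> dict:
    """Pack ID values into the Type 0 header dwords at 0x000, 0x008, and 0x02C."""
    for name, value, bits in (
        ("vendor_id", vendor_id, 16), ("device_id", device_id, 16),
        ("revision_id", revision_id, 8), ("base_class", base_class, 8),
        ("subclass", subclass, 8), ("prog_if", prog_if, 8),
        ("subsys_vendor_id", subsys_vendor_id, 16), ("subsys_id", subsys_id, 16),
    ):
        if not 0 <= value < (1 << bits):
            raise ValueError(f"{name} does not fit in {bits} bits")
    if vendor_id == 0xFFFF or subsys_vendor_id == 0xFFFF:
        raise ValueError("0xFFFF is not a valid vendor ID")
    return {
        0x000: (device_id << 16) | vendor_id,
        0x008: (base_class << 24) | (subclass << 16) | (prog_if << 8) | revision_id,
        0x02C: (subsys_id << 16) | subsys_vendor_id,
    }


regs = header_id_dwords(0x1172, 0xABCD, 0x01, 0x12, 0x34, 0x56, 0x1172, 0x0001)
print({hex(offset): hex(value) for offset, value in regs.items()})
```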

4.4 PCI Express and PCI Capabilities Parameters

This group of parameters defines various capability properties of the IP core. Some of these parameters are stored in the PCI Configuration Space - PCI Compatible Configuration Space. The byte offset indicates the parameter address.

4.4.1 Device Capabilities

Table 14. Capabilities Registers

Parameter | Possible Values | Default Value | Description
Maximum payload sizes supported | 128 bytes, 256 bytes, 512 bytes, 1024 bytes | 128 bytes | Specifies the maximum payload size supported. This parameter sets the read-only value of the max payload size supported field of the Device Capabilities register (0x084[2:0]). Address: 0x084.
Support extended tag field | On/Off | On | When you turn this option On, the core supports 256 tags, improving the performance of high latency systems. Turning this option on turns on the Extended Tag bit in the Configuration Space Device Capabilities register. The IP core tracks tags for Non-Posted Requests. The tracking clears when the IP core receives the last Completion TLP for a MemRd.
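The Python sketch below shows the encodings these parameters set in the Device Capabilities register, as defined in the PCI Express Base Specification: Max_Payload_Size Supported in bits [2:0] (000b = 128 bytes through 011b = 1024 bytes) and Extended Tag Field Supported in bit 5. It also shows why the option matters: without extended tags a requester has 32 tags (5 bits); with them, 256 (8 bits). The function names are hypothetical.

```python
MPS_ENCODING = {128: 0b000, 256: 0b001, 512: 0b010, 1024: 0b011}
EXTENDED_TAG_BIT = 5  # Device Capabilities: Extended Tag Field Supported


def device_caps(max_payload: int, extended_tag: bool) -> int:
    """Return the Device Capabilities bits controlled by Table 14."""
    value = MPS_ENCODING[max_payload]  # bits [2:0]
    if extended_tag:
        value |= 1 << EXTENDED_TAG_BIT
    return value


def max_outstanding_tags(extended_tag: bool) -> int:
    """5-bit tags by default, 8-bit tags with the extended tag field."""
    return 256 if extended_tag else 32


print(hex(device_caps(256, True)))  # 0x21
```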

4.4.2 Link Capabilities

Table 15. Link Capabilities

Parameter | Value | Description
Link port number (Root Port only) | 0x01 | Sets the read-only value of the port number field in the Link Capabilities register. This parameter is for Root Ports only. It should not be changed.
Slot clock configuration | On/Off | When On, indicates that the Endpoint uses the same physical reference clock that the system provides on the connector. When Off, the IP core uses an independent clock regardless of the presence of a reference clock on the connector. This parameter sets the Slot Clock Configuration bit (bit 12) in the PCI Express Link Status register.


4.4.3 MSI and MSI-X Capabilities

Table 16. MSI and MSI-X Capabilities

Parameter | Value | Description
MSI messages requested | 1, 2, 4, 8, 16, 32 | Specifies the number of messages the Application Layer can request. Sets the value of the Multiple Message Capable field of the Message Control register. Address: 0x050[31:16].

MSI-X Capabilities

Parameter | Value or Bit Range | Description
Implement MSI-X | On/Off | When On, adds the MSI-X functionality.
Table size | [15:0] | System software reads this field to determine the MSI-X Table size <n>, which is encoded as <n–1>. For example, a returned value of 2047 indicates a table size of 2048. This field is read-only. Legal range is 0–2047 (an 11-bit field). Address offset: 0x068[26:16].
Table offset | [31:0] | Points to the base of the MSI-X Table. The lower 3 bits of the table BAR indicator (BIR) are set to zero by software to form a 32-bit qword-aligned offset. This field is read-only.
Table BAR indicator | [2:0] | Specifies which one of a function's BARs, located beginning at 0x10 in Configuration Space, is used to map the MSI-X table into memory space. This field is read-only. Legal range is 0–5.
Pending bit array (PBA) offset | [31:0] | Used as an offset from the address contained in one of the function's Base Address registers to point to the base of the MSI-X PBA. The lower 3 bits of the PBA BIR are set to zero by software to form a 32-bit qword-aligned offset. This field is read-only.(1)
Pending BAR indicator | [2:0] | Specifies which of the function's Base Address registers, located beginning at 0x10 in Configuration Space, maps the MSI-X PBA into memory space. This field is read-only. Legal range is 0–5.
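Several of the fields above are simple encodings. The Python sketch below shows three of them, assuming the MSI capability starts at 0x050 as listed: Multiple Message Capable stores log2 of the requested message count in Message Control bits [3:1] (dword bits [19:17]); the MSI-X Table Size field stores the entry count minus one; and each Offset/BIR register holds a BAR indicator in bits [2:0] with a qword-aligned offset above it. The function names are hypothetical.

```python
from typing import Tuple

MSI_MESSAGE_CONTROL_SHIFT = 16  # Message Control occupies 0x050[31:16]


def msi_multiple_message_capable(messages: int) -> int:
    """Encode 1, 2, 4, 8, 16, or 32 messages as log2(messages)."""
    if messages not in (1, 2, 4, 8, 16, 32):
        raise ValueError("MSI supports 1, 2, 4, 8, 16, or 32 messages")
    return messages.bit_length() - 1


def msi_dword_0x050(messages: int) -> int:
    """Place Multiple Message Capable (Message Control [3:1]) in the 0x050 dword."""
    return msi_multiple_message_capable(messages) << (MSI_MESSAGE_CONTROL_SHIFT + 1)


def msix_table_size_field(entries: int) -> int:
    """MSI-X Table Size is encoded as N - 1 in an 11-bit field."""
    if not 1 <= entries <= 2048:
        raise ValueError("MSI-X tables have 1 to 2048 entries")
    return entries - 1


def decode_offset_bir(value: int) -> Tuple[int, int]:
    """Split a Table Offset/BIR or PBA Offset/BIR dword into (BIR, offset)."""
    bir = value & 0x7               # which BAR (0-5)
    offset = value & 0xFFFF_FFF8    # low 3 bits masked, so qword aligned
    return bir, offset


print(msi_multiple_message_capable(32), msix_table_size_field(2048))  # 5 2047
print(decode_offset_bir(0x0000_2003))                                  # (3, 8192)
```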

4.4.4 Slot Capabilities

Table 17. Slot Capabilities

Parameter Value Description

Use Slot register On/Off This parameter is only supported in Root Port mode. The slot capability isrequired for Root Ports if a slot is implemented on the port. Slot status isrecorded in the PCI Express Capabilities register.Defines the characteristics of the slot. You turn on this option by selectingEnable slot capability. Refer to the figure below for bit definitions.

Slot power scale 0–3 Specifies the scale used for the Slot power limit. The following coefficientsare defined:• 0 = 1.0x• 1 = 0.1x• 2 = 0.01x• 3 = 0.001x

continued...

1 Throughout this user guide, the terms word, dword and qword have the same meaning thatthey have in the PCI Express Base Specification. A word is 16 bits, a dword is 32 bits, and aqword is 64 bits.


The default value prior to hardware and firmware initialization is b’00. Writes to this register also cause the port to send the Set_Slot_Power_Limit Message. Refer to Section 6.9 of the PCI Express Base Specification Revision for more information.

Slot power limit 0–255 In combination with the Slot power scale value, specifies the upper limit inwatts on power supplied by the slot. Refer to Section 7.8.9 of the PCI ExpressBase Specification for more information.

Slot number 0–8191 Specifies the physical slot number.
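As a worked example of how Slot power scale and Slot power limit combine, the following Python sketch computes the wattage limit. The function name is hypothetical. Recent revisions of the PCI Express Base Specification give limit values above 0xEF at scale 0 special meanings instead of plain multiplication; this sketch rejects those values rather than modeling them.

```python
# Coefficients for Slot power scale, as listed in Table 17.
SLOT_POWER_SCALE = {0: 1.0, 1: 0.1, 2: 0.01, 3: 0.001}


def slot_power_limit_watts(limit: int, scale: int) -> float:
    """Upper power limit in watts = Slot power limit x scale coefficient."""
    if not 0 <= limit <= 255:
        raise ValueError("Slot power limit must be 0-255")
    if scale not in SLOT_POWER_SCALE:
        raise ValueError("Slot power scale must be 0-3")
    if scale == 0 and limit > 0xEF:
        # Special encodings in the PCI Express Base Specification; not modeled here.
        raise NotImplementedError("values above 0xEF at scale 0 are special encodings")
    return limit * SLOT_POWER_SCALE[scale]
```

For example, a Slot power limit of 25 with scale 0 gives 25 W, and a limit of 75 with scale 1 gives 7.5 W.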

Figure 25. Slot Capability
• Bits [31:19]: Physical Slot Number
• Bit 18: No Command Completed Support
• Bit 17: Electromechanical Interlock Present
• Bits [16:15]: Slot Power Limit Scale
• Bits [14:7]: Slot Power Limit Value
• Bit 6: Hot-Plug Capable
• Bit 5: Hot-Plug Surprise
• Bit 4: Power Indicator Present
• Bit 3: Attention Indicator Present
• Bit 2: MRL Sensor Present
• Bit 1: Power Controller Present
• Bit 0: Attention Button Present
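The following Python sketch packs and unpacks the Slot Capabilities fields using the bit positions shown in Figure 25. The field names and helpers are illustrative only; they are not part of the IP core or any Intel tool.

```python
# Field name -> (low bit, width), following the Figure 25 layout.
SLOT_CAP_FIELDS = {
    "attention_button_present": (0, 1),
    "power_controller_present": (1, 1),
    "mrl_sensor_present": (2, 1),
    "attention_indicator_present": (3, 1),
    "power_indicator_present": (4, 1),
    "hot_plug_surprise": (5, 1),
    "hot_plug_capable": (6, 1),
    "slot_power_limit_value": (7, 8),
    "slot_power_limit_scale": (15, 2),
    "electromechanical_interlock_present": (17, 1),
    "no_command_completed_support": (18, 1),
    "physical_slot_number": (19, 13),
}


def pack_slot_capabilities(**fields: int) -> int:
    """Build a 32-bit Slot Capabilities value; unspecified fields are 0."""
    value = 0
    for name, field_value in fields.items():
        low, width = SLOT_CAP_FIELDS[name]  # KeyError for unknown field names
        if not 0 <= field_value < (1 << width):
            raise ValueError(f"{name}={field_value} does not fit in {width} bits")
        value |= field_value << low
    return value


def unpack_slot_capabilities(value: int) -> dict:
    """Split a 32-bit Slot Capabilities value back into named fields."""
    return {
        name: (value >> low) & ((1 << width) - 1)
        for name, (low, width) in SLOT_CAP_FIELDS.items()
    }
```

For example, `pack_slot_capabilities(physical_slot_number=1, slot_power_limit_value=25)` returns 0x00080C80. The 13-bit Physical Slot Number field is why the Slot number parameter is limited to 0–8191.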

4.4.5 Power Management

Table 18. Power Management Parameters

Parameter Value Description

Endpoint L0s acceptable latency

• Maximum of 64 ns
• Maximum of 128 ns
• Maximum of 256 ns
• Maximum of 512 ns
• Maximum of 1 us
• Maximum of 2 us
• Maximum of 4 us
• No limit

This design parameter specifies the maximum acceptable latency that the device can tolerate to exit the L0s state for any links between the device and the root complex. It sets the read-only value of the Endpoint L0s acceptable latency field of the Device Capabilities Register (0x084).
This Endpoint does not support the L0s or L1 states. However, in a switched system there may be links connected to switches that have L0s and L1 enabled. This parameter allows system configuration software to read the acceptable latencies for all devices in the system and the exit latencies for each link to determine which links can enable Active State Power Management (ASPM). This setting is disabled for Root Ports.
The default value of this parameter is 64 ns. This is the safest setting for most designs.

Endpoint L1 acceptable latency

• Maximum of 1 us
• Maximum of 2 us
• Maximum of 4 us
• Maximum of 8 us
• Maximum of 16 us
• Maximum of 32 us
• No limit

This value indicates the acceptable latency that an Endpoint can withstand in the transition from the L1 to L0 state. It is an indirect measure of the Endpoint’s internal buffering. It sets the read-only value of the Endpoint L1 acceptable latency field of the Device Capabilities Register.
This Endpoint does not support the L0s or L1 states. However, a switched system may include links connected to switches that have L0s and L1 enabled. This parameter allows system configuration software to read the acceptable latencies for all devices in the system and the exit latencies for each link to determine which links can enable Active State Power Management (ASPM). This setting is disabled for Root Ports.


The default value of this parameter is 1 µs. This is the safest setting for most designs.
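The following Python sketch decodes and encodes the two latency fields of the Device Capabilities register (bits [8:6] for L0s and bits [11:9] for L1, as defined in the PCI Express Base Specification). The helper names are hypothetical. The 64 µs L1 encoding comes from the specification; the parameter editor lists L1 values only up to 32 µs.

```python
# Field encodings from the PCI Express Base Specification; None means "No limit".
L0S_LATENCY_NS = [64, 128, 256, 512, 1000, 2000, 4000, None]  # bits [8:6]
L1_LATENCY_US = [1, 2, 4, 8, 16, 32, 64, None]                # bits [11:9]


def decode_acceptable_latencies(dev_cap: int) -> tuple:
    """Return (L0s limit in ns, L1 limit in us) from a Device Capabilities dword."""
    l0s_code = (dev_cap >> 6) & 0x7
    l1_code = (dev_cap >> 9) & 0x7
    return L0S_LATENCY_NS[l0s_code], L1_LATENCY_US[l1_code]


def encode_acceptable_latencies(l0s_ns, l1_us) -> int:
    """Return the bits [11:6] contribution for the given limits (None = No limit)."""
    # list.index raises ValueError for a limit that has no encoding
    return (L0S_LATENCY_NS.index(l0s_ns) << 6) | (L1_LATENCY_US.index(l1_us) << 9)
```

With the default settings (64 ns and 1 µs), both fields encode to 0, so `encode_acceptable_latencies(64, 1)` returns 0.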

4.4.6 Vendor Specific Extended Capability (VSEC)

Table 19. VSEC

Parameter Value Description

User ID register from the Vendor Specific Extended Capability

Custom value Sets the read-only value of the 16-bit User ID register from the Vendor Specific Extended Capability. This parameter is only valid for Endpoints.

4.5 Configuration, Debug and Extension Options

Table 20. Configuration, Debug and Extension Options

Parameter Value Description

Enable dynamic reconfiguration of PCIe read-only registers

On/Off When On, you can use the Hard IP reconfiguration bus to dynamically reconfigure Hard IP read-only registers. For more information, refer to Hard IP Reconfiguration Interface.

Related Links

Hard IP Reconfiguration on page 73. The Hard IP reconfiguration interface is an Avalon-MM slave interface with a 21-bit address and an 8-bit data bus. You can use this bus to dynamically modify the value of configuration registers that are read-only at run time.
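Because the reconfiguration data bus is only 8 bits wide, updating a 32-bit register takes several transactions. The following Python sketch shows one way to split a dword into byte writes. It assumes, without confirmation from this section, that each register byte has its own address on the 21-bit bus and that bytes are little-endian as in Configuration Space. `write_config_dword` and the `avmm_write(address, data)` callback are hypothetical stand-ins for whatever issues a single Avalon-MM write in your testbench or host software; check the Hard IP Reconfiguration section for the actual address map.

```python
from typing import Callable

ADDR_BITS = 21  # width of the Hard IP reconfiguration address bus


def write_config_dword(avmm_write: Callable[[int, int], None],
                       byte_addr: int, value: int) -> None:
    """Issue four 8-bit writes for one 32-bit value, least significant byte first.

    ASSUMPTIONS (not confirmed by this guide): each register byte has its
    own address, and bytes are little-endian as in Configuration Space.
    avmm_write(address, data) is a hypothetical single-write callback.
    """
    if not 0 <= byte_addr <= (1 << ADDR_BITS) - 4:
        raise ValueError("dword does not fit in the 21-bit address space")
    for i in range(4):
        avmm_write(byte_addr + i, (value >> (8 * i)) & 0xFF)
```

Passing a callback that records its arguments is a convenient way to check the generated write sequence before driving real hardware.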

4.6 PHY Characteristics

Table 21. PHY Characteristics

Parameter Value Description

Gen2 TX de-emphasis

3.5dB, 6dB

Specifies the transmit de-emphasis for Gen2. Intel recommends the following settings:
• 3.5 dB: Short PCB traces
• 6.0 dB: Long PCB traces

Enable soft DFE controller IP

On/Off

When On, the PCIe Hard IP core includes a decision feedback equalization (DFE) soft controller in the FPGA fabric to improve the bit error rate (BER) margin. The default for this option is Off because the DFE controller is typically not required. However, short reflective links may benefit from this soft DFE controller IP.

VCCR/VCCT supply voltage for the transceiver

1_1V, 1_0V

Allows you to select the preferred voltage for the transceivers.
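De-emphasis is specified in decibels of amplitude: a bit that repeats the previous bit is driven at 10^(-dB/20) of the swing used for a transition bit. The following Python sketch evaluates this for the two Gen2 settings. It only illustrates the dB conversion; the function name is hypothetical.

```python
def deemphasis_amplitude_ratio(deemphasis_db: float) -> float:
    """Non-transition bit amplitude relative to a transition bit: 10^(-dB/20)."""
    return 10 ** (-deemphasis_db / 20)


for setting in (3.5, 6.0):
    ratio = deemphasis_amplitude_ratio(setting)
    print(f"{setting} dB -> {ratio:.3f} of the transition-bit swing")
```

The 3.5 dB setting drives repeated bits at about 0.668 of full swing, and the 6 dB setting at about 0.501. The stronger attenuation of low-frequency content at 6 dB is what compensates for the greater high-frequency loss of long PCB traces.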


4.7 Stratix 10 Example Designs

Table 22. Example Designs

Parameter Value Description

Available Example Designs

DMA, PIO

When you select the DMA option, the generated example design includes a direct memory access application. This application includes upstream and downstream transactions. When you select the PIO option, the generated design includes a target application including only downstream transactions.

Simulation On/Off When On, the generated output includes a simulation model.

Synthesis On/Off When On, the generated output includes a synthesis model.

Generated HDL format

Verilog Only Verilog HDL is supported.

Select Board None Stratix 10 development boards are not available at this time.


5 Designing with the IP Core

5.1 Generation

Use Qsys Pro to define and generate an Avalon-ST Stratix 10 Hard IP for PCI Express IP Core custom component.

Qsys Pro requires you to define a Quartus Prime project before customizing the PCIe IP core in Qsys Pro.

1. In the Quartus Prime Pro software, create a new project (File ➤ New Project Wizard).

2. Specify the Directory, Name, and Top-Level Entity.

3. For Project Type, accept the default value, Empty project.

4. For Add Files, click Next.

5. For Family, Device & Board Settings, under Family, select Stratix 10 (GX/SX) and the Target Device for your design. Refer to the Stratix 10 Product Table for information about package options.

6. Click Finish.

7. To define your Qsys Pro project, select Tools ➤ Qsys Pro.

8. For Qsys System or IP Variant, click + and specify a File name. Click Create. Click Create again.

Figure 26. Qsys Pro Create New System

9. In the IP Catalog, locate and select Avalon-ST Stratix 10 Hard IP for PCI Express.


The parameter editor appears.

10. On the IP Settings tabs, specify the parameters for your IP variation.

Refer to the Parameters chapter for an explanation of all parameters.

11. Click Finish to exit the parameter editor.

You can now generate a simulation testbench for your customized PCIe IP core or continue to build your system design.

Related Links

• Parameters on page 31. This chapter provides a reference for all the parameters of the Stratix 10 Hard IP for PCI Express IP core.

• Creating a System with Qsys

• Stratix 10 Product Table

5.2 Simulation

After you install the Quartus Prime Pro v17.1 Stratix 10 ES Editions software, you can find Quartus Prime Archive Files (.qar) in <install_dir>ip\altera\altera_pcie\altera_pcie_s10_ed\example_design\s10. This directory includes example designs for the following Endpoint configurations:

• Gen1, Gen2, and Gen3

• x1, x2, x4, x8, and x16

• Avalon-ST, Avalon-MM, and Avalon-MM with DMA

The simulation testbench supports only Verilog HDL and the following simulators:

• Mentor: ModelSim SE

• Synopsys: VCS

If you choose to simulate an example design from the Quartus Prime Pro installation,the testbench includes the following components:

• An Endpoint (DUT)

• A Root Port BFM

• Memory (MEM)

The examples provided with the Quartus Prime Pro – Stratix 10 Edition 17.1 Interim Release software installation are static. Consequently, you cannot modify the parameters and regenerate the example design.

Note: The Intel testbench and Root Port BFM provide a simple method to do basic testing of the Application Layer logic that interfaces to the variation. This BFM allows you to create and run simple task stimuli with configurable parameters to exercise basic functionality of the example design. The testbench and Root Port BFM are not intended to be a substitute for a full verification environment. Corner cases and certain traffic profile stimuli are not covered. To ensure the best verification coverage possible, Intel strongly suggests that you obtain commercially available PCI Express verification IP and tools, do your own extensive hardware testing, or both.


Refer to the Example Design for Avalon-ST Stratix 10 Hard IP for PCI Express chapter to create a simple custom example design using the parameters that you specify.

Related Links

Generating the Design

5.2.1 Selecting Serial or PIPE Simulation

The parameter serial_sim_hwtcl in <working_dir>/<variant>_example_design/pcie_example_design_tb/pcie_example_design_tb/altera_pcie_<dev>_tbed_<ver>/sim/altpcied_<dev>_hwtcl.sv determines the simulation mode. When it is 1'b1, the simulation is serial. When it is 1'b0, the simulation runs in the 32-bit parallel PIPE mode.
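If you switch between serial and PIPE simulation often, a small script can edit the parameter for you. The following Python sketch assumes the generated file assigns the parameter in the form `serial_sim_hwtcl = 1'b1` (or `1'b0`), possibly with different spacing; that textual form is an assumption, so inspect your generated `altpcied_<dev>_hwtcl.sv` first. The function names are hypothetical.

```python
import re
from pathlib import Path

# ASSUMPTION: the file assigns the parameter as "serial_sim_hwtcl = 1'b1" (or 1'b0).
SERIAL_SIM_RE = re.compile(r"(serial_sim_hwtcl\s*=\s*)1'b[01]")


def set_serial_sim(source: str, serial: bool) -> str:
    """Return source with serial_sim_hwtcl set to 1'b1 (serial) or 1'b0 (PIPE)."""
    value = "1'b1" if serial else "1'b0"
    # A function replacement avoids backreference ambiguity such as "\11".
    new_source, count = SERIAL_SIM_RE.subn(lambda m: m.group(1) + value, source)
    if count == 0:
        raise ValueError("serial_sim_hwtcl assignment not found")
    return new_source


def patch_hwtcl_file(path: Path, serial: bool) -> None:
    """Rewrite the .sv file in place (hypothetical helper; keep a backup)."""
    path.write_text(set_serial_sim(path.read_text(), serial))
```

Raising an error when no assignment matches makes it obvious if the generated file uses a different form than the one assumed here.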

5.2.2 Reducing Counter Values for Simulation

You can save simulation time by reducing the values of counters whose default values are set appropriately for hardware. The test_in[0] signal controls these values. Setting test_in[0] = 1'b reduces counter values for simulation. (By default, the generated simulation testbench does set test_in[0] = 1'b.)

5.3 Files Generated for Intel FPGA IP Cores and Qsys Pro Systems