Chapter 2

Information extraction and …. surroundings
M.T. Pazienza
Dept. of Computer Science, Systems and Management, University of Roma Tor Vergata, Italy.

Abstract

This chapter presents an overview of the motivation, technology and future trends of Information Extraction (IE) as they appear in the current scientific scenario.

Information Extraction generally refers to automatic approaches that locate important facts in large document collections, highlighting specific information to be used for enriching other texts and documents: populating summaries, feeding reports, filling in forms, or storing information for further processing (e.g. data mining). The extracted information is usually structured in the form of ‘templates’.

IE systems support end users by reducing the need to manually analyze large amounts of text in order to identify specific pieces of information.

The chapter is organized in several sections. After the Introduction, we describe the historical framework in which IE approaches found their motivation and the related systems were developed. Within this framework, we then describe the general architecture of IE systems, which has so far been considered valid for many kinds of applications.

A key problem for the wide deployment of IE systems is assessing their flexibility and ease of adaptation to new application scenarios. To address these drawbacks, adaptation and portability issues will be discussed. Finally, for exemplification purposes, a few IE systems developed in the context of European consortia will be presented.

1 Introduction

The explosion in the amount of textual material on the Internet, accessible to end users with different skills, has made generalized information extraction a central issue in natural language processing.

www.witpress.com, ISSN 1755-8336 (on-line) WIT Transactions on State of the Art in Science and Engineering, Vol 17, © 2005 WIT Press

doi:10.2495/978-1-85312-995-7/02

48 Text Mining and its Applications to Intelligence, CRM and Knowledge Management

Information Extraction (IE) refers to automatic approaches that locate important facts in large document collections, highlighting specific information to be used for enriching other texts and documents: populating summaries, feeding reports, filling in forms, or storing information for further processing (e.g. data mining). The extracted information is usually structured in the form of ‘templates’. A template is a kind of linguistic pattern (a set of attribute–value pairs whose values are text strings) defined by experts to represent the structure of a specific event in a given domain. The process of information extraction [19] may be described in two major steps, in which the system:

– extracts individual ‘facts’ from the text of a document through local text analysis

– integrates these facts producing larger facts or new facts (through inference)

After integration, the relevant facts can be represented in a specific output format. The template defines this final output format of the extracted information, while the scenario specifies the particular events or relations to be extracted.
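As a minimal sketch of the two steps, assuming a toy management-succession scenario, the Python code below uses regular expressions as a stand-in for local text analysis and then merges the facts about the same person into one template instance. The patterns, the slot names (`new_post`, `old_post`) and the `Template` class are illustrative assumptions; real systems rely on much richer linguistic analysis, and integration may also involve inference.

```python
import re
from dataclasses import dataclass, field

# Hypothetical local patterns for a management-succession scenario.
NAMED = re.compile(r"(?P<person>[A-Z]\w+ [A-Z]\w+) was named (?P<post>[\w ]+?)[,.]")
RESIGNED = re.compile(r"(?P<person>[A-Z]\w+ [A-Z]\w+) resigned as (?P<post>[\w ]+?)[,.]")


@dataclass
class Template:
    """A template instance: attribute-value pairs whose values are text strings."""
    person: str
    slots: dict = field(default_factory=dict)


def extract_local_facts(text):
    """Step 1: local text analysis, producing one (person, slot, value) fact per match."""
    facts = [(m["person"], "new_post", m["post"]) for m in NAMED.finditer(text)]
    facts += [(m["person"], "old_post", m["post"]) for m in RESIGNED.finditer(text)]
    return facts


def integrate(facts):
    """Step 2: merge the facts about the same person into a single, larger fact."""
    templates = {}
    for person, slot, value in facts:
        templates.setdefault(person, Template(person)).slots[slot] = value
    return list(templates.values())


text = ("Donald Wright was named executive vice president. "
        "Donald Wright resigned as president of Merrill Lynch Canada.")
for template in integrate(extract_local_facts(text)):
    print(template)
```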

Before looking more deeply into the information extraction framework, let us provide a short glossary of a few terms that are easily confused on a first reading. Understanding them also helps in grasping the overall framework of IE and its conceptual surroundings.

1.1 What is information retrieval (IR)?

Information retrieval (IR) is the task of selecting ‘relevant documents’ from a text corpus or collection in response to a user’s information need [35]. Ideally, users indicate what information they seek and the system retrieves documents about that topic. The quality of the results is evaluated in terms of recall and precision.
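A minimal sketch of the precision and recall measures, assuming set-based evaluation of a single query over hypothetical document identifiers. It also computes F1, the harmonic mean of the two, which is a common way of combining them but is not discussed in the text.

```python
def precision_recall_f1(retrieved, relevant):
    """Set-based evaluation of a retrieval run.

    precision = |retrieved & relevant| / |retrieved|
    recall    = |retrieved & relevant| / |relevant|
    F1        = harmonic mean of precision and recall
    """
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Four documents retrieved, three relevant ones in the collection, two of them found.
p, r, f = precision_recall_f1({"d1", "d2", "d3", "d4"}, {"d2", "d4", "d7"})
print(f"precision={p:.2f} recall={r:.2f} F1={f:.2f}")  # precision=0.50 recall=0.67 F1=0.57
```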

In practice, a few problems arise:

1 a user is rarely able to fully express his or her information requirements in a query;

2 a document author is unlikely to have used exactly the same terminology to express the same content (thus matching query terms against document terms becomes fuzzy);

3 as documents cover many topics and subtopics, a user may be interested in only a subset of a document’s content, and the query-document matching must account for this.

Hence, in the IR context too, terminology identification tends to be an important prerequisite.

While the central procedure in information retrieval is the matching operation between text and query, further tasks must be considered in the overall IR framework:

– categorization (assigning to each document in a collection one or more static, predefined categories drawn from a closed set).

– filtering (matching a stream of incoming documents against one or more user profiles, each reflecting a fixed information need of a user).

– routing (distributing incoming documents to groups or individuals based on their content).

– clustering (similar documents are grouped together for subsequent browsing or retrieval).
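Filtering and routing both compare incoming documents with stored descriptions of interest. The sketch below assumes bag-of-words profiles, cosine similarity over raw term counts and an arbitrary threshold of 0.2; the profile names and texts are hypothetical, and real systems use weighted terms and more careful tokenization.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Term-frequency vector of a text (lower-cased, whitespace tokenization)."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def route(document, profiles, threshold=0.2):
    """Return the names of the profiles the incoming document should be sent to."""
    doc = bag_of_words(document)
    return [name for name, profile in profiles.items()
            if cosine(doc, bag_of_words(profile)) >= threshold]


profiles = {"finance": "bank merger acquisition stock",
            "sport": "football match goal team"}
print(route("the bank announced a merger", profiles))  # ['finance']
```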

1.2 What is information extraction?

Information extraction aims to extract facts from document collections by means of natural language processing techniques [16]. In information extraction, the semantic range of the output is delimited in advance, as part of the specification of the task; the extracted values feed a previously defined template. The user query is linguistically analyzed at both the syntactic and the semantic level in order to capture its meaning. The same processing is applied to the document collection in order to recognize parts of texts with a similar meaning, independently of their surface lexical representation.

1.3 What is full text understanding?

Full text understanding aims to represent explicitly all the information contained in a text.

Text understanding systems are involved in further tasks such as knowledge extraction from text and synthesis, summarization and text filtering. They require additional language processing steps, including reference resolution, sense tagging and summarization, for template filling and for information extraction from retrieved documents.

Text understanding systems may be considered a sufficiently robust technology when trained for a narrowly defined domain. Machine learning and statistical approaches can reduce the effort involved in adapting a text understanding system to a new domain.

1.4 What is mining?

Generally, mining deals with inferring information, e.g. ‘trends’, from extracted data. It is used both for recognizing phenomena that are not explicit in the data and for searching for new relationships among objects represented within one or several documents. For example, defects in a product can be found by applying text mining to customers’ e-mails: if many of them complain about similar things concerning a specific product, we can infer that something must be wrong with it.
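A toy illustration of the e-mail example, assuming that a complaint is signalled by a small, hypothetical list of words and that a product becomes suspicious once it appears in at least two complaints. A real text mining application would work on extracted facts and on statistically sound trend detection rather than on a fixed count.

```python
import re
from collections import Counter

# Hypothetical complaint vocabulary; a real system would mine extracted facts instead.
COMPLAINT_WORDS = {"broken", "defective", "faulty", "stopped", "refund"}


def suspicious_products(emails, products, min_complaints=2):
    """Return the products mentioned in at least `min_complaints` complaining e-mails."""
    counts = Counter()
    for mail in emails:
        words = set(re.findall(r"\w+", mail.lower()))
        if words & COMPLAINT_WORDS:          # the e-mail is a complaint
            for product in products:
                if product.lower() in words:
                    counts[product] += 1
    return sorted(p for p, n in counts.items() if n >= min_complaints)


emails = ["My X200 is broken.",
          "The X200 stopped working, I want a refund.",
          "The Z9 is great.",
          "My Z9 arrived faulty."]
print(suspicious_products(emails, ["X200", "Z9"]))  # ['X200']
```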

Information extraction (IE) from texts is one of the most important applications of human language technology. Because of the complexity of natural language processing, the overall process is usually carried out after a few structuring activities on the input text, which make the extraction process more feasible. This process typically begins with lexical analysis (the assignment of parts of speech and features to words and idiomatic phrases through morphological analysis and dictionary lookup) and name recognition (the identification of names and other special lexical structures such as dates, currency expressions, etc.).

A further processing activity is syntactic analysis (either full or partial parsing), which identifies noun groups, verb groups and possibly head-complement structures. Only after these activities have been completed can the task-specific patterns related to the facts of interest be identified.

Since information extraction systems are based on linguistic knowledge, their portability to different application domains, which require new linguistic competences, is intrinsically limited. Moreover, the continuous growth of the textual documentation accessible through the web has moved the focus from processing free-text-only documents to more composite scenarios that include semi-structured and structured texts. Linguistically intensive approaches are difficult to implement, or unnecessary, in such cases, and traditional IE systems do not fit this framework: these documents are a mixture of grammatical and ungrammatical, sometimes telegraphic, text that includes meta-tags (e.g. HTML).

The research trend has therefore moved towards a convergence of natural language processing, Web information integration and machine learning approaches. The main goal is to produce IE algorithms and systems that can be rapidly adapted to new Web-based domains and scenarios. Important work [2, 32] has been done on ‘wrapper induction’, for the case in which the documents to be processed are highly regular, such as HTML text. Hybrid approaches have also been developed to achieve accurate extraction from both regular HTML and natural-language texts.
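The simplest idea behind wrapper induction can be sketched in the spirit of the LR (left-right) wrappers of the wrapper-induction literature: from a few labelled pages, learn the string that always precedes the target value and the string that always follows it, then use them as delimiters on new pages. This single-field version is only an illustration and not necessarily the method of [2, 32]; the pages and names are made up.

```python
import os


def common_suffix(strings):
    """Longest string that ends every element of `strings`."""
    return os.path.commonprefix([s[::-1] for s in strings])[::-1]


def learn_lr_wrapper(pages, values):
    """Learn a (left, right) delimiter pair from pages and the value labelled in each."""
    lefts, rights = [], []
    for page, value in zip(pages, values):
        start = page.index(value)               # first occurrence of the labelled value
        lefts.append(page[:start])
        rights.append(page[start + len(value):])
    return common_suffix(lefts), os.path.commonprefix(rights)


def apply_wrapper(page, left, right):
    """Extract every string enclosed between the learned delimiters."""
    if not left or not right:
        raise ValueError("the examples do not share non-empty delimiters")
    results, pos = [], 0
    while (start := page.find(left, pos)) >= 0:
        start += len(left)
        end = page.find(right, start)
        if end < 0:
            break
        results.append(page[start:end])
        pos = end + len(right)
    return results


left, right = learn_lr_wrapper(
    ["<p>Author: <b>Rossi</b></p>", "<h1>IE</h1><p>Author: <b>Smith</b></p>"],
    ["Rossi", "Smith"])
print(repr(left), repr(right))  # '<p>Author: <b>' '</b></p>'
print(apply_wrapper("<div><p>Author: <b>Jones</b></p></div>", left, right))  # ['Jones']
```

The learned delimiters are simply the longest shared context; an actual learner would also check that the right delimiter never occurs inside a value, and would handle several fields per page.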

1.5 Is there a difference between information extraction from web documents and traditional ones?

The main difference lies in the presence of document structure: traditional information extraction applies only to textual data, while on the web the document structure can be used for several purposes, from inferring the content (or document) type to extracting relevant information for template filling (consider, for example, a paper’s author name, which is located in a specific position in the document). Nevertheless, managing web documents involves a few specific problems:

• The structure of web documents changes at a high rate. It is impossible to build a universal classifier that is good for all document types, and equally impossible to find all kinds of objects within an arbitrary web document. Moreover, the encoding of the structure differs greatly from site to site.

• The World Wide Web contains billions of pages of information, but even modern search engines index only a fraction of them: the rest is never considered.

• The Web contains a large amount of information (pages), much of it useless (e.g. private pages of no interest to the community).

• Many pages are generated automatically by authoring tools rather than written by HTML experts. The output of such tools (the HTML source code) cannot easily be used by traditional IE algorithms, as these pages contain much more HTML markup than text content. Sometimes the text is even embedded within the HTML code.

• Very often, even in textual documents, information (e.g. dates, prices, discount rates) is stored in pictures, which linguistic processors cannot recognize.

• Last but not least, timeliness, up-to-dateness and age are problems of web documents that have to be dealt with:

o Even if a system is able to handle pictures within a document page, animated pictures, movies and other moving objects will still be a problem.

o Highly dynamic web documents may be temporary and result in a dead link if a system tries to access the page later on; in other cases, documents are under construction or updated more or less frequently, so the system gets different information from the newer page.

All these problems motivated a shift of the research focus towards adaptive IE, which combines natural language processing, web information integration and machine learning. The main goal is to identify IE approaches and systems that can be adapted to new domains and web-based scenarios.

As adaptive Web-based IE systems have proved feasible and successful from both a scientific and an application point of view, a variety of real-world applications have started to appear, ranging from Knowledge Management to e-business.

2 Information extraction historical flash-back

Information extraction originated in the natural language processing community with the Message Understanding Conferences (MUC). The MUC conferences started in 1987 and were sponsored by the United States Defense Advanced Research Projects Agency (DARPA). Their main objective was to evaluate the IE systems developed by different research groups for extracting information from restricted-domain, free-style texts. A domain was selected for each conference.

MUC-1 (1987). Basically exploratory; the document collection concerned Naval Tactical Operations. Each participating group designed its own format to record the extracted information.

MUC-2 (1989). Same domain as MUC-1; the organizers defined a task: template filling. A description of naval sightings and engagements, consisting of 10 slots (type of event, agent, time and place, effect, etc.), was provided to the participants. A template with the relevant information had to be filled for every event of each class.

MUC-3 (1991). Documents on Latin American terrorism. The template consisted of 18 slots (type of incident, date, location, perpetrator, target, instrument, etc.).

MUC-4 (1992). The same task as in MUC-3 was used, with the template modified to contain up to 24 slots. The evaluation criteria were improved to allow global comparisons among the competing systems.

MUC-5 (1993). In addition to English, documents in Japanese were also dealt with. Moreover, two different domains were proposed, Joint Ventures (JV) and Microelectronics (ME), consisting of financial news and news items on microelectronics products, respectively.

MUC-6 (1995). The Financial domain appeared for the first time. Three main goals were proposed.

a) To identify, among the component technologies being developed for IE, functions that would be largely domain independent. The Named Entity (NE) task was proposed to deal with names of persons, organizations, locations and dates, among others.

b) To focus on the portability of the IE task to different event classes. The Template Element (TE) task was proposed to standardize the lowest-level concepts (people, organizations, etc.), since they were involved in many different types of events. Like the NE task, this was also seen as a potential demonstration of the ability of systems to perform a useful, relatively domain-independent task with near-term extraction technology. The original MUC IE task, based on the description of a particular class of event, was called Scenario Template (ST).

c) To encourage participants to build up the mechanisms needed for deeper understanding. Three new tasks were proposed: coreference resolution, word sense disambiguation and predicate-argument syntactic structuring.

MUC-7 (1998). The Airline Crashes domain was proposed. The NE task was carried out in Chinese, Japanese and English. Moreover, a new task was evaluated, focusing on the extraction of relations between TEs (such as location-of, employee-of and product-of in the Financial domain). This new task was called Template Relation (TR).

While IE research in the United States was carried out in the context of the MUC conferences, the European Commission funded, under the LRE (Linguistic Research and Engineering) program, a number of projects devoted to developing tools and components for IE, not in direct competition but in a stimulating scientific context.

These projects mainly dealt with tasks such as automatically or semi-automatically acquiring and tuning lexicons through corpus processing, extracting entities, parsing flexibly and robustly, etc.

The LRE program took shape as a sort of European approach to IE which, in the absence of a specific application scenario, focused on developing new IE methodologies valid in a general framework. Since there were neither specific document collections nor target applications, European researchers were constrained neither to customize or optimize resources nor to optimize system development under the pressure of competition.

On the other hand, special attention was devoted to developing robust systems that extract from corpus processing:

– domain-related resources

– in a multilingual framework

– by defining performance evaluation metrics inherited from the software engineering context.

This research attitude would prove very appropriate in the forthcoming web scenario.

A further positive result of the LRE program worth stressing was the novelty, for Europe, of scientific cooperation among several research groups within the same European project. This produced several outcomes:

– sharing of competences

– unification of different approaches and methodologies

– system interoperability

– training of a high-level generation of European IE researchers.

Several important scientific results were obtained in these projects, among others AVENTINUS, FACILE, SPARKLE, NAMIC, CROSSMARC and MOSES (two of them are described in detail later in the chapter). They deal with the most relevant aspects of IE research; several of them combine information extraction and information retrieval methodologies in different approaches, also dealing with ‘tuning’ and automatic adaptation.

3 IE systems architecture

Several kinds of IE systems were developed in the framework of the MUC competitions; among them, a few commonalities emerged concerning the NLP tasks considered mandatory for any IE system.

This awareness led Hobbs [22] to propose a general architecture valid for any IE system: ‘An information extraction system is a cascade of transducers or modules that at each step add structure and often lose information, hopefully irrelevant, by applying rules that are acquired manually and/or automatically’.

Each system can be characterized by its own set of modules, drawn from the following: Text Zoner, Pre-processor, Filter, Pre-parser, Parser, Fragment Combiner, Semantic Interpreter, Lexical Disambiguation, Coreference Resolution or Discourse Processing, Template Generator. Most systems to date adopt this general architecture, although specific implementations customize the set of modules to the application needs.
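Hobbs's definition can be read as function composition. The sketch below, with hypothetical module names, builds a cascade in which every module receives the output of the previous one; two modules add structure (tokens, name candidates) and one discards information judged irrelevant downstream, mirroring the ‘add structure and often lose information’ wording.

```python
from typing import Any, Callable, Dict, List

Doc = Dict[str, Any]
Module = Callable[[Doc], Doc]


def cascade(modules: List[Module]) -> Module:
    """Compose modules so that each one receives the output of the previous one."""
    def run(doc: Doc) -> Doc:
        for module in modules:
            doc = module(doc)
        return doc
    return run


def tokenizer(doc: Doc) -> Doc:
    # adds structure: a list of tokens
    return {**doc, "tokens": doc["text"].split()}


def name_spotter(doc: Doc) -> Doc:
    # adds structure: capitalized tokens become name candidates (toy heuristic)
    return {**doc, "names": [t for t in doc["tokens"] if t[:1].isupper()]}


def forget_text(doc: Doc) -> Doc:
    # loses information, hopefully irrelevant: the raw text is not passed on
    return {k: v for k, v in doc.items() if k != "text"}


pipeline = cascade([tokenizer, name_spotter, forget_text])
print(pipeline({"text": "yesterday Donald Wright joined Burns Fry"}))
```

Each real module (zoner, pre-processor, filter, and so on) would slot into the same list, which is what makes the architecture easy to customize per application.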

3.1 Text zoning

This module turns a text into a set of text segments. At a minimum, it separates the formatted regions from the unformatted ones.
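For documents in the SGML-like format of the document shown in Fig. 3, zoning can be as simple as separating the header fields from the free-text body. The sketch assumes that the tag set (DOCNO, HL, DD, TXT) is known in advance and that these fields are not nested inside one another; real zoners must cope with much more varied layouts.

```python
import re

# Fields of the SGML-like format of Fig. 3 (assumed fixed tag set).
FIELD = re.compile(r"<(?P<tag>DOCNO|HL|DD|TXT)>(?P<body>.*?)</(?P=tag)>", re.DOTALL)
MARKUP = re.compile(r"</?[A-Za-z]+>")


def zone(document):
    """Map each zone name to its whitespace-normalized content.

    DOCNO, HL and DD are formatted zones; TXT is the unformatted free-text zone,
    from which paragraph mark-up such as <p> is stripped.
    """
    zones = {}
    for m in FIELD.finditer(document):
        body = m["body"]
        if m["tag"] == "TXT":
            body = MARKUP.sub(" ", body)
        zones[m["tag"]] = " ".join(body.split())
    return zones


doc = """<DOC>
<DOCNO> 56 </DOCNO>
<HL> Who's news: Burns Fry Ltd. </HL>
<DD> 04/13/94 </DD>
<TXT>
<p>
Donald Wright, 46 years old, was named executive vice president.
</p>
</TXT>
</DOC>"""
print(zone(doc))
```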

3.2 Pre-processor

This module locates sentence boundaries in the text and produces, for each sentence, a sequence of lexical items (words together with their possible parts of speech). It also recognizes multiword expressions (by lexical lookup methods). Finally, it recognizes and normalizes certain basic types that occur in the genre, such as dates, times, personal and company names, locations, currency amounts, and so on.
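Two of the pre-processor's duties, sentence splitting and normalization of basic types, can be sketched with regular expressions. The patterns are assumptions for illustration: the splitter is naive (it would break after abbreviations such as ‘Mr.’), two-digit years are assumed to belong to the 1900s, and part-of-speech assignment and multiword lookup are not shown.

```python
import re

# Naive splitter: ".", "!" or "?" followed by whitespace and a capital letter.
# It would wrongly split after abbreviations such as "Mr.", a classic pitfall.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+(?=[A-Z])")
DATE = re.compile(r"\b(\d{2})/(\d{2})/(\d{2})\b")            # mm/dd/yy, as in 04/13/94
MONEY = re.compile(r"\$(\d+(?:\.\d+)?)(?:\s+(million|billion))?")
SCALE = {None: 1, "million": 10**6, "billion": 10**9}


def split_sentences(text):
    return SENTENCE_END.split(text.strip())


def normalize(sentence):
    """Rewrite dates as yyyy-mm-dd (assuming 19xx years) and amounts as 'USD <integer>'."""
    sentence = DATE.sub(lambda m: f"19{m[3]}-{m[1]}-{m[2]}", sentence)
    return MONEY.sub(lambda m: f"USD {round(float(m[1]) * SCALE[m[2]])}", sentence)


for s in split_sentences("The deal closed on 04/13/94. It cost $2.5 million."):
    print(normalize(s))
```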

3.3 Filter

To speed up processing, this module uses superficial techniques to filter out, from the sentences recognized so far, those likely to be irrelevant, thus turning the text into a shorter one. In any application, subsequent modules look for patterns of words that signal relevant events; if a sentence contains none of these words, there is no reason to process it further.
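A minimal sketch of such a superficial filter, assuming a hypothetical list of trigger words for a management-succession scenario: a sentence survives only if at least one of its words is a trigger.

```python
# Hypothetical trigger words signalling succession events.
TRIGGERS = {"named", "appointed", "resigned", "succeed", "retired"}


def filter_sentences(sentences, triggers=TRIGGERS):
    """Keep only the sentences that contain at least one trigger word."""
    kept = []
    for sentence in sentences:
        words = {w.strip('.,;:"()').lower() for w in sentence.split()}
        if words & triggers:
            kept.append(sentence)
    return kept


sentences = ["Donald Wright was named executive vice president.",
             "The firm is based in Toronto.",
             "He will succeed Mark Kassirer."]
print(filter_sentences(sentences))
```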

3.4 Pre-parser

The pre-parsing module recognizes very common small-scale structures, thereby simplifying the task of the parser. At this level, some systems recognize noun groups (noun phrases up to and including the head noun) as well as verb groups (verbs together with their auxiliaries). Appositives can be attached to their head nouns with high reliability (e.g. Prime Minister, President of the Republic, etc.).
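The recognition of noun groups and verb groups can be sketched as a greedy scan over part-of-speech tags. The code assumes the input has already been tagged with Penn Treebank-style tags (DT, JJ, NN*, MD, VB*); actual pre-parsers typically use finite-state grammars with many more patterns, and appositive attachment is not shown.

```python
def pre_parse(tagged):
    """Greedy recognition of noun groups and verb groups in (word, tag) pairs.

    Noun group: optional DT, any number of JJ, then one or more NN* tags (up to the head noun).
    Verb group: optional MD followed by one or more VB* tags (auxiliaries plus main verb).
    Other tokens are passed through with label "O".
    """
    chunks, i, n = [], 0, len(tagged)
    while i < n:
        # try a noun group starting at i
        j = i + 1 if tagged[i][1] == "DT" else i
        while j < n and tagged[j][1] == "JJ":
            j += 1
        k = j
        while k < n and tagged[k][1].startswith("NN"):
            k += 1
        if k > j:
            chunks.append(("NG", [w for w, _ in tagged[i:k]]))
            i = k
            continue
        # try a verb group starting at i
        j = i + 1 if tagged[i][1] == "MD" else i
        k = j
        while k < n and tagged[k][1].startswith("VB"):
            k += 1
        if k > j:
            chunks.append(("VG", [w for w, _ in tagged[i:k]]))
            i = k
            continue
        chunks.append(("O", [tagged[i][0]]))
        i += 1
    return chunks


tagged = [("Donald", "NNP"), ("Wright", "NNP"), ("was", "VBD"), ("named", "VBN"),
          ("executive", "JJ"), ("vice", "NN"), ("president", "NN"), (".", ".")]
for label, words in pre_parse(tagged):
    print(label, " ".join(words))
```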

3.5 Parser

This module takes a sequence of lexical items (fragments) and tries to produce a parse tree for the entire sentence.

Recently, more and more IE systems have abandoned full-sentence parsing: since they are interested only in recognizing fragments, they just try to locate within the sentence the various patterns that are of interest for the application.

3.6 Fragment combination

Systems need an indication of how to combine the parse tree fragments obtained previously, for instance when no parse has been found for the whole sentence. This module may be applied to the parse tree fragments themselves.

3.7 Semantic interpretation

This module translates the parse tree or parse tree fragments into a semantic structure, a logical form or an event frame. Lexical disambiguation often takes place at this level as well.

The method for semantic interpretation is function application or an equivalent process that matches predicates with their arguments.

3.8 Lexical disambiguation

Lexical disambiguation translates a semantic structure containing general or ambiguous predicates into a semantic structure with specific, unambiguous predicates.

More generally, lexical disambiguation works by constraining the interpretation through the context in which the ambiguous word occurs, possibly together with the ‘a priori’ probability of each word sense.
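A minimal sketch of context-based disambiguation combined with prior probabilities, in the style of a simplified Lesk overlap. The sense inventory, its signature words and priors, and the choice of simply adding the overlap count to a weighted prior are all hypothetical.

```python
# Hypothetical sense inventory for "bank": (prior probability, signature words).
SENSES = {
    "bank/finance": (0.7, {"money", "loan", "account", "deposit", "shares"}),
    "bank/river": (0.3, {"river", "water", "shore", "fishing", "flood"}),
}


def disambiguate(context_words, senses=SENSES, prior_weight=1.0):
    """Choose the sense maximizing context overlap plus a weighted prior."""
    context = {w.lower() for w in context_words}

    def score(sense):
        prior, signature = senses[sense]
        return len(context & signature) + prior_weight * prior

    return max(senses, key=score)


print(disambiguate("they went fishing on the river bank".split()))  # bank/river
print(disambiguate("the bank is closed today".split()))             # bank/finance
```

With no informative context the prior decides; as soon as context words overlap a signature, they dominate, which is the interplay the text describes.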

3.9 Co-reference resolution, discourse processing

Discourse analysis, which links related semantic interpretations across sentences, is carried out by resolving different kinds of co-reference. For example, this module resolves co-reference for basic entities such as pronouns, definite noun phrases and other anaphora. It also resolves reference for more complex entities such as events: an event may be identified with a previously found event, may be a consequence of one, or may fill a role in a previous event.

Three main criteria are used in determining whether two entities can be merged.

First, semantic consistency, usually as specified by a hierarchy (such as WordNet); for pronouns, semantic consistency consists of agreement in number and gender, and perhaps in whatever properties can be determined from the pronoun’s context.

Second, there are several measures of compatibility between entities; for example, the merging of two events may be conditioned on the extent of overlap between their sets of known arguments, as well as on the compatibility of their types.

The third criterion is nearness, as determined by some metric (something more than simply the number of words between the items in the text). For example, when resolving pronouns, measuring nearness along a different path (e.g. along the syntactic structure rather than the word sequence) would probably favour the Subject over the Object of the previous sentence.
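The first and third criteria can be illustrated for pronouns with a small sketch: candidates must agree in number and gender, and among the survivors the nearest sentence wins, with subjects preferred over other roles. The `Mention` fields, the pronoun table and the distance measured in sentences are simplifying assumptions; the second criterion (compatibility between events) is not modelled.

```python
from dataclasses import dataclass


@dataclass
class Mention:
    text: str
    sentence: int      # index of the sentence containing the mention
    number: str        # "sg" or "pl"
    gender: str        # "m", "f" or "n"
    is_subject: bool


# Number and gender of a few English pronouns (None: gender unconstrained).
PRONOUNS = {"he": ("sg", "m"), "she": ("sg", "f"), "it": ("sg", "n"), "they": ("pl", None)}


def resolve_pronoun(pronoun, sentence, candidates):
    """Pick an antecedent for `pronoun` found in sentence number `sentence`."""
    number, gender = PRONOUNS[pronoun.lower()]
    # criterion 1, semantic consistency: agreement in number and gender
    compatible = [c for c in candidates
                  if c.sentence <= sentence and c.number == number
                  and (gender is None or c.gender == gender)]
    if not compatible:
        return None
    # criterion 3, nearness: closest sentence first, then subjects before other roles
    return min(compatible, key=lambda c: (sentence - c.sentence, not c.is_subject))


mentions = [Mention("Donald Wright", 0, "sg", "m", True),
            Mention("Mark Kassirer", 0, "sg", "m", False),
            Mention("Burns Fry", 0, "sg", "n", False)]
print(resolve_pronoun("He", 1, mentions).text)   # Donald Wright
print(resolve_pronoun("it", 1, mentions).text)   # Burns Fry
```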

3.10 Template generation

The semantic structures generated by the natural language processing modules are used to produce the template, as specified by the end user, but only for events that pass the defined threshold of interest (for more details see http://www.itl.nist.gov/iaui/894.02/related_projects/tipster/gen_ie.htm).
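Template generation can be sketched as a final filter-and-map step, assuming that each event already carries a numeric interest score computed upstream and that unfilled slots are marked with a placeholder (`-`); both the score and the placeholder are assumptions, not MUC conventions.

```python
def generate_templates(events, slots, threshold=0.5, empty="-"):
    """Produce one template per event whose interest score reaches `threshold`.

    Each event is a dict of role fillers plus a "score" assumed to be computed
    upstream; `slots` is the list of template slots specified by the end user.
    """
    return [{slot: event.get(slot, empty) for slot in slots}
            for event in events if event.get("score", 0.0) >= threshold]


events = [{"score": 0.9, "person": "Donald Wright", "post": "executive vice president"},
          {"score": 0.2, "person": "A Merrill Lynch spokeswoman"}]
print(generate_templates(events, ["person", "post", "organization"]))
```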

4 Features of an IE system

The general agreement on architecture was the first step; it was followed by a convergence on a number of features required for any IE system.

Named Entity Recognition. This refers to the identification (inside the text) and extraction of named entities (NEs). NEs generally relate to domain concepts and are associated with semantic classes such as person, organization, place, date, amount, etc.

The accuracy of NE recognition is very high (more than 90%) and comparable with that of humans. As an example, Fig. 1 shows the result of NE recognition on a sentence, as carried out in the context of the MUC competitions.

IE systems take advantage of available lexical resources and general-purpose (domain-independent) tools for the pre-processing step; NE recognition modules are of particular interest among them. NE recognition can be performed quite straightforwardly using finite-state transducers and dictionary lookup (domain-specific dictionaries, terminological databases, etc.). Nevertheless, the results depend heavily on the information sources involved.
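The combination of dictionary lookup and finite-state patterns can be sketched with Python regular expressions, which stand in here for finite-state transducers. The gazetteer entries and patterns are hypothetical and far from complete, and overlapping matches are simply all reported.

```python
import re

# Hypothetical gazetteer (dictionary lookup), including multiword entries.
GAZETTEER = {
    "Merrill Lynch Canada Inc.": "ORGANIZATION",
    "Burns Fry Ltd.": "ORGANIZATION",
    "Toronto": "LOCATION",
}
# Finite-state patterns (regular expressions) for dates, money and titled persons.
PATTERNS = [
    ("DATE", re.compile(r"\b\d{2}/\d{2}/\d{2}\b")),
    ("MONEY", re.compile(r"\$\d+(?:\.\d+)?(?: (?:million|billion))?")),
    ("PERSON", re.compile(r"\b(?:Mr|Mrs|Ms)\. [A-Z][a-z]+\b")),
]


def recognize_entities(text):
    """Return (start, end, type, string) tuples sorted by position in the text."""
    found = []
    for entry, label in GAZETTEER.items():
        # (?<!\w) and (?!\w) stop a dictionary entry from matching inside a longer word
        for m in re.finditer(rf"(?<!\w){re.escape(entry)}(?!\w)", text, re.IGNORECASE):
            found.append((m.start(), m.end(), label, m.group()))
    for label, pattern in PATTERNS:
        for m in pattern.finditer(text):
            found.append((m.start(), m.end(), label, m.group()))
    return sorted(found)


sentence = "Mr. Wright resigned as president of Merrill Lynch Canada Inc. on 04/13/94."
for start, end, label, string in recognize_entities(sentence):
    print(label, string)
```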

Co-reference resolution. This identifies identity relations between previously extracted NEs. Anaphora resolution is widely used to recognize relevant information about concepts (NEs) or events scattered across the text: this activity is an important source of information, enabling the system to assign a statistical relevance to recognized events.

Template Element production. As a result of the previous activities, an IE system becomes aware of NEs and their descriptions. This represents a first level of template, called TE (Template Element). The collection of TEs may be considered a basic knowledge base that the system accesses to obtain information on the main domain concepts, as they have been recognized in the text.

Figure 1: NE recognition in a test sentence.

TEMPLATE SNOWBOARD TABLES
Models: [models]
Producer: [producer_name]
Length: [number]
Cost: [number]
Release date: [date]

TEMPLATE COMPANY ACQUISITION
Acquiring company: [company#ne#]
Acquired company: [company#ne#]
Date of acquisition: [date]
Cost: [currency#ne#]

Figure 2: A few template elements.

Scenario Template production. This results from the synthesis of several tasks, mainly the identification of Template Elements that are related to one another: together they represent an event (scenario) in the domain under analysis, and the recognized values are used to fill in a scenario template.

Fig. 3 shows a document (as detailed in [16]) and the related template elements used to fill in the scenario template.

a) DOCUMENT:

<DOC>

<DOCNO> 56 </DOCNO>

<HL> Who’s news: Burns Fry Ltd. </HL>

<DD> 04/13/94 </DD>

<TXT>

<p>

BURNS FRY Ltd. (Toronto) -- Donald Wright, 46 years old, was named executive vice president and director of fixed income at this brokerage firm. Mr. Wright resigned as president of Merrill Lynch Canada Inc., a unit of Merrill Lynch & Co., to succeed Mark Kassirer, 48, who left Burns Fry last month. A Merrill Lynch spokeswoman said it hasn’t named a successor to Mr. Wright, who is expected to begin his new position by the end of the month.

</p>

</TXT>

</DOC>

b) TEMPLATES ST:

<TEMPLATE> := DOC_NR: “ 56 “

CONTENT: <SUCCESSION EVENT 56-1>

<SUCCESSION EVENT 56-1>:=

SUCCESSION_ORG: <ORGANIZATION 56-1>

POST: “executive vice president ”

IN_AND_OUT: <IN_AND_OUT 56-1>

<IN_AND_OUT 56-1>

VACANCY REASON: OTH_UNK

<IN_AND_OUT 56-1>:=

IO_PERSON: <PERSON 56-2>

NEW STATUS: OUT

ON_THE_JOB: NO

<IN_AND_OUT 56-1>:=

IO_PERSON: <PERSON 56-1>

NEW STATUS: IN

ON_THE_JOB: NO

OTHER ORG: <ORGANIZATION 56-2>

REL_OTHER_ORG: OUTSIDE_ORG

c) TEMPLATES TE:

<ORGANIZATION 56-1>:=

ORG_NAME: “Burns Fry Ltd.”

ORG_ALIAS: “Burns Fry”

ORG_DESCRIPTION: “this brokerage firm”

ORG_TYPE: COMPANY

ORG_LOCALE: Toronto CITY

ORG_COUNTRY: Canada

<ORGANIZATION 56-2>:=

ORG_NAME: “Merrill Lynch Canada Inc.”

ORG_ALIAS: “Merrill Lynch”

ORG_DESCRIPTION: “a unit of Merrill Lynch & Co.”

ORG_TYPE: COMPANY

<PERSON 56-1>:=

PER_NAME: “Donald Wright”

PER_ALIAS: “Wright”

PER_TITLE: “Mr.”

<PERSON 56-2>:=

PER_NAME: “Mark Kassirer”

Figure 3: A document and related template element.

4.1 The parsing role

At the beginning of the MUC conferences, traditional NL understanding architectures were adopted for IE technology. They were based on full parsing, followed by a semantic interpretation of the resulting in-depth syntactic structure and by a discourse analysis. Since MUC-3, simpler approaches have been adopted. The approach of Lehnert’s research group [26], named selective concept extraction, was based on the assumption that only the concepts within the extraction scenario need to be detected in the documents. Syntactic and semantic analysis could therefore be simplified by means of a more restricted, deterministic and collaborative process. As a consequence, the traditional parsing, interpretation and discourse modules were replaced, respectively, by a cascade of a simple phrasal parser to find local phrases, an event pattern matcher and a template merging procedure. This simplification of the understanding process was successful and has been widely adopted within the IE community. In general, this is due to several drawbacks of full parsing [18]:

– Full parsing involves a large and relatively unconstrained search space, and is consequently expensive.

– Full parsing is not a robust process, as a global parse tree is not always achieved. To overcome this problem, the parse covering the largest substring of the sentence is retained; sometimes, however, this global goal leads to incorrect local choices.

– Full parsing may produce multiple ambiguous results: more than one syntactic interpretation is usually provided, and the most correct interpretation must then be selected.

– Broad-coverage grammars, needed for full parsing, are difficult to tune consistently. When dealing with new domains, new specific syntactic expressions may occur in texts and go unrecognized by broad-coverage grammars, while adding new grammar rules may make the final grammar inconsistent.

– A full parsing system cannot manage out-of-vocabulary situations.

Nowadays, most existing IE systems are based on partial parsing, which generates non-overlapping parse fragments, i.e. phrasal constituents. Generally, constituents are found by applying a cascade of one or more parsing steps to fragments. The resulting constituents are tagged as noun, verb or prepositional phrases, etc. Sometimes these components are represented as chunks of words, and the parsing process is then called chunking [7].

Once constituents have been parsed, systems may resolve domain-specific dependencies among them by using the semantic restrictions imposed by the extraction scenario. Two different approaches may be adopted to resolve such dependencies: pattern matching and grammatical relations.

www.witpress.com, ISSN 1755-8336 (on-line) WIT Transactions on State of the Art in Science and Engineering, Vol 17, © 2005 WIT Press

62 Text Mining and its Applications to Intelligence, CRM and Knowledge Management

Pattern matching. Information extraction patterns (or IE rules) may be considered as domain relations among specific concepts, i.e. typical concepts of the domain and named entity classes, which by definition hold the special status of domain concepts. IE rules are used to identify both modifier and argument dependencies between constituents. In fact, such IE rules are sets of ambiguity resolution decisions to be applied during the parsing process; they can be seen as sets of syntactic-semantic expectations derived from the different extraction tasks. On the one hand, some IE rules make it possible to identify properties of entities and relations between such entities (TE and TR extraction tasks). On the other hand, IE rules using predicate-argument relations (object, subject, modifiers) make it possible to identify events among entities (ST extraction task). The representation of these IE rules differs greatly across IE systems. IE patterns are generally defined by hand, although attempts have been made to automate this process. In [7], a terminological perspective on the acquisition of IE patterns is described, based on a novel algorithm for estimating the domain relevance of the relations among domain concepts.
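To make the idea of IE rules concrete, here is a minimal sketch assuming chunks that already carry a syntactic type, a semantic class and, for verb groups, a head lemma. The rule format, the class `COMPANY` and the `ACQUISITION` event are hypothetical; real systems encode rules very differently. The rule is a flat subject-verb-object expectation: it fires only when consecutive chunks satisfy all of its constraints, and the chunks marked with a `slot` fill the event template.

```python
from dataclasses import dataclass
from typing import Dict, List

@dataclass
class Chunk:
    kind: str        # syntactic type: NP, VP, PP, ...
    text: str
    sem: str = ""    # semantic class (NE class or domain concept), "" if none
    lemma: str = ""  # head lemma, used for verb groups

# Hypothetical rule: COMPANY acquire COMPANY -> ACQUISITION(buyer, target)
RULES = [
    ("ACQUISITION", [
        {"kind": "NP", "sem": "COMPANY", "slot": "buyer"},
        {"kind": "VP", "lemma": "acquire"},
        {"kind": "NP", "sem": "COMPANY", "slot": "target"},
    ]),
]

def satisfies(constraint: Dict[str, str], chunk: Chunk) -> bool:
    # Every constraint key except "slot" must equal the chunk attribute.
    return all(getattr(chunk, key) == value
               for key, value in constraint.items() if key != "slot")

def apply_rules(chunks: List[Chunk]) -> List[Dict[str, str]]:
    events = []
    for event_type, elements in RULES:
        for i in range(len(chunks) - len(elements) + 1):
            window = chunks[i:i + len(elements)]
            if all(satisfies(c, ch) for c, ch in zip(elements, window)):
                event = {"type": event_type}
                for c, ch in zip(elements, window):
                    if "slot" in c:
                        event[c["slot"]] = ch.text
                events.append(event)
    return events

chunks = [Chunk("NP", "Acme Corp.", sem="COMPANY"),
          Chunk("VP", "has acquired", lemma="acquire"),
          Chunk("NP", "a small software firm", sem="COMPANY"),
          Chunk("PP", "in 2001")]
print(apply_rules(chunks))
# [{'type': 'ACQUISITION', 'buyer': 'Acme Corp.', 'target': 'a small software firm'}]
```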

Grammatical relations. Instead of using IE rules, a more flexible syntactic model is proposed in [39], in line with that of [12]. It consists of defining a set of grammatical relations among entities, such as general relations (subject, object and modifier), a few specialized modifier relations (temporal and location) and relations for arguments that are mediated by prepositional phrases, among others. In an approach similar to dependency grammars, a graph is built by following general interpretation rules for the grammatical relations: previously detected chunks are the nodes of the graph, while the relations among them are labelled arcs.
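The grammatical-relation approach can be sketched as follows under strong simplifying assumptions: each chunk is attached to the nearest verb group, noun phrases become subjects or objects according to their position, and prepositional phrases become temporal or location modifiers when their semantic class says so, or otherwise arguments mediated by the preposition. These heuristics are hypothetical and are not the interpretation rules of [39] or [12]; the output is the list of labelled arcs of the graph.

```python
from typing import List, Tuple

Chunk = Tuple[str, str, str]  # (kind, text, semantic class or "")

def build_graph(chunks: List[Chunk]) -> List[Tuple[str, str, str]]:
    """Return labelled arcs as (head text, relation, dependent text)."""
    verbs = [i for i, (kind, _, _) in enumerate(chunks) if kind == "VP"]
    if not verbs:
        return []
    arcs = []
    for i, (kind, text, sem) in enumerate(chunks):
        if kind == "VP":
            continue
        head = min(verbs, key=lambda j: abs(j - i))  # nearest verb group
        if kind == "NP":
            rel = "subj" if i < head else "obj"
        elif kind == "PP" and sem == "DATE":
            rel = "mod-temporal"
        elif kind == "PP" and sem == "LOC":
            rel = "mod-location"
        elif kind == "PP":
            rel = "arg-" + text.split()[0].lower()  # argument mediated by the preposition
        else:
            rel = "mod"
        arcs.append((chunks[head][1], rel, text))
    return arcs

chunks = [("NP", "Acme", ""), ("VP", "opened", ""), ("NP", "a new plant", ""),
          ("PP", "in Turin", "LOC"), ("PP", "in March", "DATE"),
          ("PP", "with a partner", "")]
for arc in build_graph(chunks):
    print(arc)
```

Unlike a flat pattern, the graph does not require the related chunks to be adjacent, which is where the extra flexibility comes from.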

4.2 The scenario’s role

A well-structured IE system needs an architecture customized both to the specific application domain and to the types of information templates to be extracted, i.e. to the so-called scenario.

This dependency on the scenario clearly emerges in the specific approaches used in the parsing, semantic interpretation and discourse processing modules: each of them must identify and extract from the text both the entities related to a specific event (Named Entity Recognition) and the events related to the specific scenario (Scenario Template Production).

Named Entity Recognition is carried out during the parsing phase by means of domain-specific extraction rules, while Event Recognition is performed by the semantic interpreter. The discourse interpreter is responsible for organizing the collected information (both entities and events) into a knowledge base that supports template production.

To overcome the limits that the scenario's role places on further customization, it is now generally agreed to identify all kinds of events of interest (as they appear in the corpus) independently of a specific template: 'an event is considered to be interesting in case it is relevant in the corpus collection'. The scenario still plays a role, however, since from the outset it determines the composition of the corpus; its influence thus moves from the processing modules (the architecture) to the text collection.

With such an approach, the resulting overall architecture appears to be more general and applicable to further contexts. The knowledge base is modelled on the corpus, thus supporting text processing methodologies based only on linguistic (syntactic and semantic) knowledge.

The process of knowledge modelling may be sketched as follows. Given the corpus (as a model of the knowledge domain under investigation), the activities to be carried out for building the domain ontology are:

• The definition of the named entity classes
• A corpus analysis for the acquisition of both the most important concepts and the relations among the concepts
• The analysis of the extracted domain knowledge for the definition of the top template
• The extraction of all the important concepts and relations among the concepts, and their clustering under the defined template types

For all activities except the first, terminology extraction techniques can be very useful thanks to the notion of domain relevance. This key notion helps show the ontology builder only the most relevant IE patterns (combinations of domain concepts and domain relations). Sorted by domain relevance, these patterns can drive the definition of the top 'template types' that will represent the knowledge of the IE system.
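The ranking step can be illustrated with a minimal sketch. The chapter does not give the relevance formula here, so this assumes a simple contrastive score: how much more frequent a candidate pattern is in the domain corpus than in a general (contrastive) corpus, with add-one smoothing for patterns never seen in the latter. It is a stand-in for, not a reproduction of, the algorithm of [7]; the patterns and counts are invented for illustration.

```python
from collections import Counter
from typing import List, Tuple

Pattern = Tuple[str, str, str]  # (concept, relation, concept)

def rank_by_relevance(domain: Counter, contrast: Counter,
                      alpha: float = 1.0) -> List[Tuple[Pattern, float]]:
    """Score = relative frequency in the domain corpus divided by the
    smoothed relative frequency in the contrastive corpus."""
    n_dom = sum(domain.values())
    n_con = sum(contrast.values())
    vocab = len(set(domain) | set(contrast))
    scores = {}
    for pattern, count in domain.items():
        p_dom = count / n_dom
        p_con = (contrast[pattern] + alpha) / (n_con + alpha * vocab)
        scores[pattern] = p_dom / p_con
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

domain = Counter({("COMPANY", "acquire", "COMPANY"): 40,
                  ("PERSON", "say", "STATEMENT"): 30,
                  ("COMPANY", "hire", "PERSON"): 10})
contrast = Counter({("PERSON", "say", "STATEMENT"): 300,
                    ("COMPANY", "hire", "PERSON"): 5})
for pattern, score in rank_by_relevance(domain, contrast):
    print(pattern, round(score, 2))
```

Although "say" is frequent in the domain corpus, it is even more frequent in general text, so it ends up last; the acquisition pattern, absent from the contrastive corpus, ranks first and would be a natural candidate for a top template type.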

5 Adaptive IE systems

Nowadays, a large part of knowledge is stored in unstructured textual form. Consider, for example, that several large companies have millions of documents, possibly stored in different parts of the world but available via intranets, in which the knowledge of their employees is recorded. Textual documents cannot be queried in the traditional fashion, so the stored knowledge can neither be used by automatic systems nor be easily managed by humans. As a result, knowledge is difficult to capture, share and reuse among employees, which reduces the company's efficiency and competitiveness. Moreover, at a time when companies are increasingly valued for their 'intangible assets' (i.e. the knowledge they own), unmanageable knowledge implies a loss in terms of company value.

IE is well suited to supporting knowledge identification and extraction from Web documents, as it can, for example, support document analysis either automatically (unsupervised extraction of information) or semi-automatically (e.g. by helping human annotators locate relevant facts in documents via information highlighting). The first difficulty in using IE for such purposes is adapting IE systems to new scenarios and tasks; a machine-learning approach may help here.

A further challenge for IE in the near future is to be applicable in the web scenario, where further, different kinds of problems exist. First, the way information is encoded within a web page is not uniform: consider, for example, university timetables, which all look different. Especially in the e-commerce scenario, people create fancy pages with a picture here and a blinking object there. Another problem is that a variety of document formats exists on the web: ASCII text, HTML, XML, PDF, …

5.1 Machine-learning for information extraction

The difficulty of exploiting IE technology is partly due to the difficulty of adapting IE systems to new domains, new text styles and new languages. To address these portability problems, the use of machine learning (ML) techniques has increased, mainly owing to their successful application to some lower-level NLP tasks. Interesting examples of such approaches are [2, 8, 9, 34] for NE recognition, [10] for chunking, and [1, 11, 28, 29] for co-reference and anaphora resolution. Detailed surveys on the use of ML techniques for NLP tasks can also be found in [27, 30, 41].

Most of the research effort in this area has been devoted to applying symbolic inductive learning methods to acquire domain-dependent knowledge that is useful for extraction tasks, and most approaches focus on acquiring IE rules from a set of training documents. Since a concept can be represented as a template (e.g. a template element, a template relation or a scenario template in MUC terms), these rules can be classified as either single-slot or multi-slot rules. A single-slot rule extracts document fragments related to one slot of a template, whilst a multi-slot rule extracts tuples of document fragments related to the set of slots of a template. The representation of extraction rules depends heavily on the style of the documents from which the rules are learned: in general, the less structured the documents, the greater the variety of linguistic constraints within the rules. Surveys of the different kinds of rules used for IE can be found in [17, 31].
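The difference between single-slot and multi-slot rules can be seen on a toy page listing two laptops. The regular expressions below are hypothetical hand-written rules (not learned ones), used only to show the output shapes: single-slot rules return independent lists of fillers, so the association between a model and its price is lost, whereas a multi-slot rule returns one tuple per template instance.

```python
import re

page = ("Model: Aspire 1350 | Price: 999 EUR\n"
        "Model: Satellite A10 | Price: 1249 EUR")

# Single-slot rules: one rule per slot, each extracting fillers independently.
SINGLE_SLOT = {
    "model": re.compile(r"Model:\s*([^|]+?)\s*\|"),
    "price": re.compile(r"Price:\s*(\d+)"),
}

# Multi-slot rule: one rule extracting the whole (model, price) tuple.
MULTI_SLOT = re.compile(r"Model:\s*(?P<model>[^|]+?)\s*\|\s*Price:\s*(?P<price>\d+)")

def extract_single(text):
    return {slot: rule.findall(text) for slot, rule in SINGLE_SLOT.items()}

def extract_multi(text):
    return [m.groupdict() for m in MULTI_SLOT.finditer(text)]

print(extract_single(page))
# {'model': ['Aspire 1350', 'Satellite A10'], 'price': ['999', '1249']}
print(extract_multi(page))
# [{'model': 'Aspire 1350', 'price': '999'}, {'model': 'Satellite A10', 'price': '1249'}]
```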

Since the MUC competitions, typical IE rule learners have focused on learning rules from free text to deal with event-extraction tasks. With some exceptions, these rules extract document fragments containing the slot-filler values, and a post-processing step is needed to trim the exact values from the fragments. However, the large number of documents available online has recently increased the interest in algorithms that can automatically process and mine these documents by extracting the exact relevant values.

For Information Extraction, approaches dealing specifically with web pages have centred on the notion of wrapper induction [14, 24, 25, 32, 37], and have generally used machine-learning techniques to identify semantic content directly from features related to strings and to the HTML structures in which they occur.
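A much simplified sketch in the spirit of Kushmerick's LR wrappers [24, 25]: from labelled example pages, the left delimiter is learned as the longest string that ends every text preceding a labelled value, and the right delimiter as the longest string that begins every text following one; extraction then scans the page for left/right delimiter pairs. This assumes a single slot and omits the validity checks and richer wrapper classes of the original work; the pages are invented.

```python
import os
from typing import List, Tuple

def common_suffix(strings: List[str]) -> str:
    # Longest common suffix = reversed longest common prefix of reversed strings.
    return os.path.commonprefix([s[::-1] for s in strings])[::-1]

def learn_lr(examples: List[Tuple[str, List[str]]]) -> Tuple[str, str]:
    """examples: (page, labelled values in page order) pairs for one slot."""
    lefts, rights = [], []
    for page, values in examples:
        pos = 0
        for value in values:
            start = page.index(value, pos)
            end = start + len(value)
            lefts.append(page[:start])   # text preceding the value
            rights.append(page[end:])    # text following the value
            pos = end
    left, right = common_suffix(lefts), os.path.commonprefix(rights)
    if not left or not right:
        raise ValueError("no common delimiters found")
    return left, right

def extract(page: str, left: str, right: str) -> List[str]:
    values, pos = [], 0
    while True:
        i = page.find(left, pos)
        if i == -1:
            break
        start = i + len(left)
        j = page.find(right, start)
        if j == -1:
            break
        values.append(page[start:j])
        pos = j + len(right)
    return values

training_pages = [
    ("<ul><li><b>Acme</b> 10</li><li><b>Beta</b> 20</li></ul>", ["Acme", "Beta"]),
    ("<ul><li><b>Zeta</b> 5</li></ul>", ["Zeta"]),
]
left, right = learn_lr(training_pages)
print(repr(left), repr(right))  # '><li><b>' '</b> '
print(extract("<ul><li><b>Gamma</b> 30</li><li><b>Delta</b> 40</li></ul>", left, right))
# ['Gamma', 'Delta']
```

The learned delimiters are pure HTML context, which is why such wrappers work well on uniformly structured sites and break when the layout changes.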

6 IE systems: a few European applications

To conclude this chapter, two IE systems are described to highlight the needs and the role of the application domain in system development. Each of them reflects the general structure of an IE system while being highly original in either its methodological approach or its technological deployment.

6.1 NAMIC

The NAMIC system (News Agencies Multilingual Information Categorisation) has been developed within a European project in the Human Language Technologies area. NAMIC is based on knowledge-intensive, large-scale Information Extraction. Its general architecture capitalizes on robust Information Extraction methods [13] and on large-scale multilingual resources (e.g. EuroWordNet) [40]. NAMIC aims both at extracting relevant facts from the news streams of large European news agencies and newspaper producers and at providing hypertextual structures within each (monolingual) stream, ultimately producing cross-lingual links among the streams. NAMIC can be used mainly as an automatic authoring system.

Automatic authoring is the activity of processing news items in streams, detecting and extracting relevant information from them and organising the texts accordingly in a non-linear fashion. While IE systems are oriented towards specific phenomena in restricted domains (for example joint ventures), the scope of automatic authoring is wider. In automatic authoring, the hypertextual structure has to provide navigation guidelines; the final user is only responsible for following links after evaluating the relevance of the system's suggestions.

6.1.1 Large-scale IE for automatic authoring

Information Extraction is a very good approach to Automatic Authoring for a number of reasons.

The key components of an IE system are events and objects: the kind of components that trigger hyperlinks in an authoring system.

Co-reference is a significant part of Information Extraction and indeed a necessary component of authoring. Named Entities (people, places, organizations, etc.) play an important part in authoring and, again, are firmly addressed by Information Extraction systems.

6.1.2 The role of a world model as a method for event matching and co-referencing

The world model is an ontological representation of events and objects for a particular domain or set of domains. It is made up of a set of event and object types, with attributes. Event types characterize a set of events in a particular domain and are usually expressed in a text by verbs. Object types, on the other hand, are best thought of as characterizing a set of people, places or things, and are usually expressed in a text by nouns (both proper and common). When the world model is used as part of an Information Extraction system, the instances of each type are added to it. Once the instances have been added, a procedure links those instances that refer to the same thing, thereby achieving co-reference resolution.

In NAMIC, the world model is created using the XI cross-classification hierarchy [15]. The definition of an XI cross-classification hierarchy is referred to as the ontology; the ontology, together with the attributes associated with its nodes, constitutes the world model. Processing a text populates this initially bare world model with the new instances and relations recognized in the text, converting it into a discourse model specific to that text.
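The population and co-reference steps can be sketched with a toy world model, assuming a single-inheritance type hierarchy (XI itself supports cross-classification) and a hypothetical merge criterion: a new instance is merged with the most recent earlier instance whose type lies on the same branch of the hierarchy and whose shared attributes do not conflict. The class names and type labels are illustrative only.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional

@dataclass
class Instance:
    ident: str
    type: str
    attrs: Dict[str, str] = field(default_factory=dict)

class WorldModel:
    def __init__(self, parent: Dict[str, Optional[str]]):
        self.parent = parent                 # type -> supertype (None at the root)
        self.instances: List[Instance] = []  # the discourse model being built

    def is_a(self, t: Optional[str], ancestor: str) -> bool:
        while t is not None:
            if t == ancestor:
                return True
            t = self.parent.get(t)
        return False

    def compatible(self, a: Instance, b: Instance) -> bool:
        same_branch = self.is_a(a.type, b.type) or self.is_a(b.type, a.type)
        shared = a.attrs.keys() & b.attrs.keys()
        return same_branch and all(a.attrs[k] == b.attrs[k] for k in shared)

    def add(self, new: Instance) -> Instance:
        """Add an instance, merging it with an earlier co-referent one if any."""
        for old in reversed(self.instances):
            if self.compatible(old, new):
                if self.is_a(new.type, old.type):
                    old.type = new.type      # keep the more specific type
                old.attrs.update(new.attrs)
                return old
        self.instances.append(new)
        return new

wm = WorldModel({"company": "organization", "organization": "object",
                 "person": "object", "object": None})
wm.add(Instance("e1", "company", {"name": "Fiat"}))
wm.add(Instance("e2", "person", {"name": "Agnelli"}))
wm.add(Instance("e3", "company", {"name": "Renault"}))
print(wm.add(Instance("e4", "organization")).ident)               # "the firm" -> e3
print(wm.add(Instance("e5", "company", {"name": "Fiat"})).ident)  # -> e1
print(len(wm.instances))                                          # 3
```

A bare mention such as "the firm" (type organization, no name) is resolved to the most recent compatible company, while a second mention of Fiat skips Renault because the name attributes clash.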

6.1.3 Named entity matcher

The Named Entity Matcher [23] finds named entities through a secondary parsing phase that uses a named entity grammar and a set of gazetteer lists. Its inputs are the parsed text from the first parsing phase, the named entity grammar, which contains rules for finding a predefined set of named entities, and a set of gazetteer lists containing proper nouns.

The Named Entity Matcher returns the text with the Named Entities marked. The Named Entities in NAMIC are persons, organizations, locations and dates.

The Named Entity grammar contains rules for co-referring abbreviations, as well as different ways of expressing the same named entity, such as Dr. Smith, John Smith and Mr. Smith occurring in the same article.
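A toy version of this behaviour, assuming regular expressions in place of NAMIC's named entity grammar and tiny hypothetical gazetteer and first-name lists: a title or a known first name followed by a capitalized word marks a person, gazetteer entries mark locations and organizations, and person mentions sharing a surname are grouped into one co-reference chain. Surname matching is a deliberate simplification; it would wrongly merge two different people with the same surname.

```python
import re
from collections import defaultdict

GAZETTEER = {"Rome": "location", "Reuters": "organization"}  # hypothetical lists
FIRST_NAMES = ["John", "Mary"]

TITLE_RULE = re.compile(r"\b(?:Dr|Mr|Mrs|Ms)\.\s+([A-Z][a-z]+)\b")
NAME_RULE = re.compile(r"\b(?:" + "|".join(FIRST_NAMES) + r")\s+([A-Z][a-z]+)\b")

def find_entities(text):
    """Return (offset, mention, class, key) tuples sorted by position."""
    found = []
    for rule in (TITLE_RULE, NAME_RULE):
        for m in rule.finditer(text):
            found.append((m.start(), m.group(0), "person", m.group(1)))
    for name, cls in GAZETTEER.items():
        for m in re.finditer(r"\b" + re.escape(name) + r"\b", text):
            found.append((m.start(), m.group(0), cls, name))
    return sorted(found)

def coreference_chains(entities):
    # Group mentions by (class, key); for persons the key is the surname.
    chains = defaultdict(list)
    for _, mention, cls, key in entities:
        chains[(cls, key)].append(mention)
    return dict(chains)

text = "Dr. Smith arrived in Rome. John Smith told Reuters that Mr. Smith would stay."
print(coreference_chains(find_entities(text)))
```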

6.1.4 Discourse processor

The Discourse Processor module translates the semantic representation produced by the parser into a representation of instances, their ontological classes and their attributes, in the XI knowledge representation language [15].

The semantic representation produced by the parser for a single sentence is processed by adding its instances, together with their attributes, to the discourse model built so far for the text.

6.1.5 Ontological and lexical information

As NAMIC deals with large-scale coverage of news in several languages, EuroWordNet [40] has been adopted as a common semantic formalism to support:

– lexical semantic inferences (e.g. generalization, disambiguation);
– broad coverage (e.g. lexical and semantic); and
– a common interlingua platform for linking events from different documents.

The NAMIC ontology consists of 40 predefined object classes and 46 attribute types related to Named Entity objects, and of nearly 1000 objects relating to EuroWordNet base concepts.

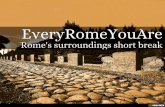

6.1.6 The NAMIC architecture

The complexity of the overall NAMIC system required the adoption of a distributed computing paradigm in its design. The system is a distributed object-oriented system in which services (like text processing or multilingual authoring) are provided by independent components and asynchronous communication is allowed. Independent news streams are provided for the different languages (English, Spanish and Italian).

Language-specific processors (LPs) are thus responsible for text processing and event matching on the independent text units of each stream. LPs compile an objective representation (see fig. 4) for each source text, including the detected morphosyntactic information, the categorization according to news standards (IPTC classes) and a description of the relevant events. All later authoring activity is based on this canonical representation of the news. In particular, a monolingual process is carried out within each stream by the three monolingual Authoring Engines (English AE, Spanish AE and Italian AE). A second phase is foreseen to take into account links across streams, i.e. multilingual hyperlinking, by means of a Multilingual Authoring Engine (M-AE). Figure 4 represents the overall flow of information.

The Language Processors are composed of a morphosyntactic component (Eng, Ita and Spa MS) and an event-matching component (EM). The lexical interfaces (ELI, SLI and ItLI) to the unified Domain model are also used for event matching. The linguistic processors are in charge of producing the objective representation of incoming news. During MS analysis, this task is performed by two main subprocessors:

– a modular and lexicalised shallow morphosyntactic parser [4], which provides named entity matching and extracts dependency graphs from source sentences. Ambiguity is controlled by part-of-speech tagging and by domain verb subcategorization frames that guide the dependency recognition phase. Named entity recognition is based on proper name catalogues and specific grammars.

– a statistical linear text classifier based upon some of the derived linguistic features [3] (lemmas, POS tags and proper nouns).

The results are then passed to the event matcher, which derives the objective representation by means of the discourse interpreter [23].

The communication interfaces between the MS and EM components, as well as between the AEs and the M-AE processors, are specified via XML DTDs. (The NAMIC project has been funded by the European Union, grant number IST-1999-12392.)

Figure 4: NAMIC architecture. [Diagram components: English, Italian and Spanish MS; English, Italian and Spanish EM (language processors); World Model; XML Objective Representation; Hyperlinking Engine; Multilingual Hypernews Engine; NAMIC monitor.]

6.2 CROSSMARC

The CROSSMARC project (CROSSlingual Multi-Agent Retail Comparison) applies state-of-the-art language engineering tools and techniques to achieve commercial-strength technology for extracting information from web pages for product comparison, making extensive use of domain ontologies.

The advent of e-commerce has given birth to a large number of e-retail stores, and many commercial systems have been developed to help Internet shoppers decide what to buy and where to buy it. Most of these systems, however, assume that product names and features are expressed in a uniform and monolingual (English) manner. Furthermore, they typically provide information only from web sites that belong to their client base, and present information derived from databases rather than extracted from web pages. The CROSSMARC prototype system handles two domains: laptop computers, and job advertisements found on the web sites of computer-related companies. The language of job advertisements tends to consist of well-formed discursive text, which requires more extensive NLP processing than the terse, typically tabular, descriptions on laptop web pages.

The CROSSMARC project builds upon and makes significant advances in domain-specific web crawling, domain-specific spidering of web sites, multi-lingual named-entity recognition, cross-lingual name matching, cross-lingual fact extraction, machine learning, and user modelling and localization. The overall structure of the CROSSMARC system is shown in fig. 5. The Information Extraction component of the CROSSMARC system pays particular attention to XML representations and technology and focuses on the use of ontologies.

6.2.1 The CROSSMARC IE component

The CROSSMARC Information Extraction component is divided into two tasks: first Named Entity Recognition and Classification (NERC), and then Template Filling (or Fact Extraction, FE), where relations between Named Entities are established. The need to extract product information from web pages placed emphasis on the system's ability to add new domains very rapidly. To meet this need for rapid portability, machine learning techniques have been adopted wherever possible, and an ontology has been developed for each domain, both to support the IE task and to mediate name matching among different languages.

6.2.2 Cross-lingual named entity recognition and classification

The CROSSMARC multilingual NERC component has a simple architecture whereby individual monolingual NERC modules sit side by side and pages are routed according to their language. The input to NERC is a web page converted to XHTML using a tool such as Tidy (http://www.w3.org/People/Raggett/tidy/). The output is the same page with additional XML mark-up indicating the named entities that have been recognized.

Figure 5: CROSSMARC architecture. [Diagram titled "CROSSMARC big picture" (© NCSR, Frascati, July 18-19, 2002); components: Web focused crawling; domain-specific Web sites; domain-specific spidering; Web pages collection; domain ontology; XHTML pages; multilingual NERC and name matching; XHTML pages with NE annotations; multilingual and multimedia fact extraction (NERC-FE); XML pages; insertion into the products database; user interface; end user.]

The system currently contains four NERC modules, for English, French, Greek and Italian; other languages could easily be added. The four language modules use different tools and techniques. The Greek module uses the Ellogon text-engineering platform to add Tipster-style annotations to the XHTML document, which can then be converted to XML. The English module is exclusively XML-based, and its annotation process involves incremental transduction of the XHTML page using a variety of XML-based tools from the LT TTT and LT XML toolsets. The Italian module is implemented as a sequence of processing steps driven by XSLT transformations over the XHTML input structure, using an XSLT processor with a number of language processing modules plugged in as extensions. The French module is based on the Lingway XTIRP Annotation tool. Named entity recognition is based mainly on regular expressions and context.
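As a rough illustration of recognition based on regular expressions and context that produces XML mark-up, the sketch below annotates a plain-text product description. The element names `NE` and `NUMEX` follow the entity types mentioned for CROSSMARC, but the `TYPE` values, the rules, and the lookbehind on the label `Model: ` used as context are assumptions; the real modules work on XHTML pages with the language-specific toolsets described above.

```python
import re
from xml.sax.saxutils import escape

# Hypothetical rules: (element, TYPE value, pattern). The MODEL rule uses the
# preceding label "Model: " as context via a fixed-width lookbehind.
RULES = [
    ("NE", "MODEL", re.compile(r"(?<=Model: )[A-Z][a-z]+(?: \d+)?")),
    ("NUMEX", "SPEED", re.compile(r"\b\d+(?:\.\d+)? ?[GM]Hz\b")),
    ("NUMEX", "MONEY", re.compile(r"\b\d+(?:\.\d{2})? ?EUR\b")),
]

def annotate(text: str) -> str:
    # Collect all matches first, then insert mark-up left to right.
    spans = sorted((m.start(), m.end(), elem, typ)
                   for elem, typ, rx in RULES for m in rx.finditer(text))
    out, pos = [], 0
    for start, end, elem, typ in spans:
        if start < pos:  # overlaps an entity already marked: skip it
            continue
        out.append(escape(text[pos:start]))
        out.append(f'<{elem} TYPE="{typ}">{escape(text[start:end])}</{elem}>')
        pos = end
    out.append(escape(text[pos:]))
    return "".join(out)

print(annotate("Model: Aspire 1350, 1.6 GHz, 999 EUR"))
```

Collecting spans before writing any mark-up keeps later rules from matching inside tags inserted by earlier ones, and `escape` keeps the untouched text well-formed.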

The NERC part of the CROSSMARC system is described in some detail in [21].

6.2.3 CROSSMARC fact extraction

N. Kushmerick [24] first introduced the technique of wrapper induction for Information Extraction from HTML sources. The technique works extremely well for highly structured document collections as long as the structure is similar across all documents; however, it is less successful for more heterogeneous collections, where structure-based clues do not hold for all documents [36]. In CROSSMARC, three distinct machine-learning-based Fact Extraction (FE) modules have been implemented, each of which has been applied to all four languages. Two of the FE modules re-implement well-established wrapper induction techniques: the STALKER system [32] and Soderland's WHISK approach [37]. The third module treats FE as a classification task and uses a Naive Bayes implementation to perform the classification. The FE modules add extra attributes to the NE, NUMEX and TIMEX entities recognised by the NERC modules to indicate which fact slot of a product description each entity fills. Additionally, before the extracted facts are stored in the product database, they are normalized against the ontology for user presentation purposes. After normalization, the final processing stage converts the extracted information into a common XML representation according to the FE schema, which is used by the Data Inserter module to feed the product database.
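The classification view of fact extraction can be sketched with a small multinomial Naive Bayes classifier that assigns a recognized entity to a fact slot from simple features. The feature names (entity type, preceding word, unit) and the slot labels are hypothetical, and this is not the CROSSMARC implementation; it only illustrates the technique, with add-one (Laplace) smoothing so that an unseen feature does not rule a slot out entirely.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, Iterable, List, Tuple

class NaiveBayesSlots:
    def __init__(self, alpha: float = 1.0):
        self.alpha = alpha
        self.slot_counts: Counter = Counter()
        self.feat_counts: Dict[str, Counter] = defaultdict(Counter)
        self.vocab: set = set()

    def fit(self, examples: Iterable[Tuple[List[str], str]]) -> "NaiveBayesSlots":
        for feats, slot in examples:
            self.slot_counts[slot] += 1
            self.feat_counts[slot].update(feats)
            self.vocab.update(feats)
        return self

    def predict(self, feats: List[str]) -> str:
        total = sum(self.slot_counts.values())
        best, best_lp = "", -math.inf
        for slot, n in self.slot_counts.items():
            denom = sum(self.feat_counts[slot].values()) + self.alpha * len(self.vocab)
            lp = math.log(n / total)  # log prior of the slot
            for f in feats:           # Laplace-smoothed log likelihoods
                lp += math.log((self.feat_counts[slot][f] + self.alpha) / denom)
            if lp > best_lp:
                best, best_lp = slot, lp
        return best

training = [
    (["ent=NUMEX", "left=processor", "unit=GHz"], "processor_speed"),
    (["ent=NUMEX", "left=price", "unit=EUR"], "price"),
    (["ent=NUMEX", "left=ram", "unit=MB"], "ram_size"),
    (["ent=NUMEX", "left=cost", "unit=EUR"], "price"),
]
model = NaiveBayesSlots().fit(training)
print(model.predict(["ent=NUMEX", "left=only", "unit=EUR"]))
```

The unit feature carries the decision here even though the preceding word ("only") was never seen in training.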

6.2.4 CROSSMARC fact definition

The CROSSMARC system has been developed in the context of product comparison, and the aim of presenting relevant information to online shoppers is the determining factor in deciding what information should be extracted from web pages within a particular domain. Once the facts for a domain have been established, they are encoded in an XML Schema representing the information that the monolingual FE modules will output. The purpose of the FE Schema is twofold: it fills the gap between the ontology description and the extracted data, and it can be used to check data values for consistency with the ontology (for example, to check that a processor speed is in the expected range). The FE modules output XML documents containing one or more product descriptions. Product description elements have several attributes related to both the ontology and the normalization of the information they cover.
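The range check mentioned above (a processor speed within the expected range) can be sketched in Python rather than in XML Schema, which is what CROSSMARC actually uses. The element names, the assumption that every fact is a numeric child element of `product`, and the ranges themselves are all hypothetical.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List, Tuple

# Hypothetical expected ranges, as a domain ontology might define them.
RANGES: Dict[str, Tuple[float, float]] = {
    "processor_speed": (0.1, 10.0),   # GHz
    "price": (100.0, 10000.0),        # EUR
}

def check_products(xml_text: str) -> List[str]:
    problems = []
    root = ET.fromstring(xml_text)
    for i, product in enumerate(root.iter("product")):
        for fact in product:
            if fact.tag not in RANGES:
                problems.append(f"product {i}: {fact.tag} not in the ontology")
                continue
            value = float(fact.text)
            lo, hi = RANGES[fact.tag]
            if not lo <= value <= hi:
                problems.append(f"product {i}: {fact.tag}={value} outside [{lo}, {hi}]")
    return problems

fe_output = ("<products>"
             "<product><processor_speed>1.6</processor_speed><price>999</price></product>"
             "<product><processor_speed>1600</processor_speed><price>1249</price></product>"
             "</products>")
print(check_products(fe_output))
# ['product 1: processor_speed=1600.0 outside [0.1, 10.0]']
```

A value such as 1600 is typical of a speed extracted in MHz but not normalized to the unit the ontology expects, which is exactly the kind of inconsistency such a check is meant to catch.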

6.2.5 CROSSMARC ontology

The CROSSMARC ontology aims to provide a shared domain model for the purpose of extracting structured descriptions of retail products. It constitutes a formal description of the concepts that are relevant in the specific domain. Once a set of appropriate features has been determined for a domain, the semantic content is encoded as concepts in an ontology. The domain ontology constrains the concepts to be used in describing a specific product, and it can also be seen as the definition of the template to be filled by the IE component. The CROSSMARC ontology has been designed to be flexible enough to be applied to different domains without changing its overall structure; for this reason, we planned an ontology architecture with the following layers:

(i) Template Model Layer; (ii) Domain Model Layer; (iii) Instance Layer; (iv) Lexical Layer.

The Template Model describes CROSSMARC ontologies in general. It acts as a meta-conceptual layer and defines a common structure for all specific ontologies. The Domain Model layer is composed of the concepts that populate the specific domain of interest, and contains the different features, the attributes of features, and the values that describe the domain. The Instance layer consists of the different individuals present in the domain of interest at the time the ontology is considered. The lexicons make up the lexical layer of the CROSSMARC ontology.

A lexicon for each language is set up according to an XML Schema that specifies its valid structure, allowing new domains to be added without changing the processing or data models. The lexical layer provides access to the most common surface realizations of ontology concepts. Domain lexicons are created for each language, and they list the possible instantiations of ontology concepts in that language as synonyms. (The CROSSMARC project has been funded by the European Union, grant number IST-2000-25366.)
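A minimal sketch of how per-language lexicons can normalize surface forms to ontology concepts, which is also what allows names to be matched across languages. The concept identifiers and synonym lists are hypothetical, and Python dictionaries stand in for the XML lexicons.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical per-language lexicons: concept id -> synonyms (surface forms).
LEXICONS: Dict[str, Dict[str, List[str]]] = {
    "en": {"OS-WINDOWS-XP": ["Windows XP", "WinXP"],
           "MANUF-HP": ["Hewlett-Packard", "HP"]},
    "it": {"OS-WINDOWS-XP": ["Windows XP"],
           "MANUF-HP": ["Hewlett Packard", "HP"]},
}

def build_index(lexicons: Dict[str, Dict[str, List[str]]]) -> Dict[Tuple[str, str], str]:
    """Invert the lexicons into a (language, case-folded surface form) -> concept map."""
    index = {}
    for lang, concepts in lexicons.items():
        for concept, synonyms in concepts.items():
            for surface in synonyms:
                index[(lang, surface.casefold())] = concept
    return index

INDEX = build_index(LEXICONS)

def normalize(surface: str, lang: str) -> Optional[str]:
    """Map a surface form in a given language to an ontology concept, if known."""
    return INDEX.get((lang, surface.strip().casefold()))

print(normalize("winxp", "en"), normalize("Hewlett Packard", "it"), normalize("Linux", "en"))
# OS-WINDOWS-XP MANUF-HP None
```

Two pages in different languages that mention "Hewlett-Packard" and "Hewlett Packard" thus end up pointing at the same concept, and adding a new domain only means adding new lexicon entries.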

References

[1] Aone, C. & Bennett, W., Evaluating Automated and Manual Acquisition of Anaphora Resolution Strategies. In E. Riloff, S. Wermter & G. Scheler, eds., LNAI series 1040, Springer-Verlag, 1996.

[2] Baluja, S., Mittal, V. & Sukthankar, R., Applying Machine Learning for High Performance Named-Entity Extraction. In Proceedings of the International Conference of the Pacific Association for Computational Linguistics (PACLING), 1999.

[3] Basili, R., Moschitti, A. & Pazienza, M.T., Language Sensitive Text Classification. In Proceedings of the 6th RIAO Conference (RIAO 2000), Content-Based Multimedia Information Access, Collège de France, France, 2000.

[4] Basili, R., Pazienza, M.T. & Zanzotto, F.M., Customizable modular lexicalized parsing. In Proceedings of the 6th International Workshop on Parsing Technology, IWPT2000, Trento, Italy, 2000.

[5] Basili, R., Moschitti, A., Pazienza, M.T. & Zanzotto, F.M., A contrastive approach to term extraction. In Proceedings of the 4th Terminology and Artificial Intelligence Conference (TIA2001), Nancy (France), May 2001.

[6] Basili, R. & Zanzotto, F.M., Parsing Engineering and Empirical Robustness. In Journal of Natural Language Engineering 8/2-3, June 2002.

[7] Basili, R., Pazienza, M.T. & Zanzotto, F.M., Learning IE patterns: a terminology extraction perspective. In Proceedings of the Workshop of Event Modeling for Multilingual Document Linking at LREC 2002, Canary Islands (Spain), May 2002.

[8] Borthwick, A., A Maximum Entropy Approach to Named Entity Recognition. PhD Thesis, Computer Science Department, New York University, 1999.

[9] Borthwick, A., Sterling, J., Agichtein, E. & Grishman, R., Exploiting Diverse Knowledge Sources via Maximum Entropy in Named Entity Recognition. In Proceedings of the 6th ACL Workshop on Very Large Corpora, 1998.

[10] Cardie, C., Daelemans, W., Nédellec, C. & Tjong Kim Sang, E., editors. Proceedings of the 4th Conference on Computational Natural Language Learning, 2000.

[11] Cardie, C. & Wagstaff, K., Noun Phrase Co-reference as Clustering. In Proceedings of the Joint SIGDAT Conference on Empirical Methods in Natural Language Processing and Very Large Corpora (EMNLP-VLC), 1999.

[12] Carroll, J., Briscoe, T. & Sanfilippo, A., Parser Evaluation: A Survey and a New Proposal. In Proceedings of the 1st International Conference on Language Resources and Evaluation (LREC), Granada (Spain), 1998.

[13] Cunningham, H., Gaizauskas, R., Humphreys, K. & Wilks, Y., Experience with a language engineering architecture: 3 years of GATE. In Proceedings of the AISB’99 Workshop on Reference Architecture and Data Standards for NLP, Edinburgh (UK), 1999.

[14] Freitag, D. & Kushmerick, N., Boosted Wrapper Induction. In Proceedings of the 17th National Conference on Artificial Intelligence (AAAI-2000), 2000.

[15] Gaizauskas, R. & Humphreys, K., XI: a simple prolog-based language for cross-classification and inheritance. In Proceedings of the 6th International Conference on Artificial Intelligence: Methodologies, Systems, Applications (AIMSA 96), 1996.

[16] Gaizauskas, R. & Wilks, Y., Information Extraction: beyond document retrieval. In Journal of Documentation, 54(1): 70-105, 1998.

[17] Glickman, B. & Jones, R., Examining Machine Learning for Adaptable End-to-End Information Extraction Systems. In Proceedings of the AAAI Workshop on Machine Learning for Information Extraction, 1999.

[18] Grishman. R., Where is the syntax? In Proceedings of the 6th Message Understanding Conference (MUC-6), 1995.

[19] Grishman, R., Information Extraction: Techniques and challenges. In M.T. Pazienza ed. Information Extraction, a multidisciplinary approach to an emerging information technology, Springer-Verlag LNAI series1299, 1997.

[20] Grover, C., Matheson, C., Mikheev, A. & Moens, M., LT TTT: a flexible tokenisation tool. In Proceedings of the 2nd International Conference on Language Resources and Evaluation (LREC 2000), 2000.

[21] Grover, C., McDonald, S., Nic Gerailt, D., Karkaletsis, V., Farmakiotou, D., Samaritakis, G., Petasis, G., Pazienza, M.T., Vindigni, M., Vichot, F. & Wolinski, F., Multilingual XML-Based Named Entity Recognition for e-retail Domains. In Proceedings of the 3rd International Conference on Language Resources and Evaluation (LREC 2002), Canary Islands (Spain), May 2002.

[22] Hobbs, J.R., The generic Information Extraction system. In Proceedings of the 5th Message Understanding Conference (MUC-5), Morgan Kaufmann, 1993.

www.witpress.com, ISSN 1755-8336 (on-line) WIT Transactions on State of the Art in Science and Engineering, Vol 17, © 2005 WIT Press

Text Mining and its Applications to Intelligence, CRM and Knowledge Management 73

[23] Humphreys, K., Gaizauskas, R., Azzam, S., Huyck, C., Mitchell, B., Cunningham, H. & Wilks, Y., Description of the LASIE-II system as used for MUC-7. In Proceedings of the 7th Message Understanding Conference (MUC-7), Morgan Kaufmann, 1998, http://www.saic.com

[24] Kushmerick, N., Wrapper Induction for Information Extraction, PhD thesis, University of Washington, 1997.

[25] Kushmerick, N., Weld, D. & Doorenbos, R., Wrapper Induction for Information Extraction. In Proceedings of the 15th International Joint Conference on Artificial Intelligence (IJCAI), 1997.

[26] Lehnert, W., Cardie, C., Fisher, D., McCarthy, J., Riloff, E. & Soderland, S., Description of the CIRCUS System as used for MUC-4. In Proceedings of the 4th Message Understanding Conference (MUC-4), 1992.

[27] Manning, C. & Schütze, H., Foundations of Statistical Natural Language Processing, MIT Press, 1999.

[28] McCarthy, J.F. & Lehnert, W.G., Using Decision Trees for Co-reference Resolution. In Proceedings of the 14th International Joint Conference on Artificial Intelligence (IJCAI), 1995.

[29] Mitkov, R., Robust Pronoun Resolution with Limited Knowledge. In Proceedings of the Joint 17th International Conference on Computational Linguistics and 36th Annual Meeting of the Association for Computational Linguistics (COLING-ACL), 1998.

[30] Mooney, R.J. & Cardie, C., Symbolic Machine Learning for Natural Language Processing. Tutorial in Workshop on Machine Learning for Information Extraction, AAAI, 1999.

[31] Muslea, I., Extraction Patterns for Information Extraction Tasks: A Survey. In Proceedings of the AAAI Workshop on Machine Learning for Information Extraction, 1999.

[32] Muslea, I., Minton, S. & Knoblock, C., STALKER: Learning extraction rules for semi-structured, web-based information sources. In Proceedings of the AAAI-98 Workshop on AI and Information Integration, Madison, Wisconsin, 1998.

[33] Pazienza, M.T. & Vindigni, M., Identification and classification of Italian complex proper names. In Proceedings of ACIDCA2000 International Conference, 2000.

[34] Sekine, S., Grishman, R. & Shinnou, H., A Decision Tree Method for Finding and Classifying Names in Japanese Texts. In Proceedings of the SIG NL/SI of Information Processing Society of Japan, 1998.

[35] Smeaton, A.F., Information retrieval: still butting heads with Natural Language Processing? In M.T. Pazienza ed. Information Extraction, a multidisciplinary approach to an emerging information technology, Springer-Verlag LNAI series 1299, 1997.

[36] Soderland, S., Learning to extract text-based information from the world wide web. In Proceedings of the 3rd International Conference on Knowledge Discovery and Data Mining (KDD-97), 1997.

[37] Soderland, S., Learning Information Extraction Rules for Semi-Structured and Free Text. Machine Learning, 1999.


[38] Thompson, H.S., Tobin, R., McKelvie, D. & Brew, C., LT XML Software API and toolkit for XML processing. http://www.ltg.ed.ac.uk/software/

[39] Vilain, M., Inferential Information Extraction. In M.T. Pazienza ed. Information Extraction: Towards Scalable, Adaptable Systems, LNAI series 1714, Springer Verlag, Berlin, 1999.

[40] Vossen, P., EuroWordNet: A Multilingual Database with Lexical Semantic Networks. Kluwer Academic Publishers, Dordrecht, 1998.

[41] Young, S. & Bloothooft, G., editors. Corpus-Based Methods in Language and Speech Processing, Kluwer Academic Publishers, 1997.
