· IMAGE FORMATION, PROCESSING AND FEATURES • Image formation – Light, cameras, optics and...


Summary of UNIK4690

12.04.2018

Lectures 2018

IMAGE FORMATION, PROCESSING AND FEATURES

• Image formation
– Light, cameras, optics and color
– Pose in 2D and 3D
– Basic projective geometry
– The perspective camera model

• Image processing
– Image filtering
– Image pyramids
– Laplace blending

• Feature detection
– Line features
– Local keypoint features
– Robust estimation with RANSAC

• Feature matching
– From keypoints to feature correspondences
– Feature descriptors
– Feature matching
– Estimating homographies from feature correspondences

WORLD GEOMETRY AND 3D

• Single-view geometry
– Camera calibration
– Pose from known 3D points
– Scene geometry from a single view

• Stereo imaging
– Basic epipolar geometry
– Stereo imaging
– Stereo processing

• Two-view geometry
– Epipolar geometry
– Triangulation
– Pose from epipolar geometry

• Multiple-view geometry
– Multiple-view geometry
– Structure from motion
– Multiple-view stereo

SCENE ANALYSIS

• Image analysis
– Image segmentation
– Image feature extraction
– Introduction to machine learning

• Object detection
– Descriptor-based detection
– Introduction to deep learning


Image formation Light, cameras, optics and color

6

Image formation: • Illumination • Cameras • Optics • Image Capture • Color Sensing.

Image capture

7

Light source

CMOS image sensor (CMOSIS 48Mp)

Scene Imaging system (camera)

Shutter

Shutter: Mechanical / electronic Global / rolling

Detector

Image formation Pose in 2D and 3D

8

• Pose – General properties

• Representing pose in 2D

– Homogeneous transformations

• Representing pose in 3D – Homogeneous transformations – Other alternatives

• Representing rotation in 3D

– Rotation matrix – Euler angles – Angle-axis – Unit quaternion

Pose – Representation in 2D

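• The pose of $B$ relative to $A$ can be represented by a homogeneous transformation $T^{A}_{B} \in SE(2)$

$$\xi^{A}_{B} \;\mapsto\; T^{A}_{B} = \begin{bmatrix} R^{A}_{B} & \mathbf{t}^{A}_{B} \\ \mathbf{0}^{T} & 1 \end{bmatrix} = \begin{bmatrix} \cos\theta & -\sin\theta & t^{A}_{Bx} \\ \sin\theta & \cos\theta & t^{A}_{By} \\ 0 & 0 & 1 \end{bmatrix}$$

• Properties

$$\mathbf{p}^{A} = \xi^{A}_{B} \cdot \mathbf{p}^{B} \;\mapsto\; \tilde{\mathbf{p}}^{A} = T^{A}_{B}\,\tilde{\mathbf{p}}^{B}$$

$$\xi^{A}_{C} = \xi^{A}_{B} \oplus \xi^{B}_{C} \;\mapsto\; T^{A}_{C} = T^{A}_{B}\,T^{B}_{C}$$

$$\ominus\,\xi^{A}_{B} \;\mapsto\; \left(T^{A}_{B}\right)^{-1}$$

• Points are represented in homogeneous coordinates

$$\tilde{\mathbf{p}}^{A} = T^{A}_{B}\,\tilde{\mathbf{p}}^{B}: \quad \begin{bmatrix} x^{A} \\ y^{A} \\ 1 \end{bmatrix} = \begin{bmatrix} R^{A}_{B} & \mathbf{t}^{A}_{B} \\ \mathbf{0}^{T} & 1 \end{bmatrix} \begin{bmatrix} x^{B} \\ y^{B} \\ 1 \end{bmatrix}$$

[Figure: frames $A$ (axes $x_A$, $y_A$) and $B$ (axes $x_B$, $y_B$) related by the pose $\xi^{A}_{B}$, with a point $P$ described by $\mathbf{p}^{A}$ and $\mathbf{p}^{B}$]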
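The 2D pose properties can be tried out numerically. The following is a minimal NumPy sketch, not part of the original slides; the helper name `se2` and the example poses are illustrative assumptions.

```python
import numpy as np

def se2(theta, tx, ty):
    """Homogeneous 2D transformation [R t; 0 1] (hypothetical helper)."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, tx],
                     [s,  c, ty],
                     [0.0, 0.0, 1.0]])

# Illustrative poses (assumed values): B relative to A, and C relative to B.
T_a_b = se2(np.pi / 2, 1.0, 0.0)
T_b_c = se2(0.0, 2.0, 0.0)

# Composition: pose of C relative to A.
T_a_c = T_a_b @ T_b_c

# Inverse: pose of A relative to B.
T_b_a = np.linalg.inv(T_a_b)

# Transforming a point given in B into A, using homogeneous coordinates.
p_b = np.array([1.0, 0.0, 1.0])
p_a = T_a_b @ p_b
```

Here `p_a` is the same physical point as `p_b`, only expressed in frame $A$; applying `T_b_a` takes it back.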

Pose – Representation in 3D

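• The pose of $B$ relative to $A$ can be represented by a homogeneous transformation $T^{A}_{B} \in SE(3)$

$$\xi^{A}_{B} \;\mapsto\; T^{A}_{B} = \begin{bmatrix} R^{A}_{B} & \mathbf{t}^{A}_{B} \\ \mathbf{0}^{T} & 1 \end{bmatrix} = \begin{bmatrix} r_{11} & r_{12} & r_{13} & t^{A}_{Bx} \\ r_{21} & r_{22} & r_{23} & t^{A}_{By} \\ r_{31} & r_{32} & r_{33} & t^{A}_{Bz} \\ 0 & 0 & 0 & 1 \end{bmatrix}$$

• Properties

$$\mathbf{p}^{A} = \xi^{A}_{B} \cdot \mathbf{p}^{B} \;\mapsto\; \tilde{\mathbf{p}}^{A} = T^{A}_{B}\,\tilde{\mathbf{p}}^{B}$$

$$\xi^{A}_{C} = \xi^{A}_{B} \oplus \xi^{B}_{C} \;\mapsto\; T^{A}_{C} = T^{A}_{B}\,T^{B}_{C}$$

$$\ominus\,\xi^{A}_{B} \;\mapsto\; \left(T^{A}_{B}\right)^{-1}$$

• Points are represented in homogeneous coordinates

$$\tilde{\mathbf{p}}^{A} = T^{A}_{B}\,\tilde{\mathbf{p}}^{B}: \quad \begin{bmatrix} x^{A} \\ y^{A} \\ z^{A} \\ 1 \end{bmatrix} = \begin{bmatrix} R^{A}_{B} & \mathbf{t}^{A}_{B} \\ \mathbf{0}^{T} & 1 \end{bmatrix} \begin{bmatrix} x^{B} \\ y^{B} \\ z^{B} \\ 1 \end{bmatrix}$$

[Figure: frames $A$ (axes $x_A$, $y_A$, $z_A$) and $B$ (axes $x_B$, $y_B$, $z_B$) related by the pose $\xi^{A}_{B}$, with a point $P$ described by $\mathbf{p}^{A}$ and $\mathbf{p}^{B}$]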

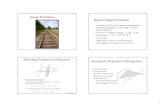

Image formation Basic projective geometry

11

• The projective plane $\mathbb{P}^2$
– Homogeneous coordinates
– Line at infinity
– Points & lines are dual

• The projective space $\mathbb{P}^3$
– Homogeneous coordinates
– Plane at infinity
– Points & planes are dual

• Linear transformations of $\mathbb{P}^2$ and $\mathbb{P}^3$
– Represented by homogeneous matrices
– Homographies ⊃ Affine ⊃ Similarities ⊃ Euclidean ⊃ Translations

Transformations of the projective plane

12

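| Transformation of $\mathbb{P}^2$ | Matrix | #DoF | Preserves |
|---|---|---|---|
| Translation | $\begin{bmatrix} I & \mathbf{t} \\ \mathbf{0}^{T} & 1 \end{bmatrix}$ | 2 | Orientation + all below |
| Euclidean | $\begin{bmatrix} R & \mathbf{t} \\ \mathbf{0}^{T} & 1 \end{bmatrix}$ | 3 | Lengths + all below |
| Similarity | $\begin{bmatrix} sR & \mathbf{t} \\ \mathbf{0}^{T} & 1 \end{bmatrix}$ | 4 | Angles + all below |
| Affine | $\begin{bmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ 0 & 0 & 1 \end{bmatrix}$ | 6 | Parallelism, line at infinity + all below |
| Homography / projective | $\begin{bmatrix} h_{11} & h_{12} & h_{13} \\ h_{21} & h_{22} & h_{23} \\ h_{31} & h_{32} & h_{33} \end{bmatrix}$ | 8 | Straight lines |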

Image formation The perspective camera model

13

• The perspective camera model
– $P = K\,[R, \mathbf{t}]$ – The camera matrix
– Intrinsic: $K$ – The camera calibration matrix
– Extrinsic: $[R, \mathbf{t}]$

• Lens distortion
– Radial distortion
– Tangential distortion (often ignored)

The perspective camera model

• The perspective camera model describes the correspondence between observed points in the world and points in the captured image

$$\tilde{\mathbf{u}} = P\,\tilde{\mathbf{X}}^{W} = K\,[R \;\; \mathbf{t}]\,\tilde{\mathbf{X}}^{W}$$

• Here $K$ is the intrinsic part and $[R \;\; \mathbf{t}]$ the extrinsic part. Written out:

$$\tilde{\mathbf{u}} = \underbrace{\begin{bmatrix} f_u & s & c_u \\ 0 & f_v & c_v \\ 0 & 0 & 1 \end{bmatrix}}_{\text{Intrinsic}} \begin{bmatrix} 1 & 0 & 0 & 0 \\ 0 & 1 & 0 & 0 \\ 0 & 0 & 1 & 0 \end{bmatrix} \underbrace{\begin{bmatrix} R & \mathbf{t} \\ \mathbf{0}^{T} & 1 \end{bmatrix}}_{\text{Extrinsic}} \tilde{\mathbf{X}}^{W}$$

where

$$\begin{bmatrix} R & \mathbf{t} \\ \mathbf{0}^{T} & 1 \end{bmatrix} = \left(\xi^{W}_{C}\right)^{-1} = \xi^{C}_{W}$$

Practical use

• The geometry of the perspective camera is simple since we assume the pinhole to be infinitely small

• In reality the light passes through a lens that complicates the camera intrinsics

• If we want to use images for geometrical computations based on the perspective camera model, we MUST compensate for distortion

– Radial distortion • Barrel • Pincushion

– Tangential distortion

17

Barrel

Pincushion

Projecting a point in the world to a pixel in the image

18

We have mounted a perspective camera $C$ on a vehicle $V$, and we know the pose of the camera relative to the vehicle as well as the pose of the vehicle relative to the world. How can we compute the pixel corresponding to a given point $\mathbf{X}^{W}$ in the world $W$?

[Figure: the frames $\{W\}$, $\{V\}$ and $\{C\}$, and the point $\mathbf{X}^{W}$]

We know

• The pose of the camera relative to the vehicle

$$\xi^{V}_{C} = \begin{bmatrix} 0 & -0.1736 & 0.9848 & 3 \\ -1 & 0 & 0 & -2 \\ 0 & -0.9848 & -0.1736 & 6 \\ 0 & 0 & 0 & 1 \end{bmatrix}$$

• The pose of the vehicle relative to the world

$$\xi^{W}_{V} = \begin{bmatrix} 0.8660 & -0.5000 & 0 & 15 \\ 0.5000 & 0.8660 & 0 & 20 \\ 0 & 0 & 1 & 0 \\ 0 & 0 & 0 & 1 \end{bmatrix}$$

• The point in the world

$$\mathbf{X}^{W} = \begin{bmatrix} 75 \\ 60 \\ 0 \end{bmatrix}$$

We also need to know

• The camera intrinsics

$$K = \begin{bmatrix} 1500 & 0 & 800 \\ 0 & 1500 & 600 \\ 0 & 0 & 1 \end{bmatrix}$$

Then we can compute the pose of the world relative to the camera

$$\xi^{C}_{W} = \left(\xi^{W}_{V}\,\xi^{V}_{C}\right)^{-1} = \begin{bmatrix} 0.5000 & -0.8660 & 0 & 7.8205 \\ -0.1504 & -0.0868 & -0.9848 & 10.4220 \\ 0.8529 & 0.4924 & -0.1736 & -24.5536 \\ 0 & 0 & 0 & 1 \end{bmatrix}$$

And we can use the perspective camera model $\tilde{\mathbf{u}} = K\,[R \;\; \mathbf{t}]\,\tilde{\mathbf{X}}$ directly to compute the pixel

$$\begin{bmatrix} \tilde{u} \\ \tilde{v} \\ \tilde{w} \end{bmatrix} = \begin{bmatrix} 1500 & 0 & 800 \\ 0 & 1500 & 600 \\ 0 & 0 & 1 \end{bmatrix} \begin{bmatrix} 0.5000 & -0.8660 & 0 & 7.8205 \\ -0.1504 & -0.0868 & -0.9848 & 10.4220 \\ 0.8529 & 0.4924 & -0.1736 & -24.5536 \end{bmatrix} \begin{bmatrix} 75 \\ 60 \\ 0 \\ 1 \end{bmatrix} = \begin{bmatrix} 45203.0620 \\ 32274.1449 \\ 68.9557 \end{bmatrix}$$

Hence we get the pixel

$$u = \frac{45203.0620}{68.9557} = 655.5374, \qquad v = \frac{32274.1449}{68.9557} = 468.0415$$
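The worked example can be checked numerically. The sketch below is not from the slides: it assumes that the rounded entries 0.1736/0.9848 and 0.5000/0.8660 are the sine and cosine of 10° and 30°, and that the signs and translations are as reconstructed from the numerical results ($\mathbf{t}^{V}_{C} = (3, -2, 6)$). The helper `pose` is hypothetical.

```python
import numpy as np

def pose(R, t):
    """Build a 4x4 homogeneous transformation from R and t (hypothetical helper)."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

# Assumption: the slide values are rounded sin/cos of 10 and 30 degrees.
c10, s10 = np.cos(np.deg2rad(10)), np.sin(np.deg2rad(10))
c30, s30 = np.cos(np.deg2rad(30)), np.sin(np.deg2rad(30))

# Pose of the camera relative to the vehicle, and of the vehicle relative to the world.
T_v_c = pose(np.array([[0, -s10, c10],
                       [-1, 0, 0],
                       [0, -c10, -s10]]), [3, -2, 6])
T_w_v = pose(np.array([[c30, -s30, 0],
                       [s30, c30, 0],
                       [0, 0, 1]]), [15, 20, 0])

K = np.array([[1500.0, 0, 800],
              [0, 1500.0, 600],
              [0, 0, 1]])
X_w = np.array([75.0, 60.0, 0.0, 1.0])   # homogeneous world point

# Pose of the world relative to the camera.
T_c_w = np.linalg.inv(T_w_v @ T_v_c)

# Perspective camera model: u~ = K [R t] X~
u_h = K @ T_c_w[:3, :] @ X_w
u, v = u_h[:2] / u_h[2]
print(u, v)
```

Under these assumptions the printed pixel should agree with the hand computation to about four decimals.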

Reprojecting a pixel...

If we know the pose of a perspective camera relative to the world, how can we reproject a given pixel back into the world?

• We know that the ray of any pixel contains the projective center, given by the camera's position. In the camera frame this is the origin

$$\begin{bmatrix} X_0 \\ Y_0 \\ Z_0 \end{bmatrix}^{C} = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}$$

• Using the inverse calibration matrix, we can determine another point on the ray

$$\begin{bmatrix} X_1 \\ Y_1 \\ 1 \end{bmatrix}^{C} = K^{-1} \begin{bmatrix} u \\ v \\ 1 \end{bmatrix}$$

• These two points define the line of sight for the pixel, and we can use it to reproject the pixel onto any surface we like
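As an illustration of reprojecting onto a surface, here is a minimal sketch that intersects the line of sight with a plane $\mathbf{n} \cdot \mathbf{X} = d$ in the world. The function name, the plane parameterization and the example camera (10 units above the ground, looking straight down) are assumptions made for this example only.

```python
import numpy as np

def backproject_to_plane(u, v, K, T_w_c, n=(0.0, 0.0, 1.0), d=0.0):
    """Intersect the ray of pixel (u, v) with the world plane n . X = d.

    T_w_c is the pose of the camera relative to the world (4x4).
    """
    n = np.asarray(n, dtype=float)
    R, c = T_w_c[:3, :3], T_w_c[:3, 3]              # camera orientation and centre in the world
    x_c = np.linalg.solve(K, np.array([u, v, 1.0]))  # second point on the ray, camera frame
    ray = R @ x_c                                    # ray direction in the world frame
    lam = (d - n @ c) / (n @ ray)                    # assumes the ray is not parallel to the plane
    return c + lam * ray

# Assumed example camera: 10 units above the ground plane, optical axis pointing down.
K = np.array([[1500.0, 0, 800],
              [0, 1500.0, 600],
              [0, 0, 1]])
T_w_c = np.eye(4)
T_w_c[:3, :3] = np.diag([1.0, -1.0, -1.0])
T_w_c[:3, 3] = [0.0, 0.0, 10.0]

X_ground = backproject_to_plane(1100.0, 150.0, K, T_w_c)
```

For this camera the world point $(2, 3, 0)$ projects to the pixel $(1100, 150)$, so the reprojection onto the ground plane recovers that point.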


Image processing • Point operators (pixel-to-pixel)

- Adjustment of brightness, contrast and colour - Histogram equalization

• Image filtering in spatial domain

- Mathematical operations on a local neighborhood - Linear filters (convolution, cross-correlation) - Non-linear filters - Image enhancement (smoothing, sharpening) - Feature extraction (edges, texture etc.)

• Image filtering in frequency domain

- Modification of spatial image frequencies - Noise removal, (re)sampling, image compression - 2D Fourier transform

25

Linear filtering (cross-correlation or convolution)

26

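Input image (10 × 10):

0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 90 90 0 0 0 0 0
0 0 0 90 90 90 0 0 0 0
0 0 90 90 90 90 0 0 0 0
0 0 90 90 90 90 90 0 0 0
0 90 90 90 90 90 90 90 0 0
0 0 90 90 90 90 90 0 0 0
0 0 0 0 0 0 0 0 0 0
0 0 0 0 0 0 0 0 0 0

Filter kernel (3 × 3, all ones; the output values imply a normalization by 1/9):

1 1 1
1 1 1
1 1 1

Output image (only the first values are shown on the slide):

0 0 0 0 0 0 0 0 0 0
0 0 10 20 20 10 0 0 0 0
0 0 20 (remaining values not shown)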
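The filtering example can be reproduced with a few lines of NumPy. This is a sketch under the assumption that the border is zero-padded and that the 3 × 3 kernel of ones is normalized by 1/9, which is what the shown output values suggest; the function name `box_filter` is made up for the example.

```python
import numpy as np

def box_filter(img, k=3):
    """Mean filter with a k x k kernel of ones and zero padding (illustrative)."""
    r = k // 2
    padded = np.pad(img.astype(float), r, mode="constant")
    out = np.zeros(img.shape, dtype=float)
    # Sum the k*k shifted copies of the image, then normalize.
    for dy in range(k):
        for dx in range(k):
            out += padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out / (k * k)

# The 10 x 10 input image from the slide.
img = np.zeros((10, 10))
img[2, 3:5] = 90
img[3, 3:6] = 90
img[4, 2:6] = 90
img[5, 2:7] = 90
img[6, 1:8] = 90
img[7, 2:7] = 90

filtered = box_filter(img)
print(filtered[1])  # second output row
```

Because the kernel is symmetric, cross-correlation and convolution give the same result here.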

Filtering in frequency domain

Fourier (1807): Any univariate function can be rewritten as a weighted sum of sines and cosines of different frequencies (true with some subtle restrictions).

This leads to: • Fourier Series • Fourier Transform (continuous and discrete) • Fast Fourier Transform (FFT)

Jean Baptiste Joseph Fourier (1768-1830)

27

Image processing Image Pyramids: • Downsampling (decimation) • Upsampling (interpolation) • Gaussian Pyramids

28

(Cmglee)

Gaussian Pyramid

29

Blur

Blur

Blur

Downsample Downsample Downsample

Image Processing

30

Laplacian Pyramids: • Laplacian filter • Laplacian pyramid • Image blending

Laplacian pyramid

[Figure: Laplacian pyramid levels formed as differences (−) between Gaussian pyramid levels]

Image blending with Laplacian pyramids

Weighted sum for each level of the pyramid

$$L_{blend} = G \times L_1 + (1 - G) \times L_2$$

• $L_{blend}$: Laplacian of the blend
• $L_1$: Laplacian of img 1
• $G$: Gaussian of mask
• $L_2$: Laplacian of img 2
• $1 - G$: Flipped mask
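The per-level weighted sum can be sketched in NumPy as follows. This is a simplified illustration, not the lecture's implementation: it assumes a small [1, 2, 1]/4 blur kernel, nearest-neighbour upsampling and three pyramid levels, and all function names are made up for the example.

```python
import numpy as np

def blur(img):
    """Separable [1, 2, 1]/4 blur with replicated borders (assumed kernel)."""
    p = np.pad(img, 1, mode="edge")
    h = (p[:, :-2] + 2 * p[:, 1:-1] + p[:, 2:]) / 4.0
    return (h[:-2] + 2 * h[1:-1] + h[2:]) / 4.0

def upsample(img, shape):
    """Nearest-neighbour upsampling by 2, cropped to the target shape."""
    big = np.repeat(np.repeat(img, 2, axis=0), 2, axis=1)
    return big[:shape[0], :shape[1]]

def gaussian_pyramid(img, levels):
    pyr = [img.astype(float)]
    for _ in range(levels - 1):
        pyr.append(blur(pyr[-1])[::2, ::2])   # blur, then downsample
    return pyr

def laplacian_pyramid(img, levels):
    g = gaussian_pyramid(img, levels)
    lap = [g[i] - upsample(g[i + 1], g[i].shape) for i in range(levels - 1)]
    return lap + [g[-1]]                      # coarsest level keeps the low-pass image

def collapse(lap):
    img = lap[-1]
    for level in reversed(lap[:-1]):
        img = upsample(img, level.shape) + level
    return img

def blend(img1, img2, mask, levels=3):
    """L_blend = G * L1 + (1 - G) * L2 on every level, then collapse."""
    l1 = laplacian_pyramid(img1, levels)
    l2 = laplacian_pyramid(img2, levels)
    g = gaussian_pyramid(mask, levels)
    return collapse([gm * a + (1 - gm) * b for gm, a, b in zip(g, l1, l2)])

a = np.arange(64, dtype=float).reshape(8, 8)
b = a[::-1].copy()
mask = np.zeros((8, 8))
mask[:, :4] = 1.0          # take the left half from a, the right half from b
blended = blend(a, b, mask)
```

With this construction the pyramid is exactly invertible, so blending an image with itself returns the image unchanged.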

33


Feature detection

Line features: • Edge detectors • Line detection with the Hough transform

35

Thinning and thresholding

• Detection of local maxima (i.e. suppression of non-maxima)

• Thresholding

Binary image with isolated edges (single pixels at discrete locations along edge contours)

Edge image (Canny) Edge enhanced image (Sobel)

36

Line detection - Hough transform

37

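With the line parameterization $\rho = x\cos\theta + y\sin\theta$, the set of all lines going through a given point corresponds to a sinusoidal curve in the $(\theta, \rho)$ parameter plane. Two or more points on a straight line will give rise to sinusoids intersecting at the $(\theta, \rho)$ point for that line.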

The Hough transform can be generalized to other shapes.
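A minimal voting scheme for line detection could look like the sketch below. It assumes the parameterization $\rho = x\cos\theta + y\sin\theta$ with $\theta$ sampled in 1° steps and $\rho$ rounded to whole pixels; the function name and the accumulator layout are illustrative choices.

```python
import numpy as np

def hough_lines(points, diag, n_theta=180):
    """Accumulate votes in (rho, theta) space for a set of edge points.

    diag must be at least the largest possible |rho| (e.g. the image diagonal).
    """
    thetas = np.deg2rad(np.arange(n_theta))
    acc = np.zeros((2 * diag + 1, n_theta), dtype=int)
    cols = np.arange(n_theta)
    for x, y in points:
        # Each point votes along its sinusoid rho(theta).
        rhos = np.round(x * np.cos(thetas) + y * np.sin(thetas)).astype(int)
        acc[rhos + diag, cols] += 1
    return acc, thetas

# Edge points on the vertical line x = 5.
points = [(5, y) for y in range(50)]
acc, thetas = hough_lines(points, diag=60)

# The strongest accumulator cell gives the detected line.
rho_idx, theta_idx = np.unravel_index(acc.argmax(), acc.shape)
rho, theta = rho_idx - 60, thetas[theta_idx]
```

The sinusoids of all 50 points intersect in a single accumulator cell, which is the peak that is picked out.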

Example

38 Original Edge image (Canny)

Example (2)

39

Detected lines

Feature detection Local keypoint features

40

• Corner detectors – Stable in space – Min eigenvalue, Harris

• Blob detectors

– Stable in scale and space – LoG, DoG

Characteristics of good features

• Repeatability • Distinctiveness

• Efficiency • Locality

41

Local measure of feature distinctiveness

• Consider a small window of pixels around a feature • How does the window change when you shift it?

42

“Flat” region: No change in all directions

“Edge”: No change along edge

“Corner”: Change in all directions

Simplifying the measure even further

43

• Consider a horizontal “slice” of $E(u,v)$:

$$E(u, v) \approx \begin{bmatrix} u & v \end{bmatrix} M \begin{bmatrix} u \\ v \end{bmatrix} = \text{const}$$

• This is the equation of an ellipse
– Describe the surface using the eigenvalues of $M$

Corner detection summary

• Compute the gradient at each point in the image using derivatives of Gaussians
• Create the second moment matrix $M$ from the entries in the gradient
• Compute the eigenvalues
• Find points with large response ($\lambda_{min}$ > threshold)
• Choose those points where $\lambda_{min}$ is a local maximum as features
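The first steps of the summary can be sketched as follows. Note the simplifications, which are assumptions of this example: central differences replace the derivatives of Gaussians, and an unweighted 5 × 5 window replaces a Gaussian window. Thresholding and non-maximum suppression are left out.

```python
import numpy as np

def window_sum(a, r=2):
    """Sum over a (2r+1) x (2r+1) neighbourhood with zero padding."""
    p = np.pad(a, r, mode="constant")
    out = np.zeros(a.shape, dtype=float)
    k = 2 * r + 1
    for dy in range(k):
        for dx in range(k):
            out += p[dy:dy + a.shape[0], dx:dx + a.shape[1]]
    return out

def min_eigenvalue_response(img, r=2):
    """Smallest eigenvalue of the second moment matrix M = [[a, b], [b, c]] per pixel."""
    Iy, Ix = np.gradient(img.astype(float))   # derivatives along rows (y) and columns (x)
    a = window_sum(Ix * Ix, r)
    b = window_sum(Ix * Iy, r)
    c = window_sum(Iy * Iy, r)
    # Closed-form smallest eigenvalue of a symmetric 2 x 2 matrix.
    return (a + c) / 2 - np.sqrt(((a - c) / 2) ** 2 + b ** 2)

# Test image: a bright square on a dark background.
img = np.zeros((20, 20))
img[5:15, 5:15] = 1.0
lam_min = min_eigenvalue_response(img)
```

On this image the response is zero in flat regions and along straight edges, and positive at the corners of the square.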

44

Harris detector properties

• Scaling

45

LoG blob detector

46

• Convolve the image with scale-normalized LoG at several scales • Find maxima of squared LoG response in scale-space

• Approximate with Difference of Gaussians (DoG)

Feature detection Robust estimation with RANSAC

47

• RANSAC
– A robust iterative method for estimating the parameters of a mathematical model from a set of observed data containing outliers
– Separates the observed data into “inliers” and “outliers”
– Very useful if we want to use better, but less robust, estimation methods

RANSAC

Objective: Robustly fit a model $\mathbf{y} = f(\mathbf{x}; \boldsymbol{\alpha})$ to a data set $S = \{\mathbf{x}_i\}$

Algorithm

1. Determine a test model $\mathbf{y} = f(\mathbf{x}; \boldsymbol{\alpha}_{tst})$ from $n$ random data points $\{\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_n\}$

2. Check how well each individual data point in $S$ fits with the test model
– Data points within a distance $t$ of the model constitute a set of inliers $S_{tst} \subseteq S$
– Data points outside a distance $t$ of the model are outliers

3. If $S_{tst}$ is the largest set of inliers encountered so far, we keep this model
– Set $\boldsymbol{\alpha} = \boldsymbol{\alpha}_{tst}$ and $S_{IN} = S_{tst}$

4. Repeat steps 1-3 until $N$ models have been tested

RANSAC

Comments

• Number of iterations required to achieve confidence $p$ when testing random models from $n$-tuples of data elements from a dataset with inlier probability $\omega$

$$N = \frac{\log(1 - p)}{\log(1 - \omega^{n})}$$

• Typical desired level of confidence

$$p = 0.99$$

• Inlier probability $\omega$ is typically unknown, but can be estimated per iteration

$$\omega = \frac{\#\,\text{max estimated inliers}}{\#\,\text{data elements}}$$

• Instead of operating with a fixed and larger than necessary $N$, we can update $N$ for each iteration

Adaptive RANSAC

Objective: Robustly fit a model $\mathbf{y} = f(\mathbf{x}; \boldsymbol{\alpha})$ to a data set $S = \{\mathbf{x}_i\}$

Algorithm

1. Let $N = \infty$, $S_{IN} = \emptyset$

2. As long as #iterations < $N$ repeat steps 3-5

3. Determine a test model $\mathbf{y} = f(\mathbf{x}; \boldsymbol{\alpha}_{tst})$ from $n$ random data points $\{\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_n\}$

4. Determine how well the data points in $S$ fit with the test model
– Data points within a distance $t$ of the model constitute a set of inliers $S_{tst} \subseteq S$

5. If $|S_{tst}| > |S_{IN}|$, update the model parameters $\boldsymbol{\alpha}$, the inlier set $S_{IN}$ and the max number of iterations $N$
– Set $\boldsymbol{\alpha} = \boldsymbol{\alpha}_{tst}$ and $S_{IN} = S_{tst}$
– Compute $N = \dfrac{\log(1 - p)}{\log(1 - \omega^{n})}$ using that $\omega = \dfrac{|S_{IN}|}{|S|}$ and $p = 0.99$
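The adaptive algorithm can be sketched for the simple case of fitting a 2D line ($n = 2$). Everything specific here is an assumption made for illustration: the line model, the threshold `t`, the synthetic data and the `max_iter` safety cap, which is not part of the algorithm as stated above.

```python
import numpy as np

def line_through(p, q):
    """Line a*x + b*y + c = 0 through two points, with (a, b) of unit length."""
    a, b = q[1] - p[1], p[0] - q[0]
    norm = np.hypot(a, b)
    return np.array([a, b, -(a * p[0] + b * p[1])]) / norm

def adaptive_ransac_line(pts, t=0.1, p=0.99, n=2, max_iter=10000, seed=0):
    rng = np.random.default_rng(seed)
    best_model, best_inliers = None, np.zeros(len(pts), dtype=bool)
    N, it = np.inf, 0                                     # step 1
    while it < N and it < max_iter:                       # step 2
        i, j = rng.choice(len(pts), size=n, replace=False)
        model = line_through(pts[i], pts[j])              # step 3: test model
        inliers = np.abs(pts @ model[:2] + model[2]) < t  # step 4: point-to-line distance
        if inliers.sum() > best_inliers.sum():            # step 5: keep the largest inlier set
            best_model, best_inliers = model, inliers
            w = inliers.mean()                            # estimated inlier probability
            N = 0 if w == 1.0 else np.log(1 - p) / np.log(1 - w ** n)
        it += 1
    return best_model, best_inliers

# Synthetic data: 80 points on y = 2x + 1 and 20 outliers at least 5 units off the line.
rng = np.random.default_rng(1)
x_in = np.linspace(0, 10, 80)
x_out = rng.uniform(0, 10, 20)
y_out = 2 * x_out + 1 + rng.choice([-1.0, 1.0], 20) * rng.uniform(5, 25, 20)
pts = np.vstack([np.column_stack([x_in, 2 * x_in + 1]),
                 np.column_stack([x_out, y_out])])

model, inliers = adaptive_ransac_line(pts)
slope, intercept = -model[0] / model[1], -model[2] / model[1]
```

In practice the returned inlier set would then be passed to a better estimator, for example a least-squares fit on the inliers only.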


Feature matching Feature descriptors and matching

• Matching keypoints – Comparing local patches in canonical scale and orientation

• Feature descriptors

– Robust, distinctive and efficient

• Descriptor types – HoG descriptors – Binary descriptors

• Putative matching

– Closest match, distance ratio, cross check

52

Feature matching From keypoints to feature correspondences

53

1. Detect a set of distinct feature points

2. Define a patch around each point

3. Extract and normalize the patch

4. Compute a local descriptor

5. Match local descriptors

Patch at detected position, scale, orientation

54

SIFT descriptor

• Extract a 16x16 patch around detected keypoint
• Compute the gradients and apply a Gaussian weighting function
• Divide the window into a 4x4 grid of cells
• Compute gradient direction histograms over 8 directions in each cell
• Concatenate the histograms to obtain a 128 dimensional feature vector (4 × 4 cells × 8 directions)
• Normalize to unit length

55

Binary descriptors

• Extremely efficient construction and comparison

• Based on pairwise intensity comparisons
– Sampling pattern around keypoint
– Set of sampling pairs
– Feature descriptor vector is a binary string:

$$F = \sum_{0 \le a \le N} 2^{a}\,T(P_a), \qquad T(P_a) = \begin{cases} 1 & \text{if } I(P_a^{r_1}) > I(P_a^{r_2}) \\ 0 & \text{otherwise} \end{cases}$$

• Matching using Hamming distance:

$$L = \sum_{0 \le a \le N} XOR\left(F_a^{1}, F_a^{2}\right)$$

BRISK sampling pattern

BRISK sampling pairs
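The idea of pairwise comparisons and Hamming matching can be sketched like this. The sampling pairs below are random pixel pairs inside a 16 × 16 patch, which is an assumption made purely for illustration; this is not the BRISK sampling pattern, and no smoothing of the sample points is performed.

```python
import numpy as np

def binary_descriptor(patch, pairs):
    """One bit per sampling pair: 1 if I(P^r1) > I(P^r2), else 0."""
    first = patch[pairs[:, 0, 0], pairs[:, 0, 1]]
    second = patch[pairs[:, 1, 0], pairs[:, 1, 1]]
    return (first > second).astype(np.uint8)

def hamming(f1, f2):
    """Number of differing bits, i.e. the sum of XOR over all bit positions."""
    return int(np.count_nonzero(f1 != f2))

rng = np.random.default_rng(0)
pairs = rng.integers(0, 16, size=(256, 2, 2))   # 256 random (row, col) pairs (assumed pattern)
patch = rng.random((16, 16))

d_original = binary_descriptor(patch, pairs)
d_brighter = binary_descriptor(patch + 0.3, pairs)   # same patch with a brightness offset
d_inverted = binary_descriptor(1.0 - patch, pairs)   # inverted intensities

print(hamming(d_original, d_brighter), hamming(d_original, d_inverted))
```

Since only the ordering of intensities matters, a constant brightness offset leaves the descriptor unchanged, while inverting the patch flips almost every bit.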

Feature matching Estimating homographies from feature correspondences

57

• Homography $H\tilde{\mathbf{u}} = \tilde{\mathbf{u}}'$

$$H = \begin{bmatrix} h_1 & h_2 & h_3 \\ h_4 & h_5 & h_6 \\ h_7 & h_8 & h_9 \end{bmatrix}$$

• Automatic point-correspondences

• Wrong correspondences are common

• RANSAC estimation
– Basic DLT (Direct Linear Transform) on 4 random correspondences
– Inliers determined based on the reprojection error $\epsilon_i = d(H\mathbf{u}_i, \mathbf{u}'_i) + d(\mathbf{u}_i, H^{-1}\mathbf{u}'_i)$

• Improve the estimate by normalized DLT on inliers, or by iterative methods for an even better estimate

Homographies induced by central projection

58

• Homography $H\tilde{\mathbf{u}} = \tilde{\mathbf{u}}'$

$$H = \begin{bmatrix} h_1 & h_2 & h_3 \\ h_4 & h_5 & h_6 \\ h_7 & h_8 & h_9 \end{bmatrix}$$

• Point-correspondences can be determined automatically
• Erroneous correspondences are common
• Robust estimation is required to find $H$

Direct Linear Transform (DLT)

59

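• Solve the equation $H\tilde{\mathbf{u}} = \tilde{\mathbf{u}}'$ for the entries of the homography matrix

$$\begin{bmatrix} h_1 & h_2 & h_3 \\ h_4 & h_5 & h_6 \\ h_7 & h_8 & h_9 \end{bmatrix} \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = \begin{bmatrix} u' \\ v' \\ 1 \end{bmatrix} \;\Leftrightarrow\; \begin{aligned} u h_1 + v h_2 + h_3 &= u' \\ u h_4 + v h_5 + h_6 &= v' \\ u h_7 + v h_8 + h_9 &= 1 \end{aligned}$$

$$\Leftrightarrow\; \begin{bmatrix} 0 & 0 & 0 & -u & -v & -1 & v'u & v'v & v' \\ u & v & 1 & 0 & 0 & 0 & -u'u & -u'v & -u' \\ -v'u & -v'v & -v' & u'u & u'v & u' & 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} h_1 \\ h_2 \\ \vdots \\ h_9 \end{bmatrix} = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} \;\Leftrightarrow\; A\mathbf{h} = \mathbf{0}$$

The rows of $A$ follow by combining the three equations pairwise.

First and second equation (third row of $A$, multiplied by $-1$):

$$\begin{aligned} (u h_1 + v h_2 + h_3)\cdot v' &= u' \cdot v' \\ (u h_4 + v h_5 + h_6)\cdot u' &= u' \cdot v' \end{aligned} \;\Rightarrow\; u v' h_1 + v v' h_2 + v' h_3 = u u' h_4 + v u' h_5 + u' h_6$$

$$\Rightarrow\; u v' h_1 + v v' h_2 + v' h_3 - u u' h_4 - v u' h_5 - u' h_6 = 0$$

First and third equation (second row of $A$):

$$\begin{aligned} u h_1 + v h_2 + h_3 &= u' \\ (u h_7 + v h_8 + h_9)\cdot u' &= 1 \cdot u' \end{aligned} \;\Rightarrow\; u h_1 + v h_2 + h_3 = u u' h_7 + v u' h_8 + u' h_9$$

$$\Rightarrow\; u h_1 + v h_2 + h_3 - u u' h_7 - v u' h_8 - u' h_9 = 0$$

Second and third equation (first row of $A$, multiplied by $-1$):

$$\begin{aligned} u h_4 + v h_5 + h_6 &= v' \\ (u h_7 + v h_8 + h_9)\cdot v' &= 1 \cdot v' \end{aligned} \;\Rightarrow\; u h_4 + v h_5 + h_6 = u v' h_7 + v v' h_8 + v' h_9$$

$$\Rightarrow\; u h_4 + v h_5 + h_6 - u v' h_7 - v v' h_8 - v' h_9 = 0$$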
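A basic (unnormalized) DLT can be sketched with an SVD. The sketch stacks the first two rows of $A$ per correspondence (the third row is linearly dependent on them) and takes the right singular vector of the smallest singular value as $\mathbf{h}$. The function name, the test homography and the final scaling by $h_9$ (which assumes $h_9 \neq 0$) are choices made for this example.

```python
import numpy as np

def dlt_homography(pts, pts_prime):
    """Estimate H with H u~ = u~' (up to scale) from >= 4 point correspondences."""
    rows = []
    for (u, v), (up, vp) in zip(pts, pts_prime):
        rows.append([0, 0, 0, -u, -v, -1, vp * u, vp * v, vp])
        rows.append([u, v, 1, 0, 0, 0, -up * u, -up * v, -up])
    A = np.array(rows, dtype=float)
    _, _, Vt = np.linalg.svd(A)
    H = Vt[-1].reshape(3, 3)      # null vector of A, reshaped row by row
    return H / H[2, 2]            # fix the free scale (assumes h9 != 0)

# Assumed ground-truth homography used to generate exact correspondences.
H_true = np.array([[1.2, 0.1, 5.0],
                   [-0.2, 0.9, 3.0],
                   [0.001, 0.002, 1.0]])
pts = np.array([[0.0, 0.0], [100.0, 0.0], [100.0, 100.0], [0.0, 100.0]])
pts_h = np.column_stack([pts, np.ones(4)]) @ H_true.T
pts_prime = pts_h[:, :2] / pts_h[:, 2:]

H_est = dlt_homography(pts, pts_prime)
```

With noisy correspondences one would normally normalize the points first (normalized DLT) and use more than four correspondences.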


World geometry from correspondences

| | Structure (scene geometry) | Motion (camera geometry) | Measurements |
|---|---|---|---|
| Pose estimation | Known | Estimate | 3D to 2D correspondences |
| Triangulation, Stereo | Estimate | Known | 2D to 2D correspondences |
| Reconstruction, Structure from Motion | Estimate | Estimate | 2D to 2D correspondences |

Camera calibration

• Camera calibration is a process where we estimate the intrinsic parameters 𝑓𝑓𝑢𝑢, 𝑓𝑓𝑣𝑣, 𝑠𝑠, 𝑐𝑐𝑢𝑢, 𝑐𝑐𝑣𝑣 and distortion parameters for a camera

• Zhang’s method requires that the calibration object is planar. This ensures that the 3D-2D relationship is described by a homography that comes with 2 constraints since it is partially a rotation matrix

• The 2 constraints allow us to estimate the intrinsic parameters using linear methods. Non-linear estimation methods can then be used to refine the intrinsic parameters and estimate the distortion parameters

63

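$$\begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K \begin{bmatrix} \mathbf{r}_1 & \mathbf{r}_2 & \mathbf{r}_3 & \mathbf{t} \end{bmatrix} \begin{bmatrix} X \\ Y \\ 0 \\ 1 \end{bmatrix} = K \begin{bmatrix} \mathbf{r}_1 & \mathbf{r}_2 & \mathbf{t} \end{bmatrix} \begin{bmatrix} X \\ Y \\ 1 \end{bmatrix} = H \begin{bmatrix} X \\ Y \\ 1 \end{bmatrix}$$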

Distortion models

64

• A distortion model describes the relationship between undistorted coordinates 𝒙𝒙 and distorted coordinates 𝒙𝒙′ of the normalized image plane

• This example model describes both radial distortion and tangential distortion

$$x = x'\left(1 + k_1 r^2 + k_2 r^4\right) + 2 p_1 x' y' + p_2\left(r^2 + 2x'^2\right)$$

$$y = y'\left(1 + k_1 r^2 + k_2 r^4\right) + p_1\left(r^2 + 2y'^2\right) + 2 p_2 x' y'$$

$$\text{where } r^2 = x'^2 + y'^2$$
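The radial and tangential terms of such a model are easy to evaluate directly. The sketch below only evaluates the polynomial for given normalized input coordinates; the function name is made up, and which of the two coordinate sets plays the role of input depends on the convention of the calibration tool, so treat the direction as an assumption.

```python
def distortion_model(xp, yp, k1, k2, p1, p2):
    """Evaluate the radial (k1, k2) and tangential (p1, p2) model at (xp, yp)."""
    r2 = xp ** 2 + yp ** 2
    radial = 1 + k1 * r2 + k2 * r2 ** 2
    x = xp * radial + 2 * p1 * xp * yp + p2 * (r2 + 2 * xp ** 2)
    y = yp * radial + p1 * (r2 + 2 * yp ** 2) + 2 * p2 * xp * yp
    return x, y

# With all coefficients zero the model is the identity.
identity = distortion_model(0.1, -0.2, 0.0, 0.0, 0.0, 0.0)

# A negative k1 pulls points towards the centre, more strongly further out.
pulled_in = distortion_model(0.5, 0.0, -0.2, 0.0, 0.0, 0.0)
```

The tangential terms vanish at the image centre and for `p1 = p2 = 0`, which is why they are often ignored.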

Undistortion

65

[Figure: undistortion of an original image, where $\tilde{\mathbf{u}} \neq K\,[R \;\; \mathbf{t}]\,\tilde{\mathbf{X}}$, into an undistorted image, where $\tilde{\mathbf{u}} = K\,[R \;\; \mathbf{t}]\,\tilde{\mathbf{X}}$. Images: http://www.robots.ox.ac.uk/~vgg/hzbook/]

• We can use the distortion model to warp the original image into the so-called undistorted image

• The undistorted image satisfies the perspective camera model and is thus well suited for geometrical computations

• Since the distortion model depends on $K$ for the undistorted camera, it is customary to estimate both in a common calibration process

Single-view geometry Pose from known 3D points

• Pose estimation relative to a world plane • PnP

66

Pose estimation relative to a world plane

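[Figure: world plane $\pi$ with frame $W$ (axes $x$, $y$, $z$) and a point $\mathbf{X}^{\pi}$, observed by camera $C$ with calibration matrix $K$ as normalized point $\mathbf{x}$ and pixel $\mathbf{u}$; the plane and the image are related by the homography $H^{C}_{W}$]

• We can map points on the world plane into image coordinates by using the perspective camera model

$$\tilde{\mathbf{u}} = K\,[R \,|\, \mathbf{t}]\,\tilde{\mathbf{X}}^{\pi} = K \begin{bmatrix} \mathbf{r}_1, & \mathbf{r}_2, & \mathbf{r}_3, & \mathbf{t} \end{bmatrix} \begin{bmatrix} x \\ y \\ 0 \\ 1 \end{bmatrix} = K \begin{bmatrix} \mathbf{r}_1, & \mathbf{r}_2, & \mathbf{t} \end{bmatrix} \begin{bmatrix} x \\ y \\ 1 \end{bmatrix} = H^{C}_{W}\,\tilde{\mathbf{x}}^{\pi}$$

where

$$T^{C}_{W} = \begin{bmatrix} R & \mathbf{t} \\ \mathbf{0}^{T} & 1 \end{bmatrix}$$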
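Since $H^{C}_{W} = K\,[\mathbf{r}_1, \mathbf{r}_2, \mathbf{t}]$ up to scale, a pose can be recovered from an estimated homography when $K$ is known. The following is a sketch of one common way to do this, not necessarily the one used in the lecture: the scale is fixed by $\|\mathbf{r}_1\| = 1$, the sign by requiring the plane origin to lie in front of the camera, and the rotation is projected onto the nearest rotation matrix with an SVD.

```python
import numpy as np

def pose_from_plane_homography(H, K):
    """Recover (R, t) from H ~ K [r1 r2 t] for a known calibration matrix K."""
    M = np.linalg.solve(K, H)            # proportional to [r1 r2 t]
    M = M / np.linalg.norm(M[:, 0])      # fix the scale so that ||r1|| = 1
    if M[2, 2] < 0:                      # choose the sign that gives t_z > 0
        M = -M
    r1, r2, t = M[:, 0], M[:, 1], M[:, 2]
    R = np.column_stack([r1, r2, np.cross(r1, r2)])
    U, _, Vt = np.linalg.svd(R)          # nearest rotation, useful when H is noisy
    return U @ Vt, t

# Assumed example: rotation of 0.3 rad about the x-axis and a known translation.
K = np.array([[1500.0, 0, 800],
              [0, 1500.0, 600],
              [0, 0, 1]])
c, s = np.cos(0.3), np.sin(0.3)
R_true = np.array([[1, 0, 0], [0, c, -s], [0, s, c]])
t_true = np.array([0.1, -0.2, 5.0])

# A homography is only defined up to scale, so apply an arbitrary factor.
H = -2.5 * K @ np.column_stack([R_true[:, 0], R_true[:, 1], t_true])
R_est, t_est = pose_from_plane_homography(H, K)
```

The recovered $(R, \mathbf{t})$ is the pose of the world plane frame relative to the camera, i.e. $T^{C}_{W}$.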

n-Point Pose Problem (PnP)

• Several different methods available – Typically fast non-iterative methods – Minimal in number of points – Accuracy comparable to iterative methods

• Examples

– P3P, EPnP • Estimate pose, known K

– P4Pf • Estimate pose and focal length

– P6P • Estimates P with DLT

– R6P • Estimate pose with rolling shutter

68

Single-view geometry Scene geometry from a single image

• Vanishing points
– Perspective projection of infinitely long lines (non-parallel to the image plane)
– Parallel lines have the same vanishing point

• Vanishing lines
– Perspective projection of planes (non-parallel to the image plane)
– Parallel planes have the same vanishing line

69

Vertical structures on a horizontal plane

70

Straight and level camera

Translated downwards

Translated upwards

Translated rightwards

Tilted upwards

Tilted downwards


Stereo imaging Basic epipolar geometry

72

• Epipolar geometry – Epipolar planes – Epipolar lines – Epipoles

Stereo imaging Stereo imaging

• Stereo imaging – Horizontal epipolar lines – Disparity – 3D from disparity – Stereo rectification

73

Stereo geometry

• Parallel identical cameras
– Translated along x-axis

• Horizontal epipolar lines
– Corresponding points lie along the same row in the two images

• Depth from disparity

[Figure: left camera frame with axes $x_L$, $y_L$, $z_L$, baseline $b_x$, a point $\mathbf{P}^{L} = (X, Y, Z)$ at depth $Z$, and disparity $d$]

• 3D from disparity

$$X = \frac{b_x}{d}\,x_L, \qquad Y = \frac{b_x}{d}\,y_L$$
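The relations above can be collected in a small function. The expression used for the depth, $Z = f\,b_x / d$, is the standard relation for rectified parallel cameras and is an addition here, since it does not appear in the extracted slide text. The sketch also assumes that $x_L$ and $y_L$ are measured in pixels relative to the principal point $(c_u, c_v)$.

```python
import numpy as np

def disparity_to_3d(u_left, v_left, d, f, cu, cv, b):
    """3D point in the left camera frame from a pixel and its disparity d (pixels)."""
    x, y = u_left - cu, v_left - cv          # pixel coordinates relative to the principal point
    return np.array([b * x / d, b * y / d, b * f / d])

# Assumed setup: f = 1000 px, principal point (640, 360), baseline 0.2 m.
# The point (1, 0.5, 10) projects to u = 740 in the left and u = 720 in the right image.
P = disparity_to_3d(740.0, 410.0, d=20.0, f=1000.0, cu=640.0, cv=360.0, b=0.2)
```

Because depth is inversely proportional to disparity, a fixed disparity error gives a depth error that grows quadratically with distance.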

Stereo rectification

• Reproject image planes onto a common plane parallel to the line between the camera centers

• The epipolar lines are horizontal after this transformation

• Two homographies

• C. Loop and Z. Zhang. Computing Rectifying Homographies for Stereo Vision. IEEE Conf. Computer Vision and Pattern Recognition, 1999.

[Figure: the two camera frames $C_1$ and $C_2$ with axes $x$, $y$, $z$]

Stereo imaging Stereo processing

• Stereo processing – Sparse vs dense matching – DSI – Typical failures – Removing failures vs smoothness

79

Stereo processing

• Sparse stereo – Extract keypoints – Match keypoints along the same row – Compute 3D from disparity

• Dense stereo – Try to match all pixels along rows – Compute disparity image by finding the best disparity for each pixel – Refine and clean disparity image – Compute dense 3D point cloud or surface from disparity

[Figure: sparse stereo example with matched keypoints labelled by numeric values]

[Figure: similarity score plotted against disparity (pixels)]

Dense stereo matching

81

• For a patch in the left image
– Compare with patches along the same row in the right image
– Select patch with highest score

• Repeat for all pixels in the left image
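A single-pixel version of this search can be sketched as follows. The similarity score used here is normalized cross-correlation (NCC), which is an assumption; the slides only speak of a "score". Patch size, search range and the synthetic image pair are also made up for the example.

```python
import numpy as np

def ncc(a, b):
    """Zero-mean normalized cross-correlation between two equally sized patches."""
    a, b = a - a.mean(), b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return (a * b).sum() / denom if denom > 0 else 0.0

def match_pixel(left, right, row, col, half=3, max_disp=16):
    """Best disparity for one left-image pixel, searching along the same row."""
    patch = left[row - half:row + half + 1, col - half:col + half + 1]
    scores = []
    for d in range(max_disp + 1):
        c = col - d                       # candidate column in the right image
        if c - half < 0:
            break
        cand = right[row - half:row + half + 1, c - half:c + half + 1]
        scores.append(ncc(patch, cand))
    return int(np.argmax(scores))         # disparity with the highest similarity

# Synthetic rectified pair: the right image is the left image shifted by 5 pixels.
rng = np.random.default_rng(0)
left = rng.random((20, 64))
true_disparity = 5
right = np.roll(left, -true_disparity, axis=1)

d_best = match_pixel(left, right, row=10, col=40)
```

Repeating this for every pixel gives the raw disparity image, which is then refined and cleaned.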


Two-view geometry Epipolar geometry

83

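• Algebraic representation of epipolar geometry
– The essential matrix
– The fundamental matrix

• Estimating the epipolar geometry
– Estimate $F$: 7pt, 8pt, RANSAC
– Estimate $E$: 5pt

[Figure: two cameras $C$ and $C'$ observing the point $\mathbf{X}$; the pixels $\mathbf{u} \leftrightarrow \mathbf{u}'$ are related by $F$, the normalized image points $\mathbf{x} \leftrightarrow \mathbf{x}'$ by $E$, and the two levels are connected by $K$ and $K'$]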

Representations of epipolar geometry

• Observing the same points in two views puts a strong geometrical constraint on the cameras

• Algebraically this epipolar constraint is usually represented by two related 3 × 3 matrices

• The fundamental matrix $F$

$$\tilde{\mathbf{u}}'^{T} F\,\tilde{\mathbf{u}} = 0$$

• The essential matrix $E$

$$\tilde{\mathbf{x}}'^{T} E\,\tilde{\mathbf{x}} = 0$$

• These are related through the two calibration matrices $K$ and $K'$

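The essential matrix E

• Let $\mathbf{x}^{C} \leftrightarrow \mathbf{x}'^{C'}$ be corresponding points in the normalized image planes and let the pose of $C$ relative to $C'$ be

$$\xi^{C'}_{C} = \begin{bmatrix} R & \mathbf{t} \\ \mathbf{0}^{T} & 1 \end{bmatrix}$$

• In terms of vectors, the equation for the epipolar plane can be written as

$$\left(\tilde{\mathbf{x}}'^{C'} \times \mathbf{t}\right) \cdot R\,\tilde{\mathbf{x}}^{C} = 0$$

• Rewritten in terms of matrices this takes the form

$$\left(\tilde{\mathbf{x}}'^{C'}\right)^{T} [\mathbf{t}]_{\times} R\,\tilde{\mathbf{x}}^{C} = 0$$

• This relationship defines the essential matrix $E = [\mathbf{t}]_{\times} R$

$$\tilde{\mathbf{x}}'^{T} E\,\tilde{\mathbf{x}} = 0$$

[Figure: cameras $C$ and $C'$ separated by $\mathbf{t}$, with the corresponding normalized points $\mathbf{x}^{C}$ and $\mathbf{x}'^{C'}$]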

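The fundamental matrix F

• The epipolar constraint on image points is naturally connected to the essential matrix by the calibration matrices $K$ and $K'$

$$\tilde{\mathbf{u}} = K \tilde{\mathbf{x}}_C \;\Rightarrow\; \tilde{\mathbf{x}}_C = K^{-1} \tilde{\mathbf{u}}$$

$$\tilde{\mathbf{u}}' = K' \tilde{\mathbf{x}}'_{C'} \;\Rightarrow\; \tilde{\mathbf{x}}'_{C'} = K'^{-1} \tilde{\mathbf{u}}' \;\Rightarrow\; \tilde{\mathbf{x}}'^{T}_{C'} = \tilde{\mathbf{u}}'^{T} K'^{-T}$$

• Combined with the epipolar constraint for normalized image points we get

$$\tilde{\mathbf{x}}'^{T}_{C'} E\, \tilde{\mathbf{x}}_C = 0 \;\Rightarrow\; \tilde{\mathbf{u}}'^{T} K'^{-T} E K^{-1} \tilde{\mathbf{u}} = 0$$

• This defines the fundamental matrix $F = K'^{-T} E K^{-1}$, so that

$$\tilde{\mathbf{u}}'^{T} F \tilde{\mathbf{u}} = 0$$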
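The relation $F = K'^{-T} E K^{-1}$ can be illustrated with a short sketch. It assumes NumPy; the calibration matrices, the translation and the scene point are hypothetical values chosen only for the check, and `fundamental_from_essential` is an illustrative helper name.

```python
import numpy as np

def fundamental_from_essential(E, K, K_prime):
    """F = K'^{-T} E K^{-1}, mapping pixels in the first image to epipolar lines in the second."""
    return np.linalg.inv(K_prime).T @ E @ np.linalg.inv(K)

# Hypothetical calibration matrices for the two cameras.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
K_prime = np.array([[750.0, 0.0, 300.0],
                    [0.0, 750.0, 250.0],
                    [0.0, 0.0, 1.0]])

# Hypothetical relative pose with R = I, so that E = [t]x R reduces to [t]x.
t = np.array([1.0, 0.0, 0.2])
E = np.array([[0.0, -t[2], t[1]],
              [t[2], 0.0, -t[0]],
              [-t[1], t[0], 0.0]])
F = fundamental_from_essential(E, K, K_prime)

# Project one scene point into both images (homogeneous pixel coordinates).
X_C = np.array([0.5, -0.3, 5.0])
X_Cp = X_C + t
u = K @ (X_C / X_C[2])
u_prime = K_prime @ (X_Cp / X_Cp[2])

# The pixel-level epipolar constraint u'^T F u should vanish up to rounding.
residual = u_prime @ F @ u
print(f"epipolar residual in pixel coordinates: {residual:.2e}")
```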

Two-view geometry Triangulation

• Triangulation
  – Estimate a 3D point $\mathbf{X}_i$ for a noisy 2D correspondence under the assumption that the camera matrices $P$ and $P'$ are known

• Minimal 3D error
  – Choose $\mathbf{X}_i$ to be the mid-point between the rays back-projected from the two image points

• Minimal algebraic error
  – Combine the two perspective models to get a homogeneous system of linear equations, then determine $\mathbf{X}_i$ by SVD

• Minimal reprojection error
  – Determine the epipolar plane (and points $\hat{\mathbf{u}}_i$ and $\hat{\mathbf{u}}'_i$) that minimizes the reprojection error by minimizing a 6th-order polynomial

[Figure: cameras $C$ and $C'$, the triangulated point $\mathbf{X}_i$, the measured points $\mathbf{x}_i$, $\mathbf{x}'_i$ and the corrected points $\hat{\mathbf{x}}_i$, $\hat{\mathbf{x}}'_i$]
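The "minimal algebraic error" variant can be sketched as follows. This is a minimal illustration assuming NumPy and noise-free synthetic data; the cameras, the scene point and the helper names `triangulate_linear` and `project` are assumptions made for the example.

```python
import numpy as np

def triangulate_linear(P, P_prime, u, u_prime):
    """Linear triangulation: stack the two perspective models and solve A X = 0 by SVD."""
    A = np.vstack([
        u[0] * P[2] - P[0],
        u[1] * P[2] - P[1],
        u_prime[0] * P_prime[2] - P_prime[0],
        u_prime[1] * P_prime[2] - P_prime[1],
    ])
    # The right singular vector of the smallest singular value minimizes ||A X||.
    _, _, Vt = np.linalg.svd(A)
    X_h = Vt[-1]
    return X_h[:3] / X_h[3]

def project(P, X):
    """Project a 3D point with the 3x4 camera matrix P to pixel coordinates."""
    u_h = P @ np.append(X, 1.0)
    return u_h[:2] / u_h[2]

# Hypothetical cameras: shared calibration, second camera shifted along x.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
P = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P_prime = K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])])

X_true = np.array([0.5, 0.2, 5.0])
u = project(P, X_true)
u_prime = project(P_prime, X_true)

X_est = triangulate_linear(P, P_prime, u, u_prime)
print("triangulated point:", X_est)
```

With noisy image points the system has no exact solution and the SVD returns the point with the smallest algebraic error, which is generally not the same as the point with the smallest reprojection error.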

Two-view geometry Pose from epipolar geometry

• Pose from epipolar geometry

• Non-planar case
  – Estimate epipolar geometry
  – Estimate relative pose from $E$

• Planar case
  – Estimate homography
  – Estimate relative pose from $H$

• Visual odometry

[Figure: camera sequence cam$_0$, …, cam$_{k-1}$, cam$_k$, cam$_{k+1}$ with the poses $\xi^{0}_{k}$ and $\xi^{k}_{k+1}$]

Pose from epipolar geometry

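• Since we can only estimate $E$ up to scale, we can always rescale it so that the SVD of $E$ has the form

$$E = U D V^T = \begin{bmatrix} \mathbf{u}_1 & \mathbf{u}_2 & \mathbf{u}_3 \end{bmatrix} \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} \mathbf{v}_1^T \\ \mathbf{v}_2^T \\ \mathbf{v}_3^T \end{bmatrix}$$

where $\det U = \det V = 1$

• Then one can show that

$$R \in \{ U W V^T,\; U W^T V^T \}, \qquad \mathbf{t} = \pm\lambda \mathbf{u}_3, \quad \lambda \in \mathbb{R} \setminus \{0\}$$

where

$$W = \begin{bmatrix} 0 & 1 & 0 \\ -1 & 0 & 0 \\ 0 & 0 & 1 \end{bmatrix}$$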
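The decomposition above yields four candidate poses. A minimal sketch, assuming NumPy and a synthetic pose (the function name `decompose_essential` and the test values are illustrative), could look like this. The sign flips on $U$ and $V^T$ rely on $E$ only being known up to scale, and $\lambda$ is fixed to 1 because the length of $\mathbf{t}$ cannot be recovered from $E$.

```python
import numpy as np

W = np.array([[0.0, 1.0, 0.0],
              [-1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0]])

def decompose_essential(E):
    """Return the four (R, t) candidates, with t of unit length."""
    U, _, Vt = np.linalg.svd(E)
    # Enforce det U = det V = 1; negating a factor only changes the sign of E.
    if np.linalg.det(U) < 0:
        U = -U
    if np.linalg.det(Vt) < 0:
        Vt = -Vt
    R_a = U @ W @ Vt
    R_b = U @ W.T @ Vt
    u3 = U[:, 2]
    return [(R_a, u3), (R_a, -u3), (R_b, u3), (R_b, -u3)]

# Synthetic ground truth used only to check the sketch.
c, s = np.cos(0.3), np.sin(0.3)
R_true = np.array([[c, -s, 0.0],
                   [s, c, 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.5, -0.2, 1.0])
t_cross = np.array([[0.0, -t_true[2], t_true[1]],
                    [t_true[2], 0.0, -t_true[0]],
                    [-t_true[1], t_true[0], 0.0]])
E = t_cross @ R_true

candidates = decompose_essential(E)
t_unit = t_true / np.linalg.norm(t_true)
found = any(np.allclose(R, R_true, atol=1e-8) and np.allclose(t, t_unit, atol=1e-8)
            for R, t in candidates)
print("true pose among candidates:", found)
```

In practice the ambiguity is usually resolved by triangulating a few correspondences and keeping the candidate that places the points in front of both cameras.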

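Visual odometry

• Based on what we now know, it is possible to do visual odometry, i.e. to estimate the motion of a single camera from captured images
• A visual odometry algorithm can look like this

Visual odometry from 2D-correspondences
1. Capture new frame img$_{k+1}$
2. Extract and match features between img$_{k+1}$ and img$_k$
3. Estimate the essential matrix $E_{k,k+1}$
4. Decompose $E_{k,k+1}$ into $R^{k}_{k+1}$ and $\mathbf{t}^{k}_{k+1}$ to get the relative pose

$$\xi^{k}_{k+1} = \begin{bmatrix} R^{k}_{k+1} & \mathbf{t}^{k}_{k+1} \\ \mathbf{0} & 1 \end{bmatrix}$$

5. Compute $\|\mathbf{t}^{k}_{k+1}\|$ from $\|\mathbf{t}^{k-1}_{k}\|$ and rescale $\mathbf{t}^{k}_{k+1}$ accordingly
6. Calculate the pose of camera $k+1$ relative to the first camera

$$\xi^{0}_{k+1} = \xi^{0}_{k}\, \xi^{k}_{k+1}$$

• How to compute $\|\mathbf{t}^{k}_{k+1}\|$ from $\|\mathbf{t}^{k-1}_{k}\|$?
  – Determine two scene points $\mathbf{X}^{k}_{k-1,k}$ and $\mathbf{X}'^{k}_{k-1,k}$ by triangulation of two 2D-correspondences $\mathbf{x}_{k-1} \leftrightarrow \mathbf{x}_{k}$ and $\mathbf{x}'_{k-1} \leftrightarrow \mathbf{x}'_{k}$
  – Determine the same two scene points $\mathbf{X}^{k}_{k,k+1}$ and $\mathbf{X}'^{k}_{k,k+1}$ by triangulation of two 2D-correspondences $\mathbf{x}_{k} \leftrightarrow \mathbf{x}_{k+1}$ and $\mathbf{x}'_{k} \leftrightarrow \mathbf{x}'_{k+1}$
  – Then

$$\frac{\|\mathbf{t}^{k-1}_{k}\|}{\|\mathbf{t}^{k}_{k+1}\|} = \frac{\|\mathbf{X}^{k}_{k-1,k} - \mathbf{X}'^{k}_{k-1,k}\|}{\|\mathbf{X}^{k}_{k,k+1} - \mathbf{X}'^{k}_{k,k+1}\|}$$

[Figure: camera sequence cam$_0$, …, cam$_{k-1}$, cam$_k$, cam$_{k+1}$ with the poses $\xi^{0}_{k}$ and $\xi^{k}_{k+1}$]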
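Step 6 of the algorithm, chaining relative poses into a pose relative to the first camera, can be sketched with 4 × 4 homogeneous matrices. The sketch assumes NumPy and that the relative poses have already been estimated and rescaled; the two relative poses and the helper names `pose_matrix` and `rot_z` are made up for illustration.

```python
import numpy as np

def pose_matrix(R, t):
    """Build the 4x4 pose matrix [R t; 0 1]."""
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T

def rot_z(angle):
    """Rotation about the z-axis by the given angle in radians."""
    c, s = np.cos(angle), np.sin(angle)
    return np.array([[c, -s, 0.0],
                     [s, c, 0.0],
                     [0.0, 0.0, 1.0]])

# Hypothetical relative poses of camera k+1 relative to camera k.
relative_poses = [
    pose_matrix(rot_z(0.10), np.array([1.0, 0.0, 0.0])),
    pose_matrix(rot_z(-0.05), np.array([0.9, 0.1, 0.0])),
]

# Accumulate: pose of camera k+1 relative to camera 0 is the running product.
pose_0_k = np.eye(4)
trajectory = [pose_0_k]
for pose_k_next in relative_poses:
    pose_0_k = pose_0_k @ pose_k_next
    trajectory.append(pose_0_k)

for k, pose in enumerate(trajectory):
    print(f"camera {k} position in frame 0: {pose[:3, 3]}")
```

Because every new pose is a product of all earlier estimates, errors in the relative poses accumulate along the trajectory.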


Multiple-view geometry Multiple-view geometry

• Multiple-view geometry
• Correspondences
  – Two-view vs three-view
  – Fundamental matrix vs trifocal tensor

Correspondences

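Three views

• As we just saw, point transfer can be done directly from the epipolar constraints

$$\tilde{\mathbf{u}}_3 = (F_{31}\tilde{\mathbf{u}}_1) \times (F_{32}\tilde{\mathbf{u}}_2)$$

• However, this fails for points in the plane defined by the three camera centers (the trifocal plane), since the epipolar lines will then coincide
• The trifocal tensor allows point transfer also for points in the trifocal plane

[Figure: images img1, img2 and img3 with the points $\mathbf{u}_1$, $\mathbf{u}_2$ and $\mathbf{u}_3$, connected by $F_{31}$, $F_{32}$ and $F_{12}$; the epipolar lines $F_{31}\tilde{\mathbf{u}}_1$ and $F_{32}\tilde{\mathbf{u}}_2$ intersect in $\tilde{\mathbf{u}}_3$]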
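Point transfer by intersecting two epipolar lines can be sketched as follows. The example assumes NumPy, three synthetic cameras with world-to-camera poses $\mathbf{X}_{cam} = R\mathbf{X} + \mathbf{t}$, and a scene point outside the trifocal plane; all values and helper names are hypothetical.

```python
import numpy as np

def skew(t):
    """Cross-product matrix [t]x."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])

def fundamental(K_i, R_i, t_i, K_j, R_j, t_j):
    """F_ji such that u_j^T F_ji u_i = 0, for cameras with X_cam = R X_world + t."""
    R_rel = R_j @ R_i.T
    t_rel = t_j - R_rel @ t_i
    E = skew(t_rel) @ R_rel
    return np.linalg.inv(K_j).T @ E @ np.linalg.inv(K_i)

def project(K, R, t, X):
    """Homogeneous pixel coordinates of X, scaled so the last entry is 1."""
    u_h = K @ (R @ X + t)
    return u_h / u_h[2]

def transfer_point(F_31, F_32, u_1, u_2):
    """Intersect the epipolar lines F_31 u_1 and F_32 u_2 in image 3."""
    u_3 = np.cross(F_31 @ u_1, F_32 @ u_2)
    return u_3 / u_3[2]

# Hypothetical setup: identical calibration, camera centers not on one line.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
I = np.eye(3)
t_1 = np.array([0.0, 0.0, 0.0])
t_2 = np.array([-1.0, 0.0, 0.0])
t_3 = np.array([0.0, -1.0, 0.0])

F_31 = fundamental(K, I, t_1, K, I, t_3)
F_32 = fundamental(K, I, t_2, K, I, t_3)

X = np.array([0.3, -0.4, 6.0])  # not in the plane through the three centers
u_1 = project(K, I, t_1, X)
u_2 = project(K, I, t_2, X)
u_3_true = project(K, I, t_3, X)

u_3_transferred = transfer_point(F_31, F_32, u_1, u_2)
print("transferred:", u_3_transferred[:2], "true:", u_3_true[:2])
```

For a scene point in the trifocal plane the two lines coincide and the cross product degenerates to the zero vector, which is the failure case described above.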

Example Point transfer based on epipolar constraints

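[Figure: three images with the points $\mathbf{u}_1$, $\mathbf{u}_2$ and $\mathbf{u}_3$, connected by $F_{31}$, $F_{32}$ and $F_{12}$, illustrating $\tilde{\mathbf{u}}_3 = (F_{31}\tilde{\mathbf{u}}_1) \times (F_{32}\tilde{\mathbf{u}}_2)$]

• Uncertainty in the feature points transfers to uncertainty in the epipolar lines
• Hence, the reliability of the predicted point depends on the angle between the epipolar lines
• A large angle is good!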

Multiple-view geometry Structure from motion

• Structure from motion
  – Sequential SfM
  – Bundle adjustment

$$\epsilon = \sum_{i=1}^{m} \sum_{j=1}^{n} d(\tilde{\mathbf{u}}_{ij}, P_i \tilde{\mathbf{X}}_j)^2$$

Structure from Motion

Problem: Given $m$ images of $n$ fixed 3D points, estimate the $m$ projection matrices $P_i$ and the $n$ points $\mathbf{X}_j$ from the $m \cdot n$ correspondences $\mathbf{u}_{ij} \leftrightarrow \mathbf{u}_{kj}$

$$\tilde{\mathbf{u}}_{ij} = P_i \tilde{\mathbf{X}}_j$$

• We can solve for structure and motion when $2mn \ge 11m + 3n - 15$
• In the general/uncalibrated case, cameras and points can only be recovered up to a projective ambiguity ($\tilde{\mathbf{u}}_{ij} = P_i Q^{-1} Q \tilde{\mathbf{X}}_j$)
• In the calibrated case, they can be recovered up to a similarity (scale)
  – Known as Euclidean/metric reconstruction
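The bundle adjustment cost and the counting condition can be illustrated with a small sketch. It assumes NumPy, takes $d(\cdot,\cdot)$ to be the Euclidean distance in pixels, and uses made-up cameras and points; the function names are illustrative. It only evaluates the cost and does not perform the optimization itself.

```python
import numpy as np

def project(P, X):
    """Project a 3D point with the 3x4 matrix P to pixel coordinates."""
    u_h = P @ np.append(X, 1.0)
    return u_h[:2] / u_h[2]

def total_reprojection_error(observations, cameras, points):
    """Sum over all cameras i and points j of the squared distance d(u_ij, P_i X_j)^2."""
    error = 0.0
    for i, P in enumerate(cameras):
        for j, X in enumerate(points):
            residual = observations[i][j] - project(P, X)
            error += residual @ residual
    return error

def enough_measurements(m, n):
    """Counting condition 2mn >= 11m + 3n - 15 for m images of n points."""
    return 2 * m * n >= 11 * m + 3 * n - 15

# Hypothetical scene: two cameras and three points.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
cameras = [
    K @ np.hstack([np.eye(3), np.zeros((3, 1))]),
    K @ np.hstack([np.eye(3), np.array([[-1.0], [0.0], [0.0]])]),
]
points = [np.array([0.5, 0.2, 5.0]),
          np.array([-0.4, 0.1, 6.0]),
          np.array([0.0, -0.3, 4.0])]

# Perfect observations give zero cost; shifting one of them by (3, 4) pixels adds 5^2.
observations = [[project(P, X) for X in points] for P in cameras]
perfect_cost = total_reprojection_error(observations, cameras, points)
observations[0][1] = observations[0][1] + np.array([3.0, 4.0])
perturbed_cost = total_reprojection_error(observations, cameras, points)

print(perfect_cost, perturbed_cost)
print(enough_measurements(2, 7), enough_measurements(2, 6))
```

A bundle adjustment solver would minimize this cost over all $P_i$ and $\mathbf{X}_j$, typically with a nonlinear least-squares method.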

Multiple-view geometry Multiple-view stereo

• Multi-view stereo
  – Plane-sweep
  – Volumetric stereo
  – Surface expansion

• Surface reconstruction

Plane sweep

• Sweep planes at different depths

$$\mathbf{u} = K_{ref} \begin{bmatrix} I & \mathbf{0} \end{bmatrix} \mathbf{X}, \qquad \mathbf{u}' = K_k \begin{bmatrix} R_k & \mathbf{t}_k \end{bmatrix} \mathbf{X}$$

[Figure, shown for three consecutive sweep planes $(\mathbf{n}_{m-1}, d_{m-1})$, $(\mathbf{n}_m, d_m)$ and $(\mathbf{n}_{m+1}, d_{m+1})$: the reference camera ($K_{ref}$, $P_{ref}$) and camera $k$ ($K_k$, $P_k$) observe the point $\mathbf{X}$ on the plane; the plane induces the homography $H$ between $\mathbf{u}$ and $\mathbf{u}'$ (normalized points $\mathbf{x}$ and $\mathbf{x}'$)]

Robert Collins, A Space-Sweep Approach to True Multi-Image Matching, CVPR 1996.
D. Gallup, J.-M. Frahm, P. Mordohai, Q. Yang and M. Pollefeys, Real-Time Plane-Sweeping Stereo with Multiple Sweeping Directions, CVPR 2007.
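The homography $H$ induced by a sweep plane can be written out explicitly. The sketch below assumes NumPy and the plane convention $\mathbf{n}_m^T \mathbf{X} + d_m = 0$ in the reference camera frame, which is an assumption of this example (it makes $\mathbf{n}_m = [0\ 0\ {-1}]^T$ correspond to the fronto-parallel plane $Z = d_m$). Under that convention $H = K_k (R_k - \mathbf{t}_k \mathbf{n}_m^T / d_m) K_{ref}^{-1}$. The cameras and the point are made up.

```python
import numpy as np

def plane_homography(K_ref, K_k, R_k, t_k, n, d):
    """Homography from the reference image to image k induced by the plane n^T X + d = 0."""
    return K_k @ (R_k - np.outer(t_k, n) / d) @ np.linalg.inv(K_ref)

# Hypothetical cameras: shared calibration, camera k rotated 5 degrees about y and shifted.
K = np.array([[800.0, 0.0, 320.0],
              [0.0, 800.0, 240.0],
              [0.0, 0.0, 1.0]])
angle = np.deg2rad(5.0)
R_k = np.array([[np.cos(angle), 0.0, np.sin(angle)],
                [0.0, 1.0, 0.0],
                [-np.sin(angle), 0.0, np.cos(angle)]])
t_k = np.array([-0.5, 0.0, 0.05])

# Fronto-parallel sweep plane at depth 4 in the reference frame.
n = np.array([0.0, 0.0, -1.0])
d = 4.0
H = plane_homography(K, K, R_k, t_k, n, d)

# A point on the plane, projected into both images.
X = np.array([0.7, -0.3, 4.0])
u = K @ X
u = u / u[2]
u_prime = K @ (R_k @ X + t_k)
u_prime = u_prime / u_prime[2]

# Mapping the reference pixel through H should reproduce the pixel in image k.
u_mapped = H @ u
u_mapped = u_mapped / u_mapped[2]
print("mapped:", u_mapped[:2], "projected:", u_prime[:2])
```

In a plane sweep, image $k$ is warped to the reference view with the inverse of this mapping for each plane, and the plane with the best photometric agreement at a pixel gives the depth hypothesis for that pixel.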

Plane sweep and ambiguities

• Multiple views can resolve ambiguities in difficult areas!


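Plane sweep through oriented planes

• Fronto-parallel

$$\mathbf{n}_m = \begin{bmatrix} 0 & 0 & -1 \end{bmatrix}^T, \qquad Z_m(u, v) = d_m$$

• Other plane orientations

$$Z_m(u, v) = \frac{-d_m}{\begin{bmatrix} u & v & 1 \end{bmatrix} K_{ref}^{-T}\, \mathbf{n}_m}$$

[Figure: the reference camera ($K_{ref}$, $P_{ref}$) and camera $k$ ($K_k$, $P_k$) observe the point $\mathbf{X}$ on the plane $(\mathbf{n}_m, d_m)$, with $\mathbf{u} = K_{ref} [I \mid \mathbf{0}] \mathbf{X}$ and $\mathbf{u}' = K_k [R_k \mid \mathbf{t}_k] \mathbf{X}$ related by the homography $H$]

Robert Collins, A Space-Sweep Approach to True Multi-Image Matching, CVPR 1996.
D. Gallup, J.-M. Frahm, P. Mordohai, Q. Yang and M. Pollefeys, Real-Time Plane-Sweeping Stereo with Multiple Sweeping Directions, CVPR 2007.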
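The per-pixel depth of a sweep plane, $Z_m(u, v) = -d_m / ([u\ v\ 1] K_{ref}^{-T} \mathbf{n}_m)$, can be evaluated directly. This is a minimal sketch assuming NumPy; the calibration matrix, the tilted normal and the pixel are hypothetical, and `plane_depth` is an illustrative name.

```python
import numpy as np

def plane_depth(u, v, K_ref, n, d):
    """Depth Z_m(u, v) of the plane (n, d) along the ray through pixel (u, v)."""
    ray = np.linalg.inv(K_ref) @ np.array([u, v, 1.0])
    # [u v 1] K^{-T} n is the same scalar as n^T K^{-1} [u v 1]^T.
    return -d / (n @ ray)

K_ref = np.array([[800.0, 0.0, 320.0],
                  [0.0, 800.0, 240.0],
                  [0.0, 0.0, 1.0]])

# Fronto-parallel plane: the depth equals d_m at every pixel.
n_fronto = np.array([0.0, 0.0, -1.0])
depth_fronto = plane_depth(100.0, 50.0, K_ref, n_fronto, 10.0)

# Hypothetical tilted plane (unit normal rotated 30 degrees about the x-axis).
tilt = np.deg2rad(30.0)
n_tilted = np.array([0.0, -np.sin(tilt), -np.cos(tilt)])
d_tilted = 200.0
u_pix, v_pix = 320.0, 400.0
depth_tilted = plane_depth(u_pix, v_pix, K_ref, n_tilted, d_tilted)

# Back-project the pixel to that depth; the 3D point should lie on the plane.
X = depth_tilted * (np.linalg.inv(K_ref) @ np.array([u_pix, v_pix, 1.0]))
plane_residual = n_tilted @ X + d_tilted
print(depth_fronto, depth_tilted, plane_residual)
```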

Plane sweep with ground normal

[Figures: result images with a Red, Green and Blue legend for four sweep planes]

• $d_m$ = 200 meter below reference camera: $Z_m$ = 790 meter
• $d_m$ = 261 meter below reference camera: $Z_m$ = 800 meter
• $d_m$ = 298 meter below reference camera: $Z_m$ = 811 meter and $Z_m$ = 645 meter
• $d_m$ = 471 meter below reference camera: $Z_m$ = 2169 meter and $Z_m$ = 1967 meter


Image analysis

Image segmentation:
• Thresholding techniques
• Clustering methods for segmentation
• Morphological operations

Segmentation methods

• Active contours (Snakes, Scissors, Level Sets)
• Split and merge (Watershed, Divisive & agglomerative clustering, Graph-based segmentation)
• Gray level thresholding
• K-means (parametric clustering)
• Mean shift (non-parametric clustering)
• Normalized cuts
• Graph cuts
• Supervised color based segmentation (region growing)

Image analysis

Image feature extraction:
• Feature extraction
• Feature selection

Feature extraction

The goal is to generate features that exhibit high information-packing properties:
• Extract the information from the raw data that is most relevant for discrimination between the classes
• Extract features with low within-class variability and high between-class variability
• Discard redundant information
• The information in an image f[i,j] must be reduced to enable reliable classification (generalization)
• A 64 × 64 image gives a 4096-dimensional feature space!

Feature types (regional features)

• Colour features
• Shape features
• Histogram (texture) features:
  – Mean gray level
  – Variance
  – Skewness
  – Kurtosis
  – Entropy
  – …
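The histogram features listed above can be computed from the normalized gray level histogram of a region. The sketch below assumes NumPy, integer gray levels below `levels`, and uses standardized moments for skewness and kurtosis, which is one common definition and not necessarily the one used in the lecture. The function name `histogram_features` is illustrative.

```python
import numpy as np

def histogram_features(region, levels=256):
    """First-order histogram features of an integer-valued gray level region."""
    values = np.asarray(region).ravel()
    hist = np.bincount(values, minlength=levels).astype(float)
    p = hist / hist.sum()                      # normalized histogram
    g = np.arange(len(p))                      # gray levels
    mean = np.sum(g * p)
    variance = np.sum((g - mean) ** 2 * p)
    std = np.sqrt(variance)
    # Standardized third and fourth moments; defined as 0 for a constant region.
    skewness = np.sum((g - mean) ** 3 * p) / std ** 3 if std > 0 else 0.0
    kurtosis = np.sum((g - mean) ** 4 * p) / std ** 4 if std > 0 else 0.0
    nonzero = p[p > 0]
    entropy = -np.sum(nonzero * np.log2(nonzero))  # in bits
    return {"mean": mean, "variance": variance, "skewness": skewness,
            "kurtosis": kurtosis, "entropy": entropy}

# Tiny example region: half the pixels have gray level 0, half have gray level 2.
region = np.array([[0, 0],
                   [2, 2]], dtype=np.uint8)
features = histogram_features(region)
print(features)
```

This reduces a region of any size to a five-dimensional feature vector, in line with the goal of compacting the information before classification.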

Image analysis

Introduction to machine learning:
• Recognition of individuals (instance recognition)
• Discrimination between classes (pattern recognition, classification)

Supervised learning

Classifiers and training methods

• Bayes classifier
• Nearest-neighbors and K-nearest-neighbors
• Parzen windows
• Linear and higher order discriminant functions
• Neural nets
• Support Vector Machines (SVM)
• Decision trees
• Random forest
• …
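As an illustration of one of the listed classifiers, a K-nearest-neighbors classifier can be sketched in a few lines. The sketch assumes NumPy, Euclidean distance and majority voting; the training data is made up, and ties are broken in favor of the smallest label, which is a choice of this example.

```python
import numpy as np

def knn_classify(train_features, train_labels, query, k=3):
    """Classify a query feature vector by majority vote among its k nearest training samples."""
    distances = np.linalg.norm(train_features - query, axis=1)
    nearest = np.argsort(distances)[:k]
    labels, counts = np.unique(train_labels[nearest], return_counts=True)
    return labels[np.argmax(counts)]

# Hypothetical 2D training features for two classes.
train_features = np.array([[0.0, 0.0], [0.5, 0.2], [0.1, 0.6],
                           [5.0, 5.0], [5.3, 4.8], [4.7, 5.4]])
train_labels = np.array([0, 0, 0, 1, 1, 1])

label_a = knn_classify(train_features, train_labels, np.array([0.2, 0.1]))
label_b = knn_classify(train_features, train_labels, np.array([4.8, 5.1]))
print(label_a, label_b)
```

The classifier has no training phase beyond storing the labeled samples, so its cost is paid at query time when the distances are computed.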


Object detection

Descriptor-based detection:
• Feature descriptors (HoG, SIFT, SURF, …)
• Object detection
• Instance recognition

Applications:
• Pedestrian detection
• Face detection
• Face recognition

Object detection

Introduction to deep learning:
• Deep learning
• Artificial neural networks
• Convolutional neural networks (CNN)

Deep Learning for Object Recognition

• Millions of images
• Millions of parameters
• Thousands of classes

[Figure: image labeled «Ship» (AlexNet)]