Gradient descent

-

Upload

prashant-mudgal -

Category

Data & Analytics

-

view

399 -

download

0

Transcript of Gradient descent

Gradient Descent

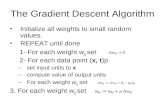

The purpose behind this document is to understand what the methods of gradient descent and Newton’s method are that are used exclusively in the field of optimization and operations research. Gradient descent is used for minimization of the most function and for maximization gradient ascent is used. Conjugate gradient is also one algorithm that does the same work as gradient descent/ascent does, the only difference is that conjugate gradient does the work in fewer number of iterations and takes the next step in the direction of orthogonal vectors[1]. The cost function is the function that we want to minimize J(θ0, θ1) Start with a value of θ0 and θ1 and evaluate the function J e.g. we can use the starting value of 0,0. The idea behind the gradient descent is that one has to take steps towards the steepest descent. Imagine you are standing at a point on the curve in 3-D, look all around you in 360° and decide what direction the steepest descent is. Depending on the various starting point, one can reach various local minima and this is one caveat that gradient descent has. To write down in mathematical equations,

θj = θj - 𝛂 ∂/ ∂ θj ( J(θ0 , θ1)), for j = 0,1

and 𝛂 is the learning rate/step size in the direction of the steepest descent.

One thing to remember is that one has to make the updates in θ0 and θ1

simultaneously i.e. temp0 = θ0 - 𝛂 ∂/ ∂ θ0 ( J(θ0 , θ1))

temp1 = θ1 - 𝛂 ∂/ ∂ θ1 ( J(θ0 , θ1))

θ0 = temp0

θ1 = temp1

To illustrate how gradient descent works, I am attaching pages from my notebook.

!2

I have taken a cost function and iterating to reach the minima by successively computing the values of x and y. The work done here is self explanatory, one requires understanding of basic calculus. We started with (2,3) as the starting points for x and y and calculated the value of partial derivatives at these points which came out to be 4,4 in first iteration. Let’s assume we have to take t steps in the direction of decreasing gradient, t is the hessian matrix. So, our next point will be (2+ 4t,3+4t). We calculate derivative of f(x,y) at the new points, at minima the first derivative should be zero, gʹ(t) = 0 , where gʹ(t) is the first derivative w.r.t. t.

!3

The caveat of the method is that if the step size is small then the number of iterations are high.

!4

The method of Steepest Descent pursues completely independent search directions from one iteration to the next. However, in some cases this causes the method to “zig-zag” from the initial iterate x0 to the minimizer x∗.

Newton’s Method

One question that comes to mind is that how the method is different from the Newton’s method of approximation that assumes quite similar form.

Gradient descent tries to find such a minimum x by using information from the first derivative of f: It simply follows the steepest descent from the current point. Also, the final result depends on the coordinates chosen. Newton’s method is for finding the roots and it doesn’t depends on the initial points chosen as the approximation. Also, it is a 2nd order method that means it not only uses the first derivative but also uses the Hessian matrix in multi variable case. I got interested in knowing whether we can use Newton’s method for optimization or not and it seems that we can do so. xt+1 = xt - g(t) / gʹ(t) ; where xt is the initial approximation and xt+1 is the next approximation found in the successive iteration. For using the Newton’s method for optimization we will use the equation: xt+1 = xt - gʹ(t) / gʹʹ(t), where gʹʹ(t) is the second order derivative. First derivative is used to find the points on a curve where the slope is zero, while second derivative is used to find whether the point is a maxima or a minima. Second Derivative: less than 0 is a maximum, greater than 0 is a minimum The advantage of Newton’s method over gradient descent is that it takes fewer number of iterations to reach the point of minima/maxima in case hessian calculation isn’t a major challenge.

!5

![Stochastic Gradient Descent Tricks - bottou.org2.1 Gradient descent It has often been proposed (e.g., [18]) to minimize the empirical risk E n(f w) using gradient descent (GD). Each](https://static.fdocuments.in/doc/165x107/60bec0701f04811115495619/stochastic-gradient-descent-tricks-21-gradient-descent-it-has-often-been-proposed.jpg)