A&A 638, A134 (2020) https://doi.org/10.1051/0004-6361/202037697 © ESO 2020

Astronomy & Astrophysics

Galaxy classification: deep learning on the OTELO and COSMOS databases

José A. de Diego1,2, Jakub Nadolny2,3, Ángel Bongiovanni4,5, Jordi Cepa2,5,3, Mirjana Povic6,7, Ana María Pérez García5,8, Carmen P. Padilla Torres2,3,9, Maritza A. Lara-López10, Miguel Cerviño8, Ricardo Pérez Martínez5,11,

Emilio J. Alfaro7, Héctor O. Castañeda12, Miriam Fernández-Lorenzo7, Jesús Gallego13, J. Jesús González1, J. Ignacio González-Serrano14,5, Irene Pintos-Castro15, Miguel Sánchez-Portal4,5, Bernabé Cedrés2,3,

Mauro González-Otero2,3, D. Heath Jones16, and Joss Bland-Hawthorn17

(Affiliations can be found after the references)

Received 10 February 2020 / Accepted 25 April 2020

ABSTRACT

Context. The accurate classification of hundreds of thousands of galaxies observed in modern deep surveys is imperative if we want to understand the universe and its evolution.

Aims. Here, we report the use of machine learning techniques to classify early- and late-type galaxies in the OTELO and COSMOS databases using optical and infrared photometry and available shape parameters: either the Sérsic index or the concentration index.

Methods. We used three classification methods for the OTELO database: (1) u − r color separation, (2) linear discriminant analysis using u − r and a shape parameter classification, and (3) a deep neural network using the r magnitude, several colors, and a shape parameter. We analyzed the performance of each method by sample bootstrapping and tested the performance of our neural network architecture using COSMOS data.

Results. The accuracy achieved by the deep neural network is greater than that of the other classification methods, and it can also operate with missing data. Our neural network architecture is able to classify both OTELO and COSMOS datasets regardless of small differences in the photometric bands used in each catalog.

Conclusions. In this study we show that the use of deep neural networks is a robust method to mine the cataloged data.

Key words. galaxies: general – methods: statistical

1. Introduction

Galaxy morphological classification plays a fundamental role in descriptions of the galaxy population in the universe, and in our understanding of galaxy formation and evolution. Galaxy morphology is related to key physical, evolutionary, and environmental properties, such as system dynamics (Djorgovski & Davis 1987; Gerhard et al. 2001; Debattista et al. 2006; Falcón-Barroso et al. 2019; Romanowsky & Fall 2012), the stellar formation history (Kennicutt 1998; Bruzual & Charlot 2003; Kauffmann et al. 2003; Lovell et al. 2019), gas and dust content (e.g., Lianou et al. 2019), galaxy age (Bernardi et al. 2010), and interaction and merging events (e.g., Romanowsky & Fall 2012). Early galaxy classification strategies were based on the visual aspect of the objects, differentiating among spiral, elliptical, lenticular, and irregular galaxy types according to their resolved morphology. Examples of these strategies are the original classification schemes by Hubble (1926) and De Vaucouleurs (1959). This methodology has reached historic marks during the last decade through the citizen science initiative known as Galaxy Zoo. It stands out for being the largest effort made to visually classify more than 900 000 galaxies from the Sloan Digital Sky Survey (SDSS; Fukugita et al. 1996) brighter than rSDSS = 17.7 with proven reliability (Lintott et al. 2011). After this milestone, this crowd-sourced astronomy project also included the analysis of datasets from the Kilo-Degree Survey (KiDS) imaging data in the Galaxy and Mass Assembly (GAMA) fields, classifying typical edge-on galaxies at z < 0.15 (Holwerda et al. 2019), and the quantitative visual classification of approximately 48 000 galaxies up to z ∼ 3 in three Hubble Space Telescope (HST) fields of the Cosmic Assembly Near-infrared Deep Extragalactic Legacy Survey (CANDELS; Simmons et al. 2017). Using the visual classification approach, the morphology and size of luminous, massive galaxies at 0.3 < z < 0.7 targeted by the Baryon Oscillation Spectroscopic Survey (BOSS; Dawson et al. 2013) of SDSS-III were also determined (Masters et al. 2011) using HST and Cosmic Evolution Survey¹ (COSMOS; Scoville et al. 2007) data.

However, the availability of larger telescopes and sophisticated instruments has made visual classification unfeasible because most galaxies are barely resolved, making identification of their morphological type very difficult, and the number of discovered galaxies has increased dramatically since the introduction of digital surveys dedicated to probing larger and deeper volumes in the universe. This issue will be even more critical in the near future when the next generation of large surveys such as the Large Synoptic Survey Telescope (Tyson 2002) or the Euclid mission (Laureijs et al. 2011) produce petabytes of information and trigger the need for time-domain astronomy (Hložek 2019), far exceeding the capacity of available human resources to manage this information. For this reason, the automated classification of galaxies has become an intense area of research in modern astronomy.

Previous research into automated galaxy-classification algorithms has focused on colors, shape, and morphological parameters related to galaxy light distribution, such as concentration and asymmetry (e.g., Abraham et al. 1994; Bershady et al. 2000; Conselice 2003, 2006; Povic et al. 2009, 2013, 2015; Deng 2013). Joint automated and visual classification procedures have been implemented in extragalactic surveys such as COSMOS (Cassata et al. 2007; Zamojski et al. 2007) and GAMA (Alpaslan et al. 2015). Another approach involves the fitting of spectral energy distributions (SEDs) using galaxy templates (Ilbert et al. 2009). In a complementary fashion, Strateva et al. (2001) investigated the dichotomous classification in early- and late-type (ET and LT) galaxies. For these authors, the ET group includes the E, S0, and Sa morphological types, while the LT group comprises Sb, Sc, and Irr galaxies. Furthermore, using the well-known tendency of the LT to be bluer than the ET galaxies, Strateva et al. (2001) propose the u − r color to separate between these galaxy types. Sérsic and concentration indexes have also been used, alone or in combination with the u − r color, to separate ET from LT galaxies (e.g., Conselice 2003; Kelvin et al. 2012; Deng 2013; Vika et al. 2015). A far more complicated and expensive classification, in terms of computational and observational resources, consists in fitting a set of either empirical or modeled SED templates to the galaxy continuum (e.g., Coleman et al. 1980; Kinney et al. 1996). Currently, there are some public codes that are able to perform such template-based classifications (e.g., LePhare: Arnouts et al. 1999; Ilbert et al. 2006).

¹ http://cosmos.astro.caltech.edu

Article published by EDP Sciences

Classification can be addressed in machine learning through supervised learning techniques, which consist in training a function that maps inputs to outputs learning from input–output pairs, and using this function to assign new observations in two or more predefined categories. Supervised learning techniques include decision trees (Barchi et al. 2020), random forests (Miller et al. 2017), linear discriminant analysis (LDA; Murtagh & Heck 1987), support vector machines (Huertas-Company et al. 2008), Bayesian classifiers (Henrion et al. 2011), and neural networks (Ball et al. 2004), among others.

Machine learning algorithms are increasingly used for classification in large astronomical databases (e.g., Abolfathi et al. 2018). In particular, LDA is a common classifying method used in statistics, pattern recognition, and machine learning. Linear discriminant analysis classifiers attempt to find linear boundaries that best separate the data. Recently, LDA has been used for galaxy classification in spiral and elliptical morphological types (Ferrari et al. 2015), classification of Hickson’s compact groups of galaxies (Abdel-Rahman & Mohammed 2019), and galaxy merger identification (Nevin et al. 2019).

In recent years, neural networks have become very popular in different research areas because of their ability to perform outstandingly accurate classifications, and regression and series analyses. A typical neural network is made up of a number of hidden layers, each with a certain quantity of neurons that perform tensor operations. There are several network types which are oriented to solve different issues (a brief explanation of different networks can be found in Baron 2019). Also, Busca & Balland (2018) used a one-dimensional convolutional neural network (CNN) for classification and redshift estimates of quasar spectra extracted from the BOSS. Much of the recent research has focused on two-dimensional CNN classification of galaxy images (e.g., Serra-Ricart et al. 1996; Huertas-Company et al. 2015; Dieleman et al. 2015; Domínguez Sánchez et al. 2018; Pérez-Carrasco et al. 2019; Walmsley et al. 2020).

In the future, neural networks will probably gain more importance and become the primary technique for classification of astronomical images. However, there are two drawbacks that limit the use of CNNs in astronomical research at present. The first is the network bandwidth, which prevents the download of large amounts of heavy images obtained in remote observatories. The second drawback is the computational and hardware resources needed to train a two-dimensional CNN with tens of thousands of images.

Dense (or fully connected) neural networks (DNN) are used to solve general classification problems applied to tabulated data. In astronomy, DNNs have been applied to morphological type classification in low-redshift galaxies. Thus, Storrie-Lombardi et al. (1992) designed a simple DNN architecture for morphological classification of 5217 galaxies drawn from the ESO-LV catalog (Lauberts & Valentijn 1989) using 13 parameters (most of them geometrical) in five different classes, obtaining an accuracy of 56%. Naim et al. (1995) used the same architecture for 830 bright galaxies (B ≤ 17) and 24 parameters, reducing the parameter space dimension through principal components analysis. Serra-Ricart et al. (1993) used DNN autoencoders for unsupervised classification of galaxies into three major classes: Sa+Sb, Sc+Sd, and S0+E. Sreejith et al. (2018) applied a DNN to a sample of 7528 galaxies at redshifts z < 0.06 extracted from the Galaxy And Mass Assembly survey (GAMA²), achieving an accuracy of 89.8% for spheroid- versus disk-dominated classification.

These earlier works showed that DNNs are capable of performing accurate classification tasks on processed data such as photometry, colors, and shape parameters of low-redshift galaxies (Storrie-Lombardi et al. 1992; Naim et al. 1995; Ball et al. 2004). However, compared with image-oriented CNNs, little attention has been paid recently to the use of DNNs for galaxy classification, even if these networks do not require the large quantity of resources used by the CNN. Moreover, both neural network software development (e.g., Tensorflow, Abadi et al. 2016) and hardware computation power (both central and graphics processing units) have increased dramatically, boosting the capabilities of DNN applications.

In this paper we extend the use of DNNs to the morphological classification of galaxies up to redshifts z ≤ 2. We compare the performance of different galaxy classification techniques applied to a sample of galaxies extracted from the photometric OTELO database (Bongiovanni et al. 2019) with a fitted Sérsic profile (Nadolny et al. 2020). These techniques are (1) the Strateva et al. (2001) u − r color algorithm; (2) the LDA machine learning algorithm, which includes both the u − r color and a shape parameter, either the Sérsic index or the concentration index (Kelvin et al. 2012); and (3) a DNN that uses optical and near-infrared photometry, and shape parameter for objects available in both OTELO and COSMOS catalogs. We find that a simple, easily trainable DNN yields a highly accurate classification for ET and LT OTELO galaxies. Moreover, we apply our DNN architecture to a set of tabulated COSMOS data with some differences in the photometric bands measured with respect to OTELO, and find that our architecture also performs accurate classification of COSMOS galaxies. Finally, we use the same DNN architecture but substituting the Sérsic index with the concentration index (Shimasaku et al. 2001) for both OTELO and COSMOS datasets.

This paper is organized as follows. Section 2 describes the different techniques used to classify galaxies. In Sect. 3 we show the results and compare the different techniques. Finally, in Sect. 4 we present our conclusions.

2. Methodology

The current investigation involves the automatic classification of galaxies into two dichotomous groups, namely ET and LT galaxies, using both photometric measurements and a factor that depends on the shape of the galaxy’s light distribution. Machine learning algorithms for automatic classification parse data and learn how to assign subjects to different classes. These algorithms require both training and test datasets that consist of labeled data. The training dataset is used to fit the model parameters, and the test dataset to provide an unbiased assessment of the model performance. If the algorithm requires tuning the model hyperparameters, such as the number of layers and hidden units in a DNN architecture, a third labeled dataset called the validation dataset is required to evaluate different model trials (the test dataset must be evaluated only by the final model). Once the final model architecture is attained, it is trained joining both the training and the validation dataset, and then evaluated using the test dataset.

² http://www.gama-survey.org
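The training, validation, and test workflow described above can be sketched as follows. This is a minimal illustration, not the procedure used in the paper: the function name `three_way_split`, the 70:15:15 proportions, and the sample size of 1000 are assumptions chosen only for the example.

```python
import random

def three_way_split(n_items, frac_val=0.15, frac_test=0.15, seed=0):
    """Shuffle indices 0..n_items-1 and split them into train/validation/test.

    The fractions are illustrative assumptions, not values from the paper.
    """
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    n_test = round(n_items * frac_test)
    n_val = round(n_items * frac_val)
    test = indices[:n_test]
    val = indices[n_test:n_test + n_val]
    train = indices[n_test + n_val:]
    return train, val, test

train, val, test = three_way_split(1000)

# Candidate hyperparameters are compared on `val` only. Once the
# architecture is fixed, the model is refit on train + val and then
# scored a single time on `test`.
final_training = train + val
```

Keeping the test indices untouched until the last step is what makes the final score an unbiased estimate of the model performance.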

In this section we present our samples of galaxies extracted from OTELO and COSMOS. We use the observed photometry and colors, that is, neither k nor extinction corrections were performed. In order to maximize the sample size while keeping a well-sampled set in redshift, data have been limited in photometric redshift (zphot ≤ 2) but not in flux, thus no cosmological inferences can be performed from our sample. However, at the end of Sect. 3 we present a brief analysis of the results obtained for flux-limited samples. We describe the photometry and the shape factors of these data. We then present the implementation of the different classification methodologies used: the u − r color, LDA, and DNN. Finally, we present the bootstrap procedure that we use to compare the results obtained with these methodologies.

2.1. OTELO samples

OTELO is a very deep blind survey performed with the red tunable filter OSIRIS instrument of the 10.4 m Gran Telescopio Canarias (Bongiovanni et al. 2019). OTELO data consist of images obtained in 36 adjacent narrow bands (FWHM 12 Å) covering a window of 230 Å around λ = 9175 Å. The catalog includes ancillary data ranging from X-rays to far infrared. Point spread function-model photometry and library templates were used for separating stars, AGNs, and galaxies.

The OTELO catalog comprises 11 237 galaxies. Nadolny et al. (2020) matched OTELO with the output from GALAPAGOS2 (Häussler et al. 2007; Häußler et al. 2013) over high-resolution HST images. Not all the OTELO galaxies were detected by GALAPAGOS2, which returned a total of 8812 sources. Nadolny et al. (2020) account for automated detection of multiple matches produced by more than one source that lay inside the OTELO’s Kron radius in a high-resolution F814W band image. These latter authors attribute these multiple matches to close companions (gravitationally bound or in projection), mergers, or resolved parts of the host galaxy. In any case, sources with multiple matches were excluded from our analysis because they could affect low-resolution photometry. Finally, we included further constraints to extract our OTELO samples (see below).

2.1.1. Sérsic index and photometry sample

OTELO uses LePhare templates to fit the SED of galaxies to obtain photometric morphological type classification and redshift estimates. We used this morphological classification to assign the galaxies to ET and LT classes. The best model fitting is recorded under the MOD_BEST_deepN numerically coded entries in the OTELO catalog (Bongiovanni et al. 2019). The ET class includes galaxies coded as “1” in the OTELO catalog, which were best fitted by the E/S0 template from Coleman et al. (1980). The LT class comprises OTELO galaxies coded from “2” to “10”, which were best fitted by different late-type galaxy templates, namely Sbc, Scd, and Irr (Coleman et al. 1980), and starburst-class templates from SB1 to SB6 (Kinney et al. 1996). Bongiovanni et al. (2019) estimate that the fraction of inaccurate SED fittings for the galaxies contained in the OTELO catalog may amount to up to ∼4%. Therefore, our results may be affected if there are ET galaxies miscoded differently from “1” in OTELO, or any of the LT galaxies miscoded as “1”. This could affect, for example, early-type spirals such as Sa galaxies, which are not explicitly included in the OTELO template set. However, the UV SED for ellipticals and S0 galaxies is completely different from Sa and other LT galaxies. The OTELO catalog also includes GALEX-UV data that allow us to identify ET galaxies even in the local universe. Thus, we conclude that recoding the OTELO classification in our galaxy sample as ET and LT classes yields a negligible number of misclassified objects (certainly much less than the OTELO fraction of ∼4%) and does not affect our results.
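The recoding of the best-template codes into the two classes can be illustrated with a short sketch. The function name `recode_template` is hypothetical, and the example codes are made up; only the mapping itself (code 1 to ET, codes 2 to 10 to LT) comes from the text.

```python
def recode_template(mod_best):
    """Map an OTELO best-template code (1..10) to the 'ET' or 'LT' class.

    Code 1 is the E/S0 template; codes 2..10 are the late-type and
    starburst templates. Any other value is treated as an error here,
    which is an assumption of this sketch.
    """
    if mod_best == 1:
        return "ET"
    if 2 <= mod_best <= 10:
        return "LT"
    raise ValueError(f"unexpected template code: {mod_best}")

# Illustrative codes only, not catalog entries.
labels = [recode_template(code) for code in (1, 2, 7, 10)]
```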

The Sérsic profile is a parametric relationship that expresses the intensity of a galaxy as a function of the distance from its center:

$$I(R) = I_e \, e^{-b\left[\left(R/R_e\right)^{1/n} - 1\right]}, \tag{1}$$

where I is the intensity at a distance R from the galaxy center, Re is the half-light radius, Ie is the intensity at radius Re, b (∼ 2n − 1/3) is a scale factor, and n is the Sérsic index. Sérsic profiles have been employed for galaxy classification (e.g., Kelvin et al. 2012; Vika et al. 2015). This index provides a geometrical description of the galaxy concentration; for a Sérsic index n = 4 we obtain the de Vaucouleurs profile typical of elliptical galaxies, while setting n = 1 gives the exponential profile describing spiral galaxies.
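As a numerical illustration of Eq. (1), the following sketch evaluates the Sérsic profile using the approximation b ≈ 2n − 1/3 quoted above. The function name `sersic_intensity` and the sample radii are assumptions made for the example; the fitting codes cited in the text are not reproduced here.

```python
import math

def sersic_intensity(r, r_e, i_e, n):
    """Sérsic intensity at radius r, with the approximation b = 2n - 1/3."""
    b = 2.0 * n - 1.0 / 3.0
    return i_e * math.exp(-b * ((r / r_e) ** (1.0 / n) - 1.0))

# At r = r_e the exponent vanishes, so the profile returns i_e for any n.
at_half_light = sersic_intensity(1.0, 1.0, 10.0, 4.0)

# Compare an exponential disk (n = 1) with a de Vaucouleurs profile (n = 4)
# at twice the half-light radius, for the same i_e.
disk = sersic_intensity(2.0, 1.0, 10.0, 1.0)
spheroid = sersic_intensity(2.0, 1.0, 10.0, 4.0)
```

Beyond the half-light radius the n = 4 profile falls off more slowly than the n = 1 profile, which is why a high Sérsic index is associated with extended, centrally concentrated light distributions.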

Our OTELO Sérsic index and photometry (OTELO SP) sample consists of 1834 galaxies at redshifts z ≤ 2 extracted from the OTELO catalog (listed under the Z_BEST_deepN code). The sample includes ugriz optical photometry from the Canada-France-Hawaii Telescope Legacy Survey³ (CFHTLS), JHKs near-infrared photometry from the WIRcam Deep Survey⁴ (WIRDS), and Sérsic index estimates obtained using GALAPAGOS2/GALFIT (Häußler et al. 2013; Peng et al. 2002, 2010) on the HST-ACS publicly available data in the F814W band. The sample comprises only galaxies with Sérsic indexes between n = 0.22 and n = 7.9; Sérsic indexes out of this range are not reliable because of an artificial limit imposed by the Sérsic-profile-fitting algorithm in GALAPAGOS2 (Häussler et al. 2007). Besides, the sample does not include galaxies with Sérsic index values less than three times their estimate errors. For a detailed description of the Sérsic-profile-fitting process we refer to Nadolny et al. (2020).
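The selection cuts listed above can be written as a single predicate. This is a sketch under stated assumptions: the function name `in_sp_sample` and its argument names are hypothetical, and treating the range limits as inclusive is an assumption, since the text does not specify it.

```python
def in_sp_sample(z_phot, n_sersic, n_err):
    """Return True if a galaxy passes the SP-sample cuts described in the text.

    Cuts: photometric redshift z <= 2, Sérsic index between 0.22 and 7.9
    (inclusive limits assumed), and index at least 3 times its error.
    """
    return (z_phot <= 2.0
            and 0.22 <= n_sersic <= 7.9
            and n_sersic >= 3.0 * n_err)

# Illustrative values only, not catalog entries.
kept = in_sp_sample(0.8, 1.2, 0.1)       # passes all three cuts
too_noisy = in_sp_sample(0.8, 1.2, 0.5)  # index below 3x its error
at_limit = in_sp_sample(0.8, 8.0, 0.1)   # beyond the fitting-algorithm limit
```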

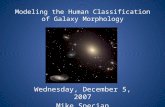

Fig. 1. Comparative distribution of brightnesses in the r band and photometric redshifts for the 1834 galaxies in the OTELO SP sample. Bottom left panel: r magnitude vs. photometric redshift zphot plot shows the galaxies in the sample, differentiating between LT galaxies (black circles) and ET galaxies (red squares). Top panel: SP sample photometric redshift distribution. Right panel: SP sample r magnitude distribution.

Figure 1 shows the sample distributions of magnitudes in the r band and photometric redshifts extracted from the OTELO catalog. We note that the sample is not limited in flux, and therefore it is not a complete sample in the volume defined by the redshift limit zphot ≤ 2 (see the discussion about magnitude-limited samples below). The redshift distribution presents concentrations at redshifts 0.04, 0.11, 0.34, 0.90, and 1.72 superimposed onto a bell-like distribution with a maximum around zphot ≈ 0.8 and a strong decay from zphot ≈ 1.3. The photometric data are incomplete, which affects the available number of galaxies for those classification procedures that cannot effectively manage missing data.

³ http://www.cfht.hawaii.edu/Science/CFHTLS/

⁴ https://www.cadc-ccda.hia-iha.nrc-cnrc.gc.ca/en/cfht/wirds.html

The sample is randomly divided into a training set (70% of the available galaxies) used for the algorithm training, and a test set (30%) used to yield an unbiased estimate of the efficiency of the model. Choosing the proportions of training and test sample sizes depends on a balance between the model performance and the variance in the estimates of the statistical parameters (in our case the accuracy, True ET Rate and True LT Rate, as explained below). Rule-of-thumb proportions often used in machine learning are 90:10 (i.e., 90% training, 10% testing), 80:20 (inspired by the Pareto principle), and 70:30 (our choice). In our case, the 70:30 proportion is justified because it fulfills the large-enough-sample condition (another rule of thumb) that the sample size must be at least 30 to ensure that the conditions of the central limit theorem are met. Thus, the number of expected ET galaxies in the SP test sample is Ngal pet ptest ≈ 30, where Ngal = 1834 is the sample size, pet ≈ 0.055 is the proportion of ET galaxies in the SP sample implied by this relation, and ptest = 0.3 is the proportion of galaxies in the test sample.
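The sample-size argument above can be checked with a few lines of arithmetic. The sketch below assumes only the relation Ngal pet ptest and the threshold of 30 objects; the function names are hypothetical, and no ET fraction from the paper is hard-coded.

```python
def expected_class_count(n_gal, p_class, p_test):
    """Expected number of objects of one class that land in the test set."""
    return n_gal * p_class * p_test

def min_class_fraction(n_gal, p_test, n_min=30):
    """Smallest class fraction for which the test set is expected
    to contain at least n_min objects of that class."""
    return n_min / (n_gal * p_test)

N_GAL = 1834  # SP sample size quoted in the text

# Compare the three rule-of-thumb test fractions mentioned above.
for p_test in (0.1, 0.2, 0.3):
    frac = min_class_fraction(N_GAL, p_test)
    print(f"test fraction {p_test:.1f}: class fraction >= {frac:.3f}")
```

A smaller test fraction requires a larger minority-class fraction to reach 30 expected objects, which is the reason a 70:30 split is preferable for a sample in which ET galaxies are rare.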

2.1.2. Concentration and photometry sample

The concentration is widely used to differentiate ET from LT galaxies. Concentration provides a direct measurement of the intensity distribution in the image of a galaxy. For that reason, the concentration is easier to obtain than the Sérsic index, which requires fitting several parameters to the Sérsic profile.

Here we use the definition (Bershady et al. 2000; Scarlataet al. 2007):

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

● ●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●●

● ●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●●

●

●●

●

●

●

●●

●

●

●

●

●

●

●

●

●●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●●

●

●●

●

●

●

●

●

●

●●

●

●

●

●

●

●

● ●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

● ●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

● ●

●

●

●

●

●●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

● ●

●

●

●●

●

●

●

●

●

●

●

●

●

● ●

●

●

●

●

●

●

●

●

●

●● ●

●

●

●

●

● ●

●

●

●

●

●

●

●

●●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

● ●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

● ●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

● ●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

● ●

●

●

●

●

●

●●

●

●

●

●

●

●●

●

● ●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●

●●

●

●

●

●

●

●

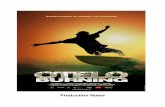

Fig. 2. As Fig. 1 but for the 2292 galaxies in the OTELO CP sample.

$$C = 5 \log_{10}\left(\frac{r_{80}}{r_{20}}\right), \tag{2}$$

where r80 and r20 are the 80% and 20% light Petrosian radii, respectively, obtained from the HST F814W band images. We chose the F814W band concentration for compatibility with COSMOS (Scarlata et al. 2007). The data were limited to redshifts z ≤ 2. The final OTELO concentration and photometry (OTELO CP) sample consists of 2292 galaxies, with 114 classified as ET and 2178 as LT. Figure 2 shows the sample distributions of r-band magnitudes and photometric redshifts, which are similar to those of the SP sample discussed above. The CP sample was also divided into two subsamples: a training subsample containing 1604 (70%) of the objects, and a test subsample with 688 (30%) of the galaxies.
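As a minimal sketch of Eq. (2), the concentration index can be computed from the two Petrosian radii as follows. The function name `concentration_index` and the example radii are hypothetical and are not taken from the OTELO pipeline.

```python
import math


def concentration_index(r80: float, r20: float) -> float:
    """Return C = 5 * log10(r80 / r20) for two radii given in the same units."""
    if r80 <= 0 or r20 <= 0:
        raise ValueError("radii must be positive")
    return 5.0 * math.log10(r80 / r20)


# Hypothetical radii in arbitrary units, for illustration only.
print(concentration_index(r80=10.0, r20=1.0))
```

Because only the ratio of the radii enters, the result does not depend on the angular or physical units, as long as both radii share them.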

2.2. COSMOS samples

We expect that our DNN architecture can be applied to galaxy classification in other databases. Therefore, we checked its reliability using two COSMOS enhanced data products: the Zurich Structure & Morphology Catalog v1.0 (ZSMC, Scarlata et al. 2007; Sargent et al. 2007) and the COSMOS photometric redshifts v1.5 (CPhR, Ilbert et al. 2009). Those catalogs have 131 532 and 385 065 entries, respectively. We merged both databases, obtaining 128 442 matches, from which we chose a sample of galaxies with Sérsic index estimates in the range 0.2 < n < 8.8, and another sample with the same concentration radii used in OTELO. Both samples are limited to redshifts z < 2 and include photometry in the CFHT u, Subaru BVgriz, UKIRT J, and CFHT K bands, along with classification entries. Thus, the galaxy records included all the available data from the CPhR bands except the CFHT i′ magnitudes (we chose the Subaru i band, which is also included in the catalog).

The resulting COSMOS Sérsic index and photometry (COSMOS SP) sample consists of 34 688 galaxies, 28 951 of which had been classified as LT and 5737 as ET. With such a large number of galaxies, we can limit the training set to 5000 galaxies (approximately 14% of the sample) and raise the testing set to 29 688 galaxies (approximately 86% of the sample) in order to reduce the variance of the results. Analogously, the COSMOS concentration and photometry (COSMOS CP) sample consists of 105 758 galaxies, of which 95 781 are LT and 9977 are ET. We set the corresponding training and testing sets to 10 000 and 95 758 galaxies, respectively.

2.3. Classification procedures

We used a classification baseline and three classification methods for the OTELO samples. The baseline consists of assigning all the galaxies to the most frequent morphological group. Any classification by a more sophisticated method should improve on the baseline accuracy. For the COSMOS samples we used only the classification baseline and the DNN architecture developed for the OTELO samples, as we were interested only in probing this architecture.

2.3.1. Color classification

The first classification method uses a color discriminant. After testing several colors, we focus on the u − r color as proposed by Strateva et al. (2001). These authors use a simple color discriminant such that any galaxy with a u − r color redder than 2.22 is classified as ET, and as LT if u − r < 2.22. This method was applied only to the SP and CP samples drawn from OTELO. We also investigated other possible color discriminants, which are presented later. Data records with missing u − r colors were disregarded, reducing the SP sample to 1787 galaxies and the CP sample to 2189.
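The color rule described above can be sketched in a few lines. The function name `classify_by_color`, the example magnitudes, and the choice to assign the exact boundary value to ET are assumptions made for illustration; the source only states the 2.22 threshold and that records with a missing color were disregarded.

```python
from typing import Optional

U_R_THRESHOLD = 2.22  # Strateva et al. (2001) discriminant quoted in the text


def classify_by_color(u: Optional[float], r: Optional[float],
                      threshold: float = U_R_THRESHOLD) -> Optional[str]:
    """Return "ET", "LT", or None when the u - r color cannot be formed."""
    if u is None or r is None:
        return None  # records with a missing color are disregarded
    color = u - r
    # Assumption: a color exactly at the threshold is assigned to ET.
    return "ET" if color >= threshold else "LT"


# Hypothetical (u, r) magnitudes, for illustration only.
records = [(24.9, 22.1), (23.0, 22.4), (None, 21.7)]
labels = [classify_by_color(u, r) for u, r in records]
print(labels)
```

Returning `None` instead of raising keeps the filtering step explicit: the caller can count and drop unclassifiable records before computing accuracies.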

2.3.2. Linear discriminant analysis

The second classification method is LDA. The aim of LDA is to find a linear combination of features which separates different classes of objects. These features are interpreted as a hyperplane normal to the input feature vectors. We note that the Strateva et al. (2001) u − r color separation method can be regarded as an LDA which defines the u − r = 2.22 plane normal to the u − g and g − r vectors. As in the previous method, LDA was only applied to the SP and CP OTELO samples, and data records with missing u − r colors were disregarded.

Two problems with machine learning techniques are the management of missing data and the curse of dimensionality. Missing data (e.g., a missing photometric band) usually result in the removal of objects with incomplete records from the dataset. The curse of dimensionality appears because increasing the number of variables in a classification scheme makes the volume of the feature space grow very quickly, so that the data become sparse and difficult to group. The curse of dimensionality can be mitigated by dimensionality reduction techniques such as principal component analysis (PCA), but dimensionality reduction may introduce unwanted effects (data loss, nonlinear relations between variables, and the choice of the number of components to be kept; Carreira-Perpiñán 2001; Shlens 2014) that tend to blur differences between the groups. Alternative methodologies to deal with these problems are under development, for example by Cai & Zhang (2018), who introduce an adaptive classifier to cope with both missing data and the curse of dimensionality for high-dimensional LDA. To avoid these problems, we chose to limit our LDA model to the Sérsic index and the single highly discriminant u − r color, a combination that has already been addressed in the galaxy classification literature (e.g., Kelvin et al. 2012; Vika et al. 2015).
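To make the two-feature LDA concrete, the following is a minimal pure-Python sketch of a two-class linear discriminant with a pooled covariance matrix. It is not the implementation used in the paper, which the text does not specify; the function names and the toy (u − r, Sérsic index) values are invented for illustration.

```python
import math


def fit_lda_2d(X, y):
    """Fit a two-class LDA on 2-feature rows X with labels y in {0, 1}.

    Returns (w, c): class 1 is predicted when w[0]*x0 + w[1]*x1 > c.
    """
    groups = {k: [x for x, label in zip(X, y) if label == k] for k in (0, 1)}
    means = {k: [sum(col) / len(rows) for col in zip(*rows)]
             for k, rows in groups.items()}
    # Pooled within-class covariance matrix (2x2), shared by both classes.
    s = [[0.0, 0.0], [0.0, 0.0]]
    for k, rows in groups.items():
        for row in rows:
            d = [row[0] - means[k][0], row[1] - means[k][1]]
            for i in range(2):
                for j in range(2):
                    s[i][j] += d[i] * d[j]
    dof = len(X) - 2
    s = [[v / dof for v in row] for row in s]
    det = s[0][0] * s[1][1] - s[0][1] * s[1][0]
    inv = [[s[1][1] / det, -s[0][1] / det],
           [-s[1][0] / det, s[0][0] / det]]
    diff = [means[1][i] - means[0][i] for i in range(2)]
    w = [inv[i][0] * diff[0] + inv[i][1] * diff[1] for i in range(2)]
    mid = [(means[0][i] + means[1][i]) / 2 for i in range(2)]
    # Midpoint threshold, shifted by the log ratio of the class priors.
    prior1 = len(groups[1]) / len(X)
    c = w[0] * mid[0] + w[1] * mid[1] - math.log(prior1 / (1 - prior1))
    return w, c


def predict(w, c, x):
    """Return 1 (ET in this toy example) or 0 (LT)."""
    return 1 if w[0] * x[0] + w[1] * x[1] > c else 0


# Toy (u - r, Sersic index) pairs; values are made up, not OTELO data.
X = [(0.8, 1.0), (1.2, 0.8), (1.0, 1.5), (1.5, 1.2), (0.9, 2.0), (1.4, 1.7),
     (2.4, 3.5), (2.6, 4.2), (2.3, 4.8), (2.8, 3.9)]
y = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]
w, c = fit_lda_2d(X, y)
print(predict(w, c, (2.5, 4.0)), predict(w, c, (1.0, 1.0)))
```

With only two input features the covariance matrix is 2×2 and can be inverted in closed form, which is one practical benefit of restricting the model as described above.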

2.3.3. Deep neural network

The third method of classification involves a DNN. The sample was analyzed using the Keras library for deep learning. Keras is a high-level neural network application programming interface (API) written in Python and distributed as open-source software through GitHub. Currently, Keras is available for both the Python and R computer languages (Chollet 2017; Chollet & Allaire 2017). In astronomy, Keras has already been used for image classification of galaxy morphologies (Pérez-Carrasco et al. 2019; Domínguez Sánchez et al. 2018) and for spectral classification and redshift estimates of quasars (Busca & Balland 2018), and it is included in the astroNN package (footnote 5).

As in the other methods, we use a training and a test set to teach and check the DNN model, respectively. The difference from the other methods is that the structure of their discriminant function is predetermined, while the DNN architecture must be tuned on the fly. To achieve this goal, we split the training set of each OTELO sample into (i) a teaching set (80% of the original training set), and (ii) a validation set (the remaining 20%). Compared with OTELO, the COSMOS samples consist of many more galaxies. Therefore, we limited the size of the training sets to 5000 galaxies for the COSMOS SP sample and 10 000 for the CP sample, and kept the same teaching set and validation set proportions. We use the teaching set to tune the DNN model, and the validation set to check the loss and accuracy functions that describe the DNN classification capability. Once we have achieved a satisfactory result, the DNN architecture has been optimized to classify the validation set, but the performance may be different for other datasets. To generalize the result, we use the whole original training set to retrain the tuned DNN model, and we then classify the test set galaxies. Therefore, the test set galaxies were used neither to train nor to fine-tune the DNN model, but only to evaluate the DNN performance.
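The nested split described above (training versus test, then teaching versus validation inside the training set) can be sketched as follows for the OTELO CP sample size. The helper `split_indices`, the seeds, and the rounding rule are assumptions; the teaching and validation counts printed here follow from the stated 80%/20% proportions and are not quoted in the source.

```python
import random


def split_indices(n, first_fraction, seed=0):
    """Shuffle range(n) and cut it into two disjoint index lists."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    cut = round(first_fraction * n)
    return idx[:cut], idx[cut:]


# OTELO CP sample size quoted in the text: 2292 galaxies.
train, test = split_indices(2292, 0.70)

# Second cut, applied to positions within the training subsample only,
# so that the test galaxies never enter the tuning stage.
teach_pos, valid_pos = split_indices(len(train), 0.80, seed=1)
teaching = [train[i] for i in teach_pos]
validation = [train[i] for i in valid_pos]

print(len(train), len(test), len(teaching), len(validation))
```

After tuning on `teaching` and `validation`, the procedure retrains on all of `train` and scores once on `test`, which is why the second split is taken from `train` rather than from the full sample.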

An appealing feature of DNNs is the ease with which they deal with missing data. In practice, it is enough to substitute the missing values in each normalized variable with zeros to cancel their products with the network weights. The DNN then treats missing values as if they carry no useful information and ignores them. Of course, it is better if there are no missing values, but DNNs allow the user to treat them without the need to drop data entries or estimate missing values from other variables.
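The zero-substitution idea can be illustrated with a single dense unit. This sketch assumes that "normalized" means standardization to zero mean and unit variance, which the text does not state explicitly; the function names, colors, and weights are hypothetical.

```python
import statistics


def normalize_and_fill(column):
    """Standardize observed values (zero mean, unit variance); map None to 0.0."""
    observed = [v for v in column if v is not None]
    mean = statistics.mean(observed)
    std = statistics.pstdev(observed)
    return [0.0 if v is None else (v - mean) / std for v in column]


def dense_unit(inputs, weights, bias):
    """Pre-activation of one dense unit: bias + sum of weight * input."""
    return bias + sum(w * x for w, x in zip(weights, inputs))


# Hypothetical colors for four galaxies; the third record lacks this color.
u_r = [1.1, 2.5, None, 0.9]
filled = normalize_and_fill(u_r)

# For the third galaxy, the missing input contributes nothing to the unit:
with_missing = dense_unit([filled[2], 0.7], weights=[3.0, -1.0], bias=0.5)
without_feature = dense_unit([0.7], weights=[-1.0], bias=0.5)
print(with_missing == without_feature)
```

Under this normalization a zero also equals the mean of the observed values, so the substitution is equivalent to mean imputation in the original units.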

Baron (2019) provides a succinct description of DNNs, and a complete explanation of Keras elements can be found in Chollet (2017) and Chollet & Allaire (2017). Tuning a DNN is a trial-and-error procedure aimed at finding an appropriate architecture and setup. As the numbers of input variables, units, and layers increase, the DNN tends to overfit if the training set is small. For this reason, we kept our DNN model as simple as possible while still obtaining a high-accuracy classification.

We use standard layers and functions for our model that are already available from Keras. For the interested reader, our DNN architecture consists of two dense layers of 64 units each with rectified linear unit (ReLU) activations, and an output dense layer of a single unit with sigmoid activation. The model was compiled using the iterative gradient-descent RMSprop optimizer, a binary cross-entropy loss function, and accuracy metrics. We kept the default values for the Keras RMSprop optimizer, i.e., a learning rate of 0.001 and a weight parameter for previous batches of ρ = 0.9. These values are appropriate for most DNN problems, and moderate changes do not affect the results. We set the number of training epochs to avoid overfitting, and the training batch sizes to appropriate values for the number of records in the DNN training sample in each case.

5 https://astronn.readthedocs.io/en/latest/
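For reference, the binary cross-entropy loss and the RMSprop update can be written in their usual textbook forms. The notation is introduced here and is not taken from the source: y_i is the 0/1 class label, p_i the sigmoid output for galaxy i, g_t the gradient of the loss with respect to a weight θ at step t, v_t the running average of squared gradients, η the learning rate, and ε a small stabilizing constant whose exact placement varies between implementations.

```latex
\begin{aligned}
\mathcal{L} &= -\frac{1}{N}\sum_{i=1}^{N}\left[\, y_i \log p_i + (1 - y_i)\log(1 - p_i) \,\right], \\
v_t &= \rho\, v_{t-1} + (1 - \rho)\, g_t^{2}, \\
\theta_t &= \theta_{t-1} - \frac{\eta}{\sqrt{v_t} + \epsilon}\, g_t ,
\end{aligned}
```

With the values quoted in the text, η = 0.001 and ρ = 0.9, each update rescales the gradient by a running estimate of its recent magnitude, with the most recent squared gradient entering at weight 0.1.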

2.3.4. Bootstrap

We used bootstrap (e.g., Efron & Tibshirani 1993; Chihara & Hesterberg 2018) to obtain reliable statistics that describe the performance of each classification technique. Bootstrapping is a widely used nonparametric methodology for evaluating the distribution of a statistic using random resampling with replacement. Thus, we calculated the classification accuracy and other classification statistics through 100 runs for the u − r color, LDA, and DNN methods. For each run, we also divided the bootstrap random sample into a training set (70%) and a test set (30%).
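The bootstrap loop described above can be sketched generically: resample the records with replacement, split each resample 70/30, and collect a score per run. The names `bootstrap_runs` and `majority_accuracy` are hypothetical, and the majority-class scorer is only a stand-in for the color, LDA, or DNN classifiers; the label counts are those quoted for the OTELO SP sample.

```python
import random
import statistics
from collections import Counter


def bootstrap_runs(records, evaluate, n_runs=100, train_fraction=0.70, seed=0):
    """Return the mean and standard deviation of evaluate(train, test)
    over n_runs bootstrap resamples of records."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_runs):
        # Resampling with replacement; the draw order is already random,
        # so slicing the resample gives a random 70/30 split.
        sample = rng.choices(records, k=len(records))
        cut = round(train_fraction * len(sample))
        scores.append(evaluate(sample[:cut], sample[cut:]))
    return statistics.mean(scores), statistics.stdev(scores)


def majority_accuracy(train, test):
    """Stand-in scorer: predict the most frequent training label for every test item."""
    majority = Counter(train).most_common(1)[0][0]
    return sum(1 for label in test if label == majority) / len(test)


records = ["ET"] * 99 + ["LT"] * 1735  # OTELO SP class counts from the text
mean_acc, std_acc = bootstrap_runs(records, majority_accuracy)
print(round(mean_acc, 3), round(std_acc, 3))
```

The spread of the per-run scores is what the tables report as the error on each statistic; any classifier can be plugged in through the `evaluate` callback.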

3. Results

To determine a minimal set of attributes that are able to discriminate between ET and LT galaxies, we focus on two directly observable characteristics: photometry and shape. Results obtained in previous studies were limited to nearby galaxies. Thus, Strateva et al. (2001) used photometry from 147 920 SDSS galaxies with magnitude g∗ ≤ 21 and redshifts z ≲ 0.4 to build a binary classification model based on the u − r = 2.22 discriminant color, which they tested on a sample of 287 galaxies visually labeled as ET or LT, recovering 94 out of 117 (80%) ET, and 112 out of 170 (66%) LT galaxies. Deng (2013) used a sample of 233 669 SDSS-III DR8 galaxies with redshifts 0.01 < z < 0.25 and reported a concentration index discriminant to separate ET from LT galaxies in the r-band that achieved an accuracy of 96.43 ± 0.04. Vika et al. (2015) used both the u − r color and the Sérsic index in the r-band to classify a sample of 142 nearby (z < 0.01) galaxies, dividing the u − r versus nr plane into quadrants; most ET galaxies were located in the u − r > 2.3 and nr > 2.5 quadrant (28 out of 34, i.e., 82% of the ETs were correctly classified).

3.1. Sérsic index and photometry samples

3.1.1. Baseline classification

The baseline classification is the simplest classification method. It assigns all the samples to the most frequent class. This classification is helpful for determining a baseline performance that is used as a benchmark for other classification methods. For this task, we selected all the galaxies in our OTELO SP sample. In total, there are 1834 galaxy records, 99 of them classified as ET galaxies (≈5.4%), and 1735 as LT galaxies (≈94.6%). The two groups are highly unbalanced, so the baseline classification achieves a high overall accuracy of 94.6%, which should be exceeded by any other classification method.
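The baseline figures follow directly from the class counts. The short sketch below (the function name `baseline_scores` is hypothetical) also shows why a high accuracy can hide a classifier that recovers no ET galaxies at all.

```python
from collections import Counter


def baseline_scores(labels):
    """Score the rule 'assign every object to the most frequent class'."""
    counts = Counter(labels)
    majority, n_majority = counts.most_common(1)[0]
    accuracy = n_majority / len(labels)
    # Per-class recovery rate: 1 for the majority class, 0 for any other class.
    rates = {cls: 1.0 if cls == majority else 0.0 for cls in counts}
    return majority, accuracy, rates


labels = ["ET"] * 99 + ["LT"] * 1735  # OTELO SP counts quoted above
majority, accuracy, rates = baseline_scores(labels)
print(majority, round(accuracy, 3), rates)
```

The per-class rates make the limitation explicit: the baseline recovers every LT galaxy and no ET galaxy, so accuracy alone is not a sufficient figure of merit for unbalanced samples.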

3.1.2. Color classification

A preliminary study was performed to determine which colors yield a split between ET and LT galaxies that outperforms the baseline. Table 1 shows several examples of the measured accuracy for selecting appropriate single-color discriminants. We note that several colors did not perform better than the baseline classification (94.6%), but those involving the u band and a red band usually yield the most accurate results. Both the u − J and u − i colors perform marginally better than u − r, although u − J has a larger number of missing records. We present the rest of the color analysis based on the u − r color to ease direct comparison with the report of Strateva et al. (2001).

Table 1. One color separation.

| Color | Accuracy | Ntest | Separation |
|-------|----------|-------|------------|
| u − J | 0.96 ± 0.01 | 518 | 4.1 ± 0.2 |
| u − i | 0.96 ± 0.01 | 536 | 2.8 ± 0.3 |
| u − r | 0.96 ± 0.02 | 536 | 2.0 ± 0.2 |
| u − H | 0.95 ± 0.01 | 510 | Baseline |
| g − J | 0.94 ± 0.02 | 527 | Baseline |
| g − i | 0.94 ± 0.01 | 548 | Baseline |

Notes. Column 1: tested color. Column 2: mean accuracy (proportion of galaxies correctly classified) on 100 random test sets; we note that the colors are sorted from the highest to the lowest accuracy score. Column 3: sample size included in each test set; differences in the sample size between different colors are due to missing data. Column 4: color discriminant value, or Baseline if the accuracy score is not statistically different from the baseline classification.

Table 2. Color u − r confusion matrix.

|          | OTELO ET | OTELO LT | Total |
|----------|----------|----------|-------|
| Color ET | 25 | 20 | 45 |
| Color LT | 2 | 489 | 491 |
| Total    | 27 | 509 | 536 |

Table 2 shows an example of the confusion matrix for a single u − r color bootstrap run, yielding an accuracy of 0.959 ± 0.009. Table 3 shows the Accuracy, True ET Rate, and True LT Rate for the different databases and classification methods used in this paper, obtained through the bootstrap procedure. The True ET Rate and True LT Rate indicate the proportions of ET and LT galaxies, respectively, recovered through the classification procedure. For the u − r color, the statistics yield an average Accuracy of 0.96 ± 0.02, a True ET Rate of 0.8 ± 0.3, and a True LT Rate of 0.97 ± 0.02. The True ET Rate is the least precise of all the statistics in all the samples because of the relatively low number of ET galaxies.
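As a worked example, the three statistics can be recomputed from the Table 2 counts. The sketch assumes that the table rows are the u − r color predictions and the columns are the OTELO reference classes, which is an interpretation of the table layout; the function name `classification_rates` is hypothetical.

```python
# Counts from Table 2, keyed as (predicted by color, OTELO reference class).
confusion = {
    ("ET", "ET"): 25,
    ("ET", "LT"): 20,
    ("LT", "ET"): 2,
    ("LT", "LT"): 489,
}


def classification_rates(confusion):
    """Return (accuracy, true ET rate, true LT rate) from a 2x2 confusion table."""
    total = sum(confusion.values())
    correct = sum(n for (pred, ref), n in confusion.items() if pred == ref)
    rates = {}
    for cls in ("ET", "LT"):
        # Fraction of reference objects of this class that were recovered.
        n_ref = sum(n for (pred, ref), n in confusion.items() if ref == cls)
        rates[cls] = confusion[(cls, cls)] / n_ref
    return correct / total, rates["ET"], rates["LT"]


accuracy, true_et_rate, true_lt_rate = classification_rates(confusion)
print(round(accuracy, 3), round(true_et_rate, 3), round(true_lt_rate, 3))
```

These are the values for one bootstrap run only, so they need not match the bootstrap averages quoted in the text; with just 27 reference ET galaxies in the test set, a handful of misclassifications moves the True ET Rate substantially.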

Bootstrap yields a u − r color discriminant for ET and LT separation of 2.0 ± 0.2, as shown in Fig. 3. The agreement with Strateva et al. (2001), u − r = 2.22 (no error estimate is provided by these authors), is remarkable considering that the galaxies studied by these authors have redshifts in the interval 0 < z ≤ 0.4, while our sample extends to z ≤ 2. Figure 4 shows the u − r color distribution as a function of redshift for the OTELO SP sample. It is worth noting that LT galaxies in this sample tend to be bluer at redshifts z ≲ 0.5, possibly due to an enhanced star-forming activity, as also pointed out by Strateva et al. (2001). This feature, along with the scarcity of ET galaxies at z > 1 (about 13% of all the ET galaxies in the OTELO SP sample), accounts for the agreement between the results of Strateva et al. (2001) and ours despite the redshift differences.

The distribution of the bootstrap Accuracy for the u − r color classification is shown in the upper panel of Fig. 5. Most of the u − r color accuracies are larger than the baseline, but the two

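Table 3. Comparison of classification methods for SP samples.

| Database | Method | Accuracy (a) Mean | Accuracy (a) Error | True ET Rate (a) Mean | True ET Rate (a) Error | True LT Rate (a) Mean | True LT Rate (a) Error | Sample size |
|----------|--------|-------|-------|------|------|-------|-------|--------|
| OTELO | Baseline | 0.946 | 0.006 | 0 | ... | 1 | ... | 1834 |
| OTELO | u − r color | 0.96 | 0.02 | 0.8 | 0.3 | 0.97 | 0.02 | 536 |
| OTELO | LDA | 0.970 | 0.008 | 0.80 | 0.08 | 0.979 | 0.007 | 536 |
| OTELO | DNN | 0.985 | 0.007 | 0.84 | 0.09 | 0.993 | 0.006 | 551 |
| COSMOS | Baseline | 0.835 | 0.002 | 0 | ... | 1 | ... | 34 688 |
| COSMOS | DNN | 0.967 | 0.002 | 0.91 | 0.03 | 0.979 | 0.005 | 29 688 |

Notes. (a) On 100 bootstrap runs.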
