Fast spline smoothing via spectral factorization concepts


This paper was not presented at any IFAC meeting. This paper was recommended for publication in revised form by Associate Editor A. Tits under the direction of Editor T. Basar.

*Corresponding author. Tel.: +39-382-505484; fax: +39-382-505373.

1 Supported by MURST Project 'Identification and control of industrial systems'.

2 Supported by the NIH grant RR11095 'Input Estimation of Biological Systems by Deconvolution'.

E-mail addresses: [email protected] (G. De Nicolao), [email protected] (G. Ferrari-Trecate), [email protected] (G. Sparacino).

Automatica 36 (2000) 1733–1739

Brief Paper

Fast spline smoothing via spectral factorization concepts

Giuseppe De Nicolao a,*,1, Giancarlo Ferrari-Trecate a,1, Giovanni Sparacino b,2

a Dipartimento di Informatica e Sistemistica, Università degli Studi di Pavia, Via Ferrata 1, 27100 Pavia, Italy
b Dipartimento di Elettronica e Informatica, Università di Padova, Via Gradenigo 6/A, 35122 Padova, Italy

Received 23 June 1999; revised 31 January 2000; received in final form 6 April 2000

Abstract

When tuning the smoothness parameter of nonparametric regression splines, the evaluation of the so-called degrees of freedom is one of the most computer-intensive tasks. In the paper, a closed-form expression of the degrees of freedom is obtained for the case of cubic splines and equally spaced data when the number of data tends to infinity. State-space methods, Kalman filtering and spectral factorization techniques are used to prove that the asymptotic degrees of freedom are equal to the variance of a suitably defined stationary process. The closed-form expression opens the way to fast spline smoothing algorithms whose computational complexity is about one-half of standard methods (or even one-fourth under further approximations). © 2000 Elsevier Science Ltd. All rights reserved.

Keywords: Spline smoothing; Bayes estimation; Kalman filters; Stochastic processes; Spectral factorization

1. Introduction

When observing a function corrupted by noise, spline regression is a popular way to estimate the unknown signal without specifying its functional form. The interest in splines in the field of automatic control is twofold. On the applicative side, they provide a powerful approximation tool and as such have been successfully applied to various problems including state-feedback linearization (Bortoff, 1997) and robot trajectory planning (De Luca, Lanari & Oriolo, 1991). On the other hand, it is also remarkable that the efficient calculation of smoothing splines calls for classical state-space techniques. Indeed, the most efficient algorithm available in the literature is based on state-space smoothing and Kalman filtering (Kohn & Ansley, 1989; Kormylo, 1991).

In smoothing splines, the trade-off between the data fit and the regularity of the estimate is controlled by the smoothness parameter $\lambda$. Many criteria for tuning $\lambda$ require the evaluation of the trace of the so-called influence matrix, yielding a positive real number $q(\lambda)$ that, in Wahba (1983), was termed degrees of freedom. Among these criteria, one can mention Generalized Cross Validation (GCV), Unbiased Risk, Hall and Titterington's criterion and Maximum Likelihood; see Golub, Heath and Wahba (1979), Craven and Wahba (1979), Hall and Titterington (1987) and Wecker and Ansley (1983). At first sight, the Maximum Likelihood criterion does not seem to involve the degrees of freedom. However, by extending to smoothing splines some results worked out in the general context of Bayesian regression (MacKay, 1992), it is possible to see that the Maximum Likelihood estimate of $\lambda$ also satisfies a necessary condition involving $q(\lambda)$. The role of the equivalent degrees of freedom also goes beyond their use for tuning the smoothness parameter: by exploiting their analogy with the degrees of freedom of parametric models, approximate $\chi^2$-tests, F-tests and standard-error bounds were proposed (Hastie & Tibshirani, 1990).

Typically, $\lambda$ is adjusted iteratively until the adopted criterion is met. Since the influence matrix contains the inverse of an $n \times n$ matrix, where $n$ is the number of data, the computation of $q(\lambda)$ is one of the most computer-intensive parts of the overall regression algorithm. Indeed, by using a generic algorithm, this task takes $O(n^3)$ operations. A number of authors contributed to the development of numerically effective algorithms for the computation of $q(\lambda)$, which, at the current state of the art, can be evaluated in $O(n)$ operations (see, for instance, Wahba, 1990 and references quoted there). In particular, Kohn and Ansley (1989), by resorting to the Bayesian interpretation of smoothing splines, worked out a state-space $O(n)$ algorithm based on Kalman filtering. In their approach, $q(\lambda)$ is computed by averaging over the sampling instants the variance of the estimated function, which is obtained by solving a difference Riccati equation.

0005-1098/00/$ - see front matter © 2000 Elsevier Science Ltd. All rights reserved. PII: S0005-1098(00)00100-X

Several contributions were also dedicated to the investigation of the asymptotic properties of $q(\lambda)$ as the number $n$ of data tends to infinity. They were motivated by two reasons. The first one was to establish statistical consistency and rates of convergence for the spline estimate associated with the GCV criterion (Utreras, 1983; Speckman, 1985). The second motivation was the derivation of approximate asymptotic formulas in order to devise fast spline smoothing algorithms (Silverman, 1985).

In this paper, we consider cubic smoothing splines applied to the estimation of a function from equally spaced noisy samples. Letting $n$ be the number of data collected with sampling period $T$, we define the asymptotic smoothing ratio $s(\lambda)$ as the limit of $q(\lambda)/n$ for $n$ tending to infinity. The main result of the paper is an explicit formula for $s(\lambda)$. In particular, it turns out that $s(\lambda)$ depends only on the ratio $\lambda/T^3$ in a nonlinear way. Note that the new formula, compared to previous approximations such as the formula suggested by Silverman (1985), is not only derived with a different rationale (Silverman's formula relies on the approximation of the eigenvalues of the influence matrix, see Definition 1) but is also exact. Moreover, Silverman's result refers to stochastic sampling whereas the present paper addresses the deterministic uniform sampling case.

There are several motivations for investigating the dependence of the asymptotic smoothing ratio on the smoothing parameter $\lambda$ and the sampling period $T$. First of all, $\lambda$ alone is not a measure of the amount of smoothing associated with the estimated function: the same $\lambda$ could well yield overfitting in a given problem and underfitting in another problem with a different sampling period. By contrast, the smoothing ratio $s(\lambda)$, which takes values in the interval $[0, 1]$, provides an objective and normalized measure. For instance, if $n = 100$, the approximation properties of a smoothing spline with $s(\lambda) = 0.1$ (and hence $q(\lambda) \approx 10$) are roughly equivalent to those of a parametric model with 10 parameters. This makes apparent the usefulness of a quick method for approximating $q(\lambda)$. The second main motivation has to do with the development of fast smoothing algorithms, which are crucial in signal processing problems involving large data sets and/or on-line operations. In this case, it is worth noting that approximating $q(\lambda)$ by $n s(\lambda)$ is largely acceptable (as a matter of fact, a widespread criterion such as GCV is itself an approximation of Ordinary Cross Validation). Finally, the methodology presented in this paper can be extended to smoothing splines of any order and also to state-space deconvolution problems solved by means of Tychonov regularization techniques (Ferrari-Trecate, 1999).

As in Kohn and Ansley (1989), the first part of the derivation hinges on a state-space formulation of the spline nonparametric regression problem. The second step proceeds differently, in that we show that the smoothing ratio coincides with the variance of a suitable discrete-time process, whose spectrum is characterized. Finally, $s(\lambda)$ is found by evaluating a line integral in the complex plane which is solved analytically by means of an algorithm due to Åström (1970). We discuss how the explicit formula can be exploited in order to derive efficient smoothing spline algorithms, at least twice as fast as the standard ones.

The paper is organized as follows. In Section 2 the statement of the problem and some preliminary definitions are given. In Section 3 we work out the spectral characterization of $q(\lambda)$. The explicit formula for $s(\lambda)$, together with its possible applications, is presented in Section 4. In Section 5 we report some concluding remarks pointing out possible future developments.

2. Problem statement

Consider the problem of estimating a continuous-time function $f(t) : [0, +\infty) \to \mathbb{R}$ from the equally spaced observations

$$y_k = f(kT) + e_k, \quad T > 0, \quad k = 1, \ldots, n, \tag{1}$$

where $e_k$ is a white Gaussian noise with variance $\sigma^2$. This goal can be achieved only when a priori assumptions are made about the function $f(\cdot)$. In this paper we assume that $f(t)$ is the solution of

$$\frac{d^2 f(t)}{dt^2} = w(t), \quad f(0) = \frac{df(0)}{dt} = 0,$$

where $w(t)$ is a white Gaussian noise with autocovariance function $E\{w(t)w(\tau)\} = \gamma^2 \delta(t - \tau)$. In the following, we will make use of the state-space representation

$$\dot{x}(t) = Ax(t) + Bw(t), \qquad f(t) = Cx(t), \quad x(0) = 0, \tag{2}$$

where $x(t) \in \mathbb{R}^2$ is the state and

$$A = \begin{pmatrix} 0 & 0 \\ 1 & 0 \end{pmatrix}, \quad B = \begin{pmatrix} 1 \\ 0 \end{pmatrix}, \quad C = (0 \;\; 1).$$


The Bayes estimate is defined as the posterior mean $f_B(t) := E[f(t) \mid y]$, where $y = [y_1 \; y_2 \; \ldots \; y_n]'$. It is well known that the Bayes estimate $f_B$ is the solution of the variational problem (Wahba, 1990)

$$f_B(t) = \arg\min_f \sum_{k=1}^{n} \{y_k - f(kT)\}^2 + \lambda \int_0^{nT} \left\{ \frac{d^2 f(t)}{dt^2} \right\}^2 dt, \tag{3}$$

subject to $f(0) = df(0)/dt = 0$, where $\lambda = \sigma^2/\gamma^2$. From (3) it is apparent that $f_B$ is a cubic smoothing spline with smoothness parameter $\lambda$ (see e.g. Wahba, 1990). Although we consider natural splines, this does not affect the generality of our results, which do not depend on the assumptions made on the initial conditions in view of the convergence result given in Lemma 5.

In practice, the value of $\gamma^2$ is seldom known a priori and $\lambda$ has to be tuned on the basis of the available data. The possible criteria include Generalized Cross Validation, Maximum Likelihood, Unbiased Risk, and Hall and Titterington's criterion; see Golub et al. (1979), MacKay (1992), Craven and Wahba (1979) and Hall and Titterington (1987). In all these cases a central role is played by the so-called influence matrix (Wahba, 1990).

Definition 1. The $n \times n$ matrix $H(\lambda)$ such that

$$[f_B(T) \; \ldots \; f_B(nT)]' = H(\lambda) y$$

is termed influence matrix.

For instance, defining the sum of squared residuals as $\mathrm{SSR}(\lambda) = \|\{I - H(\lambda)\}y\|^2$, the Generalized Cross Validation criterion selects the value of $\lambda$ by minimizing the loss function

$$\mathrm{GCV}(\lambda) := \frac{\mathrm{SSR}(\lambda)}{n\{1 - q(\lambda)/n\}^2}, \qquad q(\lambda) := \mathrm{tr}\{H(\lambda)\},$$

where, for simplicity, we have omitted the dependence of $q(\lambda)$ and $H(\lambda)$ on the sampling period $T$. To perform this minimization, an iterative strategy is needed which involves the computation of $\mathrm{SSR}(\lambda)$ and $q(\lambda)$ for several values of $\lambda$. The bottleneck is the calculation of $q(\lambda)$, which requires $O(n^3)$ operations unless suitable algorithms are employed. There exists an extensive literature dealing with the computation of $q(\lambda)$ and, by now, efficient $O(n)$ algorithms are available (see Kohn and Ansley (1989) for a state-space method and Wahba (1990) for a review of various contributions).

Remarkably, $q(\lambda)$ admits a nice interpretation in terms of 'degrees of freedom' of the model. In fact it can be shown that

$$E\{\mathrm{SSR}(\lambda)\} = \{n - q(\lambda)\}\sigma^2.$$

This formula resembles the well known relationship $E(\mathrm{SSR}) = (n - q)\sigma^2$ found when trying to fit $n$ noisy data by means of a $q$th-order linear-in-parameters model. By analogy, the real number $q(\lambda)$ has been termed degrees of freedom of the nonparametric estimator $f_B$ (Wahba, 1990). Indeed, $q(\lambda)$ goes from 0 to $n$ as $\lambda$ decreases from $+\infty$ to 0. Therefore, the tuning of $\lambda$ can be seen as the nonparametric equivalent of model order selection.

The purpose of the present paper is to investigate the behavior of the asymptotic smoothing ratio

$$s(\lambda) := \lim_{n \to +\infty} \frac{q(\lambda)}{n}.$$

In particular, we will provide the closed-form expression of $s(\lambda)$ and discuss its possible applications in deriving fast spline smoothing algorithms.

3. Spectral characterization of the degrees of freedom

The rationale of our derivation is as follows. First it is shown that $q(\lambda)/n$ depends only on the statistics of the sampled process $f(kT)$. Then, we find a spectral factor $W(z)$ for such a process. Kalman filtering is used to show that the asymptotic smoothing ratio $s(\lambda)$ can be calculated as an integral involving $W(e^{i\omega})$. Last, Åström's algorithm is applied to explicitly solve the integral, thus yielding the desired formula.

Lemma 1. Let $f_d := [f(T) \; \ldots \; f(nT)]'$ and $\Gamma := E(f_d f_d')/\gamma^2$. Then,

$$H(\lambda) = (I + \lambda \Gamma^{-1})^{-1}.$$

Proof. By using the definition of Bayes estimate, we have

$$[f_B(T) \; \ldots \; f_B(nT)]' = E[f_d \mid y].$$

Note that, under the stated assumptions, $f_d$ and $y$ are jointly Gaussian vectors with zero mean. Then, $f_d$ conditioned on $y$ is Gaussian with mean $E(f_d f_d') E(yy')^{-1} y$ (Anderson & Moore, 1979, p. 322). By direct calculation we obtain

$$E(f_d f_d') E(yy')^{-1} y = \gamma^2 \Gamma (\gamma^2 \Gamma + \sigma^2 I)^{-1} y = (I + \lambda \Gamma^{-1})^{-1} y$$

and the result follows from the definition of influence matrix.
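Lemma 1 can be checked numerically on a small problem. The sketch below is not part of the paper: it assumes the covariance of the integrated Wiener process implied by model (2), $\Gamma_{ij} = T^3 m^2 (3M - m)/6$ with $m = \min(i, j)$ and $M = \max(i, j)$ (our own derivation), and it uses a plain Gauss-Jordan inverse, so it costs $O(n^3)$ operations. The function names are illustrative.

```python
def gamma_matrix(n, T):
    """Gamma = E(f_d f_d') / gamma^2 for the integrated Wiener process of model (2)."""
    G = [[0.0] * n for _ in range(n)]
    for i in range(1, n + 1):
        for j in range(1, n + 1):
            m, M = min(i, j), max(i, j)
            G[i - 1][j - 1] = T ** 3 * m * m * (3 * M - m) / 6.0
    return G


def invert(mat):
    """Gauss-Jordan inverse with partial pivoting (O(n^3), for illustration only)."""
    n = len(mat)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(mat)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def degrees_of_freedom(lam, n, T):
    """q(lambda) = tr H(lambda), using H = I - lambda (Gamma + lambda I)^{-1}."""
    Gam = gamma_matrix(n, T)
    shifted = [[Gam[i][j] + (lam if i == j else 0.0) for j in range(n)]
               for i in range(n)]
    inv = invert(shifted)
    return n - lam * sum(inv[i][i] for i in range(n))


if __name__ == "__main__":
    for n in (20, 40, 80):
        print(n, degrees_of_freedom(1.0, n, 1.0) / n)
```

The identity $H = (I + \lambda\Gamma^{-1})^{-1} = I - \lambda(\Gamma + \lambda I)^{-1}$ is used so that only the well-conditioned matrix $\Gamma + \lambda I$ has to be inverted. Because $\Gamma$ scales with $T^3$, the computed $q(\lambda)$ depends on $\lambda$ and $T$ only through $\lambda/T^3$, even for finite $n$.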

Definition 2. Let $L \in \mathbb{R}^{n \times np}$ be a lower triangular matrix and consider the linear time-varying system

$$x_{k+1} = \bar{A}_k x_k + \bar{B}_k u_k, \qquad y_k = \bar{C}_k x_k + \bar{D}_k u_k, \quad x_1 = 0,$$

where $u_k \in \mathbb{R}^{p \times 1}$ and $y_k \in \mathbb{R}$. Then, the quadruplet $(\bar{A}_k, \bar{B}_k, \bar{C}_k, \bar{D}_k)$ is said to be a realization of $L$ if

$$[y_1 \; \ldots \; y_n]' = L [u_1' \; \ldots \; u_n']' \tag{4}$$

for all possible values of the samples $u_1, \ldots, u_n$. Conversely, given the quadruplet $(\bar{A}_k, \bar{B}_k, \bar{C}_k, \bar{D}_k)$, the associated matrix $L$ such that (4) holds is termed n-step input-output matrix of the system.

Definition 3. Let $L \in \mathbb{R}^{n \times np}$ be a lower triangular matrix and consider the linear time-varying system

$$x_{k+1} = \bar{A}_k x_k + \bar{B}_k u_k, \qquad y_k = \bar{C}_k x_k, \quad x_0 = 0,$$

where $u_k \in \mathbb{R}^{p \times 1}$ and $y_k \in \mathbb{R}$. Then, the triplet $(\bar{A}_k, \bar{B}_k, \bar{C}_k)$ is said to be a strictly proper realization of $L$ if

$$[y_1 \; \ldots \; y_n]' = L [u_0' \; \ldots \; u_{n-1}']' \tag{5}$$

for all the possible values of the samples $u_0, \ldots, u_{n-1}$. Conversely, given the triplet $(\bar{A}_k, \bar{B}_k, \bar{C}_k)$, the associated matrix $L$ such that (5) holds is termed n-step strictly proper input-output matrix of the system.

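Lemma 2. Let $L \in \mathbb{R}^{n \times 2n}$ be the n-step strictly proper input-output matrix of the system $(F, G, C)$ where $F = e^{AT}$ and $G \in \mathbb{R}^{2 \times 2}$ is any matrix such that

$$GG' = \int_0^T e^{A(T-t)} BB' e^{A'(T-t)} \, dt. \tag{6}$$

Then, $\Gamma = LL'$.

Proof. Consider the discrete-time system

$$\tilde{x}_{k+1} = F\tilde{x}_k + G\tilde{w}_k, \qquad \tilde{f}_k = C\tilde{x}_k, \quad \tilde{x}_0 = 0,$$

where $\tilde{w}_k \in \mathbb{R}^{2 \times 1}$ is a white Gaussian noise with variance $\gamma^2 I$. By the definition of input-output matrix, we have that $E(\tilde{f}\tilde{f}') = \gamma^2 LL'$, where $\tilde{f} = (\tilde{f}_1 \; \ldots \; \tilde{f}_n)'$. The thesis will be proven by showing that $\tilde{f}$ and $f_d$ have the same statistics. In fact, with reference to system (2), the Lagrange formula implies

$$x\{(k+1)T\} = e^{AT} x(kT) + \int_0^T e^{A(T-t)} B w(kT + t) \, dt.$$

Hence,

$$\mathrm{var}[x\{(k+1)T\}] = F \, \mathrm{var}\{x(kT)\} F' + \gamma^2 \int_0^T e^{A(T-t)} BB' e^{A'(T-t)} \, dt.$$

On the other hand,

$$\mathrm{var}(\tilde{x}_{k+1}) = F \, \mathrm{var}(\tilde{x}_k) F' + \gamma^2 GG',$$

so that $x(kT)$ and $\tilde{x}_k$ have the same variance. It is then easy to show that $x(kT)$ and $\tilde{x}_k$ have the same autocovariance function so that the thesis follows. □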
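For the double integrator in (2), the matrices of Lemma 2 can be written out explicitly. The sketch below (function names are ours) uses $e^{At} = I + At$, which holds because $A^2 = 0$, and compares the resulting closed form of (6) with a simple midpoint quadrature.

```python
def expm_A(t):
    """e^{A t} for A = [[0, 0], [1, 0]]; exact because A^2 = 0."""
    return [[1.0, 0.0], [t, 1.0]]


def gg_closed_form(T):
    """Closed form of (6): integral over [0, T] of [[1, s], [s, s^2]] with s = T - t."""
    return [[T, T ** 2 / 2.0], [T ** 2 / 2.0, T ** 3 / 3.0]]


def gg_quadrature(T, steps=2000):
    """Midpoint-rule approximation of the integral in (6)."""
    h = T / steps
    acc = [[0.0, 0.0], [0.0, 0.0]]
    for k in range(steps):
        t = (k + 0.5) * h
        E = expm_A(T - t)
        v = [E[0][0], E[1][0]]  # e^{A(T-t)} B with B = [1, 0]'
        for i in range(2):
            for j in range(2):
                acc[i][j] += v[i] * v[j] * h
    return acc


if __name__ == "__main__":
    T = 0.5
    print("F   =", expm_A(T))
    print("GG' =", gg_closed_form(T))
    print("GG' (quadrature) =", gg_quadrature(T))
```

Any $G$ with this $GG'$ can be used in Lemma 2; a lower-triangular (Cholesky) factor is one convenient choice.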

As a corollary of Lemma 2, letting $\tilde{w}_k \in \mathbb{R}^{2 \times 1}$ be a white Gaussian noise with variance $\gamma^2 I$, it turns out that the linear system

$$\tilde{x}_{k+1} = F\tilde{x}_k + G\tilde{w}_k, \qquad \tilde{y}_k = C\tilde{x}_k + e_k, \quad \tilde{x}_0 = 0,$$

is a stochastic realization of the discrete-time stochastic process $y_k$ defined by (1) and (2), i.e. $\tilde{y}_k$ has the same statistics as $y_k$.

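Lemma 3. Let $\Lambda$ be a lower triangular matrix s.t. $(\Gamma + \lambda I)^{-1} = \Lambda'\Lambda$ and let $L$ be defined as in Lemma 2. Then it holds that

$$q(\lambda) = \mathrm{tr}[\Lambda L L' \Lambda'].$$

Proof. Recall that, since $(\Gamma + \lambda I)^{-1}$ is positive definite, a lower triangular factor $\Lambda$ exists. Moreover, from Lemma 2, $\Gamma = LL'$ so that

$$\mathrm{tr}\{(I + \lambda\Gamma^{-1})^{-1}\} = \mathrm{tr}\{\Gamma(\Gamma + \lambda I)^{-1}\} = \mathrm{tr}[LL'\Lambda'\Lambda] = \mathrm{tr}[\Lambda LL'\Lambda'].$$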

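Lemma 4. The system $\mathcal{W} = (F - K_k C, \; K_k, \; -C(R^e_k)^{-1/2}, \; (R^e_k)^{-1/2})$, where

$$R^e_k = C\Sigma_k C' + \lambda I,$$

$$K_k = F\Sigma_k C'(C\Sigma_k C' + \lambda)^{-1},$$

$$\Sigma_{k+1} = F\Sigma_k F' - F\Sigma_k C'(C\Sigma_k C' + \lambda)^{-1} C\Sigma_k F' + GG', \quad \Sigma_0 = 0, \tag{7}$$

is a realization of $\Lambda$.

Proof. Let $M \in \mathbb{R}^{n \times n}$ be the input-output matrix of the system $\mathcal{W}$. Define $\eta = \gamma^{-1} M y$. Since the system $\mathcal{W}$ is just the whitening filter of the process $y_k/\gamma$, the sequence $\eta_k$ is white with $\mathrm{var}(\eta) = I$ (see Anderson and Moore, 1979). Moreover the matrix $M$ is nonsingular, because $\mathcal{W}$ admits a causal linear inverse (see Anderson and Moore, 1979). Then, it holds that

$$\mathrm{var}(\eta) = I = \gamma^{-2} E(M y y' M') = M(\Gamma + \lambda I)M',$$

so that $(\Gamma + \lambda I) = M^{-1}(M')^{-1}$. Taking the inverse of the last formula the desired result is obtained. □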

Lemma 5. The following facts hold:

(1) For all possible nonnegative definite initial conditions $\Sigma_0$, $\lim_{k \to +\infty} \Sigma_k = \Sigma$ and $\lim_{k \to +\infty} K_k = K$, where $K = F\Sigma C'(C\Sigma C' + \lambda)^{-1}$, $\Sigma$ being the unique nonnegative definite symmetric solution of the algebraic Riccati equation $\Sigma = F\Sigma F' - F\Sigma C'(C\Sigma C' + \lambda)^{-1} C\Sigma F' + GG'$;

(2) All the poles of the transfer function matrix $W_K(z)W(z)$ have modulus strictly less than one, where

$$W_K(z) := [I - C(zI - F + KC)^{-1}K](C\Sigma C' + \lambda I)^{-1/2}, \qquad W(z) := C(zI - F)^{-1}G.$$

Proof. The proof is based on some properties of Kalman filtering (Anderson & Moore, 1979). First, note that the continuous-time pair $(A, B)$ is reachable. As a consequence, the discrete-time pair $(F, G)$ is reachable as well. Then, the reachability of $(F, G)$ together with the observability of $(F, C)$ imply that the solution $\Sigma_k$ of the difference Riccati equation (7) converges to a limit $\Sigma$ for all possible nonnegative definite initial conditions $\Sigma_0$. Consequently,

$$\lim_{k \to +\infty} K_k = K = F\Sigma C'(C\Sigma C' + \lambda)^{-1}.$$

Moreover, it is also well known that $\Sigma$ is stabilizing, i.e. all the eigenvalues of $F - KC$ belong to the open unit disk. Therefore, the system $\mathcal{W}$ defined in Lemma 4 is stable. As for point 2, note that the zero dynamics of the system $\mathcal{W}$ is $x_{k+1} = Fx_k$ so that the zeros of $W_K(z)$ cancel all the poles of $W(z)$. Hence, the poles of $W_K(z)W(z)$ are all and only those of $W_K(z)$, thus proving point 2.
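The convergence stated in Lemma 5 is easy to observe numerically. The following sketch iterates the difference Riccati equation (7) for the double-integrator matrices $F = e^{AT}$, $C = (0 \; 1)$ and the closed form $GG' = [[T, T^2/2], [T^2/2, T^3/3]]$ (our own evaluation of (6)); the function names and the iteration count are illustrative choices, not taken from the paper.

```python
def riccati_step(S, T, lam):
    """One step of (7); returns (Sigma_{k+1}, K_k) for a symmetric 2x2 Sigma_k = S."""
    r = S[1][1] + lam                               # C S C' + lambda
    fsc = [S[0][1], T * S[0][1] + S[1][1]]          # F S C'
    fs = [[S[0][0], S[0][1]],
          [T * S[0][0] + S[1][0], T * S[0][1] + S[1][1]]]   # F S
    fsf = [[fs[0][0], T * fs[0][0] + fs[0][1]],
           [fs[1][0], T * fs[1][0] + fs[1][1]]]             # F S F'
    gg = [[T, T ** 2 / 2.0], [T ** 2 / 2.0, T ** 3 / 3.0]]
    new = [[fsf[i][j] - fsc[i] * fsc[j] / r + gg[i][j] for j in range(2)]
           for i in range(2)]
    gain = [fsc[0] / r, fsc[1] / r]
    return new, gain


def steady_state(T, lam, iters=500):
    """Iterate (7) from Sigma_0 = 0; returns the last iterate and the last gain."""
    S = [[0.0, 0.0], [0.0, 0.0]]
    K = [0.0, 0.0]
    for _ in range(iters):
        S, K = riccati_step(S, T, lam)
    return S, K


if __name__ == "__main__":
    T, lam = 1.0, 1.0
    S, K = steady_state(T, lam)
    # F - K C = [[1, -K[0]], [T, 1 - K[1]]]
    det = (1.0 - K[1]) + K[0] * T
    tr = 2.0 - K[1]
    print("Sigma =", S)
    print("K =", K, " det(F-KC) =", det, " tr(F-KC) =", tr)
```

For a real $2 \times 2$ matrix, both eigenvalues lie in the open unit disk exactly when $|\det| < 1$ and $|\mathrm{tr}| < 1 + \det$, which gives a quick check that the limiting gain is stabilizing.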

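Theorem 1. Let $\Phi(z) = W(z)W'(z^{-1})$. Then,

$$s(\lambda) = \frac{1}{2\pi} \int_{-\pi}^{\pi} \frac{\Phi(e^{i\omega})}{\Phi(e^{i\omega}) + \lambda} \, d\omega. \tag{8}$$

Moreover all the roots of the denominator of $\Phi(z)/\{\Phi(z) + \lambda\}$ have modulus different from one.

Proof. In view of Lemma 3, $q(\lambda) = \sum_{k=1}^{n} \mathrm{var}(\eta_k)$ where $\eta := \Lambda L \tilde{w}$, $\tilde{w} \in \mathbb{R}^{2n \times 1}$ being a stochastic vector with $\mathrm{var}[\tilde{w}] = I$. Given Lemmas 4 and 5, $\eta_k$ converges to a stationary process $\bar{\eta}_k$ as $n$ and $k \to +\infty$. Moreover, again in view of Lemmas 4 and 5, the spectrum of $\bar{\eta}_k$ is

$$\Phi_{\bar{\eta}\bar{\eta}}(e^{i\omega}) = |W_K(e^{i\omega})|^2 \, W(e^{i\omega}) W'(e^{-i\omega}).$$

Hence,

$$s(\lambda) = \lim_{n \to +\infty} \frac{\sum_{k=1}^{n} \mathrm{var}(\eta_k)}{n} = \mathrm{var}(\bar{\eta}_k) = \frac{1}{2\pi} \int_{-\pi}^{\pi} |W_K(e^{i\omega})|^2 \, W(e^{i\omega}) W'(e^{-i\omega}) \, d\omega. \tag{9}$$

Next, we prove that $W_K(z)W_K(z^{-1}) = \{W(z)W'(z^{-1}) + \lambda\}^{-1}$. In Anderson and Moore (1979) the following relations are derived:

$$\{W(z)W'(z^{-1}) + \lambda\}^{-1} = [\{1 + \tilde{W}_K(z)\}(C\Sigma C' + \lambda I)\{1 + \tilde{W}_K(z^{-1})\}]^{-1},$$

where $\tilde{W}_K(z) := C(zI - F)^{-1}K$ satisfies

$$\tilde{W}_K(z) = \frac{C(zI - F + KC)^{-1}K}{1 - C(zI - F + KC)^{-1}K}.$$

Then, after some calculation, one can obtain

$$[\{1 + \tilde{W}_K(z)\}(C\Sigma C' + \lambda I)\{1 + \tilde{W}_K(z^{-1})\}]^{-1} = W_K(z)W_K(z^{-1})$$

and the desired formula follows. As for the roots of $\Phi(z)/\{\Phi(z) + \lambda\}$, consider (9). Recalling the proof of Lemma 5, the poles of $W_K(z)W(z)$ are all and only those of $W_K(z)$. Then, the thesis is an immediate consequence of Lemma 5. □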
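Integral (8) can also be evaluated by brute force, which gives a useful reference value. The sketch below is only an illustration under stated assumptions: it builds $W(e^{i\omega}) = C(zI - F)^{-1}G$ for the double integrator, takes $G$ as the lower-triangular factor of $GG' = [[T, T^2/2], [T^2/2, T^3/3]]$ (our own evaluation of (6)), and uses a midpoint grid so that the double pole of $W(z)$ at $z = 1$ is never sampled. Function names are ours.

```python
import cmath
import math


def phi(omega, T):
    """Phi(e^{i omega}) = W(z) W'(1/z) on the unit circle, for the sampled model (2)."""
    z = cmath.exp(1j * omega)
    # Lower-triangular G with G G' = [[T, T^2/2], [T^2/2, T^3/3]]
    g11 = math.sqrt(T)
    g21 = T ** 1.5 / 2.0
    g22 = T ** 1.5 / (2.0 * math.sqrt(3.0))
    # C (zI - F)^{-1} = [T / (z - 1)^2, 1 / (z - 1)] for F = [[1, 0], [T, 1]], C = [0, 1]
    c1 = T / (z - 1.0) ** 2
    c2 = 1.0 / (z - 1.0)
    w1 = c1 * g11 + c2 * g21
    w2 = c2 * g22
    # With real coefficients, W'(1/z) is the conjugate transpose of W(z) when |z| = 1
    return abs(w1) ** 2 + abs(w2) ** 2


def smoothing_ratio(lam, T, n_grid=4096):
    """Midpoint-rule approximation of integral (8)."""
    h = 2.0 * math.pi / n_grid
    total = 0.0
    for k in range(n_grid):
        omega = -math.pi + (k + 0.5) * h   # never exactly zero for even n_grid
        p = phi(omega, T)
        total += p / (p + lam)
    return total * h / (2.0 * math.pi)


if __name__ == "__main__":
    print(smoothing_ratio(1.0, 1.0))
    print(smoothing_ratio(8.0, 2.0))   # same lambda / T^3, same ratio
```

Since $\Phi$ scales with $T^3$, the two calls in the usage example return the same value up to rounding, in line with the claim that $s(\lambda)$ depends only on $\lambda/T^3$.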

Note that, apart from a scale factor $\sigma^2$, expression (8) is formally equivalent to the variance of the error committed by the Wiener smoother used to reconstruct a stationary process with spectrum $\Phi(e^{i\omega})$ from noisy measurements having variance $\sigma^2$. Then, one may suspect that Theorem 1 could be alternatively derived via Wiener filtering theory. This approach, however, would raise two problems. First, due to its unit-modulus poles, $\Phi(e^{i\omega})$ defined in Theorem 1 is not the spectrum of a stationary process. Second, Wiener filtering deals only with the minimization of the stationary estimation error and cannot say anything about convergence issues, which in our derivation are taken care of by Lemma 5.

By suitably defining the polynomials $A(z)$ and $B(z)$, integral (8) can be rewritten as

$$s(\lambda) = \frac{1}{2\pi} \int_{-\pi}^{\pi} \frac{\Phi(e^{i\omega})}{\Phi(e^{i\omega}) + \lambda} \, d\omega = \frac{1}{2\pi i} \oint_{|z|=1} \frac{B(z)B(z^{-1})}{A(z)A(z^{-1})} \frac{dz}{z}$$

and can be evaluated applying the algorithm given in Åström (1970). This procedure, which is summarized in the next lemma, requires that all the roots of $A(z)$ have modulus strictly less than one, which can always be guaranteed in view of Theorem 1.

Lemma 6. Given the polynomials

A(z)"a0,p

zp#a1,p

zp~1#2#ap,p

,

B(z)"b0,p

zp#b1,p

zp~1#2#bp,p

,

if A(z) is stable it holds

1

2pi Q@z@/1

A(z)A(z~1)

B(z)B(z~1)

dz

z"

1

a0,p

p+i/0

(bi,i

)2

a0,i

,

Aut=1584=Chan=VVC

G. De Nicolao et al. / Automatica 36 (2000) 1733}1739 1737

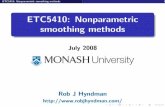

Fig. 1. Asymptotic smoothing ratio s(m) vs. m"c/¹3.

where, for k"p, p!1,2,1

ak"

ak,k

a0,k

, bk"

bk,k

a0,k

,

ai,k~1

"ai,k!a

kak~i,k

, i"0,2, k!1

bi,k~1

"bi,k!b

kak~i,k

, i"0,2, k!1.
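The recursion of Lemma 6 takes only a few lines of code. The sketch below is our own transcription (the function name and the list-based coefficient layout, highest power first, are assumptions); it is checked on two autoregressive examples whose stationary variances are known in closed form.

```python
def astrom_integral(a, b):
    """Evaluate (1 / 2 pi i) * contour integral of B(z)B(1/z) / (A(z)A(1/z)) dz/z.

    a, b: coefficient lists [c_0, ..., c_p] of c_0 z^p + ... + c_p (same length).
    A(z) is assumed to have all its roots strictly inside the unit circle.
    """
    a = [float(x) for x in a]
    b = [float(x) for x in b]
    p = len(a) - 1
    a0p = a[0]
    total = 0.0
    for k in range(p, 0, -1):
        total += b[k] ** 2 / a[0]          # term (b_{k,k})^2 / a_{0,k}
        alpha = a[k] / a[0]
        beta = b[k] / a[0]
        a_next = [a[i] - alpha * a[k - i] for i in range(k)]
        b_next = [b[i] - beta * a[k - i] for i in range(k)]
        a, b = a_next, b_next
    total += b[0] ** 2 / a[0]              # term (b_{0,0})^2 / a_{0,0}
    return total / a0p


if __name__ == "__main__":
    # AR(1): A(z) = z - 0.5, B(z) = z, variance 1 / (1 - 0.25)
    print(astrom_integral([1.0, -0.5], [1.0, 0.0]))
    # AR(2): A(z) = z^2 - 0.3 z - 0.1, B(z) = z^2
    print(astrom_integral([1.0, -0.3, -0.1], [1.0, 0.0, 0.0]))
```

For $p = 2$, which is the case needed for cubic splines, the loop runs twice, so the cost of evaluating $s(\lambda)$ this way does not depend on the number of data $n$.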

4. Explicit formula of the asymptotic smoothing ratio

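By using Lemma 6, the following formula for the asymptotic smoothing ratio is obtained:

$$s(\lambda) = s(\xi) = \frac{a_{0,2}^2}{b_{1,2}^2 \, (a_{0,2}^4 - 36\xi^2)} \times \left\{ \frac{(a_{0,2}^2 + 6\xi - b_{1,2}^2 a_{0,2} a_{1,2})^2}{(a_{0,2}^2 + 6\xi)^2 - a_{1,2}^2 a_{0,2}^2} + b_{1,2}^4 \right\},$$

$$a_{1,2} = \frac{(6)^{1/2} - (2 + 96\xi)^{1/2}}{2},$$

$$a_{0,2} = \frac{1 - 24\xi}{2 a_{1,2}} + \frac{1}{2} \left\{ \left( \frac{1 - 24\xi}{a_{1,2}} \right)^2 - 24\xi \right\}^{1/2},$$

$$b_{1,2} = \frac{(3)^{1/2} + 1}{(2)^{1/2}},$$

$$\xi = \frac{\lambda}{T^3}.$$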
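A direct transcription of the explicit formula is sketched below, together with an independent quadrature check. Two points are our own assumptions rather than statements from the paper: the ratio $(1 - 24\xi)/a_{1,2}$ is coded in the algebraically equivalent form $\{(6)^{1/2} + (2 + 96\xi)^{1/2}\}/2$ to avoid a 0/0 at $\xi = 1/24$, and the quadrature uses the simplified integrand $(2 + \cos\omega)/\{2 + \cos\omega + 12\xi(1 - \cos\omega)^2\}$ that we obtained by evaluating $\Phi(e^{i\omega})$ for the double integrator.

```python
import math


def smoothing_ratio(xi):
    """Closed-form s(xi), xi = lambda / T^3, following the explicit formula."""
    r6 = math.sqrt(6.0)
    r = math.sqrt(2.0 + 96.0 * xi)
    a1 = (r6 - r) / 2.0                                   # a_{1,2}
    # (1 - 24 xi) / a_{1,2} == (sqrt(6) + sqrt(2 + 96 xi)) / 2 (assumed rearrangement)
    ratio = (r6 + r) / 2.0
    a0 = ratio / 2.0 + 0.5 * math.sqrt(ratio ** 2 - 24.0 * xi)   # a_{0,2}
    b1_sq = 2.0 + math.sqrt(3.0)                          # b_{1,2}^2
    num = (a0 ** 2 + 6.0 * xi - b1_sq * a0 * a1) ** 2
    den = (a0 ** 2 + 6.0 * xi) ** 2 - (a1 * a0) ** 2
    return a0 ** 2 / (b1_sq * (a0 ** 4 - 36.0 * xi ** 2)) * (num / den + b1_sq ** 2)


def smoothing_ratio_quadrature(xi, n_grid=20000):
    """Midpoint-rule value of integral (8) with the simplified integrand (assumption)."""
    h = 2.0 * math.pi / n_grid
    total = 0.0
    for k in range(n_grid):
        c = math.cos(-math.pi + (k + 0.5) * h)
        total += (2.0 + c) / (2.0 + c + 12.0 * xi * (1.0 - c) ** 2)
    return total * h / (2.0 * math.pi)


if __name__ == "__main__":
    for xi in (0.01, 1.0 / 24.0, 1.0, 100.0):
        print(xi, smoothing_ratio(xi), smoothing_ratio_quadrature(xi))
```

The closed form needs a handful of square roots and multiplications, independently of $n$, whereas the quadrature is included only as a cross-check.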

The log-scale plot of the function $s(\xi)$ is given in Fig. 1.

The merit of the explicit formula for the degrees of freedom is two-fold. First, on the theoretical side, the result clarifies the dependence of $\lambda$ on the sampling interval, thus explaining why disparate values of $\lambda$ are encountered in practice. For example, since $s(\lambda)$ depends only on the ratio $\lambda/T^3$, one cannot state that $\lambda = 10^7$ corresponds to a smoothing ratio close to 0 if the value of $T$ is not specified. Hence, when presenting the results of nonparametric spline regression, one should always provide the associated degrees of freedom since the smoothness parameter $\lambda$ alone does not enable the user to assess the amount of smoothing. With regard to this point, Fig. 1 provides a direct link between $\lambda$ and $q(\lambda)$. Conversely, it is also possible to directly obtain the value of $\lambda$ that guarantees the desired smoothing ratio.
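The inverse problem mentioned above, finding the $\lambda$ that yields a prescribed smoothing ratio, can be sketched with a bisection on $\log_{10}\xi$, since $s(\xi)$ decreases monotonically in $\xi$. The code below is an illustration only: `xi_for_ratio`, the search bracket $[10^{-8}, 10^{8}]$ and the iteration count are our assumptions, and the closed form is coded with the same rearrangement of $(1 - 24\xi)/a_{1,2}$ used to avoid the 0/0 at $\xi = 1/24$.

```python
import math


def smoothing_ratio(xi):
    """Closed-form s(xi), xi = lambda / T^3 (explicit formula of Section 4)."""
    r6 = math.sqrt(6.0)
    r = math.sqrt(2.0 + 96.0 * xi)
    a1 = (r6 - r) / 2.0
    ratio = (r6 + r) / 2.0          # equals (1 - 24 xi) / a_{1,2} where defined
    a0 = ratio / 2.0 + 0.5 * math.sqrt(ratio ** 2 - 24.0 * xi)
    b1_sq = 2.0 + math.sqrt(3.0)
    num = (a0 ** 2 + 6.0 * xi - b1_sq * a0 * a1) ** 2
    den = (a0 ** 2 + 6.0 * xi) ** 2 - (a1 * a0) ** 2
    return a0 ** 2 / (b1_sq * (a0 ** 4 - 36.0 * xi ** 2)) * (num / den + b1_sq ** 2)


def xi_for_ratio(target, lo_exp=-8.0, hi_exp=8.0, iters=200):
    """Return xi such that s(xi) = target, by bisection on log10(xi)."""
    if not smoothing_ratio(10.0 ** hi_exp) < target < smoothing_ratio(10.0 ** lo_exp):
        raise ValueError("target smoothing ratio outside the search bracket")
    for _ in range(iters):
        mid = 0.5 * (lo_exp + hi_exp)
        if smoothing_ratio(10.0 ** mid) > target:
            lo_exp = mid            # too little smoothing: increase xi
        else:
            hi_exp = mid
    return 10.0 ** (0.5 * (lo_exp + hi_exp))


if __name__ == "__main__":
    T = 0.005                       # hypothetical sampling period
    xi = xi_for_ratio(0.1)          # ask for q(lambda) of about n / 10
    print("xi =", xi, " lambda =", xi * T ** 3)
```

The smoothness parameter is then recovered as $\lambda = \xi T^3$, which makes explicit how strongly the appropriate $\lambda$ changes with the sampling period.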

Second, the result of the present paper has also a computational value. Consider the typical GCV-based smoothing spline algorithm: in order to tune $\lambda$, it is necessary to compute the spline estimate, which requires $17n$ multiplications (Kohn & Ansley, 1989), as well as to evaluate the equivalent degrees of freedom, which requires an additional $14n$ multiplications. All these computations must be repeated for several values of $\lambda$ until the GCV criterion has been minimized. The new explicit formula can be exploited to work out a faster GCV-based algorithm in which the degrees of freedom are calculated through the explicit formula, thus saving $14n$ multiplications. Note that the approximation involved in the use of the asymptotic value is not a major problem because the GCV itself is nothing but an easy-to-compute approximation of the Ordinary Cross Validation criterion (Wahba, 1990). It goes without saying that the proposed fast algorithm preserves all the asymptotic properties of the traditional GCV-based algorithms.

A natural field of application of this fast scheme is the on-line processing of signals, when there are critical time and memory constraints. As an example taken from electronic instrumentation, in the measurement of blood velocity by means of ultrasound (Dotti & Lombardi, 1996), the smoothing of a single velocity profile should be very fast in order to keep pace with the rate at which the profiles are acquired (200 Hz). In these situations, the algorithm could be made even faster by computing the estimates using a Wiener smoother approximation. This would require $8n$ multiplications against the $17n$ multiplications required by the above cited Kohn and Ansley method.
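The multiplication counts quoted in this section account for the speed-ups stated in the abstract. As a rough worked example, counting only the multiplications per evaluation of the GCV criterion and neglecting the cost of the explicit formula, which does not grow with $n$:

```latex
\begin{align*}
\text{standard GCV step:} \quad & 17n + 14n = 31n, \\
\text{explicit formula for } q(\lambda): \quad & 17n, \qquad \frac{17n}{31n} \approx 0.55, \\
\text{explicit formula and Wiener smoother approximation:} \quad & 8n, \qquad \frac{8n}{31n} \approx 0.26.
\end{align*}
```

These ratios correspond to the "about one-half" and "one-fourth" figures given for the computational complexity.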

5. Concluding remarks

An explicit formula for the asymptotic degrees of freedom of cubic splines has been derived via spectral factorization techniques. The same approach can be used to obtain an analogous result for linear splines. Another extension could be the assessment of the smoothing ratio for deconvolution schemes based on Bayesian estimation (De Nicolao, Sparacino & Cobelli, 1997).


References

Anderson, B. D. O., & Moore, J. B. (1979). Optimal filtering. Englewood Cliffs, NJ: Prentice-Hall.

Åström, K. A. (1970). Introduction to stochastic control theory. New York: Academic Press.

Bortoff, S. A. (1997). Approximate state-feedback linearization using spline functions. Automatica, 33, 1449–1458.

Craven, P., & Wahba, G. (1979). Smoothing noisy data with spline functions: estimating the correct degree of smoothing by the method of generalized cross-validation. Numerische Mathematik, 31, 377–403.

De Luca, A., Lanari, L., & Oriolo, G. (1991). A sensitivity approach to optimal spline robot trajectories. Automatica, 27, 535–539.

De Nicolao, G., Sparacino, G., & Cobelli, C. (1997). Nonparametric input estimation in physiological systems: Problems, methods and case studies. Automatica, 33, 851–870.

Dotti, D., & Lombardi, R. (1996). Estimation of the angle between ultrasound beam and blood velocity through correlation functions. IEEE Transactions on Ultrasonics, Ferroelectrics and Frequency Control, UFFC-43, 864–869.

Ferrari-Trecate, G. (1999). Bayesian methods for nonparametric regression with neural networks. Ph.D. thesis. Università degli Studi di Pavia, Dip. di Informatica e Sistemistica, Pavia, Italy.

Golub, G., Heath, M., & Wahba, G. (1979). Generalized cross validation as a method for choosing a good ridge parameter. Technometrics, 21, 215–224.

Hall, P., & Titterington, D. (1987). Common structure of techniques for choosing smoothing parameters in regression problems. Journal of the Royal Statistical Society Series B, 49, 184–198.

Hastie, T. J., & Tibshirani, R. J. (1990). Generalized additive models. London: Chapman & Hall.

Kohn, R., & Ansley, C. F. (1989). A fast algorithm for signal extraction, influence and cross-validation in state space models. Biometrika, 76(1), 65–79.

Kormylo, J. (1991). Kalman smoothing via auxiliary outputs. Automatica, 27(2), 371–373.

MacKay, D. J. C. (1992). Bayesian interpolation. Neural Computation, 4, 415–447.

Silverman, B. W. (1985). Some aspects of the spline approach to nonparametric regression curve fitting. Journal of the Royal Statistical Society Series B, 47, 1–52.

Speckman, P. (1985). Spline smoothing and optimal rates of convergence in nonparametric regression models. The Annals of Statistics, 13, 970–983.

Utreras, F. (1983). Natural spline functions, their associated eigenvalue problem. Numerische Mathematik, 42, 107–117.

Wahba, G. (1983). Bayesian 'confidence intervals' for the cross-validated smoothing spline. Journal of the Royal Statistical Society Series B, 45, 133–150.

Wahba, G. (1990). Spline models for observational data. Philadelphia, PA: SIAM.

Wecker, W., & Ansley, C. (1983). The signal extraction approach to non-linear regression and spline smoothing. Journal of the American Statistical Association, 78, 81–89.

Giuseppe De Nicolao was born in Padova, Italy, on April 24, 1962. He received the Doctor's degree in Electronic Engineering ('Laurea') in 1986 from the Politecnico of Milano, Italy. From 1987 to 1988 he was with the Biomathematics and Biostatistics Unit of the Institute of Pharmacological Researches 'Mario Negri', Milano. In 1988 he entered the Italian National Research Council (C.N.R.) as a researcher of the Centro di Teoria dei Sistemi in Milano. Since 2000 he has been full Professor of Model Identification in the Dipartimento di Informatica e Sistemistica of the Università di Pavia (Italy). In the summer of 1991, he held a visiting fellowship at the Department of Systems Engineering of the Australian National University, Canberra (Australia). In 1998 he was a plenary speaker at the workshop on 'Nonlinear model predictive control: Assessment and future directions for research', Ascona, Switzerland. He is a Senior Member of the IEEE, and, since 1999, Associate Editor of the IEEE Transactions on Automatic Control. His research interests include predictive and receding-horizon control, optimal and robust prediction and filtering, Bayesian learning and neural networks, linear periodic systems, deconvolution techniques, modeling and simulation of endocrine systems. On these subjects he has authored or coauthored more than 50 journal papers.

Giovanni Sparacino was born in Pordenone, Italy, on November 11, 1967. He received the Laurea degree in Electronics Engineering cum laude from the University of Padova, Italy, in 1992 and the Ph.D. degree in Biomedical Engineering from the Polytechnic of Milan, Italy, in 1996. From January 1997 to December 1998 he was Research Engineer at the Department of Audiology and Phoniatrics of the University of Padova & Padova City Hospital. In December 1998 he joined the Department of Electronics and Informatics of the University of Padova as a Research Scientist of Biomedical Engineering. Since Academic Year 1997–1998 he has held courses on Biomedical Engineering and Medical Informatics at the Faculty of Medicine and at the Faculty of Engineering of the University of Padova. His current scientific interests include deconvolution, parameter estimation and time-series analysis of physiological systems.

Giancarlo Ferrari Trecate was born in Casorate Primo, Italy, in 1970. He received the 'Laurea' degree in Computer Engineering in 1995 and the Ph.D. degree in Electronic and Computer Engineering in 1999, both from the University of Pavia. In spring 1998 he was visiting researcher at the Neural Computing Research Group, University of Birmingham, UK. In 1998 he joined the Swiss Federal Institute of Technology (ETH) as a Postdoctoral fellow. In 1999 he won the 'assegno di ricerca' at the University of Pavia. His research interests include linear periodic systems, Bayesian learning, fuzzy systems and hybrid systems.
