Example: BP learning of the XOR function

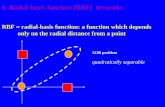


• Training samples (bipolar)

| Pattern | in_1 | in_2 | d |
|---|---|---|---|
| P0 | -1 | -1 | -1 |
| P1 | -1 | 1 | 1 |
| P2 | 1 | -1 | 1 |
| P3 | 1 | 1 | -1 |

• Network: 2-2-1 with thresholds (bias nodes with fixed output 1)

• Initial weights W(0):

$$w_1^{(1,0)} = (-0.5,\ 0.5,\ -0.5), \qquad w_2^{(1,0)} = (-0.5,\ -0.5,\ 0.5), \qquad w^{(2,1)} = (-1,\ 1,\ 1)$$

• Learning rate = 0.2

• Node function: hyperbolic tangent

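$$g(x) = \frac{1 - e^{-x}}{1 + e^{-x}}, \qquad \lim_{x \to \pm\infty} g(x) = \pm 1$$

$$s(x) = \frac{1}{1 + e^{-x}}, \qquad g(x) = 2s(x) - 1$$

$$s'(x) = s(x)\,(1 - s(x)), \qquad g'(x) = 0.5\,(1 + g(x))(1 - g(x))$$

The slides label this function $\tanh(x)$. As defined here it equals $\tanh(x/2)$, and that is the function the worked numbers use (for example, $g(-0.5) = -0.24492$).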
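The node function and its derivative can be sketched in a few lines of Python. This is only an illustration of the definitions above; the function names `s`, `g`, and `g_prime` are chosen here and do not come from the slides.

```python
import math

def s(x):
    """Logistic sigmoid s(x) = 1 / (1 + e^-x)."""
    return 1.0 / (1.0 + math.exp(-x))

def g(x):
    """Bipolar node function g(x) = 2 s(x) - 1, which equals tanh(x / 2)."""
    return 2.0 * s(x) - 1.0

def g_prime(x):
    """Derivative expressed through g itself: 0.5 (1 + g)(1 - g)."""
    gx = g(x)
    return 0.5 * (1.0 + gx) * (1.0 - gx)

if __name__ == "__main__":
    print(g(-0.5))          # about -0.24492, the hidden-node output used for P0
    print(math.tanh(-0.25)) # same value, since g(x) = tanh(x / 2)
```

Writing the derivative in terms of `g(x)` means the backward pass can reuse the node outputs already computed in the forward pass.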

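[Figure: 2-2-1 network diagram. The input pattern $p_j$ feeds the hidden nodes through $W^{(1,0)}$; the hidden outputs $x_1^{(1)}$ and $x_2^{(1)}$ feed the output node $o$ through $W^{(2,1)}$. Nodes in each layer are numbered 0, 1, 2.]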

Present $P_0 = (1, -1, -1)$, $d_0 = -1$

Forward computing

$$net_1 = w_1^{(1,0)} \cdot p_0 = (-0.5,\ 0.5,\ -0.5) \cdot (1,\ -1,\ -1) = -0.5$$

$$net_2 = w_2^{(1,0)} \cdot p_0 = (-0.5,\ -0.5,\ 0.5) \cdot (1,\ -1,\ -1) = -0.5$$

$$x_1^{(1)} = g(net_1) = \frac{2}{1 + e^{0.5}} - 1 = -0.24492$$

$$x_2^{(1)} = g(net_2) = \frac{2}{1 + e^{0.5}} - 1 = -0.24492$$

$$net_o = w^{(2,1)} \cdot x^{(1)} = (-1,\ 1,\ 1) \cdot (1,\ -0.24492,\ -0.24492) = -1.48984$$

$$o = g(net_o) = -0.63211$$
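The forward pass for $P_0$ can be checked numerically. The sketch below assumes that the first component of each vector is the fixed bias input 1; the helper `dot` and the variable names are illustrative and do not come from the slides.

```python
import math

def g(x):
    """Bipolar node function used in the example: 2 / (1 + e^-x) - 1."""
    return 2.0 / (1.0 + math.exp(-x)) - 1.0

def dot(w, v):
    """Inner product of two equal-length sequences."""
    return sum(wi * vi for wi, vi in zip(w, v))

# Initial weights W(0); index 0 is the threshold (bias) weight.
w1 = (-0.5, 0.5, -0.5)    # into hidden node 1
w2 = (-0.5, -0.5, 0.5)    # into hidden node 2
w_out = (-1.0, 1.0, 1.0)  # into the output node

p0 = (1.0, -1.0, -1.0)    # bias input followed by in_1, in_2

net1 = dot(w1, p0)
net2 = dot(w2, p0)
x = (1.0, g(net1), g(net2))  # hidden-layer output, with bias node prepended
net_o = dot(w_out, x)
o = g(net_o)

if __name__ == "__main__":
    print(net1, net2)  # both -0.5
    print(x[1], x[2])  # both about -0.24492
    print(net_o, o)    # about -1.48984 and -0.63211
```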

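Error back propagation

$$l = d - o = -1 - (-0.63211) = -0.36789$$

$$\delta = l \cdot g'(net_o) = l\,(1 - g(net_o))(1 + g(net_o)) = -0.3679\,(1 - 0.6321)(1 + 0.6321) = -0.2209$$

$$\delta_1 = \delta \cdot w_1^{(2,1)} \cdot g'(net_1) = -0.2209 \cdot 1 \cdot (1 - 0.24492)(1 + 0.24492) = -0.20765$$

$$\delta_2 = \delta \cdot w_2^{(2,1)} \cdot g'(net_2) = -0.2209 \cdot 1 \cdot (1 - 0.24492)(1 + 0.24492) = -0.20765$$

Note that these worked numbers evaluate $g'$ as $(1 - g)(1 + g)$, without the factor 0.5 that appears in the definition of $g'$.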
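The error terms for $P_0$ can be reproduced as follows. This is a sketch under two assumptions: it starts from the rounded forward-pass values quoted in the slides, and it follows the slides' arithmetic, which uses $(1 - g)(1 + g)$ for the derivative and so drops the factor 0.5. The names `delta_o`, `delta_1`, and `delta_2` are illustrative.

```python
# Forward-pass results for P0, rounded as in the slides.
d = -1.0           # desired output
o = -0.63211       # actual output
x1 = x2 = -0.24492 # hidden-node outputs
w_out = (-1.0, 1.0, 1.0)  # (bias, hidden 1, hidden 2) weights into the output node

# Output error and output-node error term.
l = d - o
delta_o = l * (1.0 - o) * (1.0 + o)

# Hidden-node error terms: propagate delta_o back through the output weights.
delta_1 = delta_o * w_out[1] * (1.0 - x1) * (1.0 + x1)
delta_2 = delta_o * w_out[2] * (1.0 - x2) * (1.0 + x2)

if __name__ == "__main__":
    print(l)                 # about -0.36789
    print(delta_o)           # about -0.2209
    print(delta_1, delta_2)  # both about -0.20765
```

Including the factor 0.5 would halve `delta_o`, which has the same effect on the first update as halving the learning rate.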

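Weight update

Here $\eta = 0.2$ is the learning rate, $\delta = -0.2209$ is the error term at the output node, and $\delta_1 = \delta_2 = -0.2077$ are the error terms at the hidden nodes.

$$\Delta w^{(2,1)} = \eta\,\delta\,x^{(1)} = 0.2\,(-0.2209)\,(1,\ -0.2449,\ -0.2449) = (-0.0442,\ 0.0108,\ 0.0108)$$

$$\Delta w_1^{(1,0)} = \eta\,\delta_1\,p_0 = 0.2\,(-0.2077)\,(1,\ -1,\ -1) = (-0.0415,\ 0.0415,\ 0.0415)$$

$$\Delta w_2^{(1,0)} = \eta\,\delta_2\,p_0 = 0.2\,(-0.2077)\,(1,\ -1,\ -1) = (-0.0415,\ 0.0415,\ 0.0415)$$

$$w^{(2,1)} \leftarrow w^{(2,1)} + \Delta w^{(2,1)} = (-1,\ 1,\ 1) + (-0.0442,\ 0.0108,\ 0.0108) = (-1.0442,\ 1.0108,\ 1.0108)$$

$$w_1^{(1,0)} \leftarrow w_1^{(1,0)} + \Delta w_1^{(1,0)} = (-0.5,\ 0.5,\ -0.5) + (-0.0415,\ 0.0415,\ 0.0415) = (-0.5415,\ 0.5415,\ -0.4585)$$

$$w_2^{(1,0)} \leftarrow w_2^{(1,0)} + \Delta w_2^{(1,0)} = (-0.5,\ -0.5,\ 0.5) + (-0.0415,\ 0.0415,\ 0.0415) = (-0.5415,\ -0.4585,\ 0.5415)$$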
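The first weight update can be verified with a short script. It is a sketch that takes the rounded error terms from the worked step as given; the helper `updated` and the variable names are illustrative, not from the slides.

```python
ETA = 0.2  # learning rate

# Quantities from the worked step for P0 (rounded as in the slides).
delta_o = -0.2209               # error term at the output node
delta_h = (-0.20765, -0.20765)  # error terms at hidden nodes 1 and 2
p0 = (1.0, -1.0, -1.0)          # input pattern with bias input first
x = (1.0, -0.24492, -0.24492)   # hidden-layer output with bias node first

w1 = (-0.5, 0.5, -0.5)
w2 = (-0.5, -0.5, 0.5)
w_out = (-1.0, 1.0, 1.0)

def updated(w, delta, inputs, eta=ETA):
    """Delta rule: w + eta * delta * inputs, applied component by component."""
    return tuple(wi + eta * delta * xi for wi, xi in zip(w, inputs))

w_out_new = updated(w_out, delta_o, x)
w1_new = updated(w1, delta_h[0], p0)
w2_new = updated(w2, delta_h[1], p0)

if __name__ == "__main__":
    print(w_out_new)  # close to (-1.0442, 1.0108, 1.0108)
    print(w1_new)     # close to (-0.5415, 0.5415, -0.4585)
    print(w2_new)     # close to (-0.5415, -0.4585, 0.5415)
```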

Error $l^2$ for $P_0$ reduced from 0.135345 to 0.102823

[Figure: MSE reduction, plotted every 10 epochs. Vertical axis: MSE, from 0 to 1.6; horizontal axis: sample points 1 to 19.]

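Output: every 10 epochs

| epoch | 1 | 10 | 20 | 40 | 90 | 140 | 190 | d |
|---|---|---|---|---|---|---|---|---|
| P0 | -0.63 | -0.05 | -0.38 | -0.77 | -0.89 | -0.92 | -0.93 | -1 |
| P1 | -0.63 | -0.08 | 0.23 | 0.68 | 0.85 | 0.89 | 0.90 | 1 |
| P2 | -0.62 | -0.16 | 0.15 | 0.68 | 0.85 | 0.89 | 0.90 | 1 |
| P3 | -0.38 | 0.03 | -0.37 | -0.77 | -0.89 | -0.92 | -0.93 | -1 |
| MSE | 1.44 | 1.12 | 0.52 | 0.074 | 0.019 | 0.010 | 0.007 | |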
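A complete training run can be sketched as below. Several choices here are assumptions rather than facts from the slides: patterns are presented in the fixed order P0 to P3, weights are updated after every pattern, the derivative is taken as $(1 - g)(1 + g)$ as in the worked step, and MSE is the mean squared error over the four patterns at the end of each epoch. The numbers it produces therefore need not match the table exactly.

```python
import math

def g(x):
    """Bipolar node function: 2 / (1 + e^-x) - 1."""
    return 2.0 / (1.0 + math.exp(-x)) - 1.0

# Each sample: (bias, in_1, in_2) and the desired output d.
SAMPLES = [
    ((1.0, -1.0, -1.0), -1.0),
    ((1.0, -1.0, 1.0), 1.0),
    ((1.0, 1.0, -1.0), 1.0),
    ((1.0, 1.0, 1.0), -1.0),
]

def forward(w_hid, w_out, p):
    """Return the hidden-layer output (bias node first) and the network output."""
    x = [1.0] + [g(sum(w * v for w, v in zip(row, p))) for row in w_hid]
    o = g(sum(w * v for w, v in zip(w_out, x)))
    return x, o

def train(epochs=190, eta=0.2):
    """Online backpropagation from the initial weights W(0); returns weights and MSE history."""
    w_hid = [[-0.5, 0.5, -0.5], [-0.5, -0.5, 0.5]]
    w_out = [-1.0, 1.0, 1.0]
    history = []
    for _ in range(epochs):
        for p, d in SAMPLES:
            x, o = forward(w_hid, w_out, p)
            delta_o = (d - o) * (1.0 - o) * (1.0 + o)
            # Hidden error terms use the output weights from before this update.
            deltas = [delta_o * w_out[j + 1] * (1.0 - x[j + 1]) * (1.0 + x[j + 1])
                      for j in range(2)]
            w_out = [w + eta * delta_o * xi for w, xi in zip(w_out, x)]
            for j in range(2):
                w_hid[j] = [w + eta * deltas[j] * pi for w, pi in zip(w_hid[j], p)]
        mse = sum((d - forward(w_hid, w_out, p)[1]) ** 2 for p, d in SAMPLES) / len(SAMPLES)
        history.append(mse)
    return w_hid, w_out, history

if __name__ == "__main__":
    _, _, history = train()
    for epoch in (1, 10, 20, 40, 90, 140, 190):
        print(epoch, history[epoch - 1])
```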

| | $w_1^{(1,0)}$ | $w_2^{(1,0)}$ | $w^{(2,1)}$ |
|---|---|---|---|
| init | (-0.5, 0.5, -0.5) | (-0.5, -0.5, 0.5) | (-1, 1, 1) |
| p0 | -0.5415, 0.5415, -0.4585 | -0.5415, -0.45845, 0.5415 | -1.0442, 1.0108, 1.0108 |
| p1 | -0.5732, 0.5732, -0.4266 | -0.5732, -0.4268, 0.5732 | -1.0787, 1.0213, 1.0213 |
| p2 | -0.3858, 0.7607, -0.6142 | -0.4617, -0.3152, 0.4617 | -0.8867, 1.0616, 0.8952 |
| p3 | -0.4591, 0.6874, -0.6875 | -0.5228, -0.3763, 0.4005 | -0.9567, 1.0699, 0.9061 |

After epoch 1

| # epoch | $w_1^{(1,0)}$ | $w_2^{(1,0)}$ | $w^{(2,1)}$ |
|---|---|---|---|
| 13 | -1.4018, 1.4177, -1.6290 | -1.5219, -1.8368, 1.6367 | 0.6917, 1.1440, 1.1693 |
| 40 | -2.2827, 2.5563, -2.5987 | -2.3627, -2.6817, 2.6417 | 1.9870, 2.4841, 2.4580 |
| 90 | -2.6416, 2.9562, -2.9679 | -2.7002, -3.0275, 3.0159 | 2.7061, 3.1776, 3.1667 |
| 190 | -2.8594, 3.18739, -3.1921 | -2.9080, -3.2403, 3.2356 | 3.1995, 3.6531, 3.6468 |

Network Paralysis

• Increase the initial weights by a factor of 10

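| | initial | After 190 epochs |
|---|---|---|
| net_o with p1 | -10.1339 | -10.1255 |
| o | -0.9999206 | -0.9999199 |
| $w_1^{(2,1)}$ | 10 | 9.999948 |
| MSE | 1.999841 | 1.99984 |

$$g(10) = 0.999909; \qquad g'(10) = 0.00009$$

With net inputs of this magnitude the node function is saturated. Its derivative is close to zero, so the weight changes are negligible and the MSE barely moves in 190 epochs.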
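The saturation behind network paralysis can be checked directly. The sketch below scales the initial weights by 10 and runs one forward pass for P1. It assumes the bias input comes first in each vector, and the names `SCALE`, `dot`, and `g_prime` are illustrative rather than taken from the slides.

```python
import math

def g(x):
    """Bipolar node function: 2 / (1 + e^-x) - 1."""
    return 2.0 / (1.0 + math.exp(-x)) - 1.0

def g_prime(x):
    """Derivative of g: 0.5 (1 + g)(1 - g)."""
    return 0.5 * (1.0 + g(x)) * (1.0 - g(x))

def dot(w, v):
    """Inner product of two equal-length sequences."""
    return sum(wi * vi for wi, vi in zip(w, v))

SCALE = 10.0  # the initial weights are increased by this factor
w1 = [SCALE * w for w in (-0.5, 0.5, -0.5)]
w2 = [SCALE * w for w in (-0.5, -0.5, 0.5)]
w_out = [SCALE * w for w in (-1.0, 1.0, 1.0)]

p1 = (1.0, -1.0, 1.0)  # bias input, in_1 = -1, in_2 = 1; desired output d = 1

x = (1.0, g(dot(w1, p1)), g(dot(w2, p1)))
net_o = dot(w_out, x)
o = g(net_o)

if __name__ == "__main__":
    print(net_o, o)            # about -10.1339 and -0.9999206
    print(g(10), g_prime(10))  # about 0.999909 and 0.00009
    print(g_prime(net_o))      # tiny: every update is scaled by a factor like this
```

The output is pinned near -1 although the target for P1 is +1, and the near-zero derivative leaves almost no gradient to correct it.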