Estimating Mixtures of Normal Distributions and Switching Regressions: Comment


Author(s): Peter Bryant
Source: Journal of the American Statistical Association, Vol. 73, No. 364 (Dec., 1978), pp. 748-749
Published by: American Statistical Association
Stable URL: http://www.jstor.org/stable/2286272


748 Journal of the American Statistical Association, December 1978

(1975) used the method to estimate parameters of stable distributions.

The selection of the $\theta_j$ is crucial to the performance of the MGF method. Fortunately, the authors give some guidelines for the choice of the $\theta_j$ that appear to work well for the simulation cases that were tried. However, it seems to me that the choice of the $\theta_j$ should depend on the scale of the available $x_i$'s. If the $x_i$'s are in the range $.001 < x_i \le .01$, a different choice of the $\theta_j$ than that offered by the authors may be needed; perhaps they could give some recommendations that would relate the choice of the $\theta_j$ to the scale of the $x_i$'s.

Throughout the discussion there is no mention of choices of initial values of the parameters $\lambda$, $\mu_1$, $\sigma_1^2$, $\mu_2$, $\sigma_2^2$. The authors' implementation of the MGF method uses the iterative Davidon-Fletcher-Powell algorithm for function minimization. Certainly initial values are required for this algorithm. Initial values would be unimportant only if the function $S_n(\theta)$ of formula (2.4) does not have local minima. (Such minima would be locations in the $S_n(\theta)$ surface where the gradient is zero.) Is this true? The authors do not say. If there are local minima, then initial values may be important in the minimization of $S_n(\theta)$. If care must be taken in the choice of both the $\theta_j$ and the initial values, then the MGF method loses some of its attractiveness. It has been my experience (Fowlkes 1977) that the choice of initial values can be crucial for least squares and MLE methods, since local minima can be encountered in difficult cases.

There are several other comments that are appropriate. The authors mention the failures of the MGF method in Monte Carlo simulations because of the algorithm straying into prohibited regions in the parameter space ($\lambda < 0$ or $\lambda > 1$ or $\sigma_1^2 < 0.0$ or $\sigma_2^2 < 0.0$). The problem can be alleviated by a reparametrization of the original problem. For example, for $\lambda$, one might consider using $\lambda = \sin^2\theta$. Then, differentiation would be carried out with respect to $\theta$. We are then assured that $0 \le \lambda \le 1$.
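The reparametrization suggested here can be sketched in a few lines of Python. The mapping for the mixing proportion follows the text; the exponential mapping for the two variances is an assumption added for illustration and is not proposed in the comment. The function names are hypothetical.

```python
import math

def to_constrained(theta, eta1, eta2):
    """Map three unconstrained reals to (lam, var1, var2)."""
    lam = math.sin(theta) ** 2   # from the text: always lies in [0, 1]
    var1 = math.exp(eta1)        # assumed transform (not in the text): always > 0
    var2 = math.exp(eta2)        # assumed transform (not in the text): always > 0
    return lam, var1, var2

def dlam_dtheta(theta):
    """Chain-rule factor d(sin^2 theta)/d theta = 2 sin(theta) cos(theta)."""
    return math.sin(2.0 * theta)

# Any real inputs give admissible parameters, so an unconstrained optimizer
# working on (theta, eta1, eta2) cannot stray into the prohibited regions.
params = [to_constrained(t, e1, e2)
          for t in (-7.0, 0.0, 0.3, 2.5, 40.0)
          for e1 in (-5.0, 0.0, 3.0)
          for e2 in (-1.0, 2.0)]
```

A gradient with respect to the mixing proportion is converted to a gradient with respect to the new variable by multiplying by `dlam_dtheta(theta)`.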

The authors calculated the Wilk-Shapiro statistic for the coefficient estimates via the MGF and moment methods in their Monte Carlo simulation experiment. It might be very instructive to construct normal quantile-quantile plots for some of the cases.

Cases 5 and 6 are difficult ones for the MGF and I am sure that they would prove difficult for any estimation method. It might be profitable to study these cases further. Are there local minima for these cases? Will care in the choice of initial values decrease the failure rate?

REFERENCES

Fowlkes, E.B. (1977), "Some Methods for Studying the Mixture of Two Normal (Lognormal) Distributions," unpublished Bell Telephone Laboratories Technical Memorandum.

Paulson, A.S., Holcomb, E.W., and Leitch, R.A. (1975), "The Estimation of the Parameters of the Stable Laws," Biometrika, 62, 163-170.

Comment PETER BRYANT*

I am happy to see Quandt and Ramsey tackle some of the problems associated with mixture models, particularly in the regression case. Their idea of using the moment generating function is intriguing, and successfully gets around the problem of the singularity in the likelihood function when unequal variances are allowed in the components of the mixture.

I shall comment only on the case of simple univariate data, and not on regression. The numerical complexities of Quandt and Ramsey's method could cause trouble in particular cases, but this is probably not a general problem. The main benefit of their approach is that it allows unequal variances in the components of the mixture. Here, I think they are remiss in not citing the work of Wolfe (1965, 1970, 1971), which duplicates much of Day (1969) and provides some extensions to his work.

* Peter Bryant is with the IBM Corporation, P.O. Box 1900, Boulder, CO 80302.

Wolfe makes the point that the singularity of the likelihood function when unequal variances are allowed is not generally a severe problem in practice. The singularity occurs, essentially, when $N\lambda \le 1$. Intuitively, this is similar to having only one data point in a group. The estimate of the variance will be zero, and the likelihood function will be infinite.
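A small numerical illustration of this singularity may help. The sketch below uses made-up data and hypothetical function names: it centers the first component on a single observation, sets its weight to $1/N$, and shrinks its standard deviation. The log-likelihood then grows without bound.

```python
import math

def normal_pdf(x, mu, sigma):
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def mixture_loglik(xs, lam, mu1, sigma1, mu2, sigma2):
    """Log-likelihood of a two-component normal mixture."""
    return sum(
        math.log(lam * normal_pdf(x, mu1, sigma1)
                 + (1.0 - lam) * normal_pdf(x, mu2, sigma2))
        for x in xs
    )

# Illustrative data only; not taken from the paper.
xs = [-1.3, -0.4, 0.1, 0.7, 1.9]
N = len(xs)

# Component 1 sits exactly on xs[0] with weight 1/N; its spread shrinks.
sigmas = (1.0, 1e-2, 1e-4, 1e-6)
logliks = [mixture_loglik(xs, 1.0 / N, xs[0], s, 0.2, 1.0) for s in sigmas]

for s, ll in zip(sigmas, logliks):
    print(f"sigma1 = {s:g}  log-likelihood = {ll:.3f}")
```

Each reduction in the first component's standard deviation raises the log-likelihood, which is the "one data point in a group" behavior described above.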

By the simple device of restricting $N\lambda$ to be greater than 1, satisfactory answers are usually obtained. Alternatively, if on any iteration $N\lambda \le 1$, we may revert to assuming a common variance, so that the likelihood function will not be singular. Component probabilities less than $1/N$ are not estimable, but this is seldom a problem. We can hardly hope to get good estimates of the parameters in such cases, and techniques designed specifically to detect outliers would probably be more useful, anyway. For example, I have had some success with the following procedure: Obtain initial estimates by


classifying the observations into two groups so as to minimize the logarithm of the "classification" likelihood,

n log s12 + (N - n) log S22,

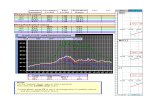

where n is the number of observations in group 1, and s,2 is the within-groups variance for group i. Using these estimates, iterate using Wolfe's (1970) equations and the restrictions mentioned above, and obtain refined esti- mates for the mixture model. I applied this to Sample 1 from Quandt and Ramsey's Table 7, and obtained the answers given below (Quandt and Ramsey's method failed on this data set):

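| | $\lambda$ | $\mu_1$ | $\sigma_1^2$ | $\mu_2$ | $\sigma_2^2$ |
|---|---|---|---|---|---|
| "True" | .50 | -3.00 | 1.00 | 3.00 | 4.00 |
| Wolfe method | .19 | -3.06 | 1.09 | 3.94 | 3.88 |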

Clearly, this method makes some errors. I suspect it has its strengths and weaknesses, like Quandt and Ramsey's or any other. The squared errors for this method are noticeably less than the mean squared errors reported for case 4 by Quandt and Ramsey (except for $\lambda$), and I feel this method is a fair competitor of theirs.
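The squared errors referred to here follow directly from the two rows of the table above. The short computation below only reproduces that arithmetic; the dictionary keys are labels chosen for illustration, and Quandt and Ramsey's reported mean squared errors are not reproduced because they do not appear in this comment.

```python
# Values copied from the table of "True" and Wolfe-method estimates.
true_values = {"lam": 0.50, "mu1": -3.00, "var1": 1.00, "mu2": 3.00, "var2": 4.00}
wolfe_values = {"lam": 0.19, "mu1": -3.06, "var1": 1.09, "mu2": 3.94, "var2": 3.88}

# Squared error of each single-sample estimate.
squared_errors = {
    name: (wolfe_values[name] - true_values[name]) ** 2 for name in true_values
}

for name, err in squared_errors.items():
    print(f"{name}: {err:.4f}")
```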

I feel Quandt and Ramsey haven't shown their method to be superior to others in practice. That issue is still unresolved. I do welcome their ideas on the underlying theory and methods, and hope they will pursue the practical aspects further.
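The initial classification step of the procedure described in this comment can be sketched as follows. This is a minimal illustration under stated assumptions, not the author's program: it searches only splits of the sorted data into a lower and an upper group, uses the divisor-$n$ within-group variance, and requires at least two observations per group so that neither variance is zero. The function names and the sample data are hypothetical. The refinement by Wolfe's (1970) equations is not shown.

```python
import math

def within_var(values):
    """Within-group variance with divisor n (an assumption of this sketch)."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values) / len(values)

def classification_init(xs, min_size=2):
    """Choose the split of the sorted data that minimizes
    n*log(s1^2) + (N - n)*log(s2^2), and return starting estimates."""
    data = sorted(xs)
    N = len(data)
    best = None
    for n in range(min_size, N - min_size + 1):
        g1, g2 = data[:n], data[n:]
        s1, s2 = within_var(g1), within_var(g2)
        if s1 <= 0.0 or s2 <= 0.0:
            continue  # skip degenerate groups (all values identical)
        crit = n * math.log(s1) + (N - n) * math.log(s2)
        if best is None or crit < best[0]:
            best = (crit, n, g1, g2, s1, s2)
    if best is None:
        raise ValueError("no admissible split found")
    crit, n, g1, g2, s1, s2 = best
    return {
        "criterion": crit,
        "n": n,
        "lam": n / N,             # initial mixing proportion
        "mu1": sum(g1) / n,
        "var1": s1,
        "mu2": sum(g2) / (N - n),
        "var2": s2,
    }

# Made-up data for illustration only.
sample = [-3.2, -3.0, -2.8, -3.1, 2.0, 4.0, 3.0, 5.0, 1.0, 3.5]
start = classification_init(sample)
print(start)
```

Requiring at least two observations per group plays a role similar to the restriction $N\lambda > 1$: it keeps either group variance from collapsing to zero.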

REFERENCES

Day, N.E. (1969), "Estimating the Components of a Mixture of Normal Distributions," Biometrika, 56, 463-474.

Wolfe, John H. (1965), "A Computer Program for the Analysis of Types," U.S. Naval Personnel and Training Research Laboratory, San Diego, Technical Bulletin 65-15 (Defense Documentation Center AD 620 026).

Wolfe, John H. (1970), "Pattern Clustering by Multivariate Mixture Analysis," Multivariate Behavioral Research, 5, 329-350.

Wolfe, John H. (1971), "A Monte Carlo Study of the Sampling Distribution of the Likelihood Ratio for Mixtures of Multinormal Distributions," Technical Bulletin STB 72-2, U.S. Naval Personnel and Training Research Laboratory.

Comment B. R. CLARKE and C. R. HEATHCOTE*

The Monte Carlo results presented by Quandt and Ramsey highlight the fact that the method of moments performs poorly compared to their method of minimizing a distance function based on the moment generating function (MGF), at least in the case of a mixture of normal distributions. However, other minimum distance methods may have advantages. Choi and Bulgren (1968) minimize a distance statistic defined by the empirical distribution function; Beran (1977) uses the Hellinger norm; and Paulson, Holcomb, and Leitch (1975) and Heathcote (1977) use a distance defined in terms of the empirical characteristic function. A comparison between the latter and the Quandt-Ramsey approach may be useful.

Quandt and Ramsey in their expression (2.4) estimate $\gamma$ by minimizing the special case of

$$S_n(\gamma) = \int [y_n(\theta) - G(\theta, \gamma)]^2 \, dK(\theta)$$

when the weight function $K$ assigns equal mass to the finite set $\{\theta_1, \ldots, \theta_k\}$. Here $y_n(\theta) = n^{-1} \sum_{j=1}^{n} \exp\{\theta X_j\}$ and $G(\theta, \gamma)$ is the moment generating function of $X$. Similarly, the Integrated Squared Error distance of

* B.R. Clarke and C.R. Heathcote are faculty members of the Statistics Department, The Australian National University, Box 4, Canberra, A.C.T. 2600, Australia.

Paulson, Holcomb, and Leitch and Heathcote is

$$I_n(\gamma) = \int |\phi_n(\theta) - \phi(\theta, \gamma)|^2 \, dK(\theta)$$

where $\phi_n(\theta) = n^{-1} \sum_{j=1}^{n} \exp\{i\theta X_j\}$ is the empirical characteristic function with expectation $\phi(\theta, \gamma)$. One point is apparent; namely, that because the moment generating function is unbounded in $\theta$, the class of weight functions appropriate to $S_n(\gamma)$ must be severely curtailed in order to ensure convergence. On the other hand, the characteristic function is uniformly bounded, thus placing less constraint on the choice of $K$. This point is worth noting since, as argued by Heathcote (1977), the weight function selected has a significant effect on the efficiency of the estimator. Furthermore, the characteristic function method is applicable in cases when a moment generating function fails to exist, as with certain heavy-tailed distributions.
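The contrast between the two distances can be made concrete with a short sketch for a two-component normal mixture and a weight function placing equal mass on a finite grid. The closed forms used for the mixture's moment generating function and characteristic function are the standard ones for normal components. Everything else is an assumption for illustration: the function names, the data, the grid, the parameter ordering `(lam, mu1, var1, mu2, var2)`, and the normalization of the weights to total mass 1 (which rescales the criterion but does not move its minimizer).

```python
import cmath
import math

def empirical_mgf(xs, t):
    return sum(math.exp(t * x) for x in xs) / len(xs)

def empirical_cf(xs, t):
    return sum(cmath.exp(1j * t * x) for x in xs) / len(xs)

def mixture_mgf(t, lam, mu1, var1, mu2, var2):
    return (lam * math.exp(mu1 * t + 0.5 * var1 * t * t)
            + (1.0 - lam) * math.exp(mu2 * t + 0.5 * var2 * t * t))

def mixture_cf(t, lam, mu1, var1, mu2, var2):
    return (lam * cmath.exp(1j * mu1 * t - 0.5 * var1 * t * t)
            + (1.0 - lam) * cmath.exp(1j * mu2 * t - 0.5 * var2 * t * t))

def mgf_distance(xs, gamma, thetas):
    """MGF-based squared distance with equal mass on each grid point."""
    return sum((empirical_mgf(xs, t) - mixture_mgf(t, *gamma)) ** 2
               for t in thetas) / len(thetas)

def cf_distance(xs, gamma, thetas):
    """Characteristic-function squared distance with equal mass on each grid point."""
    return sum(abs(empirical_cf(xs, t) - mixture_cf(t, *gamma)) ** 2
               for t in thetas) / len(thetas)

# Made-up data, parameters, and grid for illustration only.
xs = [-3.2, -2.9, 2.5, 3.4, 4.1]
gamma = (0.4, -3.0, 1.0, 3.0, 4.0)
grid = [-0.2, -0.1, 0.1, 0.2, 0.3]

print("MGF distance:", mgf_distance(xs, gamma, grid))
print("CF distance: ", cf_distance(xs, gamma, grid))
# The empirical MGF explodes away from zero; the empirical CF never exceeds 1 in modulus.
print("empirical MGF at 5:", empirical_mgf(xs, 5.0))
print("|empirical CF| at 50:", abs(empirical_cf(xs, 50.0)))
```

Because both characteristic functions have modulus at most 1, the integrand of the second distance is bounded by 4 for any grid, whereas the MGF-based integrand grows rapidly as the grid moves away from zero.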

Perhaps of more importance, the selection of a weight function assigning positive mass to only a finite number of points seems to lead to an estimator with efficiency close to zero for certain subsets of the parameter space. This is a feature common to procedures based on $S_n(\gamma)$ or $I_n(\gamma)$. However, the uniform boundedness of the characteristic function appears to lead to better efficiencies than is the case with the MGF. (The uniform boundedness has the added advantage of avoiding over-
