ELEC 599 Project Report

Multivariate Dependence Measures with Applications to

Neural Ensembles

Ilan N. Goodman

Advisor: Dr. Don H. Johnson

Abstract

In this paper I develop two new multivariate dependence measures to aid in the analysis of

neural population coding. The first, which is based on the Kullback-Leibler distance, results in a

single value that indicates the general level of dependence among several random variables. The

second, which is based on an orthonormal series expansion of the joint density function, provides

more detail about the nature of the dependence. These dependence measures are applied to the

analysis of simultaneous recordings made from multiple neurons, in which dependencies are

time-varying and potentially information bearing.

1 Introduction

Traditional models of neural information processing assume that individual neurons process information independently. However, recent experimental evidence has revealed that in many cases populations exhibit statistical dependencies that may contribute to the way a stimulus is encoded [1]. Investigators have used statistical methods and concepts in information theory to characterize synergy in neural populations, the degree to which the population of neurons represents information better than the sum of the individual neurons' contributions. However, these methods fail to capture the subtleties of the interactions between members of the population; synergy merely tells us if the neurons are working together, rather than how. The key to understanding population coding is not only the ability to detect dependencies in neural ensembles, but also the ability to describe the details of the dependencies.

Quantifying the statistical dependencies among jointly distributed random variables has never been simple. The most commonly used dependence measure, the correlation coefficient, is a weak measure of dependence for non-Gaussian random variables, and is restricted to pairs of variables. Other concepts in bivariate dependence apply only to families of distributions possessing specific properties, and are not easily extended to ensembles of more than two variables [2]. In this paper, I develop two new dependence measures that generalize easily to large ensembles. The first is based on the information-theoretic Kullback-Leibler distance, and quantifies overall ensemble dependence. The second is based on an orthonormal series expansion of the joint probability density function, and provides greater detail about dependencies between subsets of the component random variables in the ensemble. Section 2 presents the two dependence measures and the justifying theory, as well as an example illustrating their use on artificially generated data. Section 3 describes how these dependence measures are applied to the analysis of neural population recordings. Section 4 provides several examples of the analysis of real data recorded from the crayfish optic lobe.

2 Multivariate Dependence Measures

The random variables $X_1, X_2, \ldots, X_N$ with joint probability distribution $p_{X_1,X_2,\ldots,X_N}(x_1, x_2, \ldots, x_N)$ and marginal distributions $p_{X_n}(x_n)$, $n = 1, 2, \ldots, N$, are said to be independent if $p_{X_1,X_2,\ldots,X_N}(x_1, x_2, \ldots, x_N) = \prod_{n=1}^{N} p_{X_n}(x_n)$ [3]. The two dependence measures described in this section quantify dependence in terms of the degree to which the joint distribution differs from the independent distribution. Thus if the joint distribution and the independent distribution agree, the random variables are statistically independent and the dependence measures are zero. Increasingly non-zero dependence measures indicate increasing statistical dependence.

2.1 Distance from Independent

The Kullback-Leibler (KL) distance provides a convenient way of quantifying dependence between multiple random variables. The KL distance between two probability functions $p(x)$ and $q(x)$ is defined to be [5]:

$$D(p\|q) = \int p(x) \log \frac{p(x)}{q(x)}\,dx \tag{1}$$

The KL distance has the property that $D(p\|q) \geq 0$, with equality only if $p$ and $q$ are equal everywhere.

Let $p_{\mathbf{X}}(\mathbf{x})$, $\mathbf{x} \in \mathcal{X}$, be the distribution function of the jointly defined random variable $\mathbf{X} = \{X_1, X_2, \ldots, X_N\}$ with marginal distributions $p_{X_n}(x_n)$, $n = 1, 2, \ldots, N$. I define the dependence measure $\nu$ to be the KL distance between the joint distribution function and the independent distribution, the product of the marginals [6]:

$$\nu = \sum_{\mathbf{x}} p_{\mathbf{X}}(\mathbf{x}) \log \frac{p_{\mathbf{X}}(\mathbf{x})}{\prod_{n=1}^{N} p_{X_n}(x_n)} \tag{2}$$

Due to the properties of the KL distance, this dependence measure is zero if and only if the variables are statistically independent, and increases as they become increasingly dependent. This measure is the mutual information generalized to the multivariate case.
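As an illustration of equation 2, the following minimal sketch computes the measure for a discrete joint distribution stored as a dictionary. The function names, the dictionary representation, and the choice of base-2 logarithms (bits) are assumptions made for this example; the report does not specify a logarithm base.

```python
import math
from itertools import product


def marginals(joint, n_vars):
    """Return one dict per variable mapping value -> marginal probability."""
    margs = [dict() for _ in range(n_vars)]
    for x, p in joint.items():
        for n, xn in enumerate(x):
            margs[n][xn] = margs[n].get(xn, 0.0) + p
    return margs


def dependence_nu(joint, n_vars):
    """KL distance (assumed here in bits) between the joint pmf and the
    product of its marginals, following equation 2."""
    margs = marginals(joint, n_vars)
    nu = 0.0
    for x, p in joint.items():
        if p > 0.0:  # outcomes with zero probability contribute nothing
            indep = math.prod(margs[n][xn] for n, xn in enumerate(x))
            nu += p * math.log2(p / indep)
    return nu


# Hypothetical test distributions (not taken from the report).
# Three independent fair bits: the measure should be zero.
independent = {x: 1 / 8 for x in product((0, 1), repeat=3)}
# X2 is a copy of X1, X3 is an independent fair bit.
coupled = {(a, a, c): 0.25 for a in (0, 1) for c in (0, 1)}

print(dependence_nu(independent, 3))
print(dependence_nu(coupled, 3))
```

In the second example the only dependence is the one bit shared by the first two variables, so the measure evaluates to one bit.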

In the case of discrete random variables, an upper bound for $\nu$ is found in terms of the entropies of the component variables:

$$\nu \leq \sum_{n=1}^{N} H(X_n) - \max_n H(X_n) \equiv \nu_{\max} \tag{3}$$

This bound does not hold for continuous random variables because differential entropy can be negative. Nevertheless, to analyze discrete data it is convenient to use the normalized dependence measure $\bar{\nu} = \nu/\nu_{\max}$. Figure 1(a) shows an example of this dependence measure applied to the ensemble analysis of three discrete random variables. The data for this example were generated by sampling from a Bernoulli distribution. Time-varying dependencies were introduced to mimic the behaviour of a neural population. At each time step, $\nu$ was estimated from 500 samples of the ensemble response. For details on estimating $\nu$ and forming confidence intervals, see section 3. Initially the three variables are independent; however, starting from bin 25 the ensemble possesses a weak dependence, with $\bar{\nu} \approx 0.1$.
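The bound in equation 3 can be checked numerically. The sketch below relies on the standard identity that the measure equals the sum of the marginal entropies minus the joint entropy; since the joint entropy is at least as large as the largest marginal entropy, the bound follows. Function names and the example distributions are hypothetical, and entropies are assumed to be in bits.

```python
import math


def entropy(pmf):
    """Shannon entropy in bits of a pmf stored as a dict."""
    return -sum(p * math.log2(p) for p in pmf.values() if p > 0.0)


def normalized_nu(joint, n_vars):
    """Return (nu, nu_max, nu / nu_max) for a discrete joint pmf."""
    margs = [dict() for _ in range(n_vars)]
    for x, p in joint.items():
        for n, xn in enumerate(x):
            margs[n][xn] = margs[n].get(xn, 0.0) + p
    h = [entropy(m) for m in margs]
    nu = sum(h) - entropy(joint)      # sum of marginal entropies minus joint entropy
    nu_max = sum(h) - max(h)          # upper bound of equation 3
    ratio = nu / nu_max if nu_max > 0.0 else 0.0
    return nu, nu_max, ratio


# Three identical copies of one fair bit: dependence is maximal.
copies = {(0, 0, 0): 0.5, (1, 1, 1): 0.5}
# X2 copies X1 while X3 is an independent fair bit.
coupled = {(a, a, c): 0.25 for a in (0, 1) for c in (0, 1)}

print(normalized_nu(copies, 3))
print(normalized_nu(coupled, 3))
```

For the fully redundant ensemble the normalized value is one, and for the partially coupled ensemble it is one half, which is the sense in which the normalization places the measure on a common scale.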

[Figure 1. Panel (a): normalized dependence and PSTs of x1, x2 and x3 (rate in spikes/bin) versus bin number, bins 0 to 90. Panel (b): excess probability η(x1, x2, x3) for the symbols (1,0,0), (0,1,0), (1,1,0), (0,0,1), (1,0,1), (0,1,1) and (1,1,1) versus bin number; y-scale = 1.5678.]

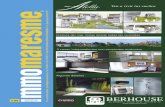

Figure 1: Dependence analysis of three Bernoulli distributed random variables. The dependencies are time-varying to simulate a neural response. The top plot in panel (a) shows the dependence measure $\nu$ for the data, computed from the type estimates of the joint and marginal distributions. The shaded region highlights the 90% confidence interval for the measure, calculated using the bootstrap method [4]. The bottom plots show the time-varying average of each variable. Panel (b) shows the excess probability $\eta(x_1, x_2, x_3)$ for each possible outcome of the ensemble, where the labels $(x_1, x_2, x_3)$ on the vertical axis indicate the joint occurrence of $x_1$ in the first random variable, $x_2$ in the second, and $x_3$ in the third. Since $\sum_{\mathbf{x}} \eta(\mathbf{x}) = 0$, the symbol $(0, 0, 0)$ has been omitted, and can be inferred from the remaining symbols. 90% confidence intervals were again computed using the bootstrap.

2.2 Excess Probability

Bivariate probability distributions can be expanded in terms of their marginal distributions [7],[8],[9]. I have extended the expansion for multiple variables. Once again, let $p_{\mathbf{X}}(\mathbf{x})$, $\mathbf{x} \in \mathcal{X}$, be the distribution of the jointly defined random variable $\mathbf{X} = \{X_1, X_2, \ldots, X_N\}$ with marginal distributions $p_{X_n}(x_n)$, $n = 1, 2, \ldots, N$. Then,

$$p_{\mathbf{X}}(\mathbf{x}) = \prod_{n=1}^{N} p_{X_n}(x_n) \cdot \left[1 + \sum_{i_1,i_2,\ldots,i_N} a_{i_1 i_2 \cdots i_N}\, \psi_{i_1}(x_1)\psi_{i_2}(x_2)\cdots\psi_{i_N}(x_N)\right] \tag{4}$$

The set of functions $\{\psi_{i_n}(x_n),\ i_n = 0, 1, \ldots\}$ form an orthonormal basis with respect to a weighting function equal to the marginal probability function $p_{X_n}(x_n)$, and obey the following conditions:

$$\begin{aligned}
\int \psi_{i_n}^2(x_n)\, p_{X_n}(x_n)\,dx_n &= 1, \quad i_n = 1, 2, \ldots \\
\int \psi_{i_n}(x_n)\psi_{j_n}(x_n)\, p_{X_n}(x_n)\,dx_n &= 0, \quad \forall\, i_n \neq j_n
\end{aligned} \tag{5}$$

In addition, the functions are chosen such that $\psi_0(x_n) = 1$. The coefficients $a_{i_1 i_2 \cdots i_N}$ are given by:

$$a_{i_1 i_2 \cdots i_N} = \int \psi_{i_1}(x_1)\psi_{i_2}(x_2)\cdots\psi_{i_N}(x_N)\, p_{\mathbf{X}}(\mathbf{x})\,d\mathbf{x} \tag{6}$$

Thus the coefficients express a measure of correlation between the orthonormal sets with respect to the joint distribution. I have shown that the coefficients having completely distinct indices $i_1 \neq i_2 \neq \cdots \neq i_N$ can be made to equal zero; see appendix A for proof. Consequently, the sum ranges over the complement of the set wherein the indices are all distinct (i.e., the indices are at least pairwise equal).

The coefficients are related to Pearson's $\phi^2$ measure generalized for a multivariate distribution [10]:

$$\sum_{i_1,i_2,\ldots,i_N} a_{i_1 i_2 \cdots i_N}^2 = \int \frac{p_{\mathbf{X}}^2(\mathbf{x})}{\prod_{n=1}^{N} p_{X_n}(x_n)}\,d\mathbf{x} - 1 \equiv \phi^2 \tag{7}$$

In addition, simple bounding arguments show that $\nu \leq \phi^2$. It is important to note that the expansion only converges if $\phi^2 < \infty$ [11]. $\phi^2$ is provably bounded only for certain classes of continuous distributions, including the bivariate Gaussian distribution with correlation coefficient $|\rho| < 1$ [8]. However, $\phi^2$ is bounded for all discrete distributions with finite support, to which class belong all the practical examples under consideration.
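For a discrete distribution the integral in equation 7 becomes a finite sum, so both quantities are easy to compare numerically. The sketch below is only an illustration under stated assumptions: the function names and the two-variable example are hypothetical, and the KL-based measure is computed with natural logarithms, since the inequality between the two measures is stated here for that base.

```python
import math


def marginals(joint, n_vars):
    """Return one dict per variable mapping value -> marginal probability."""
    margs = [dict() for _ in range(n_vars)]
    for x, p in joint.items():
        for n, xn in enumerate(x):
            margs[n][xn] = margs[n].get(xn, 0.0) + p
    return margs


def phi_squared(joint, n_vars):
    """Discrete form of equation 7: sum of p(x)^2 / prod_n p_n(x_n), minus 1."""
    margs = marginals(joint, n_vars)
    total = 0.0
    for x, p in joint.items():
        if p > 0.0:
            total += p * p / math.prod(margs[n][xn] for n, xn in enumerate(x))
    return total - 1.0


def nu_nats(joint, n_vars):
    """KL-based dependence measure using natural logarithms (an assumption)."""
    margs = marginals(joint, n_vars)
    return sum(
        p * math.log(p / math.prod(margs[n][xn] for n, xn in enumerate(x)))
        for x, p in joint.items() if p > 0.0
    )


# Hypothetical weakly dependent pair of bits.
weak = {(0, 0): 0.3, (0, 1): 0.2, (1, 0): 0.2, (1, 1): 0.3}

print(nu_nats(weak, 2), phi_squared(weak, 2))
```

For this example the chi-square style measure evaluates to 0.04 and the KL-based measure is roughly half of that, consistent with the stated inequality.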

While computing the terms in the expansion can be quite difficult, rearranging equation 4 to isolate the dependence terms provides us with a simple and informative statistical dependence measure. The excess probability $\eta(\mathbf{x})$ is defined to be the difference between the joint probability distribution and the product of the marginals:

$$\begin{aligned}
\eta(\mathbf{x}) &= p_{\mathbf{X}}(\mathbf{x}) - \prod_{n=1}^{N} p_{X_n}(x_n) \\
&= \prod_{n=1}^{N} p_{X_n}(x_n) \cdot \sum_{i_1,i_2,\ldots,i_N} a_{i_1 i_2 \cdots i_N}\, \psi_{i_1}(x_1)\psi_{i_2}(x_2)\cdots\psi_{i_N}(x_N)
\end{aligned} \tag{8}$$

For any value $\mathbf{x}_0 \in \mathcal{X}$, the excess probability measure has the following interpretation:

1. If $\eta(\mathbf{x}_0) > 0$, then $\mathbf{x}_0$ occurs more frequently in the ensemble than it would if the component variables were independent; $\mathbf{x}_0$ therefore defines a value of the random vector for which the component variables are positively dependent.

2. If $\eta(\mathbf{x}_0) < 0$, then $\mathbf{x}_0$ occurs less frequently in the ensemble than it would if the component variables were independent; $\mathbf{x}_0$ therefore defines a value of the random vector for which the component variables are negatively dependent.

3. If $\eta(\mathbf{x}_0) = 0$, then $\mathbf{x}_0$ defines a value of the random vector for which the components are statistically independent.

The excess probability measure thus provides a much more subtle view of the statistical dependencies within an ensemble than does $\nu$. While $\nu$ provides a summary measure of the amount of dependence in the ensemble, $\eta$ can also quantify dependence between subsets of the constituent random variables. Figure 1(b) shows the excess probability $\eta(x_1, x_2, x_3)$ computed for the example described in the previous section. Beginning at bin 25 there is positive dependence for the symbol $\mathbf{x}_0 = (1, 1, 0)$ and negative dependence for the symbols $(1, 0, 0)$ and $(0, 1, 0)$; this indicates a conditional positive dependence between $X_1$ and $X_2$ when $X_3 = 0$. Similarly, there is a weaker conditional positive dependence between $X_1$ and $X_2$ when $X_3 = 1$.
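The sign pattern described for the symbols (1, 1, 0), (1, 0, 0) and (0, 1, 0) can be reproduced with a small made-up distribution. The sketch below evaluates the first line of equation 8 for every symbol; the function name and the example probabilities are hypothetical and are not the data behind Figure 1.

```python
import math
from itertools import product


def excess_probability(joint, n_vars):
    """Joint probability minus the product of marginals, for every symbol."""
    margs = [dict() for _ in range(n_vars)]
    for x, p in joint.items():
        for n, xn in enumerate(x):
            margs[n][xn] = margs[n].get(xn, 0.0) + p
    alphabets = [sorted(m) for m in margs]
    eta = {}
    for x in product(*alphabets):  # include symbols the joint never produces
        indep = math.prod(margs[n][xn] for n, xn in enumerate(x))
        eta[x] = joint.get(x, 0.0) - indep
    return eta


# Hypothetical ensemble: X1 and X2 tend to agree, X3 is an independent fair bit.
pair = {(0, 0): 0.3, (0, 1): 0.2, (1, 0): 0.2, (1, 1): 0.3}
joint = {(a, b, c): p * 0.5 for (a, b), p in pair.items() for c in (0, 1)}

eta = excess_probability(joint, 3)
for symbol in sorted(eta):
    print(symbol, round(eta[symbol], 4))
```

The values sum to zero over all symbols, which is why one symbol can be dropped from a plot without losing information.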

3 Neural Ensemble Analysis

3.1 Estimating Probability Distributions of Neural Data

Neural discharges consist of a sequence of identical spikes, which are modeled as a stochastic point process [12]. In order to analyze a neural response, the discharges are first converted into a symbolic form that is convenient for computation. For a single neuron, time is divided into discrete bins of length $\Delta$. The response is then represented by a sequence of numbers, each of which is the number of spikes occurring in a bin. Estimating the probability distribution for the response can then be done using the method of types [13]. Let $\{X_n^{(i)}\}_{n=1}^{N}$ be the stochastic sequence representing $N$ independent samples of the neuron discharge in a given bin $\Delta_i$. $X_n^{(i)}$ takes values in $\mathcal{X} = \{0, 1, \ldots, D-1\}$, where $D$ is the alphabet size, equal to one plus the maximum number of spikes occurring across all samples and all bins in the response. The type $\hat{P}_X(x)$ is equal to the proportion of occurrences of the symbol $x$ in the sequence [5]:

$$\hat{P}_X(x) = \frac{1}{N} \sum_{n=1}^{N} I(X_n = x) \tag{9}$$

Here $I(\cdot)$ is an indicator function that equals one when its argument is true and zero when it is false. The type is the maximum likelihood estimate for the true distribution $P_X(x)$.
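Equation 9 amounts to counting symbols and dividing by the number of samples. A minimal sketch follows, assuming the spike counts for one bin are available as a Python list; the function name and the sample values are hypothetical, and the alphabet size is taken from this single bin only for brevity.

```python
from collections import Counter


def type_estimate(samples, alphabet_size):
    """Proportion of occurrences of each symbol 0..alphabet_size-1 (equation 9)."""
    n = len(samples)
    counts = Counter(samples)
    return [counts.get(x, 0) / n for x in range(alphabet_size)]


# Hypothetical spike counts observed in one bin over 8 stimulus repetitions.
spike_counts = [0, 1, 0, 2, 1, 0, 0, 1]
D = max(spike_counts) + 1  # one plus the largest count seen in this example

print(type_estimate(spike_counts, D))  # [0.5, 0.375, 0.125]
```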

Estimating the joint distribution is done in a similar way, where the symbols now form a $D$-ary code representing how many spikes occurred in a bin for each neuron in the ensemble. For example, consider a two-neuron population with a maximum of two spikes occurring in any bin. The ternary representation 21 would represent two spikes for the first neuron, and one spike for the second. Thus $N$ independent samples of the joint response of $M$ neurons in a given bin $\Delta_i$ are represented symbolically as the stochastic sequence $\{X_n^{(i)}\}_{n=1}^{N}$, where $X_n^{(i)}$ takes on values in $\mathcal{X} = \{0, 1, \ldots, D^M - 1\}$. The alphabet size $D$ is now equal to one plus the maximum number of spikes for all neurons. Using the new joint alphabet $\mathcal{X}$, the type for the joint response can be calculated using equation 9.
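The joint symbol described above can be formed by reading the per-neuron spike counts as the digits of a base-D number. The sketch below assumes, following the ternary example in the text, that the first neuron supplies the most significant digit; the function names are hypothetical.

```python
def encode(counts, D):
    """Map per-neuron spike counts to a single symbol in 0..D**M - 1."""
    symbol = 0
    for c in counts:
        if not 0 <= c < D:
            raise ValueError("spike count outside the alphabet")
        symbol = symbol * D + c  # first neuron ends up as the leading digit
    return symbol


def decode(symbol, D, M):
    """Recover the M per-neuron spike counts from a joint symbol."""
    digits = []
    for _ in range(M):
        symbol, d = divmod(symbol, D)
        digits.append(d)
    return tuple(reversed(digits))


# Two neurons, at most two spikes per bin, so D = 3.
print(encode((2, 1), 3))   # 7, i.e. ternary 21
print(decode(7, 3, 2))     # (2, 1)
```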

3.2 Dependence Measure Estimation

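The dependence measures $\nu$ and $\eta$ are estimated using the method of types described in the previous section. Given $N$ independent observations of the simultaneous response of $M$ neurons, the estimated dependence measures $\hat{\nu}$ and $\hat{\eta}$ are given by:

$$\hat{\nu} = \sum_{\mathbf{x}} \hat{p}_{\mathbf{X}}(\mathbf{x}) \log \frac{\hat{p}_{\mathbf{X}}(\mathbf{x})}{\prod_{m=1}^{M} \hat{p}_{X_m}(x_m)} \tag{10}$$

$$\hat{\eta}(\mathbf{x}) = \hat{p}_{\mathbf{X}}(\mathbf{x}) - \prod_{m=1}^{M} \hat{p}_{X_m}(x_m) \tag{11}$$

where $\hat{p}_{\mathbf{X}}(\mathbf{x})$ and $\hat{p}_{X_m}(x_m)$, $m = 1, 2, \ldots, M$, are the types for the joint response and the component responses respectively. As the number of observations $N$ approaches $\infty$, $\hat{\nu}$ approaches $\nu$ and $\hat{\eta}$ approaches $\eta$ almost surely [10].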
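A minimal sketch of the plug-in estimates in equations 10 and 11 is given below. It assumes each observation is a tuple of per-neuron spike counts for one bin and uses base-2 logarithms; the function name, the data layout and the sample values are assumptions for illustration only.

```python
import math
from collections import Counter
from itertools import product


def estimate_measures(samples):
    """Plug-in estimates of the KL-based measure (bits) and the excess probability.

    samples: list of M-tuples, one tuple of spike counts per stimulus repetition.
    """
    n = len(samples)
    m = len(samples[0])
    joint = {x: c / n for x, c in Counter(samples).items()}          # joint type
    margs = [{v: c / n for v, c in Counter(s[k] for s in samples).items()}
             for k in range(m)]                                      # component types

    def indep(x):
        return math.prod(margs[k][xk] for k, xk in enumerate(x))

    nu_hat = sum(p * math.log2(p / indep(x)) for x, p in joint.items())
    symbols = product(*(sorted(mk) for mk in margs))
    eta_hat = {x: joint.get(x, 0.0) - indep(x) for x in symbols}
    return nu_hat, eta_hat


# Hypothetical observations from two neurons over 8 repetitions.
observations = [(0, 0)] * 3 + [(1, 1)] * 3 + [(0, 1), (1, 0)]
nu_hat, eta_hat = estimate_measures(observations)
print(nu_hat)
print(eta_hat)
```

With so few observations the estimates are expected to be biased, which is the motivation for the resampling procedure discussed next.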

3.3 Bootstrap Debiasing and Confidence Intervals

One of the biggest obstacles to analyzing neural recordings is the limited amount of data that can be obtained; as a result, estimates for $\nu$ and $\eta$ may have significant bias. The bootstrap method [4] is a resampling technique that can be employed to estimate and remove this bias. Let $R = \{R_1, R_2, \ldots, R_M\}$ be the length-$M$ dataset from which a given measure $\theta(R)$ is estimated. A bootstrap sample $R^* = \{R_1^*, R_2^*, \ldots, R_M^*\}$ is a random sample of size $M$ drawn with replacement from the original dataset. Thus some elements of the original dataset may appear in the bootstrap sample more than once, and some not at all. Taking $B$ bootstrap samples, the bootstrap estimate of bias for the measure $\theta$ is the average bootstrap estimate minus the original estimate:

$$\text{bias} = \frac{1}{B} \sum_{b=1}^{B} \theta(R_b^*) - \theta(R) \tag{12}$$

where $\{\theta(R_b^*),\ b = 1, 2, \ldots, B\}$ are the estimates of the measure $\theta$ computed from each bootstrap sample. The debiased estimate of $\theta$ is then $2\theta(R) - \frac{1}{B}\sum_{b=1}^{B} \theta(R_b^*)$. Around 200 bootstrap samples are required to give a good estimate of bias in most cases [4].
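The bias correction of equation 12 can be sketched generically for any statistic computed from a list of observations. In the example below the plug-in variance stands in for the dependence measures purely for illustration; the function names, the fixed random seed and the data values are assumptions.

```python
import random


def bootstrap_debias(data, statistic, n_boot=200, seed=0):
    """Return (debiased estimate, bias estimate, bootstrap replications)."""
    rng = random.Random(seed)
    theta = statistic(data)
    # Each replication resamples the dataset with replacement at its original size.
    reps = [statistic(rng.choices(data, k=len(data))) for _ in range(n_boot)]
    bias = sum(reps) / n_boot - theta          # equation 12
    return theta - bias, bias, reps            # theta - bias = 2*theta - mean(reps)


def plug_in_variance(xs):
    """Variance with a 1/n normalization, used here only as a stand-in statistic."""
    mean = sum(xs) / len(xs)
    return sum((x - mean) ** 2 for x in xs) / len(xs)


# Hypothetical data values.
data = [2.1, 3.4, 1.9, 5.0, 4.2, 3.3, 2.8, 4.7, 3.9, 2.5]
debiased, bias, reps = bootstrap_debias(data, plug_in_variance)
print(plug_in_variance(data), bias, debiased)
```

To apply the same procedure to the dependence measures, the statistic would be a function that forms the types from the resampled observations and evaluates the estimate on them.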

The bootstrap method can also be used to compute confidence intervals for the measure estimates. Let $F(a) = \#\{\theta(R_b^*) < a\}/B$ be the cumulative distribution function of the bootstrap estimates $\theta(R_b^*)$. A simple estimate for the $100\alpha\%$ confidence interval is $[F^{-1}((1-\alpha)/2),\ F^{-1}((1+\alpha)/2)]$. This corresponds to taking the $(1-\alpha)/2$ and $(1+\alpha)/2$ percentiles of the bootstrap replications. For example, the 90% confidence interval would be $[F^{-1}(0.05),\ F^{-1}(0.95)]$. The confidence interval for the debiased estimate, written with its smaller endpoint first, is then $[2\theta(R) - F^{-1}((1+\alpha)/2),\ 2\theta(R) - F^{-1}((1-\alpha)/2)]$. While 200 bootstrap samples are sufficient for estimating bias, estimating confidence intervals requires on the order of 2000 samples [14].
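The percentile construction can be sketched as follows. The sample mean is used as a stand-in statistic, and the quantile convention (smallest replication whose rank fraction reaches the requested level) is one of several reasonable choices; the function names, seed and data are assumptions. The reflected interval about twice the original estimate is returned with its smaller endpoint first.

```python
import math
import random


def percentile(sorted_vals, q):
    """Smallest replication whose empirical rank fraction is at least q."""
    idx = min(len(sorted_vals) - 1, max(0, math.ceil(q * len(sorted_vals)) - 1))
    return sorted_vals[idx]


def bootstrap_ci(data, statistic, alpha=0.90, n_boot=2000, seed=0):
    """Return the percentile interval and the interval for the debiased estimate."""
    rng = random.Random(seed)
    theta = statistic(data)
    reps = sorted(statistic(rng.choices(data, k=len(data))) for _ in range(n_boot))
    lo = percentile(reps, (1 - alpha) / 2)
    hi = percentile(reps, (1 + alpha) / 2)
    reflected = (2 * theta - hi, 2 * theta - lo)  # smaller endpoint first
    return (lo, hi), reflected


def mean(xs):
    return sum(xs) / len(xs)


# Hypothetical data values.
data = [float(v) for v in range(1, 21)]
interval, reflected = bootstrap_ci(data, mean)
print(interval, reflected)
```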

4 Analysis of Recordings from the Crayfish Optic Lobe

In this section, the techniques outlined in the previous sections are applied to the analysis of real neural data. The data considered here come from extracellular recordings of two motoneurons in the crayfish optic lobe which control eyestalk rotation.¹ For a description of the experimental setup, see [15]. Data from two different sets of experiments are considered. For the first set of experiments, the stimulus consists of a stepwise displacement of a bar of light within the crayfish visual field. The timing for the stimulus presentation is illustrated in figure 2(a). This experiment is repeated for various displacement angles. In the second set of experiments, the stimulus is a sinusoidal grating, so that the intensity of the light stimulus presented to the crayfish visual field increases and decreases sinusoidally. This experiment is repeated for different spatial frequencies of the grating movement. The timing for the grating stimulus presentation is illustrated in figure 2(b).

¹ Experiments on the crayfish optic lobe were carried out by Dr. R.M. Glantz, Department of Biochemistry and Cell Biology, Rice University, on Feb. 8 and Feb. 14, 2001.

[Figure 2. Panel (a): bar displacement ∆θ versus time (s), axis ticks at 0, 5 and 10. Panel (b): grating displacement ∆θ versus time (s), axis ticks at 0, 2, 7, 9 and 14.]

Figure 2: Timing of stimuli presented to the crayfish visual field. Panel (a) illustrates timing of the stepwise displacement of a bar of light. After 5 seconds the bar is displaced through an angle ∆θ; 5 seconds later, the bar is returned to its original position. Panel (b) illustrates the timing of the sinusoidal grating motion. After 2 seconds the grating is moved at a constant spatial frequency through an angle ∆θ. After a 2 second pause, the grating is returned to its original position.

In the stepwise displacement experiments, the two neurons exhibit a weak positive dependence for most of the stimulus presentation. Some examples of the analysis are shown in figure 3. In both examples, the beginning of an interval in which the neurons are statistically independent coincides with the displacement of the bar at t = 5 seconds.

The movement of the sinusoidal grating elicits a more varied response than the stepwise displacement. Some examples of the response for the grating experiment are shown in figure 4. Over the course of the stimulus presentation, the neurons exhibit both positive and negative dependencies, which although small could contribute to encoding of the stimulus. A particularly striking example of this is figure 4(b), in which the movement of the grating after 2 seconds causes the neurons to become independent, similar to the behavior in the stepwise displacement examples. The neurons then become positively dependent during the 2 second pause. The return movement of the grating coincides with intervals of both positive and negative dependence.

[Figure 3. Panels (a) and (c): ν and the rate (sp/s) of each neuron versus time (s), 0 to 10 s. Panel (b): η(x1, x2) for the symbols (1,0), (2,0), (0,1), (1,1), (2,1), (0,2) and (1,2) versus time (s); y-scale = 0.11241. Panel (d): η(x1, x2) for the symbols (1,0), (2,0), (0,1), (1,1) and (2,1) versus time (s); y-scale = 0.13539.]

Figure 3: Motoneuron responses to stepwise displacements of a bar of light. Panels (a) and (c) show the dependence measure $\nu$ estimated at each bin in the response, along with the average response of each neuron for 30 repetitions of the stimulus. 90% confidence intervals for $\nu$ are marked in grey; for clarity, confidence intervals are only shown where the estimate is statistically significant, i.e. the confidence interval does not enclose zero. Panels (b) and (d) show the excess probability $\eta(x_1, x_2)$; again, confidence intervals are denoted in grey, and only significant values are shown. In addition, only symbols for which at least one bin contains a significant value are shown.

[Figure 4. Panels (a) and (c): ν and the rate (sp/s) of each neuron versus time (s), 0 to 12 s in (a) and 0 to 14 s in (c). Panel (b): η(x1, x2) for the symbols (1,0), (2,0), (3,0), (0,1), (1,1), (2,1) and (3,1) versus time (s); y-scale = 0.062482. Panel (d): η(x1, x2) for the symbols (1,0), (2,0), (0,1), (1,1), (2,1), (0,2) and (1,2) versus time (s); y-scale = 0.11901.]

Figure 4: Motoneuron responses to movement of a sinusoidal grating. Panels (a) and (c) show the dependence measure $\nu$ estimated at each bin in the response, along with the average response of each neuron for 30 repetitions of the stimulus. Panels (b) and (d) show the excess probability $\eta(x_1, x_2)$; only symbols for which at least one bin contains a significant value are shown. Confidence intervals are marked as in figure 3.

5 Conclusion

I have presented two new multivariate dependence measures that are useful for analyzing statistical dependencies between two or more variables. The measure $\nu$ succinctly expresses the amount of dependence in an ensemble, while the excess probability $\eta(\mathbf{x})$ provides the details of the dependencies. When used in concert, these two dependence measures can afford a detailed ensemble dependence analysis. I have shown how these measures are used in the analysis of neural ensembles. The examples illustrate just how subtle the dependencies in neural populations can be; thus understanding neural population coding requires more than just identifying synergy in the population: it requires exploring the population dependencies in detail.

A Non-zero terms in the expansion of a multivariate distribution

In [8] it was shown that the expansion of a bivariate distribution can be diagonalized; i.e., the coefficients with unequal indices in the expansion can be made to equal zero. Here I show that the coefficients with at least all pairwise equal combinations of indices must be non-zero in the expansion of a multivariate distribution function. Hence, while it is not possible to diagonalize the multivariate expansion, it may be possible to choose the basis functions such that all coefficients having completely distinct indices equal zero.

Consider a multivariate distribution $p(x_1, \ldots, x_n, \ldots, x_N)$. The expansion must not violate the marginalizing condition:

$$\int p(x_1, \ldots, x_n, \ldots, x_N)\,dx_n = p(x_1, \ldots, x_{n-1}, x_{n+1}, \ldots, x_N) \tag{13}$$

It is easy to show that the diagonal assumption violates this condition. Consider the three-variable distribution $p(x, y, z)$. Under the diagonal assumption,

$$p(x, y, z) = p(x)p(y)p(z)\left[1 + \sum_{i} a_i \psi_i(x)\psi_i(y)\psi_i(z)\right] \tag{14}$$

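Integrating with respect to $z$,

$$\begin{aligned}
\int p(x, y, z)\,dz &= p(x)p(y) \int p(z)\left[1 + \sum_{i} a_i \psi_i(x)\psi_i(y)\psi_i(z)\right]dz \\
&= p(x)p(y)\left[1 + \sum_{i} a_i \psi_i(x)\psi_i(y) \int \psi_i(z)p(z)\,dz\right] \\
&= p(x)p(y)
\end{aligned} \tag{15}$$

The last step holds because each $\psi_i$ with $i \geq 1$ is orthogonal to $\psi_0 = 1$, so that $\int \psi_i(z)p(z)\,dz = 0$. This result violates (13), since it forces $p(x, y) = p(x)p(y)$ even when $x$ and $y$ are dependent; thus the expansion cannot be diagonal in general.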

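Next, consider an expansion with at least pairwise equal indices. The indices $(i, j, k)$ in the expansion belong to the set $I = \{i \neq j \neq k\}^{c} = \{i = j \neq k\} \cup \{i = k \neq j\} \cup \{j = k \neq i\} \cup \{i = j = k\}$, the complement of the set of completely distinct indices.

$$\begin{aligned}
p(x, y, z) &= p(x)p(y)p(z)\left[1 + \sum_{I} a_{ijk}\psi_i(x)\psi_j(y)\psi_k(z)\right] \\
&= p(x)p(y)p(z)\Bigg[1 + \sum_{i=j\neq k} a_{ijk}\psi_i(x)\psi_j(y)\psi_k(z) + \sum_{i=k\neq j} a_{ijk}\psi_i(x)\psi_j(y)\psi_k(z) \\
&\qquad\qquad + \sum_{j=k\neq i} a_{ijk}\psi_i(x)\psi_j(y)\psi_k(z) + \sum_{i=j=k} a_{ijk}\psi_i(x)\psi_j(y)\psi_k(z)\Bigg]
\end{aligned} \tag{16}$$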

Rewriting the expansion so that the 1 is one of the terms in the sum and integrating with respect to $z$,

$$\begin{aligned}
\int p(x, y, z)\,dz &= p(x)p(y)\Bigg[\sum_{i=j\neq k} a_{ijk}\psi_i(x)\psi_j(y)\int \psi_k(z)p(z)\,dz + \sum_{i=k\neq j} a_{ijk}\psi_i(x)\psi_j(y)\int \psi_k(z)p(z)\,dz \\
&\qquad\qquad + \sum_{j=k\neq i} a_{ijk}\psi_i(x)\psi_j(y)\int \psi_k(z)p(z)\,dz + \sum_{i=j=k} a_{ijk}\psi_i(x)\psi_j(y)\int \psi_k(z)p(z)\,dz\Bigg] \\
&= p(x)p(y)\left[1 + \sum_{i=j\neq k,\ k=0} a_{ijk}\psi_i(x)\psi_j(y)\right] \\
&= p(x)p(y)\left[1 + \sum_{i} a_i \psi_i(x)\psi_i(y)\right] \\
&= p(x, y)
\end{aligned} \tag{17}$$

The pairwise equal expansion in three variables satisfies the marginalizing condition; moreover, this result extends to $N$ variables. I conclude therefore that it may be possible to choose a basis set for the expansion such that the coefficients with completely distinct indices are zero.

Acknowledgement

I would like to thank Dr. Don H. Johnson for introducing me to this exciting field of research, and

for his help and guidance which have been truly invaluable.

References

[1] M. Bezzi, M.E. Diamond, and A. Treves, “Redundancy and synergy arising from pairwise correlations in neuronal ensembles,” Journal of Computational Neuroscience, vol. 12, no. 3, pp. 165–174, May-June 2002.

[2] H. Joe, Multivariate Models and Dependence Concepts, Chapman & Hall, 1997.

[3] H. Stark and J.W. Woods, Probability, Random Processes, and Estimation Theory for Engineers, Prentice Hall, 2nd edition, 1994.

[4] B. Efron and R. Tibshirani, An Introduction to the Bootstrap, Chapman & Hall, 1993.

[5] T.M. Cover and J.A. Thomas, Elements of Information Theory, John Wiley & Sons, Inc., 1991.

[6] H. Joe, “Majorization, randomness and dependence for multivariate distributions,” Ann. Probability, vol. 15, no. 3, pp. 1217–1225, July 1987.

[7] O.V. Sarmanov, “Maximum correlation coefficient (nonsymmetric case),” in Selected Translations in Mathematical Statistics and Probability, vol. 2, pp. 207–210. Amer. Math. Soc., 1962.

[8] H.O. Lancaster, “The structure of bivariate distributions,” Ann. Math. Statistics, vol. 29, no. 3, pp. 719–736, September 1958.

[9] J.F. Barrett and D.G. Lampard, “An expansion for some second-order probability distributions and its application to noise problems,” IRE Transactions - Information Theory, vol. 1, pp. 10–15, 1955.

[10] H. Joe, “Relative entropy measures of multivariate dependence,” Journal of the American Statistical Association, vol. 84, no. 405, pp. 157–164, March 1989.

[11] F. Riesz and B. Szent-Nagy, Functional Analysis, Frederick Ungar Publishing Co., 1955.

[12] D.H. Johnson, “Point process models of single-neuron discharge,” J. Computational Neuroscience, vol. 3, pp. 275–299, 1996.

[13] D.H. Johnson, C.M. Gruner, K. Baggerly, and C. Seshagiri, “Information-theoretic analysis of neural coding,” J. Computational Neuroscience, vol. 10, no. 1, pp. 47–69, January 2001.

[14] B. Efron, “Better bootstrap confidence intervals,” J. American Statistical Association, vol. 82, no. 397, pp. 171–185, March 1987.

[15] C.S. Miller, D.H. Johnson, J.P. Schroeter, L.L. Myint, and R.M. Glantz, “Visual signals in an optomotor reflex: Systems and information theoretic analysis,” J. Computational Neuroscience, vol. 13, no. 1, pp. 5–21, July 2002.