
Eindhoven University of Technology

MASTER

GLASS

layout and styling system

Golsteijn, B.J.T.

Award date: 2007

Disclaimer

This document contains a student thesis (bachelor's or master's), as authored by a student at Eindhoven University of Technology. Student theses are made available in the TU/e repository upon obtaining the required degree. The grade received is not published on the document as presented in the repository. The required complexity or quality of research of student theses may vary by program, and the required minimum study period may vary in duration.

General rights

Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights.

• Users may download and print one copy of any publication from the public portal for the purpose of private study or research.

• You may not further distribute the material or use it for any profit-making activity or commercial gain.

Take down policy

If you believe that this document breaches copyright please contact us providing details, and we will remove access to the work immediately and investigate your claim.

Download date: 15. Jun. 2018

TECHNISCHE UNIVERSITEIT EINDHOVEN

Department of Mathematics and Computer Science

Master’s Thesis

GLASS

Layout And Styling System

B.J.T. Golsteijn

Supervisors:

Ir. W. Dees (Philips)

Dr. A.T.M. Aerts (TU/e)

Eindhoven, August 2005

Abstract

As more and more electronic devices become connected to a network, applications will no longer be bound to a single device. They can run on and be run from different devices in the network. Because of the variation in device characteristics, a single user interface for an application will not suffice anymore. However, creating a distinct user interface for each specific target device quickly becomes infeasible as the number of target devices that can be added to a network grows. To deal with this looming problem, a UI generation framework is being created within Philips Research that allows the (semi-)automatic generation of user interfaces for different target devices. The aim of the GLASS project is to implement one of the steps in this framework, the ‘Augment AUI’ step. This encompasses the creation of a graphical layout and styling editor, as well as the design and implementation of a data format that stores layout and styling information for graphical user interfaces in such a way that it can be used to create user interfaces for multiple target devices without the need to specify the user interface for each target device in full detail, preferably in the form of a multi-level stylesheet.

‘Multi-level Stylesheets’ is an experimental technique for storing layout and styling information of graphical user interfaces. This technique involves clustering device capabilities and interrelated style attributes on different levels of abstraction in order to enable the creation of attractive and intuitive user interfaces for multiple target devices, without the need to specify the user interface for each target device in full detail.

This document describes the design and implementation of a data structure that uses the multi-level stylesheet technique for storing layout and styling information of graphical user interfaces. Further, an editor that uses this data format to add layout and styling information to Abstract UI models is described. Finally, some conclusions are drawn about the feasibility of using multi-level stylesheets to create attractive and intuitive user interfaces for multiple target devices without the need to specify the user interface for each target device in full detail.

Acknowledgements

This master’s thesis completes my Computer Science study at the Technische Universiteit Eindhoven (TU/e). My graduation project was carried out at the Information Processing Architectures (IPA) group at the Philips Research Laboratories Eindhoven.

First, I would like to thank my supervisors Walter Dees and Ad Aerts for the pleasant cooperation during my final project. We had interesting discussions, and they gave critical comments and valuable suggestions on my work.

Further, I would like to thank my colleagues at Philips who made my stay at Philips a pleasant one.

Also, I want to thank Jack van Wijk and Marc Voorhoeve for their willingness to be members of my examination board, and Paul de Bra and Harold Weffers for reviewing my thesis.

Finally, I would like to thank my girlfriend Nicole, my parents and my girlfriend’s parents for their love and support during this project.

Bart Golsteijn

Eindhoven, August 2005

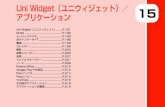

Contents

Abstract

Acknowledgements

1 Introduction

1.1 Context

1.1.1 UI-Application modeling

1.1.2 Abstract UI modeling

1.1.3 Augment AUI

1.1.4 Conversion and Implement Application

1.2 Document Structure

1.3 Used Terms, Abbreviations, and Acronyms

2 Layout and Styling of Graphical User Interfaces

2.1 Layout

2.1.1 Layout Descriptions

2.2 Style

2.2.1 Cascading Style Sheets

2.2.2 Multi-level stylesheets

2.2.3 Existing Layout and Styling Editors

3 Requirements

3.1 User Characteristics

3.2 General Capabilities

4 Design and Implementation of the Multi-level Stylesheet Data Format

4.1 Requirement Analysis

4.2 High-level Structure

4.3 Layout

4.3.1 Layout Structure

4.3.2 Position and Size

4.4 Styling

4.5 Device Characteristics Descriptions

5 Design and Implementation of the Layout and Styling Editor

5.1 Design Considerations

5.2 Graphical Editing Framework

5.2.1 Risks

5.3 Analysis

5.4 Plug-in Structure

5.5 GLASSEditor

5.5.1 Model

5.5.2 Controller

5.5.3 View

5.6 Design and Implementation of the Widget Viewer

5.6.1 Architecture

5.6.2 Non-resizable areas

5.6.3 Implementation

5.6.4 Limitations

5.7 Creating a New Multi-level Stylesheet

5.8 Editing the Multi-level Stylesheet

5.9 Updating the Multi-level Stylesheet

5.9.1 Unanimity Strategy

5.9.2 Average strategy

5.10 Handling Inconsistencies

5.11 Case Study

6 Conclusion

6.1 Future Work

7 Project Evaluation

7.1 Project Overview

7.2 Reflection

7.2.1 Time Spent

7.2.2 Requirements Phase

7.2.3 Prototype Phase

7.2.4 Intermediate Presentation

7.2.5 Design Phase

7.2.6 Implementation

7.3 Lessons learned

7.4 Final Remark

A Requirements

A.1 Functional Requirements

A.1.1 General

A.1.2 Task List

A.1.3 Layout

A.1.4 Screens

A.1.5 Styling

A.1.6 Preview and code generation

A.2 Non-functional requirements

A.3 Additional ideas during development

B Analysis Model

C GEF Overview

C.1 Introduction

C.2 Architecture

C.2.1 Model

C.2.2 View

C.2.3 Controller

C.3 Editing the Model

D Packages Overview

D.1 com.philips.glass

D.2 com.philips.glass.actions

D.3 com.philips.glass.dnd

D.4 com.philips.glass.editors

D.5 com.philips.glass.editparts

D.6 com.philips.glass.editpolicies

D.7 com.philips.glass.figures

D.8 com.philips.glass.figures.widgetviewer

D.9 com.philips.glass.layout

D.10 com.philips.glass.misc

D.11 com.philips.glass.model.auimodel

D.12 com.philips.glass.model.mlss

D.13 com.philips.glass.model.mlss.commands

D.14 com.philips.glass.model.mlss.updater

D.15 com.philips.glass.model.targetdevice

D.16 com.philips.glass.model.taskmodel

D.17 com.philips.glass.views

D.18 com.philips.glass.wizards

E Task Model DTD

F Abstract UI Model DTD

G Multi-level Stylesheet DTD

H Device Characteristics Description Language

H.1 DTD

H.2 Example

Bibliography

List of Figures

1.1 UI generation framework

2.1 Layout

2.2 Sample structure of a multi-level stylesheet

4.1 Layout and styling structure from requirements analysis

5.1 Simplified Analysis Model

5.2 Layout and Styling Model used in the editor

5.3 Model for the Tasklist View

5.4 Scaling an image versus resizing a widget

5.5 Nine-part tiling technique

5.6 Widget Viewer architecture

5.7 Non-resizable area

5.8 Bands mapping

5.9 Two sample UI trees

5.10 Average UI (I)

5.11 Average UI (II)

5.12 The GLASS Layout and Styling System while editing a 640x480 user interface

5.13 The 320x240 and the 240x320 user interfaces

5.14 The generated user interface for a 480x360 screen

C.1 GEF Editing Overview

Chapter 1

Introduction

As more and more electronic devices become connected to a network, applications will no longer be bound to a single device. They can run on and be run from different devices in the network. Because of the variation in device characteristics, one user interface (UI) for an application will not suffice anymore. A user interface built for a 17-inch PC monitor cannot simply be used on a mobile phone, as the result would be a user interface in which the scrollbars play the leading role. Linear scaling provides a slightly better result when the differences in available screen size are quite small, but in the case of a PC and a mobile phone, the result would be a user interface with unreadably small text and buttons too small to work with, which is clearly undesirable. On the other hand, creating a distinct user interface for each specific target device is undesirable as well, as this quickly becomes infeasible as the number of target devices that can be added to a network grows.
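To make the linear-scaling argument concrete, the following minimal Java sketch scales a UI uniformly by the largest factor that still fits the target screen. The screen sizes are assumptions chosen for illustration (640x480 to 480x360 as a small difference, and a 1024x768 PC screen to a hypothetical 176x208 phone display as a large one), and the class and method names are hypothetical.

```java
// Sketch: uniform linear scaling of a UI designed for one screen size to another.
class LinearScaling {
    // Largest uniform factor that makes a sourceW x sourceH UI fit into
    // targetW x targetH without distorting its aspect ratio.
    static double scaleFactor(int sourceW, int sourceH, int targetW, int targetH) {
        return Math.min((double) targetW / sourceW, (double) targetH / sourceH);
    }

    // Size of a UI element (e.g. a font height in pixels) after scaling.
    static double scaledSize(double size, int sourceW, int sourceH, int targetW, int targetH) {
        return size * scaleFactor(sourceW, sourceH, targetW, targetH);
    }

    public static void main(String[] args) {
        // Small difference: factor 0.75, so 12 px text becomes 9 px.
        System.out.println(scaledSize(12, 640, 480, 480, 360));
        // Large difference: factor 0.171875, so 12 px text becomes about 2 px.
        System.out.println(scaledSize(12, 1024, 768, 176, 208));
    }
}
```

In the first case the text remains readable; in the second, a 12-pixel font shrinks to roughly 2 pixels, which illustrates why linear scaling alone does not bridge large differences in screen size.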

To deal with this looming problem, a UI generation framework [2] is being created within Philips Research that allows the (semi-)automatic generation of user interfaces for different target devices. The aim of the GLASS (GLASS Layout And Styling System) project is to implement one of the steps in this framework, the Augment AUI step, which is described in more detail in Section 1.1.3. This encompasses the creation of a graphical layout and styling editor, as well as the design and implementation of a data format that stores layout and styling information for graphical user interfaces in such a way that it can be used to create attractive and intuitive user interfaces for multiple target devices from a single layout and styling description, preferably in the form of a multi-level stylesheet [3] [4]. Because the multi-level stylesheet technique is still in an experimental phase, the GLASS project will also serve as a feasibility study of using multi-level stylesheets for the creation of attractive and intuitive user interfaces for multiple target devices.

One application domain for such reusable user interface descriptions is the in-home networking environment. Within the Philips Ambient Intelligence vision, all consumer electronics will be connected to a wireless network. This creates opportunities for, for example, remote UI (a user interface that is provided by an application on one device, but is shown on another device, the UI device, that is physically separated from the device on which the application runs) and nomadic UI (a user interface that supports session migration from one display to another and can adapt to the UI capabilities of a wide range of UI devices, in order to provide access to the underlying application from anywhere). Current device-specific UI definitions are clearly unsuited for remote and nomadic UI. The data format for storing layout and styling information described in this document can be used within a UI generation framework to create attractive and intuitive user interfaces for multiple target devices, without the need to specify the UI for each target device in full detail.

However, in-home networking is not limited to Philips. It is gaining importance at other companies as well, as can be seen in forums such as UPnP [6] and DLNA [7], in which many different companies participate.

1.1 Context

Within Philips Research Eindhoven, a UI generation framework is being created that allows the (semi-)automatic generation of graphical user interfaces for a variety of different devices [2]. As mentioned in the previous section, the aim of the GLASS project is to implement the Augment AUI step of this framework. A visual overview of the UI generation framework is shown in Figure 1.1.

Figure 1.1: UI generation framework

In this figure, the rectangles depict models, the ellipses depict phases, and the cylinders depict databases. The lines and arrows depict the connections between them.

The Widget Database contains widget descriptions in a meta widget description language and preview images of the widgets. These images can be used inside a generic layout and styling editor to preview the widgets without the need to implement every widget within the editor. An example of such a widget description can be found in Section 5.6. The Repository contains the labels, texts and images that will be associated with the UI, and the Device Capabilities Database contains descriptions of the characteristics of various target devices. Note that the exact format of device capabilities descriptions is not yet defined and can be defined according to the needs of the GLASS project.

The different phases of the framework are described in the following subsections.


1.1.1 UI-Application modeling

During the UI-Application modeling phase, a task model and a data model are created.

• The task model describes the tasks a user can perform with the underlying application. GLASS uses a simplified task model as input, in which for each task only the inputs and outputs are described. The data format for the final task model (and the other models of the framework) has not yet been defined.

Here is an example of a (simplified) task model:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- Simplified taskmodel (can also be called execution model or UI-application model)
     without any preconditions, groupings, links to datamodel, alerts, etc. This model specifies
     the input/output variables that are relevant for this task which can be changed by the user,
     which are mapped onto widgets in the abstract UI model. -->
<!DOCTYPE taskmodel SYSTEM "C:\GLASS\taskmodels\taskmodel-student.dtd">
<taskmodel name="MP3 Player" version="1.0">
  <task id="t1" name="Search song" autoexecute="true">
    <input id="i1" name="searchstring"/>
    <output id="o1" name="songlist"/>
  </task>
</taskmodel>
```

The DTD [16] for the task models used in this project can be found in Appendix E.

• The data model contains all data relevant for the UI. Within the GLASS project, however, the data model is ignored, and therefore it is not further described here.
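As an illustration of how a tool might consume the simplified task model shown above, the following minimal Java sketch uses the standard DOM API (`javax.xml.parsers`) to list the inputs and outputs of each task. It is not the GLASS implementation; the class name `TaskModelReader` is hypothetical, and the embedded sample omits the DOCTYPE declaration because the DTD path in the example points to a local file.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical reader for the simplified task model (sketch, not the GLASS code).
class TaskModelReader {
    // The task model example from above, without the DOCTYPE declaration.
    static final String SAMPLE =
        "<taskmodel name=\"MP3 Player\" version=\"1.0\">"
        + "<task id=\"t1\" name=\"Search song\" autoexecute=\"true\">"
        + "<input id=\"i1\" name=\"searchstring\"/>"
        + "<output id=\"o1\" name=\"songlist\"/>"
        + "</task></taskmodel>";

    // Returns one "task: [inputs] -> [outputs]" line per <task> element.
    static List<String> summarize(String xml) {
        Document doc = parse(xml);
        List<String> result = new ArrayList<>();
        NodeList tasks = doc.getElementsByTagName("task");
        for (int i = 0; i < tasks.getLength(); i++) {
            Element task = (Element) tasks.item(i);
            result.add(task.getAttribute("name") + ": "
                + names(task, "input") + " -> " + names(task, "output"));
        }
        return result;
    }

    // Collects the name attributes of all child elements with the given tag.
    private static List<String> names(Element task, String tag) {
        List<String> names = new ArrayList<>();
        NodeList nodes = task.getElementsByTagName(tag);
        for (int i = 0; i < nodes.getLength(); i++) {
            names.add(((Element) nodes.item(i)).getAttribute("name"));
        }
        return names;
    }

    private static Document parse(String xml) {
        try {
            return DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse task model", e);
        }
    }

    public static void main(String[] args) {
        // Prints: Search song: [searchstring] -> [songlist]
        summarize(SAMPLE).forEach(System.out::println);
    }
}
```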

1.1.2 Abstract UI modeling

During the Abstract UI modeling phase, an Abstract UI model is created. An Abstract UI model contains look-and-feel definitions and mappings from the task model’s inputs and outputs to platform-specific widgets. Note that more than one widget can be defined for a specific input or output and that one widget can implement more than one input or output. Again, GLASS uses a simplified model.

Here is an example of such a (simplified) Abstract UI model:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!-- Abstract UI model: contains, for each target platform that the designer wants to focus
     on, a mapping from each input/output element of a task in the task model to a list of target
     specific widgets (described on a meta-level) that will be used to implement that task.
     Note 1: for each input, output, and trigger there can be more than one widget possible. And
     multiple inputs, outputs can be combined and mapped onto a single widget.
     Note 2: this version does not support multiple look & feels, doesn’t include alerts and uses
     simple navigation widgets unrelated to task links.
     Note 3: the target devices are specified independently and saved under a certain name, which
     is assumed to be uniquely identifiable. -->
<!DOCTYPE uimodel SYSTEM "C:\GLASS\auimodels\uimodel-student.dtd">
<uimodel version="1.0" taskmodel="taskmodel-student.xml">
  <lookfeeldefinitions>
    <!-- contains the various look & feels (i.e. widget sets) to which the tasks in the task model
         can be mapped -->
    <lookfeel id="awt" name="Java AWT"/>
    <lookfeel id="swing" name="Java Swing"/>
    <lookfeel id="pocketpc" name="Windows PocketPC"/>
  </lookfeeldefinitions>
  <targetdevice id="pc" deviceref="C:\GLASS\devices\px.xml" lookfeel="awt">
    <widget task="t1" input="i1" widgetref="C:\GLASS\widgets\textfield-meta.xml"/>
    <!-- This has to be repeated for each input and output variable. Multiple occurrences
         possible per input/output variable.
         Note: input and output are comma-separated list of input and output IDREFs, can be mixed
         in whichever way you want, since the same widget can perform input and output or multiple
         input/output functionality at the same time -->
    <trigger task="t1" widgetref="C:\GLASS\widgets\button-meta.xml"/>
    <navigationlink widgetref="C:\GLASS\widgets\button-meta.xml"/>
  </targetdevice>
  <!-- This has to be repeated for that device with a different value for attribute lookfeel,
       if more than one look & feel (i.e. widget set) to which the tasks in the task model can be
       mapped for that device is available -->
  <targetdevice id="ipaq" deviceref="C:\GLASS\devices\ipaq.xml" lookfeel="pocketpc">
    <widget task="t1" input="i1" widgetref="C:\GLASS\widgets\textfield-meta-pda.xml"/>
    <trigger task="t1" widgetref="C:\GLASS\widgets\button-meta-pda.xml"/>
    <navigationlink widgetref="C:\GLASS\widgets\button-meta-pda.xml"/>
  </targetdevice>
</uimodel>
```

The DTD for the Abstract UI models used in this project can be found in Appendix F.
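The Abstract UI model allows several widgets per input or output, and one widget for several inputs and outputs (comma-separated ID lists). A minimal Java sketch of indexing these mappings per target device, again using the standard DOM API, could look as follows. The class `AuiWidgetIndex` is hypothetical, the widget references are shortened to file names, and the second `pc` widget (a combobox serving both `i1` and `o1`) is an invented addition that shows the many-to-many case.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Hypothetical index of widget mappings in a simplified Abstract UI model (sketch).
class AuiWidgetIndex {
    // Based on the example above; the combobox widget is a hypothetical addition.
    static final String SAMPLE =
        "<uimodel version=\"1.0\" taskmodel=\"taskmodel-student.xml\">"
        + "<targetdevice id=\"pc\" lookfeel=\"awt\">"
        + "<widget task=\"t1\" input=\"i1\" widgetref=\"textfield-meta.xml\"/>"
        + "<widget task=\"t1\" input=\"i1\" output=\"o1\" widgetref=\"combobox-meta.xml\"/>"
        + "</targetdevice>"
        + "<targetdevice id=\"ipaq\" lookfeel=\"pocketpc\">"
        + "<widget task=\"t1\" input=\"i1\" widgetref=\"textfield-meta-pda.xml\"/>"
        + "</targetdevice></uimodel>";

    // Maps "deviceId/ioId" to all widget references that may implement that input/output.
    static Map<String, List<String>> index(String xml) {
        Document doc = parse(xml);
        Map<String, List<String>> result = new LinkedHashMap<>();
        NodeList devices = doc.getElementsByTagName("targetdevice");
        for (int d = 0; d < devices.getLength(); d++) {
            Element device = (Element) devices.item(d);
            NodeList widgets = device.getElementsByTagName("widget");
            for (int w = 0; w < widgets.getLength(); w++) {
                Element widget = (Element) widgets.item(w);
                // input and output hold comma-separated ID lists; absent attributes yield "".
                String ids = widget.getAttribute("input") + "," + widget.getAttribute("output");
                for (String id : ids.split(",")) {
                    String trimmed = id.trim();
                    if (trimmed.isEmpty()) {
                        continue;
                    }
                    String key = device.getAttribute("id") + "/" + trimmed;
                    result.computeIfAbsent(key, k -> new ArrayList<>())
                          .add(widget.getAttribute("widgetref"));
                }
            }
        }
        return result;
    }

    private static Document parse(String xml) {
        try {
            return DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (Exception e) {
            throw new IllegalArgumentException("Could not parse Abstract UI model", e);
        }
    }

    public static void main(String[] args) {
        index(SAMPLE).forEach((key, refs) -> System.out.println(key + " -> " + refs));
    }
}
```

Keying the index on device and input/output ID keeps the per-device alternatives together, which is the information a layout editor needs when it offers the designer a choice of widgets.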

1.1.3 Augment AUI

During the Augment AUI phase, layout and styling information is added to the Abstract UI model, preferably in the form of a multi-level stylesheet (MLSS) [3] [4]. This is the step that the GLASS project implements, and it is discussed in the remainder of this document.

1.1.4 Conversion and Implement Application

During the Conversion phase, concrete, device-specific user interfaces are created. During the Implement Application phase, the non-UI parts of the application are created. As these two phases are outside the scope of the GLASS project, they are not further described here.

1.2 Document Structure

Chapter 2 describes layout and styling techniques for graphical user interfaces. Chapter 3 gives an overview of the requirements for a generic layout and styling editor. Chapter 4 discusses the design of the data structure created for storing layout and styling information. Chapter 5 describes the design and implementation of the graphical layout and styling editor. In Chapter 6, a summary of the project is given and some conclusions are drawn on the feasibility of using multi-level stylesheets to create user interfaces for multiple target devices without the need to specify the user interface for each target device in full detail. Finally, Chapter 7 gives an evaluation of the GLASS project.

1.3 Used Terms, Abbreviations, and Acronyms

CSS Cascading Style Sheets [18]. CSS is a mechanism for defining style information. CSS is described in Section 2.2.1.

DTD Document Type Definition [16]. In a DTD, the structure of a class of documents is described via element and attribute-list declarations. Element declarations name the allowable set of elements within the document, and specify whether and how declared elements and runs of character data may be contained within each element. Attribute-list declarations name the allowable set of attributes for each declared element, including the type of each attribute value, if not an explicit set of valid value(s).

GLASS GLASS Layout And Styling System, the name of this project.

GUI Graphical User Interface

Layout The position of the various UI elements in a graphical user interface.

Look-and-feel General appearance and operation of a user interface.

MLSS Multi-level stylesheet

Mock-up A model of something that has not yet been built, which shows how it will look or operate.

Navigationlink UI element that allows the user to switch to another screen. Within the GLASS project, navigationlinks do exist, but they are not functional.

Style Appearance of the UI elements

Task Unit within the Task model. Defines a task that the user can perform with the system and is characterized by its inputs and/or outputs. See Section 1.1.1 for more information about tasks and the Task model.

Trigger UI element that initiates an action to generate the output values from its input values for a task.

TU/e Technische Universiteit Eindhoven (Eindhoven University of Technology)

UI User interface. A UI defines how a user can interact with a system. Typically, a user interface translates user events to events that can be understood by the underlying application and translates feedback from the application to a form that can be perceived by a user.

UI elements Elements that make up the user interface: widgets, images, texts, etc.


UI part Part of UI: screen, area, or UI element.

Widget A mediator between the user and the functionality offered by an application/system, taking input from and/or providing output to the user.

WYSIWYG Acronym for ‘What You See Is What You Get’. A WYSIWYG UI creation system displays a (more-or-less) accurate image of what the user interface rendered on the target platform will look like while editing the user interface.

Chapter 2

Layout and Styling of Graphical User Interfaces¹

As argued in Chapter 1, using one single user interface on multiple target devices with different characteristics is undesirable, as this may lead to unattractive or even unusable user interfaces. On the other hand, creating a tailored user interface for each specific target device becomes infeasible when the number of target devices grows. This suggests that a solution has to be found in which as much user interface information as possible can be reused in an automated way, while retaining the ability to tailor the user interface for each specific target device into an attractive and intuitive one.

But what is an attractive and intuitive user interface, and how can such a user interface be created? An answer to the first question could be: an intuitive user interface is a user interface in which a user can perform his tasks without much mental effort. Attractive is a term that is harder to define; it is quite a subjective measure which has to do with colors, fonts, layout, etc., and is rather time and function dependent. In the case of UI design, it is up to a designer to decide what is attractive and what is not. Unfortunately, the process of determining whether or not a UI is attractive can hardly be automated².

Now the second question, on the creation of attractive and intuitive user interfaces. Many factors influence the attractiveness and intuitiveness of a user interface. Some of those factors can be controlled, others cannot. Among the controllable factors, layout and styling play an important role. For example, a user interface with a well-designed layout can guide the user in performing his tasks, which raises the user’s efficiency and reduces the number of errors made, whereas a bad layout can actually prevent the user from being able to complete his tasks. Styling obviously influences the attractiveness of a user interface, but styling can also influence its intuitiveness. A button that is clearly recognizable as a button almost asks to be pressed, whereas a transparent button without any border will hardly be recognized as a button and therefore decreases the intuitiveness of the user interface.

¹ This chapter is a (partial) summary of Survey of Layout and Styling Tools and Techniques [1], a document created at the beginning of the project.

² There have been some attempts to automate the evaluation of the attractiveness of user interfaces. See for example [21].


2.1 Layout

When talking about the layout of graphical user interfaces, many terms are used, but there is no consensus about what these terms mean. In the rest of this section, the following terms are used to describe layout definitions. The virtual screen is the top of the layout hierarchy. In most cases, the virtual screen will be equal to the physical screen, although this is not necessary. For example, when using four virtual desktops, the virtual screen is larger than the physical screen. Areas are placed within a virtual screen and consist of a virtual drawing area. This virtual drawing area can be larger than the visible part of the area, which is called the viewport. When the virtual drawing area is larger than the viewport, typically some kind of scrolling facility is provided to access the non-visible parts. An area can contain sub-areas and UI elements.

A graphical overview of these terms is shown in Figure 2.1.

Figure 2.1: Layout (diagram: a physical screen and a virtual screen containing areas; each area has a virtual drawing area and a viewport, and contains sub-areas and widgets)

Note that the term container is often used instead of area. This term is not used here, as it assumes that a container widget concept is available, which might not always be the case. Within the GLASS project, areas are used, which might be converted to container widgets when the target platform supports them.
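The terms above form a tree: a virtual screen contains areas, which contain sub-areas and UI elements, and each area pairs a viewport with a possibly larger virtual drawing area. The following Java sketch models this hierarchy under simplifying assumptions (sizes in pixels, UI elements as plain names, the virtual screen as the root area). `LayoutArea` and its methods are hypothetical and do not reflect the multi-level stylesheet format described in Chapter 4.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of the layout terms of Section 2.1 (not the GLASS data format).
class LayoutArea {
    final String name;
    final int viewportWidth, viewportHeight; // visible part of the area
    final int drawingWidth, drawingHeight;   // virtual drawing area
    final List<LayoutArea> subAreas = new ArrayList<>();
    final List<String> uiElements = new ArrayList<>();

    LayoutArea(String name, int viewportWidth, int viewportHeight,
               int drawingWidth, int drawingHeight) {
        this.name = name;
        this.viewportWidth = viewportWidth;
        this.viewportHeight = viewportHeight;
        this.drawingWidth = drawingWidth;
        this.drawingHeight = drawingHeight;
    }

    // Scrolling is needed when the virtual drawing area exceeds the viewport.
    boolean needsScrolling() {
        return drawingWidth > viewportWidth || drawingHeight > viewportHeight;
    }

    // Largest vertical offset at which the viewport still lies inside the drawing area.
    int maxScrollY() {
        return Math.max(0, drawingHeight - viewportHeight);
    }

    // Counts the UI elements in this area and, recursively, in all of its sub-areas.
    int elementCount() {
        int count = uiElements.size();
        for (LayoutArea sub : subAreas) {
            count += sub.elementCount();
        }
        return count;
    }

    public static void main(String[] args) {
        // Root area standing in for a virtual screen equal to a 320x240 physical screen.
        LayoutArea screen = new LayoutArea("virtual screen", 320, 240, 320, 240);
        LayoutArea list = new LayoutArea("song list", 320, 200, 320, 600);
        list.uiElements.add("song 1");
        list.uiElements.add("song 2");
        LayoutArea toolbar = new LayoutArea("toolbar", 320, 40, 320, 40);
        toolbar.uiElements.add("search button");
        screen.subAreas.add(list);
        screen.subAreas.add(toolbar);
        System.out.println(list.needsScrolling()); // true
        System.out.println(list.maxScrollY());     // 400
        System.out.println(screen.elementCount()); // 3
    }
}
```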

2.1.1 Layout Descriptions

Unfortunately, there is no universal way to describe layout. In general, there are two kinds of layout descriptions:

• In an absolute layout description (aka. null-layout or XY-layout), all position information (and often also size information) is stored in terms of absolute (x,y) coordinates.

• In a relative layout description, the layout is described in terms of various relations. These can be relations between two UI elements, between an area and a UI element within that area, or between two areas. In order to be able to use such relative layout descriptions, a so-called layout manager is necessary to perform the layout task. Among the relative layout descriptions, two major categories can be distinguished:

– Relative layout descriptions based on areas divide the available screen space into a number of areas. The layout manager determines how the available screen space is divided into areas and each area can have its own layout manager. This ‘separation of concerns’ is an advantage for building reusable layout descriptions, as area descriptions can be reused. Examples of layout managers that use layout descriptions based on areas are BorderLayout [26], GridBagLayout [27], and GridLayout [28].

– Relative layout descriptions based on constraints use guides and/or relations to define a layout. A guide is a line to which UI elements can be attached or aligned. A relation between two UI elements A and B can for example state that the left edge of B should be placed 5 pixels from the right edge of A. A disadvantage of using constraints to define layout is that splitting a large screen into a number of smaller screens is difficult, because the relations between the different UI elements tie the complete screen together. Examples of layout managers that use layout descriptions based on constraints are FlowLayout [29], SpringLayout [30], and FormLayout [31].

One problem with most layout managers currently available is that they do not take minimum and/or maximum sizes into account. This is a serious problem, as many custom-made widget sets for CE devices (e.g. TVs and DVD recorders) are not arbitrarily scalable, unlike the widgets in Java, for example.

With respect to reusability, relative layout descriptions have one advantage over absolute layout descriptions: they cope better with changes in available screen size. In case of an absolute layout description, an increase in available screen space can be handled either by adding empty space around the user interface or by scaling the complete user interface. In the first case the extra space is not used and the result is a larger empty border around the original user interface. In the second case the user interface rapidly becomes less attractive or even unusable. For example, very large buttons with very large texts on them are not visually pleasing in most cases. And when the available screen space decreases, either the whole user interface is scaled down, which leads to unreadable texts and UI elements that are too small, or a part of the user interface is cut off and can only be reached by scrolling. Things become even worse when the aspect ratio of the screen changes.

In case of a relative layout description, it is possible to define which parts of the user interface are allowed to grow or shrink and where the UI elements should be placed when the amount of available screen space changes. This is a great benefit, as it allows you to specify in a more fine-grained way how a user interface should react to changes of the available screen size, which in turn leads to user interfaces that cope much better with changing screen sizes. Note, however, that the variations in screen size a relative layout description can handle are limited. When the variations in available screen size become too large, more drastic measures have to be taken, like changing a row orientation to a column orientation, adjusting the number of UI elements that are displayed on the screen, or even changing the number of screens a UI consists of. At the moment, there are no layout managers that can handle such large variations in available screen size.
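The idea of declaring which parts may grow can be illustrated with a deliberately small sketch, written in Java because GLASS itself is an Eclipse (Java) plug-in. It assumes a single row of UI elements, each with a preferred width and a hypothetical grow weight (0 meaning fixed size), and shares any extra horizontal space among the growable elements in proportion to their weights, similar in spirit to the weights of GridBagLayout. The names `RowItem`, `RowLayout`, and `layoutWidths` are illustrative only; shrinking and minimum/maximum sizes are not handled.

```java
// Hypothetical model of one UI element in a single horizontal row.
// growWeight == 0 means the element always keeps its preferred width.
record RowItem(String name, int preferredWidth, int growWeight) {}

class RowLayout {

    // Returns the width assigned to each item when the row is `available` pixels wide.
    // Extra space is shared among growable items in proportion to their weights.
    // If there is less space than the preferred total, preferred widths are kept
    // (this sketch does not shrink; the row simply overflows).
    static int[] layoutWidths(RowItem[] items, int available) {
        int preferredTotal = 0;
        int weightTotal = 0;
        int lastGrowable = -1;
        for (int i = 0; i < items.length; i++) {
            preferredTotal += items[i].preferredWidth();
            weightTotal += items[i].growWeight();
            if (items[i].growWeight() > 0) {
                lastGrowable = i;
            }
        }
        int extra = Math.max(0, available - preferredTotal);
        int[] widths = new int[items.length];
        int distributed = 0;
        for (int i = 0; i < items.length; i++) {
            int share = weightTotal == 0 ? 0 : extra * items[i].growWeight() / weightTotal;
            widths[i] = items[i].preferredWidth() + share;
            distributed += share;
        }
        // Integer division may leave a few pixels unassigned; give them to the last growable item.
        if (lastGrowable >= 0) {
            widths[lastGrowable] += extra - distributed;
        }
        return widths;
    }
}
```

For a row consisting of a 100-pixel label, a growable 200-pixel text field, and an 80-pixel button, a 640-pixel wide row yields widths of 100, 460, and 80 pixels: only the text field absorbs the extra space.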


Just like absolute layout descriptions, relative layout descriptions have some disadvantages. One major disadvantage is that there is no standard for relative layout definitions. For example, there are more than 25 different layout managers for Java alone. This indicates that there is no ultimate layout manager that is the best solution for all layout problems. Another disadvantage is that most layout managers do not allow visual editing in an intuitive way. Because of the way most layout managers work, UI elements may jump around while the designer edits the user interface. And the majority of layout managers are inherently unsuited for pixel-precise editing of a UI.

Yet pixel-precise editing is exactly how UI designers work [22]. They position and size UI elements only in absolute terms and not in relative terms. The resulting UI design can therefore not be converted to a reusable UI for different screen properties. Designers deal with different screen properties by creating a new UI design when the properties of a screen differ. However, [22] shows that designers sometimes use grids and guides to align UI elements, at least in their minds. These grids and guides can act as constraints and may help software engineers to formulate layout algorithms.

Within the GLASS project, areas and guides will be used as the main layout primitives. Further, the default layout manager will store position and size information in terms of per mills (parts per thousand) for the top, left, bottom, and right side of the UI element, relative to the parent’s drawing area. This approach has a number of advantages:

1. It is possible to edit UIs in a visual, pixel-precise, and intuitive way. There is no need to think in terms of layout constraints and UI parts do not jump around while editing the UI.

2. It is relatively easy to detect similarities between different UIs based on proportions. For example, an area that occupies the complete width and one third of the total height of a screen will have the same layout description for different screen sizes when per mills are used to store layout information, whereas the absolute coordinates will probably be quite different.

3. Linear scaling is provided for free.
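The per-mill representation can be sketched as follows. This is a minimal illustration, not the GLASS implementation: it assumes that all four values are measured from the top-left corner of the parent’s drawing area (so ‘bottom’ and ‘right’ are edge positions, not distances from the parent’s bottom and right edges), and the record name `PerMillBounds` is hypothetical.

```java
// Hypothetical sketch: edges of a UI element in per mills (0..1000) of the parent's
// drawing area, all measured from the parent's top-left corner (an assumption).
record PerMillBounds(int top, int left, int bottom, int right) {

    // Converts to a pixel rectangle {x, y, width, height} for a parent of the given size.
    int[] toPixels(int parentWidth, int parentHeight) {
        int x = Math.round(left * parentWidth / 1000f);
        int y = Math.round(top * parentHeight / 1000f);
        int x2 = Math.round(right * parentWidth / 1000f);
        int y2 = Math.round(bottom * parentHeight / 1000f);
        return new int[] {x, y, x2 - x, y2 - y};
    }

    // Converts a pixel rectangle (e.g. after the designer drags an element) back to per mills.
    static PerMillBounds fromPixels(int x, int y, int width, int height,
                                    int parentWidth, int parentHeight) {
        return new PerMillBounds(
                Math.round(y * 1000f / parentHeight),
                Math.round(x * 1000f / parentWidth),
                Math.round((y + height) * 1000f / parentHeight),
                Math.round((x + width) * 1000f / parentWidth));
    }
}
```

An area covering the full width and the top third of the screen is stored as top 0, left 0, bottom 333, right 1000. On a 640x480 screen this yields a 640x160 rectangle and on an 800x600 screen an 800x200 rectangle, which illustrates advantages 2 and 3.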

2.2 Style

Style refers to the appearance of the UI elements. Many different style attributes can be distinguished. Here is a list of common style attributes:

• colors (background, foreground, border, ...)

• images (background, foreground [e.g. on a button])

• text attributes (font, style, size, color, alignment)

• size³ (height, width)

³ Note that size can also be treated as a layout attribute instead of a styling attribute. This is especially the case when the layout manager determines the sizes of the UI elements.


There are a number of ways to style a GUI. One way is to hard-code the style information in the program code. Another way is to use an external file to define style information. An advantage of this approach is that style information can be changed without the need to recompile the entire program. Yet another way to style a GUI is creating custom widgets that are drawn by calling methods like ‘drawline’, ‘drawrectangle’, and ‘fill’. Skinning is an extreme form of styling in which all UI elements are rendered from (replaceable) image files. An example of a program with a skinnable user interface is the MP3 player WinAmp⁴.

2.2.1 Cascading Style Sheets

The most well-known styling technique is probably Cascading Style Sheets (CSS) [18]. CSS is a mechanism for adding style information to Web documents. Different style sheets can be specified for different types of devices and it is even possible to create more than one style sheet per type of device, in order to let the user choose one. CSS is supported by all major Internet browsers and has become the de-facto standard for styling HTML documents. The big advantage of using CSS for styling, instead of including style information in the HTML file itself, is reusability. A single CSS file can be used to style a complete website, and when you want to change a style attribute, you only have to make one change to the CSS file, instead of changing all occurrences of that style attribute in all HTML files.

The reusability of CSS styling attributes is accomplished by using selectors. Selectors determine the target element(s) of a certain styling attribute. The most used selectors are element selectors, class selectors, and id selectors. Element selectors work on all elements of a certain type, for example on all tables. Class selectors work on all elements that are members of a certain class, as specified by the element’s class attribute. And id selectors work on one specific element with a unique id.
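The three selector kinds can be modelled in a few lines of Java. The sketch below is a simplification under stated assumptions: each element carries at most one class name (real CSS allows a space-separated list), and the types `DocElement`, `Selector`, and `SelectorDemo` are hypothetical names for illustration, not part of any CSS API.

```java
import java.util.List;
import java.util.stream.Collectors;

// Hypothetical model of a document element: its type (tag name),
// a single class name (may be null) and an id (may be null).
record DocElement(String type, String cssClass, String id) {}

// The three most used CSS selector kinds, reduced to a match test.
interface Selector {
    boolean matches(DocElement e);
}

record ElementSelector(String type) implements Selector {    // like: table { ... }
    public boolean matches(DocElement e) { return type.equals(e.type()); }
}

record ClassSelector(String cssClass) implements Selector {  // like: .prices { ... }
    public boolean matches(DocElement e) { return cssClass.equals(e.cssClass()); }
}

record IdSelector(String id) implements Selector {           // like: #total { ... }
    public boolean matches(DocElement e) { return id.equals(e.id()); }
}

class SelectorDemo {
    // Returns the ids of all elements a selector targets, in document order.
    static List<String> targets(Selector s, List<DocElement> document) {
        return document.stream()
                .filter(s::matches)
                .map(DocElement::id)
                .collect(Collectors.toList());
    }
}
```

A single style rule attached to `new ClassSelector("prices")` thus reaches every element of that class, which is where the reusability comes from.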

Cascading style sheets are obviously a step in the right direction, as they provide a basis for reusable layout descriptions. However, CSS is not enough to specify style information for many target devices. For instance, using CSS it is not possible to define different style information for two different types of PDAs, as there is only one device type ‘handheld’.

2.2.2 Multi-level stylesheets

Multi-level stylesheets [3] [4] are an (experimental) extension of the existing stylesheet languages, which makes it possible to define style information at different levels of abstraction. This technique involves clustering device capabilities and interrelated style attributes. By storing style information in a hierarchical way, it is possible to reuse style information for devices with overlapping characteristics. General information is stored at a high level in the hierarchy, whereas device-specific information is stored at a low level in the hierarchy.
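A minimal sketch of how a value could be looked up in such a hierarchy, assuming (as criterion 3 of the clustering criteria suggests) that a value defined at a lower, more device-specific node overrides one defined higher up. The class `StyleNode` and its methods are hypothetical and only illustrate the idea; they are not the MLSS data format of Chapter 4.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical node in a multi-level stylesheet: one device capability
// plus the style attribute values defined at that level.
class StyleNode {
    final String capability;
    final StyleNode parent;
    final Map<String, String> values = new HashMap<>();

    StyleNode(String capability, StyleNode parent) {
        this.capability = capability;
        this.parent = parent;
    }

    StyleNode set(String attribute, String value) {
        values.put(attribute, value);
        return this;
    }

    // Resolves an attribute for the device represented by this node:
    // the lowest (most device-specific) node on the path to the root that defines it wins.
    String resolve(String attribute) {
        for (StyleNode n = this; n != null; n = n.parent) {
            String v = n.values.get(attribute);
            if (v != null) {
                return v;
            }
        }
        return null; // not defined anywhere on the path to the root
    }
}
```

With `font-family` defined once at the ‘Graphics’ node and `background-color` overridden only at a ‘640x480’ node, a 640x480 device resolves to the overriding background while an 800x600 device falls back to the general default, and both share the font.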

A sample multi-level stylesheet structure is shown in Figure 2.2.

Figure 2.2: Sample structure of a multi-level stylesheet (tree: Graphics → Landscape, Portrait; Landscape → 4x3, 16x9; 4x3 → 640x480, 800x600; the nodes carry Layout and Style attributes)

⁴ WinAmp homepage: http://www.winamp.com/

The clustering of style attributes across device capabilities can be done according to several criteria [3]:

1. If a style attribute always has the same value for all intended target devices, the style attribute can be placed on the highest level of abstraction.

2. If a style attribute has the same value for a set of devices with a certain capability, a sub-tree can be introduced with this device capability and the related style attribute defined at the root of the tree.

3. If a style attribute has the same value for the majority of devices with a certain capability (i.e. only a few exceptions), the same can be applied as for criterion 2, but now the “default” value is overridden in sub-nodes of this subtree for the exceptions. Similarly, a lowest common denominator could be defined at the root of the subtree as a default value, which can be overridden in the sub-nodes. Note that if the style attributes do not significantly affect the user interface or if the devices with these exceptions are rare, overriding the default value is often not needed.

4. If the value of a style attribute can be automatically adapted (i.e. derived from another value) across the range of values that is relevant for a cluster of devices, the style attribute can be placed higher in the device capability tree. Examples of style attributes that could be automatically adapted for a range of target devices are:

(a) resizing a bitmap to fit the size of the display

(b) transcoding an image from one format to another (e.g. a GIF-image to JPEG)

(c) going from color to grayscale.

To support clustering according to this criterion, thresholds could be defined that hold for a specific range of target devices, for example to specify that a certain X can be minimally 30 and maximally 100 pixels wide.
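Criterion 4 and the threshold idea can be sketched as a value that is stored once as a rule and derived per device. The sketch assumes, purely for illustration, that the derived width is a fixed proportion (in per mills) of the screen width, clamped to the thresholds from the example above (30 to 100 pixels); the record `AdaptiveWidth` is a hypothetical name.

```java
// Hypothetical rule stored high in the capability tree: a width derived from the
// screen width and clamped to thresholds that hold for the whole device cluster.
record AdaptiveWidth(int perMillOfScreen, int minPixels, int maxPixels) {

    AdaptiveWidth {
        if (minPixels > maxPixels) {
            throw new IllegalArgumentException("minimum exceeds maximum");
        }
    }

    // Derives the concrete width for one device, respecting the thresholds.
    int widthFor(int screenWidth) {
        int derived = Math.round(screenWidth * perMillOfScreen / 1000f);
        return Math.max(minPixels, Math.min(maxPixels, derived));
    }
}
```

With 100 per mill and thresholds of 30 and 100 pixels, screens that are 240, 640, and 1280 pixels wide yield widths of 30, 64, and 100 pixels respectively, so a single entry in the tree serves the whole cluster.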

Of the available layout and styling techniques, the multi-level stylesheet technique offers the best perspective for storing layout and styling information for multiple target devices, without the need to specify all layout and styling information for each device in full detail. Therefore, it will be used as the storage format in the GLASS project.

Note, however, that multi-level stylesheets were still in a conceptual phase at the start of the project. There was neither a complete specification nor an implementation. The multi-level stylesheet data format used in this project is discussed in Chapter 4.

2.2.3 Existing Layout and Styling Editors

A number of existing layout and styling editors are discussed in [1], but none of them is suitable for creating reusable layout and styling descriptions for a wide range of target devices. The main problems with these editors are that they are mainly focused on one target platform and that their ways of specifying layout are not suited for this project. Either they use absolute layout descriptions or they use layout descriptions based on complex constraints, which results in an editing experience that is unintuitive for UI designers. Therefore, a completely new layout and styling editor has been created as part of the GLASS project. An overview of the requirements for this editor is given in the next chapter.

Chapter 3

Requirements

3.1 User Characteristics

GLASS will be used by software engineers and by UI designers.

Software engineers have a technical background and think about how to translate the ideas of the UI designer into software by implementing user interfaces on a target platform, and also about how the user interface is linked to the application, as an aspect of the overall software architecture. In addition, they influence the UI designer with respect to resource constraints for the user interface design.

In general, UI designers have a non-technical background. They are used to designing a user interface for one particular target device. To design a user interface they use a graphical design environment in which they can freely ‘play’ with the user interface by dragging UI elements and changing colors and backgrounds. Further, they think about aspects such as ergonomics, usability, user-centered design, etc.

3.2 General Capabilities

GLASS will add the following features to the generic features available in many of the existing layout and styling editors described in [1]:

• Structured input in the form of tasks with inputs and outputs.

• Easy switching between the user interfaces for different target devices.

• Generic editor: different native widget sets can be used to create user interfaces.

• Based on two layout primitives: areas and guides.

• The system can use information of existing user interface definitions to create new user interface definitions for different target devices.

The editor has to be as WYSIWYG as possible, since UI designers work in a graphical, preferably pixel-precise, way. The editor consists of four main parts:


• The Canvas is the place where the actual drawing of UI screens takes place.

• The List of Target Devices lists all target devices defined in the abstract UI model. Selecting a target device in this list brings the editor into the current editing state for the selected target device.

• The Task List contains all tasks defined in the task model, together with all widgets defined in the abstract UI model for the inputs and outputs of these tasks. These widgets can be dragged from the task list onto the canvas.

• The List of Screens lists all screens of the current layout and styling description. Selecting a screen in this list shows the selected screen in the canvas. The list of screens also provides options to add screens to and remove screens from the current layout and styling description.

Based on the analysis of Section 2.1, areas and guides will be used as the main layout primitives within the editor. Areas can be placed within a screen or within another area. Guides can be placed within an area. Further, the following types of UI elements can be placed within an area: widgets, triggers, navigationlinks, images, texts, lines, circles, and rectangles. Each area has a layout manager to determine the exact placement of the UI elements it contains. The available layout manager types are: XY layout (default), flow layout, and card layout. When the XY layout manager is used, several tools are available to align and resize selected UI elements.

To preview widgets, triggers, and navigationlinks as WYSIWYG as possible, widget descriptions from the widget database are used to render widget previews. Styling information can be added for a number of built-in styling attributes, as well as for all the styling attributes defined in the widget description¹. Further, the content areas defined in the widget description can be filled with textual or graphical content.

When a screen for a UI has been created, it is stored in such a way that (parts of) this screen can be used within the UIs for other target devices. When a UI screen is used for a device with a different screen size than the one for which the screen was designed, the editor can perform an automatic re-layout.

Finally, mockups can be generated for Java Swing [25] and SWT [13].

A complete list of all collected functional requirements can be found in Appendix A.1. All non-functional requirements are listed in Appendix A.2.

¹ Note that it is not possible to generate a WYSIWYG preview for all styling attributes, as this would require semantic knowledge of all possible styling attributes.

Chapter 4

Design and Implementation of the

Multi-level Stylesheet Data Format

Multi-level stylesheets (MLSS) [3] [4] [5] is a technique for storing information in a hierarchical way. As indicated in Section 2.2.2, the multi-level stylesheet technique provides the best perspective for storing layout and styling information for multiple target devices, without the need to specify all layout and styling information for each device in full detail. However, neither a (complete) specification nor an implementation was available at the start of the GLASS project. Only a partial specification was available from [5]. This partial specification, however, has a number of limitations for the GLASS project:

• All layout and styling attributes are bound to one fixed level in the multi-level stylesheet¹. This implies that it must be known beforehand which group of target devices will have the same value for a certain attribute and which group of devices will not. This does not seem to be a reasonable premise for the GLASS project, as MLSS is a completely new technique without any available implementations, so there is no prior experience to fall back on². A further consequence of this limitation is that it is not possible to override attribute values. For example, when the ‘background-color’ attribute is bound to the highest level of the MLSS, it is not possible to define different background colors for different devices.

• The means for specifying layout are very limited. It is impossible to specify the layout of a UI in terms of areas as specified in the requirements.

• Only a limited set of abstract widgets is supported, without any references to the abstract UI model used in the UI generation framework described in Section 1.1.

Because of these limitations, the decision was made to create a completely new multi-level stylesheet data format for the GLASS project. This data format is based on XML [17], because the rest of the UI adaptation framework [2] uses XML to store data.

¹ Note that this is not a limitation of the MLSS technique, but only of this particular specification.

² It is possible that after building enough UIs, fixed levels can be determined for some or all of the layout and styling attributes. However, this is certainly not the case for the GLASS project, and therefore the levels on which layout and styling attributes are stored will not be fixed in the GLASS project.


4.1 Requirement Analysis

A straightforward analysis of the requirements leads to the diagram in Figure 4.1 for the structure of a layout and styling description. This diagram shows the elements that constitute a layout and styling description and their attributes. In addition to the attributes shown in Figure 4.1, additional attributes (e.g. widget-specific styling attributes) can be defined for widgets, triggers, and navigationlinks in their associated widget descriptions.

Figure 4.1: Layout and styling structure from requirements analysis

The analysis of the requirements for the multi-level stylesheet data structure further led to the following observations:

• Because of the requirements with respect to reusability, storing layout and styling information in a fine-grained way (i.e. in small chunks of information) is probably a good idea, as this enhances the reusability of the layout and styling information.

• The requirement that layout and styling information should be stored in a robust way implies that layout and styling information cannot be stored as annotations of the abstract UI model, as the annotations would be lost when the abstract UI model is updated.


4.2 High-level Structure

A multi-level stylesheet is built as a tree of nodes, each node representing a certain device characteristic or capability. These nodes are represented by CAP (capability) elements and correspond to the capabilities in the device characteristics description files (Section 4.5). For example, the sample MLSS structure shown in Figure 2.2 leads to the following CAP structure:

<?xml version='1.0' encoding='UTF-8'?>
<MLSS>
  <CAP type='modality' capability='Graphics'>
    ...
    <CAP type='orientation' capability='Landscape'>
      ...
      <CAP type='aspect-ratio' capability='4x3'>
        ...
        <CAP type='resolution' capability='640x480'>
          ...
        </CAP>
        <CAP type='resolution' capability='800x600'>
          ...
        </CAP>
      </CAP>
      <CAP type='aspect-ratio' capability='16x9'>
        ...
      </CAP>
    </CAP>
    <CAP type='orientation' capability='Portrait'>
      ...
    </CAP>
  </CAP>
</MLSS>

A CAP element can contain three kinds of child elements: LAYOUT elements, PROPERTY elements, and other CAP elements. LAYOUT elements specify the containment relation between the different UI parts that constitute the UI. PROPERTY elements specify all other information: size and position information, as well as all styling information.
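To show how the CAP hierarchy can be processed, the following Java sketch parses an MLSS document with the standard JAXP DOM parser and lists the path of every CAP node. The class name `CapTree` and the slash-separated path notation are assumptions made for this illustration; LAYOUT and PROPERTY children are simply skipped.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.xml.sax.SAXException;

class CapTree {

    // Parses an MLSS document and returns the path of every CAP node,
    // e.g. "Graphics/Landscape/4x3/640x480", in document order.
    static List<String> capabilityPaths(String mlssXml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(mlssXml.getBytes(StandardCharsets.UTF_8)));
            List<String> paths = new ArrayList<>();
            collect(doc.getDocumentElement(), "", paths);
            return paths;
        } catch (ParserConfigurationException | SAXException | IOException ex) {
            throw new IllegalArgumentException("not a well-formed MLSS document", ex);
        }
    }

    // Depth-first walk over the direct CAP children of `parent`.
    private static void collect(Element parent, String prefix, List<String> paths) {
        for (Node n = parent.getFirstChild(); n != null; n = n.getNextSibling()) {
            if (n instanceof Element cap && "CAP".equals(cap.getTagName())) {
                String capability = cap.getAttribute("capability");
                String path = prefix.isEmpty() ? capability : prefix + "/" + capability;
                paths.add(path);
                collect(cap, path, paths);
            }
        }
    }
}
```

For a structure like the one above, the result starts with Graphics, Graphics/Landscape, Graphics/Landscape/4x3, and Graphics/Landscape/4x3/640x480, i.e. one entry per node of Figure 2.2.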

For the sake of simplicity, the CAP structure (i.e. the hierarchy of capabilities) of the multi-level stylesheets will be fixed in the first version of GLASS. A later version can change the node structure depending on the similarities that occur between the UIs for different target devices.

When desired, the data structure can be cut into pieces (e.g. a file per CAP node) and XInclude [20] can be used to combine them again.

4.3 Layout

To enhance the reusability of layout information, layout information is separated into two parts: (1) layout structure and (2) position and size information.

4.3.1 Layout Structure

The layout structure describes the containment relations between the UI parts that constitute the UI. In order to maximize the reusability of the layout structure information, it will be cut into small, reusable chunks of information. For example, an about screen containing three areas can be described as follows:

<layout>
  <screen name="aboutScreen">
    <area name="titleArea" />
    <area name="textArea" />
    <area name="dismissArea" />
  </screen>
</layout>

Such a chunk defines exactly one level of layout information. The layout of the child elements is stored in separate chunks of layout information. When these layout chunks are combined, they form a layout tree that follows the parent-child relationships defined in Figure 4.1.
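Combining one-level chunks into a tree can be sketched as below. The sketch assumes that each chunk has already been read into a map from a parent name to the names of its direct children; the class `LayoutTree` and the child name `okButton` used in the usage example are hypothetical. Cyclic chunk references are not detected.

```java
import java.util.List;
import java.util.Map;

class LayoutTree {

    // Each chunk describes exactly one level: a parent name mapped to its direct children.
    // Combining the chunks yields the layout tree; this renders it as indented text.
    static String render(String root, Map<String, List<String>> chunks) {
        StringBuilder out = new StringBuilder();
        render(root, chunks, 0, out);
        return out.toString();
    }

    private static void render(String node, Map<String, List<String>> chunks,
                               int depth, StringBuilder out) {
        out.append("  ".repeat(depth)).append(node).append('\n');
        // UI parts without a chunk of their own are leaves (e.g. widgets).
        for (String child : chunks.getOrDefault(node, List.of())) {
            render(child, chunks, depth + 1, out);
        }
    }
}
```

With the about screen chunk plus a second chunk that places a hypothetical `okButton` inside `dismissArea`, rendering from `aboutScreen` lists the three areas one level down and `okButton` beneath `dismissArea`.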

The layout definition for a UI can contain a number of screens and each screen can contain a number of areas. An area can contain other areas and UI elements. By overlapping and nesting areas, it is possible to create almost any layout. In addition, guides can be placed inside an area. A guide can be used to define how a group of UI elements should be placed on the screen with respect to each other. Note, however, that strictly speaking guides are not part of the layout structure, as they can be turned off and even removed without affecting the attached UI elements.

4.3.2 Position and Size

As discussed in Section 2.1, storing position and size information using absolute coordinates practically limits the applicability of a UI description to one screen size, which is clearly undesirable from a device independence point of view. On the other hand, most relative layout description methods do not allow visual editing in an intuitive way, which is a requirement for a graphical editor used by UI designers. Therefore, the default XY layout manager will store position and size information in terms of per mills (parts per thousand) for the top, left, bottom, and right side of the UI element, relative to the parent’s drawing area. For the flow and card layout managers, only index information needs to be stored for each UI element, as these layout managers determine the position of the various UI elements based on this index information³.

To allow for more layout managers to be added in the future, no exact format will be prescribed for the storage of position and size information. Each layout manager can use its own way of storing layout descriptions using the property elements described in the next section. The default XY layout manager will use four property elements with the names ‘top’, ‘left’, ‘bottom’, and ‘right’.

³ In addition, the flow layout manager may need to store information about its orientation and the horizontal and vertical spacing between the UI elements.

⁴ Due to limitations on XML IDs, it is not possible to use XML IDs and ID-refs for the identification of UI parts.

4.4 Styling

Each UI part can be uniquely identified by its name⁴ attribute. All styling information is stored in property elements. Similar to CSS [18] style attributes, property elements come in three variants:

• properties that define information for one UI part with a specified name:

<property nameref='name' name='propertyname'>value</property>

• properties that define information for all UI parts within a certain group (class):

<property class='class' name='propertyname'>value</property>

• properties that define information for a certain type of UI parts:

<property element='parttype' name='propertyname'>value</property>
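When several property elements target the same UI part, some precedence rule is needed. The text does not prescribe one, so the sketch below assumes a CSS-like specificity in which a property for a named part beats a class property, which in turn beats an element-type property. The types `UiPart`, `Property`, and `PropertyResolver` are hypothetical; `cssClass` stands in for the `class` attribute because `class` is a Java keyword.

```java
import java.util.List;
import java.util.Optional;

// A UI part as seen by the styling step (hypothetical model).
record UiPart(String name, String cssClass, String type) {}

// One property element; exactly one of nameref, cssClass, element is expected to be set.
record Property(String nameref, String cssClass, String element, String name, String value) {

    static Property forName(String nameref, String name, String value) {
        return new Property(nameref, null, null, name, value);
    }
    static Property forClass(String cssClass, String name, String value) {
        return new Property(null, cssClass, null, name, value);
    }
    static Property forElement(String element, String name, String value) {
        return new Property(null, null, element, name, value);
    }

    boolean appliesTo(UiPart part) {
        if (nameref != null) return nameref.equals(part.name());
        if (cssClass != null) return cssClass.equals(part.cssClass());
        return element != null && element.equals(part.type());
    }

    // Assumed CSS-like specificity: named part > class > element type.
    int specificity() {
        if (nameref != null) return 3;
        if (cssClass != null) return 2;
        return 1;
    }
}

class PropertyResolver {
    // The most specific matching property wins; on a tie, the first one in the list is kept.
    static Optional<String> resolve(UiPart part, String propertyName, List<Property> properties) {
        Property best = null;
        for (Property p : properties) {
            if (p.name().equals(propertyName) && p.appliesTo(part)
                    && (best == null || p.specificity() > best.specificity())) {
                best = p;
            }
        }
        return Optional.ofNullable(best).map(Property::value);
    }
}
```

Under this assumption a general value for all buttons can be refined per class and, where needed, per individual UI part, without touching the layout structure.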

Using these property elements, it is possible to store values for all kinds of styling attributes: the predefined attributes shown in Figure 4.1, but also the additional attributes defined in the widget descriptions. Note that in order to maximize the reusability of structural layout information, the references to a (often platform-dependent) widget are also stored as property values and not in the widget tree.

An alternative would have been to couple the UI parts and their attributes more strongly, but this would make the UI part definitions less reusable. By using separate property elements, it is easier to reuse some attribute values and to override others.

The DTD for multi-level stylesheets can be found in Appendix G.

4.5 Device Characteristics Descriptions

Although the list of target devices must be extensible, the format in which device capability information is described will be fixed to keep the system as simple as possible. This means that a fixed set of target device capabilities will be described in a fixed format. Although a number of device capability description formats exist, GLASS will use its own format because of the close link between the device capabilities description and the multi-level stylesheet structure.

Below is a list of possible target device characteristics for devices within the target domain of GLASS. This target domain consists of devices that are able to present a graphical user interface to the user and that allow the user to interact with the system using this graphical user interface. This list is (partially) based on [37] and [38].

• Device group (PC, PDA, TV, mobile phone, remote control, . . . ). A problem is that more and more devices become available that belong to multiple groups. Consider for example a mobile phone with built-in camera and PDA functionality.

• Device name (i.e. a device specific type number, for example P-900 or Brilliance 107P)

• Screen (color depth, orientation, aspect ratio, resolution)

⁴ (continued) When this becomes a requirement at some point in the future, a separate declaration section listing all used UI parts must be added to the MLSS. However, to keep the current MLSS data format as simple as possible, it has no such declaration section.


• Input types (remote control, touch, mouse, pc-keyboard, phone-keyboard, . . . )

• Processor

• Memory

• Graphical capabilities (hardware acceleration, video blending, image processing, vector graphics, 3D support)

• Storage

• Network support

• Multi-media support

• Plug-in support

• Operating System

• UI Toolkit

Since the widget database with its widget descriptions provides a way to abstract from particular UI toolkit implementations in the editor, the only device capabilities that actually influence the editor are the screen resolution (and thus also the orientation and the aspect ratio) and the color depth. The other capabilities don’t directly influence the editor, but can be used to create clusters of devices with similar capabilities.

For the sake of simplicity, the first version of GLASS will use only a subset of the above device capabilities:

1. color-depth (in bits)

2. screen-orientation (portrait or landscape)

3. min-screenwidth (minimal screen width in pixels)

4. min-screenheight (minimal screen height in pixels)

5. max-screenwidth (maximal screen width in pixels)

6. max-screenheight (maximal screen height in pixels)

7. deviceimage (image of the device and/or the non-usable part of the screen)

Minimum and maximum values are stored for the screen size, as there are devices that support multiple resolutions. The first six characteristics are used to create the node structure of the multi-level stylesheet; the last characteristic is only used within the editor to show an image of the device and/or the non-usable part of the screen.
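An in-memory form of the first six characteristics might look like the following Java record. The record name, the camel-case field names, and the `supportsResolution` check are assumptions for illustration; they do not reproduce the DTD in Appendix H.

```java
// Hypothetical in-memory form of the first six device characteristics.
record DeviceCapabilities(int colorDepth, String screenOrientation,
                          int minScreenWidth, int minScreenHeight,
                          int maxScreenWidth, int maxScreenHeight) {

    DeviceCapabilities {
        if (minScreenWidth > maxScreenWidth || minScreenHeight > maxScreenHeight) {
            throw new IllegalArgumentException("minimum screen size exceeds maximum");
        }
    }

    // True when a UI designed for the given resolution fits the device's supported range.
    boolean supportsResolution(int width, int height) {
        return width >= minScreenWidth && width <= maxScreenWidth
                && height >= minScreenHeight && height <= maxScreenHeight;
    }
}
```

For example, a hypothetical landscape device that supports resolutions from 640x480 up to 800x600 accepts a 700x500 layout but rejects one designed for 1024x768.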

The DTD for the device characteristics descriptions and a sample device description can be found in Appendix H.

Chapter 5

Design and Implementation of the

Layout and Styling Editor

This chapter describes the layout and styling editor created during the GLASS project. This editor has been created as a plug-in for the Eclipse [12] platform, as this was a requirement.

5.1 Design Considerations

• Designers do not want to be bothered with a hierarchical data structure and with thinking about the implications of placing certain information at a certain level in this data structure. On the other hand, software engineers want full control over the use and reuse of layout and styling properties. Therefore, the editor will provide an option for low-level editing of the MLSS.

• It is impossible to offer a true WYSIWYG editing environment to create UIs for more than one target device at once, as differences in target device characteristics may cause the UIs to look different. Although it is possible to define ‘meta’ devices that correspond to nodes higher in the MLSS hierarchy, this introduces a great risk. Editing the UI for such a ‘meta’ device feels WYSIWYG, but probably no ‘real’, device-specific UI will look exactly like the UI you created!

• There should be no annoying ‘pseudo-intelligent’ features. Although the goal of MLSS is to reuse as much layout and styling information as possible between the UI definitions for different target devices, reuse of layout and styling information should not lead to unintuitive or unpredictable behavior and should leave the user in control.

• Changing a layout or styling property for one target device should not change that property for another target device whose UI has already been edited. This implies that modifications to the UI should (also) be stored at the device-specific level, so that they are not overruled by modifications to the UIs of other target devices.

• In order to enable the reuse of UI information, especially when the MLSS is only edited at the device-specific level, an algorithm is required that factors out commonalities between the UI definitions of the different target devices and lifts that data to higher levels in the MLSS. More information about the design of this algorithm can be found in Section 5.9.
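The considerations above imply two basic operations on the MLSS: resolving a property for a device by looking at the device-specific node first and then at its ancestors, and lifting a value that all children share one level up. The Java sketch below illustrates both under simplifying assumptions: `StyleNode`, `resolve`, and `liftCommon` are hypothetical names, properties are plain string pairs, and lifting only looks at one level at a time. It is not the updating algorithm of Section 5.9, only an illustration of why device-level edits are never overruled and why lifting does not change what a device sees.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical MLSS node: the root holds information shared by all devices,
 * the leaves hold device-specific information.
 */
class StyleNode {
    final String name;
    final StyleNode parent;
    final List<StyleNode> children = new ArrayList<>();
    final Map<String, String> properties = new HashMap<>();

    StyleNode(String name, StyleNode parent) {
        this.name = name;
        this.parent = parent;
        if (parent != null) {
            parent.children.add(this);
        }
    }

    /** The most specific value wins: look at this node first, then walk up to the root. */
    String resolve(String key) {
        for (StyleNode n = this; n != null; n = n.parent) {
            String value = n.properties.get(key);
            if (value != null) {
                return value;
            }
        }
        return null; // not defined anywhere on the path to the root
    }

    /**
     * If every child defines the same value for key, store it here once and
     * remove it from the children. Resolved values below this node stay the same.
     */
    boolean liftCommon(String key) {
        if (children.isEmpty()) {
            return false;
        }
        String common = children.get(0).properties.get(key);
        if (common == null) {
            return false;
        }
        for (StyleNode child : children) {
            if (!common.equals(child.properties.get(key))) {
                return false;
            }
        }
        properties.put(key, common);
        for (StyleNode child : children) {
            child.properties.remove(key);
        }
        return true;
    }
}
```

Because `resolve` always checks the device node first, a value written at device level shadows anything lifted into an ancestor, which matches the requirement that edits for one device do not overrule another device's UI.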

5.2 Graphical Editing Framework

The Graphical Editing Framework (GEF) [8] [9] [10] [11] for the Eclipse platform [12] is a framework that allows developers to create rich graphical editors within the Eclipse platform. The framework provides a lot of built-in functionality, such as selection and resizing support, undo/redo support, zooming support, and alignment support. Using GEF as the basis for GLASS saves a lot of development time compared to building all the low-level editing features from scratch. An overview of some of the core concepts of GEF can be found in Appendix C.

5.2.1 Risks

Using a relatively new, open source framework entails some risks:

Lack of documentation

Apart from some short articles and the JavaDoc, there is little (up-to-date) documentation about GEF. This results in a rather steep learning curve for this large and complex framework.

Of great help are the example applications delivered with the framework and the GEF newsgroup, in which the authors of the framework actively answer users’ questions. This makes the newsgroup a very useful source of information on GEF.

Stability

For an open source framework, stability is not guaranteed. However, GEF has been around for more than three years and is used in a number of commercial¹ and non-commercial projects. Furthermore, as GLASS is part of a research project, stability is not a primary concern.

Licensing issues

Some open source licenses, like the GPL [23], require software that uses software under that license to be released under the same license. GEF and Eclipse are licensed under the EPL [24], which permits creating commercial and/or closed-source software and does not require you to use the EPL for your own software.

¹ For example, the Omondo UML tool, available at http://www.omondo.com, is built using GEF.


5.3 Analysis

In order to get an overview of the system to be created, an analysis model has been created. The first version of this analysis model was a rather straightforward mapping of the requirements onto a number of classes with some methods, attributes, and connections between them. Subsequently, this model has been refined a number of times. During these refinements, a number of unnecessary connections (i.e. connections between objects that already have an indirect connection) have been removed and some classes were merged.

At a high level, the analysis model consists of four parts: the editor, the input models (the task model and the abstract UI model), the multi-level stylesheet, and some external data sources (the target device capabilities, the widget database, and the repository). A simplified version of the analysis model is shown in Figure 5.1; the complete analysis model can be found in Appendix B.

Figure 5.1: Simplified Analysis Model


5.4 Plug-in Structure

Eclipse contains two kinds of visual components: views and editors. A view is typically used to navigate a hierarchy of information or to display properties for the active editor. Only one instance of a view can be open at a time. An editor is typically used to edit or browse a document or input object. Multiple editor instances can be open at the same time.

To make it possible to open multiple editors in the plug-in, each with its own model, state information is stored in each editor instance instead of in a separate object in the plug-in. Furthermore, the List of Target Devices and the Task List have been combined into one view because of their close relation. This leads to a plug-in architecture with three components:

GLASSEditor: a GEF-based editor containing a drawing canvas and state information. The drawing canvas represents the screen of the selected target device. The state information includes the current layout and styling description, the currently selected target device, the current CAP node (i.e. the CAP node in the layout and styling description that corresponds to the current target device), an input model for the task list, and the screen currently being edited in the drawing canvas. Furthermore, the state includes the internal editor state (e.g. the undo stack, the zoom manager, etc.). More information about the GLASSEditor can be found in Section 5.5.

TaskListView: contains the List of Target Devices and the Task List. The List of Target Devices shows all target devices defined in the abstract UI model for the layout and styling description that is being edited in the active editor. When a device is selected in this list, the editor state is updated. When another editor is activated, the content of this list is updated. The Task List shows all tasks of the task model and all associated widgets from the abstract UI model for the layout and styling description that is being edited in the active editor. When another editor is activated, the content of this list is updated as well. The widgets of the Task List can be dragged into the drawing canvas of the GLASSEditor.

ScreensView: shows a list of all screens defined in the layout and styling description for the currently selected target device. When another editor or target device is selected, the content of this list is updated. The ScreensView also contains options to create a new screen within the UI of the currently selected target device and to remove the currently selected screen from that UI.

Furthermore, the Properties View provided by the Eclipse platform is used to offer an easy way to edit the properties of the object currently selected in the editor. Figure 5.12 on page 41 contains a screenshot of the editor and the views.
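Keeping all state in the editor instances means that the views own no data themselves: they simply re-read the state of whichever editor is active. The Java sketch below illustrates that idea with hypothetical classes (`EditorState`, `EditorAwareView`, `Workbench`, `ScreensViewSketch`) standing in for the Eclipse workbench. In a real plug-in the activation events would come from the workbench's part-listener mechanism rather than from a hand-written `Workbench` class, so treat this only as a model of the data flow.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical per-editor state; every editor instance owns its own copy. */
class EditorState {
    final String descriptionName;
    String selectedDevice;
    String selectedScreen;

    EditorState(String descriptionName) {
        this.descriptionName = descriptionName;
    }
}

/** Hypothetical view contract: a view renders whatever editor is currently active. */
interface EditorAwareView {
    void editorActivated(EditorState state);
}

/** Hypothetical stand-in for the workbench: tracks the active editor and notifies views. */
class Workbench {
    private final List<EditorAwareView> views = new ArrayList<>();
    private EditorState active;

    void addView(EditorAwareView view) {
        views.add(view);
    }

    void activate(EditorState editor) {
        active = editor;
        for (EditorAwareView view : views) {
            view.editorActivated(editor);
        }
    }

    EditorState activeEditor() {
        return active;
    }
}

/** Hypothetical ScreensView: shows the screens of the active editor's selected device. */
class ScreensViewSketch implements EditorAwareView {
    String shownFor;

    @Override
    public void editorActivated(EditorState state) {
        // A real view would reload its list of screens here.
        shownFor = state.descriptionName + "/" + state.selectedDevice;
    }
}
```

Because each `EditorState` keeps its own selected device, switching back to an earlier editor restores the view content without any extra bookkeeping in the views.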

5.5 GLASSEditor

Being a GEF-based editor, the GLASSEditor has a structure that is largely determined by GEF. The GLASSEditor is built using an MVC (Model-View-Controller) architecture [15]. This means that there is a separation between the data model (model), the visual representation of the data model on the screen (view), and the control logic in between (controller). In GEF, there is no direct connection between the model and the view.

5.5.1 Model

The layout and styling model used in the editor is quite similar to the model shown in Figure 4.1. The only differences are that a common super-class MLSSElement has been added and that some abstract classes, such as ‘UIElement’, have been added to factor out common attributes. The MLSSElement super-class provides each element with a name attribute, an update notification mechanism, and methods to get and set property values. These property values can be values of predefined properties or of properties defined in widget descriptions. The update notification mechanism is required by GEF and provides a way for interested objects to register themselves as property change listeners on a model element in order to be notified of model modifications. A graphical overview of the layout and styling model used in the editor is shown in Figure 5.2.
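A common way to give every model element a name, generic property values, and change notification in a Java/GEF setting is to build on `java.beans.PropertyChangeSupport`. The sketch below is an assumption about how such a super-class could look, not the thesis's actual MLSSElement: the class names `MLSSElementSketch` and `AreaSketch` are hypothetical, and property keys are plain strings so that widget-defined properties can sit next to predefined ones.

```java
import java.beans.PropertyChangeListener;
import java.beans.PropertyChangeSupport;
import java.util.HashMap;
import java.util.Map;

/** Hypothetical common super-class: name, generic property values, change notification. */
abstract class MLSSElementSketch {
    private final PropertyChangeSupport listeners = new PropertyChangeSupport(this);
    private final Map<String, Object> values = new HashMap<>();
    private String name;

    public void addPropertyChangeListener(PropertyChangeListener l) {
        listeners.addPropertyChangeListener(l);
    }

    public void removePropertyChangeListener(PropertyChangeListener l) {
        listeners.removePropertyChangeListener(l);
    }

    public String getName() {
        return name;
    }

    public void setName(String newName) {
        String old = name;
        name = newName;
        listeners.firePropertyChange("name", old, newName);
    }

    public Object getPropertyValue(String key) {
        return values.get(key);
    }

    public void setPropertyValue(String key, Object value) {
        Object old = values.put(key, value);
        // PropertyChangeSupport fires no event if old and new are equal and non-null.
        listeners.firePropertyChange(key, old, value);
    }
}

/** Hypothetical concrete element, e.g. an area on a screen. */
class AreaSketch extends MLSSElementSketch {
}
```

Controllers that register as listeners are then told which property changed and can refresh only what is affected.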

More information about creating a new layout and styling model for an existing abstract UI model can be found in Section 5.7. More information about editing a layout and styling model can be found in Section 5.8. As described in Section 5.1, an updating algorithm is required to update an MLSS after the layout and styling description for a certain target device has been changed. Updating an MLSS is described in Section 5.9.

In addition to the layout and styling model, the editor also contains a model that serves as input for the List of Target Devices and for the Task List. This model is created by combining the task model and the abstract UI model. Its structure is chosen in such a way that it contains all devices that serve as input for the List of Target Devices, and that the child objects of a device object form the input for the Task List when that particular device is selected as the active target device. The structure of this model is shown in Figure 5.3. The ‘description’ attribute of tasks, widgets, triggers, and navigation links contains a label that is used in the Task List. The text of this description is based on the name of the task, the task input(s), or the task output(s) in the task model.
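The key property of this model is that one tree feeds both lists: the top-level device nodes are the input of the List of Target Devices, and the children of the selected device are the input of the Task List. The Java sketch below shows that shape with hypothetical classes `TaskListNode` and `TaskListModel`; the exact classes and attributes of Figure 5.3 are not reproduced here, so the `Kind` values are only an assumption based on the element types named in the text.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical node of the combined task/abstract UI model behind the TaskListView. */
class TaskListNode {
    enum Kind { DEVICE, TASK, WIDGET, TRIGGER, NAVIGATION_LINK }

    final Kind kind;
    final String description; // label shown in the list, derived from the task model
    final List<TaskListNode> children = new ArrayList<>();

    TaskListNode(Kind kind, String description) {
        this.kind = kind;
        this.description = description;
    }

    /** Adds a child and returns this node, so trees can be built fluently. */
    TaskListNode add(TaskListNode child) {
        children.add(child);
        return this;
    }
}

/** Root of the model: one DEVICE node per target device. */
class TaskListModel {
    final List<TaskListNode> devices = new ArrayList<>();

    /** Input for the List of Target Devices. */
    List<TaskListNode> targetDevices() {
        return devices;
    }

    /** Input for the Task List once a device is selected as the active target device. */
    List<TaskListNode> taskListInput(TaskListNode selectedDevice) {
        return selectedDevice.children;
    }
}
```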

5.5.2 Controller

The controller forms the heart of the editor. Its structure is largely determined by GEF. One root controller (RootEditPart in GEF terminology) is created for the editor, to which sub-controllers are added for all model elements. These sub-controllers form the bridge between the model elements and the graphical figures that represent them on the screen. These sub-controllers (EditParts in GEF terminology) perform the following tasks:

• Creating a view for a model element

• Creating commands to update the model

• Updating the view when the associated model element is changed. In order to know when the view has to be updated, the controller is registered as a property change listener on the associated model element, so that it receives a notification whenever that element changes.
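The three controller tasks listed above can be illustrated without GEF itself. The plain-Java sketch below uses hypothetical classes (`LabelModel`, `LabelFigure`, `EditCommand`, `LabelController`) that only mimic the roles of a GEF model element, figure, command, and EditPart; it is not GEF's API. Note that the model and the figure never reference each other; only the controller knows both.

```java
import java.beans.PropertyChangeEvent;
import java.beans.PropertyChangeListener;
import java.beans.PropertyChangeSupport;

/** Hypothetical model element with change notification. */
class LabelModel {
    final PropertyChangeSupport support = new PropertyChangeSupport(this);
    private String text = "";

    String getText() {
        return text;
    }

    void setText(String newText) {
        String old = text;
        text = newText;
        support.firePropertyChange("text", old, newText);
    }
}

/** Hypothetical view: a figure that only knows how to display a string. */
class LabelFigure {
    String displayed = "";
}

/** Hypothetical reversible command, playing the role of a GEF command. */
interface EditCommand {
    void execute();
    void undo();
}

/** Hypothetical controller playing the role of an EditPart. */
class LabelController implements PropertyChangeListener {
    private final LabelModel model;
    private final LabelFigure figure;

    LabelController(LabelModel model) {
        this.model = model;
        this.figure = createFigure();                  // task 1: create the view
        model.support.addPropertyChangeListener(this); // task 3: listen for model changes
        refreshVisuals();
    }

    private LabelFigure createFigure() {
        return new LabelFigure();
    }

    /** Task 2: create (but do not run) a command that updates the model. */
    EditCommand createRenameCommand(String newText) {
        final String oldText = model.getText();
        return new EditCommand() {
            public void execute() { model.setText(newText); }
            public void undo() { model.setText(oldText); }
        };
    }

    /** Task 3: called by the model; copy the new state into the view. */
    @Override
    public void propertyChange(PropertyChangeEvent evt) {
        refreshVisuals();
    }

    private void refreshVisuals() {
        figure.displayed = model.getText();
    }

    LabelFigure getFigure() {
        return figure;
    }
}
```

The controller never updates the figure directly when a command runs; it only reacts to the model's notification, so undo refreshes the view through the same path.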


Figure 5.2: Layout and Styling Model used in the editor


Figure 5.3: Model for the Tasklist View

In addition to these EditParts, the controller contains the state information² of the editor, a number of tools (e.g. the arrow selection tool, the marquee selection tool, and the area creation tool), and a number of actions (e.g. alignment actions, actions for changing the spacing between selected UI parts, and actions to enable layout aids such as a grid) that the user of the editor can use.
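Part of the edit-session state kept by the editor is the undo stack. GEF ships its own command stack; the sketch below is only a plain-Java illustration of the usual behaviour, with the hypothetical names `UndoableCommand` and `CommandStackSketch`, and is not GEF's implementation.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/** Hypothetical reversible edit operation. */
interface UndoableCommand {
    void execute();
    void undo();
}

/**
 * Minimal undo/redo stack. Executing a new command clears the redo history,
 * which is the usual behaviour of editor undo stacks.
 */
class CommandStackSketch {
    private final Deque<UndoableCommand> undoStack = new ArrayDeque<>();
    private final Deque<UndoableCommand> redoStack = new ArrayDeque<>();

    void execute(UndoableCommand command) {
        command.execute();
        undoStack.push(command);
        redoStack.clear();
    }

    boolean canUndo() {
        return !undoStack.isEmpty();
    }

    boolean canRedo() {
        return !redoStack.isEmpty();
    }

    /** Callers should check canUndo() first; pop() throws on an empty stack. */
    void undo() {
        UndoableCommand command = undoStack.pop();
        command.undo();
        redoStack.push(command);
    }

    /** Callers should check canRedo() first. */
    void redo() {
        UndoableCommand command = redoStack.pop();
        command.execute();
        undoStack.push(command);
    }
}
```

Since this stack only exists for the duration of an editing session, it belongs to the editor state rather than to the persisted layout and styling model.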

More information about the internal structure of GEF-based editors can be found in Appendix C. An overview of all packages created for the GLASS Layout And Styling System is included in Appendix D.

5.5.3 View

A number of graphical components have been created for rendering a WYSIWYG preview of the model on the screen. Most of these components are quite simple, but one is complex: the Widget Viewer, which is used to create WYSIWYG widget previews. The design and implementation of this Widget Viewer are described in the next section.

² This is edit-session specific information, such as the undo stack and the current zoom level, that does not have to be persisted and is therefore not part of the model.

5.6 Design and Implementation of the Widget Viewer

In order to make the widget previews in the editor as WYSIWYG as possible without the need for an actual widget implementation for every widget, widget previews are based on the widget descriptions from the widget database. These widget descriptions contain:

• A description of the ‘real’, platform-specific widget (e.g. UI toolkit, implementation reference, documentation reference, rendered and generated data types, etc.)

• A screenshot description, which contains the non-resizable areas, the content areas, and the subitem areas of the widget screenshot. A non-resizable area is a part of a widget screenshot that should not be resized when the widget is resized. A content area is a part of a widget screenshot where textual or graphical content can be placed. A subitem area is a part of a widget screenshot where subitems can be placed. A subitem can, for example, be an item in a list and is defined by another screenshot description.

• Resource attributes, which describe the content type and default values of the widget’s content areas.

• Styling attributes, which describe the available styling attributes for the widget. Note that not all styling attributes are interpreted and used for the WYSIWYG preview, as this would require semantic knowledge of all possible styling attributes.
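The text does not spell out at this point how a screenshot with non-resizable areas is stretched to a new widget size. One plausible rule, similar to the well-known ‘nine-slice’ scaling technique and assumed here rather than taken from the thesis, is applied once per axis: non-resizable segments keep their length and the resizable segments share the remaining space in proportion to their original length. `SegmentScaler.scale` is a hypothetical helper illustrating that rule.

```java
/**
 * Hypothetical helper: scales the segments of a widget screenshot along one axis.
 * Fixed (non-resizable) segments keep their original length; resizable segments
 * share the remaining space in proportion to their original length.
 */
final class SegmentScaler {
    private SegmentScaler() {
    }

    static int[] scale(int[] lengths, boolean[] fixed, int targetTotal) {
        int fixedSum = 0;
        int resizableSum = 0;
        int lastResizable = -1;
        for (int i = 0; i < lengths.length; i++) {
            if (fixed[i]) {
                fixedSum += lengths[i];
            } else {
                resizableSum += lengths[i];
                lastResizable = i;
            }
        }
        // Fixed parts never shrink, so the space left for resizable parts is at least 0.
        int available = Math.max(0, targetTotal - fixedSum);
        int[] result = new int[lengths.length];
        int assigned = 0;
        for (int i = 0; i < lengths.length; i++) {
            if (fixed[i]) {
                result[i] = lengths[i];
            } else if (i == lastResizable) {
                result[i] = available - assigned; // absorbs integer rounding
            } else {
                result[i] = resizableSum == 0 ? 0 : lengths[i] * available / resizableSum;
                assigned += result[i];
            }
        }
        return result;
    }
}
```

For a button screenshot with 4-pixel borders on both sides and a 40-pixel middle, `scale(new int[]{4, 40, 4}, new boolean[]{true, false, true}, 100)` keeps both borders at 4 pixels and stretches the middle to 92 pixels; the same call on the vertical segments gives the new height layout.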

Here is an example of such a widget description:

<?xml version="1.0" encoding="UTF-8"?>
<widget name="Swing Button">
  <implref>javax.swing.JButton</implref>
  <implrefpackage>javax.swing</implrefpackage>
  <uitoolkit>Java Swing</uitoolkit>
  <uitoolkitlibref osdependency="none">rt.jar</uitoolkitlibref>
  <targetlanguage minversion="1.2" maxversion="unspecified">Java</targetlanguage>
  <docref>http://java.sun.com/j2se/1.4.2/docs/api/javax/swing/JButton.html</docref>
  <supportslookfeel>name of look and feel</supportslookfeel>
  <inputmodality>mouse/touch</inputmodality>
  <inputmodality>keyboard</inputmodality>