Source: ssli.ee.washington.edu/classes/ee505/notes/intro_9.25.pdf

-

EE 505 – Introduction

What do we mean by “random” with respect to variables and signals?

• unpredictable from the perspective of the observer

• information bearing signal (e.g. speech)

• phenomena that are not under our control (noise, interference, etc.)

• measurement uncertainty

• variability of the source (e.g. different speakers)

• imperfect models

Applications with random signals:

• Signal processing (noise removal, prediction, identification)

• Communications (compression, signal design, receiver design)

• Biomedical diagnostics, neuroengineering

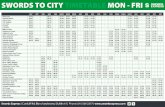

• Transportation planning

• Electric power system integration of renewables

Examples of random signals:

• coin flips

• bus arrival times

• traffic on a highway

• web page hits per hour

• speech, image, video, text


-

Probability Basics – Review

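Probability space: $(\Omega, \mathcal{F}, P)$

$$P(\Omega) = 1$$

$$P(A) \ge 0 \quad \forall A \in \mathcal{F}$$

$$P\left(\bigcup_{i=1}^{\infty} A_i\right) = \sum_{i=1}^{\infty} P(A_i) \quad \text{if } A_i \cap A_j = \emptyset \ \ \forall\, i \ne j$$

$$P(\emptyset) = 0, \qquad P(A) = 1 - P(\bar{A})$$

$$P(A \cup B) = P(A) + P(B) - P(A \cap B)$$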
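The properties above can be checked on a small finite sample space. The following is a minimal sketch, assuming two fair coin flips with equally likely outcomes; the helper `prob` and the events `A` and `B` are illustrative names, not part of the notes.

```python
from fractions import Fraction
from itertools import product

# Sample space for two coin flips; fairness (equally likely outcomes) is an assumption.
omega = set(product("HT", repeat=2))

def prob(event):
    """Probability of an event (a subset of omega) under equally likely outcomes."""
    return Fraction(len(event & omega), len(omega))

A = {w for w in omega if w[0] == "H"}   # first flip is heads
B = {w for w in omega if w[1] == "H"}   # second flip is heads

p_omega = prob(omega)                               # P(Omega)
p_empty = prob(set())                               # P(empty set)
p_union = prob(A | B)                               # P(A u B) computed directly
p_incl_excl = prob(A) + prob(B) - prob(A & B)       # P(A) + P(B) - P(A n B)
p_complement = 1 - prob(omega - A)                  # 1 - P(complement of A)

print(p_omega, p_empty, p_union, p_incl_excl, p_complement)
```

Exact fractions are used so that the identities hold with equality rather than up to floating-point error.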


-

Example 1: Two Coin flips

Example 2: Roulette wheel


-

Conditional Probability

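$$P(A \mid B) = \frac{P(A \cap B)}{P(B)}$$

defined when $P(B) > 0$ (in particular, $B \ne \emptyset$).

Definition: The events $\{A_i\}$ form a partition if $\bigcup_i A_i = \Omega$ and $A_i \cap A_j = \emptyset$ for all $i \ne j$ (non-overlapping). For such a partition you have: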

Total probability:

$$P(B) = P(B \cap \Omega) = \sum_j P(B \cap A_j) = \sum_j P(B \mid A_j)\, P(A_j)$$

Bayes’ Rule:

$$P(A_i \mid B) = \frac{P(A_i \cap B)}{P(B)} = \frac{P(B \mid A_i)\, P(A_i)}{\sum_j P(B \mid A_j)\, P(A_j)}$$
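As an illustration of total probability and Bayes' rule, here is a short sketch for a two-event partition. The scenario (a transmitted bit observed through a noisy channel) and all numbers are hypothetical, chosen only to make the arithmetic easy to follow.

```python
from fractions import Fraction

# Hypothetical partition {A0, A1}: "bit 0 was sent" / "bit 1 was sent".
prior = {"A0": Fraction(3, 4), "A1": Fraction(1, 4)}
# Hypothetical likelihoods P(B | A_j), where B = "a 1 is received".
likelihood = {"A0": Fraction(1, 10), "A1": Fraction(9, 10)}

# Total probability: P(B) = sum_j P(B | A_j) P(A_j)
p_b = sum(likelihood[a] * prior[a] for a in prior)

# Bayes' rule: P(A_i | B) = P(B | A_i) P(A_i) / P(B)
posterior = {a: likelihood[a] * prior[a] / p_b for a in prior}

print(p_b)
print(posterior)
```

With these numbers, P(B) = 3/40 + 9/40 = 3/10, so observing a 1 raises the probability that a 1 was sent from 1/4 to 3/4.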

Independence of Events

$$P(A \cap B) = P(A)\, P(B)$$
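The product condition can be tested directly on a finite sample space. This sketch assumes one roll of a fair six-sided die; the events `A`, `B`, and `C` are illustrative choices. It also shows that disjoint events with nonzero probability are not independent.

```python
from fractions import Fraction

omega = set(range(1, 7))  # one roll of a six-sided die, assumed fair

def prob(event):
    """Probability of an event under equally likely outcomes."""
    return Fraction(len(event & omega), len(omega))

A = {2, 4, 6}        # roll is even
B = {1, 2, 3, 4}     # roll is at most 4
C = {1, 3, 5}        # roll is odd (disjoint from A)

# Independence test: P(A n B) == P(A) P(B)
a_b_independent = prob(A & B) == prob(A) * prob(B)
a_c_independent = prob(A & C) == prob(A) * prob(C)

print(a_b_independent, a_c_independent)
```

Here P(A ∩ B) = 1/3 = (1/2)(2/3), so A and B are independent, while P(A ∩ C) = 0 but P(A)P(C) = 1/4.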


-

Random Variable: $X : \Omega \to \mathbb{R}$

A random variable maps the outcome of a random experiment to a real number; equivalently, it is a measurement taken on the outcome of the random experiment.

Note that there can be more than one measurement that you might take, depending on the experiment.
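To make the "more than one measurement" point concrete, the sketch below defines two different random variables on the same experiment and derives the pmf of each. The experiment (two fair coin flips) and the function names `num_heads` and `flips_match` are assumptions made for illustration.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

# Outcomes of two coin flips, assumed equally likely.
omega = list(product("HT", repeat=2))

def num_heads(outcome):
    """One measurement: how many heads occurred."""
    return outcome.count("H")

def flips_match(outcome):
    """A different measurement on the same outcome: 1 if both flips agree, else 0."""
    return 1 if outcome[0] == outcome[1] else 0

def pmf(rv):
    """pmf induced by a random variable rv (a function on omega)."""
    counts = Counter(rv(w) for w in omega)
    return {x: Fraction(c, len(omega)) for x, c in sorted(counts.items())}

pmf_heads = pmf(num_heads)
pmf_match = pmf(flips_match)
print(pmf_heads)
print(pmf_match)
```

Each random variable is just a function on the sample space; the probabilities of its values are inherited from the probabilities of the underlying outcomes.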


-

Mapping a random experiment outcome into a real number allows us to characterize the random experiment with

• Probability distributions: probability mass function (pmf) for discrete RVs, probability density function (pdf) for continuous RVs

• Cumulative distribution functions: $F(x) = \Pr(X \le x)$

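Discrete variables: pmf $p_X(x)$, $x \in \mathbb{Z}$

$$p_X(x) \ge 0, \qquad \sum_x p_X(x) = 1$$

$$P_X(A) = \sum_{x \in A} p_X(x)$$

$$F_X(x) = \sum_{k=-\infty}^{x} p_X(k)$$

Continuous variables: pdf $f_X(x)$, $x \in \mathbb{R}$

$$f_X(x) \ge 0, \qquad \int_{-\infty}^{\infty} f_X(v)\, dv = 1$$

$$P_X(A) = \int_A f_X(v)\, dv$$

$$F_X(x) = \int_{-\infty}^{x} f_X(v)\, dv$$

Mixed distributions:

$$f_X(x) = \alpha f(x) + (1 - \alpha) \sum_i p_i\, \delta(x - x_i)$$

where $f(x)$ is a continuous pdf, $0 \le \alpha \le 1$, and the weights $p_i$ sum to 1.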
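For the discrete case, the relations between the pmf, event probabilities, and the CDF can be sketched in a few lines. The fair six-sided die used here is an assumed example, and `prob_of` is an illustrative helper name.

```python
from fractions import Fraction
from itertools import accumulate

# pmf of a six-sided die, assumed fair: p_X(x) = 1/6 for x = 1..6
support = list(range(1, 7))
pmf = {x: Fraction(1, 6) for x in support}

# CDF: F_X(x) = sum of p_X(k) over k <= x, built as a running sum over the support
cdf = dict(zip(support, accumulate(pmf[x] for x in support)))

def prob_of(event):
    """P_X(A) = sum of p_X(x) over x in A."""
    return sum(pmf[x] for x in event if x in pmf)

total = sum(pmf.values())     # normalization check
p_even = prob_of({2, 4, 6})   # probability of an event A

print(total, p_even, cdf)
```

The CDF is nondecreasing and reaches 1 at the largest support point, which is the discrete counterpart of the integral condition for a pdf.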


-

Expectations: A way to partially characterize random experiments

• Expectation

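$$E(X) = \sum_{k=-\infty}^{\infty} k\, p_X(k) \quad \text{(discrete } X\text{)}$$

$$E(X) = \int_{-\infty}^{\infty} v\, f_X(v)\, dv \quad \text{(continuous } X\text{)}$$

• General Expectations

$$E(g(X)) = \sum_{k=-\infty}^{\infty} g(k)\, p_X(k) \quad \text{(discrete } X\text{)}$$

$$E(g(X)) = \int_{-\infty}^{\infty} g(v)\, f_X(v)\, dv \quad \text{(continuous } X\text{)}$$

Important special cases: moments $E(X^k)$

• Mean = 1st moment $E(X)$

• Variance = 2nd central moment $E[(X - E(X))^2] = E(X^2) - (E(X))^2$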
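The two expressions for the variance can be compared numerically. This is a minimal sketch for a discrete X, again assuming a fair six-sided die; `expect` is an illustrative helper that implements the general expectation E(g(X)).

```python
from fractions import Fraction

pmf = {x: Fraction(1, 6) for x in range(1, 7)}  # fair die, an assumed example

def expect(g):
    """E[g(X)] = sum_k g(k) p_X(k) for a discrete X."""
    return sum(g(k) * p for k, p in pmf.items())

mean = expect(lambda k: k)                        # 1st moment
second_moment = expect(lambda k: k**2)            # 2nd moment
var_central = expect(lambda k: (k - mean) ** 2)   # E[(X - E(X))^2]
var_shortcut = second_moment - mean**2            # E(X^2) - (E(X))^2

print(mean, second_moment, var_central, var_shortcut)
```

Both variance computations give 35/12 for this pmf, with mean 7/2 and second moment 91/6.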


-

Important Random Variables

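Discrete-Valued X

| Name | Range | Parameters | pmf $p(x)$ | Mean | Variance | $G_X(z) = E[z^X]$ |
|---|---|---|---|---|---|---|
| Bernoulli | $\{0, 1\}$ | $0 \le p \le 1$ | $p^x (1-p)^{1-x}$ | $p$ | $p(1-p)$ | $1 - p + pz$ |
| Binomial | $\{0, \ldots, n\}$ | $0 \le p \le 1$ | $\binom{n}{x} p^x (1-p)^{n-x}$ | $np$ | $np(1-p)$ | $(1 - p + pz)^n$ |
| Geometric | $\{0, 1, \ldots\}$ | $0 < p < 1$ | $(1-p)^x p$ | $\frac{1-p}{p}$ | $\frac{1-p}{p^2}$ | $\frac{p}{1 - (1-p)z}$ |
| Poisson | $\{0, 1, \ldots\}$ | $\lambda > 0$ | $\frac{\lambda^x e^{-\lambda}}{x!}$ | $\lambda$ | $\lambda$ | $e^{\lambda(z-1)}$ |

Continuous-Valued X

| Name | Range | Parameters | pdf $f(x)$ | Mean | Variance | $\Psi_X(w) = E[e^{jwX}]$ |
|---|---|---|---|---|---|---|
| Uniform | $[a, b]$ | $a < b$ | $\frac{1}{b-a}$ | $\frac{a+b}{2}$ | $\frac{(b-a)^2}{12}$ | $\frac{e^{jwb} - e^{jwa}}{jw(b-a)}$ |
| Gaussian | $(-\infty, \infty)$ | $\mu, \sigma^2$ | $\frac{1}{\sqrt{2\pi}\,\sigma} e^{-(x-\mu)^2 / 2\sigma^2}$ | $\mu$ | $\sigma^2$ | $e^{jw\mu - \sigma^2 w^2 / 2}$ |
| Exponential | $[0, \infty)$ | $\alpha > 0$ | $\alpha e^{-\alpha x}$ | $\frac{1}{\alpha}$ | $\frac{1}{\alpha^2}$ | $\frac{\alpha}{\alpha - jw}$ |
| Erlang | $[0, \infty)$ | $\alpha > 0$, integer $n > 0$ | $\frac{\alpha^n x^{n-1} e^{-\alpha x}}{(n-1)!}$ | $\frac{n}{\alpha}$ | $\frac{n}{\alpha^2}$ | $\frac{\alpha^n}{(\alpha - jw)^n}$ |
| Gamma | $[0, \infty)$ | $\alpha, r > 0$ | $\frac{\alpha (\alpha x)^{r-1} e^{-\alpha x}}{\Gamma(r)}$ | $\frac{r}{\alpha}$ | $\frac{r}{\alpha^2}$ | $\left(\frac{\alpha}{\alpha - jw}\right)^r$ |
| Laplacian | $(-\infty, \infty)$ | $\alpha > 0$ | $\frac{\alpha}{2} e^{-\alpha \lvert x \rvert}$ | $0$ | $\frac{2}{\alpha^2}$ | $\frac{\alpha^2}{w^2 + \alpha^2}$ |
| Rayleigh | $[0, \infty)$ | $\alpha^2$ | $\frac{x}{\alpha^2} e^{-x^2 / 2\alpha^2}$ | $\alpha \sqrt{\pi/2}$ | $\left(2 - \frac{\pi}{2}\right) \alpha^2$ | not incl. |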
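Entries in the discrete table can be sanity-checked by direct summation. The sketch below does this for the geometric and Poisson rows; the parameter values `p = 0.25` and `lam = 2.0`, the truncation points, and the evaluation point `z = 0.5` are arbitrary choices made for illustration.

```python
import math

def moments(pmf, support):
    """Return (mean, variance) of a discrete pmf by direct summation."""
    mean = sum(x * pmf(x) for x in support)
    second = sum(x * x * pmf(x) for x in support)
    return mean, second - mean**2

p = 0.25      # hypothetical geometric parameter
lam = 2.0     # hypothetical Poisson parameter

def geometric_pmf(x):
    """Geometric pmf on {0, 1, ...}: (1 - p)^x p."""
    return (1 - p) ** x * p

def poisson_pmf(x):
    """Poisson pmf on {0, 1, ...}: lam^x e^(-lam) / x!."""
    return lam**x * math.exp(-lam) / math.factorial(x)

# Truncate the infinite supports where the remaining tail mass is negligible.
geo_mean, geo_var = moments(geometric_pmf, range(2000))
poi_mean, poi_var = moments(poisson_pmf, range(100))

# Probability generating function G_X(z) = E[z^X], evaluated at one point.
z = 0.5
geo_pgf = sum(z**x * geometric_pmf(x) for x in range(2000))

print(geo_mean, geo_var, poi_mean, poi_var, geo_pgf)
```

Up to floating-point error, the results should agree with the table: mean (1 - p)/p and variance (1 - p)/p² for the geometric, mean and variance both λ for the Poisson, and G_X(z) = p / (1 - (1 - p)z).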


-

Which distribution would you use?

A modem transmits over an error-prone channel, repeating every “0” or “1” bit transmission five times. The channel changes an input bit to its complement with probability p = 0.1, independently for each bit. A modem receiver takes a majority vote of the five received bits to estimate the signal.

Heat must be removed from a system according to how fast it is generated. Suppose the system has eight components, each of which is active with probability 0.25, independent of the others. The quantity of interest is the number of components that are active.

A kid is sitting on the corner watching cars go by, waiting to see a Tesla. The probability that a driver in Seattle owns a Tesla is p = 0.005. X is the number of cars that he sees before a Tesla drives by.
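One possible reading of the three scenarios, offered as a sketch rather than the intended answer: the first two count successes in a fixed number of independent trials (binomial), and the third counts failures before the first success (geometric on {0, 1, ...}). The modeling choices in the comments, including independence between passing cars, are assumptions.

```python
from math import comb

def binomial_pmf(x, n, p):
    """Binomial pmf: C(n, x) p^x (1 - p)^(n - x)."""
    return comb(n, x) * p**x * (1 - p) ** (n - x)

# Modem: number of flipped bits out of 5 repeats, modeled as Binomial(5, 0.1).
# The majority vote is wrong when 3 or more of the 5 bits are flipped.
p_vote_error = sum(binomial_pmf(x, 5, 0.1) for x in range(3, 6))

# Heat removal: number of active components, modeled as Binomial(8, 0.25).
active_pmf = [binomial_pmf(x, 8, 0.25) for x in range(9)]
mean_active = sum(x * q for x, q in enumerate(active_pmf))

# Tesla: cars seen before the first Tesla, modeled as geometric on {0, 1, ...}
# with p = 0.005 (assumes cars are independent); the mean is (1 - p) / p.
p_tesla = 0.005
mean_cars_before_tesla = (1 - p_tesla) / p_tesla

print(p_vote_error, mean_active, mean_cars_before_tesla)
```

Under these assumptions the majority vote fails with probability 0.00856, two components are active on average, and the kid sees 199 cars on average before the first Tesla.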


-

EE 505 – Class Assignment 1

1. Two transmitters send messages through bursts of radio signals to an antenna. During each time slot each transmitter sends a message with probability 1/3. Simultaneous transmissions result in loss of the messages. Let X be the number of time slots until the first message gets through. What type of random variable would characterize this problem? Specify the sample space and the parameter(s).

2. A data center has 10,000 disk drives. A disk drive fails on a given day with probability $10^{-3}$. What distribution would you use to determine the number of spares to keep on hand? What other information would you need to solve the problem?


-

Another useful case: the Zipf RV

$$S_X = \{1, 2, \ldots, L\}, \qquad P(k) \propto \frac{1}{k}$$
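Since the Zipf pmf is specified only up to proportionality, the normalizing constant is the sum of 1/k over the range, i.e. the L-th harmonic number. A minimal sketch, with `L = 4` chosen arbitrarily for illustration:

```python
from fractions import Fraction

def zipf_pmf(L):
    """pmf on {1, ..., L} with P(k) proportional to 1/k."""
    weights = {k: Fraction(1, k) for k in range(1, L + 1)}
    norm = sum(weights.values())          # the L-th harmonic number
    return {k: w / norm for k, w in weights.items()}

pmf = zipf_pmf(4)   # L = 4 is an arbitrary illustrative choice
print(pmf)
```

For L = 4 the harmonic number is 25/12, giving probabilities 12/25, 6/25, 4/25, and 3/25 for k = 1, 2, 3, 4.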
