Ed Snelson. Counterfactual Analysis


Counterfactual analysis: a Big Data case-study using Cosmos/SCOPE

Ed Snelson

Work by: Jonas Peters, Joaquin Quiñonero Candela, Denis Xavier Charles, D. Max Chickering, Elon Portugaly, Dipankar Ray, Patrice Simard, Ed Snelson, Léon Bottou

http://jmlr.org/papers/v14/bottou13a.html

I. MOTIVATION

Search ads

The search ads ecosystem

[Diagram: User, Advertiser, and Search engine, connected by Queries, Ads & Bids, Ads, Prices, Clicks (and consequences), and Learning. Three loops are labeled: user feedback loop, advertiser feedback loop, learning feedback loop.]

Learning to run a marketplace

• The learning machine is not a machine but is an organization with lots of people doing stuff!

How can we help?

• Goal: improve marketplace machinery such that its long-term revenue is maximal

• Approximate goal by improving multiple performance measures (KPIs) related to all players

• Provide data for decision making

• Automatically optimize parts of the system

Outline from here on

II. Online Experimentation

III. Counterfactual measurements

IV. Cosmos/SCOPE

V. Implementation details

II. ONLINE EXPERIMENTATION

How do parameters affect KPIs?

• We want to determine how certain auction parameters affect KPIs

• Three options:

1. Offline log analysis – “correlational”

2. Auction simulation

3. Online experimentation – “causal”

The problem with correlation analysis (Simpson’s paradox)

Trying to decide whether a drug helps or not

• Historical data:

             All    Survived  Died   Survival Rate
Treated      5,000  2,100     2,900  42%
Not Treated  5,000  2,900     2,100  58%

• Conclusion: don’t give the drug

But what if the doctors were saving the drug for the severe cases?

Severe cases (treatment rate 80%)

             All    Survived  Died   Survival Rate
Treated      4,000  1,200     2,800  30%
Not Treated  1,000  100       900    10%

Mild cases (treatment rate 20%)

             All    Survived  Died   Survival Rate
Treated      1,000  900       100    90%
Not Treated  4,000  2,800     1,200  70%

• Conclusion reversed: drug helps for both severe and mild cases
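The reversal can be checked directly from the counts in the tables above. The sketch below is only an illustration: the dictionary layout and function names are assumptions, and only the survivor and patient totals from the slides are used.

```python
# (survived, total) per arm, copied from the severe and mild tables.
severe = {"treated": (1200, 4000), "not_treated": (100, 1000)}
mild = {"treated": (900, 1000), "not_treated": (2800, 4000)}


def survival_rate(survived, total):
    return survived / total


def pooled(arm):
    """Pool severe and mild cases for one arm, ignoring severity."""
    survived = severe[arm][0] + mild[arm][0]
    total = severe[arm][1] + mild[arm][1]
    return survival_rate(survived, total)


pooled_rates = {arm: pooled(arm) for arm in ("treated", "not_treated")}
severe_rates = {arm: survival_rate(*severe[arm]) for arm in severe}
mild_rates = {arm: survival_rate(*mild[arm]) for arm in mild}

# Pooled data favors "not treated"; each stratum favors "treated".
print("pooled:", pooled_rates)
print("severe:", severe_rates)
print("mild:  ", mild_rates)
```

The pooled comparison flips because 80% of the treated patients are severe cases, so the treated arm is dominated by the group with the worse baseline.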

Overkill?

Pervasive causation paradoxes in ad data!

Example:

– Logged data shows a positive correlation between event A “First mainline ad gets a high quality score” and event B “Second mainline ad receives a click”.

– Do high quality ads encourage clicking below?

– Controlling for event C “Query categorized as commercial” reverses the correlation for both commercial and non-commercial queries.

Randomized experiments

Randomly select who to treat

• Selection independent of all confounding factors

• Therefore eliminates Simpson’s paradox and allows:

Counterfactual estimates

• If we had given the drug to a fraction 𝑥 of the patients, the success rate would have been 60% × 𝑥 + 40% × (1 − 𝑥)

All population (treatment rate 30%)

             All    Survived  Died   Survival Rate
Treated      3,000  1,800     1,200  60%
Not Treated  7,000  2,800     4,200  40%
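The counterfactual estimate for the drug example is a one-line formula, sketched below. The function name and default arguments are assumptions; the 60% and 40% rates are the ones from the randomized table.

```python
def counterfactual_success_rate(x, treated_rate=0.60, untreated_rate=0.40):
    """Success rate if a fraction x of patients had been treated at random."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must be a fraction between 0 and 1")
    return treated_rate * x + untreated_rate * (1.0 - x)


for x in (0.0, 0.3, 0.5, 1.0):
    rate = counterfactual_success_rate(x)
    print(f"treat {x:.0%} of patients -> success rate {rate:.0%}")
```

At 𝑥 = 0.3 the formula gives 46%, which matches the logged population: (1,800 + 2,800) survivors out of 10,000 patients.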

Experiments in the online world

• A/B tests are used throughout the online world to compare different versions of the system

– A random fraction of the traffic (a flight) uses click-prediction system A

– Another random fraction uses click-prediction system B

• Wait for a week, measure KPIs, choose best!

• Our framework takes this one step further…

III. COUNTERFACTUAL MEASUREMENTS

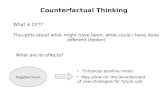

Counterfactuals

Measuring something that did not happen

“How would the system have performed if, when the data was collected, we had used 𝑠𝑦𝑠𝑡𝑒𝑚∗ instead of 𝑠𝑦𝑠𝑡𝑒𝑚?”

Replaying past data

Classification example

• Collect labeled data in existing setup

• Replay the past data to evaluate what the performance would have been if we had used classifier θ.

• Requires knowledge of all functions connecting the point of change to the point of measurement.


Concrete example: mainline reserve (MLR)

[Diagram: results page with Mainline and Sidebar ad positions; the condition shown for the mainline is Ad Score > MLR.]

Online randomization

Q: Can we estimate the results of a change counterfactually (without actually performing the change)?

A: Yes, if 𝑠𝑦𝑠𝑡𝑒𝑚∗ and 𝑠𝑦𝑠𝑡𝑒𝑚 are non-deterministic (and close enough)

[Figure: two panels. Deterministic: single values 𝑀𝐿𝑅 and 𝑀𝐿𝑅∗. Randomized: distributions 𝑃(𝑀𝐿𝑅) and 𝑃∗(𝑀𝐿𝑅) over MLR.]

For each auction, a random MLR is used online, drawn from the data-collection distribution 𝑃(𝑀𝐿𝑅)

Estimating counterfactual KPIs

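Usual additive KPI:

\[ \mathit{Clicks}_{\mathrm{total}} = \sum_i \mathit{Clicks}(\mathit{auction}_i) \]

Counterfactual KPI:

\[ \mathit{Clicks}^*_{\mathrm{total}} \approx \sum_i w_i^* \, \mathit{Clicks}(\mathit{auction}_i) \]

• Weighted sum: auctions with MLRs “closer” to the counterfactual distribution get higher weight

\[ w_i^* = \frac{P^*(\mathit{MLR}_i)}{P(\mathit{MLR}_i)} \]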
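The weighted sum can be illustrated with a toy log. Everything concrete here is an assumption made for the sketch: the two-valued MLR distributions, the four log entries, and the function names. Only the weight formula and the two sums come from the slides.

```python
# Hypothetical discrete distributions over two MLR settings.
P = {1.0: 0.5, 2.0: 0.5}        # data-collection distribution P(MLR)
P_STAR = {1.0: 0.8, 2.0: 0.2}   # counterfactual distribution P*(MLR)

# Made-up log entries (MLR drawn for the auction, clicks observed).
LOG = [(1.0, 3), (2.0, 1), (1.0, 2), (2.0, 0)]


def weight(mlr, p=P, p_star=P_STAR):
    """w_i* = P*(MLR_i) / P(MLR_i)."""
    return p_star[mlr] / p[mlr]


def clicks_total(log):
    """Usual additive KPI: plain sum of logged clicks."""
    return sum(clicks for _, clicks in log)


def clicks_total_counterfactual(log):
    """Counterfactual KPI: clicks reweighted by w_i*."""
    return sum(weight(mlr) * clicks for mlr, clicks in log)


print(clicks_total(LOG))
print(clicks_total_counterfactual(LOG))
```

Auctions logged with MLR 1.0 are more likely under the counterfactual distribution than under the logging distribution, so they are weighted up (1.6) while the MLR 2.0 auctions are weighted down (0.4).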

Exploration

[Figure: data-collection distribution 𝑃(𝜔) and counterfactual distribution 𝑃∗(𝜔).]

Quality of the estimation

• Confidence intervals reveal whether the data-collection distribution 𝑃(𝜔) performs sufficient exploration to answer the counterfactual question of interest.

[Figure: 𝑃(𝜔) and 𝑃∗(𝜔).]

Clicks vs MLR

[Plot: Clicks vs MLR, showing an inner “exploration” interval and an outer “sample-size” interval. Reference points: control with no randomization, and control with 18% lower MLR.]

Number of Mainline Ads vs MLR

This is easy to estimate

Revenue vs MLR

Revenue always has high sample variance
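A rough idea of how sample variance widens an interval can be sketched as follows. This is a simplification and not the slides' method: it computes a plain normal-approximation interval around the mean of the weighted KPI values, and does not reproduce the inner “exploration” interval. The function name, weights, and click counts are made up for illustration.

```python
import math
import statistics


def estimate_with_interval(weights, kpis, z=1.96):
    """Mean of w_i * KPI_i with a normal-approximation confidence interval."""
    weighted = [w * k for w, k in zip(weights, kpis)]
    mean = statistics.mean(weighted)
    stderr = statistics.stdev(weighted) / math.sqrt(len(weighted))
    return mean, (mean - z * stderr, mean + z * stderr)


# Made-up weights and per-auction clicks, for illustration only.
weights = [1.6, 0.4, 1.6, 0.4]
clicks = [3, 1, 2, 0]

mean, (low, high) = estimate_with_interval(weights, clicks)
print(f"estimate {mean:.2f}, 95% interval [{low:.2f}, {high:.2f}]")
```

A KPI with heavy-tailed per-auction values, or weights that vary a lot, increases the standard deviation of the weighted values and therefore the width of the interval.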

More with the same data

How is this related to A/B testing?

• A/B testing tests 2 specific settings against each other

• Need to know what questions you want to ask beforehand!

Big advantage of more general randomization:

• Collect data first, choose question(s) later

• Randomizing more stuff increases opportunities

But…

• Requires more sophisticated offline log processing

IV. COSMOS/SCOPE

Ad Auction Logs

• ≈ 10 TB per day of ad-auction logs

• Cooked and joined from various raw logs

• Stored in Cosmos, queried via SCOPE

• Small fraction of total Bing logs and jobs:

– Tens of thousands of SCOPE jobs daily

– Tens of PBs read/written daily

Cosmos/SCOPE

SCOPE ≈ Pig/Hive

Cosmos ≈ HDFS

http://research.microsoft.com/en-us/um/people/jrzhou/pub/Scope.pdf

http://research.microsoft.com/en-us/um/people/jrzhou/pub/scope-vldbj.pdf

Cosmos

• Microsoft’s internal distributed data store

• Tens of thousands of commodity servers

• ≈ HDFS, GFS

• Append-only file system, optimized for sequential I/O

• Data replication and compression

Data Representation

1. Unstructured streams

– Custom Extractors: convert a sequence of bytes into a RowSet, specifying a schema for the columns

2. Structured streams

– Data stored alongside metadata information: a well-defined schema, and structural properties (e.g. partitioning and sorting information)

– Can be horizontally partitioned into tens of thousands of partitions, e.g. by hash or range partitioning

– Indexes for random access and index-based joins

SCOPE scripting language

• SQL-like (in syntax) declarative language specifying a data transformation pipeline

• Each SCOPE statement takes as input one or more RowSets, and outputs another RowSet

• Highly extensible with C# expressions, custom operators and data types

• SCOPE compiler and optimizer are responsible for generating a data flow DAG for efficient parallel execution

C# Expressions and functions

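    R1 = SELECT A+C AS ac, B.Trim() AS B1
         FROM R
         WHERE StringOccurs(C, "xyz") > 2;

    #CS
    // Counts the occurrences of ptrn in str (overlapping matches included).
    public static int StringOccurs(string str, string ptrn)
    {
        int cnt = 0;
        int pos = -1;
        while (pos + 1 < str.Length)
        {
            // Resume the search just past the start of the previous match.
            pos = str.IndexOf(ptrn, pos + 1);
            if (pos < 0) break;
            cnt++;
        }
        return cnt;
    }
    #ENDCS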

C# String method

C# String expression

C# User-defined types (UDTs)

– Arbitrary C# classes can be used as column types in scripts

– Extremely convenient for easy serialization/deserialization

– Can be referenced in external dlls, C# backing files, and in-script (#CS … #ENDCS)

    SELECT UserId, SessionId,
           new RequestInfo(binaryData) AS Request
    FROM InputStream
    WHERE Request.Browser.IsIE();

C# User-defined operators

– User-defined aggregates

• Aggregate interface: Initialize, Accumulate, Finalize

• Can be declared recursive: allows partial aggregation

– MapReduce-like extensions

• PROCESS

• REDUCE (can be declared recursive)

• COMBINE
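The idea behind a recursive aggregate, where partial results from separate partitions can be combined before finalizing, can be sketched in Python. This is not the SCOPE C# interface: the class, the `merge` method, and the sample rows are assumptions chosen to mirror the Initialize / Accumulate / Finalize steps named above.

```python
class WeightedMeanAggregate:
    """Hypothetical aggregate with Initialize / Accumulate / Finalize steps."""

    def __init__(self):            # Initialize
        self.sum_w = 0.0
        self.sum_wx = 0.0

    def accumulate(self, w, x):    # Accumulate one row
        self.sum_w += w
        self.sum_wx += w * x

    def merge(self, other):        # Combine two partial aggregates
        self.sum_w += other.sum_w
        self.sum_wx += other.sum_wx
        return self

    def finalize(self):            # Finalize
        return self.sum_wx / self.sum_w


def aggregate(rows):
    agg = WeightedMeanAggregate()
    for w, x in rows:
        agg.accumulate(w, x)
    return agg


rows = [(1.0, 2.0), (3.0, 4.0), (2.0, 1.0), (4.0, 3.0)]

# One pass over all rows ...
single_pass = aggregate(rows).finalize()
# ... versus two partitions aggregated separately, then merged.
partial = aggregate(rows[:2]).merge(aggregate(rows[2:])).finalize()

print(single_pass, partial)
```

Partial aggregation works here because the state consists of plain sums, which can be added in any grouping; only the final division has to wait until all partitions are merged.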

SCOPE compilation and execution

    SELECT query, COUNT() AS count
    FROM "search.log"
    USING LogExtractor
    GROUP BY query
    HAVING count > 1000
    ORDER BY count DESC;

    OUTPUT TO "qcount.result";

Runtime cost-based optimizer
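For readers who do not know SCOPE, the query's logic can be mirrored on a single machine. This is only an analogy under assumptions: the function name and the tiny log are made up, and the sketch says nothing about how SCOPE parallelizes the plan.

```python
from collections import Counter


def frequent_queries(queries, min_count):
    """GROUP BY query, HAVING count > min_count, ORDER BY count DESC."""
    counts = Counter(queries)
    kept = [(q, c) for q, c in counts.items() if c > min_count]
    return sorted(kept, key=lambda qc: qc[1], reverse=True)


# Tiny made-up log; the SCOPE query above uses a threshold of 1000.
log = ["bing", "maps", "bing", "news", "maps", "bing"]
print(frequent_queries(log, min_count=1))
```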

SCOPE: Pros/Cons (an opinion)

• Pros:

– Very quick to write simple queries without thinking about parallelization and execution

– Highly extensible with deep C# integration

– UDT columns and C# functions

– Easy development and debugging from VS

• IntelliSense

• Cons:

– No loop/iteration support means a poor fit for many ML algorithms

– Batch, rather than interactive

V. IMPLEMENTATION

Counterfactual computation

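• Ideal for the MapReduce setting

• Map: \( \mathit{auction}_i \to \mathit{KPI}(\mathit{auction}_i) \)

• Reduce: \( \sum_i w_i^* \ldots \)

\[ \mathit{KPI}^*_{\mathrm{total}} = \sum_i w_i^* \, \mathit{KPI}(\mathit{auction}_i) \]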
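The map and reduce steps can be sketched in a few lines. The record fields, the precomputed weights, and the function names are assumptions for illustration; here the map step also carries the weight along so that the reduce step can form the weighted sum.

```python
from functools import reduce


def map_auction(auction):
    """Map: auction_i -> (w_i*, KPI(auction_i)). Field names are hypothetical."""
    return auction["weight"], auction["clicks"]


def reduce_pair(total, mapped):
    """Reduce: add w_i* * KPI(auction_i) to the running total."""
    w, kpi = mapped
    return total + w * kpi


# Made-up auctions with precomputed weights, for illustration only.
auctions = [
    {"weight": 1.6, "clicks": 3},
    {"weight": 0.4, "clicks": 1},
    {"weight": 1.6, "clicks": 2},
    {"weight": 0.4, "clicks": 0},
]

kpi_total_star = reduce(reduce_pair, map(map_auction, auctions), 0.0)
print(kpi_total_star)
```

Because the reduce step is a plain sum, auctions can be processed in any order and on any number of machines before the partial totals are added together.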

Counterfactual grid

SCOPE pseudo-code for counterfactuals

    // Auction is a C# UDT that wraps all logged info about a single auction.
    AuctionLogs = VIEW CosmosLogPath;

    SELECT Auction
    FROM AuctionLogs;

    // ComputeKPIs, ComputeWeightGrid and ComputeWeightedKPIs are C# UDFs.
    SELECT ComputeKPIs(Auction) AS KPIs,
           ComputeWeightGrid(Auction) AS WeightGrid;

    // CROSS APPLY unrolls the weight grid: one row per grid point.
    SELECT ComputeWeightedKPIs(KPIs, GridPoint) AS wKPIs, GridPoint
    CROSS APPLY WeightGrid AS GridPoint;

    // AggregateKPIs is a recursive aggregator over 𝑤𝑖, 𝑤𝑖 𝐾𝑃𝐼𝑖, etc.
    SELECT AggregateKPIs(wKPIs) AS TotalKPIs
    GROUP BY GridPoint;

    // Finalize() is an instance method called on the TotalKPIs UDT.
    SELECT GridPoint, TotalKPIs.Finalize() AS FinalKPIs;

    OUTPUT TO "Results.tsv";
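The same pipeline can be sketched end to end in Python on a toy log. This is an illustration under assumptions, not the production job: the grid of three counterfactual distributions, the two-valued MLR, the log entries, and all names are made up, and clicks stand in for the full set of KPIs.

```python
from collections import defaultdict

# Hypothetical setup: each log entry is (MLR drawn, clicks observed);
# P is the data-collection distribution over the two MLR values.
P = {1.0: 0.5, 2.0: 0.5}
LOG = [(1.0, 3), (2.0, 1), (1.0, 2), (2.0, 0)]

# Weight grid: one counterfactual distribution P* per grid point.
GRID = {
    "favor_low_mlr": {1.0: 0.8, 2.0: 0.2},
    "same_as_logged": {1.0: 0.5, 2.0: 0.5},
    "favor_high_mlr": {1.0: 0.2, 2.0: 0.8},
}


def compute_weight_grid(mlr):
    """One weight w_i* = P*(MLR_i) / P(MLR_i) per grid point."""
    return {name: p_star[mlr] / P[mlr] for name, p_star in GRID.items()}


def run(log):
    # grid point -> [sum of w_i, sum of w_i * KPI_i]
    totals = defaultdict(lambda: [0.0, 0.0])
    for mlr, clicks in log:
        # "CROSS APPLY": one row per (auction, grid point) pair.
        for grid_point, w in compute_weight_grid(mlr).items():
            # "GROUP BY GridPoint": accumulate into that point's totals.
            totals[grid_point][0] += w
            totals[grid_point][1] += w * clicks
    # "Finalize": report both accumulated sums per grid point.
    return {g: {"sum_w": sw, "total_kpi": swk} for g, (sw, swk) in totals.items()}


results = run(LOG)
for grid_point, r in results.items():
    print(grid_point, round(r["sum_w"], 2), round(r["total_kpi"], 2))
```

A single pass over the log answers every counterfactual question in the grid, which is the “collect data first, choose questions later” property in miniature.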

Conclusions

• There are systems in the real world that are too complex to easily formalize

• Causal inference clarifies many problems

– Ignoring causality => Simpson’s paradox

– Randomness allows inferring causality

• The counterfactual framework is modular

– Randomize in advance, ask later

• Counterfactual analysis ideally suited to batch map-reduce