
Do Humans Have Conceptual Models About Geographic Objects? A User Study

Ahmet Aker*
Department of Computer Science, University of Sheffield, 211 Portobello, S1 4DP, Sheffield, United Kingdom. E-mail: [email protected]

Laura Plaza
Dpto. de Ingeniería del Software e Inteligencia Artificial, Universidad Complutense de Madrid, C/ Prof. José García Santesmases s/n, 28040 Madrid, Spain. E-mail: [email protected]

Elena Lloret
Dpto. de Lenguajes y Sistemas Informáticos, Universidad de Alicante, Apdo. de correos, 99, E-03008 Alicante, Spain. E-mail: [email protected]

Robert Gaizauskas
Department of Computer Science, University of Sheffield, 211 Portobello, S1 4DP, Sheffield, United Kingdom. E-mail: [email protected]

In this article, we investigate what sorts of information humans request about geographical objects of the same type. For example, Edinburgh Castle and Bodiam Castle are two objects of the same type: “castle.” The question is whether specific information is requested for the object type “castle” and how this information differs for objects of other types (e.g., church, museum, or lake). We aim to answer this question using an online survey. In the survey, we showed 184 participants 200 images pertaining to urban and rural objects and asked them to write questions for which they would like to know the answers when seeing those objects. Our analysis of the 6,169 questions collected in the survey shows that humans have shared ideas of what to ask about geographical objects. When the object types resemble each other (e.g., church and temple), the requested information is similar for the objects of these types. Otherwise, the information is specific to an object type. Our results may be very useful in guiding Natural Language Processing tasks involving automatic generation of templates for image descriptions and their assessment, as well as image indexing and organization.

Introduction

In everyday life, we see and categorize things in our built or natural environment. For instance, if we look at different castles, we see that each of them has a different style, a different look, and a different size; some of them are newer than the others; and so on, but still we are able to categorize all of them as “castles.”

For categorization purposes, visual attributes such as the style and size of a castle might be enough; however, to report about each castle separately, we need more attributes whose values make each one distinguishable from others.

Knowing what sets of attributes people use to describe geographical objects has several applications. Automatic text summarization applied to generation of descriptions for geographical objects would be improved by incorporating human preferences into the output summaries. Template-based summarization methods, for example, have been used successfully for news-event summarization (McKeown & Radev, 1995; Radev & McKeown, 1998). The same strategy can be applied to the task of generation of descriptions for geographical objects (e.g., for purposes of generating descriptions for images of these objects). Templates can be derived from the set of attributes relevant to humans to bias the summarization system toward the text units containing the values for these attributes. Furthermore, the attributes could be used in a guided summarization task as organized by the Document Understanding Conferences (DUC)¹ and the Text Analysis Conference (TAC).² In addition, the attributes can be used to evaluate the output of an automatic summarization system by testing whether highly relevant attributes are covered in the output summary. Furthermore, the attribute lists present a guide for what to index for images pertaining to locations. If information highly relevant for humans is used for indexing, this could lead to better retrieval and organization of those images. In information extraction, automatic generation of domain templates has been widely investigated (Banko, Cafarella, Soderland, Broadhead, & Etzioni, 2007; Banko & Etzioni, 2008; Filatova, Hatzivassiloglou, & McKeown, 2006; Li, Jiang, & Wang, 2010; Sudo, Sekine, & Grishman, 2003). The set of attributes could be used to generate such templates for geographic objects and/or to evaluate the output of such systems.

Received April 4, 2012; revised June 11, 2012; accepted June 12, 2012

© 2013 ASIS&T • Published online in Wiley Online Library (wileyonlinelibrary.com). DOI: 10.1002/asi.22756

¹ http://duc.nist.gov/
² http://www.nist.gov/tac/

JOURNAL OF THE AMERICAN SOCIETY FOR INFORMATION SCIENCE AND TECHNOLOGY, ••(••):••–••, 2013

For these applications, it is relevant to know whether there is a general set of attributes that people use to describe any geographical object or, given that humans categorize objects into types (e.g., church, museum, lake, etc.), if there are sets of attributes specific to single object types or perhaps shared between object types of similar function (e.g., church and temple).

In this article, we aim to address these questions. Specifically, we analyze three research questions: When seeing a geographic object, what is the set of attributes related to the object for which people would like to know the values? Is this set of attributes specific to a particular object type (e.g., church) or shared between different object types? Do human interests correlate with what can be found in real documents?

Our goal is to answer these questions using an online survey conducted on Amazon’s Mechanical Turk. In the experiment, we showed participants different images of objects from around the world. Our set of images contains only images of static features of the built or natural landscape (i.e., objects with persistent geocoordinates, e.g., buildings and mountains, and not images of objects which move about in such landscapes, e.g., people, cars, clouds, etc.). We asked the participants to write questions for which they would like to know the answers when seeing each image. We collected 7,644 questions from 184 participants. The results of our analysis reveal that people share ideas regarding what to ask about geographical objects in general. When the object types resemble each other, the requested information is similar. In contrast, if the object types are distinct enough, then this information is specific to each object type.

The remainder of the article is organized as follows. We first introduce related work. We next describe Mechanical Turk, present the experimental setting of our survey, and outline the preprocessing of the data. We then present our analysis and report and discuss the results. Finally, we draw the main conclusions of the study and outline future work.

Related Work

Cognitive psychology has offered several theories and substantial empirical evidence for the existence of categories or concepts and explanation of what constitutes them (Eysenck & Keane, 2005). Theories agree that concepts are characterized by sets of attributes, although they differ in whether a set of attributes is necessary and sufficient to define a concept (defining-attributes theories) or whether the concepts are more fuzzy in their specification in terms of attributes (prototype theories), so that some objects are more representative of a concept than others. If humans use concepts to organize the knowledge about the world, then we assume that they will have ways to describe these concepts in natural language. Our aim is to analyze this hypothesis because it will be complementary to reasoning theories (Gordon, 2004; Gordon, Bejan, & Sagae, 2011; Gordon & Hobbs, 2011), thus providing some insights about how humans perceive and communicate the information.

To our knowledge, similar analyses of what concepts or information types humans associate with geographical objects have not been reported previously. However, earlier work has studied image-related captions or descriptions to understand how people describe images by looking at query logs or existing image descriptions (Armitage & Enser, 1997; Balasubramanian, Diekema, & Goodrum, 2004; Choi & Rasmussen, 2002; Greisdorf & O’Connor, 2002; Hollink, Schreiber, Wielinga, & Worring, 2004; Jörgensen, 1996, 1998). These studies were aimed at understanding how people index images in general and were not specific to geographic objects. Our work also is somewhat similar to that of Rosch (1999), who investigated what attributes people associate with objects such as fish, tree, fruit, and so on, whereas our focus is on geographic objects. Finally, other studies have analyzed the impact of images in memory, focusing on the differences between men and women when describing a seen image (Marks, 1973), which is outside the scope of our work.

Experimental Setting

Mechanical Turk

Mechanical Turk (MTurk) is a crowdsourcing service run by Amazon. It allows users (also called “requesters”) to upload tasks and obtain results within a very short time. The tasks are performed by MTurk workers. Each worker can scroll through existing tasks (also called “human intelligence tasks,” or “HITs”) and complete them for a fee offered by the requester.

The general advantage of MTurk, the ability to collect results in a time- and cost-efficient manner, makes it a suitable platform for conducting this experiment. MTurk has been widely used for language processing and information retrieval tasks (Aker, El-Haj, Albakour, & Kruschwitz, 2012; Dakka & Ipeirotis, 2008; El-Haj, Kruschwitz, & Fox, 2010; Kaisser, Hearst, & Lowe, 2008; Kittur, Chi, & Suh, 2008; Snow, O’Connor, Jurafsky, & Ng, 2008; Yang et al., 2009). Several studies have shown that the quality of results produced by MTurk workers is comparable to that of traditionally employed experiment participants (Alonso & Mizzaro, 2009; Snow et al., 2008; Su, Pavlov, Chow, & Baker, 2007).

Experiment Design

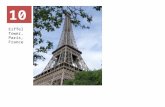

Our experimental design is similar to the one described by Filatova et al. (2006), who were interested in knowing what information people expect to know when they read an article about an event. The authors used four different events (terrorist attack, earthquake, presidential election, and airplane crash). They asked 10 people to provide questions for which they would like to get the answers when they read an article about each of those events. We set up our experiment in a similar way and asked humans to provide questions about places for which they would like to know the answers when they see the place. We obtained the questions via MTurk workers. In the experiment, we showed the workers an image pertaining to a particular object or place (e.g., the Eiffel Tower in Paris, as shown in Figure 1). We also presented the worker with the name of the place (Eiffel Tower) and its object type (tower). The workers were asked to take the role of a tourist and provide 10 questions for which they would like to know the answers when they see the place shown in the image.

The experiment design illustrated in Figure 1 allows us to address some of the quality issues associated with conducting experiments on MTurk. Related work has reported problems with spammers and unethical workers, who produce incomplete or absurd output (Feng, Besana, & Zajac, 2009; Kazai, 2011; Mason & Watts, 2010). In our experiment, for example, text fields were provided for writing down each question. In this way, we were able to control the quality of the questions and reject all absurd input such as strings of arbitrary characters. Aker et al. (2012) also reported that text fields are a good design choice for detecting unwilling or less careful workers.

FIG. 1. Design of the online MTurk experiment. [Color figure can be viewed in the online issue, which is available at wileyonlinelibrary.com.]


Workers also were required to provide the complete set of 10 questions. This requirement was not always fulfilled; however, we also accepted lists containing fewer than 10 questions, provided that the questions were of “good quality.”

We showed images picturing places of 40 different object types randomly chosen from our entire set of 107 object types; this set is described in Aker and Gaizauskas (2010). Of these 40 object types, 25 are urban and 15 are rural:

• Urban types: abbey, aquarium, avenue, basilica, boulevard, building, cathedral, cemetery, church, gallery, house, monument, museum, opera house, palace, parliament, prison, railway, railway station, residence, square, stadium, temple, tower, university, and zoo.

• Rural types: beach, canal, cave, garden, glacier, island, lake, mountain, park, peak, river, ski resort, village, and volcano.

For each object type, five different places were shown. For example, for the object type tower, the images of Eiffel Tower, Flag Tower of Hanoi, BT Tower, Munttoren, and Bettisons Folly were shown. These places (towers) were manually selected from Wikipedia.³ Each image was shown to five different workers. Note that we did not want to experiment with specific places (e.g., Eiffel Tower), but we wanted each worker to pose questions on the same five places to collect a great variety of questions for the same place and to study the correlation between different users; that is why we manually chose the images from Wikipedia.

We ran the experiment for 4 weeks. In total, we collected 7,644 questions for 187 different places. (We did not get results for 13 of 200 images.) The questions came from 184 different workers. The expected number of questions was 10,000 (40 types × 5 objects × 5 workers × 10 questions). However, there are some objects for which we only have questions from a few workers. Table 1 shows the total number of questions collected for each object type. There were 4,815 questions for the urban types and 2,829 questions for rural ones. We think that objects of urban types such as a church are more familiar to humans than are objects of rural types and that they are thus able to generate more questions for the urban objects than for the rural ones. We believe that this might be one reason why the number of questions for the urban objects is much greater than for the rural objects.
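The figures in this paragraph can be cross-checked with a few lines of arithmetic. The sketch below is only an illustration: the constant and function names are our own, and the numbers are copied from the text rather than recomputed from the raw survey data.

```python
# Figures quoted in the text; the names are illustrative, not from the study.
N_TYPES = 40               # object types shown
PLACES_PER_TYPE = 5        # places (images) per object type
WORKERS_PER_IMAGE = 5      # workers who saw each image
QUESTIONS_PER_WORKER = 10  # questions requested from each worker
URBAN_QUESTIONS = 4815
RURAL_QUESTIONS = 2829


def collection_summary():
    """Compare the expected number of questions with the number collected."""
    expected = (N_TYPES * PLACES_PER_TYPE
                * WORKERS_PER_IMAGE * QUESTIONS_PER_WORKER)
    collected = URBAN_QUESTIONS + RURAL_QUESTIONS
    return {
        "expected": expected,
        "collected": collected,
        "collected_share": collected / expected,
        "urban_share": URBAN_QUESTIONS / collected,
    }


summary = collection_summary()
print(summary["expected"], summary["collected"])
print(f"{summary['collected_share']:.1%} of the expected questions were collected")
```

Under these figures, the urban and rural counts add up to the 7,644 questions reported, which is roughly three quarters of the 10,000 questions that a complete response set would have produced.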

Question Preprocessing

We manually analyzed all the questions to assess their quality. Approximately 2% of the questions were empty because not all the workers wrote 10 questions for each object. Moreover, some questions were related only to the image itself rather than to the place shown in the image (e.g., “When the picture is taken?” “How many flowers you found in the image?” “Is there a bus in the picture?”). In addition to these, some questions presented unresolved references, so it was impossible to know to which object they refer (e.g., “What language do they [emphasis added] speak?”). Finally, there were questions which bore no relation at all to the object in the image (e.g., “How is the manager?”). These questions did not address the task, which was to ask questions about the object shown in the image, not about the image itself or related information. Therefore, we categorized all these questions as “noise,” making up 19% of the entire question set (1,479 of 7,648 questions).

We categorized the remaining 81% of the questions (6,169 of 7,648) by the attribute for which the worker was seeking the value with his or her question. An attribute is an abstract grouping of similar questions. We regard two or more questions as similar if their answers refer to the same information type. For instance, we regard the questions “where is Garwood glacier?” and “where exactly is Edmonton?” as similar because both aim for answers related to the information type location. We manually established different abstract grouping categories, and each attribute was named according to the information type to which it refers (e.g., location). Table 2 shows question examples for the top-10 attributes (i.e., the 10 attributes which have the most questions), defined next:

• Visiting: Sentences containing information about, for example, visiting times, prices, and so on.

• Location: Sentences containing information about where the object is located.

• Foundationyear: Sentences containing information about when a building was built, when an organization was established, and so on.

• Surrounding: Sentences containing information about what other objects are close to the main object.

• History: Sentences containing information about interesting past events related to the main object.

• Size: Sentences containing information about the spatial dimensions of the main object, such as the height of a tower, the length of a mountain, and so on.

• Design: Sentences containing information about the style, structure of a building, or even the technology used for its construction.

• Mostattraction: Sentences containing information about the feature or attribute of the main object which makes people want to visit it.

• Naming: Sentences containing information about the origin of the object name or about alternative names for it.

• Features: Sentences containing information about other outstanding qualities or characteristics of the main object.

³ http://www.wikipedia.org/

TABLE 1. Object types with their number of questions: object type (count, percentage).

university (225, 2.94), lake (225, 2.94), cemetery (216, 2.82), cave (216, 2.82), park (216, 2.82), church (216, 2.82), gallery (216, 2.82), canal (216, 2.82), cathedral (216, 2.82), square (216, 2.82), palace (216, 2.82), opera house (216, 2.82), river (216, 2.82), mountain (207, 2.7), beach (207, 2.7), volcano (207, 2.7), zoo (207, 2.7), museum (207, 2.7), temple (207, 2.7), residence (207, 2.7), ski resort (207, 2.7), garden (207, 2.7), glacier (199, 2.6), peak (198, 2.59), monument (198, 2.59), building (198, 2.59), aquarium (198, 2.59), boulevard (189, 2.47), basilica (189, 2.47), railway station (180, 2.35), house (180, 2.35), abbey (180, 2.35), tower (180, 2.35), stadium (180, 2.35), island (173, 2.26), avenue (171, 2.23), village (135, 1.76), parliament (81, 1.06), railway (81, 1.06), prison (45, 0.59)

After this analysis, we obtained a total of 146 attributes; however, 95 of them contained fewer than five questions, so we ignored these attributes in further analysis.⁴ We analyzed the remaining set of 51 attributes (see Table 3), each of which has at least five questions related to it.
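The grouping and filtering steps described above were carried out manually in the study. The following sketch only illustrates the bookkeeping involved, assuming each question has already been hand-labelled with an attribute name or with “noise”; the function names and the tiny sample are hypothetical.

```python
from collections import Counter

MIN_QUESTIONS = 5  # attributes with fewer questions are ignored, per the text


def attribute_counts(labelled_questions):
    """Count questions per attribute, skipping those labelled as noise."""
    return Counter(attr for _question, attr in labelled_questions
                   if attr != "noise")


def frequent_attributes(counts, min_questions=MIN_QUESTIONS):
    """Keep only the attributes that have at least `min_questions` questions."""
    return {attr: n for attr, n in counts.items() if n >= min_questions}


# Hypothetical hand-labelled sample; the questions are quoted from the text.
sample = [
    ("where is garwood glacier?", "location"),
    ("where exactly is edmonton?", "location"),
    ("when was it build?", "foundationyear"),
    ("How is the manager?", "noise"),
]

counts = attribute_counts(sample)
# A threshold of 2 is used here only because the sample is tiny.
print(frequent_attributes(counts, min_questions=2))
```

With the threshold of five applied to the full data set, this kind of filter corresponds to the step that reduces the 146 attributes to the 51 analyzed further.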

Analysis and Results

In this section, we carry out the analysis with respect to the three proposed research questions. Each subsection is devoted to a different question. Note that the analysis for some research questions drew upon data from other questions that were studied.

RQ1: Is there a set of attributes that people generally associate with geographic objects?

In Table 3, the attributes along with the number of questions for each attribute from all object types are given. As the table shows, some attributes in the left column (“Top-10”) are very frequently addressed by the participants. These attributes cover the majority of the questions. More than 65% of the questions can be categorized by these 10 attributes. This means that people do share ideas as to what types of information are required about a place, and the set of top-10 attributes captures these information types.
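The coverage claim can be checked directly against the counts in the “Top-10” column of Table 3. The sketch below is a simple illustration; the variable names are our own, and the total of 6,169 categorized questions is taken from the text.

```python
# Question counts for the top-10 attributes, copied from Table 3.
TOP10_COUNTS = {
    "visiting": 1068, "location": 861, "foundationyear": 465,
    "surrounding": 426, "features": 357, "history": 239,
    "size": 214, "design": 211, "mostattraction": 165, "naming": 153,
}
TOTAL_CATEGORIZED = 6169  # categorized questions, as stated in the text


def coverage(counts, total):
    """Fraction of all categorized questions covered by the given attributes."""
    return sum(counts.values()) / total


top10_share = coverage(TOP10_COUNTS, TOTAL_CATEGORIZED)
print(f"{top10_share:.1%}")  # prints 67.4%
```

The result, about 67%, agrees with the statement that more than 65% of the questions fall under the top-10 attributes.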

Table 4 lists the eight most frequent attributes for each object type. From this table, we can see that the top-10 attributes are present for most of the object types, indicating that the same type of information is relevant for several object types. However, although these attributes occur for many object types, their popularity is not the same in all the object types with which they are associated.

⁴ The total number of questions discarded with these 95 categories is 150.

TABLE 2. Top-10 attributes with related questions.

Visiting: where i can buy the ticket? is this tower available to be visited the whole year? when is the best time to visit? how to get there?
Location: where is garwood glacier? where exactly is edmonton? where it’s located?
Foundationyear: when was it build? which year was this zoo opened? when it was established?
Surrounding: what are the landmarks found nearby seima palace? what are the nearby places to visit? what are some nearby attractions?
Features: are there any waterfalls in the park? what does the zoo house?
History: what is the history of it? any history related to george mason university? what role did the palace play in the history of france?
Size: how big is the complex? how big is the temple? how big is it, meaning what are the dimensions?
Design: what is the architectural structure style? what style of architecture is this house built in? is it constructed by ancient technology?
Mostattraction: what is the speciality of this abbey? what is the best in this island? what are the extraordinary facts about this place?
Naming: was it named after a person? how did the abbey get its name? how the name for this boulevard came?

TABLE 3. Attributes used to categorize the questions. For each category, we give its number of questions and the percentage value of that number over the total number of categorized questions: attributeName (count, percentage).

Top-10: visiting (1068, 17.31), location (861, 13.96), foundationyear (465, 7.54), surrounding (426, 6.91), features (357, 5.79), history (239, 3.87), size (214, 3.47), design (211, 3.42), mostattraction (165, 2.67), naming (153, 2.48)

Below top-10: purpose (136, 2.20), istouristattraction (132, 2.14), height (132, 2.14), visitors (126, 2.04), founder (113, 1.83), owner (109, 1.77), events (104, 1.69), type (102, 1.65), status (86, 1.39), habitants (74, 1.20), designer (69, 1.12), constructioninfo (63, 1.02), temperature (58, 0.94), length (56, 0.91), eruptioninfo (42, 0.68), comparison (42, 0.68), capacity (38, 0.62), depth (36, 0.58), maintainance (35, 0.57), arts (27, 0.44), studentsinfo (26, 0.42), subjectsofferred (25, 0.41), gravesinfo (23, 0.37), preacher (22, 0.36), firstdiscovery (20, 0.32), parkinginfo (20, 0.32), religioninfo (19, 0.31), destination (16, 0.26), origin (15, 0.24), workers (12, 0.19), width (12, 0.19), travellers (11, 0.18), travelcost (9, 0.15), waterinfo (9, 0.15), travelling (9, 0.15), firstclimber (9, 0.15), tributaries (8, 0.13), prayertime (6, 0.10), personinmonument (6, 0.10), snowinformation (6, 0.10), firstfounder (5, 0.08)

We define the popularity of an attribute for an object type as the number of questions categorized under this attribute for that particular object type. Attribute popularity indicates how important the type of information represented by the attribute is for the particular object type. For instance, the attribute visiting is the most popular attribute (i.e., has the maximum number of 52 questions) for the object type museum (see Table 4). This indicates that it is very important for people to know how much the entry to the museum costs or when the museum opens. This information is more relevant than, for instance, knowing when the museum was built. However, if we look at the object type house, we can see that the same attribute visiting is not the most popular. It occurs at Position 5 with only 11 questions. For this object type, instead, people seem to be most interested in knowing the design of the house.
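The popularity comparison for museum and house can be reproduced from the counts in Table 4. The sketch below is illustrative only: the helper names are our own, and ties are assumed to keep the order in which the table lists them, which the text does not specify.

```python
# Eight most frequent attributes for two object types, copied from Table 4.
TABLE4 = {
    "museum": {"visiting": 52, "location": 22, "foundationyear": 22,
               "mostattraction": 10, "features": 9, "history": 8,
               "arts": 5, "owner": 5},
    "house": {"design": 27, "location": 22, "foundationyear": 16,
              "owner": 13, "visiting": 11, "history": 10,
              "istouristattraction": 9, "founder": 9},
}


def popularity_ranking(counts):
    """Attributes ordered from most to least popular.

    Python's sort is stable, so tied attributes keep their table order
    (an assumption made here for illustration).
    """
    return sorted(counts, key=lambda attr: -counts[attr])


def position(counts, attribute):
    """1-based position of an attribute in the popularity ranking."""
    return popularity_ranking(counts).index(attribute) + 1


for object_type in ("museum", "house"):
    counts = TABLE4[object_type]
    print(object_type, "visiting is at position",
          position(counts, "visiting"), "with", counts["visiting"], "questions")
```

For these two rows, visiting comes first for museum and fifth for house, matching the positions discussed in the text.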

The remaining 35% of the questions are spread over the remaining 41 attributes as shown in the right column (“Below top-10”) of Table 3. From this list, we can observe that there are some specific attributes that are only present in a particular object type or in a few object types. For instance, as shown in Table 4, the attribute eruptioninfo is associated only with the object type volcano. This attribute contains questions related to the eruption of different volcanoes: “How often this volcano erupts in fuji?” “Has it erupted before?” “When was the last time it erupted?” From Table 4, we can see that this attribute also is the most popular for the volcano object type while information related to visiting, location, and so on, which is generally most frequently asked for, comes after eruptioninfo.

From this analysis, we can conclude that even though object types share the top-10 attributes, the differences in attribute popularity are an indication that these are not equally important for all object types. Therefore, the question arises as to whether each object type has an associated specific set of attributes, or whether there are similar object types that can be grouped according to which information is required to describe them. This is our second research question, which is analyzed next.

RQ2: Is each set of attributes specific to a particular object type or shared between several object types?

To address this question, we compare different object types and groups of object types and investigate the degree of similarity between them. If two object types are similar, then they will not only share a set of attributes but the attributes within this set also will have similar popularity ranking.

TABLE 4. List of attributes (eight most frequent) for each object type. The numbers within the brackets attached to each attribute indicate how many questions were categorized by that attribute.

Object type: Attributes
Abbey: foundationyear (21), location (20), visiting (16), history (14), design (10), founder (7), height (5), mostattraction (4)
Aquarium: visiting (63), features (30), location (19), foundationyear (10), size (7), visitors (6), events (5), surrounding (5)
Avenue: location (28), surrounding (16), visiting (11), foundationyear (8), features (8), type (6), parkinginfo (5), visitors (5)
Basilica: visiting (25), location (23), foundationyear (21), surrounding (16), founder (7), visitors (7), history (7), purpose (6)
Beach: visiting (44), location (24), surrounding (23), features (18), visitors (12), mostattraction (7), naming (4), depth (4)
Boulevard: surrounding (23), location (17), visiting (17), features (13), naming (13), foundationyear (7), length (6), visitors (5)
Building: location (22), design (22), visiting (18), surrounding (10), foundationyear (10), height (9), constructioninfo (9), owner (9)
Canal: visiting (23), location (21), surrounding (18), purpose (16), length (12), foundationyear (12), depth (9), istouristattraction (7)
Cathedral: location (30), visiting (23), foundationyear (16), history (12), founder (9), size (8), design (8), preacher (6)
Cave: visiting (46), location (25), surrounding (15), features (15), firstdiscovery (13), history (12), naming (7), mostattraction (6)
Cemetery: location (30), visiting (29), gravesinfo (23), foundationyear (18), istouristattraction (11), surrounding (8), size (7), naming (5)
Church: foundationyear (26), location (22), visiting (18), preacher (12), events (9), history (9), design (9), features (7)
Gallery: visiting (43), location (23), arts (21), foundationyear (16), surrounding (10), design (8), events (5), mostattraction (5)
Garden: visiting (34), features (23), location (20), foundationyear (11), size (9), constructioninfo (6), surrounding (6), owner (5)
Glacier: location (24), visiting (24), status (13), foundationyear (11), size (10), history (9), surrounding (8), naming (8)
House: design (27), location (22), foundationyear (16), owner (13), visiting (11), history (10), istouristattraction (9), founder (9)
Island: visiting (21), location (19), habitants (19), features (15), size (13), surrounding (11), temperature (6), mostattraction (5)
Lake: visiting (61), surrounding (32), location (29), size (12), depth (10), features (7), mostattraction (6), purpose (6)
Monument: foundationyear (23), location (20), visiting (14), surrounding (13), history (12), purpose (11), designer (11), height (9)
Mountain: visiting (35), location (23), surrounding (18), height (18), features (14), naming (8), temperature (6), size (5)
Museum: visiting (52), location (22), foundationyear (22), mostattraction (10), features (9), history (8), arts (5), owner (5)
Opera House: visiting (33), foundationyear (20), location (17), events (17), mostattraction (10), surrounding (10), designer (9), owner (8)
Palace: location (26), history (23), foundationyear (21), visiting (17), size (12), surrounding (12), design (12), owner (8)
Park: visiting (41), features (39), location (26), size (12), surrounding (10), foundationyear (9), mostattraction (5), events (4)
Parliament: visiting (13), location (7), foundationyear (7), surrounding (6), design (5), history (4), mostattraction (3), type (3)
Peak: visiting (58), location (27), height (20), surrounding (18), temperature (10), features (6), history (6), istouristattraction (5)
Prison: foundationyear (7), design (5), history (4), capacity (4), location (3), prisonersinfo (3), type (2), size (2)
Railway: location (7), travelcost (7), travelers (6), traveling (4), technique (3), foundationyear (3), status (2), owner (2)
Railway Station: foundationyear (22), location (19), visiting (7), naming (5), travelers (5), history (5), design (5), type (4)
Residence: design (28), location (21), foundationyear (15), visiting (13), habitants (10), purpose (8), history (8), size (7)
River: visiting (25), location (19), length (18), surrounding (16), purpose (15), origin (12), status (9), features (8)
Ski Resort: visiting (40), features (26), location (18), surrounding (8), height (7), events (6), size (6), temperature (5)
Square: visiting (28), location (26), surrounding (22), history (17), foundationyear (14), naming (13), mostattraction (8), istouristattraction (8)
Stadium: location (30), events (25), visiting (23), capacity (15), surrounding (11), foundationyear (11), type (6), size (6)
Temple: location (28), visiting (22), foundationyear (17), design (17), visitors (12), surrounding (8), religioninfo (8), size (7)
Tower: visiting (24), location (21), foundationyear (18), design (16), purpose (15), height (14), features (6), designer (5)
University: location (32), studentsinfo (26), subjectsofferred (25), visiting (11), foundationyear (10), size (9), maintainance (8), history (6)
Village: habitants (18), surrounding (17), visiting (16), location (11), features (10), mostattraction (5), size (5), naming (4)
Volcano: eruptioninfo (42), visiting (26), location (21), surrounding (20), height (15), status (14), foundationyear (7), naming (6)
Zoo: visiting (41), features (34), location (19), foundationyear (14), size (10), mostattraction (8), surrounding (5), owner (5)

We use Kendall’s τ rank correlation coefficient as a metric, which indicates similarity between object types while considering the attribute popularity ranking in their attribute sets.⁵ Kendall’s τ correlation coefficient is equal or close to 1 if two events are highly correlated in rankings, and close to 0 if there exists little or no correlation between the ranks of the two events.

Attributes are ranked according to their popularity (i.e., the number of questions contained under each attribute). If the attributes of two different object types have similar rankings, this is an indication that these attributes are of similar importance for both object types. Kendall’s τ for these object types will return a high correlation for the pair, and we will refer to such object types as similar. A difference in attribute rankings between different object types indicates that these attribute sets are more specific to one object type and not shared by the other(s). In this case, Kendall’s τ will return a low correlation, and we will refer to such object types as dissimilar.
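To make the comparison concrete, the sketch below computes a rank correlation between two object types from their attribute counts. Several choices here are assumptions rather than details given in this section: the tie-corrected τ-b variant is used, attributes missing from one object type are given a count of zero, and only the eight attributes listed in Table 4 are used for each type. The function names are hypothetical.

```python
from itertools import combinations
from math import sqrt


def kendall_tau_b(x, y):
    """Kendall's tau-b for two equally long sequences of numbers."""
    concordant = discordant = ties_x = ties_y = 0
    for (x1, y1), (x2, y2) in combinations(zip(x, y), 2):
        dx, dy = x1 - x2, y1 - y2
        if dx == 0 and dy == 0:
            continue                # tied in both sequences: ignored
        elif dx == 0:
            ties_x += 1             # tied only in x
        elif dy == 0:
            ties_y += 1             # tied only in y
        elif dx * dy > 0:
            concordant += 1
        else:
            discordant += 1
    denominator = sqrt((concordant + discordant + ties_x)
                       * (concordant + discordant + ties_y))
    return (concordant - discordant) / denominator if denominator else 0.0


def type_similarity(counts_a, counts_b):
    """Correlate attribute popularity of two object types.

    Attributes absent from one type are counted as 0 (an assumption).
    """
    attributes = sorted(set(counts_a) | set(counts_b))
    return kendall_tau_b([counts_a.get(a, 0) for a in attributes],
                         [counts_b.get(a, 0) for a in attributes])


# Rows copied from Table 4 (eight most frequent attributes only).
church = {"foundationyear": 26, "location": 22, "visiting": 18, "preacher": 12,
          "events": 9, "history": 9, "design": 9, "features": 7}
cathedral = {"location": 30, "visiting": 23, "foundationyear": 16, "history": 12,
             "founder": 9, "size": 8, "design": 8, "preacher": 6}

print(round(type_similarity(church, cathedral), 2))
```

Because the counts are only compared pairwise, using raw question counts gives the same coefficient as using explicit ranks. The value printed for this truncated example should not be expected to match the coefficients reported in the study, which may rest on the full attribute sets and a different treatment of ties; a library routine such as SciPy's `kendalltau` could be substituted for the hand-written function.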

Within this research question, we can go further and analyze two more questions that directly derive from it. First, we report comparisons between single object types. Second, we report similarity between higher level groups of object types. Both questions are discussed next.

RQ3: How similar are single object types?

Table 5 shows three groups of object-type pairs in rural and urban areas, respectively. In the first column (Always high correlation), the object-type pairs whose correlation coefficient is higher than the mean for all attributes are shown. The second column (High correlation in top-10 and low correlation in below top-10 attributes) shows the object-type pairs whose correlation is higher than the mean in the top-10 attributes, but drops below the mean for the below top-10 attributes. Finally, in the third column (Always low correlation), the pairs that are correlated with the coefficient lower than the mean for all attributes are presented. On average, the urban object types correlate with 0.55 (Mdn = 0.57), and the rural types correlate with 0.53 (Mdn = 0.53). The mean and median correlation coefficients lie close to each other, which indicates that the distribution of highly correlating object types is similar to that of object types with low correlation. From Table 5, we can see that high correlation in attributes is always present when the object types have, for instance, the same look, design, or purpose.
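The three-column split described above can be sketched as a simple threshold rule. This is only an illustration: the function name, the example pairs, and their coefficients are hypothetical, and the thresholds for the top-10 and below top-10 sets are assumed to equal the reported urban mean of 0.55, since separate means for those sets are not given in the text.

```python
def classify_pair(tau, mean):
    """Assign an object-type pair to one of the three columns of Table 5.

    tau and mean are dicts keyed by "all", "top10", and "below".
    """
    high = [tau[key] > mean[key] for key in ("all", "top10", "below")]
    if all(high):
        return "always high"
    if not any(high):
        return "always low"
    if tau["top10"] > mean["top10"] and tau["below"] <= mean["below"]:
        return "high in top-10, low in below top-10"
    return "unclassified"


# Assumption: the same threshold (urban mean of 0.55) is used for every attribute set.
urban_mean = {"all": 0.55, "top10": 0.55, "below": 0.55}

# Hypothetical coefficients, not values from the study.
example_pairs = {
    "pair A": {"all": 0.70, "top10": 0.80, "below": 0.60},
    "pair B": {"all": 0.50, "top10": 0.65, "below": 0.30},
    "pair C": {"all": 0.20, "top10": 0.30, "below": 0.10},
}

labels = {name: classify_pair(tau, urban_mean) for name, tau in example_pairs.items()}
for name, label in labels.items():
    print(name, "->", label)
```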

For the urban areas, we have object types such as church, basilica, abbey, cathedral, and temple, which correlate with a coefficient higher than the mean of 0.55 when all attributes are considered. A similar picture can be drawn for other urban object types such as house-residence, building-residence, or museum-operahouse. In rural areas, object types related to mountainous areas (e.g., glacier, mountain, peak, volcano, ski resort, etc.) are correlated above the mean of 0.53. The same is valid for water bodies such as canal, lake, river, and so on. All these object types clearly share features such as look, design, purpose, and so on. The correlation coefficient is always low for object types that do not share these aspects, such as the ones shown in the third column of Table 5. This indicates that aspects used for categorizing objects into object types also play a role in deciding which object types are similar for purposes of describing them.

However, the second column of the table also highlights the importance of shared ideas of what information is relevant for describing geographic objects for object-type similarity (RQ1). The object types shown in the second column of the table are correlated above the mean for top-10 attributes, and their correlation drops below the mean when below top-10 attributes are used, leading to a new research subquestion (RQ4).

Based on these results, we can derive groups of similar object types. Such groups can be built from object types shown in column 1 of Table 5, and we can investigate whether such groups of object types are similar.

5 We discarded attributes that do not occur in both lists of attributes, which make up approximately 2% of the questions used for categorization.

TABLE 5. Ten object-type pairs from urban and rural areas with high correlation in all attribute sets, with high correlation in the top-10 attributes, and with low correlation, respectively.

Urban areas
Always high correlation: church-temple, abbey-temple, building-tower, building-residence, abbey-basilica, house-residence, abbey-church, basilica-temple, basilica-cathedral, museum-operahouse, house-palace, gallery-museum
High correlation in top-10 and low correlation in below top-10 attributes: prison-aquarium, monument-railway, railway-cathedral, university-railway, museum-prison, temple-railway, railway-operahouse, parliament-railway, abbey-railway, tower-railway
Always low correlation: house-prison, museum-railway, basilica-railway, monument-prison, university-cathedral, prison-palace, prison-cathedral, cemetery-aquarium, railwaystation-cathedral, cemetery-parliament

Rural areas
Always high correlation: mountain-volcano, mountain-skiresort, mountain-peak, peak-volcano, peak-glacier, mountain-glacier, glacier-skiresort, park-garden, lake-canal, canal-river
High correlation in top-10 and low correlation in below top-10 attributes: lake-volcano, canal-island, lake-glacier, glacier-river, canal-village, lake-garden, village-garden, glacier-village, island-glacier, park-cave
Always low correlation: village-river, lake-island, canal-volcano, island-river, mountain-river, peak-lake, island-garden, canal-glacier, skiresort-garden, mountain-canal

RQ4: How similar are groups of similar object types?


Based on Table 5, we group the always-high correlating object types. The set of these higher level categories can be seen in Table 6.6 To build a group from two object types A and B, we merge their attributes. For the attributes that exist in both object types, we sum their counts from both types and keep only one occurrence of the attributes. The attributes then are ranked according to their popularity.
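The merging step can be sketched with a counter that sums the question counts of shared attributes and keeps a single entry per attribute. This is a minimal illustration rather than the procedure as implemented in the study: the variable names are hypothetical, and the counts are only the eight most frequent attributes listed in Table 4 for stadium and university, two of the building examples named in Table 6.

```python
from collections import Counter

# Question counts per attribute, copied from the Table 4 rows for stadium and university.
stadium = Counter({"location": 30, "events": 25, "visiting": 23, "capacity": 15,
                   "surrounding": 11, "foundationyear": 11, "type": 6, "size": 6})
university = Counter({"location": 32, "studentsinfo": 26, "subjectsofferred": 25,
                      "visiting": 11, "foundationyear": 10, "size": 9,
                      "maintainance": 8, "history": 6})

# Adding two counters sums the counts of shared attributes and keeps one entry each.
buildings = stadium + university

# Rank the merged attributes by popularity (most frequent first).
ranking = [attribute for attribute, _ in buildings.most_common()]
print(ranking[:3])
```

Attributes with equal merged counts keep their insertion order in this sketch; a different tie-breaking rule could be substituted without changing the merge itself.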

The correlation between groups of object types from the same group is given in Table 7. In this table, we report comparisons based on three different sets of attributes: all, top-10, and below top-10. All is the entire set of attributes shown in Table 3 (attributes from columns top-10 and below top-10). The top-10 attributes include those that are shared between most of the object types and thus are more likely to render similarity. The below top-10 set contains all remaining attributes, which are rather object-type specific, as previously assumed.

Urban object types are grouped into the following three categories: religious places (church, basilica, cathedral, abbey, temple), cultural attractions (museum, opera house, gallery), and buildings (tower, house, building, residence, palace). From Table 7, we see that a high correlation exists between each of these groups when all attributes are taken into consideration. Religious places and buildings correlate with τ = 0.71. The correlation between religious places and cultural attractions is τ = 0.71, and between cultural attractions and buildings, τ = 0.67. When the attribute set is split into top-10 most frequent attributes and the remainder, we see that religious places correlate with buildings more highly in the top-10 attributes than in the remainder while their correlation with cultural attractions is spread evenly between both attribute sets. Cultural attractions and buildings are correlated most highly when the entire set of attributes (all) is taken into consideration. For both the top-10 attributes and the remainder, the correlation is lower than for the full set of attributes, but the correlation in the below top-10 set of attributes is higher than in the top-10 attributes.

Based on Table 5, the rural object types are grouped into two groups: water bodies and mountainous areas. These two groups correlate moderately with τ = 0.47. However, the results in Table 7 show a high correlation (τ = 0.74) in top-10 attributes while the remaining attributes are not correlated (τ = 0.29).

From these results, we can conclude that groups of urban object types are generally similar. However, for some group pairs (e.g., religious places–buildings), the similarity is substantially higher in more frequently asked information types than in those less frequently asked. In other cases, we see a reverse picture (e.g., cultural attractions–buildings) or an equal similarity in both attribute sets (e.g., religious places–cultural attractions). Rural object-type groups are only similar when the 10 most frequent attributes are considered. This means that for these groups, the frequently asked information types are of similar importance for all object types within these groups while the remaining information types are object-type specific. This may be due to the fact that for the urban type, we collected 4,815 questions whereas the number of questions for the rural object types was only 2,829. Due to this difference, the correlation between the information users are interested in for the rural objects is lower than that for the urban objects. To analyze whether both object types correlate with each other, we also compared the urban groups with rural ones. From Table 7, we can see that there is, in general, moderate or low correlation between these groups. This indicates that people have different ideas of what attributes to associate with urban and rural object types. We think that one of the reasons for this low correlation is that humans do use the visual and functional aspects of objects to associate attributes with them. Since urban objects (mainly human-made constructions, e.g., buildings, bridges) do not look like or have similar functions as the rural objects (mainly natural formations, e.g., mountains, rivers), the list of attributes that humans associate with them differs substantially; thus, when compared with each other, they achieve very low correlations.

6 Although there exist different clustering hierarchies for geographical places such as the one used in the CORINE Land Cover project (http://www.eea.europa.eu/publications/COR0-landcover), we generated our clusters of object types based on their look, design, and functionality.

TABLE 6. High-level groups and subgroups of object types.

Urban objects  Religious places (e.g., basilica, abbey, church)
               Buildings (e.g., palace, stadium, university)
               Cultural attractions (e.g., museum, opera house, gallery)
Rural objects  Water bodies (e.g., beach, canal, lake)
               Mountainous areas (e.g., ski resort, volcano, peak)

TABLE 7. Correlation coefficients between groups of object types.

Categories    Area pair                                   Kendall’s τ
Groups in urban
All           (religious places)-(buildings)              0.71
Top-10        (religious places)-(buildings)              0.85
Below-top-10  (religious places)-(buildings)              0.63
All           (religious places)-(cultural attractions)   0.71
Top-10        (religious places)-(cultural attractions)   0.71
Below-top-10  (religious places)-(cultural attractions)   0.71
All           (buildings)-(cultural attractions)          0.67
Top-10        (buildings)-(cultural attractions)          0.44
Below-top-10  (buildings)-(cultural attractions)          0.59
Groups in rural
All           (water bodies)-(mountainous areas)          0.47
Top-10        (water bodies)-(mountainous areas)          0.74
Below-top-10  (water bodies)-(mountainous areas)          0.29
Groups in urban against groups in rural
All           (religious places)-(mountainous areas)      0.41
Top-10        (religious places)-(mountainous areas)      0.40
Below-top-10  (religious places)-(mountainous areas)      0.18
All           (buildings)-(mountainous areas)             0.41
Top-10        (buildings)-(mountainous areas)             0.40
Below-top-10  (buildings)-(mountainous areas)             0.18
All           (cultural attractions)-(mountainous areas)  0.39
Top-10        (cultural attractions)-(mountainous areas)  0.25
Below-top-10  (cultural attractions)-(mountainous areas)  0.18
All           (religious places)-(water bodies)           0.38
Top-10        (religious places)-(water bodies)           0.41
Below-top-10  (religious places)-(water bodies)           0.17
All           (buildings)-(water bodies)                  0.46
Top-10        (buildings)-(water bodies)                  0.25
Below-top-10  (buildings)-(water bodies)                  0.32
All           (cultural attractions)-(water bodies)       0.39
Top-10        (cultural attractions)-(water bodies)       0.30
Below-top-10  (cultural attractions)-(water bodies)       0.20

RQ5: Do human interests correlate to what can be found in existing text documents?

To carry out the analysis, we first randomly selected 15 object types from both the urban and rural categories. Specifically, seven objects were selected from the urban category (cathedral, gallery, museum, opera, palace, stadium, and university) whereas eight were chosen from the rural category (beach, cave, island, lake, mountain, park, river, and volcano). For each object type, we collected 10 Wikipedia articles about objects of this type.

Once the articles were retrieved, three annotators analyzed the sentences of the first paragraph of each article.7

The objective of the analysis was to determine if the attributes identified as relevant by the MTurk workers also could be found in Wikipedia articles. The most relevant attributes identified by MTurk workers were shown in Table 4, where the eight most frequently addressed attributes for each object type were listed. For each sentence from the first paragraph of Wikipedia articles, it was determined whether that sentence describes one or more attributes from this list, and if so, which one(s). The result of this analysis is shown in Table 8.
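The percentages of the kind reported in Table 8 can be derived from such annotations as follows. The sketch assumes that the annotators' decisions are available as one set of attribute labels per article; the function name and the four annotated articles are hypothetical and serve only to illustrate the computation.

```python
from collections import Counter


def attribute_coverage(annotations, attributes):
    """Percentage of articles whose first paragraph mentions each attribute.

    annotations holds one collection of attribute labels per article.
    """
    n_articles = len(annotations)
    counts = Counter(attr for found in annotations for attr in set(found))
    return {attr: 100.0 * counts[attr] / n_articles for attr in attributes}


# Hypothetical annotations for four articles about stadiums.
annotations = [
    {"location", "capacity", "type"},
    {"location", "capacity", "type", "events"},
    {"location", "capacity", "type", "foundationyear"},
    {"location", "capacity", "type", "events"},
]

coverage = attribute_coverage(
    annotations, ["location", "events", "foundationyear", "visiting"]
)
for attribute, percent in coverage.items():
    print(f"{attribute} ({percent:.0f}%)")
```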

The table shows that most of the attributes can be identified in the first paragraph of Wikipedia articles. Approximately half of the attributes for each object type may be identified in the first paragraph of Wikipedia articles. The most common attribute is location, which is found in almost all the objects that were analyzed. For urban types, historic and designing information, including the year of foundation, is typically reported. For rural objects, we usually find data about the dimensions of the object and other information concerning the surroundings of the object. However, we also observed that some very specific information for several object types may not always be found. For instance, temperature is a detail difficult to find in Wikipedia articles related to islands or mountains, though it may be interesting according to the MTurk workers. Sometimes this is due to the fact that only the first paragraph in the Wikipedia article is analyzed, but it is usually because the information is not available in the article. Nonetheless, it also may be that the selected Wikipedia articles do not contain such information, but it may be included in other articles concerning the same object type that have not been chosen. To analyze this, we would have to investigate a larger corpus of articles for each object type, which is beyond the scope of this article. Moreover, note that the order of frequencies of the attributes for each object varies with respect to the manual analysis. While the MTurk workers gave preference to the visiting attribute for these selected objects (see Table 4), in Wikipedia articles, we found that this attribute, despite also being relevant, is not always among the two most frequent ones. One reason may be that this kind of information varies over time and must be constantly updated. In Wikipedia articles, we observe that the type of information more frequent for each object type varies depending on the object type itself. For instance, the most frequent attributes for the object type gallery are arts. This means that articles about galleries focus more on the features of the exhibitions they show rather than on any other type of information (e.g., concerning the entry fees, opening hours, etc.). In contrast, we have found that most of the information in the first paragraph of the Wikipedia articles can be categorized under the most frequent attributes in Table 4. We only found a few exceptions, such as the capacity and dimensions for the object type stadium or the characteristics of sea wildlife for the object type beach.

7 We chose to analyze the first paragraph only instead of the entire Wikipedia article to reduce the labor needed for the experiment. Our random analyses of Wikipedia articles showed that the most relevant information is contained in the first paragraph, provided it is of a considerable length. Therefore, we only select Wikipedia articles if their first paragraph is at least 10 sentences long.

TABLE 8. Percentage of documents in the set of Wikipedia articles that contain the eight most frequent attributes from Table 3.

Object type  Attributes
Beach        location (100%), features (100%), visiting (100%), surrounding (60%), mostattraction (40%), naming (40%), visitors (10%), depth (10%)
Cathedral    location (100%), design (100%), history (70%), foundationyear (50%), visiting (40%), preacher (30%), founder (20%), size (10%)
Cave         location (100%), naming (100%), firstdiscovery (80%), features (70%), visiting (70%), history (60%), mostattraction (50%), surrounding (0%)
Gallery      location (100%), events (100%), arts (90%), foundationyear (80%), visiting (30%), surrounding (10%), design (10%), mostattraction (0%)
Island       location (100%), size (70%), features (50%), habitants (50%), visiting (50%), surrounding (20%), mostattraction (10%), temperature (0%)
Lake         location (100%), features (100%), size (80%), surrounding (70%), visiting (60%), depth (40%), mostattraction (20%), purpose (20%)
Mountain     naming (90%), visiting (90%), height (90%), location (80%), surrounding (70%), size (50%), features (10%), temperature (0%)
Museum       location (100%), features (70%), history (70%), foundationyear (70%), arts (50%), visiting (50%), mostattraction (20%), owner (10%)
Opera House  location (100%), foundationyear (100%), events (70%), designer (70%), owner (70%), surrounding (20%), visiting (20%), mostattraction (0%)
Palace       location (100%), history (100%), visiting (80%), design (70%), foundationyear (40%), owner (30%), size (30%), surrounding (20%)
Park         features (100%), location (90%), size (90%), foundationyear (80%), visiting (70%), mostattraction (40%), surrounding (20%), events (10%)
River        location (100%), length (70%), surrounding (70%), origin (60%), features (60%), status (0%), visiting (0%), purpose (0%)
Stadium      location (100%), capacity (100%), type (100%), foundationyear (80%), events (60%), surrounding (20%), size (20%), visiting (0%)
University   location (100%), history (90%), studentsinfo (70%), size (60%), foundationyear (50%), subjectsofferred (20%), visiting (10%), maintainance (0%)
Volcano      location (100%), surrounding (70%), status (60%), foundationyear (60%), eruptioninfo (50%), height (50%), visiting (30%), naming (30%)

After completing this analysis, we also were interested in knowing whether the popularity of the attributes obtained within Wikipedia articles correlates with the popularity of the attributes obtained through the MTurk workers. To do this, we again compute Kendall’s τ correlation. Table 9 shows the results of computing the Kendall’s τ correlation coefficient between the Wikipedia and the MTurk results for each of the object types analyzed.

The results obtained show acceptable correlation coefficients for most of the object types. Note that the correlation for rural objects is higher; the object type park has the highest correlation between MTurk workers and Wikipedia articles (τ = 0.79). The lowest correlation can be seen in the river and museum object types. On average, the correlation for all 15 object types is τ = 0.33, indicating a moderate correlation in the popularity of the attributes. Although the correlation is moderate, our analysis shows that humans have conceptual models about objects of specific types which they use to guide themselves in seeking information or writing about objects of particular types. This was not trivial or obvious when we wanted to test our initial hypothesis since the MTurk workers who participated in the survey may have completely different backgrounds or nationalities that were unknown to us. Consequently, each person may have his or her own interests regarding the sort of information that he or she would like to recall from an object type. In light of these results, it would be interesting to analyze whether this also applies to other languages and domains.
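The averages discussed above can be reproduced directly from the coefficients in Table 9. The short sketch below copies those values and computes the overall mean as well as separate means for the rural and urban object types; the split into rural and urban follows the selection of object types described for RQ5, and the variable names are hypothetical.

```python
from statistics import mean

# Kendall's tau between Wikipedia and MTurk results, copied from Table 9.
TAU = {
    "Beach": 0.67, "Cathedral": 0.37, "Cave": 0.12, "Gallery": 0.33,
    "Island": 0.56, "Lake": 0.52, "Mountain": 0.47, "Museum": -0.07,
    "Opera House": 0.11, "Palace": 0.48, "Park": 0.79, "River": -0.07,
    "Stadium": 0.22, "University": 0.14, "Volcano": 0.37,
}

# Rural object types as selected for RQ5; the remaining seven types are urban.
RURAL = {"Beach", "Cave", "Island", "Lake", "Mountain", "Park", "River", "Volcano"}

overall = mean(TAU.values())
rural = mean(value for name, value in TAU.items() if name in RURAL)
urban = mean(value for name, value in TAU.items() if name not in RURAL)

print(f"overall: {overall:.2f}, rural: {rural:.2f}, urban: {urban:.2f}")
# overall: 0.33, rural: 0.43, urban: 0.23
```

The overall mean matches the reported τ = 0.33, and the rural mean exceeding the urban one is consistent with the observation that the correlation for rural objects is higher.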

Discussion

Our analyses have shown that people taking the role of a tourist do share ideas as to what information is relevant for describing geographic objects, and we have identified a set of information types that reflects these ideas. However, although we could say that there clearly are several types of information which people like to know when seeing any geographic object, it also was clear that not every type of information is equally relevant for each object type. These findings prompted the question whether each object type has a specific set of information associated with it or whether object types can be grouped according to how they are described.

We found that some object types are similar and not only share the information types associated with them but also the importance ranking of these types. Such similar objects were mostly objects of like purpose, look, or design (e.g., churches and temples, mountains and peaks, etc.). This indicates that sets of features that people use to categorize objects also play a role in deciding which information should be used to describe these objects. However, some object types that do not share these features are still similar in terms of which information is required to describe them. In these cases, people will refer to the shared set of frequently requested attributes that we identified.

An attempt to group similar object types and investigate similarity between the resulting groups revealed that groups of urban object types are similar. All these objects are objects of the built landscape that can be visited as tourist attractions. For this reason, people taking the role of a tourist are interested in information related to visiting, location, foundation year, and so on. This is not the case for rural object-type groups or for pairs of urban and rural object-type groups, as was reflected in our results by the dissimilarity between these object-type groups.

Moreover, we have studied to what extent existing documents (Wikipedia articles) contain the information in which tourists are interested and showed that even though most of the general information is found in the articles, other very specific information for several object types may not always be found (e.g., entry fees or opening hours). Obviously, this specific touristic information is not the focus of Wikipedia articles, and it is more likely to be found in other kinds of documents such as travel guides and tourist-information websites and forums.

These results can be used to automatically generate information extraction (IE) style templates (Banko et al., 2007; Banko & Etzioni, 2008; Filatova et al., 2006; Li et al., 2010; Sekine, 2006; Sudo et al., 2003). These templates are descriptions of types of information relevant to a specific domain such as different events reported in the news (e.g., terrorist attacks, plane crashes, etc.) or person-specific information. Currently, there exist no templates for descriptions of geographic objects, and the questions collected in the survey could be used to automatically build such templates.

TABLE 9. Kendall’s τ correlation coefficient between Wikipedia and Mechanical Turk results for each object type.

Object type  Kendall’s τ
Beach        0.67
Cathedral    0.37
Cave         0.12
Gallery      0.33
Island       0.56
Lake         0.52
Mountain     0.47
Museum       -0.07
Opera House  0.11
Palace       0.48
Park         0.79
River        -0.07
Stadium      0.22
University   0.14
Volcano      0.37

Wikipedia articles also could be categorized by object types to create object-type corpora. Each object-type corpus would contain articles about objects of that object type. For instance, the church object-type corpus would contain all the Wikipedia church articles. Then, from these object-type corpora, different types of information could be extracted, such as IE templates.

This would contribute to populating knowledge databases with geographic information, which could be used to index images pertaining to geographic objects. If information highly relevant for humans is used for indexing, this could lead to better retrieval and organization of those images. In addition, templates can be used in template-based automatic summarization (e.g., for purposes of automatically generating descriptions for images showing geographic objects). Furthermore, the highly relevant information types could be used in a guided summarization task as organized by the DUC and the TAC. In this task, the automated summaries could aim to answer the questions grouped by the attributes relevant for geographic objects.

Furthermore, our results can guide the decision as to which information types should be included in templates. For creating the knowledge database, the full set of attributes is needed to capture the maximal amount of information about object types. However, for indexing and summarization, it is important to know the information types most relevant to a particular object type or groups of similar object types. In this work, we show these information types and indicate which object types can be grouped together.

Evaluation of such automatically created summaries also would profit from the findings reported in this article. Each automatically generated summary about an object could be presented to a user along with a set of questions related to the frequently asked attributes about the object type. The user would be asked to indicate whether the summary answers those questions. This would be similar to the summary content units (SCUs) presented within the pyramid method (Nenkova & Passonneau, 2004). In the pyramid method, however, the SCUs are extracted from human-generated model summaries and presented to the evaluator along with automated summaries. The evaluator is asked whether the automated summary contains that SCU. In our case, the process of extraction of SCUs from reference summaries would be redundant, as the SCUs would be replaced by the questions related to frequently occurring attributes.
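One way such user judgments could be turned into a score is sketched below. This is an assumption-laden illustration, not a procedure proposed in this article: the function name and the judgments are hypothetical, and weighting each question by the popularity of its attribute (here the Table 4 counts for zoo) is an added design choice loosely inspired by the weighting of SCUs in the pyramid method.

```python
def question_coverage(answered, weights=None):
    """Share of attribute questions that a summary answers.

    answered maps an attribute to True if the user judged the summary to
    answer the question about it. With weights (e.g., question counts per
    attribute), popular attributes contribute more to the score.
    """
    if weights is None:
        weights = {attribute: 1 for attribute in answered}
    total = sum(weights[attribute] for attribute in answered)
    covered = sum(weights[attribute] for attribute, ok in answered.items() if ok)
    return covered / total


# Question counts per attribute, copied from the Table 4 row for zoo.
zoo_counts = {"visiting": 41, "features": 34, "location": 19, "foundationyear": 14,
              "size": 10, "mostattraction": 8, "surrounding": 5, "owner": 5}

# Hypothetical user judgments for one automatically generated zoo summary.
judgments = {"visiting": True, "features": False, "location": True,
             "foundationyear": True, "size": False, "mostattraction": False,
             "surrounding": False, "owner": False}

unweighted = question_coverage(judgments)
weighted = question_coverage(judgments, zoo_counts)
print(f"unweighted: {unweighted:.3f}, weighted: {weighted:.3f}")
```

In this example the weighted score is higher than the unweighted one because the summary answers the most frequently asked question (visiting).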

Conclusions and Future Work

In this article, we investigated which information types (i.e., attributes) humans associate with geographic objects from urban and rural landscapes. We identified a set of attributes that were relevant for any geographic object type, but also found that an appreciable proportion of attributes are object-type specific. Even in the set of shared information types, not all information types were equally important for each object type. Based on the importance ranking of information types for each object type, we were able to identify similar object types. Furthermore, we showed that urban object types can be grouped together according to what information is used to describe them. We also showed that some of the attributes MTurk workers identified as relevant for the various object types can be found in existing documents such as Wikipedia articles, but others (especially those concerning very specific traveling information) are not likely to be found in this kind of document. We believe that our results can guide tasks involving generation of image descriptions. Furthermore, they can aid assessment of such descriptions as well as image indexing and organization. In addition, these results can be useful for understanding human reasoning (Gordon, Bejan, & Sagae, 2011) and providing some insights for the future development of automatic tools capable of performing reasoning.

Having shown that there may be a conceptual model of geographic objects, our short-term goal is to study and analyze whether such models also can be applied to other languages such as Spanish or German. One limitation encountered in this study is the manual selection of images to be shown to the annotators. Our aim in the future is to broaden this experiment by randomly selecting different images pertaining to objects of the same type to verify our findings. To generalize the experiment, we will not restrict ourselves to Wikipedia documents but will also add general documents about tourist places (e.g., information from tourist websites). Furthermore, we aim to perform the future experiments by selecting images pertaining to objects of diverse types rather than restricting ourselves to images pertaining to only locations. In this way, we will be able to collect conceptual models for different types and reuse them for describing images that show, for example, a beach where a person goes for a walk with a dog. We believe that a combination of image features and conceptual models can help to automatically describe such images. The descriptions also can be evaluated by the conceptual models (i.e., to determine if the generated descriptions entail what is said in the conceptual models).

Finally, we believe that our findings can be used as a baseline approach to compare any automatic technique aiming to collect similar pieces of information about locations. The automatic approaches could derive such information from existing resources such as Wikipedia (see Aker & Gaizauskas, 2010) and compare their results with those reported in this article.

References

Aker, A., El-Haj, M., Albakour, D., & Kruschwitz, U. (2012). Assessing crowdsourcing quality through objective tasks. Proceedings of the Language Resources and Evaluation Conference (pp. 1456–1461). Istanbul, Turkey.


Aker, A., & Gaizauskas, R. (2010). Generating image descriptions usingdependency relational patterns. Proceedings of the 48th annual meetingof the Association for Computational Linguistics (pp. 1250–1258),Uppsala, Sweden.

Alonso, O., & Mizzaro, S. (2009). Can we get rid of TREC assessors?Using Mechanical Turk for relevance assessment. Proceedings of SpecialInterest Group on Information Retrieval (SIGIR ’09), The Future of IREvaluation Workshop (pp. 15–16). Boston, MA.

Armitage, L., & Enser, P. (1997). Analysis of user need in image archives.Journal of Information Science, 23(4), 287–299.

Balasubramanian, N., Diekema, A., & Goodrum, A. (2004). Analysis ofuser image descriptions and automatic image indexing vocabularies: Anexploratory study. Proceedings of the International Workshop on Multi-disciplinary Image, Video, and Audio Retrieval and Mining. Quebec,Canada.

Banko, M., Cafarella, M., Soderland, S., Broadhead, M., & Etzioni, O.(2007). Open information extraction from the web. Proceedings of theInternational Joint Conference on Artificial Intelligence (pp. 2670–2676), Hyderabad, India.

Banko, M., & Etzioni, O. (2008). The tradeoffs between open and tradi-tional relation extraction. Proceedings of the 46th annual meetingof the Association for Computational Linguistics: Human LanguageTechnologies (pp. 28–36), Columbus, OH.

Choi, Y., & Rasmussen, E. (2002). Users’ relevance criteria in imageretrieval in American history. Information Processing & Management,38(5), 695–726.

Dakka, W., & Ipeirotis, P.G. (2008). Automatic extraction of useful facethierarchies from text databases. Proceedings of the Institute of Electricaland Electronics Engineers 24th International Conference on Data Engi-neering (pp. 466–475), Washington, DC.

El-Haj, M., Kruschwitz, U., & Fox, C. (2010). Using Mechanical Turk tocreate a corpus of Arabic summaries. Proceedings of the LanguageResources and Evaluation Conference Workshop on Semitic Languages(pp. 36–39), Valletta, Malta.

Eysenck, M.W., & Keane, M.T. (2005). Cognitive psychology: A student’shandbook. Erlbaum/Psychology Press, United Kingdom.

Feng, D., Besana, S., & Zajac, R. (2009). Acquiring high quality non-expertknowledge from on-demand workforce. Proceedings of the Workshop onThe People’s Web Meets NLP: Collaboratively Constructed SemanticResources (People’s Web ’09) (pp. 51–56), Morristown, NJ.

Filatova, E., Hatzivassiloglou, V., & McKeown, K. (2006). Automaticcreation of domain templates. Proceedings of the Joint Conference of theInternational Committee on Computational Linguistics and the Associa-tion for Computational Linguistics on Main Conference Poster Sessions(pp. 207–214), Sydney, Australia.

Gordon, A.S., (2004). Strategy representation: An analysis of planningknowledge. Erlbaum/Psychology Press, United Kingdom.

Gordon, A.S., Bejan, C.A. & Sagae, K. (2011). Commonsense causalreasoning using millions of personal stories. Proceedings of the 25thAAAI Conference on Artificial Intelligence, San Francisco, CA.

Gordon, A.S., & Hobbs, J. (2011). A commonsense theory of mind–body interaction. Association for the Advancement of Artificial Intelli-gence Spring Symposium: Logical Formalizations of CommonsenseReasoning. March 21–23, Stanford, CA.

Greisdorf, H., & O’Connor, B. (2002). Modelling what users see when theylook at images: A cognitive viewpoint. Journal of Documentation, 58(1),6–29.

Hollink, L., Schreiber, A., Wielinga, B., & Worring, M. (2004). Classifi-cation of user image descriptions. International Journal of Human–Computer Studies, 61(5), 601–626.

Jörgensen, C. (1996). Indexing images: Testing an image description template. Proceedings of the annual meeting of the American Society for Information Science, 33, 209–213.

Jörgensen, C. (1998). Attributes of images in describing tasks. Information Processing & Management, 34(2–3), 161–174.

Kaisser, M., Hearst, M.A., & Lowe, J.B. (2008). Improving search results quality by customizing summary lengths. Proceedings of the 48th annual meeting of the Association for Computational Linguistics: Human Language Technologies (pp. 701–709), Columbus, OH.

Kazai, G. (2011). In search of quality in crowdsourcing for search engine evaluation. Advances in Information Retrieval—33rd European Conference on IR Research (ECIR’11) Lecture Notes in Computer Science: Vol. 6611 (pp. 165–176). Amsterdam: Springer.

Kittur, A., Chi, E.H., & Suh, B. (2008). Crowdsourcing user studies with Mechanical Turk. Proceedings of the 26th annual Special Interest Group on Computer–Human Interaction Conference on Human Factors in Computing Systems (pp. 453–456), New York, NY.

Li, P., Jiang, J., & Wang, Y. (2010). Generating templates of entity summaries with an entity-aspect model and pattern mining. Proceedings of the 48th annual meeting of the Association for Computational Linguistics (pp. 640–649).

Marks, D.F. (1973). Visual imagery differences in the recall of pictures. British Journal of Psychology, 64, 17–24.

Mason, W., & Watts, D.J. (2010). Financial incentives and the “performance of crowds.” Association for Computing Machinery’s Special Interest Group on Knowledge Discovery and Data Mining (SIGKDD) Explorations Newsletter, 11, 100–108.

McKeown, K., & Radev, D. (1995). Generating summaries of multiple news articles. Proceedings of the 18th annual International Association for Computing Machinery Special Interest Group on Information Retrieval (ACM SIGIR) Conference on Research and Development in Information Retrieval (pp. 74–82).

Nenkova, A., & Passonneau, R. (2004). Evaluating content selection in summarization: The Pyramid method. Proceedings of the Human Language Technology/North American Chapter of the Association for Computational Linguistics Conference (pp. 145–152), Boston, MA.

Radev, D., & McKeown, K. (1998). Generating natural language summaries from multiple on-line sources. Computational Linguistics, 24(3), 470–500.

Rosch, E. (1999). Principles of categorization. Cambridge, MA: MIT Press, Concepts: Core Readings.

Sekine, S. (2006). On-demand information extraction. Proceedings of the Joint Conference of the International Committee on Computational Linguistics and the Association for Computational Linguistics on Main Conference Poster Sessions (pp. 731–738), Sydney, Australia.

Snow, R., O’Connor, B., Jurafsky, D., & Ng, A.Y. (2008). Cheap and fast, but is it good? Evaluating non-expert annotations for natural language tasks. Proceedings of the Conference on Empirical Methods in Natural Language Processing (pp. 254–263), Morristown, NJ.

Su, Q., Pavlov, D., Chow, J.H., & Baker, W.C. (2007). Internet-scale collection of human-reviewed data. Proceedings of the 16th International World Wide Web Conference (pp. 231–240), New York, NY.

Sudo, K., Sekine, S., & Grishman, R. (2003). An improved extraction pattern representation model for automatic IE pattern acquisition. Proceedings of the 41st annual meeting of the Association for Computational Linguistics (pp. 224–231), Sapporo, Japan.

Yang, Y., Bansal, N., Dakka, W., Ipeirotis, P., Koudas, N., & Papadias, D. (2009). Query by document. Proceedings of the Second ACM International Conference on Web Search and Data Mining (pp. 34–43), New York, NY.
