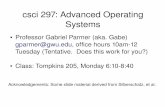

Distributed File Systems CSCI 444/544 Operating Systems Fall 2008.



Distributed File Systems

CSCI 444/544 Operating Systems

Fall 2008

Agenda

• Distributed file systems
• File service model
• Directory service (naming)
• Stateful vs stateless file servers
• Caching
• NFS vs AFS

What is a distributed file system?

[Figure: several clients connected through a network to file servers]

Support network-wide sharing of files and disks

Distributed File System

Distributed file system (DFS) – a distributed implementation of the classical time-sharing model of a file system, where multiple users share files and storage resources

A DFS manages a set of dispersed storage devices

Overall storage space managed by a DFS is composed of different, remotely located, smaller storage spaces

There is usually a correspondence between constituent storage spaces and sets of files

Benefits

Why are distributed file systems useful?

• Access from multiple clients
– Same user on different machines can access the same files
• Simplifies sharing
– Different users on different machines can read/write the same files
• Simplifies administration
– One shared server to maintain (and back up)
• Improves reliability
– Add RAID storage to server

Challenges

Transparent access
• User sees a single, global file system regardless of location

Scalable performance
• Performance does not degrade as more clients are added

Fault tolerance
• Client and server identify and respond appropriately when the other crashes

Consistency
• See the same directory and file contents on different clients at the same time

Security
• Secure communication and user authentication

Tension across these goals
• Example: Caching helps performance, but hurts consistency

File Service

Two models for file services
• upload/download: files move between server and clients, few operations (read file and write file), simple, requires storage at client, good if the whole file is accessed
• remote access: files stay at server, rich interface with many operations, less space at client, efficient for small accesses

File Service Model

[Figure: transfer models. (a) upload/download model; (b) remote access model]

Directory Service

• Provides naming usually within a hierarchical file system

• Clients can have the same view (global root directory) or different views of the file system (remote mounting)

• Naming: mapping between logical and physical objects
– Multilevel mapping: abstraction of a file that hides the details of how and where on the disk the file is actually stored

Transparency: a transparent DFS hides the location in the network where the file is stored

Naming Structures

Location transparency – file name does not reveal the file’s physical storage location
• The file location is invisible to the user
• File name still denotes a specific, although hidden, set of physical disk blocks
• Convenient way to share data

Location independence – file name does not need to be changed when the file’s physical storage location changes
• Better file abstraction
• Promotes sharing the storage space itself
• Separates the naming hierarchy from the storage-devices hierarchy

Naming Schemes

Files named by a combination of their host name and local name; guarantees a unique systemwide name
• Neither location transparent nor location independent

Attach remote directories to local directories, giving the appearance of a coherent directory tree
• Only previously mounted remote directories can be accessed transparently

Total integration of the component file systems
• A single global name structure spans all the files in the system
• If a server is unavailable, some arbitrary set of directories on different machines also becomes unavailable

Server System Structure

File + directory service
• Cache directory hints at the client to accelerate path name lookup; directory and hints must be kept coherent

State information about clients at the server
• stateless server: no client information is kept between requests
• stateful server: servers maintain state information about clients between requests

Stateful File Server

Mechanism
• Client opens a file
• Server fetches information about the file from its disk, stores it in its memory, and gives the client a connection identifier unique to the client and the open file
• Identifier is used for subsequent accesses until the session ends
• Server must reclaim the main-memory space used by clients who are no longer active

Increased performance
• Fewer disk accesses
• Stateful server knows if a file was opened for sequential access and can thus read ahead the next blocks
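The mechanism above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the class `StatefulFileServer`, its `open_table`, and the in-memory `files` dictionary standing in for the disk are hypothetical names, not part of any real file server.

```python
import itertools


class StatefulFileServer:
    """Toy stateful server: keeps per-open state in memory (illustrative only)."""

    def __init__(self, files):
        self.files = files            # name -> bytes, stands in for the disk
        self.open_table = {}          # connection id -> [name, current offset]
        self._ids = itertools.count(1)

    def open(self, name):
        if name not in self.files:
            raise FileNotFoundError(name)
        conn_id = next(self._ids)     # identifier unique to this open
        self.open_table[conn_id] = [name, 0]
        return conn_id

    def read(self, conn_id, count):
        name, offset = self.open_table[conn_id]
        data = self.files[name][offset:offset + count]
        # The server, not the client, remembers the file position.
        self.open_table[conn_id][1] = offset + len(data)
        return data

    def close(self, conn_id):
        # Reclaim the memory held for this session.
        del self.open_table[conn_id]


server = StatefulFileServer({"notes.txt": b"hello world"})
cid = server.open("notes.txt")
first = server.read(cid, 5)
second = server.read(cid, 6)
server.close(cid)
```

Because the position lives in `open_table`, a server crash would lose it, and a client that never calls `close` leaves an entry that the server must eventually reclaim.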

Stateless File Server

Avoids state information by making each request self-contained

Each request identifies the file and position in the file

No need to establish and terminate a connection by open and close operations
• Can run over UDP
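A minimal sketch of a self-contained request, assuming a hypothetical `StatelessFileServer` class with an in-memory `files` dictionary in place of a disk. The client, not the server, tracks the file position.

```python
class StatelessFileServer:
    """Toy stateless server: nothing is remembered between requests."""

    def __init__(self, files):
        self.files = files  # name -> bytes, stands in for the disk

    def read(self, name, offset, count):
        # The request itself names the file and the position.
        return self.files[name][offset:offset + count]


server = StatelessFileServer({"notes.txt": b"hello world"})

offset = 0                                   # position is kept by the client
chunk1 = server.read("notes.txt", offset, 5)
offset += len(chunk1)
chunk2 = server.read("notes.txt", offset, 6)

# Re-sending a request (for example after a lost reply) gives the same answer.
retry = server.read("notes.txt", 0, 5)
```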

Caching

Three possible places: server’s memory, client’s disk, client’s memory

• Caching in server’s memory: avoids disk access but still requires network access
• Caching at client’s disk (if available): tradeoff between disk access and remote memory access
• Caching at client in main memory
– inside each process address space: no sharing at client
– in the kernel: kernel involvement on hits
– in a separate user-level cache manager: flexible and efficient if paging can be controlled from user level

Server-side caching eliminates coherence problem. Client-side cache coherence?

Caching

Cache Consistency

Is the locally cached copy of the data consistent with the master copy?

Client-initiated approach (poll)
• Client initiates a validity check
• Server checks whether the local data are consistent with the master copy
– Delayed write in NFS

Server-initiated approach (push)
• Server records, for each client, the (parts of) files it caches
• When server detects a potential inconsistency, it must react
– Callback in AFS
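The two approaches can be contrasted with a small sketch. All names here (`Server`, `PollingClient`, `CallbackClient`, the version counter) are assumptions made for illustration; real NFS and AFS clients use different mechanisms and message formats.

```python
class Server:
    def __init__(self):
        self.data = {}        # name -> (version, contents)
        self.callbacks = {}   # name -> set of clients caching that file

    def write(self, name, contents):
        version = self.data.get(name, (0, b""))[0] + 1
        self.data[name] = (version, contents)
        # Server-initiated (push): tell every caching client its copy is stale.
        for client in self.callbacks.pop(name, set()):
            client.invalidate(name)

    def fetch(self, name, client=None):
        if client is not None:
            # Record which client caches the file.
            self.callbacks.setdefault(name, set()).add(client)
        return self.data[name]

    def version(self, name):
        return self.data[name][0]


class PollingClient:
    """Client-initiated: asks the server for the version on every read."""

    def __init__(self, server):
        self.server, self.cache = server, {}

    def read(self, name):
        cached = self.cache.get(name)
        if cached is None or cached[0] != self.server.version(name):
            self.cache[name] = self.server.fetch(name)
        return self.cache[name][1]


class CallbackClient:
    """Server-initiated: trusts its cache until the server invalidates it."""

    def __init__(self, server):
        self.server, self.cache = server, {}

    def invalidate(self, name):
        self.cache.pop(name, None)

    def read(self, name):
        if name not in self.cache:
            self.cache[name] = self.server.fetch(name, client=self)
        return self.cache[name][1]


server = Server()
server.write("f", b"v1")
poller, listener = PollingClient(server), CallbackClient(server)
before = (poller.read("f"), listener.read("f"))
server.write("f", b"v2")
after = (poller.read("f"), listener.read("f"))
```

The polling client pays a round trip on every read even when nothing changed; the callback client avoids that, at the cost of the server keeping per-client state.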

Case Study: NFS

Sun’s Network File System
• Introduced in the 1980s, multiple versions (v2, v3, v4)
• Has become a common standard for distributed UNIX file access

Key idea #1: Stateless server
• Server not required to remember anything (in memory)
– Which clients are connected, which files are open, ...
• Implication: All client requests have enough info to complete the operation (self-contained)
– Example: Client specifies offset in file to write to
• One advantage: Server state does not grow with more clients

Key idea #2: Idempotent server operations
• Operation can be repeated with the same result (repeating it has no additional effect)
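A short sketch of why naming the offset in the request makes a write idempotent, while an append is not. The helper names `write_at` and `append` are hypothetical and operate on plain byte strings rather than real files.

```python
def write_at(contents: bytes, offset: int, data: bytes) -> bytes:
    """Idempotent: the request names the absolute offset to write to."""
    padded = contents.ljust(offset, b"\0")   # zero-fill if writing past the end
    return padded[:offset] + data + padded[offset + len(data):]


def append(contents: bytes, data: bytes) -> bytes:
    """Not idempotent: the result depends on how many times it is applied."""
    return contents + data


original = b"hello world"

once = write_at(original, 6, b"there")
twice = write_at(once, 6, b"there")          # a retried request changes nothing

appended_once = append(original, b"!")
appended_twice = append(appended_once, b"!") # a retried request duplicates data
```

This is why a client that never received a reply can simply resend a write that carries its own offset.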

Basic Feature

NFS runs over LANs (even over WANs – slowly)

Basic feature
• Allows a remote directory to be “mounted” (spliced) onto a local directory
• Gives access to that remote directory and all its descendants as if they were part of the local hierarchy

Pretty much exactly like a “local mount” or “link” on UNIX
• except for implementation and performance …

NFS Overview

NFS is based on the remote access model
• Remote Procedure Calls (RPC) for communication between client and server

Client Implementation
• Provides transparent access to NFS file system
– UNIX contains Virtual File System layer (VFS)
– Vnode: interface for procedures on an individual file
• Translates vnode operations to NFS RPCs

Server Implementation
• Stateless: Must not keep anything only in memory
• Implication: All modified data is written to stable storage before returning control to the client

NFS Protocols

Two client-server protocols:

• The first NFS protocol handles mounting
– Establishes initial logical connection between server and client

• The second NFS protocol is for directory and file access

NFS Mounting Protocol

• A client can send a path name to a server and request permission to mount that directory somewhere in its directory hierarchy

• If the path name is legal and the directory specified has been exported, the server returns a file handle to the client

• Export list – specifies local file systems that server exports for mounting, along with names of client machines that are permitted to mount them

• File handle contains fields uniquely identifying the file system type, the disk, the i-node number of the directory, and access-right information
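The mount exchange described above might look roughly like the following. This is a sketch under assumptions: the `FileHandle` fields mirror the list above, but the export list contents, client names, and the `mount` function are invented for illustration and do not reflect the real NFS wire format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FileHandle:
    fs_type: str   # file system type
    disk: str      # which disk holds the directory
    inode: int     # i-node number of the directory
    access: str    # access-right information


# Hypothetical export list: exported path -> clients allowed to mount it.
EXPORTS = {"/export/home": {"clientA", "clientB"}}
# Hypothetical i-node numbers for the exported directories.
INODES = {"/export/home": 2}


def mount(client: str, path: str) -> FileHandle:
    allowed = EXPORTS.get(path)
    if allowed is None:
        raise PermissionError(f"{path} is not exported")
    if client not in allowed:
        raise PermissionError(f"{client} may not mount {path}")
    return FileHandle("ufs", "disk0", INODES[path], "rw")


root_fh = mount("clientA", "/export/home")
```

The client keeps `root_fh` and presents it in later directory and file requests, so the server does not need to remember that the mount happened.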


Second NFS Protocol

The second NFS protocol is for directory and file access
• Clients send messages to servers to manipulate directories, read and write files
• Clients access file attributes
• NFS ‘read’ operation
– Lookup operation – returns file handle
– Read operation – uses file handle to read the file
– Advantage: stateless server – open() and close() are intentionally omitted

Basic NFS Operations

Operations
• lookup(dirfh, name) returns (fh, attributes)
– Use mount protocol for root directory
• create(dirfh, name, attr) returns (newfh, attr)
• remove(dirfh, name) returns (status)
• read(fh, offset, count) returns (attr, data)
• write(fh, offset, count, data) returns attr
• getattr(fh) returns attr
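To make the signatures above concrete, here is a toy in-memory server that follows them. It is a sketch only: `ToyNFSServer`, its integer file handles, and the attribute dictionaries are assumptions for illustration, and no RPC or stable storage is involved.

```python
import itertools


class ToyNFSServer:
    def __init__(self):
        self._next = itertools.count(2)
        self.root_fh = 1              # handed out by the mount protocol
        self.dirs = {1: {}}           # directory fh -> {name: fh}
        self.files = {}               # file fh -> bytearray

    def getattr(self, fh):
        if fh in self.dirs:
            return {"type": "dir", "size": len(self.dirs[fh])}
        return {"type": "file", "size": len(self.files[fh])}

    def lookup(self, dirfh, name):
        fh = self.dirs[dirfh][name]
        return fh, self.getattr(fh)

    def create(self, dirfh, name, attr=None):
        newfh = next(self._next)
        self.files[newfh] = bytearray()
        self.dirs[dirfh][name] = newfh
        return newfh, self.getattr(newfh)

    def remove(self, dirfh, name):
        fh = self.dirs[dirfh].pop(name)
        self.files.pop(fh, None)
        return "ok"

    def read(self, fh, offset, count):
        return self.getattr(fh), bytes(self.files[fh][offset:offset + count])

    def write(self, fh, offset, count, data):
        buf = self.files[fh]
        if len(buf) < offset:                      # zero-fill any gap
            buf.extend(b"\0" * (offset - len(buf)))
        buf[offset:offset + count] = data[:count]
        return self.getattr(fh)


nfs = ToyNFSServer()
fh, _ = nfs.create(nfs.root_fh, "foo")
nfs.write(fh, 0, 5, b"hello")
found, attrs = nfs.lookup(nfs.root_fh, "foo")
_, data = nfs.read(found, 1, 3)
status = nfs.remove(nfs.root_fh, "foo")
```

Every call carries the file handle and, for reads and writes, the offset, so the server needs no open-file table.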

Three Major Layers of NFS Architecture

UNIX file-system interface (based on the open, read, write, and close calls, and file descriptors)

Virtual File System (VFS) layer – distinguishes local files from remote ones, and local files are further distinguished according to their file-system types
• The VFS activates file-system-specific operations to handle local requests according to their file-system types
• Calls the NFS protocol procedures for remote requests

NFS service layer – bottom layer of the architecture
• Implements the NFS protocol over RPC

Schematic View of NFS Architecture

VFS

Virtual file system provides a standard interface, using vnodes as file handles.

vnode: network-wide unique (like an inode but for a network)
• A vnode describes either a local or remote file

Path name lookup (past mount point) requires one RPC per name.

Client cache of remote vnodes for remote directory names

Mapping UNIX System Calls to NFS Operations

Unix system call: fd = open(“/dir/foo”)
• Traverse pathname to get filehandle for foo
– dirfh = lookup(rootdirfh, “dir”);
– fh = lookup(dirfh, “foo”);
• Record mapping from fd file descriptor to fh NFS filehandle
• Set initial file offset to 0 for fd
• Return fd file descriptor

Unix system call: read(fd,buffer,bytes)
• Get current file offset for fd
• Map fd to fh NFS filehandle
• Call data = read(fh, offset, bytes) and copy data into buffer
• Increment file offset by bytes

Unix system call: close(fd)
• Free resources associated with fd
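The mapping above can be sketched as a client-side table from file descriptors to (file handle, offset) pairs. `StubServer` and `NFSClient` are hypothetical names; the stub exposes only `lookup` and `read` and uses strings as file handles, and the sketch advances the offset by the number of bytes actually returned.

```python
class StubServer:
    """Hypothetical stand-in exposing only lookup() and read()."""

    ROOT = "root"

    def __init__(self):
        self.tree = {("root", "dir"): "fh-dir", ("fh-dir", "foo"): "fh-foo"}
        self.data = {"fh-foo": b"distributed file systems"}

    def lookup(self, dirfh, name):
        return self.tree[(dirfh, name)]

    def read(self, fh, offset, count):
        return self.data[fh][offset:offset + count]


class NFSClient:
    def __init__(self, server):
        self.server = server
        self.fd_table = {}     # fd -> [NFS file handle, current offset]
        self.next_fd = 3

    def open(self, path):
        fh = self.server.ROOT
        for component in path.strip("/").split("/"):
            fh = self.server.lookup(fh, component)   # one lookup per name
        fd = self.next_fd
        self.next_fd += 1
        self.fd_table[fd] = [fh, 0]                  # initial offset is 0
        return fd

    def read(self, fd, nbytes):
        fh, offset = self.fd_table[fd]
        data = self.server.read(fh, offset, nbytes)
        self.fd_table[fd][1] = offset + len(data)    # advance the local offset
        return data

    def close(self, fd):
        del self.fd_table[fd]                        # purely local, no request sent


client = NFSClient(StubServer())
fd = client.open("/dir/foo")
part1 = client.read(fd, 11)
part2 = client.read(fd, 5)
client.close(fd)
```

Note that `close` never contacts the server: all per-open state lives in the client's `fd_table`.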


NFS Layer Structure

Client-side Caching

Caching needed to improve performance
• Reads: Check local cache before going to server
• Writes: Only periodically write back data to server
• Avoid contacting server
– Avoid slow communication over network
– Server becomes scalability bottleneck with more clients

Two client caches
• data blocks
• attributes (metadata)

Case Study: AFS

A distributed computing environment (Andrew) under development since 1983 at Carnegie Mellon University, purchased by IBM and released as Transarc DFS, now open sourced as OpenAFS

AFS tries to solve complex issues such as uniform name space, location-independent file sharing, client-side caching (with cache consistency), secure authentication (via Kerberos)
• Also includes server-side caching (via replicas), high availability
• Can span 5,000 workstations
• Consists of dedicated file servers

AFS Overview

• AFS is based on the upload/download model
– Opening a file causes it to be cached, in its entirety, on the local disk
– Client does as much as possible locally and interacts as little as possible with the server
• AFS is stateful
– The server keeps track of which files are opened by which clients
• AFS provides location independence, as well as location transparency
– The physical storage location of the file can be changed without having to change the path of the file
– Has a single name space
• AFS provides callback promise
– Inform all clients with open files about any updates made to that file by a client

AFS

Client's view

AFS – Andrew File System
• workstations grouped into cells for administrative purposes
• note position of Venus and Vice

/afs

File Sharing

AFS enables users to share remote files as easily as local files. To access a file on a remote machine in AFS, you simply specify the file's pathname.

AFS users can see and share all the files under the /afs root directory, given the appropriate privileges. An AFS user who has the necessary privileges can access a file in any AFS cell, simply by specifying the file's pathname. File sharing in AFS is not restricted by geographical distances or operating system differences

Another Client View

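[Figure: the workstation's root directory (/) with entries tmp, bin, ..., vmunix, and cmu, marking which parts are local, which are shared, and where symbolic links are used]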

ANDREW File Operations

Andrew caches entire files from servers onto local disk
• A client workstation interacts with Vice servers only during opening and closing of files

Venus – caches files from Vice when they are opened, and stores modified copies of files back when they are closed

Reading and writing bytes of a file are done by the kernel without Venus intervention on the cached copy

Venus caches contents of directories and symbolic links, for path-name translation

Exceptions to the caching policy are modifications to directories, which are made directly on the server responsible for that directory

AFS Naming

Clients are presented with a partitioned space of file names: a local name space and a shared name space

Dedicated servers, called Vice, present the shared name space to the clients as a homogeneous, identical, and location transparent file hierarchy

The local name space is the root file system of a workstation, from which the shared name space descends

Workstations run the Virtue protocol to communicate with Vice, and are required to have local disks where they store their local name space

Servers collectively are responsible for the storage and management of the shared name space

ANDREW Shared Name Space

The storage disks in a computer are divided into sections called partitions. AFS further divides partitions into units called volumes

A fid identifies a Vice file or directory. A fid is 96 bits long and has three equal-length components:
• volume number
• vnode number – index into an array containing the inodes of files in a single volume
• uniquifier – ensures that file identifiers are not reused

Fids are location transparent; therefore, file movements from server to server do not invalidate cached directory contents

Location information is kept on a volume basis, and the information is replicated on each server
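Since a fid is 96 bits with three equal-length components, each component is 32 bits wide. A minimal sketch of packing and unpacking such an identifier follows; the big-endian byte order and the function names `pack_fid` and `unpack_fid` are assumptions for illustration, not the actual AFS encoding.

```python
import struct

# Three unsigned 32-bit fields; big-endian order is an assumption.
FID_FORMAT = ">III"


def pack_fid(volume: int, vnode: int, uniquifier: int) -> bytes:
    return struct.pack(FID_FORMAT, volume, vnode, uniquifier)


def unpack_fid(raw: bytes) -> tuple:
    return struct.unpack(FID_FORMAT, raw)


fid = pack_fid(7, 42, 1)
fid_bits = len(fid) * 8
fields = unpack_fid(fid)
```

Nothing in the fid names a server, which is what lets a volume move between servers without invalidating cached directory contents.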

AFS caching

Write-on-close: writes are propagated to the server side copy only when the client closes the local copy of the file

The client assumes that its cache is up to date, unless it receives a callback message from the server saying otherwise
• On file open, if the client has received a callback on the file, it must fetch a new copy; otherwise it uses its locally cached copy
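Write-on-close combined with callbacks can be sketched as follows. The class names `ViceServer` and `VenusClient` borrow the component names used in these slides, but the methods, the `promises` table, and the in-memory storage are assumptions made for illustration only.

```python
class ViceServer:
    def __init__(self, files):
        self.files = dict(files)     # name -> bytes (master copies)
        self.promises = {}           # name -> clients holding a callback promise

    def fetch(self, name, client):
        self.promises.setdefault(name, set()).add(client)
        return self.files[name]

    def store(self, name, contents, writer):
        self.files[name] = contents
        # Break the promise held by every other caching client.
        for client in self.promises.get(name, set()) - {writer}:
            client.break_callback(name)
        self.promises[name] = {writer}


class VenusClient:
    def __init__(self, server):
        self.server = server
        self.cache = {}              # name -> whole-file copy
        self.valid = set()           # names with no callback received
        self.dirty = set()           # names modified locally

    def break_callback(self, name):
        self.valid.discard(name)

    def open(self, name):
        if name not in self.valid:   # callback received, or never fetched
            self.cache[name] = self.server.fetch(name, self)
            self.valid.add(name)
        return self.cache[name]

    def write(self, name, contents):
        self.cache[name] = contents  # local copy only
        self.dirty.add(name)

    def close(self, name):
        if name in self.dirty:       # write-on-close
            self.server.store(name, self.cache[name], self)
            self.dirty.discard(name)


server = ViceServer({"f": b"old"})
a, b = VenusClient(server), VenusClient(server)
a.open("f")
b.open("f")
a.write("f", b"new")
master_before_close = server.files["f"]
seen_before_close = b.open("f")
a.close("f")
seen_after_close = b.open("f")
```

Until `a` closes the file, neither the server nor `b` sees the new contents; after the close, `b`'s next open refetches because its callback was broken.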

ANDREW Implementation

Client processes are interfaced to a UNIX kernel with the usual set of system calls

Venus carries out path-name translation component by component

The UNIX file system is used as a low-level storage system for both servers and clients
• The client cache is a local directory on the workstation’s disk

Both Venus and server processes access UNIX files directly by their inodes to avoid the expensive path name-to-inode translation routine

System call interception in AFS

[Figure: a workstation containing a user program, Venus, the UNIX kernel, the UNIX file system, and the local disk; arrows labeled “UNIX file system calls” and “Non-local file operations”]

AFS Cache Implementation

Venus manages two separate caches:
• one for status
• one for data

LRU algorithm used to keep each of them bounded in size

The status cache is kept in virtual memory to allow rapid servicing of stat (file status returning) system calls

The data cache is resident on the local disk, but the UNIX I/O buffering mechanism does some caching of the disk blocks in memory that is transparent to Venus
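A bounded LRU cache of the kind mentioned above can be sketched with the standard library's `OrderedDict`. The `LRUCache` class and its capacity counted in entries are assumptions for illustration; this is not how Venus is actually implemented.

```python
from collections import OrderedDict


class LRUCache:
    def __init__(self, capacity):
        self.capacity = capacity
        self.entries = OrderedDict()   # least recently used entry comes first

    def get(self, key):
        if key not in self.entries:
            return None
        self.entries.move_to_end(key)  # mark as most recently used
        return self.entries[key]

    def put(self, key, value):
        self.entries[key] = value
        self.entries.move_to_end(key)
        if len(self.entries) > self.capacity:
            self.entries.popitem(last=False)   # evict the least recently used


status_cache = LRUCache(2)
status_cache.put("a", 1)
status_cache.put("b", 2)
status_cache.get("a")        # "a" is now more recent than "b"
status_cache.put("c", 3)     # exceeds capacity, so "b" is evicted
remaining = list(status_cache.entries)
```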

AFS Commands

AFS commands are grouped into three categories:

File server commands (fs)
– list AFS server information
– set and list ACLs (access control lists)

Protection commands (pts)
– create and manage (ACL) groups

Authentication commands
– klog, unlog, kpasswd, tokens