02 mc will v6 operation and support system(by song yongliang&zhao chunping)

Decoupling Sparsity and Smoothness in the Discrete Hierarchical Dirichlet Process Chong Wang and...

-

Upload

annabella-tucker -

Category

Documents

-

view

224 -

download

0

Transcript of Decoupling Sparsity and Smoothness in the Discrete Hierarchical Dirichlet Process Chong Wang and...

Decoupling Sparsity and Smoothness in the Discrete Hierarchical Dirichlet Process

Chong Wang and David M. BleiNIPS 2009

Discussion led by Chunping Wang

ECE, Duke University

March 26, 2010

Outline

• Motivations

• LDA and HDP-LDA

• Sparse Topic Models

• Inference Using Collapsed Gibbs sampling

• Experiments

• Conclusions

1/16

Motivations

2/16

• Topics modeling with the “bag of words” assumption

• An extension of the HDP-LDA model

• In the LDA and the HDP-LDA models, the topics are drawn from an exchangeable Dirichlet distribution with a scale parameter . As approaches zero, topics will be

o sparse: most probability mass on only a few terms

o less smooth: empirical counts dominant

• Goal: to decouple sparsity and smoothness so that these two properties can be achieved at the same time.

• How: a Bernoulli variable for each term and each topic is introduced.

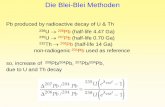

LDA and HDP-LDA

3/16

wzθα β

ND K

LDA

u

)(Dir~ αθd

)(Mult~ ddiz θ

)(Mult~dizdiw β

)(Dir~ uβ k

wzθα β

ND

HDP-LDA

u),(DP~ αθ d

)(GEM~ α

)(Dir~ uβ k

)(Mult~ ddiz θ

)(Mult~dizdiw β

topic : k

document : d

word : i

topic : k

document : d

word : i

Nonparametric form of LDA, with the

number of topics unbounded

Base measure

weights

Sparse Topic Models

4/16

)(Dir~ uβ

The size of the vocabulary is V

)(Dir~ bβ

Defined on a V-1-simplex Defined on a sub-simplex specified by b

b : a V-length binary vector composed of V Bernoulli variables

)(Bern~ kkvb ),(Beta~ srk one selection proportion for each topic

Sparsity: the pattern of ones in , controlled by

Smoothness: enforced over terms with non-zero ’s through

kkb

kvb Decoupled!

Sparse Topic Models

5/16

Inference Using Collapsed Gibbs sampling

6/16

Inference Using Collapsed Gibbs sampling

6/16

As in the HDP-LDA

Topic proportions and topic distributions are integrated out. θ β

Inference Using Collapsed Gibbs sampling

6/16

Topic proportions and topic distributions are integrated out.

The direct-assignment method based on the Chinese restaurant franchise (CRF) is used for and an augmented variable, table counts

θ β

As in the HDP-LDA

α,z m

Inference Using Collapsed Gibbs sampling

7/16

Notation:

: # of customers (words) in restaurant d (document) eating dish k (topic)

: # of tables in restaurant d serving dish k

: marginal counts represented with dots

K, u: current # of topics and new topic index, respectively

: # of times that term v has been assigned to topic k

: # of times that all the terms have been assigned to topic k

conditional density of under the topic k given all data except

dkn

dkm

..... ,,, mmmn kdd

)(vkn

(.)kn

)},'',:,{|()|( '''''' diidkzzwvwpvwf idididdidiw

kdi

diw diw

Inference Using Collapsed Gibbs sampling

8/16

Recall the direct-assignment sampling method for the HDP-LDA

Sampling topic assignments

if a new topic is sampled, then sample , and let and and

Sampling stick length

Sampling table counts

),,,(Dir~| .1. Kmm mα

ukwf

kwfnkzp

diw

uu

diw

kkdidkdidi

di

di

if)(

usedpreviouslyif)()(),,|( ,

αmz

newk

mkdk

dkk

kdkdk mns

nmmp ))(,(

)(

)(),,|(

αmz

Inference Using Collapsed Gibbs sampling

8/16

Recall the direct-assignment sampling method for HDP-LDA

Sampling topic assignments

ukwf

kwfnkzp

diw

uu

diw

kkdidkdidi

di

di

if)(

usedpreviouslyif)()(),,|( ,

αmz

)(

,)( vdikdi

wk nvwf difor HDP-LDA

kvv

dikkdiw

k bnvwf di )()|( )(,

bfor sparse TM

Instead, the authors integrate out for faster convergence. kb

k

dikidididkkkdikkdi

wk diidkzzwpvwpdvwf

b

bβββ )},'',:,{|,()|()|( ''''''

Since there are total possible , this is the central computational challenge for the sparse TM.

kbV2

straightforward

Inference Using Collapsed Gibbs sampling

9/16

where

define vocabularyset of terms that have word assignments in topic k

kBv

kvbX

This conditional probability depends on the selector proportions.

Inference Using Collapsed Gibbs sampling

10/16

Inference Using Collapsed Gibbs sampling

10/16

Inference Using Collapsed Gibbs sampling

11/16

Sampling Bernoulli parameter ( using as an auxiliary variable)

Sampling hyper-parameters o : with Gamma(1,1) priorso : Metropolis-Hastings using symmetric Gaussian proposal

Estimate topic distributions from any single sample of z and b

kb

define set of terms with an “on” b

o sample conditioned on ;o sample conditioned on .

kb

kbk

k

,

β

sparsity

smoothness on the selected terms

Experiments

12/16

arXiv: online research abstracts, D = 2500, V = 2873

Nematode Biology: research abstracts, D = 2500, V = 2944

NIPS: NIPS articles between 1988-1999, V = 5005. 20% of words for each paper are used.

Conf. abstracts: abstracts from CIKM, ICML, KDD, NIPS, SIGIR and WWW, between 2005-2008, V = 3733.

Four datasets:

Two predictive quantities:

where the topic complexity kk Bcomplexity

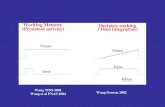

Experiments

13/16

better perplexity, simpler models

larger : smoother

less topics

similar # of terms

Experiments

14/16

Experiments

15/16

small (<0.01)

Experiments

15/16

small (<0.01)

lack of smoothness

( )

(.)ˆ

vk

kvk

n

n V

Experiments

15/16

small (<0.01)

Need more topics to explain all kinds of patterns of empirical word counts

lack of smoothness

( )

(.)ˆ

vk

kvk

n

n V

Experiments

15/16

Infrequent words populate “noise” topics.

small (<0.01)

Need more topics to explain all kinds of patterns of empirical word counts

lack of smoothness

Vn

n

k

vk

kv

(.)

)(

Conclusions

16/16

A new topic model in the HDP-LDA framework, based on the “bag of words” assumption;

Main contributions:

• Decoupling the control of sparsity and smoothness by introducing binary selectors for term assignments in each topic;

• Developing a collapsed Gibbs sampler in the HDP-LDA framework.

Held out performance is better than the HDP-LDA.

![Exploiting Potential Citation Papers in Scholarly Paper ...kanmy/talks/JCDL13-Kaz-Ver2.pdf · Scholarly Paper Recommendation [Wang and Blei., KDD’11]: Collaborative topic regression](https://static.fdocuments.in/doc/165x107/6006b895502554211a65849e/exploiting-potential-citation-papers-in-scholarly-paper-kanmytalksjcdl13-kaz-ver2pdf.jpg)