CS 378 Programming for Performance Single-Thread Performance: Pipelining


CS 378 Programming for Performance

Single-Thread Performance: Pipelining

Adapted from Siddhartha Chatterjee

Spring 2009


Pipelining: It’s Natural!

Laundry example: Ann, Brian, Cathy, and Dave (A, B, C, D) each have one load of clothes to wash, dry, and fold.

• Washer takes 30 minutes
• Dryer takes 40 minutes
• “Folder” takes 20 minutes


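Sequential Laundry

Sequential laundry takes 6 hours for 4 loads: each load needs 30 + 40 + 20 = 90 minutes, and 4 × 90 minutes = 360 minutes. If they learned pipelining, how long would laundry take?

[Figure: task order (loads A, B, C, D) versus time from 6 PM to midnight; each load runs its 30, 40, and 20 minute steps before the next load starts.]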


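Pipelined Laundry: Start work ASAP

Pipelined laundry takes 3.5 hours for 4 loads: 30 + 4 × 40 + 20 = 210 minutes, because the 40-minute dryer is the bottleneck.

[Figure: task order (loads A, B, C, D) versus time from 6 PM to midnight; the timeline reads 30, 40, 40, 40, 40, 20 minutes.]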


Pipelining Lessons

[Figure: the pipelined laundry timeline for loads A, B, C, D starting at 6 PM (30, 40, 40, 40, 40, 20 minutes).]

• Pipelining doesn’t help the latency of a single task; it helps the throughput of the entire workload.
• Pipeline rate is limited by the slowest pipeline stage.
• Multiple tasks operate simultaneously.
• Potential speedup = number of pipe stages.
• Unbalanced lengths of pipe stages reduce speedup.
• Time to “fill” the pipeline and time to “drain” it reduce speedup.
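The laundry numbers can be reproduced with a short Python sketch. It assumes the “start work ASAP” rule: a load begins a stage as soon as it has finished the previous stage and the load ahead of it has finished this stage. The function names and the balanced 30/30/30 variant are illustrative, not from the slides.

```python
def sequential_time(stage_minutes, n_loads):
    """Each load runs through every stage before the next load starts."""
    return n_loads * sum(stage_minutes)


def pipelined_time(stage_minutes, n_loads):
    """Start work ASAP: a load enters a stage once it has left the previous
    stage and the load ahead of it has finished this stage."""
    finish = [0] * len(stage_minutes)  # when the previous load finished each stage
    for _ in range(n_loads):
        ready = 0  # when this load is free to begin its next stage
        for s, minutes in enumerate(stage_minutes):
            start = max(ready, finish[s])
            ready = start + minutes
            finish[s] = ready
    return finish[-1]


stages = [30, 40, 20]  # washer, dryer, "folder" (minutes)
seq = sequential_time(stages, 4)
pipe = pipelined_time(stages, 4)
print(seq / 60, pipe / 60)  # hours
print(f"speedup {seq / pipe:.2f} (ideal: {len(stages)})")
print(pipelined_time([30, 30, 30], 4))  # hypothetical balanced stages
```

The unbalanced stages cap the speedup well below 3: after the first wash, the dryer sets the pace. Even the hypothetical balanced version reaches only 360 / 180 = 2, because of fill and drain time.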


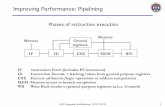

The Five Stages of a Load Instruction

         Cycle 1  Cycle 2  Cycle 3  Cycle 4  Cycle 5
Load     Ifetch   Reg/Dec  Exec     Mem      WrB

• Ifetch: Instruction fetch. Fetch the instruction from the instruction memory.
• Reg/Dec: Instruction decode and register read.
• Exec: Calculate the memory address.
• Mem: Read the data from the data memory.
• WrB: Write the data back to the register file.


Key Ideas Behind Instruction Pipelining

Instructions start executing before previous instructions complete execution.

The load instruction has 5 stages:
• Five independent functional units work on each stage.
• Each functional unit is used only once per instruction!

A second load can start its Ifetch as soon as the first load finishes its Ifetch stage.

Each load still takes five cycles to complete:
• The latency of a single load is still 5 cycles.

The throughput is much higher:
• CPI approaches 1.
• Cycle time is about 1/5 of the cycle time of the single-cycle implementation (assuming the five stages are balanced).


Pipelining the Load Instruction

The five independent pipeline stages are:
• Read next instruction: the Ifetch stage
• Decode instruction and fetch register values: the Reg/Dec stage
• Execute the operation: the Exec stage
• Access data memory: the Mem stage
• Write data to destination register: the WrB stage

         Cycle 1  Cycle 2  Cycle 3  Cycle 4  Cycle 5  Cycle 6  Cycle 7
1st lw   Ifetch   Reg/Dec  Exec     Mem      WrB
2nd lw            Ifetch   Reg/Dec  Exec     Mem      WrB
3rd lw                     Ifetch   Reg/Dec  Exec     Mem      WrB

One instruction enters the pipeline every cycle, and once the pipeline is full, one instruction comes out of the pipeline (completed) every cycle. The “effective” CPI here is 7/3 (7 cycles for 3 loads), and it tends to 1 as the number of instructions grows.
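The chart follows a simple rule: in an ideal pipeline with no stalls, instruction i occupies stage s in cycle i + s + 1 (both counted from 0). A minimal Python sketch, assuming that stall-free model, prints the same chart and the cycle count; the helper names are illustrative.

```python
STAGES = ["Ifetch", "Reg/Dec", "Exec", "Mem", "WrB"]


def ideal_chart(names, stages=STAGES, width=9):
    """Rows of a stall-free space-time chart: instruction i (0-based)
    is in stage s during cycle i + s + 1."""
    rows = []
    for i, name in enumerate(names):
        cells = [""] * i + list(stages)  # i empty cycles, then one stage per cycle
        rows.append(name.ljust(width) + "".join(c.ljust(width) for c in cells).rstrip())
    return rows


def total_cycles(n_instructions, n_stages=len(STAGES)):
    # The last instruction starts in cycle N and needs n stages: N + n - 1 cycles.
    return n_instructions + n_stages - 1


for row in ideal_chart(["1st lw", "2nd lw", "3rd lw"]):
    print(row)
cycles = total_cycles(3)
print(f"{cycles} cycles for 3 loads, effective CPI = {cycles}/3")
```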


Single Cycle vs. Multiple Cycle vs. Pipelined

Single Cycle Implementation:
• Cycle 1: Load. Cycle 2: Store. The clock cycle is long enough for a load, so the part of Cycle 2 that the Store does not need is waste.

Multiple Cycle Implementation:
• Load: Ifetch, Reg, Exec, Mem, Wr (Cycles 1 to 5)
• Store: Ifetch, Reg, Exec, Mem (Cycles 6 to 9)
• R-type: Ifetch (Cycle 10)

Pipelined Implementation:
• Load: Ifetch, Reg, Exec, Mem, Wr (Cycles 1 to 5)
• Store: Ifetch, Reg, Exec, Mem, Wr (Cycles 2 to 6)
• R-type: Ifetch, Reg, Exec, Mem, Wr (Cycles 3 to 7)
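To put rough numbers on this comparison, here is a Python sketch. It rests on assumptions that are ours, not the slide’s: every step takes one time unit, so the single-cycle clock must be 5 units long to fit a load; the multicycle step counts are load 5 and store 4 as drawn, and R-type 4 (assumed, since only its Ifetch is shown); the pipeline has no hazards.

```python
# Hypothetical step counts per instruction class in the multiple-cycle design.
MULTICYCLE_STEPS = {"load": 5, "store": 4, "rtype": 4}  # R-type count is assumed
PIPE_DEPTH = 5  # Ifetch, Reg, Exec, Mem, Wr
STEP = 1        # assumed time per step (arbitrary units)


def single_cycle_time(program):
    # Every instruction takes one long cycle sized for the slowest class (load).
    return len(program) * max(MULTICYCLE_STEPS.values()) * STEP


def multicycle_time(program):
    # One short cycle per step; instructions do not overlap.
    return sum(MULTICYCLE_STEPS[op] for op in program) * STEP


def pipelined_time(program):
    # One short cycle per stage; a new instruction starts every cycle.
    return (PIPE_DEPTH + len(program) - 1) * STEP


program = ["load", "store", "rtype"]
for f in (single_cycle_time, multicycle_time, pipelined_time):
    print(f.__name__, f(program))
```

Under these assumptions the load, store, R-type sequence takes 15, 13, and 7 time units respectively.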


Basics of Pipelining

• Time: discrete time steps, represented as 1, 2, 3, …
• Space: pipe stages or segments (things that do processing), represented as F, D, X, M, W (for the DLX pipeline)
• Operands: instructions or data items, the things that flow through, and are processed by, the pipeline, represented as a, b, c, …

In drawing pipelines, we conceal the obvious fact that each operand undergoes some changes in each pipe stage.


Basic Terms

• Filling a pipeline
• Flushing or draining a pipeline
• Stage or segment delay: each stage may have a different stage delay
• Beat time (= max stage delay)
• Number of stages
• End-to-end latency = number of stages × beat time
• Stages are separated by latches (registers)


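Speedup & Throughput of a Linear Pipeline

Number of pipe stages: $n$; number of operands: $N$; stage delay: $t$.

Execution time without pipelining: $T_{seq} = nNt$

Execution time with pipelining: $T_p = (n + N - 1)\,t$

Speedup of pipeline: $S = \frac{T_{seq}}{T_p} = \frac{nN}{n + N - 1}$, with $1 \le S \le \min(n, N)$

Throughput: $\frac{N}{T_p} = \frac{N}{(n + N - 1)\,t}$

CPI with pipelining: $\frac{n + N - 1}{N} = 1 + \frac{n - 1}{N}$, which tends to 1 as $N$ grows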
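These formulas are easy to check numerically. The Python sketch below mirrors the symbols n, N, and t and uses exact fractions; with n = 5 stages and N = 3 operands it reproduces the effective CPI of 7/3 from the load example. The function names are illustrative.

```python
from fractions import Fraction


def t_seq(n, N, t):
    """Execution time without pipelining: each operand passes all n stages alone."""
    return n * N * t


def t_pipe(n, N, t):
    """Execution time with pipelining: n - 1 beats to fill, then one result per beat."""
    return (n + N - 1) * t


def speedup(n, N):
    return Fraction(n * N, n + N - 1)


def throughput(n, N, t):
    return Fraction(N) / t_pipe(n, N, t)


def cpi(n, N):
    return Fraction(n + N - 1, N)


n, N = 5, 3
print(cpi(n, N), speedup(n, N))  # 7/3 15/7
for big_N in (10, 100, 10_000):
    # Speedup approaches n and CPI approaches 1 as N grows.
    print(big_N, float(speedup(n, big_N)), float(cpi(n, big_N)))
```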


A More Extensive Pipelining Example

           Cycle 1  Cycle 2  Cycle 3  Cycle 4  Cycle 5  Cycle 6  Cycle 7  Cycle 8
0: Load    Ifetch   Reg/Dec  Exec     Mem      WrB
4: R-type           Ifetch   Reg/Dec  Exec     Mem      WrB
8: Store                     Ifetch   Reg/Dec  Exec     Mem      WrB
12: Beq                               Ifetch   Reg/Dec  Exec     Mem      WrB

The numbers 0, 4, 8, and 12 are instruction addresses; the Beq’s target is 1000.

• End of Cycle 4: Load’s Mem, R-type’s Exec, Store’s Reg, Beq’s Ifetch
• End of Cycle 5: Load’s WrB, R-type’s Mem, Store’s Exec, Beq’s Reg
• End of Cycle 6: R-type’s WrB, Store’s Mem, Beq’s Exec
• End of Cycle 7: Store’s WrB, Beq’s Mem


Data Hazard: Setup

For an instruction u:
• D(u), the domain of instruction u: the set of all memory locations, registers (including implicit ones), flags, condition codes, etc. that may be read by instruction u.
• R(u), the range of instruction u: the set of all memory locations, registers (including implicit ones), flags, condition codes, etc. that may be written by instruction u.

For two instructions u and v, u < v is a relation meaning that instruction u precedes instruction v in the original program order (i.e., on an unpipelined machine).
• The relation < is irreflexive, anti-symmetric, and transitive.


Data Hazard: Definition

Given two instructions u and v such that u < v, there is a data hazard between them if any of the following conditions holds:
• $R(u) \cap D(v) \neq \emptyset$: Read-After-Write hazard (RAW)
• $D(u) \cap R(v) \neq \emptyset$: Write-After-Read hazard (WAR)
• $R(u) \cap R(v) \neq \emptyset$: Write-After-Write hazard (WAW)

The existence of one of these conditions means that changing the order in which the instructions read and write their operands, relative to the order seen when executing the instructions sequentially on an unpipelined machine, could violate the intended semantics.


Data Hazard: Effect on Compiler

Hazard  Intended semantics                Semantic violation
RAW     add r1, r2, r3; add r4, r1, r2    add r4, r1, r2; add r1, r2, r3
WAR     add r1, r2, r3; add r2, r3, r4    add r2, r3, r4; add r1, r2, r3
WAW     add r1, r2, r3; add r1, r4, r5    add r1, r4, r5; add r1, r2, r3
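The three conditions translate directly into set intersections. Below is a minimal Python sketch, assuming each instruction is described only by the registers it reads (D) and writes (R); the `Instr` class and the `add` helper are hypothetical modelling aids, and flags, condition codes, and memory are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Instr:
    text: str
    reads: frozenset   # D(u): everything the instruction may read
    writes: frozenset  # R(u): everything the instruction may write


def add(rd, rs, rt):
    """Hypothetical helper modelling 'add rd, rs, rt' (registers only)."""
    return Instr(f"add {rd}, {rs}, {rt}", frozenset({rs, rt}), frozenset({rd}))


def hazards(u, v):
    """Data hazards between u and v, assuming u < v (u comes first in program order)."""
    found = []
    if u.writes & v.reads:
        found.append("RAW")
    if u.reads & v.writes:
        found.append("WAR")
    if u.writes & v.writes:
        found.append("WAW")
    return found


print(hazards(add("r1", "r2", "r3"), add("r4", "r1", "r2")))  # ['RAW']
print(hazards(add("r1", "r2", "r3"), add("r2", "r3", "r4")))  # ['WAR']
print(hazards(add("r1", "r2", "r3"), add("r1", "r4", "r5")))  # ['WAW']
```

Each pair in the table’s “Intended semantics” column reports exactly one hazard, which is why swapping the two instructions changes the result.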


Why Data Hazards Occur

Pipelining changes the relative timing of instructions:
• Reads and writes occur at fixed positions in the pipeline.
• So, if two instructions are “too close” (a function of pipeline structure), the order of reads and writes could change and produce incorrect values.

XOR R2, R2, R1
XOR R1, R1, R2
XOR R2, R2, R1

This instruction sequence exchanges the values in R1 and R2.
• On unpipelined DLX, back-to-back execution of the sequence produces correct results.
• On the current pipelined DLX, initiating the sequence in consecutive cycles produces incorrect results: reads are early and writes are late, so RAW hazards would be violated.
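A toy Python model makes the failure concrete. It assumes the five-stage timing used in these slides with no interlocks and no forwarding: instruction i (counted from 0) reads its operands in D during cycle i + 2 and writes its result in W during cycle i + 5, and a write is visible to a read in the same cycle (split-phase register file). The function names and register values are illustrative.

```python
SWAP = [("R2", "R2", "R1"),   # XOR R2, R2, R1
        ("R1", "R1", "R2"),   # XOR R1, R1, R2
        ("R2", "R2", "R1")]   # XOR R2, R2, R1


def run_unpipelined(prog, regs):
    regs = dict(regs)
    for dest, a, b in prog:
        regs[dest] = regs[a] ^ regs[b]
    return regs


def run_pipelined_no_interlocks(prog, regs):
    regs = dict(regs)
    events = []
    for i in range(len(prog)):
        events.append((i + 2, 1, i))  # operand read in D
        events.append((i + 5, 0, i))  # result write in W (0 sorts writes first in a cycle)
    results = {}
    for _cycle, is_read, i in sorted(events):
        dest, a, b = prog[i]
        if is_read:
            results[i] = regs[a] ^ regs[b]  # the ALU result is fixed by what D read
        else:
            regs[dest] = results[i]
    return regs


start = {"R1": 5, "R2": 3}
print(run_unpipelined(SWAP, start))              # {'R1': 3, 'R2': 5}
print(run_pipelined_no_interlocks(SWAP, start))  # {'R1': 6, 'R2': 6}
```

All three reads (cycles 2 to 4) happen before the first write (cycle 5), so every XOR sees the original R1 and R2 and both registers end up holding R1 XOR R2 instead of being swapped.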


Data Dependence and Hazards

A true (value, flow) dependence between instructions u and v means u produces a result value that v uses.
• This is a producer-consumer relationship.
• This is a dependence based on values, not on the names of the containers of the values.

Every true dependence is a RAW hazard; not every RAW hazard is a true dependence.
• Any RAW hazard that cannot be removed by renaming is a true dependence.

Original program:
1: A = B + C
2: A = D + E
3: G = A + H
True dependence: (2,3). RAW hazards: (1,3), (2,3).

Renamed program:
1: X = B + C
2: A = D + E
3: G = A + H
True dependence: (2,3). RAW hazard: (2,3).
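The difference between a RAW hazard and a true dependence can be computed mechanically. In the Python sketch below (function names are illustrative), a RAW hazard is any later statement that reads a name an earlier one writes, while a true dependence links each source to its most recent writer only.

```python
# Each statement: (label, destination, sources), e.g. "1: A = B + C".
ORIGINAL = [(1, "A", ("B", "C")), (2, "A", ("D", "E")), (3, "G", ("A", "H"))]
RENAMED = [(1, "X", ("B", "C")), (2, "A", ("D", "E")), (3, "G", ("A", "H"))]


def raw_hazards(prog):
    """(u, v) with u < v where v reads a name that u writes: R(u) ∩ D(v) ≠ ∅."""
    return [(u, v) for i, (u, dst, _) in enumerate(prog)
            for (v, _, srcs) in prog[i + 1:] if dst in srcs]


def true_dependences(prog):
    """(u, v) where v consumes the value produced by u, i.e. u is the most
    recent writer of one of v's sources."""
    deps = []
    for j, (v, _, srcs) in enumerate(prog):
        for src in srcs:
            for u, dst, _ in reversed(prog[:j]):
                if dst == src:
                    deps.append((u, v))
                    break  # earlier writers were overwritten; stop at the latest
    return sorted(set(deps))


for name, prog in (("original", ORIGINAL), ("renamed", RENAMED)):
    print(name, "RAW:", raw_hazards(prog), "true:", true_dependences(prog))
```

Renaming statement 1’s destination to X removes the (1,3) hazard but leaves the true dependence (2,3) untouched, as on the slide.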


More on Hazards

RAW hazards corresponding to value dependences are the most difficult to deal with, since they can never be eliminated.
• The second instruction is waiting for information produced by the first instruction.

WAR and WAW hazards are name dependences.
• Two instructions happen to use the same register (name), although they don’t have to.
• They can often be eliminated by renaming, either in software or hardware.
  - This implies the use of additional resources, hence additional cost.
  - Renaming is not always possible: implicit operands such as the accumulator, PC, or condition codes cannot be renamed.
• These hazards don’t cause problems for the DLX pipeline: relative timing does not change even with pipelined execution, because reads occur early and writes occur late in the pipeline.


Data Hazard: Effect on Pipelining

1: ADD R1, R2, R3
2: SUB R4, R5, R1
3: AND R6, R1, R7
4: OR R8, R1, R9
5: XOR R10, R1, R11

RAW hazards: (1,2), (1,3), (1,4), (1,5)

Pipelined execution:
      1  2  3  4  5  6  7  8  9
ADD   F  D  X  M  W
SUB      F  D  X  M  W
AND         F  D  X  M  W
OR             F  D  X  M  W
XOR               F  D  X  M  W

The same schedule by stage (entries are instruction numbers):
      1  2  3  4  5  6  7  8  9
F     1  2  3  4  5
D     -  1  2  3  4  5
X     -  -  1  2  3  4  5
M     -  -  -  1  2  3  4  5
W     -  -  -  -  1  2  3  4  5

Unpipelined execution takes 25 cycles: each instruction goes through F, D, X, M, W before the next one starts (ADD in cycles 1 to 5, SUB in 6 to 10, AND in 11 to 15, OR in 16 to 20, XOR in 21 to 25).

If executed in the pipeline discussed so far, this data hazard would lead to incorrect execution for the SUB and AND instructions, as they would access the old value of register R1.


Solution: Interlocks and Stalling

Add interlocks (additional control logic) between pipeline stages to detect the hazard condition and stall the instruction in its current pipeline stage until preceding instructions have moved far enough forward in the pipeline to guarantee correct results.
• LW stalls in the D stage waiting for ADD to complete its write to R1 in cycle 5.
• We assume a split-phase clock: the write happens in the first half of cycle 5 and the read in the second half, so LW can move to the X stage in cycle 6.

This causes the following instructions to stall as well (e.g., SW stalls in the F stage because LW is stalled in the D stage; SW then waits in D until LW writes R4 in cycle 8).

A similar effect could be achieved by inserting NOPs between the instructions as spacers.

1: ADD R1, R2, R3
2: LW R4, 0(R1)
3: SW 12(R1), R4

Execution with interlocks (stall as necessary; s = stall cycle):
      1  2  3  4  5  6  7  8  9  10 11
ADD   F  D  X  M  W
LW       F  D  s  s  X  M  W
SW          F  s  s  D  s  s  X  M  W
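The stall pattern can be derived from two rules. In the Python sketch below, the model is our assumption, chosen to match the chart: each stage holds one instruction, so an instruction enters a stage only after the one ahead has left it; and with no forwarding, an instruction may enter X only in the cycle after every source register’s producer has been in W (split-phase register file). The function names are illustrative.

```python
STAGES = "FDXMW"
LAST = len(STAGES) - 1


def schedule_with_interlocks(prog):
    """prog: list of (name, dest, sources). Returns {name: [cycle entering F, D, X, M, W]}."""
    times, last_writer = [], {}
    for name, dest, srcs in prog:
        t = []
        prev = times[-1] if times else None
        for s in range(len(STAGES)):
            earliest = 1 if s == 0 else t[s - 1] + 1
            if prev is not None:
                # The instruction ahead must already have left stage s.
                earliest = max(earliest, prev[s + 1] if s < LAST else prev[LAST] + 1)
            if s == 2:  # leaving D (entering X) needs every source written back
                for r in srcs:
                    if r in last_writer:
                        earliest = max(earliest, last_writer[r][LAST] + 1)
            t.append(earliest)
        times.append(t)
        if dest is not None:
            last_writer[dest] = t
    return {p[0]: t for p, t in zip(prog, times)}


def render(t):
    """Stage letters with an 's' for each stall cycle, starting at the F cycle."""
    cells = []
    for s, stage in enumerate(STAGES):
        cells.append(stage)
        leave = t[s + 1] if s < LAST else t[LAST] + 1
        cells.extend("s" * (leave - t[s] - 1))
    return " ".join(cells)


prog = [("ADD", "R1", ["R2", "R3"]),   # ADD R1, R2, R3
        ("LW", "R4", ["R1"]),          # LW R4, 0(R1)
        ("SW", None, ["R1", "R4"])]    # SW 12(R1), R4
for name, t in schedule_with_interlocks(prog).items():
    print(f"{name:4} starts cycle {t[0]}: {render(t)}")
```

Running it reproduces the three rows above, ending with SW starting in cycle 3 as F s s D s s X M W.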


Optimization: Value Forwarding

There is slack between how soon a value is actually available and how late it is actually required in the pipeline.
• The result of an R-type instruction is available at the end of the X stage.
• The operand of a dependent R-type instruction is not needed until the beginning of the X stage.

Communication of values among instructions happens through the register file:
• Globally known names of containers of values.
• Accessed at fixed stages of the pipeline (read in D, written in W).

Forwarding (also called bypassing or short-circuiting) corresponds to establishing a direct path between the producer of a value and its consumer, bypassing the container.
• Allows us to exploit the slack.
• Requires additional resources (forwarding paths and a controller).


Example of Forwarding

1: ADD R1, R2, R3
2: LW R4, 0(R1)
3: SW 12(R1), R4

Execution with interlocks (stall as necessary):
      1  2  3  4  5  6  7  8  9  10 11
ADD   F  D  X  M  W
LW       F  D  s  s  X  M  W
SW          F  s  s  D  s  s  X  M  W

Execution with forwarding:
      1  2  3  4  5  6  7
ADD   F  D  X  M  W
LW       F  D  X  M  W
SW          F  D  X  M  W

The forwarding schedule by stage (entries are instruction numbers):
      1  2  3  4  5  6  7
F     1  2  3
D     -  1  2  3
X     -  -  1  2  3
M     -  -  -  1  2  3
W     -  -  -  -  1  2  3

With forwarding, the sequence finishes in 7 cycles instead of 11.


Forwarding & Stalling

L1: LW R2, 40(R8)
L2: LW R3, 60(R8)
A: ADD R4, R2, R3
S: SW 60(R8), R4

      1  2  3  4  5  6  7  8  9
L1    F  D  X  M  W
L2       F  D  X  M  W
A           F  D  s  X  M  W
S              F  s  D  X  M  W

The same schedule by stage:
      1   2   3   4   5   6   7   8   9
F     L1  L2  A   S   S
D     -   L1  L2  A   A   S
X     -   -   L1  L2  -   A   S
M     -   -   -   L1  L2  -   A   S
W     -   -   -   -   L1  L2  -   A   S

• Load has a latency of one cycle that cannot be hidden, as seen between L2 and A


Compile-Time Scheduling

A = B + C;
D = E - F;

Original code:
L1: LW Rb, B
L2: LW Rc, C
A: ADD Ra, Rb, Rc
S1: SW A, Ra
L3: LW Re, E
L4: LW Rf, F
S: SUB Rd, Re, Rf
S2: SW D, Rd

Scheduled code:
L1: LW Rb, B
L2: LW Rc, C
L3: LW Re, E
A: ADD Ra, Rb, Rc
L4: LW Rf, F
S1: SW A, Ra
S: SUB Rd, Re, Rf
S2: SW D, Rd

Original code (14 cycles):
   1   2   3   4   5   6   7   8   9   10  11  12  13  14
F  L1  L2  A   S1  S1  L3  L4  S   S2  S2
D  -   L1  L2  A   A   S1  L3  L4  S   S   S2
X  -   -   L1  L2  -   A   S1  L3  L4  -   S   S2
M  -   -   -   L1  L2  -   A   S1  L3  L4  -   S   S2
W  -   -   -   -   L1  L2  -   A   S1  L3  L4  -   S   S2

Scheduled code (12 cycles, no stalls):
   1   2   3   4   5   6   7   8   9   10  11  12
F  L1  L2  L3  A   L4  S1  S   S2
D  -   L1  L2  L3  A   L4  S1  S   S2
X  -   -   L1  L2  L3  A   L4  S1  S   S2
M  -   -   -   L1  L2  L3  A   L4  S1  S   S2
W  -   -   -   -   L1  L2  L3  A   L4  S1  S   S2
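Both cycle counts can be checked with a small scheduler that models forwarding. Its rules are assumptions chosen to match the slides’ charts: a result can be forwarded from the end of its producer’s “ready” stage (X for ALU results, M for loads) to the start of the stage where the consumer needs it (X for ALU operands and addresses, M for store data), and each stage holds one instruction in program order. The helpers `lw`, `alu`, and `sw` are hypothetical and ignore address registers because B, C, E, F, A, and D are absolute addresses here.

```python
F, D, X, M, W = range(5)


def schedule_with_forwarding(prog):
    """prog: list of (name, dest, ready_stage, [(source, needed_stage), ...]).
    Returns one [F, D, X, M, W] list of entry cycles per instruction."""
    times, producer = [], {}
    for _name, dest, ready, srcs in prog:
        prev = times[-1] if times else None
        t = []
        for s in range(5):
            earliest = 1 if s == F else t[s - 1] + 1
            if prev is not None:
                # The instruction ahead must already have left stage s.
                earliest = max(earliest, prev[s + 1] if s < W else prev[W] + 1)
            for reg, needed in srcs:
                if needed == s and reg in producer:
                    p_times, p_ready = producer[reg]
                    earliest = max(earliest, p_times[p_ready] + 1)  # forwarded value
            t.append(earliest)
        times.append(t)
        if dest is not None:
            producer[dest] = (t, ready)
    return times


def lw(name, rd):
    return (name, rd, M, [])                   # LW rd, <absolute address>


def alu(name, rd, rs, rt):
    return (name, rd, X, [(rs, X), (rt, X)])   # ADD/SUB rd, rs, rt


def sw(name, rs):
    return (name, None, None, [(rs, M)])       # SW <absolute address>, rs


original = [lw("L1", "Rb"), lw("L2", "Rc"), alu("A", "Ra", "Rb", "Rc"), sw("S1", "Ra"),
            lw("L3", "Re"), lw("L4", "Rf"), alu("S", "Rd", "Re", "Rf"), sw("S2", "Rd")]
scheduled = [original[i] for i in (0, 1, 4, 2, 5, 3, 6, 7)]  # L1 L2 L3 A L4 S1 S S2

for label, prog in (("original", original), ("scheduled", scheduled)):
    print(label, "finishes in cycle", schedule_with_forwarding(prog)[-1][W])
```

Moving L3 above A and L4 above S1 gives each load an independent instruction to cover its one-cycle load delay, which is why the two stalls disappear.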


Control Hazard

A peculiar kind of RAW hazard involving the program counter (PC):
• The PC is written by the branch instruction.
• The PC is read by the instruction fetch unit (not by another instruction).

Possible misbehavior: the instructions fetched and executed after the branch instruction are not the ones specified by the branch instruction.


More on Control Hazards

Branch delay: the length of the control hazard. What determines branch delay?
• We need to know that we have a branch instruction.
• We need to have the branch target address.
• We need to know the branch outcome.
So, we have to wait until we know all of these quantities.

The DLX pipeline as currently designed:
• computes the branch target address (BTA) in EX,
• computes the branch outcome in EX, and
• changes the PC in MEM.

To reduce branch delay, we need to move these to earlier pipeline stages. We can’t move them earlier than ID (we need to know it’s a branch instruction).


Delayed Branches on DLX

One branch delay slot on the redesigned DLX: always execute the instruction in the branch delay slot (irrespective of the branch outcome).

Question: What instruction do we put in the branch delay slot? (Here T is the fraction of branches that are taken.)
• Fill with NOP (always possible, penalty = 1)
• Fill from before (not always possible, penalty = 0)
• Fill from target (not always possible, penalty = 1 - T)
  - Not possible if the BTA is dynamic
  - Not possible if the instruction at the BTA is another branch
• Fill from fall-through (not always possible, penalty = T)
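The expected penalty for each strategy follows from asking when the delay-slot instruction does useless work. The Python sketch below encodes the four penalties from the list, with T as the fraction of taken branches; the 60% taken rate in the usage lines is a made-up value for illustration.

```python
from fractions import Fraction


def slot_penalty(strategy, taken):
    """Expected wasted cycles per branch with one delay slot.
    taken: fraction of branches that are taken (T)."""
    penalties = {
        "nop": Fraction(1),     # the slot never does useful work
        "before": Fraction(0),  # useful whichever way the branch goes
        "target": 1 - taken,    # wasted only when the branch falls through
        "fall_through": taken,  # wasted only when the branch is taken
    }
    return penalties[strategy]


T = Fraction(3, 5)  # hypothetical: 60% of branches taken
for strategy in ("nop", "before", "target", "fall_through"):
    print(strategy, slot_penalty(strategy, T))
```

With mostly-taken branches, filling from the target wastes fewer slots than filling from the fall-through path; the reverse holds when most branches are not taken.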


Pipelining Multicycle Operations

Assume a five-stage pipeline:
• The third stage (execution) has two functional units, E1 and E2.
• An instruction goes through either E1 or E2, but not both.
• E1 and E2 are not pipelined.
• Stage delay of E1 = 2 cycles; stage delay of E2 = 4 cycles.
• No buffering on the inputs of E1 and E2.
• Stage delay of the other stages = 1 cycle.

Consider an instruction sequence of five instructions:
• Instructions 1, 3, 5 need E1.
• Instructions 2, 4 need E2.


Space-Time Diagram: Multicycle Operations

Cycle      1  2  3  4  5  6  7  8  9  10 11 12 13
IF  (1)    1  2  3  4  5  5  5
ID  (1)       1  2  3  4  4  4  5
E1  (2)          1  1  3  3        5  5
E2  (4)             2  2  2  2  4  4  4  4
MEM (1)                1     3  2        5  4
WB  (1)                   1     3  2        5  4

Entries are instruction numbers; the number in parentheses is the stage delay in cycles.

Out-of-order completion: 3 finishes before 2, and 5 finishes before 4.

Instructions may be delayed after entering the pipeline because of structural hazards:
• Instructions 2 and 4 both want to use the E2 unit at the same time.
• Instruction 4 stalls in the ID unit.
• This causes instruction 5 to stall in the IF unit.
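The diagram can be regenerated from the stated assumptions. In the Python sketch below, instructions issue in order, IF and ID hold one instruction each, E1 and E2 are not pipelined, and with no input buffering a blocked instruction waits in ID. The MEM-conflict rule (hold the instruction in its unit until MEM is free) is our own assumption; it never triggers in this example. The function name is illustrative.

```python
UNIT_DELAY = {"E1": 2, "E2": 4}


def schedule(units):
    """units: execution unit used by each instruction, in program order.
    Returns (IF, ID, EX, MEM, WB) entry cycles for each instruction."""
    free_at = {u: 1 for u in UNIT_DELAY}  # first cycle each unit is free
    mem_busy = set()                     # cycles in which MEM is occupied
    out = []
    for i, u in enumerate(units):
        if_ = 1 if i == 0 else out[-1][1]           # enter IF when predecessor enters ID
        id_ = max(if_ + 1, out[-1][2]) if i else 2  # enter ID when predecessor leaves it
        ex = max(id_ + 1, free_at[u])               # wait in ID until the unit is free
        mem = ex + UNIT_DELAY[u]
        while mem in mem_busy:                      # assumed rule: hold in the unit
            mem += 1
        mem_busy.add(mem)
        free_at[u] = mem
        out.append((if_, id_, ex, mem, mem + 1))
    return out


sched = schedule(["E1", "E2", "E1", "E2", "E1"])
for n, (if_, id_, ex, mem, wb) in enumerate(sched, start=1):
    print(f"instr {n}: IF {if_}, ID {id_}, EX {ex}, MEM {mem}, WB {wb}")
order = sorted(range(1, len(sched) + 1), key=lambda n: sched[n - 1][4])
print("completion order:", order)  # [1, 3, 2, 5, 4]
```

Instruction 4 enters ID in cycle 5 but cannot start E2 until cycle 8, and instruction 5 therefore sits in IF from cycle 5 to cycle 7, matching the diagram’s 13 cycles.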


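Floating-Point Operations in DLX

[Figure: after IF and ID, an instruction flows through one of four execution paths before rejoining at MEM and WB: the integer unit EX, the FP adder stages A1 to A4, the FP multiplier stages M1 to M7, or the divider DIV (24).]

Annotations on the figure:
• Structural hazard: not fully pipelined
• Structural hazard: instructions have varying running times
• WAW hazards possible; WAR hazards not possible
• Longer operation latency implies more frequent stalls for RAW hazards
• Out-of-order completion; has ramifications for exceptions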