
Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing, pages 313–325, Hong Kong, China, November 3–7, 2019. ©2019 Association for Computational Linguistics

Cross-lingual Structure Transfer for Relation and Event Extraction

Ananya Subburathinam1*, Di Lu1*, Heng Ji2, Jonathan May3, Shih-Fu Chang4, Avirup Sil5, Clare Voss6

1Computer Science Department, Rensselaer Polytechnic Institute

2Computer Science Department, University of Illinois at Urbana-Champaign

3University of Southern California Information Sciences Institute

4Electrical Engineering Department, Columbia University

5IBM T.J. Watson Research Center

6Army Research [email protected]

Abstract

The identification of complex semantic structures such as events and entity relations, already a challenging Information Extraction task, is doubly difficult from sources written in under-resourced and under-annotated languages. We investigate the suitability of cross-lingual structure transfer techniques for these tasks. We exploit relation- and event-relevant language-universal features, leveraging both symbolic (including part-of-speech and dependency path) and distributional (including type representation and contextualized representation) information. By representing all entity mentions, event triggers, and contexts into this complex and structured multilingual common space, using graph convolutional networks, we can train a relation or event extractor from source language annotations and apply it to the target language. Extensive experiments on cross-lingual relation and event transfer among English, Chinese, and Arabic demonstrate that our approach achieves performance comparable to state-of-the-art supervised models trained on up to 3,000 manually annotated mentions: up to 62.6% F-score for Relation Extraction, and 63.1% F-score for Event Argument Role Labeling. The event argument role labeling model transferred from English to Chinese achieves similar performance as the model trained from Chinese. We thus find that language-universal symbolic and distributional representations are complementary for cross-lingual structure transfer.

1 Introduction

Advanced Information Extraction (IE) tasks entail predicting structures, such as relations between entities, and events involving entities. Given a pair of entity mentions, Relation Extraction aims to identify the relation between the mentions and classify it by predefined type. Event Extraction aims to identify event triggers and their arguments in unstructured texts and classify them respectively by predefined types and roles. As Figure 1 illustrates, both tasks entail predicting an information network (Li et al., 2014) for each sentence, where the entity mentions and event triggers are nodes, and the relations and event-argument links are edges labeled with their relation and argument roles, respectively.

Figure 1: Information Network example constructed by Event (blue) and Relation (green dashed) Extraction.

There are certain relations and events that are of primary interest to a given community and so are reported predominantly in the low-resource language data sources available to that community. For example, though English language news will occasionally discuss the Person Aung San Suu Kyi, the vast majority of Physical-Located relations and Meeting events involving her are only reported locally in Burmese news, and thus, without Burmese relation and event extraction, a knowledge graph of this person will lack this available information. Unfortunately, publicly-available gold-standard annotations for relation and event extraction exist for only a few languages (Doddington et al., 2004; Getman et al., 2018), and Burmese is not among them. Compared to other IE tasks such as name tagging, the annotations for Relation and Event Extraction are also more costly to obtain, because they are structured and require a rich label space.

Recent research (Lin et al., 2017) has found that relational facts are typically expressed by identifiable patterns within languages and has shown

314

Tree Representations

Graph Convolutional Networks Encoder

Max-pooling

Softmax

Common Space Structural Representations

ff f f

hm1 hsent hm2ht

TRANSPORT (event)

Origin-ArgArtifact-Arg PHYS-LOCATED(relation)

Concatenation

DET

AUX

ADP

DET

VERB

nsubj:pass

det

obl

aux:pass

amodcase

det

VERB

NOUN(PER)

NOUN(FAC)

Команды врачей были замечены в упакованныхотделениях скорой помощи

(teams of doctors were seen in packed emergency rooms)

NOUN

AUX

ADP

NOUN

VERB

nsubj:pass

nmod

obl

aux:pass

amodcase

nmod

VERB

NOUN(PER)

NOUN(FAC)

amod multilingual wordembeddingpart-of-speechembeddingentity-typeembedding

ADJ

ff f f

hm1 hsent hm2ht

PHYS-LOCATED(relation)

The detainees were taken to aprocessing center

dependencyembedding

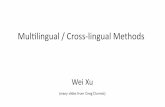

Figure 2: Multilingual common semantic space and cross-lingual structure transfer.

that the consistency in patterns observed across languages can be used to improve relation extraction. Inspired by their results, we exploit language-universal features relevant to relation and event argument identification and classification, by way of both symbolic and distributional representations. For example, language-universal POS tagging and universal dependency parsing are available for 76 languages (Nivre et al., 2018), entity extraction is available for 282 languages (Pan et al., 2017), and multi-lingual word embeddings are available for 44 languages (Bojanowski et al., 2017; Joulin et al., 2018). As shown in Figure 2, even for distinct pairs of entity mentions (colored pink and blue, in both English and Russian), the structures share similar language-universal symbolic features, such as a common labeled dependency path, as well as distributional features, such as multilingual word embeddings.

Based on these language-universal representations, we then project all entity mentions, event triggers and their contexts into one multilingual common space. Unlike recent work on multilingual common space construction that makes use of linear mappings (Mikolov et al., 2013; Rothe et al., 2016; Zhang et al., 2016; Lazaridou et al., 2015; Xing et al., 2015; Smith et al., 2017) or canonical correlation analysis (CCA) (Ammar et al., 2016; Faruqui and Dyer, 2014; Lu et al., 2015) to transfer surface features across languages, our major innovation is to convert the text data into structured representations derived from universal dependency parses and enhanced with distributional information to capture individual entities as well as the relations and events involving those entities, so we can share structural representations across multiple languages.

Then we construct a novel cross-lingual structure transfer learning framework to project source language (SL) training data and target language (TL) test data into the common semantic space, so that we can train a relation or event extractor from SL annotations and apply the resulting extractor to TL texts. We adopt graph convolutional networks (GCN) (Kipf and Welling, 2017; Marcheggiani and Titov, 2017) to encode graph structures over the input data, applying graph convolution operations to generate entity and word representations in a latent space. In contrast to other encoders such as Tree-LSTM (Tai et al., 2015), GCN can cover more complete contextual information from dependency parses because, for each word, it captures all parse tree neighbors of the word, rather than just the child nodes of the word. Using this shared encoder, we treat the two tasks of relation extraction and event argument role labeling as mappings from the latent space to relation type and to event type and argument role, respectively.

Extensive experiments on cross-lingual relation and event transfer among English, Chinese, and Arabic show that our approach achieves highly promising performance on both tasks.

2 Model

2.1 Overview

Our cross-lingual structure transfer approach (see Figure 2) consists of four steps: (1) Convert each sentence in any language into a language-universal tree structure based on universal dependency parsing. (2) For each node in the tree structure, create a representation from the concatenation of multilingual word embedding, language-universal POS embedding, dependency role embedding and entity-type embedding, so that all sentences, independent of their language, are represented within one shared semantic space. (3) Adopt GCN to generate contextualized word representations by leveraging information from neighbors derived from the dependency parsing tree. (4) Using this shared semantic space, train relation and event argument extractors with high-resource language training data, and apply the resulting extractors to texts of low-resource languages that do not have any relation or event argument annotations.

2.2 Tree Representations

Most previous approaches regard a sentence as a linear sequence of words, and incorporate language-specific information such as word order. Unlike sequence representations, tree representations such as constituency trees and dependency trees are typically constructed following a combination of syntactic principles and annotation guidelines designed by linguists. The resulting structures, such as the verb – subject relation and the verb – object relation, are found across languages. In this paper, we choose dependency trees as the sentence representations because the community has made great efforts at developing language-universal dependency parsing resources across 83 languages (Nivre et al., 2016).

We define the dependency-based tree representation for a sentence as $G = (V, E)$, where $V = \{v_1, v_2, ..., v_N\}$ is a set of words, and $E = \{e_1, e_2, ..., e_M\}$ is a set of language-universal syntactic relations. $N$ is the number of words in the sentence and $M$ is the number of dependency relations between words. To make this tree representation language-universal, we first convert each tree node into a vector which is a concatenation of four language-universal representations at word-level: multilingual word embedding, POS embedding (Nivre et al., 2016), entity-type embedding, and dependency relation embedding. More details are reported in Section 3.2.
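The node representation described above can be sketched as a simple concatenation. This is a minimal illustration, not the authors' implementation: the lookup tables, the toy dimensions (8 and 3), the random vectors, and the `O` label for tokens outside any entity mention are all assumptions made for the example.

```python
import numpy as np

# Hypothetical toy lookup tables; the dimensions and random values are
# illustrative assumptions, not the settings used in the paper.
rng = np.random.default_rng(0)

def make_table(symbols, dim):
    """Map each symbol to a random vector of length `dim`."""
    return {s: rng.standard_normal(dim) for s in symbols}

word_emb = make_table(["the", "detainees", "were", "taken"], 8)
pos_emb = make_table(["DET", "NOUN", "AUX", "VERB"], 3)
dep_emb = make_table(["det", "nsubj:pass", "aux:pass", "root"], 3)
ent_emb = make_table(["PER", "O"], 3)  # "O" (no entity) is an assumed label

def node_vector(word, pos, dep, ent):
    """Concatenate the four language-universal embeddings for one tree node."""
    return np.concatenate([word_emb[word], pos_emb[pos], dep_emb[dep], ent_emb[ent]])

# Each token: (word, universal POS tag, dependency relation to head, entity type).
sentence = [
    ("the", "DET", "det", "O"),
    ("detainees", "NOUN", "nsubj:pass", "PER"),
    ("were", "AUX", "aux:pass", "O"),
    ("taken", "VERB", "root", "O"),
]

# One row per node; every language is mapped into the same feature layout.
H0 = np.stack([node_vector(*tok) for tok in sentence])
print(H0.shape)
```

Because only the word-embedding table is language-dependent (and it is assumed to be multilingually aligned), the same function would apply unchanged to a sentence in another language.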

2.3 GCN Encoder

Structural information is important for relation extraction and event argument role labeling, thus we aim to generate contextualized word representations by leveraging neighbors in dependency trees for each node.

Our GCN encoder is based on the monolingual design by Zhang et al. (2018b). The graphical sentence representation obtained from dependency parsing of a sentence with $N$ tokens is converted into an $N \times N$ adjacency matrix $A$, with added self-connections at each node to help capture information about the current node itself, as in Kipf and Welling (2017). Here, $A_{ij} = 1$ denotes the presence of a directed edge from node $i$ to node $j$ in the dependency tree. Initially, each node contains distributional information about the $i$th word, including word embedding $x_i^w$, embeddings for symbolic information including its POS tag $x_i^p$, dependency relation $x_i^d$ and entity type $x_i^e$. We represent this initial representation $h_i^{(0)}$ as follows:

$$h_i^{(0)} = x_i^w \oplus x_i^p \oplus x_i^d \oplus x_i^e$$

Then, at the $k$th layer of convolution, the hidden representation is derived from the representations of its neighbors at the $(k-1)$th layer. Thus, the hidden representation at the $k$th layer for the $i$th node is computed as:

$$h_i^{(k)} = \mathrm{ReLU}\left(\sum_{j=0}^{N} \frac{A_{ij} W^{(k)} h_j^{(k-1)}}{d_i} + b^{(k)}\right)$$

where $W^{(k)}$ is the weight matrix, $b^{(k)}$ is the bias term, and $d_i$ represents the degree of the $i$th node. The denominator in the equation denotes the normalization factor to neutralize the negative impact of node degree (Zhang et al., 2018b). The final hidden representation of each node after the $k$th layer is the encoding of each word $h_i^{(k)}$ in our language-universal common space, and incorporates information about neighbors up to $k$ hops away in the dependency tree.
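To make the layer update concrete, the following NumPy sketch builds an adjacency matrix from dependency heads and applies two degree-normalized graph convolutions. It is only an illustration under stated assumptions: the function names, the head-index encoding, the random weights, and the choice to add edges in both directions (so that each node sees all of its tree neighbors) are not taken from the paper.

```python
import numpy as np

def build_adjacency(heads):
    """Build an N x N adjacency matrix from dependency heads.

    heads[i] is the index of token i's head, or -1 for the root.
    Edges are added in both directions (an assumption of this sketch),
    and self-connections are placed on the diagonal.
    """
    n = len(heads)
    A = np.eye(n)
    for child, head in enumerate(heads):
        if head >= 0:
            A[head, child] = 1.0
            A[child, head] = 1.0
    return A

def gcn_layer(A, H, W, b):
    """One graph convolution: ReLU(sum_j A_ij W h_j / d_i + b)."""
    d = A.sum(axis=1, keepdims=True)  # node degree, including the self-loop
    return np.maximum((A @ H @ W) / d + b, 0.0)

# Toy parse of "the detainees were taken": the -> detainees -> taken <- were.
heads = [1, 3, 3, -1]
A = build_adjacency(heads)

rng = np.random.default_rng(0)
H0 = rng.standard_normal((4, 6))  # stand-in for the initial node vectors
W1, b1 = rng.standard_normal((6, 5)), np.zeros(5)
W2, b2 = rng.standard_normal((5, 5)), np.zeros(5)

H1 = gcn_layer(A, H0, W1, b1)  # each node sees its 1-hop neighbors
H2 = gcn_layer(A, H1, W2, b2)  # after two layers, up to 2 hops away
print(H2.shape)
```

Dividing by the degree keeps the scale of a node's representation from growing with the number of its neighbors, which is the normalization role the text attributes to $d_i$.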

2.4 Application on Relation Extraction

The GCN encoder generates the final hidden representation, $h_i^{(k)}$, for each of the $N$ nodes. We perform max-pooling over these final node representations to obtain a single vector representation for the sentence, $h^s$. Similar to previous work (Zhang et al., 2018b), we also use the following recipe to obtain relation type classification results for each mention pair in a sentence: (1) max-pooling over the final representations of the nodes representing entity mentions, to get a single vector representation for each mention in a pair under consideration, $h^{m_1}$ and $h^{m_2}$, (2) a concatenation of three results of max-pooling, $[h^{m_1}; h^s; h^{m_2}]$, to combine contextual sentence information with entity mention information (Santoro et al., 2017; Lee et al., 2017; Zhang et al., 2018b), (3) a linear layer to generate a combined representation of these concatenated results, and (4) a Softmax output layer for relation type classification. The objective function used here is as follows:

$$\mathcal{L}^r = \sum_{i=1}^{N} \sum_{j=1}^{L_i} y_{ij} \log\left(\sigma\left(U^r \cdot [h_i^{m_1}; h_{ij}^{s}; h_j^{m_2}]\right)\right) \tag{1}$$

where $U^r$ is a weight matrix.
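The four-step recipe above can be sketched as follows. This is a hedged illustration rather than the authors' code: the helper names, the token-index lists for the two mentions, the layer sizes, and the random parameters are assumptions, and the linear layer is kept purely linear because the text does not name an activation.

```python
import numpy as np

def max_pool(H, indices=None):
    """Element-wise max over the selected rows of H (all rows if None)."""
    rows = H if indices is None else H[indices]
    return rows.max(axis=0)

def softmax(z):
    """Numerically stable softmax over a 1-D score vector."""
    e = np.exp(z - z.max())
    return e / e.sum()

def classify_pair(H, mention1, mention2, W_lin, b_lin, U):
    """Return a distribution over relation types for one mention pair.

    H: (N, d) final GCN node representations.
    mention1, mention2: token indices covered by each entity mention.
    """
    h_m1 = max_pool(H, mention1)                  # step (1)
    h_s = max_pool(H)                             # sentence vector
    h_m2 = max_pool(H, mention2)                  # step (1)
    combined = np.concatenate([h_m1, h_s, h_m2])  # step (2)
    hidden = W_lin @ combined + b_lin             # step (3)
    return softmax(U @ hidden)                    # step (4)

rng = np.random.default_rng(0)
H = rng.standard_normal((5, 4))                   # 5 tokens, hidden size 4
W_lin, b_lin = rng.standard_normal((6, 12)), np.zeros(6)
U = rng.standard_normal((3, 6))                   # 3 hypothetical relation types

probs = classify_pair(H, [0, 1], [4], W_lin, b_lin, U)
print(probs)
```

The same function would serve event argument role labeling by passing the trigger and argument-candidate token indices in place of the two mentions.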

2.5 Application on Event Argument Role Labeling

Event argument role labeling distinguishes arguments from non-arguments and classifies arguments by argument role. To label the role of an argument candidate ($x_j^a$) for an event trigger ($x_i^t$), we first generate the sentence representation $h^s$, argument candidate representation $h^a$, and trigger representation $h^t$ by applying pooling on sentence, argument candidate $x_j^a$ and event trigger $x_i^t$ respectively, which is the same as that for relation extraction. The mapping function from the latent space to argument roles is composed of a concatenation operation ($[h^t; h^s; h^a]$), a linear layer ($U^a$) and a Softmax output layer:

$$\mathcal{L}^a = \sum_{i=1}^{N} \sum_{j=1}^{L_i} y_{ij} \log\left(\sigma\left(U^a \cdot [h_i^{t}; h_{ij}^{s}; h_j^{a}]\right)\right) \tag{2}$$

where $N$ is the number of event mentions, $L_i$ is the number of argument candidates for the $i$th event mention and $\sigma$ is the Sigmoid function.

3 Experiments

3.1 Data and Evaluation Metrics

| Language | Relation Mentions | Event Mentions | Event Arguments |
|---|---|---|---|
| English | 8,738 | 5,349 | 9,793 |
| Chinese | 9,317 | 3,333 | 8,032 |
| Arabic | 4,731 | 2,270 | 4,975 |

Table 1: Data statistics.

We choose the Automatic Content Extraction (ACE) 2005 corpus (Walker et al., 2006) for our experiments because it contains the most comprehensive gold-standard relation and event annotations for three distinct languages: English, Chinese and Arabic (see Table 1). Our target ontology includes the 7 entity types, 18 relation subtypes and 33 event subtypes defined in ACE. We randomly choose 80% of the corpus for training, 10% for development and 10% for blind test. We down-sample the negative training instances by limiting the number of negative samples to be no more than the number of positive samples for each document.

For data preprocessing, we apply the Stanford CoreNLP toolkit (Manning et al., 2014) for Chinese word segmentation and English tokenization, and the API provided by UDPipe (Straka and Straková, 2017) for Arabic tokenization. We use UDPipe¹ for POS tagging and dependency parsing for all three languages.

We follow the criteria used in previous work (Ji and Grishman, 2008; Li et al., 2013; Li and Ji, 2014) for evaluation:

• A relation mention is considered correct if its relation type is correct, and the head offsets of the two related entity mention arguments are both correct.

• An event argument is correctly labeled if its event type, offsets, and role label match any of the reference argument mentions.
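The two matching criteria above amount to exact matching on small tuples, which can be sketched as below. The tuple layout, the character offsets, and the example labels are hypothetical. Scoring all mentions in a single pooled set is an assumption of this sketch, since the text specifies only the matching criteria and reports F-scores.

```python
def prf1(predicted, gold):
    """Precision, recall and F1 over sets of hashable items (exact match)."""
    predicted, gold = set(predicted), set(gold)
    correct = len(predicted & gold)
    p = correct / len(predicted) if predicted else 0.0
    r = correct / len(gold) if gold else 0.0
    f1 = 2 * p * r / (p + r) if p + r else 0.0
    return p, r, f1

# Relation mention key: (relation type, head offsets of arg 1, head offsets of arg 2).
gold_relations = {
    ("PHYS-LOCATED", (4, 13), (27, 44)),
    ("ORG-AFF", (50, 55), (60, 70)),
}
pred_relations = {
    ("PHYS-LOCATED", (4, 13), (27, 44)),  # type and both head offsets match
    ("PART-WHOLE", (50, 55), (60, 70)),   # wrong type, so counted as an error
}
rel_p, rel_r, rel_f1 = prf1(pred_relations, gold_relations)

# Event argument key: (event type, argument offsets, role label).
gold_args = {("TRANSPORT", (4, 13), "Artifact")}
pred_args = {
    ("TRANSPORT", (4, 13), "Artifact"),   # matches a reference argument
    ("TRANSPORT", (27, 44), "Origin"),    # no matching reference argument
}
arg_p, arg_r, arg_f1 = prf1(pred_args, gold_args)
print(rel_f1, arg_f1)
```

Representing each mention as a tuple makes the criteria explicit: any mismatch in type, offsets, or role changes the key and therefore counts as both a false positive and a false negative.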

3.2 Training Details

We use the multilingual word embeddings released by Joulin et al. (2018), which are based on aligning monolingual embeddings learned by fastText (Bojanowski et al., 2017) from Wikipedia. We adopt the universal POS tag set (17 word categories) and 37 categories of dependency relations defined by the Universal Dependencies program (Nivre et al., 2016), and seven entity types defined in ACE: person, geopolitical entity, organization, facility, location, weapon and vehicle. Table 2 shows the hyperparameters of our model.

| Hyperparameter | Value |
|---|---|
| word embedding size | 300 |
| POS embedding size | 30 |
| entity embedding size | 30 |
| dependency relation embedding size | 30 |
| hidden dimension size | 200 |
| dropout | 0.5 |
| number of layers | 2 |
| pooling function | max pooling |
| mlp layers | 2 |
| learning rate | 0.1 |
| learning rate decay | 0.9 |
| batch size | 50 |
| optimization | SGD |

Table 2: GCN hyperparameters.
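For readers reproducing the setup, the values in Table 2 can be collected in a small configuration object. The class and field names below are hypothetical. The two derived sizes follow from the concatenations described in Section 2, on the assumption that the GCN output width equals the hidden dimension size.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class GCNConfig:
    """Hyperparameters from Table 2; field names are illustrative."""
    word_emb_size: int = 300
    pos_emb_size: int = 30
    entity_emb_size: int = 30
    dep_emb_size: int = 30
    hidden_size: int = 200
    dropout: float = 0.5
    num_layers: int = 2
    pooling: str = "max"
    mlp_layers: int = 2
    learning_rate: float = 0.1
    lr_decay: float = 0.9
    batch_size: int = 50
    optimizer: str = "sgd"

    @property
    def node_input_size(self) -> int:
        """Width of the concatenated node vector fed to the first GCN layer."""
        return (self.word_emb_size + self.pos_emb_size
                + self.entity_emb_size + self.dep_emb_size)

    @property
    def pair_input_size(self) -> int:
        """Width of three concatenated pooled vectors (assumes GCN output = hidden size)."""
        return 3 * self.hidden_size

config = GCNConfig()
print(config.node_input_size, config.pair_input_size)
```

With these values each node vector has 300 + 30 + 30 + 30 = 390 dimensions. How the learning rate decay is scheduled is not stated in the text, so it is recorded here only as a value.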

¹ Universal Dependencies 2.3 models: english-ewt-ud-2.3-181115.udpipe, chinese-gsd-ud-2.3-181115.udpipe, arabic-padt-ud-2.3-181115.udpipe

3.3 Overall Performance

In order to fully analyze the cross-lingual learning capabilities of our framework, we assess its performance by applying models trained with various combinations of training and test data from these three languages, as shown in Tables 3 and 4. We can see that the results are promising. For both tasks, the models trained from English are best, followed by Chinese, and then Arabic. We find that extraction task performance degrades as the accuracy of language-dependent tools (for sentence segmentation, POS tagging, dependency parsing) degrades.

Using English as training data, our cross-lingual transfer approach achieves similar performance on Chinese event argument role labeling (59.0%), compared to the model trained from Chinese annotations (59.3%), which is much higher than the best reported English-to-Chinese transfer result on event argument role labeling (47.7%) (Hsi et al., 2016).

We also show polyglot results for event argument role labeling in Table 4, by combining the training data from multiple languages. We observe that our model benefits from the combination of training data of multiple languages. The polyglot transfer learning does not provide further gains to relation extraction because the model converges quickly on a small amount of training data.

| Train \ Test | English | Chinese | Arabic |
|---|---|---|---|
| English | 68.2 | 42.5 | 58.7 |
| Chinese | 62.6 | 69.4 | 54.0 |
| Arabic | 58.6 | 35.2 | 67.4 |

Table 3: Relation Extraction: overall performance (F-score %) using perfect entity mentions.

| Train \ Test | English | Chinese | Arabic |
|---|---|---|---|
| English | 63.9 | 59.0 | 61.8 |
| Chinese | 51.6 | 59.3 | 60.6 |
| Arabic | 43.1 | 50.1 | 64.0 |
| English + Chinese | – | – | 63.1 |
| English + Arabic | – | 60.1 | – |
| Chinese + Arabic | 51.9 | – | – |

Table 4: Event Argument Role Labeling results (F1 %) with perfect event triggers.

| Feature | Chinese | Arabic |
|---|---|---|
| Multilingual Word Embedding | 40.2 | 53.8 |
| + POS Embedding | 40.1 | 54.9 |
| + Entity Embedding | 40.8 | 56.9 |
| + Dependency Relation Embedding | 42.5 | 58.7 |

Table 5: Relation Extraction: Contribution of adding each language-universal feature consecutively for relation extraction on Arabic and Chinese test sets, using the model trained on English (F1 scores (%)).

3.4 Ablation Study

Tables 5 and 6 show the impact of each feature category. We can see that all categories help except Chinese POS features for Relation Extraction and Arabic POS features for Event Argument Role Labeling.

Many Chinese word segmentation errors occur on tokens involved in names. For example, “总统(NOUN, president) 萨姆(PROPN, Samuel) ·(PUNCT) 努乔马(PROPN, Nujoma)” is mistakenly tagged as “总(NOUN) 统萨姆(PROPN) ·(PUNCT) 努乔(NOUN) 马(NOUN)”.

In Arabic, sometimes one word is a combination of Noun and Verb. For example, the single word “الإسرائيلي” means “Israeli conflict” in English, including both a trigger and an argument, which are not separated by our tokenizer. In contrast, two entity mentions are unlikely to be combined into one word in Arabic, thus Relation Extraction does not suffer from tokenization errors and corresponding POS features.

| Feature | Chinese | Arabic |
|---|---|---|
| Multilingual Word Embedding | 52.4 | 56.6 |
| + POS Embedding | 57.1 | 37.8 |
| + Entity Embedding | 58.3 | 41.4 |
| + Dependency Relation Embedding | 59.0 | 61.8 |

Table 6: Event Extraction: Contributions of adding each language-universal feature consecutively for event argument role labeling on Arabic and Chinese test datasets, using a model trained on English (F1 scores (%)).

3.5 Comparison with Supervised Approach

We also compare the results with supervised monolingual models trained from manual annotations in the same language. Figures 3 and 4 show the learning curves of these supervised models. For event argument role labeling, we can see that without using any annotations for the target language, our approach achieves performance comparable to the supervised models trained from more than 3,000 manually annotated event argument mentions, which is equal to approximately 1,326 Chinese sentences and 1,141 Arabic sentences based on the statistics of ACE data.

Our model performs particularly well on relation types or argument roles that require deep understanding of wide contexts involving complex syntactic and semantic structures, such as PART-WHOLE:Artifact, PART-WHOLE:Geographical, GEN-AFF:Org-Location and ORG-AFF:Employment relations, and the Injure:Victim argument role. Despite only having 14 training instances, our model achieves near 100% F-score on PART-WHOLE:Artifact relations when transferred from English to Chinese. Our model achieves 86% F1 score on PART-WHOLE:Geographical relations when transferred from English to Arabic, and 73% and 79% F1 scores on GEN-AFF:Org-Location relations when transferred from English to Chinese and to Arabic, respectively. In an Injure event, a Person can either be an Agent or a Victim. Surface lexical embedding features are often not sufficient to disambiguate them. Our model is effective at transferring structural information such as dependency relations between words, and obtains 72.97% F1 score on labeling Injure:Victim when transferred from English to Chinese, and 75.43% from English to Arabic.

In addition, our model achieves very high performance on event argument roles for which entity type is a strong indicator. For example, a weapon is much more likely to act as an Instrument rather than a Target in an Attack. Our model achieves 89.9% F1 score on Attack:Instrument and 91.4% F1 score on Personnel:POSITION argument roles when transferred from English to Chinese.

3.6 Using System Extracted Name Mentions

| Target Language | F1 score |
|---|---|
| Chinese | 56.9 |
| Arabic | 60.1 |

Table 7: Event Argument Role Labeling results (F1 %) on Chinese and Arabic using English as training data (with system generated entity mentions).

Table 7 shows the results of event argument role labeling on Chinese and Arabic entity mentions automatically extracted by Stanford CoreNLP instead of manually annotated mentions. The system extracted entity mentions introduce noise and thus decrease the performance of the model, but the overall results are still promising.

Figure 3: Relation Extraction: Comparison between supervised monolingual GCN model and cross-lingual transfer learning. Panels: (a) Target Language: Chinese; (b) Target Language: Arabic.

Figure 4: Event Argument Role Labeling: Comparison between supervised monolingual GCN model and cross-lingual transfer learning. Panels: (a) Target Language: Chinese; (b) Target Language: Arabic.

4 Related Work

A large number of supervised machine learning techniques have been used for English event extraction, including traditional techniques based on symbolic features (Ji and Grishman, 2008; Liao and Grishman, 2011), joint inference models (Li et al., 2014; Yang and Mitchell, 2016), and, more recently, neural networks (Nguyen and Grishman, 2015a; Nguyen et al., 2016; Chen et al., 2015; Nguyen and Grishman, 2018; Liu et al., 2018b; Lu and Nguyen, 2018; Liu et al., 2018a; Zhang et al., 2018a, 2019). English relation extraction in the early days also followed supervised paradigms (Li and Ji, 2014; Zeng et al., 2014; Nguyen and Grishman, 2015b; Miwa and Bansal, 2016; Pawar et al., 2017; Bekoulis et al., 2018; Wang et al., 2018b). Recent efforts have attempted to reduce annotation costs using distant supervision (Mintz et al., 2009; Surdeanu et al., 2012; Min et al., 2013; Angeli et al., 2014; Zeng et al., 2015; Quirk and Poon, 2017; Qin et al., 2018; Wang et al., 2018a) through knowledge bases (KBs), where entities and static relations are plentiful. Distant supervision is less applicable for the task of event extraction because very few dynamic events are included in KBs. These approaches, however, incorporate language-specific characteristics and thus are costly, requiring a substantial amount of annotation to adapt to a new language (Chen and Vincent, 2012; Blessing and Schütze, 2012; Li et al., 2012; Danilova et al., 2014; Agerri et al., 2016; Hsi et al., 2016; Feng et al., 2016).

Despite the recent successes in applying cross-lingual transfer learning to sequence labeling tasks, such as name tagging (e.g., Mayhew et al., 2017; Lin et al., 2018; Huang et al., 2019), only limited work has explored cross-lingual relation and event structure transfer. Most previous efforts working with cross-lingual structure transfer rely on bilingual dictionaries (Hsi et al., 2016), parallel data (Chen and Ji, 2009; Kim et al., 2010; Qian et al., 2014) or machine translation (Faruqui and Kumar, 2015; Zou et al., 2018). Recent methods (Lin et al., 2017; Wang et al., 2018b) aggregate consistent patterns and complementary information across languages to enhance Relation Extraction, but they do so exploiting only distributional representations.

Event extraction shares with Semantic Role Labeling (SRL) the task of assigning to each event argument its event role label, in the process of completing other tasks to extract the full event structure (assigning event types to predicates and more specific roles to arguments). Cross-lingual transfer has been very successful for SRL. Early attempts relied on word alignment (Van der Plas et al., 2011) or bilingual dictionaries (Kozhevnikov and Titov, 2013). Recent work incorporates universal dependencies (Prazák and Konopík, 2017) or multi-lingual word embeddings for Polyglot SRL (Mulcaire et al., 2018). Liu et al. (2019) and Mulcaire et al. (2019) exploit multi-lingual contextualized word embedding for SRL and other Polyglot NLP tasks including dependency parsing and name tagging. To the best of our knowledge, our work is the first to construct a cross-lingual structure transfer framework that combines language-universal symbolic representations and distributional representations for relation and event extraction over texts written in a language without any training data.

GCN has been successfully applied to several individual monolingual NLP tasks, including relation extraction (Zhang et al., 2018b), event detection (Nguyen and Grishman, 2018), SRL (Marcheggiani and Titov, 2017) and sentence classification (Yao et al., 2019). We apply GCN to construct multi-lingual structural representations for cross-lingual transfer learning.

5 Conclusions and Future Work

We show how cross-lingual relation and event argument structural representations may be transferred between languages without any training data for the target language, and conclude that language-universal symbolic and distributional representations are complementary for cross-lingual structure transfer. In the future we will explore more language-universal representations such as visual features from topically-related images and videos and external background knowledge.

Acknowledgement

This research is based upon work supported in part by U.S. DARPA LORELEI Program HR0011-15-C-0115, the Office of the Director of National Intelligence (ODNI), Intelligence Advanced Research Projects Activity (IARPA), via contract FA8650-17-C-9116, and ARL NS-CTA No. W911NF-09-2-0053. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies, either expressed or implied, of DARPA, ODNI, IARPA, or the U.S. Government. The U.S. Government is authorized to reproduce and distribute reprints for governmental purposes notwithstanding any copyright annotation therein.

References

Rodrigo Agerri, Itziar Aldabe, Egoitz Laparra, German Rigau Claramunt, Antske Fokkens, Paul Huijgen, Rubén Izquierdo Beviá, Marieke van Erp, Piek Vossen, Anne-Lyse Minard, et al. 2016. Multilingual event detection using the newsreader pipelines. In Proceedings of International Conference on Language Resources and Evaluation 2016.

Waleed Ammar, George Mulcaire, Yulia Tsvetkov, Guillaume Lample, Chris Dyer, and Noah A Smith. 2016. Massively multilingual word embeddings. arXiv preprint arXiv:1602.01925.

Gabor Angeli, Julie Tibshirani, Jean Wu, and Christopher D Manning. 2014. Combining distant and partial supervision for relation extraction. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing.

Giannis Bekoulis, Johannes Deleu, Thomas Demeester, and Chris Develder. 2018. Adversarial training for multi-context joint entity and relation extraction. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, pages 2830–2836, Brussels, Belgium. Association for Computational Linguistics.

Andre Blessing and Hinrich Schütze. 2012. Crosslingual distant supervision for extracting relations of different complexity. In Proceedings of the 21st ACM international conference on Information and knowledge management.

Piotr Bojanowski, Edouard Grave, Armand Joulin, and Tomas Mikolov. 2017. Enriching word vectors with subword information. Transactions of the Association for Computational Linguistics.

Chen Chen and Ng Vincent. 2012. Joint modelingfor Chinese event extraction with rich linguistic fea-tures. In Proceedings of the 24th International Con-ference on Computational Linguistics.

Yubo Chen, Liheng Xu, Kang Liu, Daojian Zeng, andJun Zhao. 2015. Event extraction via dynamicmulti-pooling convolutional neural networks. In Proceed-ings of the 53rd Annual Meeting of the Associationfor Computational Linguistics and the 7th Interna-tional Joint Conference on Natural Language Pro-cessing (Volume 1: Long Papers).

Zheng Chen and Heng Ji. 2009. Can one languagebootstrap the other: a case study on event extraction.In Proceedings of the NAACL HLT 2009 Workshopon Semi-Supervised Learning for Natural LanguageProcessing.

Vera Danilova, Mikhail Alexandrov, and XavierBlanco. 2014. A survey of multilingual event extrac-tion from text. In Proceedings of the InternationalConference on Applications of Natural Language toData Bases/Information Systems.

George R Doddington, Alexis Mitchell, Mark A Przy-bocki, Lance A Ramshaw, Stephanie M Strassel, andRalph M Weischedel. 2004. The automatic contentextraction (ACE) program-tasks, data, and evalua-tion. In Proceedings of the Fourth InternationalConference on Language Resources and Evaluation.

Manaal Faruqui and Chris Dyer. 2014. Improving vec-tor space word representations using multilingualcorrelation. In Proceedings of the 14th Conferenceof the European Chapter of the Association for Com-putational Linguistics.

Manaal Faruqui and Shankar Kumar. 2015. Multilin-gual open relation extraction using cross-lingual pro-jection. In Proceedings of the 2015 Conference ofthe North American Chapter of the Association forComputational Linguistics: Human Language Tech-nologies.

Xiaocheng Feng, Lifu Huang, Duyu Tang, Heng Ji,Bing Qin, and Ting Liu. 2016. A language-independent neural network for event detection. InProceedings of the 54th Annual Meeting of the As-sociation for Computational Linguistics (Volume 2:Short Papers).

Jeremy Getman, Joe Ellis, Stephanie Strassel, ZhiyiSong, and Jennifer Tracey. 2018. Laying the ground-work for knowledge base population: Nine years oflinguistic resources for TACKBP. In Proceedings ofthe Eleventh International Conference on LanguageResources and Evaluation (LREC-2018).

Andrew Hsi, Yiming Yang, Jaime Carbonell, andRuochen Xu. 2016. Leveraging multilingual train-ing for limited resource event extraction. In Pro-ceedings of COLING 2016, the 26th InternationalConference on Computational Linguistics: Techni-cal Papers.

Lifu Huang, Heng Ji, and Jonathan May. 2019. Cross-lingual multi-level adversarial transfer to enhance low-resource name tagging. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers).

Heng Ji and Ralph Grishman. 2008. Refining event extraction through cross-document inference. In Proceedings of the 46th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies.

Armand Joulin, Piotr Bojanowski, Tomas Mikolov, Hervé Jégou, and Edouard Grave. 2018. Loss in translation: Learning bilingual word mapping with a retrieval criterion. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing.

Seokhwan Kim, Minwoo Jeong, Jonghoon Lee, and Gary Geunbae Lee. 2010. A cross-lingual annotation projection approach for relation detection. In Proceedings of the 23rd International Conference on Computational Linguistics.

Thomas N. Kipf and Max Welling. 2017. Semi-supervised classification with graph convolutional networks. In International Conference on Learning Representations.

Mikhail Kozhevnikov and Ivan Titov. 2013. Cross-lingual transfer of semantic role labeling models. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics.

Angeliki Lazaridou, Georgiana Dinu, and Marco Baroni. 2015. Hubness and pollution: Delving into cross-space mapping for zero-shot learning. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers).

Kenton Lee, Luheng He, Mike Lewis, and Luke Zettlemoyer. 2017. End-to-end neural coreference resolution. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing.

Peifeng Li, Guodong Zhou, Qiaoming Zhu, and Libin Hou. 2012. Employing compositional semantics and discourse consistency in Chinese event extraction. In Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning.

Qi Li and Heng Ji. 2014. Incremental joint extraction of entity mentions and relations. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers).

Qi Li, Heng Ji, and Liang Huang. 2013. Joint event extraction via structured prediction with global features. In Proceedings of the 51st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers).

Qi Li, Heng Ji, Hong Yu, and Sujian Li. 2014. Constructing information networks using one single model. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing.

Shasha Liao and Ralph Grishman. 2011. Acquiring topic features to improve event extraction: in pre-selected and balanced collections. In Proceedings of the International Conference Recent Advances in Natural Language Processing 2011.

Yankai Lin, Zhiyuan Liu, and Maosong Sun. 2017. Neural relation extraction with multi-lingual attention. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers).

Ying Lin, Shengqi Yang, Veselin Stoyanov, and Heng Ji. 2018. A multi-lingual multi-task architecture for low-resource sequence labeling. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics.

Jian Liu, Yubo Chen, Kang Liu, and Jun Zhao. 2018a. Event detection via gated multilingual attention mechanism. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence.

Nelson F. Liu, Matt Gardner, Yonatan Belinkov, Matthew E. Peters, and Noah A. Smith. 2019. Linguistic knowledge and transferability of contextual representations. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics.

Xiao Liu, Zhunchen Luo, and Heyan Huang. 2018b. Jointly multiple events extraction via attention-based graph information aggregation. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing.

Ang Lu, Weiran Wang, Mohit Bansal, Kevin Gimpel, and Karen Livescu. 2015. Deep multilingual correlation for improved word embeddings. In Proceedings of the 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies.

Weiyi Lu and Thien Huu Nguyen. 2018. Similar but not the same: Word sense disambiguation improves event detection via neural representation matching. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing.

Christopher Manning, Mihai Surdeanu, John Bauer, Jenny Finkel, Steven Bethard, and David McClosky. 2014. The Stanford CoreNLP natural language processing toolkit. In Proceedings of 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations.

Diego Marcheggiani and Ivan Titov. 2017. Encoding sentences with graph convolutional networks for semantic role labeling. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing.

Stephen Mayhew, Chen-Tse Tsai, and Dan Roth. 2017. Cheap translation for cross-lingual named entity recognition. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing.

Tomas Mikolov, Quoc V Le, and Ilya Sutskever. 2013. Exploiting similarities among languages for machine translation. arXiv preprint arXiv:1309.4168.

Bonan Min, Ralph Grishman, Li Wan, Chang Wang, and David Gondek. 2013. Distant supervision for relation extraction with an incomplete knowledge base. In Proceedings of the 2013 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies.

Mike Mintz, Steven Bills, Rion Snow, and Dan Jurafsky. 2009. Distant supervision for relation extraction without labeled data. In Proceedings of the Joint Conference of the 47th Annual Meeting of the ACL and the 4th International Joint Conference on Natural Language Processing of the AFNLP.

Makoto Miwa and Mohit Bansal. 2016. End-to-end relation extraction using LSTMs on sequences and tree structures. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers).

Phoebe Mulcaire, Jungo Kasai, and Noah A. Smith. 2019. Polyglot contextual representations improve crosslingual transfer. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers).

Phoebe Mulcaire, Swabha Swayamdipta, and Noah A. Smith. 2018. Polyglot semantic role labeling. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers).

Thien Huu Nguyen, Kyunghyun Cho, and Ralph Grishman. 2016. Joint event extraction via recurrent neural networks. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies.

Thien Huu Nguyen and Ralph Grishman. 2015a. Event detection and domain adaptation with convolutional neural networks. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 2: Short Papers).

Thien Huu Nguyen and Ralph Grishman. 2015b. Relation extraction: Perspective from convolutional neural networks. In Proceedings of North American Chapter of the Association for Computational Linguistics: Human Language Technologies.

Thien Huu Nguyen and Ralph Grishman. 2018. Graph convolutional networks with argument-aware pooling for event detection. In Thirty-Second AAAI Conference on Artificial Intelligence.

Joakim Nivre, Mitchell Abrams, Željko Agić, Lars Ahrenberg, Lene Antonsen, Katya Aplonova, Maria Jesus Aranzabe, Gashaw Arutie, Masayuki Asahara, Luma Ateyah, Mohammed Attia, Aitziber Atutxa, Liesbeth Augustinus, Elena Badmaeva, Miguel Ballesteros, Esha Banerjee, Sebastian Bank, Verginica Barbu Mititelu, Victoria Basmov, John Bauer, Sandra Bellato, Kepa Bengoetxea, Yevgeni Berzak, Irshad Ahmad Bhat, Riyaz Ahmad Bhat, Erica Biagetti, Eckhard Bick, Rogier Blokland, Victoria Bobicev, Carl Börstell, Cristina Bosco, Gosse Bouma, Sam Bowman, Adriane Boyd, Aljoscha Burchardt, Marie Candito, Bernard Caron, Gauthier Caron, Gülşen Cebiroğlu Eryiğit, Flavio Massimiliano Cecchini, Giuseppe G. A. Celano, Slavomír Čéplö, Savas Cetin, Fabricio Chalub, Jinho Choi, Yongseok Cho, Jayeol Chun, Silvie Cinková, Aurélie Collomb, Çağrı Çöltekin, Miriam Connor, Marine Courtin, Elizabeth Davidson, Marie-Catherine de Marneffe, Valeria de Paiva, Arantza Diaz de Ilarraza, Carly Dickerson, Peter Dirix, Kaja Dobrovoljc, Timothy Dozat, Kira Droganova, Puneet Dwivedi, Marhaba Eli, Ali Elkahky, Binyam Ephrem, Tomaž Erjavec, Aline Etienne, Richárd Farkas, Hector Fernandez Alcalde, Jennifer Foster, Cláudia Freitas, Katarína Gajdošová, Daniel Galbraith, Marcos Garcia, Moa Gärdenfors, Sebastian Garza, Kim Gerdes, Filip Ginter, Iakes Goenaga, Koldo Gojenola, Memduh Gökırmak, Yoav Goldberg, Xavier Gómez Guinovart, Berta Gonzáles Saavedra, Matias Grioni, Normunds Grūzītis, Bruno Guillaume, Céline Guillot-Barbance, Nizar Habash, Jan Hajič, Jan Hajič jr., Linh Hà Mỹ, Na-Rae Han, Kim Harris, Dag Haug, Barbora Hladká, Jaroslava Hlaváčová, Florinel Hociung, Petter Hohle, Jena Hwang, Radu Ion, Elena Irimia, Ọlájídé Ishola, Tomáš Jelínek, Anders Johannsen, Fredrik Jørgensen, Hüner Kaşıkara, Sylvain Kahane, Hiroshi Kanayama, Jenna Kanerva, Boris Katz, Tolga Kayadelen, Jessica Kenney, Václava Kettnerová, Jesse Kirchner, Kamil Kopacewicz, Natalia Kotsyba, Simon Krek, Sookyoung Kwak, Veronika Laippala, Lorenzo Lambertino, Lucia Lam, Tatiana Lando, Septina Dian Larasati, Alexei Lavrentiev, John Lee, Phương Lê Hồng, Alessandro Lenci, Saran Lertpradit, Herman Leung, Cheuk Ying Li, Josie Li, Keying Li, KyungTae Lim, Nikola Ljubešić, Olga Loginova, Olga Lyashevskaya, Teresa Lynn, Vivien Macketanz, Aibek Makazhanov, Michael Mandl, Christopher Manning, Ruli Manurung, Cătălina Mărănduc, David Mareček, Katrin Marheinecke, Héctor Martínez Alonso, André Martins, Jan Mašek, Yuji Matsumoto, Ryan McDonald, Gustavo Mendonça, Niko Miekka, Margarita Misirpashayeva, Anna Missilä, Cătălin Mititelu, Yusuke Miyao, Simonetta Montemagni, Amir More, Laura Moreno Romero, Keiko Sophie Mori, Shinsuke Mori, Bjartur Mortensen, Bohdan Moskalevskyi, Kadri Muischnek, Yugo Murawaki, Kaili Müürisep, Pinkey Nainwani, Juan Ignacio Navarro Horñiacek, Anna Nedoluzhko, Gunta Nešpore-Bērzkalne, Lương Nguyễn Thị, Huyền Nguyễn Thị Minh, Vitaly Nikolaev, Rattima Nitisaroj, Hanna Nurmi, Stina Ojala, Adédayọ̀ Olúòkun, Mai Omura, Petya Osenova, Robert Östling, Lilja Øvrelid, Niko Partanen, Elena Pascual, Marco Passarotti, Agnieszka Patejuk, Guilherme Paulino-Passos, Siyao Peng, Cenel-Augusto Perez, Guy Perrier, Slav Petrov, Jussi Piitulainen, Emily Pitler, Barbara Plank, Thierry Poibeau, Martin Popel, Lauma Pretkalniņa, Sophie Prévost, Prokopis Prokopidis, Adam Przepiórkowski, Tiina Puolakainen, Sampo Pyysalo, Andriela Rääbis, Alexandre Rademaker, Loganathan Ramasamy, Taraka Rama, Carlos Ramisch, Vinit Ravishankar, Livy Real, Siva Reddy, Georg Rehm, Michael Rießler, Larissa Rinaldi, Laura Rituma, Luisa Rocha, Mykhailo Romanenko, Rudolf Rosa, Davide Rovati, Valentin Roșca, Olga Rudina, Jack Rueter, Shoval Sadde, Benoît Sagot, Shadi Saleh, Tanja Samardžić, Stephanie Samson, Manuela Sanguinetti, Baiba Saulīte, Yanin Sawanakunanon, Nathan Schneider, Sebastian Schuster, Djamé Seddah, Wolfgang Seeker, Mojgan Seraji, Mo Shen, Atsuko Shimada, Muh Shohibussirri, Dmitry Sichinava, Natalia Silveira, Maria Simi, Radu Simionescu, Katalin Simkó, Mária Šimková, Kiril Simov, Aaron Smith, Isabela Soares-Bastos, Carolyn Spadine, Antonio Stella, Milan Straka, Jana Strnadová, Alane Suhr, Umut Sulubacak, Zsolt Szántó, Dima Taji, Yuta Takahashi, Takaaki Tanaka, Isabelle Tellier, Trond Trosterud, Anna Trukhina, Reut Tsarfaty, Francis Tyers, Sumire Uematsu, Zdeňka Urešová, Larraitz Uria, Hans Uszkoreit, Sowmya Vajjala, Daniel van Niekerk, Gertjan van Noord, Viktor Varga, Eric Villemonte de la Clergerie, Veronika Vincze, Lars Wallin, Jing Xian Wang, Jonathan North Washington, Seyi Williams, Mats Wirén, Tsegay Woldemariam, Tak-sum Wong, Chunxiao Yan, Marat M. Yavrumyan, Zhuoran Yu, Zdeněk Žabokrtský, Amir Zeldes, Daniel Zeman, Manying Zhang, and Hanzhi Zhu. 2018. Universal dependencies 2.3. LINDAT/CLARIN digital library at the Institute of Formal and Applied Linguistics (ÚFAL), Faculty of Mathematics and Physics, Charles University.

Joakim Nivre, Marie-Catherine De Marneffe, Filip Ginter, Yoav Goldberg, Jan Hajic, Christopher D Manning, Ryan T McDonald, Slav Petrov, Sampo Pyysalo, Natalia Silveira, et al. 2016. Universal dependencies v1: A multilingual treebank collection. In Proceedings of the Tenth International Conference on Language Resources and Evaluation.

Xiaoman Pan, Boliang Zhang, Jonathan May, Joel Nothman, Kevin Knight, and Heng Ji. 2017. Cross-lingual name tagging and linking for 282 languages. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers).

Sachin Pawar, Pushpak Bhattacharyya, and Girish K. Palshikar. 2017. End-to-end relation extraction using neural networks and Markov logic networks. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics.

Lonneke Van der Plas, Paola Merlo, and James Henderson. 2011. Scaling up automatic cross-lingual semantic role annotation. In Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies.

Ondrej Prazák and Miloslav Konopík. 2017. Cross-lingual SRL based upon universal dependencies. In Proceedings of the International Conference Recent Advances in Natural Language Processing, RANLP 2017.

Longhua Qian, Haotian Hui, Ya’nan Hu, Guodong Zhou, and Qiaoming Zhu. 2014. Bilingual active learning for relation classification via pseudo parallel corpora. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers).

Pengda Qin, Weiran Xu, and William Yang Wang. 2018. DSGAN: Generative adversarial training for distant supervision relation extraction. In Proceedings of the 56th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers).

Chris Quirk and Hoifung Poon. 2017. Distant supervision for relation extraction beyond the sentence boundary. In Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 1, Long Papers.

Sascha Rothe, Sebastian Ebert, and Hinrich Schütze. 2016. Ultradense word embeddings by orthogonal transformation. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies.

Adam Santoro, David Raposo, David G Barrett, Mateusz Malinowski, Razvan Pascanu, Peter Battaglia, and Timothy Lillicrap. 2017. A simple neural network module for relational reasoning. In Proceedings of Advances in Neural Information Processing Systems.

Samuel L Smith, David HP Turban, Steven Hamblin, and Nils Y Hammerla. 2017. Offline bilingual word vectors, orthogonal transformations and the inverted softmax. arXiv preprint arXiv:1702.03859.

Milan Straka and Jana Straková. 2017. Tokenizing, POS tagging, lemmatizing and parsing UD 2.0 with UDPipe. In Proceedings of the CoNLL 2017 Shared Task: Multilingual Parsing from Raw Text to Universal Dependencies.

Mihai Surdeanu, Julie Tibshirani, Ramesh Nallapati, and Christopher D. Manning. 2012. Multi-instance multi-label learning for relation extraction. In Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning.

Kai Sheng Tai, Richard Socher, and Christopher D. Manning. 2015. Improved semantic representations from tree-structured long short-term memory networks. In Proceedings of the 53rd Annual Meeting of the Association for Computational Linguistics and the 7th International Joint Conference on Natural Language Processing (Volume 1: Long Papers).

Christopher Walker, Stephanie Strassel, Julie Medero, and Kazuaki Maeda. 2006. ACE 2005 multilingual training corpus. Linguistic Data Consortium, Philadelphia, 57.

Guanying Wang, Wen Zhang, Ruoxu Wang, Yalin Zhou, Xi Chen, Wei Zhang, Hai Zhu, and Huajun Chen. 2018a. Label-free distant supervision for relation extraction via knowledge graph embedding. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing.

Xiaozhi Wang, Xu Han, Yankai Lin, Zhiyuan Liu, and Maosong Sun. 2018b. Adversarial multi-lingual neural relation extraction. In Proceedings of the 27th International Conference on Computational Linguistics.

Chao Xing, Dong Wang, Chao Liu, and Yiye Lin. 2015. Normalized word embedding and orthogonal transform for bilingual word translation. In Proceedings of the 2015 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies.

Bishan Yang and Tom M. Mitchell. 2016. Joint extraction of events and entities within a document context. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies.

Liang Yao, Chengsheng Mao, and Yuan Luo. 2019. Graph convolutional networks for text classification. In Proceedings of the Thirty-Third AAAI Conference on Artificial Intelligence.

Daojian Zeng, Kang Liu, Yubo Chen, and Jun Zhao. 2015. Distant supervision for relation extraction via piecewise convolutional neural networks. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing.

Daojian Zeng, Kang Liu, Siwei Lai, Guangyou Zhou, and Jun Zhao. 2014. Relation classification via convolutional deep neural network. In Proceedings of the 25th International Conference on Computational Linguistics.

Jingli Zhang, Wenxuan Zhou, Yu Hong, Jianmin Yao, and Min Zhang. 2018a. Using entity relation to improve event detection via attention mechanism. In Proceedings of NLPCC 2018.

Tongtao Zhang, Heng Ji, and Avirup Sil. 2019. Joint entity and event extraction with generative adversarial imitation learning. Data Intelligence, 1(2).

Yuan Zhang, David Gaddy, Regina Barzilay, and Tommi S Jaakkola. 2016. Ten pairs to tag-multilingual POS tagging via coarse mapping between embeddings. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies.

Yuhao Zhang, Peng Qi, and Christopher D. Manning. 2018b. Graph convolution over pruned dependency trees improves relation extraction. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing.

Bowei Zou, Zengzhuang Xu, Yu Hong, and Guodong Zhou. 2018. Adversarial feature adaptation for cross-lingual relation classification. In Proceedings of the 27th International Conference on Computational Linguistics.