Conditional Random Fields: Advanced Statistical Methods in NLP, Ling 572, February 9, 2012

1

Conditional Random Fields

Advanced Statistical Methods in NLP

Ling 572

February 9, 2012

2

Roadmap

Graphical Models
  Modeling independence
  Models revisited
  Generative & discriminative models

Conditional random fields
  Linear chain models
  Skip chain models

6

Preview

Conditional random fields
  Undirected graphical model
  Due to Lafferty, McCallum, and Pereira, 2001

Discriminative model
  Supports integration of rich feature sets

Allows range of dependency structures
  Linear-chain, skip-chain, general
  Can encode long-distance dependencies

Used in diverse NLP sequence labeling tasks:
  Named entity recognition, coreference resolution, etc.

7

Graphical Models

11

Graphical Models

Graphical model
  Simple, graphical notation for conditional independence
  Probabilistic model where:
    Graph structure denotes conditional independence between random variables

Nodes: random variables
Edges: dependency relation between random variables

Model types:
  Bayesian Networks
  Markov Random Fields

15

Modeling (In)dependence

Bayesian network
  Directed acyclic graph (DAG)
    Nodes = random variables
    Arc ~ directly influences, conditional dependency

  Arcs = child depends on parent(s)
    No arcs = independent (0 incoming: only a priori)
    Parents of X = $\mathrm{Pa}(X)$
    For each X, need $P(X \mid \mathrm{Pa}(X))$

16

Example I

Russell & Norvig, AIMA

23

Simple Bayesian Network (MCBN1)

Nodes: A, B, C, D, E
Arcs: A → B, A → C, B → D, C → D, C → E

A = only a priori
B depends on A
C depends on A
D depends on B, C
E depends on C

Need:        Truth table size:
P(A)         2
P(B|A)       2*2
P(C|A)       2*2
P(D|B,C)     2*2*2
P(E|C)       2*2
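The table sizes above follow directly from the parent sets: a binary node with n parents needs 2^(n+1) entries. A minimal sketch of that count, assuming all five variables are binary as on the slide (the names `parents` and `table_size` are illustrative, not from the lecture):

```python
# Parent sets copied from the slide: A has no parents, D has two.
parents = {"A": [], "B": ["A"], "C": ["A"], "D": ["B", "C"], "E": ["C"]}
VALUES_PER_VARIABLE = 2  # every variable is binary


def table_size(node):
    """Entries in the truth table for P(node | parents(node))."""
    return VALUES_PER_VARIABLE ** (1 + len(parents[node]))


sizes = {node: table_size(node) for node in parents}
factored_total = sum(sizes.values())
full_joint_total = VALUES_PER_VARIABLE ** len(parents)

print(sizes)
print(factored_total, full_joint_total)
```

Under these assumptions the factored model needs 22 table entries in total, against 32 for a single table over all five variables; the gap widens quickly as networks grow.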

24

Holmes Example (Pearl)

Holmes is worried that his house will be burgled. For the time period of interest, there is a 10^-4 a priori chance of this happening, and Holmes has installed a burglar alarm to try to forestall this event. The alarm is 95% reliable in sounding when a burglary happens, but also has a false positive rate of 1%. Holmes’ neighbor, Watson, is 90% sure to call Holmes at his office if the alarm sounds, but he is also a bit of a practical joker and, knowing Holmes’ concern, might (30%) call even if the alarm is silent. Holmes’ other neighbor Mrs. Gibbons is a well-known lush and often befuddled, but Holmes believes that she is four times more likely to call him if there is an alarm than not.

29

Holmes Example: Model

There are four binary random variables:
  B: whether Holmes’ house has been burgled
  A: whether his alarm sounded
  W: whether Watson called
  G: whether Gibbons called

Network: B → A, A → W, A → G

30

Holmes Example: Tables

P(B):
  B=#t     B=#f
  0.0001   0.9999

P(A | B):
  B     A=#t   A=#f
  #t    0.95   0.05
  #f    0.01   0.99

P(W | A):
  A     W=#t   W=#f
  #t    0.90   0.10
  #f    0.30   0.70

P(G | A):
  A     G=#t   G=#f
  #t    0.40   0.60
  #f    0.10   0.90
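With these tables, any query can be answered by summing the joint distribution over the unobserved variables. The sketch below does this by brute-force enumeration for "how likely is a burglary given that Watson called?". It assumes the network structure implied by the tables (A depends on B; W and G depend on A); the function names are illustrative only.

```python
from itertools import product

# Conditional probability tables copied from the slide (True = #t, False = #f).
p_b = {True: 0.0001, False: 0.9999}
p_a_given_b = {True: {True: 0.95, False: 0.05}, False: {True: 0.01, False: 0.99}}
p_w_given_a = {True: {True: 0.90, False: 0.10}, False: {True: 0.30, False: 0.70}}
p_g_given_a = {True: {True: 0.40, False: 0.60}, False: {True: 0.10, False: 0.90}}


def joint(b, a, w, g):
    """P(B=b, A=a, W=w, G=g) under the assumed structure B -> A -> {W, G}."""
    return p_b[b] * p_a_given_b[b][a] * p_w_given_a[a][w] * p_g_given_a[a][g]


def probability(**evidence):
    """Sum the joint over every assignment consistent with the evidence."""
    total = 0.0
    for b, a, w, g in product([True, False], repeat=4):
        assignment = {"b": b, "a": a, "w": w, "g": g}
        if all(assignment[name] == value for name, value in evidence.items()):
            total += joint(b, a, w, g)
    return total


p_watson_calls = probability(w=True)
p_burglary_given_watson = probability(b=True, w=True) / p_watson_calls
print(p_watson_calls, p_burglary_given_watson)
```

Enumeration is exponential in the number of variables, so it only suits toy networks like this one. Here a call from Watson raises the burglary probability from 0.0001 to roughly 0.00028, still tiny because Watson calls so often without an alarm.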

31

Bayes’ Nets: Markov Property

Bayes’ nets:
  Satisfy the local Markov property
    Variables: conditionally independent of non-descendants given their parents

38

Simple Bayesian Network (MCBN1)

Nodes: A, B, C, D, E
Arcs: A → B, A → C, B → D, C → D, C → E

A = only a priori
B depends on A
C depends on A
D depends on B, C
E depends on C

P(A,B,C,D,E) = P(A) P(B|A) P(C|A) P(D|B,C) P(E|C)

There exist algorithms for training and inference on BNs
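One way to see where this factorization comes from: expand the joint with the chain rule in the order A, B, C, D, E, then drop every conditioning variable that the local Markov property makes irrelevant (each node is independent of its non-descendants given its parents). The derivation below is a sketch under that ordering, not a step shown on the slides.

```latex
\begin{align*}
P(A,B,C,D,E)
  &= P(A)\,P(B \mid A)\,P(C \mid A,B)\,P(D \mid A,B,C)\,P(E \mid A,B,C,D) \\
  &= P(A)\,P(B \mid A)\,P(C \mid A)\,P(D \mid B,C)\,P(E \mid C)
\end{align*}
```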

39

Naïve Bayes Model

Bayes’ Net: Conditional independence of features given class

Nodes: class Y; features f1, f2, f3, ..., fk
Arcs: Y → f1, Y → f2, Y → f3, ..., Y → fk
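Read as a Bayes' net, this structure gives the usual Naïve Bayes joint distribution: the class has no parents and each feature has the class as its only parent. Written out (standard form, with the product index i introduced here for illustration):

```latex
P(Y, f_1, \dots, f_k) = P(Y) \prod_{i=1}^{k} P(f_i \mid Y)
```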

47

Hidden Markov Model

Bayesian Network where:
  yt depends on yt-1
  xt depends on yt

States:        y1 y2 y3 ... yk
Observations:  x1 x2 x3 ... xk
Arcs: yt-1 → yt, yt → xt
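Applying the same Bayes' net factorization to this chain gives the familiar HMM joint distribution over states and observations. The sketch below assumes the convention that the first state has no predecessor, so its factor is simply an initial-state probability:

```latex
P(x_1, \dots, x_k, y_1, \dots, y_k)
  = P(y_1)\,P(x_1 \mid y_1) \prod_{t=2}^{k} P(y_t \mid y_{t-1})\,P(x_t \mid y_t)
```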

50

Generative Models

Both Naïve Bayes and HMMs are generative models

  “We use the term generative model to refer to a directed graphical model in which the outputs topologically precede the inputs, that is, no x in X can be a parent of an output y in Y.” (Sutton & McCallum, 2006)

  State y generates an observation (instance) x

Maximum Entropy and linear-chain Conditional Random Fields (CRFs) are, respectively, their discriminative model counterparts

52

Markov Random Fields

aka Markov Network

Graphical representation of probabilistic model
  Undirected graph
    Can represent cyclic dependencies
    (vs. DAG in Bayesian Networks, which can represent induced dependencies)

Also satisfy local Markov property:
  $P(X \mid \text{all other variables}) = P(X \mid ne(X))$, where ne(X) are the neighbors of X

55

Factorizing MRFs

Many MRFs can be analyzed in terms of cliques
  Clique: in an undirected graph G(V,E), a clique is a subset of vertices v in V such that for every pair of vertices vi, vj there exists an edge E(vi,vj)
  Maximal clique: a clique that cannot be extended
  Maximum clique: a largest clique in G

Example due to F. Xia (graph over nodes A, B, C, D, E):
  Clique:
  Maximal clique:
  Maximum clique:
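The three definitions can be checked mechanically on a small graph. The edges of the slide's figure did not survive in this transcript, so the sketch below uses a hypothetical edge set over the same five node names; only the definitions, not the graph, come from the lecture.

```python
from itertools import combinations

# Hypothetical edge set: the slide's figure did not survive extraction.
nodes = ["A", "B", "C", "D", "E"]
edges = {frozenset(pair) for pair in
         [("A", "B"), ("A", "C"), ("B", "C"), ("C", "D"), ("D", "E")]}


def is_clique(vertices):
    """True if every pair of the given vertices is joined by an edge."""
    return all(frozenset(pair) in edges for pair in combinations(vertices, 2))


# Brute force over all non-empty subsets; fine for five nodes only.
cliques = [set(subset)
           for size in range(1, len(nodes) + 1)
           for subset in combinations(nodes, size)
           if is_clique(subset)]

# Maximal: no other clique strictly contains it.
maximal_cliques = [c for c in cliques if not any(c < other for other in cliques)]

# Maximum: a clique of the largest size in the graph.
largest = max(len(c) for c in cliques)
maximum_cliques = [c for c in cliques if len(c) == largest]

print(maximal_cliques)
print(maximum_cliques)
```

For this assumed graph, {C, D} is maximal (no vertex can be added) but not maximum, while {A, B, C} is both.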

58

MRFs

Given an undirected graph G(V,E), random vars: X
Cliques over G: cl(G)

Example due to F. Xia (graph over nodes B, C, D, E)
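The formula for this slide is not preserved in the transcript. The standard clique factorization of an MRF, which these definitions set up, is sketched below; the potential functions \phi_c and the normalizer Z are notation assumed here, not taken from the slides.

```latex
P(X) = \frac{1}{Z} \prod_{c \in cl(G)} \phi_c(X_c),
\qquad
Z = \sum_{X'} \prod_{c \in cl(G)} \phi_c(X'_c)
```

Each \phi_c is a non-negative function of only the variables in clique c, and Z sums over all joint assignments so that the result is a probability distribution.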

60

Conditional Random Fields

Definition due to Lafferty et al., 2001:

  Let G = (V,E) be a graph such that $Y = (Y_v)_{v \in V}$, so that Y is indexed by the vertices of G. Then (X,Y) is a conditional random field in case, when conditioned on X, the random variables $Y_v$ obey the Markov property with respect to the graph: $p(Y_v \mid X, Y_w, w \neq v) = p(Y_v \mid X, Y_w, w \sim v)$, where $w \sim v$ means that w and v are neighbors in G.

A CRF is a Markov Random Field globally conditioned on the observation X.
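The slide's formula is missing from the transcript. A common general way to write a CRF, consistent with "an MRF globally conditioned on X", is sketched here; the symbols \phi_c and Z(x) are assumed notation rather than the lecture's own.

```latex
p(y \mid x) = \frac{1}{Z(x)} \prod_{c \in cl(G)} \phi_c(y_c, x),
\qquad
Z(x) = \sum_{y'} \prod_{c \in cl(G)} \phi_c(y'_c, x)
```

The key difference from a plain MRF is that the normalizer depends on x and sums only over label assignments y', so the model never has to describe the distribution of the observations themselves.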

64

Linear-Chain CRF

CRFs can have arbitrary graphical structure, but...

Most common form is linear chain
  Supports sequence modeling
  Many sequence labeling NLP problems:
    Named Entity Recognition (NER), Coreference

Similar to combining HMM sequence w/ MaxEnt model
  Supports sequence structure like HMM
    but HMMs can’t do rich feature structure
  Supports rich, overlapping features like MaxEnt
    but MaxEnt doesn’t directly support sequence labeling

65

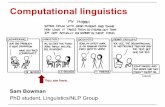

Discriminative & Generative

Model perspectives (Sutton & McCallum)

68

Linear-Chain CRFs

Feature functions:
  In MaxEnt: f: X × Y → {0,1}
    e.g. fj(x,y) = 1 if x = “rifle” and y = talk.politics.guns, 0 o.w.

  In CRFs: f: Y × Y × X × T → R
    e.g. fk(yt, yt-1, x, t) = 1 if yt = V and yt-1 = N and xt = “flies”, 0 o.w.
    frequently indicator function, for efficiency
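The two example features translate almost literally into code. In this sketch the MaxEnt instance x is assumed to be a set of words and the CRF input x a list of tokens indexed by position t; both representations, the function names, and the sample sentence are illustrative choices.

```python
def f_rifle_guns(x, y):
    """MaxEnt-style feature over one instance x (a set of words) and one class y."""
    return 1 if "rifle" in x and y == "talk.politics.guns" else 0


def f_noun_verb_flies(y_t, y_prev, x, t):
    """CRF-style feature over current tag, previous tag, whole input x, position t."""
    return 1 if y_t == "V" and y_prev == "N" and x[t] == "flies" else 0


sentence = ["time", "flies", "like", "an", "arrow"]
print(f_noun_verb_flies("V", "N", sentence, 1))  # position 1 is "flies"
print(f_noun_verb_flies("V", "N", sentence, 2))  # position 2 is "like"
print(f_rifle_guns({"rifle", "ban"}, "talk.politics.guns"))
```

The CRF feature sees the entire input sequence plus a position, which is what lets it look at neighboring words or any other part of x, while still touching only two adjacent labels.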

70

Linear-Chain CRFs
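The equations on this slide are not preserved in the transcript. The usual linear-chain form, written with the feature functions f_k(y_t, y_{t-1}, x, t) defined earlier and one weight \lambda_k per feature, is sketched below as an assumption about what the slide showed:

```latex
p(y \mid x) = \frac{1}{Z(x)}
  \exp\!\Big( \sum_{t=1}^{T} \sum_{k} \lambda_k\, f_k(y_t, y_{t-1}, x, t) \Big),
\qquad
Z(x) = \sum_{y'} \exp\!\Big( \sum_{t=1}^{T} \sum_{k} \lambda_k\, f_k(y'_t, y'_{t-1}, x, t) \Big)
```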

73

Linear-chain CRFs: Training & Decoding

Training:
  Learn λj
  Approach similar to MaxEnt: e.g. L-BFGS

Decoding:
  Compute label sequence that optimizes P(y|x)
  Can use approaches like HMM, e.g. Viterbi
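Because the normalizer does not depend on y, decoding only needs the label sequence with the highest total weighted feature score, which Viterbi finds by dynamic programming. The sketch below assumes features of the form f(y_t, y_prev, x, t), a non-empty input, and a hypothetical start symbol "<s>"; the toy features and weights are made up for illustration.

```python
def viterbi(x, tags, features, weights, start="<s>"):
    """Return the tag sequence maximizing the summed weighted features.

    features: list of functions f(y_t, y_prev, x, t) -> number
    weights:  list of floats, one per feature (the learned lambdas)
    """
    def score(y_t, y_prev, t):
        return sum(w * f(y_t, y_prev, x, t) for w, f in zip(weights, features))

    # best[t][tag] = (best score of any path ending in tag at t, previous tag)
    best = [{tag: (score(tag, start, 0), None) for tag in tags}]
    for t in range(1, len(x)):
        column = {}
        for tag in tags:
            column[tag] = max(
                (best[t - 1][prev][0] + score(tag, prev, t), prev) for prev in tags
            )
        best.append(column)

    # Follow back-pointers from the best final tag.
    last = max(tags, key=lambda tag: best[-1][tag][0])
    path = [last]
    for t in range(len(x) - 1, 0, -1):
        path.append(best[t][path[-1]][1])
    return path[::-1]


# Toy features and weights, invented for this example.
features = [
    lambda y, y_prev, x, t: 1 if y == "V" and y_prev == "N" and x[t] == "flies" else 0,
    lambda y, y_prev, x, t: 1 if y == "N" and x[t] == "time" else 0,
    lambda y, y_prev, x, t: 1 if y == "N" and y_prev == "V" else 0,
]
weights = [2.0, 1.5, 0.5]
print(viterbi(["time", "flies"], ["N", "V"], features, weights))
```

The cost is proportional to sequence length times the square of the tag-set size, the same as HMM Viterbi; only the local score changes.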

74

Skip-chain CRFs

78

Motivation

Long-distance dependencies:
  Linear chain CRFs, HMMs, beam search, etc.
    All make local Markov assumptions
      Preceding label; current data given current label
    Good for some tasks

  However, longer context can be useful
    e.g. NER: Repeated capitalized words should get same tag

83

Skip-Chain CRFs

Basic approach:
  Augment linear-chain CRF model with long-distance ‘skip edges’
    Add evidence from both endpoints

Which edges?
  Identical words, words with same stem?

How many edges?
  Not too many, increases inference cost

87

Skip Chain CRF Model

Two clique templates:
  Standard linear chain template
  Skip edge template
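The slide's equation is not preserved. One common way to write a model with these two templates is a product of linear-chain factors and skip-edge factors under a single normalizer. In this sketch \Psi_t, \Psi_{uv}, and the skip-edge set I are assumed notation:

```latex
p(y \mid x) = \frac{1}{Z(x)}
  \prod_{t=1}^{T} \Psi_t(y_t, y_{t-1}, x)
  \prod_{(u,v) \in I} \Psi_{uv}(y_u, y_v, x)
```

Here I is the set of position pairs joined by a skip edge. Each template shares its parameters across all the positions or pairs it applies to.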

90

Skip Chain NER

Named Entity Recognition:
  Task: start time, end time, speaker, location
    In corpus of seminar announcement emails

All approaches:
  Orthographic, gazetteer, POS features
    Within preceding, following 4 word window

Skip chain CRFs:
  Skip edges between identical capitalized words
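The edge-selection rule on this slide is simple to state in code. The sketch below links every pair of positions holding the same capitalized token; the function name, the all-pairs choice, and the sample sentence are assumptions made for illustration, not details from the lecture.

```python
from collections import defaultdict
from itertools import combinations


def skip_edges(tokens):
    """Return index pairs (u, v), u < v, linking identical capitalized tokens."""
    positions = defaultdict(list)
    for index, token in enumerate(tokens):
        if token[:1].isupper():
            positions[token].append(index)
    return sorted(pair
                  for indexes in positions.values()
                  for pair in combinations(indexes, 2))


# Invented sentence in the style of a seminar announcement.
tokens = "Speaker : John Smith . Professor Smith will speak at 3 pm .".split()
print(skip_edges(tokens))
```

Linking all pairs can add many edges when a word repeats often, which is the inference-cost concern raised earlier; linking only consecutive occurrences would be one cheaper alternative.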

91

NER Features

92

Skip Chain NER Results

Skip chain improves substantially on ‘speaker’ recognition
  Slight reduction in accuracy for times

97

Summary

Conditional random fields (CRFs)
  Undirected graphical model
    Compare with Bayesian Networks, Markov Random Fields

  Linear-chain models
    HMM sequence structure + MaxEnt feature models

  Skip-chain models
    Augment with longer distance dependencies

Pros: Good performance
Cons: Compute intensive

98

HW #5

99

HW #5: Beam Search

Apply Beam Search to MaxEnt sequence decoding

Task: POS tagging

Given files:
  test data: usual format
  boundary file: sentence lengths
  model file

Comparisons:
  Different topN, topK, beam_width

Tag Context

Following Ratnaparkhi ‘96, model uses previous tag (prevT=tag) and previous tag bigram (prevTwoTags=tagi-2+tagi-1)

These are NOT in the data file; you compute them on the fly.

Notes:
  Due to sparseness, it is possible a bigram may not appear in the model file. Skip it.
  These are feature functions: If you have a different candidate tag for the same word, weights will differ.
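The overall shape of beam-search decoding with on-the-fly tag context can be sketched as follows. The slides do not define topN, topK, or beam_width, so this sketch assumes one plausible reading: topN limits the tags tried per word, topK limits the paths kept per position, and beam_width is the largest allowed log-probability gap to the best path. The `tag_probs` callback, the "BOS" padding, and all numbers are hypothetical stand-ins for the real MaxEnt model.

```python
import math


def beam_search(words, tag_probs, top_n, top_k, beam_width):
    """Decode a tag sequence with pruning.

    tag_probs(word, prev_tag, prev_two_tags) -> {tag: probability} is a
    hypothetical stand-in for the MaxEnt model; probabilities must be > 0.
    """
    paths = [(0.0, ["BOS", "BOS"])]  # (log probability, padded tags so far)
    for word in words:
        candidates = []
        for logp, tags in paths:
            # Tag-context features are built here, not read from the data.
            prev_tag = tags[-1]
            prev_two = tags[-2] + "+" + tags[-1]
            probs = tag_probs(word, prev_tag, prev_two)
            # Keep only the top_n tags for this word on this path.
            ranked = sorted(probs.items(), key=lambda kv: -kv[1])[:top_n]
            for tag, p in ranked:
                candidates.append((logp + math.log(p), tags + [tag]))
        candidates.sort(key=lambda c: -c[0])
        best = candidates[0][0]
        # Keep at most top_k paths, each within beam_width of the best one.
        paths = [c for c in candidates[:top_k] if c[0] + beam_width >= best]
    return paths[0][1][2:]  # strip the two padding tags


def toy_tag_probs(word, prev_tag, prev_two_tags):
    # Made-up numbers for illustration only.
    if word == "flies":
        return {"V": 0.7, "N": 0.3} if prev_tag == "N" else {"V": 0.4, "N": 0.6}
    return {"N": 0.8, "V": 0.2}


print(beam_search(["time", "flies"], toy_tag_probs, top_n=2, top_k=3, beam_width=2.0))
```

Unlike Viterbi, this search is not guaranteed to return the best sequence; tightening any of the three pruning parameters trades accuracy for speed, which is what the comparisons in the assignment explore.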

101

Uncertainty

Real world tasks:
  Partially observable, stochastic, extremely complex

Probabilities capture “Ignorance & Laziness”
  Lack relevant facts, conditions
  Failure to enumerate all conditions, exceptions

102

Motivation

Uncertainty in medical diagnosis
  Diseases produce symptoms
  In diagnosis, observed symptoms => disease ID

Uncertainties
  Symptoms may not occur
  Symptoms may not be reported
  Diagnostic tests not perfect
    False positive, false negative

How do we estimate confidence?