COMP 551 -Applied Machine Learning Lecture 9 ---Instance...


COMP 551 - Applied Machine Learning

Lecture 9 --- Instance learning

William L. Hamilton (with slides and content from Joelle Pineau)

* Unless otherwise noted, all material posted for this course is copyright of the instructor, and cannot be reused or reposted without the instructor’s written permission.

William L. Hamilton, McGill University and Mila 1

MiniProject 2

§ Joint leaderboard with UdeM students: http://www-ens.iro.umontreal.ca/~georgeth/kaggle2019/


Variable Ranking

§ Idea: Rank features by a scoring function defined for individual features, independently of the context of others. Choose the m’ highest ranked features.

§ Pros / cons:

§ Need to select a scoring function.

§ Must select subset size (m’): cross-validation

§ Simple and fast – just need to compute a scoring function m times and sort m scores.


Scoring function: Correlation Criteria

(slide by Michel Verleysen)

$$R(j) = \frac{\sum_{i=1}^{n} (x_{ij} - \bar{x}_j)(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_{ij} - \bar{x}_j)^2 \, \sum_{i=1}^{n} (y_i - \bar{y})^2}}$$
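As a minimal sketch of variable ranking with the correlation criterion, the snippet below scores each feature by the Pearson correlation with the target and keeps the m’ best. Ranking by the absolute value |R(j)|, the helper names `pearson_score` and `rank_features`, and the toy data are assumptions made for illustration, not part of the slides.

```python
import math

def pearson_score(feature, target):
    """Pearson correlation R(j) between one feature column and the target."""
    n = len(feature)
    mean_x = sum(feature) / n
    mean_y = sum(target) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(feature, target))
    var_x = sum((x - mean_x) ** 2 for x in feature)
    var_y = sum((y - mean_y) ** 2 for y in target)
    if var_x == 0 or var_y == 0:
        return 0.0  # constant column: treated as uninformative (an assumption)
    return cov / math.sqrt(var_x * var_y)

def rank_features(X, y, m_prime):
    """Return the indices of the m_prime features with the largest |R(j)|."""
    n_features = len(X[0])
    scores = [abs(pearson_score([row[j] for row in X], y))
              for j in range(n_features)]
    order = sorted(range(n_features), key=lambda j: scores[j], reverse=True)
    return order[:m_prime], scores

# Toy data: feature 0 is a noisy copy of y, feature 1 is weakly related,
# feature 2 is exactly -2 * y.
X = [[1.1, 5.0, -2.0], [1.9, 3.0, -4.0], [3.2, 4.0, -6.0], [3.9, 3.5, -8.0]]
y = [1.0, 2.0, 3.0, 4.0]
top, scores = rank_features(X, y, m_prime=2)
```

Each feature is scored once and the scores are sorted once, which is why the method is cheap; m’ itself would still be chosen by cross-validation.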

Scoring function: Mutual information

§ Think of Xj and Y as random variables.

§ Mutual information between variable Xj and target Y:

$$I(j) = \int_{X_j} \int_{Y} p(x_j, y) \log \frac{p(x_j, y)}{p(x_j)\, p(y)} \, dx \, dy$$

§ Empirical estimate from data (assume discretized variables):

$$I(j) = \sum_{X_j} \sum_{Y} P(X_j = x_j, Y = y) \log \frac{P(X_j = x_j, Y = y)}{P(X_j = x_j)\, P(Y = y)}$$

Nonlinear dependencies with MI

§ Mutual information identifies nonlinear relationships between variables.

§ Example:

  § x uniformly distributed over [-1, 1]

  § y = x^2 + noise

  § z uniformly distributed over [-1, 1]

  § z and x are independent
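The example can be simulated roughly as follows. This is a sketch under stated assumptions: the sample size, the noise level (0.05), the 10 equal-width bins used for discretization, and all function names are illustrative choices, not taken from the slides. It compares the Pearson correlation with the empirical mutual information estimate.

```python
import math
import random
from collections import Counter

def discretize(values, bins=10):
    """Map real values to equal-width bin indices 0..bins-1."""
    lo, hi = min(values), max(values)
    width = (hi - lo) / bins or 1.0
    return [min(int((v - lo) / width), bins - 1) for v in values]

def mutual_information(a, b):
    """Empirical I(A;B) in nats for two discrete sequences."""
    n = len(a)
    joint, pa, pb = Counter(zip(a, b)), Counter(a), Counter(b)
    return sum((c / n) * math.log((c / n) / ((pa[u] / n) * (pb[v] / n)))
               for (u, v), c in joint.items())

def pearson(a, b):
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((u - ma) * (v - mb) for u, v in zip(a, b))
    va = sum((u - ma) ** 2 for u in a)
    vb = sum((v - mb) ** 2 for v in b)
    return cov / math.sqrt(va * vb)

rng = random.Random(0)
n = 5000
x = [rng.uniform(-1, 1) for _ in range(n)]
z = [rng.uniform(-1, 1) for _ in range(n)]          # independent of x
y = [xi ** 2 + rng.gauss(0, 0.05) for xi in x]      # nonlinear in x

xb, yb, zb = discretize(x), discretize(y), discretize(z)
corr_xy = pearson(x, y)               # close to 0: no linear relationship
mi_xy = mutual_information(xb, yb)    # clearly positive
mi_zy = mutual_information(zb, yb)    # close to 0 (small estimation bias)
```

The correlation between x and y is near zero even though y is almost a deterministic function of x, while the mutual information separates x (relevant) from z (irrelevant).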

Variable Ranking (pros / cons, continued)

§ Scoring function is defined for individual features (not subsets).

Best-Subset selection

§ Idea: Consider all possible subsets of the features, measure performance on a validation set, and keep the subset with the best performance.

§ Pros / cons?

§ We get the best model!

§ Very expensive to compute, since there is a combinatorial number of subsets.
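A minimal sketch of best-subset selection on a toy problem is given below. Using the validation error of a 1-nearest-neighbor classifier as the performance measure, along with the function names and the data, is an assumption for illustration; any model and validation metric could be plugged in instead.

```python
from itertools import combinations

def nn_error(train, val, subset):
    """Validation error of 1-NN restricted to the features in `subset`."""
    def dist(a, b):
        return sum((a[j] - b[j]) ** 2 for j in subset)
    errors = 0
    for xv, yv in val:
        _, pred = min(((dist(xt, xv), yt) for xt, yt in train),
                      key=lambda t: t[0])
        errors += pred != yv
    return errors / len(val)

def best_subset(train, val, n_features):
    """Try every non-empty subset (2^n - 1 of them) and keep the best one."""
    best = None
    for size in range(1, n_features + 1):
        for subset in combinations(range(n_features), size):
            err = nn_error(train, val, subset)
            if best is None or err < best[0]:
                best = (err, subset)
    return best

# Toy data: feature 0 separates the classes, feature 1 is large-scale noise.
train = [((0.0, 9.0), 0), ((0.2, 1.0), 0), ((1.0, 8.0), 1), ((1.2, 0.0), 1)]
val = [((0.1, 0.6), 0), ((1.1, 8.6), 1), ((0.3, 8.0), 0), ((0.9, 1.0), 1)]
best_err, best_features = best_subset(train, val, n_features=2)
```

With n features the double loop evaluates 2^n - 1 subsets, which is what makes the exhaustive search impractical beyond a handful of features.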


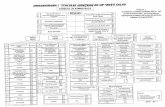

Search space of subsets

n features, 2^n – 1 possible feature subsets!

Kohavi-John, 1997


Subset selection in practice

§ Formulate as a search problem, where the state is the feature set that is used, and search operators involve adding or removing features from the set.

§ Constructive methods like forward/backward search

§ Local search methods, genetic algorithms

§ Use domain knowledge to help you group features together, to reduce size of search space

§ e.g., In NLP, group syntactic features together, semantic features, etc.
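One constructive method, greedy forward search, can be sketched as follows. The stopping rule (stop when no single added feature lowers the validation error) and the synthetic `toy_error` function are assumptions for illustration; in practice `error_fn` would train a model on the given features and return its validation error.

```python
def forward_selection(n_features, error_fn):
    """Greedy forward search: repeatedly add the single feature that most
    reduces the error returned by error_fn; stop when nothing improves."""
    selected, best_err = [], float("inf")
    remaining = set(range(n_features))
    while remaining:
        err, j = min((error_fn(selected + [j]), j) for j in sorted(remaining))
        if err >= best_err:
            break  # no candidate improves on the current feature set
        selected.append(j)
        remaining.remove(j)
        best_err = err
    return selected, best_err

def toy_error(subset):
    """Hypothetical validation error: features 0 and 2 help, and every
    extra feature costs a little."""
    return 1.0 - 0.4 * (0 in subset) - 0.3 * (2 in subset) + 0.05 * len(subset)

selected, err = forward_selection(4, toy_error)
```

Here the search evaluates at most n + (n - 1) + ... candidate sets instead of 2^n - 1, at the price of possibly missing the best subset.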

Regularization

§ Idea: Modify the objective function to constrain the model choice. Typically adding term $(\sum_{j=1}^{m} |w_j|^p)^{1/p}$.

§ Linear regression -> Ridge regression, Lasso

§ Challenge: Need to adapt the optimization procedure (e.g. handle non-convex objective).

§ Regularization can be viewed as a form of feature selection.

§ This approach is often used for very large natural (non-constructed) feature sets, e.g. images, speech, text, video.
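For linear regression, the two named special cases are usually written as below. This is a sketch in assumed notation: the regularization strength $\lambda \ge 0$ and the squared-error loss are not spelled out on the slide, and ridge conventionally penalizes the squared 2-norm rather than the norm itself.

```latex
% Ridge regression (p = 2, squared norm):
\hat{w}_{\text{ridge}} = \arg\min_{w} \sum_{i=1}^{n} \left(y_i - w^\top x_i\right)^2
    + \lambda \sum_{j=1}^{m} w_j^2

% Lasso (p = 1):
\hat{w}_{\text{lasso}} = \arg\min_{w} \sum_{i=1}^{n} \left(y_i - w^\top x_i\right)^2
    + \lambda \sum_{j=1}^{m} |w_j|
```

The lasso penalty tends to drive some weights exactly to zero, which is the sense in which regularization acts as feature selection.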


Steps to solving a supervised learning problem

1. Decide what the input-output pairs are.

2. Decide how to encode inputs and outputs.

• This defines the input space X and output space Y.

3. Choose a class of hypotheses / representations H.

• E.g. linear functions.

4. Choose an error function (cost function) to define best hypothesis.

• E.g. Least-mean squares.

5. Choose an algorithm for searching through space of hypotheses.

(If doing k-fold cross-validation, re-do feature selection for each fold.)

Evaluate on validation set

Evaluate final model/hypothesis on test set

Parametric supervised learning

§ So far, we assumed we have a dataset of labeled examples.

§ From this, learn a parameter vector of a fixed size such that some error measure based on the training data is minimized.

§ These methods are called parametric, and the main goal is to summarize the data using the parameters.

§ Parametric methods are typically global = one set of parameters for the entire data space.

Non-parametric learning methods

§ Key idea: just store all training examples <xi,yi>.

§ When a query is made, compute the value of the new instance based on the values of the closest (most similar) points.

§ Requirements:

  § A distance function.

  § How many closest points (neighbors) to look at?

  § How do we compute the value of the new point based on the existing values?


What kind of distance metric?

§ Euclidean distance?

§ Weighted Euclidean distance (with weights based on domain knowledge): $d(x, x') = \sum_{j=1}^{m} w_j (x_j - x'_j)^2$?

§ Maximum or minimum difference along any axis?

§ An arbitrary distance or similarity function d, specific for the application at hand (works best, if you have one).
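The candidate distance functions listed above could be written as in the sketch below. The function names are illustrative assumptions; the weighted variant follows the slide's formula literally, so it has no square root.

```python
import math

def euclidean(x, xp):
    """Standard Euclidean distance."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, xp)))

def weighted_sq_euclidean(x, xp, w):
    """d(x, x') = sum_j w_j (x_j - x'_j)^2, as written on the slide."""
    return sum(wj * (a - b) ** 2 for wj, a, b in zip(w, x, xp))

def max_difference(x, xp):
    """Largest absolute difference along any axis."""
    return max(abs(a - b) for a, b in zip(x, xp))

def min_difference(x, xp):
    """Smallest absolute difference along any axis."""
    return min(abs(a - b) for a, b in zip(x, xp))

x, xp = (0.0, 0.0), (3.0, 4.0)
d_euclid = euclidean(x, xp)
d_weighted = weighted_sq_euclidean(x, xp, w=(1.0, 0.25))  # down-weight axis 2
d_max = max_difference(x, xp)
d_min = min_difference(x, xp)
```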

Simple idea: Connect the dots!

[Figure: Wisconsin data set, classification, nearest neighbor. x-axis: tumor size (mm?); y-axis: non-recurring (0) vs. recurring (1). Source slide: COMP-652, Lecture 7 - September 27, 2012.]


Simple idea: Connect the dots!

[Figure: Wisconsin data set, regression. x-axis: nucleus size; y-axis: time to recurrence.]


One-nearest neighbor

§ Given: Training data X, distance metric d on X.

§ Learning: Nothing to do! (Just store the data).

§ Prediction: For a new point xnew:

  § Find the nearest training sample xi*, where $i^* = \arg\min_i d(x_i, x_{new})$.

  § Predict ynew = yi*.
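The prediction rule can be sketched in a few lines. The squared Euclidean distance as the default metric, the function names, and the toy data are assumptions made for illustration.

```python
def sq_euclidean(a, b):
    """Squared Euclidean distance (same nearest neighbor as Euclidean)."""
    return sum((u - v) ** 2 for u, v in zip(a, b))

def one_nn_predict(train_x, train_y, x_new, d=sq_euclidean):
    """Return the label of the training point closest to x_new under d."""
    i_star = min(range(len(train_x)), key=lambda i: d(train_x[i], x_new))
    return train_y[i_star]

# "Learning" is just storing the data.
train_x = [(12.0, 0.2), (15.0, 0.4), (22.0, 0.9), (25.0, 0.7)]
train_y = [0, 0, 1, 1]

pred_a = one_nn_predict(train_x, train_y, (21.0, 0.8))
pred_b = one_nn_predict(train_x, train_y, (13.0, 0.3))
```

Every query scans all stored points, so prediction costs grow linearly with the size of the training set in this naive form.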


What does the approximator look like?

§ Nearest-neighbor does not explicitly compute decision boundaries.

§ But the effective decision boundaries are a subset of the Voronoi diagram for the training data.

§ Each decision boundary is a line segment that is equidistant between two points of opposite classes.


Choice of distance metric is important!

[Figure: nearest-neighbor regions in a two-dimensional input space under two distance metrics. Left: both attributes weighted equally, $Dist(x_i, x_j) = (x_{i1} - x_{j1})^2 + (x_{i2} - x_{j2})^2$; Right: second attribute weighted more, $Dist(x_i, x_j) = (x_{i1} - x_{j1})^2 + (3x_{i2} - 3x_{j2})^2$. From Andrew W. Moore, Instance-based learning, Slides 11-12, Copyright © 2001, 2003.]

§ The relative scalings in the distance metric affect region shapes.

§ Other metrics: Mahalanobis, rank-based, correlation-based (Stanfill+Waltz, Maes' Ringo system...).


Distance metric tricks

§ You may need to do feature preprocessing:

  § Scale the input dimensions (or normalize them).

  § Remove noisy inputs.

  § Determine weights for attributes based on cross-validation (or information-theoretic methods).

§ Distance metric is often domain-specific.

  § E.g. string edit distance in bioinformatics.

  § E.g. trajectory distance in time series models for walking data.

§ Distance can be learned sometimes.
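To illustrate why scaling the input dimensions matters, the sketch below standardizes each feature to zero mean and unit standard deviation (one common choice, assumed here) and shows that the nearest neighbor of a query changes. The data and helper names are hypothetical.

```python
import math

def standardize(X):
    """Rescale each column to zero mean and unit standard deviation.
    Returns the scaled data and a function that scales new points."""
    n, m = len(X), len(X[0])
    means = [sum(row[j] for row in X) / n for j in range(m)]
    stds = [math.sqrt(sum((row[j] - means[j]) ** 2 for row in X) / n) or 1.0
            for j in range(m)]
    def scale(row):
        return [(row[j] - means[j]) / stds[j] for j in range(m)]
    return [scale(r) for r in X], scale

def nearest(X, q):
    """Index of the point in X closest to q (squared Euclidean distance)."""
    return min(range(len(X)),
               key=lambda i: sum((a - b) ** 2 for a, b in zip(X[i], q)))

# Feature 0 lives in [0, 1]; feature 1 is measured in the thousands.
X = [[0.1, 1000.0], [0.9, 1010.0], [0.2, 3000.0], [0.8, 2990.0]]
q = [0.12, 2400.0]

raw_nn = nearest(X, q)                 # decided almost entirely by feature 1
Xs, scale = standardize(X)
scaled_nn = nearest(Xs, scale(q))      # both features now contribute
```

Without scaling, the large-magnitude feature dominates the distance; after standardization the query's neighbor is the point that is close in both coordinates.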

k-nearest neighbor (kNN)

§ Given: Training data X, distance metric d on X.

§ Learning: Nothing to do! (Just store the data).

§ Prediction:

  § For a new point xnew, find the k nearest training samples to xnew.

  § Let their indices be i1, i2, …, ik.

  § Predict: y = mean/median of {yi1, yi2, …, yik} for regression; y = majority of {yi1, yi2, …, yik} for classification, or empirical probability of each class.
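A minimal sketch of the kNN prediction step for both tasks follows. The function names, the absolute-difference metric, and the one-dimensional toy data are assumptions; the regression variant uses the mean (the median would be an equally valid choice per the slide).

```python
from collections import Counter

def k_nearest(train_x, x_new, k, d):
    """Indices i1, ..., ik of the k training points closest to x_new."""
    order = sorted(range(len(train_x)), key=lambda i: d(train_x[i], x_new))
    return order[:k]

def knn_classify(train_x, train_y, x_new, k, d):
    """Majority label plus the empirical class probabilities."""
    votes = Counter(train_y[i] for i in k_nearest(train_x, x_new, k, d))
    label = votes.most_common(1)[0][0]
    probs = {c: count / k for c, count in votes.items()}
    return label, probs

def knn_regress(train_x, train_y, x_new, k, d):
    """Mean target value of the k nearest neighbors."""
    idx = k_nearest(train_x, x_new, k, d)
    return sum(train_y[i] for i in idx) / k

abs_diff = lambda a, b: abs(a - b)
train_x = [10.0, 12.0, 14.0, 20.0, 22.0, 24.0]
labels = [0, 0, 0, 1, 1, 1]
targets = [5.0, 6.0, 7.0, 30.0, 32.0, 34.0]

label, probs = knn_classify(train_x, labels, 15.5, k=3, d=abs_diff)
value = knn_regress(train_x, targets, 15.5, k=3, d=abs_diff)
```

For the query 15.5 the three nearest points are 14, 12, and 20, so two of three votes go to class 0 and the regression output is the mean of their targets.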

[Figure series: kNN classification on the Wisconsin data set for k = 2, 3, 5, 10, 15, and 20 (panel titles: "Classification, 2-nearest neighbor, empirical distribution" through "Classification, 20-nearest neighbor"). Each panel plots the k-nearest neighbor mean. x-axis: tumor size (mm?); y-axis: non-recurring (0) vs. recurring (1).]

[Figure series: kNN regression on the Wisconsin data set for k = 2, 3, 5, and 10 (panel titles: "Regression, 2-nearest neighbor, mean prediction" through "Regression, 10-nearest neighbor"). x-axis: nucleus size; y-axis: time to recurrence.]


Bias-variance trade-off

§ What happens if k is low?

  § Very non-linear functions can be approximated, but we also capture the noise in the data. Bias is low, variance is high.

§ What happens if k is high?

  § The output is much smoother, less sensitive to data variation. High bias, low variance.

§ A validation set can be used to pick the best k.
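Picking k with a validation set might look like the sketch below. The synthetic data (y = sin(x) plus Gaussian noise), the candidate values of k, and the function names are all assumptions for illustration.

```python
import math
import random

def knn_regress(train, x_new, k):
    """Mean target of the k training points closest to x_new (1-D inputs)."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x_new))[:k]
    return sum(y for _, y in nearest) / k

def validation_mse(train, val, k):
    """Mean squared error of kNN regression on the validation set."""
    return sum((knn_regress(train, x, k) - y) ** 2 for x, y in val) / len(val)

def pick_k(train, val, candidates):
    """Return the candidate k with the lowest validation error."""
    errors = {k: validation_mse(train, val, k) for k in candidates}
    best_k = min(errors, key=errors.get)
    return best_k, errors

rng = random.Random(0)

def sample(n):
    xs = [rng.uniform(0.0, 6.0) for _ in range(n)]
    return [(x, math.sin(x) + rng.gauss(0.0, 0.3)) for x in xs]

train, val = sample(80), sample(40)
best_k, errors = pick_k(train, val, candidates=[1, 3, 5, 9, 15, 41, 80])
```

At k = 80 every prediction is the global mean of the training targets (maximal smoothing, high bias), while k = 1 reproduces the noise of individual points (high variance); the validation error is typically lowest somewhere in between.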


Limitations of k-nearest neighbor (kNN)

§ A lot of discontinuities!

§ Sensitive to small variations in the input data.

§ Can we fix this but still keep it (fairly) local?


Distance-weighted (kernel-based) NN

§ Given: Training data X, distance metric d, weighting function w: R → R.

§ Learning: Nothing to do! (Just store the data).

§ Prediction:

  § Given input xnew.

  § For each xi in the training data X, compute wi = w(d(xi, xnew)).

  § Predict: $y = \sum_i w_i y_i / \sum_i w_i$.

§ How should we weigh the distances?

Some weighting functions

$$\frac{1}{d(x_i, x)} \qquad \frac{1}{d(x_i, x)^2} \qquad \frac{1}{c + d(x_i, x)^2} \qquad e^{-\frac{d(x_i, x)^2}{\sigma^2}}$$
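The four weighting functions and the weighted prediction y = ∑i wi yi / ∑i wi can be sketched as follows. The function names, default values of c and σ, and the toy data are assumptions; note that the two pure inverse weightings are undefined when the query coincides with a training point (d = 0).

```python
import math

def inverse(d):
    return 1.0 / d                      # undefined at d = 0

def inverse_sq(d):
    return 1.0 / d ** 2                 # undefined at d = 0

def inverse_sq_offset(d, c=1.0):
    return 1.0 / (c + d ** 2)           # c > 0 keeps the weight finite

def gaussian(d, sigma=1.0):
    return math.exp(-d ** 2 / sigma ** 2)

def weighted_predict(train_x, train_y, x_new, d, w):
    """Weighted mean of all training targets: sum_i w_i y_i / sum_i w_i."""
    weights = [w(d(xi, x_new)) for xi in train_x]
    return sum(wi * yi for wi, yi in zip(weights, train_y)) / sum(weights)

abs_diff = lambda a, b: abs(a - b)
train_x = [0.0, 1.0, 10.0]
train_y = [0.0, 1.0, 1.0]

# Small sigma: only the two nearby points matter.
pred_small = weighted_predict(train_x, train_y, 0.5, abs_diff,
                              lambda dist: gaussian(dist, sigma=1.0))
# Large sigma: every point gets nearly the same vote.
pred_large = weighted_predict(train_x, train_y, 0.5, abs_diff,
                              lambda dist: gaussian(dist, sigma=100.0))
```

With a small σ the prediction is the local average (0.5 here); with a very large σ it approaches the global mean of the targets (2/3).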

Gaussian weighting, small, medium, and large σ

[Figure series: Gaussian-weighted nearest neighbor on the Wisconsin classification data, with σ = 0.25 (small), σ = 2 (medium), and σ = 5 (large). x-axis: tumor size (mm?); y-axis: non-recurring (0) vs. recurring (1).]

§ With large σ, all examples get to vote! Curve is smoother, but perhaps too smooth.

Locally-weighted linear regression

• Weighted linear regression: different weights in the error function for different points (see answer to homework 1)

• Locally weighted linear regression: weights depend on the distance to the query point

• Uses a local linear fit (rather than just an average) around the query point

• If the distance metric is well tuned, it can lead to really good results (can represent non-linear functions easily and faithfully)
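For one-dimensional inputs, locally-weighted linear regression has a closed form, sketched below. The Gaussian weighting exp(-(x - x_query)^2 / σ^2), the function name, and the toy data are assumptions for illustration; the fit solves the weighted least-squares problem for a line a + b·x around each query.

```python
import math

def lwr_predict(xs, ys, x_query, sigma):
    """Fit a weighted least-squares line around x_query and evaluate it there."""
    w = [math.exp(-(x - x_query) ** 2 / sigma ** 2) for x in xs]
    sw = sum(w)
    sx = sum(wi * x for wi, x in zip(w, xs))
    sy = sum(wi * y for wi, y in zip(w, ys))
    sxx = sum(wi * x * x for wi, x in zip(w, xs))
    sxy = sum(wi * x * y for wi, x, y in zip(w, xs, ys))
    b = (sw * sxy - sx * sy) / (sw * sxx - sx ** 2)   # local slope
    a = (sy - b * sx) / sw                            # local intercept
    return a + b * x_query

# Exactly linear data is reproduced for any bandwidth.
xs_lin = [0.0, 1.0, 2.0, 3.0, 4.0]
ys_lin = [2.0 * x + 1.0 for x in xs_lin]
pred_lin = lwr_predict(xs_lin, ys_lin, 2.5, sigma=1.0)

# Non-linear data: a narrow bandwidth tracks the curve, a huge one
# degenerates to a single global line.
xs_sq = [-2.0 + 0.5 * i for i in range(9)]
ys_sq = [x ** 2 for x in xs_sq]
pred_local = lwr_predict(xs_sq, ys_sq, 0.0, sigma=0.5)
pred_global = lwr_predict(xs_sq, ys_sq, 0.0, sigma=1e6)
```

At the query 0 the true value of x^2 is 0; the local fit lands close to it, while the effectively unweighted global line predicts the mean of the targets.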

Lazy vs eager learning

§ Lazy learning: Wait for query before generalization.

§ E.g. Nearest neighbour.

§ Eager learning: Generalize before seeing query.

§ E.g. Logistic regression, LDA, decision trees, neural networks.


Pros and cons of lazy and eager learning

§ Eager learners create global approximation.

§ Lazy learners create many local approximations.

§ If they use the same hypothesis space, a lazy learner can represent more complex functions (e.g., consider H = linear function).

§ Lazy learning has much faster training time.

§ Eager learner does the work off-line, summarizes lots of data with few parameters.

§ Lazy learner typically has slower query answering time (depends on number of instances and number of features) and requires more memory (must store all the data).


Scaling up

§ kNN in high-dimensional feature spaces?

  § In high dim. spaces, the distance between near and far points appears similar.

  § A few points (“hubs”) show up repeatedly in the top kNN (Radovanovic et al., 2009).

§ kNN with larger number of datapoints?

  § Can be implemented efficiently, O(log n) at retrieval time, if we use smart data structures:

    § Condensation of the dataset.

    § Hash tables in which the hashing function is based on the distance metric.

    § KD-trees (Tutorial: http://www.autonlab.org/autonweb/14665)
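As an illustration of the KD-tree idea, here is a minimal sketch of building a tree and answering a nearest-neighbor query with pruning. The dictionary-based node layout and function names are assumptions; the logarithmic query time is an expected-case figure for low-dimensional data, and this simple version makes no attempt at balancing under updates.

```python
import random

def build_kdtree(points, depth=0):
    """Recursively split the points at the median along alternating axes."""
    if not points:
        return None
    axis = depth % len(points[0])
    points = sorted(points, key=lambda p: p[axis])
    mid = len(points) // 2
    return {"point": points[mid], "axis": axis,
            "left": build_kdtree(points[:mid], depth + 1),
            "right": build_kdtree(points[mid + 1:], depth + 1)}

def sq_dist(a, b):
    return sum((u - v) ** 2 for u, v in zip(a, b))

def nearest(node, query, best=None):
    """Return the stored point closest to query (squared Euclidean distance)."""
    if node is None:
        return best
    if best is None or sq_dist(node["point"], query) < sq_dist(best, query):
        best = node["point"]
    diff = query[node["axis"]] - node["point"][node["axis"]]
    near, far = ((node["left"], node["right"]) if diff < 0
                 else (node["right"], node["left"]))
    best = nearest(near, query, best)
    # Only search the other side if the splitting plane is closer than
    # the best point found so far.
    if diff ** 2 < sq_dist(best, query):
        best = nearest(far, query, best)
    return best

rng = random.Random(1)
points = [(rng.random(), rng.random()) for _ in range(200)]
tree = build_kdtree(points)
queries = [(rng.random(), rng.random()) for _ in range(20)]
results = [nearest(tree, q) for q in queries]
```

The pruning test is what saves work: whole subtrees are skipped whenever they lie farther away than the current best candidate.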

When to use instance-based learning

§ Instances map to points in R^n, or else a distance metric is given.

§ Not too many attributes per instance (e.g. <20), otherwise all points appear to be at a similar distance, and noise becomes a big issue.

§ Easily fooled by irrelevant attributes (for most distance metrics.)

§ Can produce confidence intervals in addition to the prediction.

§ Provides a variable resolution approximation (based on density of points).
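The remark that all points appear to be at a similar distance when there are many attributes can be checked with a small simulation. This is a sketch under assumptions: points are drawn uniformly from the unit hypercube, and the "contrast" statistic (farthest distance divided by nearest distance) is an illustrative measure, not one defined in the slides.

```python
import math
import random

def contrast(dim, n_points=200, seed=0):
    """Ratio of the farthest to the nearest Euclidean distance from a random
    query to n_points random points in the unit hypercube [0, 1]^dim."""
    rng = random.Random(seed)
    query = [rng.random() for _ in range(dim)]
    dists = []
    for _ in range(n_points):
        p = [rng.random() for _ in range(dim)]
        dists.append(math.sqrt(sum((a - b) ** 2 for a, b in zip(p, query))))
    return max(dists) / min(dists)

low_dim = contrast(2)      # nearest neighbor is far closer than the farthest
high_dim = contrast(500)   # ratio approaches 1: neighbors are barely "nearer"
```

When the ratio is close to 1, the identity of the nearest neighbor is determined largely by noise, which is one reason kNN degrades with many (especially irrelevant) attributes.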

Application

Hays & Efros, Scene Completion Using Millions of Photographs, CACM, 2008.

http://graphics.cs.cmu.edu/projects/scene-completion/scene_comp_cacm.pdf

What you should know

§ Difference between eager vs lazy learning.

§ Key idea of non-parametric learning.

§ The k-nearest neighbor algorithm for classification and regression, and its properties.

§ The distance-weighted NN algorithm and locally-weighted linear regression.
