Collaborative Heuristic Evaluation, UPA2010


Collaborative Heuristic Evaluation: improving the effectiveness of heuristic evaluation

Helen Petrie, Lucy Buykx
Human Computer Interaction Research Group
Department of Computer Science
University of York, UK

Standard heuristic evaluation (SHE)

Strengths
• Independent evaluator viewpoints
• High volume of potential problems

Weaknesses
• Laborious, boring, frustrating
• Time consuming to combine problems from individual evaluators
• High proportion of trivial problems

Collaborative Heuristic Evaluation (CHE)

Method
– Evaluators work in a group
– Propose potential usability problems
– Create problem list in real time
– Independently rate severity

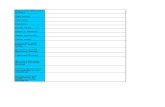

CHE Problem rating sheet

Rating scale: 1 2 3 4 5

Experiment method

• 4 websites
– airline, train, tourist information sites
• 2 groups of 5 usability professionals
• 2 methods: SHE and CHE
– each professional performed each method on two websites

Experiment design

Columns: Group 1, Group 2
CHE: National Rail, Visit Britain, British Towns, Easy Jet
SHE: Easy Jet, British Towns, Visit Britain, National Rail

Analysis of data

• Made master problem list for SHE
– Only included problems found on pages visited by all evaluators
– Relaxed matching of problems
• Master list for CHE already there
• Analysed how many evaluators found each problem in each method
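The last analysis step, counting how many evaluators found each problem, can be sketched as follows. This is a minimal illustration and not the authors' actual procedure: the evaluator labels, problem IDs, and the function name `evaluators_per_problem` are hypothetical, and it assumes problems have already been matched to a master list.

```python
from collections import Counter


def evaluators_per_problem(findings):
    """Map each master-list problem ID to the number of evaluators who reported it.

    `findings` maps an evaluator label to the collection of problem IDs
    that evaluator reported (hypothetical data layout).
    """
    counts = Counter()
    for problems in findings.values():
        # Use a set so an evaluator is counted at most once per problem.
        counts.update(set(problems))
    return counts


# Hypothetical example: three evaluators, three matched problems.
findings = {
    "E1": {"P1", "P2"},
    "E2": {"P2", "P3"},
    "E3": {"P2"},
}
counts = evaluators_per_problem(findings)
```

Here `counts["P2"]` is 3 because all three hypothetical evaluators reported that problem, while `P1` and `P3` were each reported by one evaluator.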

Results

SHE: 22.9 usability problems per website
• Pool of 250 problems on pages visited by all evaluators

CHE: 42.7 usability problems per website
• Pool of 153 problems (smaller pool, because of greater overlap between evaluators …)

Inter-evaluator agreement

[Figure: National Rail website, SHE and CHE]

Any-two agreement: measure of evaluator effect

Agree it’s a problem
• SHE: 8.9%
• CHE: 91.6%

Agree severity rating
• SHE: 36.0% (n avg = 19)
• CHE: 37.5% (n avg = 38)
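The slides do not spell out how any-two agreement is computed. A minimal sketch, assuming the commonly used definition from the evaluator-effect literature (the overlap between two evaluators' problem sets divided by their union, averaged over every pair of evaluators), is shown below. The function name and example data are hypothetical, and the handling of two empty problem sets is an assumption of this sketch.

```python
from itertools import combinations


def any_two_agreement(problem_sets):
    """Average of |Pi & Pj| / |Pi | Pj| over all pairs of evaluators."""
    pairs = list(combinations(problem_sets, 2))
    if not pairs:
        raise ValueError("need at least two evaluators")
    total = 0.0
    for a, b in pairs:
        union = a | b
        # Assumption: two evaluators who both found nothing agree fully.
        total += len(a & b) / len(union) if union else 1.0
    return total / len(pairs)


# Hypothetical example: three evaluators' matched problem sets.
problem_sets = [{"P1", "P2"}, {"P2", "P3"}, {"P2"}]
agreement = any_two_agreement(problem_sets)
```

For the hypothetical sets above, the three pairwise overlaps are 1/3, 1/2 and 1/2, so the any-two agreement is 4/9, or roughly 44%.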

Conclusion

CHE …
• Exposes evaluators to each other's ideas
• Creates more agreement about problems
• More efficient as a method
– creates master problem list in the session
– larger number of problems per hour
• Generates discussion/ideas for solving problems
• More enjoyable for evaluators

Matching process

• “don’t know what overtaking trains means”
• “don’t know what ‘include overtaking trains in journey results’ means”
• “want to be able to select any time of day but drop down only offers 4 hour segments”
• “specified times is well hidden and inflexible - want to go from any time”