Cluster Computers


Introduction

• Cluster computing
  – Standard PCs or workstations connected by a fast network
  – Good price/performance ratio
  – Exploit existing (idle) machines or use (new) dedicated machines

• Cluster computers versus supercomputers
  – Processing power is similar: based on microprocessors
  – Communication performance was the key difference
  – Modern networks (Myrinet, Infiniband) have bridged this gap

Overview

• Cluster computers at our department
  – DAS-1: 128-node Pentium-Pro / Myrinet cluster (gone)
  – DAS-2: 72-node dual-Pentium-III / Myrinet-2000 cluster
  – DAS-3: 85-node dual-core dual Opteron / Myrinet-10G cluster
• Part of a wide-area system: Distributed ASCI Supercomputer
• Network interface protocols for Myrinet
  – Low-level systems software
  – Partly runs on the network interface card (firmware)

DAS-1 node configuration

• 200 MHz Pentium Pro
• 128 MB memory
• 2.5 GB disk
• Fast Ethernet 100 Mbit/s
• Myrinet 1.28 Gbit/s (full duplex)
• Operating system: Red Hat Linux

DAS-2 Cluster (2002-2006)

• 72 nodes, each with 2 CPUs (144 CPUs in total)
• 1 GHz Pentium-III
• 1 GB memory per node
• 20 GB disk
• Fast Ethernet 100 Mbit/s
• Myrinet-2000 2 Gbit/s (crossbar)
• Operating system: Red Hat Linux
• Part of wide-area DAS-2 system (5 clusters with 200 nodes in total)

[Figure labels: Myrinet switch, Ethernet switch]

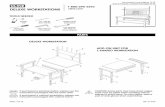

DAS-3 Cluster (Sept. 2006)

• 85 nodes, each with 2 dual-core CPUs (340 cores in total)
• 2.4 GHz AMD Opterons (64 bit)
• 4 GB memory per node
• 250 GB disk
• Gigabit Ethernet
• Myrinet-10G 10 Gb/s (crossbar)
• Operating system: Scientific Linux
• Part of wide-area DAS-3 system (5 clusters with 263 nodes in total), using SURFnet-6 optical network with 40-80 Gb/s wide-area links

DAS-3 Networks

[Network diagram; labels recovered from the figure:]

• 85 compute nodes
• 85 * 1 Gb/s Ethernet (Nortel 5530 + 3 * 5510 Ethernet switch)
• 85 * 10 Gb/s Myrinet (Myri-10G switch)
• 10 Gb/s Ethernet blade
• 8 * 10 Gb/s Ethernet (fiber)
• Nortel OME 6500 with DWDM blade
• 80 Gb/s DWDM to SURFnet6
• 1 or 10 Gb/s campus uplink
• Headnode (10 TB mass storage), with 10 Gb/s Myrinet and 10 Gb/s Ethernet

DAS-1 Myrinet

Components:
• 8-port switches
• Network interface card for each node (on PCI bus)
• Electrical cables: reliable links

Myrinet switches:
• 8 x 8 crossbar switch
• Each port connects to a node (network interface) or another switch
• Source-based, cut-through routing
• Less than 1 microsecond switching delay
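Source-based routing means the sender puts the whole path in the packet header, and each switch only reads the next output port. The sketch below illustrates that idea under simplifying assumptions: the `wiring` table, the switch and node names, and the `deliver` function are all hypothetical, and the cut-through timing behaviour is not modelled.

```python
from typing import Dict, List, Tuple

# Hypothetical wiring table: (switch, output port) -> what hangs off that port.
# ("switch", name) is another switch; ("node", name) is a host network interface.
Wiring = Dict[Tuple[str, int], Tuple[str, str]]


def deliver(wiring: Wiring, first_switch: str, route: List[int]) -> str:
    """Follow a source route; every switch consumes the leading port number."""
    current = first_switch
    remaining = list(route)
    while remaining:
        port = remaining.pop(0)  # the switch strips the first route entry
        kind, name = wiring[(current, port)]
        if kind == "node":
            if remaining:
                raise ValueError("route continues past a node")
            return name
        current = name  # forward the packet to the next switch
    raise ValueError("route ended at a switch, not at a node")


# Two illustrative switches: S0 port 7 leads to S1, whose port 3 leads to node B.
wiring = {
    ("S0", 0): ("node", "A"),
    ("S0", 7): ("switch", "S1"),
    ("S1", 3): ("node", "B"),
}
print(deliver(wiring, "S0", [7, 3]))  # B
```

Because the route is fixed by the sender, the switches need no routing tables, which is one reason the per-switch delay can stay small.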

128-node DAS-1 cluster

• Ring topology would have:
  – 22 switches
  – Poor diameter: 11
  – Poor bisection width: 2
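The ring figures can be reproduced with a little arithmetic. The sketch below assumes that each 8-port switch spends 2 ports on its ring neighbours and the remaining 6 on nodes, and that diameter is counted in switch-to-switch hops; the function name `ring_metrics` is illustrative.

```python
import math


def ring_metrics(nodes: int, ports_per_switch: int):
    """Return (switches, diameter, bisection width) for a ring of switches."""
    ring_ports = 2  # one link to each neighbouring switch in the ring
    nodes_per_switch = ports_per_switch - ring_ports
    switches = math.ceil(nodes / nodes_per_switch)
    diameter = switches // 2  # farthest switch is halfway around the ring
    bisection_width = 2  # cutting a ring in two always severs two links
    return switches, diameter, bisection_width


print(ring_metrics(128, 8))  # (22, 11, 2)
```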

Topology 128-node cluster

• 4 x 8 grid with wrap-around
  – Each switch is connected to 4 other switches and 4 PCs
  – 32 switches (128/4)
  – Diameter: 6
  – Bisection width: 8
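The numbers for the 4 x 8 grid with wrap-around (a torus) can be checked with a short script. This is a sketch under stated assumptions: diameter is measured in switch-to-switch hops using breadth-first search, and the bisection width is taken from a straight cut across the longer dimension, which assumes that dimension is even and larger than 2. All function names are illustrative.

```python
from collections import deque


def torus_neighbors(r, c, rows, cols):
    """The four neighbouring switches of (r, c), with wrap-around links."""
    return [((r - 1) % rows, c), ((r + 1) % rows, c),
            (r, (c - 1) % cols), (r, (c + 1) % cols)]


def torus_diameter(rows, cols):
    """Longest shortest path, found by BFS from one switch.

    A torus looks the same from every switch, so one source is enough.
    """
    dist = {(0, 0): 0}
    queue = deque([(0, 0)])
    while queue:
        cur = queue.popleft()
        for nxt in torus_neighbors(*cur, rows, cols):
            if nxt not in dist:
                dist[nxt] = dist[cur] + 1
                queue.append(nxt)
    return max(dist.values())


def torus_bisection_width(rows, cols):
    """Links severed when the longer dimension is cut into two equal halves.

    Each line along the longer dimension is a ring, so it is cut twice.
    """
    shorter = min(rows, cols)
    return 2 * shorter


nodes, pcs_per_switch = 128, 4
print(nodes // pcs_per_switch)      # 32 switches
print(torus_diameter(4, 8))         # 6
print(torus_bisection_width(4, 8))  # 8
```

Compared with the ring, the torus needs more switches (32 instead of 22) but roughly halves the diameter and quadruples the bisection width.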

Performance

• DAS-2:
  – 9.6 μsec 1-way null-latency
  – 168 MB/sec throughput
• DAS-3:
  – 2.6 μsec 1-way null-latency
  – 950 MB/sec throughput
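To get a feel for what these figures mean for different message sizes, one can plug them into a simple linear cost model, time = latency + size / throughput. This model is an assumption added here for illustration, not something the slides state, and it takes 1 MB to mean 10^6 bytes. The names `transfer_time` and `half_performance_size` are hypothetical.

```python
def transfer_time(size_bytes: float, latency_s: float, bytes_per_s: float) -> float:
    """Estimated one-way time under the assumed linear model."""
    return latency_s + size_bytes / bytes_per_s


def half_performance_size(latency_s: float, bytes_per_s: float) -> float:
    """Message size at which latency and transmission time are equal."""
    return latency_s * bytes_per_s


# Figures from the slide; MB is interpreted as 10**6 bytes (assumption).
clusters = {
    "DAS-2": (9.6e-6, 168e6),
    "DAS-3": (2.6e-6, 950e6),
}

for name, (latency, throughput) in clusters.items():
    for size in (64, 64 * 1024, 1024 * 1024):
        t_us = transfer_time(size, latency, throughput) * 1e6
        print(f"{name}: {size:>8} bytes -> {t_us:10.1f} microseconds")
    n_half = half_performance_size(latency, throughput)
    print(f"{name}: half-performance message size ~ {n_half:.0f} bytes")
```

Under this model, small messages are dominated by the null-latency and large ones by the throughput, so both numbers matter when comparing the two clusters.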