Changes Over Time Practice Milton Mermikides

Changes Over Time: Practice

A portfolio of collaborative electroacoustic works demonstrating the heterogeneous reapplication of jazz improvisational and time-feel techniques and models.

Contents

Introduction

Portfolio Contents

Compositional Notes

1. Hidden Music

2. Reworked Ensemble

3. Extended Solo Instruments

4. Research Output (Performances, Recordings and Events)

5. Ongoing and Upcoming Projects

References

Introduction

This portfolio comprises nine CDs and five DVDs, from which representative extracts are compiled on CD 2 (Core Works). Although many compositional mechanisms and themes are common to all the works, the selected pieces have been separated into three main categories:

1) Hidden Music. Acousmatic electronic works that map external physical phenomena onto generative, quasi-improvisational systems (M-Space and expressive contour): Primal Sound (2004, 2007), Head Music (2004, 2007), Bloodlines (2005), Microcosmos (2006), Dendro (2009) and Membrane (2009).

2) Reworked Ensemble. Works for a variety of mixed ensembles, employing electronics alongside traditional instruments, M-Space modelling and progressive interactive improvisational strategies: Selfish Theme (2006) and extracts from the Rat Park Live (2007), 11th Light (2008), Rumori (2008) and Jazz Reworked (2010) projects.

3) Extended Solo Instruments. Pieces for solo instruments and live electronics

involving a range of improvisational elements and technological extensions

to the instrument: String Theory (2006), Event Horizon (2007), Omnia 5:58

(2008), Assini (2009), Dark:Light (2009) and Blue Tension (2010).

This categorization allows for a more logical narrative through the works while maintaining a chronology within each category. References are also made to other pieces in the portfolio and to ongoing projects. Score notation is seldom used in these works. When it is, it usually functions as an aide-mémoire, concealing a range of concepts born from collaborative dialogue in rehearsal and from implicit stylistic mechanisms, not unlike those found in a jazz chart. Many pieces in this portfolio were conceived, and are performed, with little or no use of notation.

Graphic representations, electronic flow diagrams and software screenshots of some works are used here to illustrate compositional approaches. However, these are usually post-hoc illustrations of compositions that originated in well-defined but unwritten compositional ideas, technological experimentation or collaborative improvisation. They are rarely made before the work is performed or recorded.

Even the idea of a completed piece is somewhat nebulous. Pieces are often reworked and include major improvisational elements, and electronic systems are transplanted and spliced into other works. No identical, or even ‘ideal’, performance of any work exists. Borrowing terminology from M-Space, the work itself may be seen as a higher-level field grouping a myriad of varying, but related, possible performances.

In this way, the composer in these works migrates between roles. These range from the traditional author of the entire conception of the piece to a facilitator who defers various portions of creative responsibility to performers, random input and external physical phenomena. Within this portfolio, the roles of ‘jazz composer’, Varèse’s ‘organiser of sound’ and ‘generative sound artist’ all contribute, in varying measures, to that of the traditional composer.

The commentaries on each work vary in level of detail and technical focus. This is so as to introduce compositional techniques in a logical sequence, maintain a narrative and avoid unnecessary duplication of similar material. The works have been selected to present a range of compositional approaches and musical contexts, which may be explored more deeply across the whole portfolio at the examiner’s discretion.

Following the compositional notes is a list of performances, research events and exhibitions that feature works from the submitted portfolio, and a survey of ongoing and upcoming creative research projects.

Portfolio Contents

CD 1 Audio Examples (Supporting audio material)

CD 2 Core Works (Selected works from the portfolio 2004-10)

CD 3 Hidden Music (Lucid 2008)

CD 4 21st Century Bow: New Works for HyperBow (RAM 2007)

CD 5 SPEM (CoffeeLoop 2007)

CD 6 Tensions (Electronic works for harp, cello and guitar 2004-10)

CD 7 Jazz Reworked (DeWolfe 2010)

CD 8 Terminal (Mute 2010)

CD 9 Nexus (Selected ensemble works 2004-9)

DVD 1 Martino:Unstrung (Feature-length documentary, Sixteen Films 2007)

DVD 2 Microcosmos (Video installation, Wellcome Trust 2006)

DVD 3 Wake Up And Smell The Coffee (Planetarium Movie, SEEDA 2009)

DVD 4 Rat Park: Live (Live ensemble performance, Lucid 2006)

DVD 5 Organisations of Sound (Selected performances from UK, USA, Sardinia, China, Japan)

Compositional Notes

1 Hidden Music

1.1 Primal Sound

1.2 Head Music

1.3 Bloodlines

1.4 Microcosmos

1.5 Membrane

2 Reworked Ensemble

2.1 The Selfish Theme

2.2 Rat Park Live

2.3 11th Light

2.4 Rumori

2.5 Double Back

3 Extended Solo Instruments

3.1 String Theory and Event Horizon

3.2 Omnia 5:58

3.3 Assini

3.4 Dark:Light

3.5 Blue Tension

1. Hidden Music

Electronic quasi-improvisational works guided by external physical phenomena

1.1 Primal Sound (2004-7)

Artist and sculptor Angela Palmer has received much acclaim and publicity for her science/art crossover works. These include MRI images of an Egyptian mummy, a public exhibition of felled Amazonian trees in Trafalgar Square and a photographic exhibition of the most, and least, polluted places on earth (Palmer 2010). She approached the author in January 2004 with a particular project in mind. She had been introduced to Rainer Maria Rilke’s Ur-Geräusch (1919, p 1085-1093), a piece of writing which set out an irresistible challenge:

The coronal suture of the skull (which should now be chiefly

investigated) has let us assume a certain similarity to the

closely wound line that the needle of a phonograph cuts into

the receptive, revolving cylinder of the machine. Suppose, for

instance, one played a trick on this needle and caused it to

retrace a path not made by the graphic translation of a sound,

but self-sufficing and existing in nature – good, let us say it

boldly, if it were (e.g.) even the coronal suture – what would

happen? A sound must come into being, a sequence of

sounds, music…Feelings of what sort? Incredulity, awe, fear,

reverence yes, which of all these feelings prevents me from

proposing a name for the primal sound that would then come

to birth?

Ur-Geräusch (Rilke 1919, p 1087)

Palmer hoped to commission a piece of music inspired by this text, to be used in a

gallery exhibition alongside the skull of an unknown Victorian woman (Figure 1.1.1).

Figure 1.1.1 Image of the coronal suture of an unknown Victorian woman, source material for Primal Sound’s expressive contours (©2004 Angela Palmer).

The translation of physical shapes into musical parameters, or millimetrization, has been explored in composition, perhaps most famously by Villa-Lobos in New York Skyline Melody (Frey 2010). The idea of melodic contour in general is well researched (Adams 1976). Rilke proposes something unusual in the field: a physical shape could represent amplitude against time, rather than pitch against time as in Villa-Lobos’s melodic approach.

With the resources available, a simple ‘phonographic’ rendering of this contour alone did not provide enough complexity for a piece of any significant length, so its shape was also mapped to a variety of other parameters. This may be thought of as a form of reverse engineering of the expressive contours concept (see Changes Over Time: Theory 1.6 p 56-68). In a jazz improvisation, many contours emerge as spontaneous gestures. Here, by contrast, one particular contour is employed to manipulate a host of parameters.

This concept may be termed isokinetos (‘equal gesture’) (see Changes Over Time: Theory 1.4 p 20). In this case it allows the creation of a quasi-improvisational work in which compositional decisions are deferred to a physical pattern. The composer determines the types of relationship between gesture and particular parameters, but the bulk of the resulting musical outcome is not known in advance. There is a sense of discovery in this compositional process quite unlike the experience of, for example, tinkering with a skeletal melodic concept.

Rilke’s mapping was realised electronically, using Max/MSP to inscribe a virtual record groove with a scan of the suture. Figure 1.1.2 shows the mapping together with an electron microscope image of a record groove for comparison. The resulting sound, though short, is rather effective (CD1.47).

Figure 1.1.2 ‘Phonographic’ translation of the coronal suture in Figure 1.1.1, shown above an electron microscope image of a record groove (lower image ©2005 Chris Supranowitz, University of Rochester). The resulting audio is played three times on CD1.47.
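Rilke’s proposal amounts to reading the suture’s height as amplitude against time. The Python function below is a minimal sketch of that idea, not the Max/MSP patch used for the piece. It resamples a traced contour to audio rate and normalises it into a waveform. The function name and its parameters are illustrative assumptions.

```python
def contour_to_waveform(heights, sample_rate=44100, duration_s=1.0):
    """Read a traced contour as a waveform: height becomes amplitude, x becomes time.

    Hypothetical helper (not the original patch): the trace is resampled to the
    audio rate by linear interpolation, then centred and scaled into -1.0..1.0.
    """
    if len(heights) < 2:
        raise ValueError("need at least two contour points")
    n_out = int(sample_rate * duration_s)
    lo, hi = min(heights), max(heights)
    mid = (hi + lo) / 2.0
    half = (hi - lo) / 2.0 or 1.0  # avoid dividing by zero for a flat trace
    out = []
    for i in range(n_out):
        # Fractional position of this output sample along the trace.
        pos = i * (len(heights) - 1) / max(n_out - 1, 1)
        j = min(int(pos), len(heights) - 2)
        frac = pos - j
        h = heights[j] * (1 - frac) + heights[j + 1] * frac
        out.append((h - mid) / half)
    return out
```

A trace of a few hundred points played over a second or less gives only a brief sound. This is consistent with the observation that the phonographic rendering alone is short.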

To create more material, the same contour is also rendered as a microtonal melody. CD1.48 plays the musical translation of the sample indicated in Figure 1.1.3 as a sine wave that follows the contour within the frequency range shown.

Figure 1.1.3 The coronal suture in Figure 1.1.1 rendered as a microtonal melodic expressive contour (CD1.48).
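The melodic rendering can be sketched in the same way with a hypothetical function. It maps each contour height exponentially into a frequency range, so that equal height changes give equal musical intervals; this is an assumption rather than a documented choice. It then glides linearly between successive points and accumulates phase so that the sine wave stays continuous.

```python
import math

def contour_to_sine(heights, f_low, f_high, sample_rate=44100, seconds_per_point=0.05):
    """Render a contour as a gliding (microtonal) sine melody.

    Hypothetical sketch: the exponential height-to-frequency mapping and the
    fixed time per contour point are assumptions, not the original settings.
    """
    lo, hi = min(heights), max(heights)
    span = (hi - lo) or 1.0
    # Exponential mapping: equal height steps give equal musical intervals.
    freqs = [f_low * (f_high / f_low) ** ((h - lo) / span) for h in heights]
    n = int(sample_rate * seconds_per_point)
    samples, phase = [], 0.0
    # Pair each point with the next one; the final point holds its frequency.
    for a, b in zip(freqs, freqs[1:] + freqs[-1:]):
        for i in range(n):
            f = a + (b - a) * i / n                  # linear glide towards the next point
            phase += 2 * math.pi * f / sample_rate   # phase accumulation avoids clicks
            samples.append(math.sin(phase))
    return freqs, samples
```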

To form a harmonic backdrop, a just-intonated scale (Pythagorean A Lydian) is mapped against a tracing of the suture (Figure 1.1.4). This slow translation introduces single sine-wave tones that split as the loops of the contour curve away, creating a gradually converging and diverging harmonic texture. The musical translation that emerges from the sample indicated in Figure 1.1.4, with its characteristic beating frequencies, becomes a recurrent motif in the piece (CD1.49).

Figure 1.1.4 Sine waves released by the coronal suture as it hits predefined trigger points, forming a harmonic texture (CD1.49).
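One possible reading of Figure 1.1.4, offered as an assumption, is one horizontal trigger level per scale degree. Whenever the traced suture crosses level k, a sine tone at degree k is released. The sketch below derives the Pythagorean Lydian ratios by stacking pure 3:2 fifths and reports the crossings. The tonic of 220 Hz (A3) is assumed, and the function names are hypothetical.

```python
from fractions import Fraction

def pythagorean_lydian(tonic_hz=220.0):
    """Lydian scale tuned by stacking six pure 3:2 fifths above the tonic.

    The 220 Hz tonic is an assumption; returns (frequencies, exact ratios).
    """
    ratios, r = [], Fraction(1)
    for _ in range(7):
        ratios.append(r)
        r *= Fraction(3, 2)
        while r >= 2:  # fold each new fifth back into one octave
            r /= 2
    ratios.sort()
    return [tonic_hz * float(x) for x in ratios], ratios

def crossing_events(heights, levels):
    """Hypothetical trigger rule: (index, level_number) for each level crossing."""
    events = []
    for i in range(1, len(heights)):
        a, b = heights[i - 1], heights[i]
        for k, level in enumerate(levels):
            if (a - level) * (b - level) < 0:  # a sign change means a crossing
                events.append((i, k))
    return events
```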

Musical events in Primal Sound are created, and organized structurally, according to systems guided by the coronal suture itself. For example, new events are triggered at each loop point of the contour, as illustrated in Figure 1.1.5 (many events are omitted from the figure for visual clarity). The pan position of each triggered event is determined by the vertical position of its loop point. The event moves through the stereo field until it is faded out where the contour crosses the central line. Since adjacent loops sometimes occur on the same side of the central line, multiple musical events may coexist.

Figure 1.1.5 Compositional structure of Primal Sound. Musical events are triggered at loop points, at pan positions determined by their vertical position (many trigger points are omitted for visual clarity). The suture is employed forwards, and then backwards, following a complete circumnavigation of the skull to form a continuous piece.
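The triggering and panning rules can be sketched with a hypothetical function, under three assumptions:

- A ‘loop point’ is a turning point of the trace, where its slope changes sign.
- The pan value is the point’s vertical offset from the central line, scaled to -1 (left) to +1 (right). Mapping points below the line to the left is an arbitrary choice.
- The event ends at the next crossing of the central line.

```python
def loop_point_events(heights, centre=0.0):
    """Hypothetical reading of Figure 1.1.5: one event per loop (turning) point.

    Assumptions: turning points stand in for loop points, pan is the vertical
    offset scaled to -1..+1, and each event ends at the next centre-line crossing.
    """
    peak = max(abs(h - centre) for h in heights) or 1.0
    events = []
    for i in range(1, len(heights) - 1):
        prev, cur, nxt = heights[i - 1], heights[i], heights[i + 1]
        if (cur - prev) * (nxt - cur) < 0:  # slope changes sign: a turning point
            end = next((j for j in range(i + 1, len(heights))
                        if (heights[j - 1] - centre) * (heights[j] - centre) <= 0),
                       len(heights) - 1)
            events.append({"start": i, "end": end, "pan": (cur - centre) / peak})
    return events
```

Two turning points on the same side of the line share the same end point, so their events overlap. This is one way multiple events come to coexist.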

Primal Sound was initially commissioned as an art installation piece, running continuously for five months in one exhibition at the Royal College of Surgeons, so its ‘loopability’ needed to be considered. The compositional structure was determined by following the coronal suture over the top of the skull and back around the inside. The piece thus ends at the starting point for its next repetition. This is represented in Figure 1.1.5 by the mirrored contour, reflected at the midpoint of the piece.

All visual-audio translations were created using Max/MSP and Jitter, and recorded into Logic 7 for compositional structuring, panning and mixing. Some modulation effects, using fragments of the suture as automation data, were also incorporated. An audio extract of the piece appears on Core Works (CD2.1) and the complete work on Hidden Music (CD3.1).

Primal Sound is essentially an isokinetic expressive contour study, and a demonstration of the extensive use of tightly limited musical material (see Changes Over Time: Theory 1.4 p 17-24). Despite its specificity, however, it has been employed successfully in contexts divorced from its aesthetic origin. The most notable is Martino:Unstrung (DVD 1), where both director Ian Knox and Pat Martino requested the piece as a representation of Martino’s geometrical musical vision and prolific creativity. A complete list of its use in art installations and soundtracks, along with that of the other works from the portfolio, appears in Section 4 (Research Output).

1.2 Head Music (2004, 2007)

Primal Sound was the first in a series of compositions employing biological phenomena as source material. Head Music (2004), another commission by Palmer, was written to accompany an exhibition of works created from MRI scans. Palmer’s ingenious artworks were produced by inscribing MRI images of the human head onto a series of glass tiles. MRI imaging is performed in multiple cross-sectional ‘slices’, which are consolidated to form a three-dimensional model. Similarly, stacking the inscribed glass tiles recreates the three-dimensional model of magnetic resonances. When the tiles are underlit in a darkened gallery, a ghostly sculpture emerges, making permanent the transitory resonances engendered by a momentary brain state (Figure 1.2.1).

Figure 1.2.1 Self-Portrait. MRI images inscribed on a series of glass tiles and underlit (©2004 Angela Palmer).

MRI uses a strong magnetic field to align, and rotate, the hydrogen nuclei in an object. The resulting magnetic signals of the hydrogen nuclei differ between the various constituent soft tissues. In effect, tissue consistency is translated into patterns of light and shade, which Palmer captures as artworks.

A parallel translation process was performed to create the accompanying music. Slivers of the original MRI images were employed as expressive contours, and a translation system (programmed in Max/MSP/Jitter) mapped these to various musical parameters. Primal Sound employed just one flat contour. This work, by contrast, has three dimensions of material, providing countless contours for musical mapping (Figure 1.2.2).

Expressive contours were derived electronically by translating the range of lightness values (0-127) of each sliver into settings for 1) LFO (low-frequency oscillator) frequency, 2) low-pass filter cut-off frequency, 3) a pitch- and rhythm-quantized ‘lattice’ melody, 4) pan position and 5) a gliding microtonal monophonic melody. The derivation of the first three of these expressive contours is illustrated in Figure 1.2.2. The melody in contour 3 is formed using a ‘Villa-Lobos lattice’ with only a few allowable rhythms and equal-tempered scale notes. Melodic fragments like this appear at 3:09 in the piece (CD2.2 and CD3.2). This process also forms the long skeletal notes at the start of the piece, to which the LFO, filtering and pan position contours are applied. The microtonal glides may be heard from 0:34 on CD2.2.

Figure 1.2.2 Illustration of expressive contour derivations from three-dimensional slices of MRI images in Head Music (CD2.2 and CD3.2). The top image is a scan of Pat Martino’s head, from which a reworked 2007 version of the piece was derived (Image ©2007 Sixteen Films).
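As an illustration only, the sketch below maps a sliver’s lightness values (0-127) onto four of the continuous contours and builds a simple ‘lattice’ melody. The commentary does not specify the output ranges, the scale, the two-octave span or the set of allowable rhythms, so all of these are assumptions. The names are hypothetical.

```python
# Hypothetical settings: the commentary does not give these ranges or scales.
SCALE = [0, 2, 4, 5, 7, 9, 11]      # assumed scale degrees, semitones above the root
ALLOWED_RHYTHMS = [0.5, 1.0, 2.0]   # assumed allowable note lengths, in beats

def lightness_to_params(v):
    """Map one lightness value (0-127) onto four continuous contours (assumed ranges)."""
    x = max(0, min(127, v)) / 127.0
    return {
        "lfo_hz": 0.1 * 100 ** x,      # 0.1-10 Hz, exponential
        "cutoff_hz": 100 * 80 ** x,    # 100-8000 Hz, exponential
        "pan": 2 * x - 1,              # -1 (left) to +1 (right)
        "glide_midi": 48 + 24 * x,     # unquantised, hence microtonal, pitch
    }

def lattice_melody(values, step_beats=0.25, root=48):
    """'Villa-Lobos lattice' sketch: snap each value to a scale note over two
    octaves, merge repeated notes, then snap durations to the allowed rhythms."""
    notes = []
    for v in values:
        x = max(0, min(127, v)) / 127.0
        octave, idx = divmod(round(x * 2 * len(SCALE)), len(SCALE))
        pitch = root + 12 * octave + SCALE[idx]
        if notes and notes[-1][0] == pitch:
            notes[-1][1] += step_beats      # a repeated pitch is sustained
        else:
            notes.append([pitch, step_beats])
    return [(p, min(ALLOWED_RHYTHMS, key=lambda r: abs(r - d))) for p, d in notes]
```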

The LFO frequency contour is a special case in its treatment of time. Whereas most contours simply follow the absolute level of a musical variable, LFO frequency determines the rate at which a parameter is altered, in this case the amplitude of a synthesized note. This process may be heard from the start of CD2.2 (and CD3.2), together with image-derived filtering and panning contours. At 1:40-2:00, the effect of a dark area of the image is heard as a very slow rate of amplitude modulation. Multiple contours and mappings for various three-dimensional slivers were combined and overlaid. Intuitive mixing and focusing was then undertaken in real time, guided by the listening experience.
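This contour sets a rate rather than a level, so a rendering has to accumulate the LFO’s phase rather than recompute it from the current rate. Otherwise the modulation would jump whenever the rate changes. The hypothetical sketch below applies this to a sine note with a unipolar tremolo. A dark region of the image would correspond to a low value in `lfo_rates_hz`.

```python
import math

def variable_rate_tremolo(carrier_hz, lfo_rates_hz, sample_rate=44100, seconds_per_value=0.5):
    """Hypothetical sketch: tremolo on a sine note whose LFO *rate* follows a contour.

    Phases are accumulated sample by sample, so a change of rate alters the
    speed of the modulation without a jump in its phase.
    """
    out, carrier_phase, lfo_phase = [], 0.0, 0.0
    n = int(sample_rate * seconds_per_value)
    for rate in lfo_rates_hz:
        for _ in range(n):
            carrier_phase += 2 * math.pi * carrier_hz / sample_rate
            lfo_phase += 2 * math.pi * rate / sample_rate
            gain = 0.5 * (1 + math.sin(lfo_phase))  # unipolar gain, 0.0-1.0
            out.append(gain * math.sin(carrier_phase))
    return out
```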

Head Music has been performed within the context of Palmer’s exhibitions and, as a

purely audio work, in scientific and musical conferences and events. In 2007, it was

reworked using the startling MRI images of Martino’s devastating head trauma, and

appears on the soundtrack of Martino:Unstrung (DVD 1).

1.3 Bloodlines (2004-5)

In 2004-2005 the author was treated for leukaemia in an isolation ward at Charing Cross Hospital. During this time he underwent daily tests for a range of blood cell types, which were monitored closely for signs of infection, relapse and immunity level. This close inspection revealed patterns of population growth, and the idea of mapping these to a musical composition quickly formed. Primal Sound and Head Music used relatively few contours to create multiple musical layers. The 14 blood cell types in Bloodlines, however, allowed for a parallel approach, with each contour controlling just one of 14 concurrent musical elements (Figure 1.3.1).

Figure 1.3.1 A screenshot of blood cell tests, part of the source material for Bloodlines, with expressive contours derived from their changing values. CD2.3 and CD1.50 play audio extracts from two versions of the work, with and without guitar respectively.

These contours are derived in a variety of ways. They range from fairly simple microtonal interpretations (white blood cells) and MIDI pitch translations of values (platelets) to complex mappings of digits to audio samples. For example, haematocrit (HCT) testing of red blood cells was provided in the form of four-digit numbers. Each digit called up one of ten samples (0-9) for each semiquaver subdivision of a beat.
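The HCT digit mapping can be sketched as a simple scheduler. This sketch assumes that the four digits of each day’s reading fill the four semiquavers of one beat. It also assumes that this beat lasts one second, matching the one-day-per-second rate given below. Both the function name and the ten-sample bank indexed 0-9 are hypothetical.

```python
def hct_to_schedule(daily_readings, seconds_per_day=1.0):
    """Schedule sample triggers from daily four-digit HCT readings (hypothetical).

    Assumption: a day's four digits occupy the four semiquavers of one beat,
    and that beat lasts one day's worth of music. Returns (onset_seconds,
    sample_index) pairs, where sample_index 0-9 selects one of ten samples.
    """
    events = []
    semiquaver = seconds_per_day / 4
    for day, reading in enumerate(daily_readings):
        digits = f"{reading:04d}"  # keep leading zeros, e.g. 412 -> "0412"
        if len(digits) != 4:
            raise ValueError(f"expected a four-digit reading, got {reading}")
        for k, d in enumerate(digits):
            events.append((day * seconds_per_day + k * semiquaver, int(d)))
    return events
```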

Each day of treatment is translated into one second of music, and the undulations in health can be heard musically as the piece progresses. In particular, the prominent microtonal swell can be heard to descend as the white blood cell count falls. The count starts extremely high due to the leukaemic cells and is massively reduced by chemotherapy until the body reaches a vulnerable neutropaenia (0:30-0:40 on CD1.50). The ‘autobiological’ nature of the work engenders an emotive response and memory of the journey through treatment. This human response was later added into the piece itself as a completely improvised guitar part, responding to the memories of each point in time as it progresses through the work. In this way, the contours of each blood cell type and the improvised, responsive ‘human’ expressive contours coexist. Extracts of the 2005 version with guitar appear on CD2.3 and CD3.3, and of the purely electronic version on CD1.50.

Bloodlines has been disseminated at conferences and used in exhibitions, and the guitar part has been improvised live in concert (see Section 4 for details).

1.4 Microcosmos (2007)

A 2007 Wellcome Trust grant provided the opportunity to explore yet more complex ‘hidden’ musical concepts in a large-scale work, with scientific collaboration. Microcosmos (DVD 2) is an audio/video installation that presents high-quality images of bacterial colonies, shot by Blue Planet photographer Steve Downer. The images are set to an original 4.1 surround soundtrack. Dr Simon Park (University of Surrey) provided scientific supervision of the microbial colonies and collaborative discourse. The project’s aims included the construction of an electronic compositional system that could create a satisfying sound design from two kinds of material. The first was overt visual aspects of the video material (colour and form). The second was hidden source material (DNA sequences, protein production and sound grains).

Rather than impose an anthropocentric ‘emotional’ film score on the video footage, the aim was to design a system that would respond automatically to video and biological data. This response had to be aesthetically satisfying and complex without being distractingly predictable. The sound design aimed to mirror an aspect of the growth of microbial colonies: large-scale structures emerging from the interactive behaviour of simple components.

The electronic system designed for the work became absolutely integral to the sound design, since the interactions were far too complex to be undertaken ‘manually’. Once constructed, it allowed for a virtually ‘hands-off’ compositional process. The translation of colour, shape and DNA sequences into musical material in Microcosmos centres on the concept of M-Space mapping. This is the superimposition of a set of variables (e.g. the red, green and blue content of a pixel on screen) onto a set of musical parameters (e.g. cut-off frequency, resonance and effects modulation).

In Bloodlines, physical parameters controlled coexisting yet independently generated musical layers. Microcosmos, by contrast, constructs a complex interrelationship between variables. This interdependence of parameters does not set up a simple one-to-one response between one particular input value and one isolated musical event. Small changes in one colour can pass a tipping point and trigger a series of non-linear events in a way that feels improvisational, reactive and unpredictable.

The core of the compositional system depends on patches written in Cycling ’74’s Max/MSP 4.5 and Jitter 1.6 (What’s The Point? ©2007 Mermikides and Gene Genie ©2007 Mermikides). Ableton Live 6 was also used, synchronized with Max/MSP, for audio synthesis and manipulation. Recording, editing and mastering were conducted with Logic Pro 7, an Audient mixing console and an M&K 5.1 monitoring system. iMovie and DVD Studio Pro were employed in the finalising process.

The installation was first exhibited in the Lewis Elton Gallery, University of Surrey, on 6-23 March 2007 as part of the Guildford International Music Festival. It used a Samsung 52” plasma screen, four Eclipse TD monitors and an Eclipse sub-bass speaker. It was later shown as large-scale projections at several venues:

- the 2008 Digiville event (Brighton);
- a 2008 York Gate research event (Royal Academy of Music, London);
- the Dana Centre (London Science Museum), as part of the 2008 Infective Art series;
- the 2010 Art Researches Science conference (Antwerp, Belgium).

A comprehensive technical description of the compositional system of Microcosmos follows, together with an illustrative representation of it. The complete video installation (in surround format) used in performance is found on DVD 2. An audio extract of the work appears on Core Works (CD2.4), with the full 19 minutes of audio on Hidden Music (CD3.4). Audio examples in this commentary are found on the Audio Examples CD (CD1.51-58).

The sound design is divided into four layers namely:

1) The DNA Code Layer

2) The Protein Layer

3) The Background Layer

4) The Grain Layer

These four layers exist simultaneously and, though independent, are manipulated by

a common set of parameters namely:

a) The red colour content (R) of various ‘hotspots’ of the video (0-255).

b) The green colour content (G) of various ‘hotspots’ of the video (0-255).

c) The blue colour content (B) of various ‘hotspots’ of the video (0-255).

d) The DNA coding of the 16S rRNA sequence of the particular bacterium on screen, written as strings of A, C, T and G.

e) The amino acid specified by each group of three adjacent DNA codes (one ‘codon’). This is one of the 20 common amino acids, as determined by the codon’s particular sequence of codes. For example, the sequence AAA produces lysine, while GAA produces glutamic acid. Some code sequences (e.g. TGA) send a STOP message, which produces silence in Microcosmos.

The process by which input parameters a-e are translated into each of the four

musical layers is described below.

DNA Layer

DNA is a string of information comprising only four distinct units, termed A, C, T and G (in RNA, U is used in place of T). The video displays a series of bacterial colonies (their names are available on the ‘subtitles’ menu), and for each the relevant 16S rRNA sequence is employed. In this layer, these codes are translated into MIDI pitches via their ASCII code values (Figure 1.4.1).

DNA code     A    C    T    G
ASCII        65   67   84   71
MIDI pitch   F3   G3   C5   B3

Figure 1.4.1 Translations of DNA codes into MIDI pitches via ASCII (CD1.51).
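The translation in Figure 1.4.1 simply reads each letter’s ASCII value as a MIDI note number. The octave labels follow the convention in which MIDI note 60 is C3, as used by Logic among others; the sketch below makes that convention a parameter. The function names are illustrative.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def dna_to_midi(sequence):
    """Each code letter becomes the MIDI note numbered by its ASCII value."""
    return [ord(code) for code in sequence.upper() if code in "ACGTU"]

def note_name(midi, middle_c_octave=3):
    """Name a MIDI note; middle_c_octave=3 labels note 60 as C3 (Logic-style)."""
    octave = midi // 12 - 5 + middle_c_octave
    return f"{NOTE_NAMES[midi % 12]}{octave}"
```

Under this mapping, the RNA code U (ASCII 85) would sound as C#5.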

These four derived notes are played on CD1.51. DNA code is read in groups of three (code1, code2 and code3), which allows 64 permutations of A, C, T and G; these are heard sequentially at a fixed tempo on CD1.52. Microcosmos, however, employs a variable tempo determined by the brightness of the central point of the screen, with brighter colours producing faster tempi (Figure 1.4.2).

Tempo (ms) = 1010 - (330*(R+G+B))

or

Tempo (bpm) = 60000/(1010 - (330*(R+G+B)))

Figure 1.4.2 Tempo determination of DNA layer via brightness of centre point in screen.
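Taken literally, Figure 1.4.2 yields a positive time step only if R, G and B are normalised to 0.0-1.0; with 0-255 values the result is negative. The sketch below therefore assumes normalised values, so a black centre point gives 1010 ms per code and a white one 20 ms. It also enumerates the 64 code permutations and reads a sequence in groups of three. The names are hypothetical.

```python
from itertools import product

# Every ordered triple of the four codes: 4 ** 3 = 64 permutations.
CODON_PERMUTATIONS = ["".join(p) for p in product("ACTG", repeat=3)]

def read_codes(sequence):
    """Split a DNA string into non-overlapping (code1, code2, code3) groups."""
    return [sequence[i:i + 3] for i in range(0, len(sequence) - 2, 3)]

def step_ms(r, g, b):
    """Figure 1.4.2, assuming R, G and B are normalised to 0.0-1.0."""
    return 1010 - 330 * (r + g + b)

def tempo_bpm(r, g, b):
    """The same step expressed in beats per minute."""
    return 60000 / step_ms(r, g, b)
```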

The note length and velocity (0-127) of each code are also determined by colour data (Figure 1.4.3). CD1.53 demonstrates the effect of colour change on the permutations of CD1.52.

Code1: note length (ms) = G; velocity = R/2

Code2: note length (ms) = B; velocity = G/2

Code3: note length (ms) = R; velocity = B/2

Figure 1.4.3 Note length and velocity determination of Code Layer (CD1.53).

The timbre of each code is a triangle wave with a band-pass filter (+10dB) applied according to the rules of Figure 1.4.4. An audio demonstration is on CD1.54.

Code1 filter frequency (Hz) = 20 + (75 * R)

Code2 filter frequency (Hz) = 20 + (75 * G)

Code3 filter frequency (Hz) = 20 + (75 * B)

Figure 1.4.4 Timbral filtering of Code Layer in Microcosmos (CD1.54).
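Figures 1.4.3 and 1.4.4 can be combined into one lookup per code position. Here R, G and B are assumed to be 0-255. This keeps velocity within the MIDI range of 0-127 and places the band-pass centre between 20 Hz and about 19 kHz. The function name is hypothetical.

```python
def code_voice(position, r, g, b):
    """Note length, velocity and band-pass centre for code1-3 (Figures 1.4.3-1.4.4).

    Hypothetical helper; R, G and B are assumed to be integers 0-255.
    """
    rules = {           # (length source, velocity source, filter source)
        1: (g, r, r),
        2: (b, g, g),
        3: (r, b, b),
    }
    length_src, vel_src, filt_src = rules[position]
    return {
        "length_ms": length_src,
        "velocity": min(127, vel_src // 2),   # 255 // 2 = 127, the MIDI maximum
        "bandpass_hz": 20 + 75 * filt_src,
    }
```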

The three codes are positioned around the four speakers in a triangular

configuration, which is slowly rotated until it reaches its starting point at the next repeat

of the video sequence (Figure 1.4.5).

Code 1 surround angle (degrees) = (Video position (seconds) * 2.91) mod 360

Code 2 surround angle (degrees) = (120 + Video position (seconds) * 2.91) mod 360

Code 3 surround angle (degrees) = (240 + Video position (seconds) * 2.91) mod 360

Figure 1.4.5 Spatialisation of Code Layer in Microcosmos.
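The rotation in Figure 1.4.5 can be computed directly. At 2.91 degrees per second, the triangle completes one turn every 360/2.91 (about 123.7) seconds of video. The sketch below returns the three angles. Mapping an angle to gains for the four speakers is not covered here, and the names are illustrative.

```python
DEG_PER_SECOND = 2.91  # rotation rate from Figure 1.4.5

def code_angles(video_seconds):
    """Surround angles (degrees) of code1-3, kept 120 degrees apart."""
    base = video_seconds * DEG_PER_SECOND
    return [(base + offset) % 360 for offset in (0, 120, 240)]

# Seconds of video for the triangle to make one full turn.
ROTATION_PERIOD_S = 360 / DEG_PER_SECOND
```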

Protein Layer

In nature, every group of three codes (one codon) specifies a particular amino acid. The 16S rRNA coding of each bacterium is decoded into a series of amino acids (of which 20 are used), and the specific amino acid produced may be checked against a codon table. An analogous process is constructed in Microcosmos. Each amino acid is represented by a specific chord, and the electronic system uses a codon table to select one of a library of 20 chords automatically. Codons that produce a STOP message in biology create no chord here. These ‘protein’ chords are manipulated timbrally by RGB parameters from a continuously definable ‘hotspot’ on screen. This allows the viewer to track the centre point, or representative colour, of a bacterial colony.
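The codon-to-chord stage can be sketched with the standard genetic code, stored here as a 64-character string in TCAG order, with ‘*’ marking STOP codons. The chord library is a hypothetical placeholder with one triad per amino acid, since the 20 chords actually used in Microcosmos are not documented here. U is treated as T so that RNA strings also work.

```python
BASES = "TCAG"
# Standard genetic code in TCAG order (index = 16*first + 4*second + third); '*' = STOP.
AMINO = ("FFLLSSSSYY**CC*W" "LLLLPPPPHHQQRRRR"
         "IIIMTTTTNNKKSSRR" "VVVVAAAADDEEGGGG")

def amino_acid(codon):
    """One-letter amino acid code (or '*') for a three-letter DNA codon."""
    i, j, k = (BASES.index(c) for c in codon.upper())
    return AMINO[16 * i + 4 * j + k]

# Hypothetical chord library: a major triad per amino acid, rising by semitone.
ACIDS = sorted(set(AMINO) - {"*"})  # the 20 standard amino acids
CHORDS = {aa: (48 + n, 52 + n, 55 + n) for n, aa in enumerate(ACIDS)}

def protein_chords(sequence):
    """Chord (MIDI notes) per codon; STOP codons give None, i.e. silence."""
    seq = sequence.upper().replace("U", "T")
    out = []
    for n in range(0, len(seq) - 2, 3):
        aa = amino_acid(seq[n:n + 3])
        out.append(None if aa == "*" else CHORDS[aa])
    return out
```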

The Protein Layer uses an additive synthesis program with parameters controlled by

RGB colour content (Figure 1.4.6).

Low-pass filter frequency (Hz): 60 + (60 * R)

Low-pass resonance (range 0 (flat) to 127 (+12dB)): G/2

Modulation effects level (0 (silent) to 127 (100% relative to dry signal)): B/2

Delay feedback percentage on low-frequency content: R/2.5

Delay feedback percentage on mid-frequency content: G/2.5

Delay feedback percentage on high-frequency content: B/2.5

Figure 1.4.6 Synthesis manipulation of Protein Layer by colour content in Microcosmos.
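For reference, the mappings of Figure 1.4.6 can be written as a single function. It assumes R, G and B are 0-255. The clamps are a choice made for this sketch, because as printed G/2 can reach 127.5 and R/2.5 can reach 102%. The function name is hypothetical.

```python
def protein_synth_params(r, g, b):
    """Figure 1.4.6 mappings, assuming R, G and B are 0-255 (hypothetical helper).

    The min() clamps are this sketch's choice: as printed, G/2 can reach 127.5
    and R/2.5 can reach 102 %, just beyond the stated ranges.
    """
    return {
        "lowpass_hz": 60 + 60 * r,
        "resonance": min(127, g / 2),            # 0 (flat) to 127 (+12 dB)
        "mod_fx_level": min(127, b / 2),         # 0 (silent) to 127 (100 % of dry)
        "delay_fb_low_pct": min(100, r / 2.5),
        "delay_fb_mid_pct": min(100, g / 2.5),
        "delay_fb_high_pct": min(100, b / 2.5),
    }
```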

Background Layer

A background is also created to provide a low-frequency foundation for each bacterial colony. It comprises a microtonally intonated, low-frequency arpeggiated three-note chord for each key image. A low-pass filter, whose parameters are controlled by the overall lightness of the image, is applied to this chord. The specific notes in the chord are derived from the RGB data of the background colour of each image. The What’s The Point? software patch calculates the MIDI note for each voice in the triad (Figure 1.4.7).

Voice 1 MIDI note = 10 + (0.6 * R)

Voice 2 MIDI note = 11 + (0.13 * G)

Voice 3 MIDI note = 15 + (0.35 * B)

Figure 1.4.7 Three-note chord construction algorithm of Background Layer.

The constructed chords are rounded to discretely identified pitch values. However, RGB values are taken at both the beginning and the end of the allotted time-frame for each chord. Any colour discrepancies between these limits are interpreted as microtonal glides. Four examples of the colour-chord translation process for specific images are shown in Figure 1.4.8 and may be heard on CD1.55-58. This process may be thought of as the dynamic superimposition of a three-dimensional visual subset (RGB) onto a three-dimensional subset of M-Space.

Figure 1.4.8 Example three-note chord translations of images in Background Layer (CD1.55-58).
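The chord construction and glide interpretation can be sketched as follows. The voice formulas are those of Figure 1.4.7. Rounding both endpoints, and interpolating glides in pitch (MIDI) space rather than in hertz, are assumptions. Note also that, as printed, R values above about 195 push voice 1 beyond MIDI’s upper limit of 127, so the input range used in the original patch may differ. The function names are hypothetical.

```python
def background_chord(r, g, b):
    """Figure 1.4.7: raw, possibly fractional, MIDI notes for the three voices."""
    return (10 + 0.6 * r, 11 + 0.13 * g, 15 + 0.35 * b)

def background_voices(rgb_start, rgb_end):
    """(start_note, end_note) per voice, each rounded to a discrete pitch.

    Assumption: unequal pairs are the colour discrepancies heard as glides.
    """
    start = background_chord(*rgb_start)
    end = background_chord(*rgb_end)
    return [(round(s), round(e)) for s, e in zip(start, end)]

def midi_to_hz(note):
    """Equal-tempered frequency, with MIDI note 69 = 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)

def glide_hz(start_note, end_note, frac):
    """Frequency partway (frac 0-1) through a glide, interpolated in pitch space."""
    return midi_to_hz(start_note + (end_note - start_note) * frac)
```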

A low-pass filter is applied to the chord with the specific settings laid out in Figure 1.4.9. For this, RGB values are averaged across five hotspots on the screen to provide an overall measure of brightness. This creates a simple synaesthetic relationship. A very dark image, for example, allows only very low frequencies to be heard, while a bright image opens the filter to its fullest extent. A continuum of timbral signatures lies between these extremes.

Low-pass filter cut-off (Hz) = 60 + (20 * R * G * B)

Low-pass resonance [0 (flat) to 127 (+12dB)] = 0.15 * R * G * B

Figure 1.4.9 Cut-off and resonance algorithm in Background Layer.

Grain Layer

DNA is digital information, but it is stored in an organic medium and its instructions are followed in the biophysical world. Microcosmos reflects this digital/biological duality by blending synthetic and naturally occurring sounds. The Grain Layer manipulates the acoustic sounds of various materials using the RGB data within the images. Each bacterial species is assigned a particular signature timbre, a fragment (or ‘grain’) of acoustically produced sound:

1. Chromobacterium violaceum: a human voice

2. Serratia marcescens: a single bell tone

3. Vogesella indigofera: a resonating glass

4. Bacillus atrophaeus: a struck stone

5. Pseudomonas aeruginosa: a struck cymbal

6. Staphylococcus aureus (MRSA): a resonating piece of wood

These sound grains are manipulated by the colour content of the bacterial colonies. The starting point, duration and pitch of each grain are controlled by the red, green and blue colour content respectively. More specifically, a relationship between frequency in the colour and aural spectra is identified. This simple mapping procedure creates highly complex and evocative musical responses to the evolving imagery (see footnote 1). Between one and four grain layers are applied simultaneously, depending on points of colour interest selected by the author in the rendering process.

1 For an overview of the use of grains in electronic music see Roads (2004).

Furthermore, the locations of selected colour points on screen are interpreted spatially

among the four speakers (Figure 1.4.10).

Figure 1.4.10 An illustration of Grain Layer manipulation and spatialisation in Microcosmos.

The sound grains are manipulated in three ways:

- the speed at which they are played, which defines their pitch;
- the duration of each grain;
- the position in the sample from which the grain is taken, which affects timbral elements.

These three elements are controlled by the RGB colour content of the selected on-screen points, as defined in Figure 1.4.11.

Grain Quantization (% of sample duration): R / 5.1
Grain Duration (ms): G × 2
Grain Frequency (pitch) multiplier (0-2): (B / 127) - 1

Figure 1.4.11 Grain manipulation via RGB content in Microcosmos.
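The three formulas in Figure 1.4.11 can be applied to a single colour point as below. This is a sketch, assuming 8-bit colour channels (0-255) and reading "Grain Quantization" as the grain's start position within the sample; the class and function names are hypothetical, and the pitch formula is reproduced exactly as written in the figure.

```python
from dataclasses import dataclass


@dataclass
class GrainParams:
    start_percent: float     # grain start position, % of sample duration
    duration_ms: float       # grain length in milliseconds
    pitch_multiplier: float  # playback-speed factor from the blue channel


def rgb_to_grain(r: int, g: int, b: int) -> GrainParams:
    """Apply the Figure 1.4.11 formulas to one on-screen colour point.

    Assumes 8-bit colour channels (0-255).
    """
    for channel in (r, g, b):
        if not 0 <= channel <= 255:
            raise ValueError("RGB channels are assumed to be 8-bit (0-255)")
    return GrainParams(
        start_percent=r / 5.1,           # 0-255 maps to 0-50 %
        duration_ms=g * 2.0,             # 0-255 maps to 0-510 ms
        pitch_multiplier=(b / 127) - 1,  # formula as written in Figure 1.4.11
    )


def grain_bounds(num_samples: int, sample_rate: int, params: GrainParams) -> tuple[int, int]:
    """Return (start, end) sample indices for a grain, clipped to the sample."""
    start = int(num_samples * params.start_percent / 100)
    length = int(sample_rate * params.duration_ms / 1000)
    return start, min(start + length, num_samples)
```

For example, a one-second sample at 44.1 kHz with a 50% start and a 100 ms duration yields the slice from sample 22050 to 26460.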


Summary

The translation of DNA and colour information in Microcosmos may be conceived as

the transplantation of multi-dimensional parameters from one medium (DNA, protein

synthesis, colour, location etc.) to another (musical parameters). In other words, M-

Space exploration is guided by physical phenomena rather than a human improvisational

performance. An impression of the mappings is given in Figure 1.4.12.

Figure 1.4.12 The mapping of visual and biological data to M-Space subsets in Microcosmos.

Although the data input is fairly limited, the interactive complexity is sufficient to produce an interesting emergent response. Some points of interest:

1) The bell theme caused by the close red tones (DVD2 0:10-0:31).


2) The slow coding and subdued timbre with the dark screen and blue microbacteria (DVD2 6:15-6:35).

3) The dramatic change in activity from a white screen (fast tempo and high-frequency timbres) to the instant when the Vogesella indigofera colony crosses the centre point of the screen (DVD2 6:50-7:03).

Microcosmos represents a complex M-Space/expressive contour mapping system that evolved from experience with Bloodlines, Primal Sound and Head Music. This mapping

technique is employed in a more stylistically accessible context in Membrane (1.5, p 29)

and is developed further in Palmer’s ongoing Ghostforest (see Section 4). The relationship

between live visual and musical material is also explored in Rat Park Live (3.2), this time

in reverse, whereby musical improvisations create corresponding visual imagery.


1.5 Membrane (2009)

An opportunity to explore M-Space mapping in a stylistic context different from that of Microcosmos was presented by the Wake Up and Smell the Coffee project with collaborators Dr. Anna Tanczos and Dr. Martin Bellwood (University of Surrey). The immersive planetarium movie medium, together with a now well-established theoretical framework, provided an appropriate context in which to employ a direct mapping of the M-Space model throughout the film. This section focuses on a short segment of the film as a demonstration of the type of techniques employed.

Wake Up and Smell the Coffee (DVD3) is an educational film explaining the effect of

caffeine on the human body, and includes an extended animated sequence following the

journey of a caffeine molecule from ingestion to its docking with an adenosine receptor

in the brain. The Membrane clip (DVD3 11:12-13:15) follows the molecule’s passage

through the cell membrane of the villi into the nucleus of the cell, and its return

trajectory in a different trajectory. The molecule follows a clear flight path above and

through the cell membrane and this three-dimensional trajectory is mapped musically

using M-Space concepts. As well as a pad and bass-line, the sound design in this scene

includes two layers of a virtually modeled instrument (reminiscent of a marimba). These

layers each consist of simple two bar phrases (consisting of Coltranesque three note

pentatonic cells (see Changes Over Time:Theory 1.5 p 25-26) with three subdivided zones of

proximity - derived from the spatial position of the molecule (Figure 1.5.1 p30). Four

zones are identified (A-D) with corresponding phrases for Layers 1 and 2 (1a-d and 2a-

d). Each phrase represents a proximal musical relationship based on the physical

position of the molecule. Fields B and D are within the same physical zone and so have

similar corresponding phrases, however for variation: Phrase 1d is a retrograde

transformation of 1b, while 2d and 2b are identical, at least in terms of notational pitch

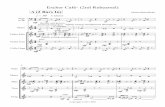

30

and rhythm. These two layers are each transformed independently in terms of

continuous (timbral and time-feel) parameter subsets based on the molecule’s three-

dimensional position. The avoidance of overly obvious parameters, such as chromatic

transposition and volume, helps keep the correlations subliminal and unearths otherwise

unvisited parameter modulations and interactions. The Membrane sequence may be seen

on DVD3 11:12-13:15 and heard, without narration on CD2.5 and CD3.5.

Figure 1.5.1 M-Space mappings on Layers 1 and 2 in Membrane (from Wake Up and Smell the Coffee), derived from the motion of the caffeine molecule through the membrane of the villi. Four zones are identified to create proximal relationships in each layer, and the molecule’s motion is mapped to continuous musical parameters as indicated (CD2.5, CD3.5 and DVD3 11:12-13:15).
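The zone-to-phrase logic described for Membrane, including the retrograde relationship between Phrases 1b and 1d and the identity of 2b and 2d, can be sketched as a lookup table. All pitches and rhythms below are hypothetical placeholders built from three-note cells of C major pentatonic; the real phrases are those notated in Figure 1.5.1, and the linear `scale` helper merely stands in for the unspecified continuous timbral and time-feel mappings.

```python
# Each note is (MIDI pitch, duration in beats). Every value here is a
# hypothetical placeholder; the actual phrases appear in Figure 1.5.1.
Note = tuple[int, float]
Phrase = list[Note]


def retrograde(phrase: Phrase) -> Phrase:
    """Return the phrase with its note events (pitch and rhythm) reversed."""
    return phrase[::-1]


def cell(pitches: tuple[int, int, int], rhythm: tuple[float, float, float]) -> Phrase:
    """Pair a three-note pentatonic cell with a rhythm."""
    return list(zip(pitches, rhythm))


PHRASE_1B = cell((62, 64, 67), (1.0, 1.0, 2.0)) + cell((64, 67, 69), (1.0, 1.0, 2.0))
PHRASE_2B = cell((67, 69, 72), (0.5, 0.5, 3.0)) + cell((69, 72, 74), (0.5, 0.5, 3.0))

LAYER_1: dict[str, Phrase] = {
    "A": cell((60, 62, 64), (2.0, 2.0, 4.0)),
    "B": PHRASE_1B,
    "C": cell((64, 67, 69), (2.0, 2.0, 4.0)),
    "D": retrograde(PHRASE_1B),  # 1d: retrograde of 1b
}
LAYER_2: dict[str, Phrase] = {
    "A": cell((72, 74, 76), (2.0, 2.0, 4.0)),
    "B": PHRASE_2B,
    "C": cell((76, 79, 81), (2.0, 2.0, 4.0)),
    "D": list(PHRASE_2B),  # 2d: same pitches and rhythm as 2b
}


def phrases_for_zone(zone: str) -> tuple[Phrase, Phrase]:
    """Look up the Layer 1 and Layer 2 phrases for a zone label A-D."""
    return LAYER_1[zone], LAYER_2[zone]


def scale(value: float, lo: float, hi: float, out_lo: float, out_hi: float) -> float:
    """Clamp value to [lo, hi] and map it linearly onto [out_lo, out_hi]."""
    t = (min(max(value, lo), hi) - lo) / (hi - lo)
    return out_lo + t * (out_hi - out_lo)
```

As an illustration only, `scale(z, -1.0, 1.0, 0.5, 0.67)` would turn a normalised molecule height into a swing ratio between straight and triplet feel; the parameter and ranges are assumptions, not those used in the film.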

Hidden Music presented a brief survey of the heterogenous mapping of unorthodox source material onto a concept of musical space derived from jazz improvisational theory. Some of these techniques may also be redeployed in the context of acoustic (and electric) instrumental performance, again with extensive use of electronics. The next section presents a selection of works employing solo instrumentation with electronics and the use of M-Space and expressive contour concepts in performance, improvisation and composition.


2. Reworked Ensemble

Improvisational works for ensemble and live electronics

2.1 The Selfish Theme


3. Extended Solo Instruments

Works for solo instruments and live electronics

3.1 String Theory (2006) and Event Horizon (2007)

Tod Machover’s long-established and distinguished career in electronic music, starting with his collaborations with Pierre Boulez at IRCAM, is well documented² (Machover 1992, 2004, 2006 and 2010). In 2004, Machover set up a project with the Royal Academy of Music and the MIT Media Lab to develop repertoire and research output for the Hyperbow for cello, an electronic cello bow designed and built by Dr. Diana Young.³ As director of the Royal Academy of Music wing of the Hyperbow project, the author had the opportunity to work closely with Machover, Young and the Royal Academy composers and cellists. This led to the staging of a concert at the Duke’s Hall in 2007 and the production of a CD of new works (CD3 21st Century Bow: New Works for Hyperbow, RAM 2007), from which Event Horizon is taken.

The Hyperbow is a modified cello bow fitted with electronic sensors that detect seven parameters of motion and force. These are: the distance of the bow tip (1) and frog (2) from a bridge sensor, the downward (3) and lateral (4) force on the bow, and acceleration in three dimensions, X, Y and Z (5-7). The location of these sensors is indicated in Figure 2.1.1 (p 33). Values of the seven parameters are sent wirelessly to a computer for recording, analysis or mapping. Young’s Hyperbow for violin was used extensively for performance research, in particular the cataloguing of bowing techniques and the analysis of their relationship to sound production (Young 2007). However, the parameter values output during performance may also be used to control and manipulate musical parameters, and it is this creative mapping approach that was taken in the RAM/MIT Hyperbow project and in Event Horizon.

2 See Machover (1992, 2004, 2006, 2010).

3 See Young (2002, 2003), Young & Serafin (2003) and Young, Nunn & Vassiliev (2006).
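The seven sensor parameters listed above can be held as one record per reading before they are recorded or mapped. The sketch below assumes, purely for illustration, that each reading arrives as a line of seven comma-separated numbers in the order given in the text; the field names, units and wire format are hypothetical and not a description of Young’s actual data protocol.

```python
from dataclasses import astuple, dataclass


@dataclass(frozen=True)
class HyperbowFrame:
    """One reading of the seven Hyperbow parameters (hypothetical field names)."""

    tip_distance: float    # (1) distance of the bow tip from the bridge sensor
    frog_distance: float   # (2) distance of the frog from the bridge sensor
    downward_force: float  # (3)
    lateral_force: float   # (4)
    accel_x: float         # (5)
    accel_y: float         # (6)
    accel_z: float         # (7)

    def as_vector(self) -> tuple[float, ...]:
        """Return the seven values in the order listed in the text."""
        return astuple(self)


def parse_frame(line: str) -> HyperbowFrame:
    """Parse a hypothetical comma-separated reading of seven numbers."""
    values = [float(part) for part in line.split(",")]
    if len(values) != 7:
        raise ValueError(f"expected 7 values, got {len(values)}")
    return HyperbowFrame(*values)
```

Keeping every parameter in a single frame means that recording, analysis and creative mapping can all consume the same stream, which mirrors the threefold use of the data described above.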

Figure 2.1.1 Sensors on Young’s Hyperbow for cello (Image ©2006 Yael Maguire)

The use of continuous electronic controllers in musical manipulation is of course well established, from early electronic instruments such as the Theremin, through the guitarist’s wah-wah pedal, to the huge range of MIDI controllers now available. However, these may all be thought of either as auxiliary controls that do not interfere significantly with normal instrumental execution (the guitarist’s foot pedal, for example) or as the sole focus of performance, as demonstrated by the new breed of laptop musician. Tools such as the Hyperbow have a distinctive function, since the control signals they generate are intertwined with normal performance. Furthermore, the nuanced control of these parameters is aided by the performer’s existing technical discipline rather than by a novel skill to be learned. The electronic systems in Event Horizon rely on sophisticated bow control, both away from the instrument and during sound production, and so it may be seen as a piece that requires an extended instrumental technique rather than the learning of an auxiliary parallel skill.

[Figure 2.1.1 labels: strain gauge, force sensors, accelerometers, wireless module, battery, tip position signal, frog position signal]


What is also apparent from Figure 2.1.2 (a screenshot from Hyperactivity (2006),⁴ a MAX/MSP patch by the author that routes Hyperbow data to MIDI controller values and triggers) is the familiarity of the sensor data: clearly there are expressive contours here readily available for mapping to musical parameters.

Figure 2.1.2 A screenshot from Hyperactivity, a MAX/MSP patch that routes Hyperbow data to MIDI controller values. Thresholds may also be specified for parameter values that, when exceeded, send out MIDI notes to trigger discrete events.
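The two behaviours described in the caption above, continuous routing to MIDI controller values and threshold-triggered MIDI notes, can be sketched outside MAX/MSP as follows. This assumes each sensor stream has already been calibrated to a known range; firing once per upward crossing (and re-arming when the value falls back) is an assumed design choice, not a documented feature of Hyperactivity, and the class and function names are hypothetical.

```python
from typing import Optional


def to_cc(value: float, lo: float, hi: float) -> int:
    """Scale a calibrated sensor value into the 0-127 MIDI controller range."""
    if hi <= lo:
        raise ValueError("calibration range must satisfy lo < hi")
    t = (min(max(value, lo), hi) - lo) / (hi - lo)
    return round(t * 127)


class ThresholdTrigger:
    """Emit a MIDI note number once each time a value rises above a threshold."""

    def __init__(self, threshold: float, note: int) -> None:
        self.threshold = threshold
        self.note = note
        self._above = False  # whether the previous value was above threshold

    def update(self, value: float) -> Optional[int]:
        """Return the note on an upward crossing, otherwise None."""
        is_above = value > self.threshold
        crossed = is_above and not self._above
        self._above = is_above
        return self.note if crossed else None
```

With a threshold of 0.7, a stream of downward-force values such as 0.2, 0.9, 0.95, 0.1, 0.8 fires the note only on the second and fifth readings, so sustained pressure does not retrigger the event.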

The cellist may consciously control these contours while not playing the cello, or they may be teased out during performance with, for example, a slight twist of the bow or a little more downward force during a passage. Other contour relationships may occur with little potential for control or conscious awareness, but may still have a musical effect. Hyperactivity allows the specification of threshold values for Hyperbow data that, when exceeded, may trigger discrete events via MIDI notes. For example, a sharp flick of the bow may trigger a (predetermined or randomised) harmonic change in the electronic backing, or, when downward force surpasses a certain level, a filter may be engaged with its characteristics controlled continuously by Hyperbow data.

4 Hyperactivity includes a MAX/MSP object for data calibration programmed by Patrick Nunn (2006).

A demonstration of the types of mappings and trigger events possible with the Hyperbow and Hyperactivity, most of which are used in Event Horizon, is presented as a video on DVD4.1, with the cellist Peter Gregson playing an Eric Jensen five-string electric cello. The video represents an early technical sketch of Event Horizon, dubbed String Theory; the first recorded audio take appears in the soundtrack of Martino: Unstrung (DVD1 43:23-44:03), with additional versions on CD2.2 and CD4.2. String Theory is an example of a work that, once the electronic system is constructed, allows an open-ended improvisational approach by the soloist, who is free to control structure, melodic detail and extreme timbral characteristics, even if some of the backing material and mapping relationships are prepared. Another example of this approach is found in Dublicity, a solo guitar improvisation by the author (CD4.3). With this system, the electric guitar’s effects may be selected with a foot pedal, but the output of the guitar is also mapped to MIDI data, which is separated into overlapping zones depending on string and pitch range. This allows for the simultaneous performance and control of electric guitar, MIDI-controlled keyboard, bass, effects and rhythmic fragments. As in String Theory, the electronic system may be exploited through instrumental proficiency (in this case techniques such as two-handed fretboard playing and string-crossing mechanics) and jazz sensibilities, rather than through the typical pedalboard, assistant technician or mouse-and-laptop approach.
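The routing used in Dublicity, where guitar-to-MIDI data is split into overlapping zones by string and pitch range, can be sketched as follows. The zone names, string sets and pitch boundaries are hypothetical, since the actual split is not documented here; the point of the sketch is that a single note may fall into more than one zone and so drive several sound sources at once.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Zone:
    """A hypothetical routing zone defined by strings and a MIDI pitch range."""

    name: str
    strings: frozenset[int]  # 1 = high E string ... 6 = low E string
    low: int                 # lowest MIDI pitch (inclusive)
    high: int                # highest MIDI pitch (inclusive)

    def contains(self, string: int, pitch: int) -> bool:
        return string in self.strings and self.low <= pitch <= self.high


# Placeholder zones only; they deliberately overlap on string 2.
ZONES = [
    Zone("bass", frozenset({5, 6}), 40, 55),
    Zone("keys", frozenset({2, 3, 4}), 50, 76),
    Zone("rhythm_fragments", frozenset({1, 2}), 64, 88),
]


def route(string: int, pitch: int) -> list[str]:
    """Return the names of every zone that a played note falls into."""
    return [zone.name for zone in ZONES if zone.contains(string, pitch)]
```

Under these placeholder zones, an open low E (string 6, MIDI 40) reaches only the bass, while a note at MIDI 70 on string 2 triggers both the keyboard and the rhythmic fragments.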

The Hyperbow project was the first of the author’s many collaborations with Gregson, an enthusiastic champion of the use of electronics in contemporary music; these include four CD releases, research events and international concert performances of cello and electronics programmes.

Event Horizon

References

Adams, C. (1976) Melodic Contour Typology. Ethnomusicology, 20, 179-215.

Frey, D. (2010) New York Skyline [Online]. Red Deer Public Library. Available: http://www.villalobos.ca/ny-skyline [Accessed 6 February 2010].

Machover, T. (1992) Hyperinstruments: A Progress Report 1987-1991 [Online]. MIT Media Laboratory, January 1992. Available: http://opera.media.mit.edu/hyper_rprt.pdf [Accessed 7 January 2010].

Machover, T. (2004) Shaping Minds Musically [Online]. BT Technology Journal, 22(4), 171-9. Available: http://www.media.mit.edu/hyperins/articles/shapingminds.pdf [Accessed 10 October 2009].

Machover, T. (2006) Dreaming a New Music [Online]. Available: http://opera.media.mit.edu/articles/dreaming09_2006.pdf [Accessed 2 January 2010].

Machover, T. (2010) On Future Performance [Online]. The New York Times, 13 January 2010. Available: http://opinionator.blogs.nytimes.com/2010/01/13/on-future-performance/ [Accessed 6 February 2010].

Palmer, A. S. (2010) Projects [Online]. Available: http://www.angelaspalmer.com/category/press/ [Accessed 10 January 2010].

Rilke, R. M. (1919) Ur-Geräusch. In Sämtliche Werke, vol. 6. Revised edition 1987. Frankfurt: Insel.

Roads, C. (2004) Microsound. Paperback edition. Cambridge, MA: MIT Press.

Young, D. (2002) The Hyperbow Controller: Real-Time Dynamics Measurement of Violin Performance. In 2002 Conference on New Interfaces for Musical Expression (NIME), Dublin, Ireland, 24-26 May 2002.

Young, D. (2003) Wireless Sensor System for Measurement of Violin Bowing Parameters. In Stockholm Music Acoustics Conference (SMAC 03), Stockholm, 6-9 August 2003.

Young, D. (2007) A Methodology for Investigation of Bowed String Performance through Measurement of Bowing Technique. PhD Thesis. Cambridge, MA: MIT.

Young, D. & Serafin, S. (2003) Playability Evaluation of a Virtual Bowed String Instrument. In Conference on New Interfaces for Musical Expression (NIME), Montreal, 26 May 2003.

Young, D., Nunn, P. & Vassiliev, A. (2006) Composing for Hyperbow: A Collaboration between MIT and the Royal Academy of Music. In 2006 Conference on New Interfaces for Musical Expression (NIME), Paris, 4-8 June 2006.