B.Tech Thesis


Vision Based Robotic Manipulation of Objects

A Graduate Project Report submitted to Manipal University in partial fulfilment of the requirement for the award of the degree of

BACHELOR OF TECHNOLOGY

In

Instrumentation and Control Engineering

Submitted by

Susobhit Sen

Under the guidance of

Prof. (Dr.) Santanu Chaudhury

Professor, Dept. of Electrical Engineering, IIT Delhi

And

Prof. P Chenchu Sai Babu

Assistant Professor, Dept. of Instrumentation and Control Engineering,

MIT, Manipal

DEPARTMENT OF INSTRUMENTATION AND CONTROL ENGINEERING

MANIPAL INSTITUTE OF TECHNOLOGY

(A Constituent College of Manipal University)

MANIPAL – 576104, KARNATAKA, INDIA

July 2015

DEPARTMENT OF INSTRUMENTATION AND CONTROL ENGINEERING

MANIPAL INSTITUTE OF TECHNOLOGY

(A Constituent College of Manipal University)

MANIPAL – 576 104 (KARNATAKA), INDIA

Manipal

July 3rd

2015

CERTIFICATE

This is to certify that the project titled "Vision Based Robotic Manipulation of Objects" is a record of the bona fide work done by SUSOBHIT SEN (110921352), submitted in partial fulfilment of the requirements for the award of the Degree of Bachelor of Technology (B.Tech) in INSTRUMENTATION AND CONTROL ENGINEERING of Manipal Institute of Technology, Manipal, Karnataka (A Constituent College of Manipal University), during the academic year 2014-15.

P Chenchu Sai Babu

Assistant Professor

I & CE

M.I.T, Manipal

Prof. Dr. Dayananda Nayak

HOD, I & CE.

M.I.T, MANIPAL


ACKNOWLEDGMENTS

Throughout my engineering years at Manipal, it has been my privilege to work with and learn from excellent faculty and friends. They have all had a significant impact on the quality of my research, my education and my professional growth. It is impossible to list and thank all of them, but I would like to acknowledge everyone at the Department of Instrumentation and Control Engineering for giving students access to quality education and an excellent learning environment.

I would like to start by expressing my sincerest gratitude to my research project guide at the Indian Institute of Technology Delhi (IIT Delhi), Professor (Dr.) Santanu Chaudhury, for his wisdom and patience, and for giving me the opportunity to pursue my final semester project in the PAR (Programme in Autonomous Robotics) Lab. His guidance and support were the most important assets that led to the completion of this report. I also thank him for familiarising me with recent advances in computer vision, robotics and object identification, and for helping me discover my research interest in robotics and computer vision.

I would also like to offer my sincere gratitude to Dr. Dayananda Nayak, HOD, Dept. of Instrumentation and Control Engineering, MIT, Manipal, and Mr P Chenchu Sai Babu, Assistant Professor, Dept. of Instrumentation and Control Engineering, MIT, Manipal, for allowing me to complete my final year project at IIT Delhi and for providing valuable insights into my project.

My thanks also go to Mrs Shraddha Chaudhary, Mr Ashutosh Kumar and the other members of the PAR Lab and IIT Delhi for helping me complete my project. I had a great time learning from them, and I hope our paths will cross again.


ABSTRACT

This project addresses the manipulation of pellets in a cluttered 3D environment using a robotic manipulator aided by a fixed camera and a laser range finder. After processing, the output data from the camera and the laser sensor guide the manipulator and the gripper to perform the task at hand. The intention is to have an autonomous system that picks pellets and inserts them into a tube. This is not a straightforward implementation, as it involves a synergistic combination of data from the Micro Epsilon scanCONTROL laser scanner and the camera with the position of the KUKA KR-5, a 6-DOF robot equipped with a two-finger gripper and a suction gripper. The data fusion has been achieved by deriving a robust calibration between the optical sensors. The depth profile is added to the image information, providing an extra dimension. To pick up a workpiece from a collection of workpieces in a random clutter scenario, it is important to know its precise location. A localisation accuracy of 0.4 mm was achieved, combined with the 0.1 mm intrinsic positioning error of the KUKA, and the trials carried out gave 90% repeatability in locating the pellet (with respect to the base frame). Picking up with a suction gripper increased the repeatability further, to 96%, owing to the bellows effect of the gripper and the tolerance of the bellows.

Today, robotics has taken on many complex responsibilities and has served mankind in innumerable ways. From assisting technicians on the assembly line of an automobile plant to performing complex laparoscopic procedures, robots have proved to offer great repeatability, accuracy and precision. Many manufacturing facilities require the classical "bin picking" procedure. This project deals with this classical problem combined with complexities such as identical, textureless workpieces and, more importantly, bin picking from a 3D arrangement of workpieces. To cope with the clutter and occlusion, the data required for processing has to be three-dimensional. This is achieved by sensor fusion and by obtaining synchronised data from the sensors.


LIST OF TABLES

Table No  Table Title

3.1  Sensor Frame to TCP Calibration Matrix

3.2  TCP Frame to Sensor Frame Calibration Matrix

4.1  Rotation Matrix elements for camera calibration

4.2  Translation Matrix elements for camera calibration

4.3  Camera Intrinsic Matrix elements for camera calibration

4.4  Joint Calibration results (1)

4.5  Joint Calibration results (2)

4.6  URG Sensor results

LIST OF FIGURES

Figure No  Figure Title

2.1  Algorithm for pellet pickup

2.2  Surface fitting to cylinders

2.3  Estimation of object orientation using vision system

2.4  Query edge mapping

2.5  Application of Canny edge detection and depth edges in clutter

3.1  Experimental setup with robot end effector, bin and workspace

3.2  Different reference frames (left) and sensor mounted on robot end effector (right)

3.3  Pinhole camera model

3.4  Camera calibration feature points

3.5  Camera orientation (left) and re-projection error (right)

3.6  Laser and camera joint calibration

3.7  Camera and laser calibration using coplanar points

3.8  Laser scan of 3 different height objects

3.9  Single pellet from two different views

3.10  Pellets kept in random (clutter) arrangement

3.11  Image from camera (left), plot of points in X-Z plane (middle), plot of points in Y-Z plane (right)

3.12  Point cloud data (sorted) in MATLAB

3.13  Point cloud data visualisation in MeshLab

3.14  MATLAB plot of data from laser scanner showing 3D arrangement of pellets

3.15  Simple block diagram representation of the algorithm

3.16  Hough transform of a standing and two lying pellets showing the longest edge and centre

3.17  3D arrangement of standing pellets

3.18  Images showing the different levels with pellets

3.19  Application of Hough transforms gives distinct circles

3.20  Algorithm for pickup of the pellet

3.21  Laser data for a single pellet with colour variation on the basis of height

3.22  Transformation of laser data onto image data

3.23  Mapping of laser data onto image data for cluttered arrangement

3.24  Image showing cylinder fitting (a), optimised results (b)

3.25  Normals and plane passing through a point on the cylinder

3.26  Dimension and range data of the Micro Epsilon scanCONTROL laser scanner

3.27  GUI of scanCONTROL software

4.1  Depth profile from URG sensor

4.2  Hough transforms detecting circles for complete data as well as for partial data

Contents

Acknowledgements

Abstract

List of Tables

List of Figures

Chapter 1 INTRODUCTION

1.1 Introduction

1.1.1 Area of work

1.1.2 Present day scenario of work

1.2 Motivation

1.2.1 Shortcomings of previous work

1.2.2 Importance of work in present context

1.2.3 Significance of end result

1.2.4 Objective of the project

1.3 Project work schedule

1.4 Organisation of project report

Chapter 2 BACKGROUND

2.1 Introduction

2.2 Literature review and about the project

Chapter 3 METHODOLOGY

3.1 Introduction

3.2 Methodology

3.2.1 Calibration

3.2.2 Data Interpretation

3.2.3 Algorithms for Pick Up

3.2.4 Component Selections and Justifications

Chapter 4 RESULT ANALYSIS

4.1 Introduction

4.2 Results

4.2.1 Calibration Results

4.2.2 Laser Sensor Evaluation Results

4.2.3 URG Sensor Results

4.2.4 Repeatability Tests and Analysis

Chapter 5 CONCLUSION AND FUTURE SCOPE

5.1 Work Conclusion

5.2 Future Scope of Work

REFERENCES

CHAPTER 1

INTRODUCTION

1.1 INTRODUCTION

As biological organisms, we are constantly inundated with stimuli informing us of a rich variety of factors in the environment that may affect our well-being. These stimuli may be sensory, social or informational. The ability to organise these simultaneous stimuli into meaningful groups is a fundamental property of intelligence, and in the practice of science we subject observations of nature to similar analysis. Robots, unlike us, lack the ability to understand or interpret the data obtained from their environment. Building smarter, more flexible and more independent robots that can interact with the surrounding environment is a fundamental goal of robotics research. Potential applications are wide-ranging, including automated manufacturing, entertainment, in-home assistance and disaster rescue. One of the long-standing challenges in realising this vision is the difficulty of ‘perception’ and ‘cognition’, i.e. providing the robot with the ability to understand its environment and make inferences that allow appropriate actions to be taken. Perception through inexpensive contact-free sensors such as cameras and lasers is essential for continuous and fast robot operation. In this research, we address the challenge of robot perception in the context of industrial robotics.

For any task that requires manipulation of objects in a workspace, an autonomous robotic system requires data appropriate to the task at hand. A direct application is the widespread industrial problem of bin picking. Human labour is often employed to load automatic machines with workpieces. Such jobs are monotonous and do not add to the skill set of the workers. While the cost of labour is increasing, human performance remains essentially constant. Furthermore, the environment in manufacturing areas is generally unhealthy, and when workpieces are inserted into machines by hand, limbs are often exposed to danger. This is where process automation using computer vision plays a vital role, and the use of computer vision in such cases is an obvious solution.

A lot of research has already gone into the automation of industrial practices. One of the classical computer vision problems is the estimation of the 3D pose of a workpiece. The most widely used techniques employ a camera for position and orientation estimation. This works well for 2D (planar) arrangements of workpieces but fails when they are arranged in 3D or in clutter, because an image from a stationary (fixed-focus) camera only provides 2D information, whereas the task requires 3D data. Obtaining 3D data from the workpieces, pellets in this case, therefore requires an additional sensing method. In this project, identical cylindrical pellets, textureless and of the same colour, are to be picked from a bin using a robotic arm. The pellets are located using data from a laser scanner, which adds the aforementioned extra dimension for further computation. This scanner has a resolution of 24 µm, a scan line of 14.9 cm and a variable, tunable frequency of 200–2000 Hz. The high resolution gives precise measurements, and the high frequency enables fast scanning of the workspace. Data manipulation has been done using OpenCV and PCL (Point Cloud Library) in Microsoft Visual Studio 2010.
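To make the link between the scanner frequency and scanning speed concrete, the sketch below computes the spacing between consecutive laser profiles when the scanner is swept across the bin at constant speed. Only the 200–2000 Hz range comes from the text; the sweep speed of 50 mm/s and the bin length of 200 mm are assumed values chosen purely for illustration.

```python
def profile_spacing_mm(sweep_speed_mm_s: float, profile_rate_hz: float) -> float:
    """Distance the scanner travels between two consecutive profiles."""
    if profile_rate_hz <= 0:
        raise ValueError("profile rate must be positive")
    return sweep_speed_mm_s / profile_rate_hz


def profiles_per_sweep(bin_length_mm: float, sweep_speed_mm_s: float,
                       profile_rate_hz: float) -> int:
    """Number of complete profiles captured while sweeping over the whole bin."""
    sweep_time_s = bin_length_mm / sweep_speed_mm_s
    return int(sweep_time_s * profile_rate_hz)


if __name__ == "__main__":
    SPEED = 50.0   # mm/s, assumed sweep speed (illustrative only)
    BIN = 200.0    # mm, assumed bin length (illustrative only)
    for rate in (200.0, 2000.0):  # lower and upper end of the tunable range
        print(f"{rate:6.0f} Hz: {profile_spacing_mm(SPEED, rate):.3f} mm spacing, "
              f"{profiles_per_sweep(BIN, SPEED, rate)} profiles per sweep")
```

For the same sweep speed, the upper end of the range gives profiles ten times closer together, so the frequency trades scan time against sampling density along the sweep direction.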

1.1.1 Area of work

This research mainly deals with information fusion between two sensors and the subsequent localisation of a pellet that can be picked up. In this project, data from a camera and a laser range finder are analysed, synthesised and combined so that the exact location of the pellets can be found. Our idea is to pick the pellet that is least occluded. This involves calibration of the individual sensors, subsequent optimisation, image processing, point cloud processing and finding the point-to-pixel correspondence between the laser data and the camera image. Finally, mathematical and heuristic calculations yield the least occluded pellet for picking up.
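The point-to-pixel correspondence mentioned above can be sketched as follows. The snippet assumes the laser points have already been transformed into the camera frame and that the camera follows the pinhole model, with focal lengths fx, fy in pixels and principal point (u0, v0); these names and the numbers in the usage are illustrative. When several laser points fall on the same pixel, only the nearest is kept (a z-buffer), which is one simple way of respecting occlusion and not necessarily the exact procedure used in this work.

```python
from typing import Dict, List, Tuple

Point3 = Tuple[float, float, float]   # (x, y, z) in the camera frame, z along the optical axis
Pixel = Tuple[int, int]               # (u, v)


def sparse_depth_map(points_cam: List[Point3], fx: float, fy: float,
                     u0: float, v0: float, width: int, height: int) -> Dict[Pixel, float]:
    """Attach a depth value to every image pixel hit by a laser point."""
    depth: Dict[Pixel, float] = {}
    for x, y, z in points_cam:
        if z <= 0:                       # behind the camera: cannot be imaged
            continue
        u = round(fx * x / z + u0)       # nearest pixel column
        v = round(fy * y / z + v0)       # nearest pixel row
        if 0 <= u < width and 0 <= v < height:
            # z-buffer: a closer point hides a farther one on the same pixel
            if (u, v) not in depth or z < depth[(u, v)]:
                depth[(u, v)] = z
    return depth
```

For example, with fx = fy = 500, (u0, v0) = (320, 240), the points (0, 0, 1000) and (0, 0, 900) both map to pixel (320, 240), and the closer depth of 900 is the one stored.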

1.1.2 Present day Scenario of work

Robotics has found a strong foothold in numerous manufacturing industries. Progress in bin picking has so far been restricted to planar arrangements, where a planar arrangement is a 2D arrangement of the objects of interest. Recent advances have dealt with the problem of minor occlusion using different approaches based on camera data. The novelty of this project lies in the additional use of a range sensor for localising the objects. At present, many algorithms deal with planar arrangements of identical objects. These include heuristic approaches and machine learning algorithms [4] (SVM, SVD, etc.). The main disadvantage of the SVM- and SVD-based approaches is that they require a large data set for learning.

1.2 MOTIVATION

Industries generally rely on robots for automation to save time and money. One reason is that when humans do the same task repetitively, they lose interest and their ability to perform it accurately decreases over time. Another reason is the cost of human labour. Robots are designed to perform these repetitive tasks with the same precision in less time. Pick and place of objects is one of the essential tasks in industry. After a product is manufactured, it has to be picked from plates or bins and organised for shipment or storage. One solution is to use manual labour for the whole process, where labourers pick the objects and organise them suitably. Another is for humans to arrange the objects in a tray and pass it to a robot, which then picks them one by one and places them suitably; the robot can be trained to go to particular locations in the tray repeatedly, and humans arrange the objects in the tray accordingly. However, the use of a robot becomes inevitable when the workspace environment is not suitable for humans; nuclear reactors and hot furnaces are examples of such environments. Humans cannot survive beyond a limited cumulative exposure to radioactive materials, so handling such materials requires the help of robots. Our motivation comes from similar situations, where no human intervention is possible and the whole process needs to be automated. This will not only save time but also protect humans from exposure to unhealthy environments.

A general solution to this kind of problem is a stereo setup, in which two cameras are used to generate a 3D reconstruction of the workspace and estimate the pose of objects. Creating a 3D reconstruction requires finding correspondences between the two images of a scene taken by the two cameras, i.e. matching the same points on an object that are visible in both images. Objects used in industry are generally simple and featureless, and finding point correspondences for such objects is very difficult and, in some cases, impossible. In this thesis, we propose an approach that uses a monocular vision model combined with laser range finder data to solve the pose estimation problem.

1.2.1 Shortcomings of previous work

In the past, the bin-picking problem was tackled using mechanical vibratory feeders, without any vision feedback. This solution suffered from mechanical parts getting jammed, and it was highly dedicated to specific parts. Because of these shortcomings, the next generation of bin-picking systems performed grasping and manipulation using vision feedback. Computer vision has made rapid progress in the last decade, moving closer to definitive solutions for long-standing problems in visual perception such as object detection [9], [10], [11], object recognition and pose estimation. While the huge strides made in these fields offer important lessons, most of these methods cannot be readily adapted to industrial robotics, because many of their common assumptions are violated or invalid in such settings.

1.2.2 Importance of work in present context

This work is significant in the present context as an industrial alternative, and it may have wide application in manufacturing setups. A combination of a laser range finder and a camera can solve complex problems of object localisation and identification, and it is particularly beneficial for bin picking. The main advantage is that the data from both sensors are combined, so that the resultant data is an image with depth information.

1.2.3 Significance of the end result

This project aims to improve on conventional methods of object identification, pose estimation and picking with a robotic arm when the workpieces are identical in shape, size and texture. The end result showed a repeatability of 96% with a tolerable error of 0.3 mm.

1.2.4 OBJECTIVE

The project aims at:

Joint calibration of the optical sensors

"Hand-eye calibration" of the visual sensors with the robotic arm

Finding the position and orientation of objects placed in a 3D arrangement

Fusion of data from two independent visual sensors

Pellet picking with the help of a suction gripper

Verification of the results and the model

1.3 PROJECT SCHEDULE

January 2015

o Processing of image from camera.

o Review of existing literature

February 2015

o Feature based contour extraction and Hough circle estimation.

o Processing data from the Micro Epsilon scanCONTROL laser scanner

o Practical calibration of the camera to define the camera coordinate system, intrinsic as well as extrinsic parameters

March 2015

o Algorithm and code design for the use of the above coordinate system in real time using OpenCV.

o Extrinsic calibration of the Laser Scanner to the camera.

April 2015

o Algorithm design for picking up pellets from a bin.

o Working with the KUKA robot to train it as per the problem statement.

May 2015

o Robustness analysis of algorithms.

o Final Implementation, revision and modifications.

June 2015

o PCL Point Cloud data analysis.

o Project report and Documentation.

1.4 ORGANISATION OF REPORT

CHAPTER 1: This chapter briefly describes the project and its aim. It also describes the shortcomings of previous work and the steps taken to overcome them. The project objectives and schedule are also included in this chapter.

CHAPTER 2: This chapter describes the background of the project, the importance of the work in the present-day scenario and the literature review carried out for this project. It ends with theoretical discussions and a conclusion.

CHAPTER 3: This chapter describes the methodology used in this project. The components and software used are explained, and the main block diagram of the project is presented.

CHAPTER 4: This chapter presents and explains the results obtained and discusses their significance.

CHAPTER 5: This chapter gives a brief summary of the work and the problems faced during the project. It also discusses the significance of the results obtained, along with the future scope of the project.

CHAPTER 2

BACKGROUND THEORY

2.1 INTRODUCTION

This chapter covers the background of the project and the literature review carried out for it. General theoretical discussions are presented, and recent research in this field is briefly described and cited. The chapter also explains the important references used in the derivations and in the algorithm design.

2.2 LITERATURE REVIEW AND ABOUT THE PROJECT

Object identification and estimation of object parameters cannot be done reliably using a single camera in this setting. Image segmentation does not work here because, as the height of the pile increases, the background essentially merges with the objects, which causes conventional edge detection algorithms such as the Canny edge detector to fail.

Fig 2.1 Algorithm for pellet pickup

Early vision-based solutions to this problem modelled parts using 2D surface representations, and these representations were invariant shape descriptors [19]. Other systems recognised scene objects from range data using volumetric primitives such as cylinders [20].

2.2.1 EXTRACTION OF CYLINDERS AND ESTIMATION OF THEIR PARAMETERS FROM POINT CLOUDS

This method [21] was devised to accurately estimate the position and orientation of cylinders from point cloud data. The main idea is region growing. A point on the surface of the cylinder is taken and a neighbourhood around it is selected. The surface normals at these points give the axis of the cylinder, since every normal on a cylinder's curved surface is perpendicular to its axis; the RANSAC algorithm is used in this step. Once the axis of the cylinder is found, the points that were not in the neighbourhood are projected onto the axis and the model is optimised.
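The way the normals determine the axis can be illustrated with a short sketch. Because every normal on the curved surface is perpendicular to the axis, the cross product of two non-parallel normals is a candidate axis direction. The function below scores every candidate by how many normals are nearly perpendicular to it and keeps the best; this exhaustive hypothesise-and-verify loop is a simplified, deterministic stand-in for RANSAC, not the exact procedure of [21]. Points are then projected onto the axis, here only to measure the cylinder's extent. The tolerance value is an assumption.

```python
import itertools
import math
from typing import List, Tuple

Vec = Tuple[float, float, float]


def dot(a: Vec, b: Vec) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def cross(a: Vec, b: Vec) -> Vec:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def unit(a: Vec) -> Vec:
    n = math.sqrt(dot(a, a))
    return (a[0] / n, a[1] / n, a[2] / n)


def estimate_axis(normals: List[Vec], tol: float = 0.05) -> Vec:
    """Return the axis direction supported by the most normals.

    Each pair of non-parallel normals proposes cross(n1, n2) as the axis; a
    normal supports a proposal when |n . axis| < tol (nearly perpendicular).
    Normals from flat end caps support fewer proposals and are outvoted.
    """
    best, best_votes = None, -1
    for n1, n2 in itertools.combinations(normals, 2):
        c = cross(n1, n2)
        if dot(c, c) < 1e-6:          # (nearly) parallel normals: no hypothesis
            continue
        axis = unit(c)
        votes = sum(1 for n in normals if abs(dot(n, axis)) < tol)
        if votes > best_votes:
            best, best_votes = axis, votes
    if best is None:
        raise ValueError("need at least two non-parallel normals")
    return best


def extent_along_axis(points: List[Vec], axis: Vec) -> float:
    """Project the points onto the axis and return the length they cover."""
    s = [dot(p, axis) for p in points]
    return max(s) - min(s)
```

With four side normals of a vertical cylinder plus one end-cap normal (0, 0, 1), the side normals agree on the axis (0, 0, 1), while hypotheses built with the cap normal gather fewer votes.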

One disadvantage of this method is that it requires a complete model of the cylinder, and the cylinders should be sparsely arranged. This is not possible in our case because the cylinders are in a cluttered environment; due to the clutter, we only obtain a partial point cloud.

2.2.2 EFFICIENT HOUGH TRANSFORM FOR AUTOMATIC DETECTION OF CYLINDERS IN POINT CLOUDS

This research [22] essentially converts the 3D problem into a 2D problem. The initial steps are the same as above: a point is selected and a region is defined around it, with the point selected on the basis of curvature. Next, a base point is chosen and a Gaussian sphere is defined around it. The surface normals are then projected onto the sphere as vectors passing through its origin. The next step is the application of Hough circle detection to the intersection of the sphere and a plane normal to the axis, which gives a circle. The centre can easily be found once the circles and their centres are detected.
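The circle-detection step can be illustrated with a minimal Hough voting sketch for circles of known radius in 2D, the same kind of circle detection used elsewhere in this work on images. Each point votes for every candidate centre lying at the given radius from it, and the accumulator cell with the most votes is taken as the centre. The radius, cell size and number of angular samples are assumptions for the example; a complete detector (for instance OpenCV's HoughCircles on images) also searches over radii.

```python
import math
from collections import Counter
from typing import Iterable, Tuple


def hough_circle_centre(points: Iterable[Tuple[float, float]], radius: float,
                        cell: float = 1.0, n_angles: int = 72) -> Tuple[float, float, int]:
    """Vote for circle centres of a fixed radius; return (cx, cy, votes)."""
    acc: Counter = Counter()
    for x, y in points:
        for k in range(n_angles):
            t = 2.0 * math.pi * k / n_angles
            # candidate centre at distance `radius` from (x, y), quantised to a cell
            cx = round((x - radius * math.cos(t)) / cell)
            cy = round((y - radius * math.sin(t)) / cell)
            acc[(cx, cy)] += 1
    (cx, cy), votes = acc.most_common(1)[0]
    return cx * cell, cy * cell, votes
```

Points sampled every 10° on a circle of radius 5 centred at (10, 20) all vote for the cell (10, 20), which therefore collects far more votes than any neighbouring cell.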

Again, this method works well only for sparsely arranged cylinders. Moreover, it assumes the cylinder dimensions are known, so it would fail for cylinders of two different radii.

Fig 2.2 Surface Fitting to cylinders

2.2.3 3D VISION SYSTEM FOR INDUSTRIAL BIN-PICKING APPLICATIONS

This paper [18] addresses the same problem of bin picking in a 3D cluttered environment. The authors propose an experimental setup using two laser projectors and a camera, an idea somewhat similar to our methodology. They also use Hough transforms for detection, and pose estimation is a two-step process. This methodology was tested on larger workpieces, with a surface area ratio of approximately 6:1 compared to our workpiece, and hence the error tolerance of that model is higher than that of our system. It therefore cannot be applied directly to our problem statement.

Fig 2.3 Estimation of Object Orientation using vision system


2.2.4 FAST OBJECT LOCALIZATION AND POSE ESTIMATION IN HEAVY CLUTTER FOR ROBOTIC BIN PICKING

This research [9] also addresses bin picking in a cluttered environment. The authors designed and introduced the FDCM algorithm. Fast Directional Chamfer Matching (FDCM) is based on finding the alignment between a template edge map and a query edge map, which is computed using a warping function and a distance cost function.

Fig 2.4 Query edge mapping

The next step is the matching of directional edge pixels, for which the authors further propose an edge image.

Fig 2.5 Application of Canny edge detection and depth edges in clutter

The algorithm retains only edge points with continuity and sufficient support, so noise and isolated edges are filtered out. In addition, the edge directions recovered through the fitting procedure have been shown to be more precise than those obtained from image gradients.
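The directional chamfer cost can be sketched by brute force. For each template edge point, the cost is the distance to the closest query edge point plus a weighted difference in edge orientation (orientations compared modulo π), averaged over the template; the template is then slid over candidate translations and the cheapest one is kept. This reproduces the matching cost in spirit only: FDCM in [9] gains its speed from precomputed distance transforms and integral images and searches over more than translations, none of which is done here. The weight lam and the toy edge maps are assumptions.

```python
import math
from typing import List, Sequence, Tuple

EdgePoint = Tuple[float, float, float]   # (x, y, edge orientation in radians)


def orientation_diff(a: float, b: float) -> float:
    """Difference between edge orientations, which are defined modulo pi."""
    d = abs(a - b) % math.pi
    return min(d, math.pi - d)


def directional_chamfer(template: List[EdgePoint], query: List[EdgePoint],
                        lam: float = 1.0) -> float:
    """Mean over template points of min(distance + lam * orientation difference)."""
    total = 0.0
    for tx, ty, tth in template:
        total += min(math.hypot(tx - qx, ty - qy) + lam * orientation_diff(tth, qth)
                     for qx, qy, qth in query)
    return total / len(template)


def best_translation(template: List[EdgePoint], query: List[EdgePoint],
                     shifts: Sequence[Tuple[int, int]], lam: float = 1.0) -> Tuple[int, int]:
    """Exhaustively try each (dx, dy) shift and return the lowest-cost one."""
    def shifted(dx: int, dy: int) -> List[EdgePoint]:
        return [(x + dx, y + dy, th) for x, y, th in template]
    return min(shifts, key=lambda s: directional_chamfer(shifted(*s), query, lam))
```

For an L-shaped template copied into the query with an offset of (5, 3), the search over shifts recovers (5, 3), where the cost drops to zero.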


2.2.5 TRAINING BASED OBJECT RECOGNITION IN CLUTTERED 3D POINT CLOUDS

This paper [17] combines machine learning with object identification in 3D point clouds. The algorithm is divided into two parts, training and detection. In training, weak classifier candidates are first generated and trained. Once training is complete, the detector operates on the point cloud and the objects are classified. This is done on the basis of an object template, defined as a collection of grids, and by matching templates. A feature value is also defined, corresponding to the ratio of matching grids.

2.3 CONCLUSION

On the basis of the analysis of the above papers, we decided that the algorithm should first localise the pellets. Once that is done, it should find the surface normals and subsequently the curvature, which can then be used to classify cylindrical surfaces and planes. This is to be followed by rough cylinder fitting, which gives approximate values of the axis and the centre with some error. These can be further optimised using machine learning procedures and voting. Once a pellet is picked, its points are to be deleted from the data.
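The curvature-based separation of cylindrical and planar surfaces can be illustrated on a single laser profile. The sketch estimates curvature from consecutive triples of profile points with the Menger curvature (the reciprocal radius of the circle through three points): a flat surface gives collinear points and zero curvature, while a profile cut perpendicular to a lying pellet traces a circular arc of curvature 1/r. The threshold and the sample profiles are assumptions; a profile that crosses the pellet obliquely traces an elliptical arc, which this sketch does not handle.

```python
import math
import statistics
from typing import List, Tuple

Point2 = Tuple[float, float]   # (position along the scan line, height), same units


def menger_curvature(a: Point2, b: Point2, c: Point2) -> float:
    """Curvature (1/radius) of the circle through three points; 0 if collinear."""
    twice_area = abs((b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0]))
    sides = math.dist(a, b) * math.dist(b, c) * math.dist(c, a)
    if sides == 0.0:
        raise ValueError("points must be distinct")
    return 2.0 * twice_area / sides   # equals 4 * area / (|ab| |bc| |ca|)


def classify_profile(profile: List[Point2], max_plane_curvature: float) -> str:
    """Label a profile segment 'plane' or 'cylinder' from its median curvature."""
    ks = [menger_curvature(profile[i - 1], profile[i], profile[i + 1])
          for i in range(1, len(profile) - 1)]
    return "plane" if statistics.median(ks) < max_plane_curvature else "cylinder"
```

Using the median rather than the mean keeps a single noisy triple from flipping the label.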


CHAPTER 3

METHODOLOGY

3.1 INTRODUCTION

This chapter describes the methodology used in the project. The aim is to pick up a pellet from a 3D clutter in which the pellets may be tilted, standing or lying. The chapter details the methodology used to solve the problem statement, including the assumptions and approximations made, block diagrams of the different approaches devised, component specifications and the justification of the apparatus used, and a preliminary result analysis followed by conclusions.

3.2 METHODOLOGY

To find the exact position of the pellets and pick them up, we used three different approaches. In any cluttered environment with occlusion, identically shaped cylindrical pellets can be classified into three cases: standing, lying and tilted. The diagram below shows the experimental setup with the robot, the pellets in the bin and the vertical tubes (for insertion).

Fig 3.1 Experimental Setup with robot end effector, bin and workspace


3.2.1 CALIBRATION

This section discusses the calibration procedures used in this project. Calibration is one of the most important aspects of sensor fusion: when multiple sensors are in play, their data must be analysed in a common frame of reference. Owing to the complexity of the problem, which involves 3D data, several calibration methods were used to obtain a robust transformation.

Fig 3.2 Different reference frames (left) and sensors mounted on robot end effector (right)

To start with, it is important to understand reference frames. Every sensor in the real world has its own reference frame, so these different frames must be mapped into a common reference frame to enable robotic manipulation of objects. For combined use of the camera and the laser range finder, a common reference system is needed first. Calibration is the process of estimating the relative position and orientation between the laser range finder and the camera. It is important because it affects the geometric interpretation of the measurements. We describe theoretical and experimental results for the extrinsic calibration of a robotic arm carrying a camera and a 2D laser range finder. The calibration process is based on observing a planar checkerboard and solving the constraints between the "views" of the checkerboard calibration pattern from the camera and from the laser range finder. The calibration of each visual device is explained in the subsections below.
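Mapping measurements into a common frame amounts to chaining rigid transforms. The sketch below uses 4 × 4 homogeneous matrices to take a point from the sensor frame to the robot base frame through the tool centre point (TCP), and inverts a rigid transform, which is how a sensor-to-TCP matrix relates to a TCP-to-sensor matrix. The frame names (base_T_tcp, tcp_T_sensor) and all numeric values are hypothetical; in practice the sensor-to-TCP matrix comes from calibration and the TCP pose from the robot controller.

```python
import math
from typing import List, Sequence, Tuple

Mat4 = List[List[float]]
Vec3 = Tuple[float, float, float]


def transform(rz_deg: float, t: Sequence[float]) -> Mat4:
    """Homogeneous transform: rotation about z by rz_deg, then translation t."""
    c, s = math.cos(math.radians(rz_deg)), math.sin(math.radians(rz_deg))
    return [[c, -s, 0.0, t[0]],
            [s, c, 0.0, t[1]],
            [0.0, 0.0, 1.0, t[2]],
            [0.0, 0.0, 0.0, 1.0]]


def compose(a: Mat4, b: Mat4) -> Mat4:
    """Matrix product a @ b (b is applied first, then a)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(m: Mat4) -> Mat4:
    """Inverse of [R t; 0 1] is [R^T  -R^T t; 0 1]."""
    rt = [[m[j][i] for j in range(3)] for i in range(3)]
    t = [-sum(rt[i][k] * m[k][3] for k in range(3)) for i in range(3)]
    return [rt[0] + [t[0]], rt[1] + [t[1]], rt[2] + [t[2]], [0.0, 0.0, 0.0, 1.0]]


def apply(m: Mat4, p: Vec3) -> Vec3:
    """Transform a 3D point (implicitly homogeneous with w = 1)."""
    x, y, z = p
    return tuple(m[i][0] * x + m[i][1] * y + m[i][2] * z + m[i][3] for i in range(3))


# Hypothetical poses (mm, degrees), for illustration only.
tcp_T_sensor = transform(0.0, (0.0, 0.0, 100.0))
base_T_tcp = transform(90.0, (500.0, 0.0, 300.0))
base_T_sensor = compose(base_T_tcp, tcp_T_sensor)
```

A point (10, 0, 50) measured in the sensor frame lands at approximately (500, 10, 450) in the base frame, whether it is pushed through the two transforms in turn or through the composed base_T_sensor; applying invert_rigid(base_T_sensor) brings it back.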


3.2.1.1 CAMERA CALIBRATION

The camera is used to obtain the 2D data: an image maps the 3D world onto a 2D plane. The schematic of a pinhole camera is shown below [5]:

Fig 3.3 Pinhole camera model

Camera calibration is required to obtain the intrinsic as well as the extrinsic parameters of the camera. Intrinsic parameters tend to stay constant, whereas extrinsic parameters depend on the position and orientation of the camera. Calculation of the extrinsic parameters can be further split into two parts: calculation of the rotation matrix and of the translation vector. Together with the intrinsic parameters, these map the 3D world to the pixels of an image. There are different ways of calibrating a camera; the method of [1] is widely used.

Fig 3.4 Camera calibration feature points


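Fig 3.3 shows a camera with centre of projection O and the principal axis parallel to the Z axis. The image plane is at the focus, i.e. at focal length f from O. A 3D point P = (X, Y, Z) is imaged on the camera's image plane at coordinate Pc = (u, v). As discussed above, we first find the camera calibration matrix C, which maps 3D points P to 2D points. Using similar triangles:

$$\frac{u}{f} = \frac{X}{Z}, \qquad \frac{v}{f} = \frac{Y}{Z} \tag{3.1}$$

This is equivalent to

$$u = \frac{fX}{Z} \tag{3.2}$$

$$v = \frac{fY}{Z} \tag{3.3}$$

From equations 3.2 and 3.3, we can write Pc in generalised homogeneous coordinates as:

$$\begin{pmatrix} Zu \\ Zv \\ Z \end{pmatrix} = \begin{pmatrix} f & 0 & 0 & 0 \\ 0 & f & 0 & 0 \\ 0 & 0 & 1 & 0 \end{pmatrix} \begin{pmatrix} X \\ Y \\ Z \\ 1 \end{pmatrix} \tag{3.4}$$

When the origin of the 2D image coordinate system does not coincide with the point where the Z axis intersects the image plane, we need to translate Pc to the desired origin. Let this translation be $(u_0, v_0)$. Then (u, v) becomes

$$u = \frac{fX}{Z} + u_0 \tag{3.5}$$

$$v = \frac{fY}{Z} + v_0 \tag{3.6}$$

This can be written in the same form as 3.4:

$$\begin{pmatrix} Zu \\ Zv \\ Z \end{pmatrix} = \begin{pmatrix} f & 0 & u_0 & 0 \\ 0 & f & v_0 & 0 \\ 0 & 0 & 1 & 0 \end{pmatrix} \begin{pmatrix} X \\ Y \\ Z \\ 1 \end{pmatrix} \tag{3.7}$$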

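The left 3 × 3 block of the above matrix,

$$K = \begin{pmatrix} f & 0 & u_0 \\ 0 & f & v_0 \\ 0 & 0 & 1 \end{pmatrix},$$

denotes the intrinsic characteristics of the camera. It is important to note the units of the focal length and of the offset from the centre; both are in pixels when u and v are pixel coordinates. To find the extrinsic calibration matrix, we need a rotation and a translation that make the camera coordinate system coincide with the configuration in Fig 3.3. Let the translation of the camera to the origin of the XYZ coordinate system be T = (Tx, Ty, Tz), and let the rotation that aligns the principal axis with the Z axis be a 3 × 3 rotation matrix R. The matrix formed by first applying the translation and then the rotation is the 3 × 4 matrix

$$E = \left( R \mid RT \right) \tag{3.8}$$

Combining the above two equations, we get

$$P_c = \begin{pmatrix} wu \\ wv \\ w \end{pmatrix} = K \left( R \mid RT \right) \begin{pmatrix} X \\ Y \\ Z \\ 1 \end{pmatrix} = C\,P, \qquad C = K \left( R \mid RT \right) \tag{3.9}$$

where w is the depth of P along the principal axis of the camera.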

Fig 3.5 Camera orientations (left) and reprojection error (right)

The camera matrix C gives Pc, the projection of P. C is a 3 × 4 matrix usually called the complete camera calibration matrix. Since C is 3 × 4, P must be in 4D homogeneous coordinates, and Pc = CP will be in 3D homogeneous coordinates. The exact 2D location of the projection on the camera image plane is obtained by dividing the first two coordinates of Pc by the third.
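Equations (3.7) to (3.9) can be checked numerically. The sketch below builds C = K(R | RT) for a single focal length f and principal point (u0, v0), projects a world point and divides by the third homogeneous coordinate. The numeric values are made up for illustration and lens distortion is ignored; a real calibration, for example with the method of [1], would supply K, R and T.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def camera_matrix(f: float, u0: float, v0: float,
                  R: Matrix, T: Sequence[float]) -> Matrix:
    """C = K (R | RT), as in Eqs. (3.7)-(3.9)."""
    K = [[f, 0.0, u0],
         [0.0, f, v0],
         [0.0, 0.0, 1.0]]
    RT = [sum(R[i][j] * T[j] for j in range(3)) for i in range(3)]
    E = [list(R[i]) + [RT[i]] for i in range(3)]   # 3 x 4 extrinsic matrix, Eq. (3.8)
    return matmul(K, E)


def project(C: Matrix, P: Sequence[float]) -> Tuple[float, float]:
    """P -> homogeneous (X, Y, Z, 1) -> Pc = C P -> divide by the third coordinate."""
    Ph = list(P) + [1.0]
    x, y, w = (sum(C[i][j] * Ph[j] for j in range(4)) for i in range(3))
    if w <= 0:
        raise ValueError("point is behind the camera")
    return x / w, y / w
```

With f = 800, (u0, v0) = (320, 240), R = I and T = (0, 0, 1000), the world point (100, 50, 0) lies 1000 units in front of the camera and projects to pixel (400, 280), matching Eqs. (3.5) and (3.6).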

3.2.1.2 EXTRINSIC CALIBRATION OF CAMERA AND LASER (Zhang and Pless, IROS 2004)

This method is one of the earliest works on the calibration of a laser range finder and a camera, and its experimental results verify the calibration. One restriction is the constraint that the checkerboard must be in "view" of both the laser range finder and the camera. The method first minimises the algebraic error in the constraint; the result is then refined by a non-linear optimisation that minimises a re-projection error. The goal of this paper was to study a calibration method that finds the rotation Φ and the translation Δ which transform points in the camera coordinate system to points in the laser coordinate system.

The basic approach is to decompose calibration into two parts: extrinsic and intrinsic. The extrinsic calibration parameters are the position and orientation of the sensor relative to some reference coordinate system [6]. The authors assume that the intrinsic parameters of the camera have already been calibrated. The arrangement is shown below in Fig 3.6.

Fig 3.6 Laser and camera joint calibration

In the camera model, K is the camera intrinsic matrix, R a 3 x 3 orthonormal matrix representing the camera’s orientation, and T a 3-vector representing its position. In practice, the camera can exhibit significant lens distortion, which can be modelled as a 5-vector of radial and tangential distortion coefficients. The laser range finder reports distance measurements to points on a plane parallel to the floor. A laser coordinate system is defined with its origin at the laser range finder, and the laser scan plane is the plane Y = 0. Suppose a point P in the camera coordinate system is located at a point Pf in the laser coordinate system; the rigid transformation from the camera coordinate system to the laser coordinate system can then be described by:

$$ P_f = \Phi P + \Delta \tag{3.10} $$

where Φ is a 3 x 3 orthonormal matrix representing the camera’s orientation relative to the laser range finder and Δ is a 3-vector corresponding to its relative position.

Without loss of generality, we assume that the calibration plane is the plane Z = 0 in the world coordinate system. In the camera coordinate system, the calibration plane can be parameterized by a 3-vector N that is parallel to the normal of the calibration plane and whose magnitude, ||N||, equals the distance from the camera to the calibration plane. Using the camera model, we can derive that:

(3.11)

where R3 is the 3rd column of the rotation matrix R, and t is the centre of the camera in world coordinates. Since the laser points must lie on the calibration plane estimated from the camera, we obtain a geometric constraint on the rigid transformation between the camera coordinate system and the laser coordinate system. Given a laser point Pf in the laser coordinate system, Equation 3.10 gives its coordinate in the camera reference frame as P = Φ⁻¹(Pf − Δ). Since the point P lies on the calibration plane defined by N, we have

$$ N \cdot \Phi^{-1}\left(P_f - \Delta\right) = \lVert N \rVert^{2} \tag{3.12} $$

The problem is solved in two stages: first, a linear solution is found from these constraints; it is then refined by non-linear optimisation.
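The point-on-plane constraint of Equation 3.12 can be evaluated directly for a candidate calibration. The helper below is a hypothetical sketch, not code from [6]: it maps laser points Pf = (x, 0, z) into the camera frame with P = Φ⁻¹(Pf − Δ), using the transpose as the inverse because Φ is orthonormal, and returns N · P − ||N||² for each point. For a correct (Φ, Δ) these residuals are zero up to noise; roughly speaking, the linear stage of [6] solves such equations, stacked over several checkerboard poses, in a least-squares sense.

```python
# Sketch: evaluate the point-on-plane constraint of Eq. 3.12 for a candidate
# calibration (Phi, Delta). Names are illustrative, not taken from [6].

def transpose(M):
    return [list(col) for col in zip(*M)]

def mat_vec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def plane_residuals(Phi, Delta, N, laser_points):
    """Return N . Phi^-1 (P_f - Delta) - ||N||^2 for each laser point.

    Phi: 3 x 3 orthonormal rotation (camera -> laser), Delta: 3-vector.
    N: calibration-plane parameter in the camera frame (normal, length = distance).
    laser_points: (x, z) pairs measured in the laser scan plane Y = 0.
    """
    Phi_inv = transpose(Phi)      # an orthonormal matrix is inverted by transposing
    n2 = sum(c * c for c in N)
    residuals = []
    for x, z in laser_points:
        P_f = [x, 0.0, z]         # laser point, Y = 0 in the laser frame
        P = mat_vec(Phi_inv, [a - b for a, b in zip(P_f, Delta)])  # laser -> camera
        residuals.append(sum(a * b for a, b in zip(N, P)) - n2)
    return residuals
```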

A notable result of this paper is that the camera calibration is itself further refined, and is therefore more accurate than the camera calibration obtained with Zhang’s method [1].

3.2.1.3 CALIBRATION USING COPLANAR POINTS COMMON TO WORKSPACE

(Optimised using gradient descent optimisation methods)

Fig 3.7 Camera and laser calibration using coplanar points

This calibration method leverages the fact that the laser scan line is also visible to the camera. Calibration required 4 points common to both the camera and the laser range finder to find the intrinsic and extrinsic calibration matrices. After the initial values were obtained, the calibration matrix was further optimized using gradient descent, which required an additional 10 points.

In this method, two point vectors that are accurately known in both the base and the sensor frames of reference are taken first. Their cross product gives a direction vector perpendicular to the plane containing the two vectors, and taking direction cosines with a further point gives the other two axes. To calculate accurately for 3D data, another non-coplanar point with known (x, y, z) coordinates is also used.

Fig 3.8 Laser scan of 3 different height objects

These calculations provide an initial estimate of the calibration matrices. After optimization, we obtained the following result:

0.8001 0.5972 -0.0565 -39.2808

0.5996 -0.7989 0.0474 -14.6918

-0.0168 -0.0718 -0.9973 329.3757

0 0 0 1

Table 3.1 Sensor Frame to TCP Calibration Matrix

The above table shows the calibration matrix from the sensor frame to the TCP (Tool Centre Point) frame. The upper-left 3 x 3 block is the rotation matrix, and the first three elements of the last column form the translation vector.
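The cross-product construction described above can be sketched as follows. This is an illustrative reading of the procedure, not the project's code: three non-collinear points (an origin o, a point a along the intended X axis and a third point b) define an orthonormal frame, and building that frame from the same points in both the sensor and base frames gives an initial rigid transform. Three points suffice for noise-free data; the additional non-coplanar point and the gradient-descent refinement mentioned in the text are not shown.

```python
# Sketch: initial rigid transform from three corresponding, non-collinear points.
# An illustrative reading of the cross-product construction, not the project's code.

def sub(a, b):
    return [x - y for x, y in zip(a, b)]

def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def unit(a):
    n = sum(x * x for x in a) ** 0.5
    return [x / n for x in a]

def frame_from_points(o, a, b):
    """3 x 3 matrix whose columns are the unit X, Y, Z axes defined by o, a, b."""
    x = unit(sub(a, o))               # X axis: from o towards a
    z = unit(cross(x, sub(b, o)))     # Z axis: perpendicular to the plane of o, a, b
    y = cross(z, x)                   # Y axis: completes a right-handed frame
    return [[x[i], y[i], z[i]] for i in range(3)]

def rigid_from_points(pts_sensor, pts_base):
    """4 x 4 sensor-to-base transform from the same three points in both frames."""
    Fs = frame_from_points(*pts_sensor)
    Fb = frame_from_points(*pts_base)
    # R = Fb * Fs^T maps sensor-frame directions onto base-frame directions
    R = [[sum(Fb[i][k] * Fs[j][k] for k in range(3)) for j in range(3)]
         for i in range(3)]
    o_s, o_b = pts_sensor[0], pts_base[0]
    t = [o_b[i] - sum(R[i][k] * o_s[k] for k in range(3)) for i in range(3)]
    return [R[i] + [t[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]
```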


Fig 3.9 Single pellet from two different views

The above figure shows the cylindrical pellet. For computational standardisation, all pellets have the same dimensions and color.

Fig 3.10 Pellets kept in random (clutter) arrangement

The transformation from the sensor frame to the base frame is done in two steps: first, the rigid transformation from the sensor frame to the TCP (Tool Centre Point) frame; then, the transformation from the TCP frame to the base frame. The second transformation changes with every motion of the robot end effector during the scanning procedure.

To find the TCP-to-base transformation, we use the roll, pitch and yaw angles of the KUKA end effector. The rotation is the product of three elementary rotation matrices:

Multiplying these three matrices gives the complete rotation matrix. The 3 x 1 translation vector is simply the position of the end effector.
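A sketch of building the TCP-to-base matrix from the end-effector pose follows. Assumption: the KUKA A, B and C angles are rotations about Z, Y and X (yaw, pitch and roll), composed as R = Rz(A) Ry(B) Rx(C); this is the convention commonly documented for KUKA controllers, but it should be checked against the controller actually used. Angles are in degrees, and the position is placed directly in the translation column; the example position is taken from the translation column of Table 3.2.

```python
import math

def rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]

def rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]

def rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def matmul3(p, q):
    return [[sum(p[i][k] * q[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def tcp_to_base(x, y, z, a_deg, b_deg, c_deg):
    """4 x 4 TCP-to-base transform from position (mm) and Z-Y-X angles (degrees)."""
    A, B, C = (math.radians(v) for v in (a_deg, b_deg, c_deg))
    R = matmul3(rot_z(A), matmul3(rot_y(B), rot_x(C)))   # R = Rz(A) Ry(B) Rx(C)
    return [R[0] + [x], R[1] + [y], R[2] + [z], [0.0, 0.0, 0.0, 1.0]]

# Usage: a TCP at the Table 3.2 position, rotated 90 degrees about Z.
M = tcp_to_base(177.35, 227.68, 189.67, 90.0, 0.0, 0.0)
```

Because the arm moves between scan lines, this matrix would be rebuilt from the reported pose for every scan line, which is why its translation entries change.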

Q=

0.5490 0.8350 0.0169 177.35

-0.8348 -0.5500 0.0178 227.68

-0.0240 0.004 -0.9996 189.67

0 0 0 1

Table 3.2 TCP frame to Base frame calibration matrix

The above matrix maps points in the TCP frame to the base frame. Note that the element in the 1st row and 4th column changes for every scan line, because the position of the robot changes for every scan line. This is illustrated by the figures below:

Fig 3.11 Image from camera (left), plot of points in X-Z plane (middle), plot of points in Y-Z plane (right)


Fig 3.12 Point Cloud Data (sorted) MATLAB.

3.2.2 DATA INTERPRETATION (LASER SCANNER)

A robust algorithm has been designed for picking up pellets from the clutter. After calibration is done and a common reference frame is chosen, the next step is data acquisition. The Micro Epsilon SCANControl laser scanner gives a 2D profile of points in the X-Z plane: the X coordinate gives the position of a point in the sensor frame of reference, and the Z coordinate gives its height, with a least count of 1 micrometre.

We found that for standing pellets, the pellet locations can be found using the laser data alone. The 2D profiles from the laser are combined into a 3D reconstruction of the scene, a collection of points as shown in the figure. The X and Z coordinates come from the laser scanner, and the Y coordinate comes from the motion of the robotic arm. The spacing along the Y axis is 1 mm and may be varied: a larger spacing gives sparser data, whereas a smaller spacing yields denser data and may increase the computational load.
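Stacking profiles into a cloud can be sketched as below. This simplified, hypothetical helper assumes the arm moves in a straight line along Y with constant spacing and keeps the points in the scanner's own frame; in the actual setup each profile would instead be transformed with the TCP-to-base matrix of its scan line.

```python
def stack_profiles(profiles, y_step_mm=1.0, y0_mm=0.0):
    """Combine successive 2D laser profiles into one 3D point cloud.

    profiles: list of scan lines, each a list of (x, z) pairs in mm from the scanner.
    The Y coordinate comes from the robot motion: scan line i is taken at
    y0_mm + i * y_step_mm (1 mm spacing by default, as in the text).
    """
    cloud = []
    for i, profile in enumerate(profiles):
        y = y0_mm + i * y_step_mm
        cloud.extend((x, y, z) for x, z in profile)
    return cloud
```

Changing `y_step_mm` is the spacing trade-off described above: fewer scan lines and points for a larger step, more for a smaller one.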


Fig 3.12 Point Cloud Data Visualisation in MeshLab

The point cloud data represents the environment and its 3D structures. The first step is to process the data. The point cloud data is saved in a .csv (comma-separated values) or .txt file, written in “append” mode by the C++ SDK code of the laser scanner manufacturer, Micro Epsilon. Once data acquisition is done, the data set is reduced to the points above a fixed threshold of about 0.5 mm, which rejects all points on the ground. Because of the scanner's fine resolution and high accuracy, the noise level can be expected to be very low.
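The ground-rejection step can be sketched as a simple filter while reading the file. The format is an assumption: each row is taken to hold x, y, z in mm with no header line, since the actual SDK output layout is not specified here.

```python
import csv
import io

def load_and_filter(csv_text, z_threshold_mm=0.5):
    """Read x, y, z rows from CSV text and keep only points above the ground threshold."""
    points = []
    for row in csv.reader(io.StringIO(csv_text)):
        if not row:
            continue                      # skip blank lines
        x, y, z = (float(v) for v in row[:3])
        if z > z_threshold_mm:            # reject ground points at or below ~0.5 mm
            points.append((x, y, z))
    return points
```

For a file on disk, the same loop works with `open(path, newline="")` in place of `io.StringIO`.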

3.2.3 ALGORITHMS FOR PICKUP

After data preprocessing, the next step is to find the precise location of the pellet. As mentioned earlier, the least occluded pellet has to be picked up first. This has several benefits: the least occluded pellet has the most accessible approach path, so the movement of the robotic arm does not disturb the other pellets. Moreover, since scanning takes time, the entire clean-up should ideally use as few scans as possible. The pellet whose removal least disturbs the arrangement is therefore the best option to pick, and it is usually the least occluded pellet.

We developed several approaches to the pick-up problem, described in the following subsections.

3.2.3.1 PELLET PICKUP USING POSITION ESTIMATION (HEURISTIC APPROACH)

In this approach, we first find the least occluded pellet. Provided that no pellet lies outside the boundary of the clutter within the workspace, this pellet must be on top of the clutter, and it contains the topmost point in the point cloud. The data is therefore sorted from top to bottom, and the topmost point in the stack is taken to lie on the top pellet.

Fig 3.13 Matlab Plot of data from laser scanner showing 3D arrangement of pellets

As can be seen from the above scan, the topmost point lies on the top pellet. The best pick-up point on the exposed surface, i.e. the centre of the circle for a standing pellet and the centre of the curved surface for a sleeping pellet, must then be determined.

To do so, we apply an averaging filter to the set of surface points. Surface points are extracted by applying a tolerance to the Z value of the topmost point. For example, if Zmax is 165.89 mm, a tolerance of approximately 2 mm (keeping points with Z between 163.89 mm and 165.89 mm) accommodates the manufacturing tolerances of the pellets. A typical standing pellet has approximately 1500 points and a sleeping pellet approximately 2500 points; these numbers vary with the position and orientation of the pellet.

When two or more pellets lie at the same level and occlude each other, the averaging calculation gives a wrong location, because it mixes points from different pellets. This was avoided by sorting the values again by X coordinate: the smallest X coordinate gives the leftmost point in the workspace, and the corresponding Y coordinate, combined with this X value, is then used to find the location of the pellet.
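The averaging heuristic can be sketched as follows. This is an illustration of the steps above, not the project's MATLAB code. The `leftmost_window_mm` option is a hypothetical way of applying the leftmost-X rule: only surface points within that distance of the smallest X are averaged, so two pellets at the same height are not merged.

```python
def top_surface_pick_point(cloud, tol_mm=2.0, leftmost_window_mm=None):
    """Heuristic pick-up point: average the points near the topmost point.

    cloud: list of (x, y, z) tuples in the base frame (mm).
    tol_mm: height tolerance below Zmax (about 2 mm in the text).
    leftmost_window_mm: hypothetical option; if set, only surface points whose X
    lies within this distance of the smallest X are averaged.
    """
    z_max = max(p[2] for p in cloud)
    surface = [p for p in cloud if p[2] >= z_max - tol_mm]
    if leftmost_window_mm is not None:
        x_min = min(p[0] for p in surface)
        surface = [p for p in surface if p[0] <= x_min + leftmost_window_mm]
    n = len(surface)
    return (sum(p[0] for p in surface) / n,
            sum(p[1] for p in surface) / n,
            z_max)
```

The second test case below shows the failure mode described in the text: without the window, two pellets at the same height average to a point between them.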


Fig 3.14 Simple block diagram representation of the algorithm.

3.2.3.2 PELLET PICKUP USING HOUGH TRANSFORM

The mathematical approximation in the above method does not always hold, because averaging sometimes gives erroneous results: even a single erroneous value directly distorts the average and other statistical computations. Such erroneous values are often caused by shadowing or back reflections.

To design a more robust system, we use the Hough transform to locate the centre of standing pellets and a longest-edge algorithm for sleeping pellets. This method proved robust: once the Hough transform parameters were set, the algorithm could detect the circles and their centres.

The Hough transform is a feature extraction technique used in image analysis, computer vision and digital image processing. Its purpose is to find imperfect instances of objects within a certain class of shapes by a voting procedure. This voting procedure is carried out in a parameter space, from which object candidates are obtained as local maxima in a so-called accumulator space that is explicitly constructed by the algorithm for computing the Hough transform. The classical Hough transform was concerned with identifying lines in an image, but the Hough transform [3] was later extended to identifying positions of arbitrary shapes, most commonly circles or ellipses.
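Because all pellets have the same dimensions, the circle radius is known and the Hough parameter space reduces to the two centre coordinates. The sketch below shows this voting on 2D boundary points; it is a generic illustration, not the project's OpenCV-based implementation, and the parameter names and defaults (`cell`, `n_angles`, `min_votes`) are assumptions.

```python
import math
from collections import Counter

def hough_circle_centres(points, radius, cell=1.0, n_angles=72, min_votes=10):
    """Circle Hough transform for a known radius (all pellets share one diameter).

    points: (x, y) boundary points in mm. Every point votes once for each
    accumulator cell (size `cell`) lying on a circle of `radius` around it.
    Returns (x, y, votes) for accumulator local maxima with at least
    `min_votes` votes, strongest first.
    """
    acc = Counter()
    for x, y in points:
        cells = set()                     # one vote per point per cell
        for k in range(n_angles):
            t = 2.0 * math.pi * k / n_angles
            cells.add((round((x - radius * math.cos(t)) / cell),
                       round((y - radius * math.sin(t)) / cell)))
        acc.update(cells)
    peaks = []
    for (i, j), votes in acc.items():
        if votes < min_votes:
            continue
        neighbours = [acc.get((i + di, j + dj), 0)
                      for di in (-1, 0, 1) for dj in (-1, 0, 1) if di or dj]
        if all(votes >= nv for nv in neighbours):
            peaks.append((i * cell, j * cell, votes))
    return sorted(peaks, key=lambda p: -p[2])
```

Since every boundary point votes independently, a partially visible (occluded) circle still produces a clear peak at its centre, only with fewer votes.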

Fig 3.15 Hough Transform of a standing and two lying pellets showing the longest edge and centre.

The above image shows the Hough transform approximation for a single-layer arrangement; this usually fails with a multi-layer arrangement. We tried multi-dimensional checkerboard grid detection and used the data to calibrate the camera, which enabled Hough transforms for two layers as well. Because of the error rate and failures to identify circle centres, we then applied the Hough transform directly to the 3D point cloud data.

To apply the Hough transform to the 3D data, we first segregated the point cloud into different levels, defined in terms of n and d, the length and diameter of the pellets respectively. Quantisation distributes the point cloud into the categories n, 2n, 3n, ... and d, 2d, 3d, .... Since the diameter and length are known, these values are compared with the Z coordinates of the point cloud, and the tolerance value mentioned above is used when assigning points to the quantised levels.

After quantisation, each level contains some set of points. For example, with two layers of standing pellets only, points will be found in levels n and 2n. If there are points in level d, sleeping pellets are also present in the arrangement.
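The level quantisation can be sketched as follows. The helper and its defaults are hypothetical, and the pellet dimensions in the test are made-up values, not the real pellet size. Points that match no level are returned as residue, which corresponds to the tilted-pellet case discussed later. When some multiple k·n is close to a multiple m·d the assignment is ambiguous; this sketch simply takes the first match in order of k.

```python
def quantise_levels(cloud, length_mm, diameter_mm, tol_mm=2.0, max_k=5):
    """Assign each point to a height level k*n (standing) or k*d (sleeping).

    n = pellet length, d = pellet diameter. A point whose Z is within tol_mm of
    k*n or k*d (k = 1..max_k) is placed in that level; points matching no level
    are returned as residue (candidate tilted pellets).
    """
    levels = {}
    residue = []
    for p in cloud:
        z = p[2]
        label = None
        for k in range(1, max_k + 1):
            if abs(z - k * length_mm) <= tol_mm:
                label = ("n", k)
                break
            if abs(z - k * diameter_mm) <= tol_mm:
                label = ("d", k)
                break
        if label is None:
            residue.append(p)
        else:
            levels.setdefault(label, []).append(p)
    return levels, residue
```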


Fig 3.16 3D arrangement of standing pellets

Fig 3.17 Images showing the different levels with pellets (Level 1: ground level; Level 2; Level 3; Level 4: top level)

Applying the Hough transform (using OpenCV) to the above data gives the following result:

Fig 3.18 Application of Hough Transforms gives distinct circles.

As observed, well-defined circles are detected by the Hough transform on the point cloud data. The two centres are the centres of the pellets in the second level. Once the precise centres are obtained, they are sent to the KUKA robot and the end effector picks up the pellet.
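To use an image-space circle detector on one quantised level, the level's (x, y) points first have to be rasterised into an image, and detected centres have to be mapped back to millimetres. The helpers below are a hypothetical sketch of that conversion; the cell size is an assumption. The resulting grid, converted to an 8-bit single-channel NumPy array, is the kind of input OpenCV's `cv2.HoughCircles` expects.

```python
def rasterise_level(points, cell_mm=0.5):
    """Convert the (x, y) coordinates of one height level into a binary image.

    Returns (image, x0, y0): image[row][col] is 255 where at least one point falls
    in the cell and 0 elsewhere; (x0, y0) is the world position of the corner of
    pixel (0, 0), needed to convert detected centres back to millimetres.
    """
    x0 = min(p[0] for p in points)
    y0 = min(p[1] for p in points)
    cols = int((max(p[0] for p in points) - x0) / cell_mm) + 1
    rows = int((max(p[1] for p in points) - y0) / cell_mm) + 1
    image = [[0] * cols for _ in range(rows)]
    for x, y, *_ in points:
        image[int((y - y0) / cell_mm)][int((x - x0) / cell_mm)] = 255
    return image, x0, y0

def pixel_to_mm(col, row, x0, y0, cell_mm=0.5):
    """Map a pixel position to base-frame X, Y, treating integer coordinates as cell centres."""
    return x0 + (col + 0.5) * cell_mm, y0 + (row + 0.5) * cell_mm
```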

Fig 3.19 Algorithm for pickup of the pellet

The advantage of this method is that it reduces the number of scans required. The averaging procedure described in the previous section fails in many cases, whereas this method is robust and gives better results. However, in some cases, when the illumination or the color of the pellets changed while the Hough parameters were kept constant, this method did not work.

3.2.3.3 MERGING POINT CLOUD DATA WITH IMAGE DATA

The point cloud data from the laser scanner has a point-to-pixel correspondence with the image taken by the camera. This correspondence was obtained using the calibration matrices that relate the point cloud data to pixels. It contains some error, which may be due to approximations and errors in the calibration process.

Fig 3.20 Laser data for a single pellet with color variation on basis of height

Fig 3.21 Transformation of laser data onto Image data

The above figure shows the mapping of points onto a pellet; the laser scan line is shown in red. A similar mapping can be done for the surfaces of a pile of pellets, as follows:

Fig 3.21 Mapping of laser data onto Image data for cluttered arrangement

The mapping has an error of about 2%, approximately 7 to 8 pixels. It can be avoided for standing or sleeping pellets, but it must be used for tilted pellets. The tilted case is the special case in which points do not fall into any of the levels defined above, i.e. residue points are found outside the quantised levels. These residue points are mapped into the image, and the previously developed image-based pose estimation algorithms are applied.
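One way to quantify a mapping error such as the 7 to 8 pixels quoted above is to compare where calibrated laser points land in the image with where the same features are observed. The helper below is hypothetical, and how the observed pixels are obtained (for example, by detecting the red scan line) is left open.

```python
import math

def mapping_errors(projected, observed):
    """Pixel errors between laser points mapped into the image and their observed pixels.

    projected, observed: equal-length lists of (u, v) pixel coordinates.
    Returns (per-point errors, mean error, RMS error), all in pixels.
    """
    errors = [math.hypot(pu - ou, pv - ov)
              for (pu, pv), (ou, ov) in zip(projected, observed)]
    mean = sum(errors) / len(errors)
    rms = math.sqrt(sum(e * e for e in errors) / len(errors))
    return errors, mean, rms
```

Reporting both the mean and the RMS is a design choice: the RMS weights occasional large outliers (for example, from back reflections) more heavily.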

3.2.3.4 PELLET POSE ESTIMATION USING POINT CLOUD LIBRARY

A complete and accurate pose estimate is obtained when we locate the axis of the cylindrical pellet together with the centre through which it passes. The exact axis can be estimated with curve fitting methods; algorithms such as RANSAC fit surfaces or solids to point cloud data. Most point cloud curve and solid fitting algorithms rely on the normals fitted to the surface: the dot product (the cosine of the angle) between normals on the same surface differs from that between normals across an edge or at a vertex. This property of normals helps fit curves to the point cloud data.

Fig 3.22 Image showing cylinder fitting (a), optimized results (b)

Fig 3.23 Normals and plane passing through a point on the cylinder
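The role of normals in cylinder fitting can be illustrated with one geometric fact: every surface normal of a right circular cylinder is perpendicular to its axis, so the cross product of two non-parallel normals is parallel to the axis. The sketch below shows only this step, under the assumption of noise-free normals; with real data, many normal pairs would be combined robustly, for example with RANSAC, which is what PCL's normal-based cylinder model (`SACMODEL_CYLINDER`) is designed for.

```python
def cross(a, b):
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def axis_from_normals(n1, n2, eps=1e-9):
    """Estimate a cylinder's axis direction from two surface normals.

    Every normal of a right circular cylinder is perpendicular to its axis, so the
    cross product of two non-parallel normals is parallel to the axis.
    Returns a unit vector, or None if the normals are (nearly) parallel.
    """
    a = cross(n1, n2)
    length = sum(c * c for c in a) ** 0.5
    if length < eps:
        return None
    return [c / length for c in a]
```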

3.2.4 COMPONENT SPECIFICATION AND JUSTIFICATION

The main component used here, along with the camera, is the Micro Epsilon SCANControl laser scanner, model 29xx-100. Its main specifications are a Z-axis range of 290 mm and a line length of 100 mm. The operating frequency can be varied between 300 and 2000 Hz, and a maximum of 1280 points can be obtained per profile. The laser has a wavelength of 658 nm and a power of 8 mW; it is rated laser class 3B, so direct eye exposure must be avoided. Inputs and outputs are configurable over Ethernet or RS422, which follows half-duplex communication.

Fig 3.24 Dimension and Range data of the Micro Epsilon SCANControl Laser Scanner

Fig 3.25 GUI of SCANControl Software

There are other alternatives for this problem. The Microsoft Kinect is a widely used sensor for 3D data acquisition in computer vision, but this technology also has drawbacks. First, the acquired data contains errors. Second, a dynamic environment requires re-calibration of the RGB-D sensor: small changes in the workspace, such as a change in light intensity, may produce a different output from the sensor. Moreover, it may not be feasible to mount the sensor on the end effector of an industrial robot. Another alternative is a 3D laser sensor [2], but these are also bulky and pose a similar challenge.

The dataset from a 3D scan, i.e. the 3D point cloud, is usually very large. Larger data demands more computation and time, and the entire process has to run online and continuously until the bin is empty. This laser scanner therefore hits a sweet spot in terms of frequency, range, compactness and the size of the point cloud it produces.

CHAPTER 4

RESULT ANALYSIS

4.1 INTRODUCTION

This chapter analyses the results obtained during the project. Various graphs are plotted and explained, and the significance of the results is discussed.

4.2 RESULTS

This section presents results on calibration, pixel errors, optimisation and repeatability for different cases.

4.2.1 CALIBRATION RESULTS

Calibration is the process of estimating the relative position and orientation between the laser range finder and the camera. It is important because it affects the geometric interpretation of measurements. This section gives calibration results for both optical devices, the camera and the laser range finder.

4.2.1.1 CAMERA CALIBRATION RESULTS

The camera calibration results are as follows:

Focal Length: fc = [ 2399.95141 2208.98499 ] ± [ 65.88081 131.74685 ]

Principal point: cc = [ 1200.15931 1307.56970 ] ± [ 55.39357 213.85137 ]

Skew: alpha_c = [ 0.00000 ] ± [ 0.00000 ] => angle of pixel axes = 90.00000 ± 0.00000 degrees

Distortion: kc = [ -0.17778 0.13136 -0.00637 0.00124 0.00000 ] ± [ 0.03964 0.09832 0.01405 0.00429 0.00000 ]

Pixel error: err = [ 0.80067 1.64925 ]

These results determine the intrinsic parameters. The camera calibration matrices are given below.

R, shown below, is the rotation matrix.

0.999449 -0.033176 0.000906

-0.033184 -0.999377 0.011981

0.000508 -0.012005 -0.999928

Table 4.1 Rotation Matrix elements for camera calibration

T Matrix as shown below is the translation matrix.

T=

Table 4.2 Translation Matrix elements for camera calibration

CI is the camera intrinsic parameter matrix, which depends on the focal length and the pixel size.

1428.525269 0.000000 1241.642390

0.000000 1427.679987 1035.951211

0.000000 0.000000 1.000000

Table 4.3 Camera Intrinsic Matrix elements for camera calibration

The above results were obtained with Bouguet’s camera calibration toolbox, which uses Zhang’s calibration algorithm for the pinhole camera model [1].
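The 5-element distortion vector kc reported above can be applied with the standard radial-tangential model. The sketch assumes the coefficient order used by Bouguet's toolbox (kc(1), kc(2), kc(5) radial; kc(3), kc(4) tangential), which is also the order OpenCV uses; it operates on normalized coordinates (X/Z, Y/Z) and is illustrative rather than the toolbox's own code. The usage lines plug in the fc, cc and kc values from the results above, with zero skew.

```python
def distort(x, y, kc):
    """Apply the 5-coefficient lens distortion model to normalized coordinates.

    kc = [k1, k2, p1, p2, k3]: k1, k2, k3 are radial terms and p1, p2 tangential
    terms. (x, y) are normalized image coordinates, i.e. (X/Z, Y/Z).
    """
    k1, k2, p1, p2, k3 = kc
    r2 = x * x + y * y
    radial = 1.0 + k1 * r2 + k2 * r2 ** 2 + k3 * r2 ** 3
    xd = x * radial + 2.0 * p1 * x * y + p2 * (r2 + 2.0 * x * x)
    yd = y * radial + p1 * (r2 + 2.0 * y * y) + 2.0 * p2 * x * y
    return xd, yd

# Usage with the calibration results above (skew alpha_c = 0):
kc = [-0.17778, 0.13136, -0.00637, 0.00124, 0.0]
fc = [2399.95141, 2208.98499]
cc = [1200.15931, 1307.56970]
xd, yd = distort(0.1, 0.0, kc)
u, v = fc[0] * xd + cc[0], fc[1] * yd + cc[1]
```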

4.2.1.2 LASER CALIBRATION RESULTS

The laser calibration was a two-step procedure: points are first converted from the laser sensor frame to the tool centre point (TCP) frame, and then from the TCP frame to the base frame.

The sensor-to-TCP frame calibration is given by the following matrix:

0.8001 0.5972 -0.0565 -39.2808

0.5996 -0.7989 0.0474 -14.6918

-0.0168 -0.0718 -0.9973 329.3757

0 0 0 1

Table 4.4 Joint Calibration results (1)

T (translation vector of Table 4.2) = [ -178.640255 161.384730 191.422883 ]


After the coordinates are obtained in the TCP frame, the next conversion is to the base frame

using the following matrix.

0.5490 0.8350 0.0169 177.35

-0.8348 -0.5500 0.0178 227.68

-0.0240 0.004 -0.9996 189.67

0 0 0 1

Table 4.5 Joint Calibration results (2)

Multiplying a point in the sensor frame first by the matrix of Table 4.4 and then by the matrix of Table 4.5 converts it into the reference (base) frame. These values were optimised using a least-squares approach.
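The two-step conversion can be sketched directly with the matrices of Tables 4.4 and 4.5. This is an illustration, not the project's code; in practice the TCP-to-base matrix would be rebuilt from the robot pose for each scan line, so it is passed as a parameter with Table 4.5 as the default.

```python
SENSOR_TO_TCP = [[0.8001, 0.5972, -0.0565, -39.2808],
                 [0.5996, -0.7989, 0.0474, -14.6918],
                 [-0.0168, -0.0718, -0.9973, 329.3757],
                 [0.0, 0.0, 0.0, 1.0]]          # Table 4.4
TCP_TO_BASE = [[0.5490, 0.8350, 0.0169, 177.35],
               [-0.8348, -0.5500, 0.0178, 227.68],
               [-0.0240, 0.004, -0.9996, 189.67],
               [0.0, 0.0, 0.0, 1.0]]            # Table 4.5 (changes with each scan line)

def apply_h(M, p):
    """Apply a 4 x 4 homogeneous transform to a 3D point."""
    ph = list(p) + [1.0]
    return [sum(M[i][k] * ph[k] for k in range(4)) for i in range(3)]

def sensor_to_base(p, tcp_to_base=TCP_TO_BASE, sensor_to_tcp=SENSOR_TO_TCP):
    """p_base = TCP_TO_BASE * SENSOR_TO_TCP * p_sensor."""
    return apply_h(tcp_to_base, apply_h(sensor_to_tcp, p))

# Usage: where the sensor origin lies in the base frame for this scan line.
origin_in_base = sensor_to_base((0.0, 0.0, 0.0))
```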

4.2.2 URG SENSOR RESULTS

To evaluate alternatives to the laser scanner, we also used a Hokuyo URG laser scanner, which scans angularly. Its error is significantly higher than that of the Micro Epsilon SCANControl laser scanner, which was therefore used for all the algorithms and manipulations.

Fig 4.1 (a) Grey-scale image, (b) depth profile, (c) fused segmented image

A detailed analysis of the measurement errors is given in the following table.

46

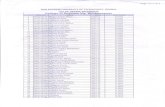

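Column groups (all values in mm): coordinates w.r.t. the depth scanner (x, y); 3D coordinates w.r.t. the camera (x, y, z); estimated 3D coordinates w.r.t. the camera (x, y, z); error (x, y, z).

S.no | Scanner x | Scanner y | Camera x | Camera y | Camera z | Estimated x | Estimated y | Estimated z | Error x | Error y | Error z
1 | 265 | -209 | 230 | -67 | 36 | 221 | -46 | 41 | 9 | -21 | -5
2 | 279 | -57 | 87 | 101 | 46 | 66 | 101 | 40 | 21 | 0 | 6
3 | 285 | 51 | -32 | -108 | 56 | -43 | -127 | 51 | 11 | 19 | 5
4 | 269 | 119 | -108 | -104 | 53 | -115 | -126 | 72 | 7 | 22 | -19
5 | 307 | -49 | 57 | -121 | 22 | 62 | -94 | 17 | -5 | -27 | 5
6 | 326 | -114 | 128 | 122 | 4 | 102 | 117 | 10 | 26 | 5 | -6
7 | 325 | -98 | 113 | -122 | 34 | 115 | -124 | 48 | -2 | 2 | -14
8 | 334 | -58 | 79 | -116 | 36 | 75 | 90 | 25 | 4 | -26 | -11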

Table 4.6 URG Sensor results

Because of its high error and coarse least count, this was not a suitable sensor for robotic manipulation.

4.2.3 REPEATABILITY TESTS AND ACCURACY ANALYSIS

The robustness of a system can only be established if it has high repeatability and good accuracy. This is vital in robotics, where least counts are small and precision is of utmost importance. Repeatability is the variability of the measurements obtained by one person measuring the same item repeatedly; it is also known as the inherent precision of the measurement equipment. Since the ideal is to scan as few times as possible, the Hough transform approach proved more robust, because it accumulates evidence from all the data and can fit approximate circles to partial data, not just to complete circles.

Fig 4.2 Hough Transforms detecting circles for complete data as well as for partial data


4.2.4 RESULT ANALYSIS OF DIFFERENT ALGORITHMS

We started this project of pellet picking with a robot end effector with the design aspects, speed and accuracy in mind. Along the way, we devised several algorithms that reduce the number of scans required to model the workspace and subsequently pick up pellets from the stack. This report mainly discusses three algorithms: averaging to find the centre, point cloud analysis, and the Hough transform. The following table gives the experimental results for the averaging and Hough transform methods.

ALGORITHM | NUMBER OF PELLETS PICKED PER SCAN (average) | ACCURACY IN ESTIMATION OF POSITION (pick-up point) | ACCURACY IN PICKING UP THE PELLET USING SUCTION GRIPPER | MAXIMUM NUMBER OF PELLETS CORRECTLY LOCATED FROM ONE SCAN LINE
AVERAGING METHOD | 1 | 78% | 76% | 4
HOUGH TRANSFORM METHOD | 5 | 96% | 96% | 8


CHAPTER 5

CONCLUSION AND FUTURE SCOPE OF WORK

5.1 SUMMARY

This project mainly dealt with estimating the pose (position and orientation) of pellets by fusing image and laser scan data of the workspace. Once the pose is estimated, the pellet is picked up from the clutter using a suction gripper.

Initially, both sensors required calibration, which was achieved with high accuracy using an optimisation method (gradient descent).

Once the data was available in the base frame, we first designed an algorithm that sorted the top points and used averaging and norm calculation to find a pick-up point. The results were accurate only when erroneous values were absent.

For a better and more reliable methodology, we introduced a Hough transform implementation on 3D point cloud data for standing and sleeping pellets. To achieve this, we quantized the point cloud data into levels on the basis of height. This improved the accuracy to 96%.

An algorithm was derived for finding the surface of a tilted pellet, and a better method has been proposed using PCL (Point Cloud Library), which is essentially curve fitting on a set of data points.

The least occluded pellet must be found first, so as to reduce the disturbance to the clutter caused by the movement of the robot end effector. The Hough transform enabled us to find the centre correctly from the point cloud data. This worked for occluded pellets as well, reducing the number of scans required. The final proposed PCL algorithm aims to obtain the axis of each cylinder as well as the surface for pick-up.

5.2 CONCLUSION

To conclude, bin picking of identical objects was implemented. This was done by designing a robust algorithm that uses data from an image (from a camera) and a point cloud (from a 2D laser scanner). To reduce the scanning process, the Hough transform was also implemented. The results show 96% accuracy in locating and picking up the pellets. The novelty of this research lies in the increased pace and in accurate calibration using optimisation methods. This calibration had not previously been done for an industrial robot; existing calibration methods, which have lower accuracy, were mainly devised for sensor fusion in mobile robotics and SLAM. A picking algorithm based on applying the Hough transform to the point cloud data has also been devised. This gave better results and increased the number of pellets picked per scan, hence increasing the speed of the entire process.

Reducing time is very important in industrial processes. This is possible when the number of pellets correctly identified by an accurate sensor is high; otherwise, another scan is required, which takes further time. Our Hough transform routine addresses this by correctly locating up to 8 pellets from one scan and picking them with an accuracy of 96%, since the computed centres were accurate. The averaging method is not ideal, as it requires a new scan each time a pellet is picked. This is due to its statistical approach: the result is a function of every point in the point cloud data.

5.3 FUTURE SCOPE

A complete cylinder fit would be truly accurate only when the axis can be determined from a set of point clouds. There is considerable future scope for our specific case, since the data may be incomplete and conventional cylinder fitting approaches based on complete models may not suffice. Mathematical methods based on planes are being investigated. A method could be introduced that uses the image data for foreground-background segmentation, followed by a voting scheme. After this, the idea is to fit parametric equations to the points, or possibly to use a Gaussian distribution to estimate the surface.


REFERENCES

Journal / Conference Papers

[1] Z. Zhang. "A flexible new technique for camera calibration", IEEE Transactions on

Pattern Analysis and Machine Intelligence, 22(11):1330-1334, 2000

[2] Nadia Payet and Sinisa Todorovic,"From Contours to 3D Object Detection and Pose

Estimation", 2011 IEEE International Conference on Computer Vision

[3] P. Tiwan, Riby A. Boby, Sumantra D. Roy, S. Chaudhury, S.K.Saha,"Cylindrical Pellet

Pose Estimation in Clutter using a Single Robot Mounted Camera",2013 ACM July 04-

06 2013.

[4] Rigas Kouskouridas, Angelos Amanatiadis and Antonios Gasteratos, "Pose Manifolds

for Efficient Visual Servoing", 2012 IEEE.

[5] Hartley, R. and Zisserman, A. Multiple View Geometry in Computer Vision,

Cambridge University Press, Cambridge 2001.

[6] Q. Zhang and R. Pless, “Extrinsic Calibration of a Camera and Laser Range Finder”,

IEEE Intl. Conference on Intelligent Robots and Systems (IROS) 2004.

[7] Zhang, Z., 1998. A flexible new technique for camera calibration. In: IEEE

Transactions on Pattern Analysis and Machine Intelligence. pp. 133 to 334.

[8] Aliakbarpour, H., Nunez, P., Prado, J., Khoshhal, K., Dias, J., 2009. An efficient

algorithm for extrinsic calibration between a 3d laser range finder and a stereo camera

for surveillance. In: 14th International Conference on Advanced Robotics, Munich,

Germany. pp. 1 to 6.

[9] Ming-Yu Liu, Oncel Tuzel, Ashok Veeraraghavan, Yuichi Taguchi, Tim K. Marks and Rama Chellappa, "Fast object localization and pose estimation in heavy clutter for robotic bin picking", The International Journal of Robotics Research, published online 8 May 2012.

[10] Viola P and Jones M (2001) Rapid object detection using a boosted cascade of simple

features. In Proceedings of the IEEE Conference on Computer Vision and Pattern

Recognition, vol. 1, pp. 511–518.

[11] Dalal N and Triggs B (2005) Histograms of oriented gradients for human detection.

In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition,

pp. 886–893.

[12] Ashutosh Saxena, Justin Driemeyer and Andrew Y. Ng, "Learning 3-D Object

Orientation from Images", NIPS workshop on Robotic Challenges for Machine

Learning, 2007.

[13] Eric Royer, Maxime Lhuillier, Michel Dhome, and Jean-Marc Lavest. 2007. "Monocular Vision for Mobile Robot Localization and Autonomous Navigation". Int. J. Comput. Vision 74, 3 (September 2007), 237-260. DOI=10.1007/s11263-006-0023-y http://dx.doi.org/10.1007/s11263-006-0023-y

[14] Danica Kragic and Markus Vincze. 2009."Vision for Robotics". Found. Trends Robot

1, 1 (January 2009), 1-78. DOI=10.1561/2300000001

http://dx.doi.org/10.1561/2300000001

[15] Michael J. Tarr and Isabel Gauthier. 1999. "Do viewpoint-dependent mechanisms generalize across members of a class?". In Object Recognition in Man, Monkey, and Machine, MIT Press, Cambridge, MA, USA, 73-110.

[16] S. Belongie, J. Malik, and J. Puzicha. 2002."Shape Matching and Object Recognition

Using Shape Contexts". IEEE Trans. Pattern Anal. Mach. Intell. 24, 4 (April 2002),

509-522. DOI=10.1109/34.993558 http://dx.doi.org/10.1109/34.993558

[17] Guan Pang and U. Neumann, "Training-Based Object Recognition in Cluttered 3D Point Clouds," 2013 International Conference on 3D Vision (3DV 2013), pp. 87-94, June 29 2013-July 1 2013, doi: 10.1109/3DV.2013.20

[18] Pochyly, A.; Kubela, T.; Singule, V.; Cihak, P., "3D vision systems for industrial bin-picking applications," MECHATRONIKA, 2012 15th International Symposium, pp. 1-6, 5-7 Dec. 2012

[19] Zisserman, A.; Forsyth, D.; Mundy, J.; Rothwell, C.; Liu, J. & Pillow, N. (1994). "3D object recognition using invariance", Technical report, Robotics Research Group, University of Oxford, UK.

[20] Zerroug, M. & Nevatia, R. (1996). "3-D description based on the analysis of the invariant and quasi-invariant properties of some curved-axis generalized cylinders", IEEE Trans. Pattern Anal. Mach. Intell., vol. 18, no. 3, pp. 237-253.

[21] Trung-Thien Tran, Van-Toan Cao, Denis Laurendeau, “Extraction of cylinders and estimation of their parameters from point clouds”, Computers & Graphics, Feb. 2015, pp. 345-357.

[22] Tahir Rabbani, Frank van den Heuvel, “Efficient Hough Transform for Automatic Detection of Cylinders in Point Clouds”, ISPRS Workshop “Laser Scanning”, September 12-14, 2005.

52

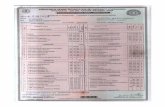

PROJECT DETAILS

Student Details

Student Name Susobhit Sen

Register Number 110921352 Section / Roll No A / 30

Email Address [email protected] Phone No (M) 09971798476

Project Details

Project Title Robotic Manipulation of Objects Using Vision

Project Duration 24 weeks Date of reporting 18th Jan, 2015

Organization Details

Organization Name Indian Institute of Technology, Delhi (IIT Delhi)

Full postal address with pin code Hauz Khas, New Delhi, 110016

Website address www.iitd.ac.in

Supervisor Details

Supervisor Name Dr. (Prof) Santanu Chaudhury

Designation Professor, Electrical Engineering

Full contact address with pin code Electrical Engineering Department, IIT Delhi, Hauz Khas, New Delhi, 110016

Email address [email protected] Phone No (M) 011-26512402

Internal Guide Details

Faculty Name Mr. P Chenchu Sai Babu

Full contact address with pin code Dept of Instrumentation and Control Engineering, Manipal Institute of Technology, Manipal – 576 104 (Karnataka State), INDIA

Email address [email protected]