Blade c7000 with MSA2000


Using the HP Direct Connect Shared SAS storage solution with VMware ESX virtualization software

Technology brief

Abstract
Introduction
  HP Direct Connect Shared SAS storage solution
  VMware virtualization software
Guidelines for installing and configuring HP hardware
  c-Class component interconnects
  Drive array configuration
Guidelines for installing and configuring VMware ESX software
  Virtual drives and VMFS volumes
  LUN masking
  Multipathing
  Storage volume naming
Conclusion
Appendix: Procedures for setting up HP Direct Connect Shared SAS hardware
  Installing and configuring HP hardware
  Configuring hardware for boot-from-shared storage
For more information
Call to action

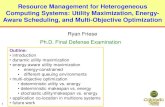

Abstract

The HP BladeSystem c-Class and HP Direct Connect Shared SAS storage technology combine with VMware ESX Server software to provide a highly available, virtualized infrastructure. This technology brief describes how to implement VMware virtualization technology on an HP Direct Connect Shared SAS storage solution to achieve server and storage consolidation, simplified server and storage deployment, and advanced active load balancing through virtual machine migration. An appendix outlines the essential steps for installing and configuring the HP hardware and for configuring it to boot from shared storage.

Introduction

Shared storage systems such as the HP MSA2012sa Shared SAS solution reduce storage costs and enable advanced computing architectures in which multiple physical servers need access to the same storage assets. Storing the complete software image of a server (OS, applications, and data) on one or more volumes of shared storage, and booting from that storage, simplifies software management and eliminates the need for additional local (in-server) storage (Figure 1).

Figure 1. Shared storage block diagram
[Diagram: server blades 1 through n connect through a SAS interconnect to SAS storage arrays that hold each blade's OS, applications, and data; the blades also connect to an Ethernet LAN]

Virtualization tools allow administrators to migrate virtual machines dynamically from one physical server to another to balance hardware utilization for increased system efficiency and reduced power usage.

Used together, the following solutions provide the basis for an automated data center:

• HP Direct Connect Shared SAS storage solution
• VMware ESX 3.5 U3 or later, or VMware ESX 4.0 or later, with VMotion


HP Direct Connect Shared SAS storage solution

The HP Direct Connect Shared SAS¹ storage solution combines HP BladeSystem c-Class components with the HP StorageWorks MSA 2012sa storage array (Figure 2). The result is a complete system of servers and storage contained in a single rack. Supported c-Class server blades include at least one mezzanine slot that accepts the HP Smart Array P700m Controller mezzanine card, which connects the blade to one or two pairs of HP 3Gb SAS BL Switches.

Each HP 3Gb SAS BL Switch provides 16 internal connections to the servers and eight external ports for SAS storage connectivity. HP recommends using a pair of switches to provide redundant paths between the servers and the HP StorageWorks Modular Smart Array (MSA) 2012sa.

Figure 2. Components of the HP Direct Connect SAS storage solution
[Diagram: HP c-Class server blade, HP Smart Array P700m Controller mezzanine card, HP 3Gb SAS BL Switch, HP c7000 enclosure with server blades (front and back views), and HP StorageWorks MSA2012sa Array Enclosure (front and back views)]

Figure 3 shows the connection paths for a basic HP Direct Connect Shared SAS storage system. In this example, the HP MSA2012sa Array Enclosure includes dual controllers (A and B). Each controller provides two ports, with each port connected to a different SAS switch to maintain storage availability should a switch, cable, or MSA controller fail.
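A small model makes the redundancy claim easy to check. The Python sketch below treats the Figure 3 configuration as four server-to-storage paths, one per MSA controller port, and counts how many survive a single failure. The component labels (switch_odd, switch_even, A0, and so on) are hypothetical. The model assumes the cabling described above and reads nothing from real hardware.

```python
# Hypothetical labels for the Figure 3 topology. Each MSA controller port is
# cabled to one SAS switch, and each controller has one port on each switch.
CABLING = {
    "A0": "switch_odd",
    "A1": "switch_even",
    "B0": "switch_odd",
    "B1": "switch_even",
}


def paths():
    """Return every (switch, controller_port) path from one server blade.

    The blade's P700m is assumed to reach both switches, so there is one
    path per controller port, routed through the switch that port is cabled to.
    """
    return [(switch, port) for port, switch in sorted(CABLING.items())]


def surviving_paths(failed):
    """Return the paths that avoid every failed component.

    A failed component is named by switch ("switch_odd"), controller ("A"),
    or controller port ("A0", standing in for that port's cable).
    """
    alive = []
    for switch, port in paths():
        controller = port[0]  # "A0" -> "A"
        if {switch, controller, port} & set(failed):
            continue
        alive.append((switch, port))
    return alive


if __name__ == "__main__":
    print(len(paths()))  # 4
    for component in ("switch_odd", "B", "A0"):
        print(component, len(surviving_paths({component})))
```

In this model, no single switch, controller, or cable failure leaves the blade without a path. Losing a switch or a controller removes two of the four paths, and losing one cable removes one.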

Figure 3. Basic configuration block diagram of an HP Direct Connect Shared SAS system
[Diagram: in a c7000 blade enclosure, the Smart Array P700m Controller mezzanine card of a server blade (and of the other server blades) connects through the midplane to HP 3Gb SAS switches in an odd and an even interconnect bay; each switch connects to Controller A and Controller B of the HP MSA 2012sa Array Enclosure]

¹ SAS is an acronym for Serial Attached SCSI.


Figure 4 shows the external connectivity of the configuration in Figure 3. While two connections (one per switch/array controller) are adequate, the additional connections enhance performance and availability.

Figure 4. Basic external connectivity for an HP Direct Connect SAS system
[Diagram: HP 3Gb SAS switches cabled to the array controllers of the HP MSA 2012sa Array Enclosure]

Figure 4 shows a typical c-Class configuration supporting 16 servers. A system with 32 servers (16 dual-density server blades) would require an additional pair of SAS switches and a second MSA 2012sa Array Enclosure.

The HP MSA 2012sa can operate as a standalone 12-drive array or with additional MSA 2000 Drive Enclosures. Figure 5 shows a maximum configuration of four MSA 2012sa array enclosures, each with three cascaded MSA 2000 drive enclosures. Each group of four 12-drive enclosures provides 48 drive slots, for a total of 192 (4 × 48) drive slots.
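The 192-slot total is simple arithmetic. The sketch below reproduces it and assumes that every array enclosure has the same number of cascaded drive enclosures. The limits are taken from Figure 5, not from a product specification, and the function name is hypothetical.

```python
DRIVES_PER_ENCLOSURE = 12           # MSA 2012sa array or MSA 2000 drive enclosure
MAX_ARRAYS = 4                      # array enclosures in the Figure 5 maximum
MAX_DRIVE_ENCLOSURES_PER_ARRAY = 3  # cascaded drive enclosures per array


def drive_slots(arrays, drive_enclosures_per_array):
    """Total drive slots for a number of array enclosures, each with the
    same number of cascaded drive enclosures."""
    if not 1 <= arrays <= MAX_ARRAYS:
        raise ValueError("Figure 5 shows at most four array enclosures")
    if not 0 <= drive_enclosures_per_array <= MAX_DRIVE_ENCLOSURES_PER_ARRAY:
        raise ValueError("Figure 5 shows at most three drive enclosures per array")
    enclosures_per_group = 1 + drive_enclosures_per_array  # array + drive enclosures
    return arrays * enclosures_per_group * DRIVES_PER_ENCLOSURE


print(drive_slots(1, 0))  # standalone array: 12
print(drive_slots(4, 3))  # Figure 5 maximum: 192
```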

Figure 5. Maximum storage configuration of an HP Direct Connect Shared SAS system
[Diagram: a c-Class blade enclosure with 3Gb SAS switches 1 and 2, cabled to MSA 2012sa Array Enclosures #1 through #4; each array enclosure is cascaded to MSA 2000 Drive Enclosures A, B, and C]


Figure 6 shows one way to connect cascaded MSA 2000 drive enclosures to an MSA 2012sa array enclosure, as in Figure 5. The “A” controllers of the array and drive enclosures are cascaded together, as are the “B” controllers.

Figure 6. Cascaded drive enclosures
[Diagram: HP 3Gb SAS Switches connected to an HP MSA 2012sa Array Enclosure, with HP MSA 2000 Drive Enclosures cascaded from it]

The HP Direct Connect SAS system offers wide flexibility in configuring a shared storage system that meets strategic goals. Figures 7 and 8 show two configurations possible with the c-Class blade system.

NOTE The MSA2012sa shared storage solution primarily supports one or two c-Class enclosures. If more than two c-Class enclosures contain ESX servers that need access to the same shared storage, a more extensible shared storage solution is required, such as the MSA2012i (iSCSI) or the MSA2012fc (Fibre Channel).


Figure 7 depicts a single c7000 enclosure accessing two storage enclosure groups. This approach offers more storage space on a per-server basis for applications that use large data files.

Figure 7. Two storage groups accessed by a single blade enclosure

Figure 8 depicts two c7000 enclosures sharing a storage enclosure group. This solution may apply to higher density server environments that do not have large storage demands.

Figure 8. A single storage enclosure shared by two blade enclosures


VMware virtualization software

HP has a partnership with VMware, the maker of VMware ESX virtualization software. VMware ESX is an enterprise-class virtualization solution that consolidates multiple physical servers or workloads onto a single physical server. VMware ESX implements a hypervisor operating system, and every other supported operating system runs on it as a guest OS in a virtual machine. Multiple virtual machines running a variety of operating systems and applications can run simultaneously on the same physical server hardware. This consolidation makes better use of space and power and reduces server acquisition and maintenance costs. Virtual machines can be deployed quickly with a standard configuration and set of applications, so capacity can respond dynamically to variable loads.

When deployed in a shared storage environment, ESX has additional capabilities, including the ability to migrate a virtual machine from one physical ESX server to another for load balancing, scheduled maintenance, or other reasons. If VMotion technology is enabled on these servers, the migration can happen live while the VM is online and without affecting the OS and applications that are running. In Figure 9, a virtual machine (VM 3) is migrated live from one VMware ESX Server to another. Each VM runs its own (guest) operating system (Microsoft Windows, Linux, or Unix) while the Virtualization Layer of ESX Server manages all resource requirements.

Figure 9. Migrating a virtual machine (VM 3) using VMotion technology
[Diagram: Server 1 and Server 2 each run VMware ESX Server. On each server, a virtualization layer (virtual hardware and integrated OS) hosts VMs 1 through n, and each VM has its own OS and applications. VMotion technology moves VM 3 from one server to the other]

During a live migration, the VM keeps its network identity and connections, and the network is updated with the VM's new physical location. The process takes a matter of seconds on a GbE network and is transparent to users.


Guidelines for installing and configuring HP hardware

The HP Direct Connect Shared SAS Storage system offers wide flexibility in two key areas of shared storage systems: component interconnects and drive array configuration.

c-Class component interconnects

The BladeSystem c-Class enclosure routes data between the server blade and the SAS switches over hardwired interconnects. The slot position of a mezzanine card determines data routing. Table 1 shows the midplane interconnect correlation between the server blade mezzanine slots and the interconnect bays where the SAS switches are installed.

Table 1. c-Class enclosure midplane interconnect mapping

| Server blade mezzanine slot | c3000 interconnect bays | c7000 interconnect bays |
|---|---|---|
| Half-height blade: Slot 1, ports 1 and 2 | n/a | 3 and 4 |
| Half-height blade: Slot 2, ports 1 and 2 | 3 and 4 | 5 and 6 |
| Half-height blade: Slot 2, ports 3 and 4 | 3 and 4 | 7 and 8 |
| Full-height blade: Slot 1, ports 1 and 2 | n/a | 3 and 4 |
| Full-height blade: Slot 2, ports 1 and 2 | 3 and 4 | 5 and 6 |
| Full-height blade: Slot 2, ports 3 and 4 | 3 and 4 | 7 and 8 |
| Full-height blade: Slot 3, ports 1 and 2 | 3 and 4 | 7 and 8 |
| Full-height blade: Slot 3, ports 3 and 4 | 3 and 4 | 3 and 4 |
| Dual-density blade: Server A slot, ports 1 and 2 | 3 and 4 | 5 and 6 |
| Dual-density blade: Server B slot, ports 1 and 2 | 3 and 4 | 7 and 8 |

For optimum connectivity, SAS switches should be installed in pairs, even if not all server blades are installed or configured for shared storage.
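Table 1 can be encoded as a lookup. That form makes it straightforward to work out which bay pairs need SAS switches for a given set of blades. The bay numbers in the Python sketch below are transcribed from Table 1. The key strings ("half-height", "slot 2", "ports 1-2", and so on) and the function name are hypothetical labels chosen for this example.

```python
# Table 1 as a lookup: (blade type, mezzanine slot, ports) -> interconnect
# bays per enclosure type. None stands for "n/a" in the table.
MIDPLANE_MAP = {
    ("half-height", "slot 1", "ports 1-2"): {"c3000": None, "c7000": (3, 4)},
    ("half-height", "slot 2", "ports 1-2"): {"c3000": (3, 4), "c7000": (5, 6)},
    ("half-height", "slot 2", "ports 3-4"): {"c3000": (3, 4), "c7000": (7, 8)},
    ("full-height", "slot 1", "ports 1-2"): {"c3000": None, "c7000": (3, 4)},
    ("full-height", "slot 2", "ports 1-2"): {"c3000": (3, 4), "c7000": (5, 6)},
    ("full-height", "slot 2", "ports 3-4"): {"c3000": (3, 4), "c7000": (7, 8)},
    ("full-height", "slot 3", "ports 1-2"): {"c3000": (3, 4), "c7000": (7, 8)},
    ("full-height", "slot 3", "ports 3-4"): {"c3000": (3, 4), "c7000": (3, 4)},
    ("dual-density", "server A", "ports 1-2"): {"c3000": (3, 4), "c7000": (5, 6)},
    ("dual-density", "server B", "ports 1-2"): {"c3000": (3, 4), "c7000": (7, 8)},
}


def switch_bays(enclosure, blade, slot, ports):
    """Return the interconnect bay pair that a P700m port pair connects to."""
    bays = MIDPLANE_MAP[(blade, slot, ports)][enclosure]
    if bays is None:
        raise ValueError(f"{blade} {slot} {ports} has no SAS switch bays in a {enclosure}")
    return bays


# A P700m in mezzanine slot 2 of a half-height blade in a c7000 spans two
# switch pairs: one for ports 1-2 and one for ports 3-4.
print(switch_bays("c7000", "half-height", "slot 2", "ports 1-2"))  # (5, 6)
print(switch_bays("c7000", "half-height", "slot 2", "ports 3-4"))  # (7, 8)
```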

Drive array configuration

The MSA2012sa array includes a web-based Storage Management Utility that provides a graphical user interface for configuring and managing the storage system. Users can configure the 12 hard drives of each MSA array into virtual disks (Vdisks). The Vdisk is the unit of failover for the MSA2012sa. Each MSA controller owns one or more Vdisks. If a controller fails or is taken offline, its Vdisks transition to the other MSA controller. The Vdisks transition back to the original controller when it comes back online.
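The ownership rule can be sketched as a toy model. Each Vdisk has a configured owner; it is served by the other controller while its owner is offline and returns to its owner when the owner is back. This Python sketch is a simplification for illustration and does not describe the controller firmware's actual logic. The class name and the Vdisk names are hypothetical.

```python
class MsaControllers:
    """Toy model of Vdisk ownership on a dual-controller MSA2012sa."""

    def __init__(self, preferred):
        # preferred maps Vdisk name -> "A" or "B" (its configured owner)
        self.preferred = dict(preferred)
        self.online = {"A": True, "B": True}

    def set_online(self, controller, state):
        self.online[controller] = state

    def owner(self, vdisk):
        """Controller currently serving the Vdisk.

        The configured owner serves it while online; otherwise the partner
        takes over, and ownership returns once the owner is back online.
        """
        home = self.preferred[vdisk]
        partner = "B" if home == "A" else "A"
        if self.online[home]:
            return home
        if self.online[partner]:
            return partner
        raise RuntimeError(f"no controller online for {vdisk}")


msa = MsaControllers({"vd01": "A", "vd02": "B"})
msa.set_online("A", False)
print(msa.owner("vd01"))  # B
msa.set_online("A", True)
print(msa.owner("vd01"))  # A
```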

Vdisks are not presented directly to servers. Instead, Vdisks are divided into storage volumes. The storage volumes are then mapped to servers that will have access. HP recommends that as each storage volume is configured on the MSA2012sa, users complete the volume mapping for a single server. Then, using the ESX tools on that server, users should assign the ESX volume name before presenting the volume to any other servers and before configuring additional volumes. It is easier to select and name the volume when only one volume is presented at a time. Refer to the section “Guidelines for installing and configuring VMware ESX software.”

If the configuration includes multiple MSA2012sa shared storage arrays, users should present and name all the volumes from the first MSA array before presenting any volumes from the next. This makes selection and naming tasks easier.


Guidelines for installing and configuring VMware ESX software

Virtual drives and VMFS volumes

Each virtual machine can be configured with one or more virtual disk drives. These virtual drives appear to the VM as SCSI drives but are actually files on a Virtual Machine File System (VMFS) volume (Figure 10). When a guest operating system on a VM issues SCSI commands to a virtual drive, the ESX Virtualization Layer translates the commands to VMFS file operations. While a VM is running, VMFS locks the associated files to prevent other ESX servers from updating them. VMFS also ensures that a VM cannot be opened by more than one ESX server in the cluster.

Figure 10. VMFS volume containing virtual drives
[Diagram: VMware ESX Servers A and B each host VMs 1 through 3 on a virtualization layer (virtual hardware and integrated OS), and each VM has its own OS and applications. Both servers access one VMFS volume that contains the virtual drives]

VMFS allows virtualization to scale beyond the boundaries of a single system. It also increases resource utilization by letting multiple VMs share a consolidated pool of clustered storage. A VMFS volume can span multiple Logical Unit Numbers (LUNs). A VMFS3 volume must be at least 1200 MB (1.2 GB) and can extend over up to 32 physical storage elements (extents). This allows storage to be pooled and gives flexibility in sizing the volumes that virtual machines need. When VMs need more disk space, a VMFS volume can be extended while virtual machines are running on it.
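A proposed volume layout can be checked against the two VMFS3 limits stated above before it is created. In this Python sketch, the limits come from the text. The function name and the choice to apply the 1200 MB minimum to the total volume size (rather than to each extent) are assumptions.

```python
MIN_VMFS3_MB = 1200  # minimum VMFS3 volume size stated above
MAX_EXTENTS = 32     # maximum physical storage elements (extents) per volume


def check_vmfs3_volume(extent_sizes_mb):
    """Return a list of problems with a proposed VMFS3 volume (empty if OK).

    extent_sizes_mb lists the size in MB of each LUN the volume spans.
    """
    if not extent_sizes_mb:
        return ["volume must span at least one LUN"]
    problems = []
    if len(extent_sizes_mb) > MAX_EXTENTS:
        problems.append(f"{len(extent_sizes_mb)} extents exceeds the limit of {MAX_EXTENTS}")
    total = sum(extent_sizes_mb)
    if total < MIN_VMFS3_MB:
        problems.append(f"{total} MB is below the {MIN_VMFS3_MB} MB minimum")
    return problems


print(check_vmfs3_volume([500, 500]))  # ['1000 MB is below the 1200 MB minimum']
print(check_vmfs3_volume([2048] * 4))  # []
```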

There are two basic approaches for setting up VMFS volumes and LUNs:

• Many small LUNs, each with a VMFS volume: With a number of smaller LUNs and VMFS volumes, fewer ESX server hosts and VMs contend for each VMFS volume, which maximizes performance but makes management more complex.

• One large LUN (or many LUNs) with one VMFS volume extended across all LUNs: With only one VMFS volume, management is simpler and creating VMs and resizing virtual drives are easier; however, performance can be affected.


The recommended strategy falls somewhere in between. Using a few VMFS volumes, each extended over several LUNs, distributes server access and storage load more evenly across both controllers. The HP Direct Connect Shared SAS solution is currently limited to 64 total LUNs per ESX server when all four paths are in use: 64 LUNs × 4 paths = 256 LUN-paths. This is a per-server limit, regardless of the number of shared storage arrays in the configuration. The initial installation should aim for a balance of VMs across ESX servers and storage, because a heavily shared VMFS volume can reduce performance.
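The limit applies per server across all arrays, so the LUN counts from every MSA2012sa must be summed before comparing. The Python sketch below does that arithmetic for the four-path case described above. The function names are hypothetical, and the sketch does not model configurations with fewer paths.

```python
PATHS_PER_LUN = 4         # dual-controller, dual-switch configuration
MAX_LUNS_PER_SERVER = 64  # stated limit with all four paths in use
MAX_LUN_PATHS = MAX_LUNS_PER_SERVER * PATHS_PER_LUN  # 256


def lun_paths(luns_per_array):
    """LUN-paths one ESX server sees, summed over every shared array.

    luns_per_array lists how many LUNs each MSA2012sa presents to the server.
    """
    return sum(luns_per_array) * PATHS_PER_LUN


def within_limit(luns_per_array):
    """True if the server stays within the per-server LUN-path limit."""
    return lun_paths(luns_per_array) <= MAX_LUN_PATHS


print(lun_paths([64]), within_limit([64]))          # 256 True
print(lun_paths([40, 30]), within_limit([40, 30]))  # 280 False
```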

LUN masking

Proper LUN masking is critical for boot-from-shared storage systems.

Each ESX server booting from shared storage must have a dedicated boot volume visible only to that server. It may seem convenient to configure all of the boot volumes from a single Vdisk on the array, but distributing them across Vdisks balances the load better and improves performance across both controllers. No ESX server should have access to the boot LUNs of other ESX server hosts.

A volume presented to a particular ESX server on multiple paths (multiple SAS IDs) should have a consistent LUN value on each of the paths. A volume shared by multiple ESX servers should have a consistent LUN value for all servers.
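Before volumes are presented, a mapping plan can be checked against the masking rules above. Three rules apply: a boot volume is visible only to its own server, a volume has one LUN value on all of a server's paths, and a shared volume has the same LUN value for every server. This Python sketch works on a hypothetical list of mapping records rather than on any real MSA or ESX interface. The volume, server, and device ID names are illustrative.

```python
from collections import defaultdict
from typing import NamedTuple


class Mapping(NamedTuple):
    volume: str     # storage volume name on the MSA2012sa
    server: str     # ESX server host name
    device_id: str  # SAS device ID of one P700m port
    lun: int


def masking_problems(mappings, boot_owner):
    """Check Mapping records against the LUN masking rules.

    boot_owner maps each boot volume to the one server allowed to see it.
    Every volume must also use a single LUN value on all paths and servers.
    """
    intruders = set()
    luns = defaultdict(set)
    for m in mappings:
        luns[m.volume].add(m.lun)
        owner = boot_owner.get(m.volume)
        if owner is not None and m.server != owner:
            intruders.add((m.volume, m.server))
    problems = [f"boot volume {v} is visible to {s}" for v, s in sorted(intruders)]
    for volume, values in sorted(luns.items()):
        if len(values) > 1:
            problems.append(f"{volume} is presented as LUNs {sorted(values)}")
    return problems


plan = [Mapping("esx1-boot", "esx1", f"esx1-p{i}", 1) for i in range(1, 5)]
plan += [Mapping("shared01", s, f"{s}-p1", 100) for s in ("esx1", "esx2")]
print(masking_problems(plan, {"esx1-boot": "esx1"}))  # []
```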

Multipathing

Multipath redundancy provided by dual-controller and dual-switch configurations causes each LUN exposed to a particular ESX server blade to appear four times (four total paths to the LUN). Typically, each LUN appears as the same LUN number on four different targets. For example, the VMware command esxcfg-mpath reveals four paths to a boot LUN:

• vmhba0:T1:L1
• vmhba0:T2:L1
• vmhba0:T3:L1
• vmhba0:T4:L1

Multipathing functionality in VMware ESX software monitors the status of each path and resolves the paths into a single view of the LUN for the operating system. ESX chooses the most viable path based on path availability and controller preference.
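The two ideas in this section are grouping paths into one view per LUN and picking a viable path. Both can be sketched in a few lines of Python. The path-name format follows the brief's example above and is not necessarily the exact esxcfg-mpath output, which differs between ESX releases. The target-to-controller map and the selection rule (prefer an available path to the preferred controller, otherwise take any available path) are simplifying assumptions, not ESX's actual path-selection algorithm.

```python
import re
from collections import defaultdict

# Path names in the notation used in the example above, e.g. "vmhba0:T1:L1".
PATH_RE = re.compile(r"^(vmhba\d+):T(\d+):L(\d+)$")


def group_paths(path_names):
    """Group path names by (adapter, LUN), listing the targets for each."""
    groups = defaultdict(list)
    for name in path_names:
        match = PATH_RE.match(name)
        if match is None:
            raise ValueError(f"unrecognized path name: {name}")
        adapter, target, lun = match.group(1), int(match.group(2)), int(match.group(3))
        groups[(adapter, lun)].append(target)
    return dict(groups)


def choose_path(targets, available, controller_of, preferred):
    """Pick one target for a LUN: an available path to the preferred
    controller if there is one, otherwise any available path, else None."""
    usable = [t for t in sorted(targets) if t in available]
    for t in usable:
        if controller_of[t] == preferred:
            return t
    return usable[0] if usable else None


paths = ["vmhba0:T1:L1", "vmhba0:T2:L1", "vmhba0:T3:L1", "vmhba0:T4:L1"]
groups = group_paths(paths)
print(groups)  # {('vmhba0', 1): [1, 2, 3, 4]}
controller_of = {1: "A", 2: "B", 3: "A", 4: "B"}  # hypothetical target map
print(choose_path(groups[("vmhba0", 1)], {1, 3}, controller_of, "B"))  # 1
```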

Storage volume naming

In a shared storage environment, it is important to use a consistent and rich naming convention when creating storage volumes. The ESX software allows users to assign a meaningful name to a storage volume as it is initialized for use in ESX. Volume names should include location information that may not be apparent in the device path naming at the ESX operating system level. The suggested format includes the storage array name, virtual disk name, and storage volume name.

The volume names can also contain purpose or content information if they are intended for a particular organizational group or project purpose. Good naming techniques allow an administrator to quickly locate and solve problems with storage volumes when ESX reports an issue or space becomes tight on an ESX server.
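One way to apply the suggested format consistently is to generate labels instead of typing them by hand. The Python sketch below builds a label from the array, Vdisk, and volume names plus an optional purpose tag, and parses it back. The underscore separator, the allowed character set, and the example names are hypothetical choices for this illustration, not an HP or VMware convention.

```python
import re

FIELDS = ("array", "vdisk", "volume", "purpose")


def volume_label(array, vdisk, volume, purpose=None):
    """Build an ESX volume label such as "msa1_vd2_vol03_sql-prod"."""
    parts = [array, vdisk, volume] + ([purpose] if purpose else [])
    for part in parts:
        # Keep "_" reserved as the separator so labels can be parsed back.
        if not re.fullmatch(r"[A-Za-z0-9-]+", part):
            raise ValueError(f"use letters, digits, and hyphens only: {part!r}")
    return "_".join(parts)


def parse_label(label):
    """Split a label built by volume_label back into named fields."""
    parts = label.split("_")
    if len(parts) not in (3, 4):
        raise ValueError(f"not an array_vdisk_volume[_purpose] label: {label!r}")
    return dict(zip(FIELDS, parts))


print(volume_label("msa1", "vd2", "vol03", "sql-prod"))  # msa1_vd2_vol03_sql-prod
print(parse_label("msa1_vd2_vol03")["vdisk"])           # vd2
```

When ESX reports a problem on a volume labeled this way, the label alone identifies which array and Vdisk to inspect in the SMU.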


Conclusion

The HP Direct Connect Shared SAS storage solution integrates well with VMware ESX Server and supports the advanced functionality of VMware tools. It has been tested and certified with VMware software and provides high levels of hardware redundancy economically.


Appendix: Procedures for setting up HP Direct Connect Shared SAS hardware

NOTE For detailed instructions on hardware installation, refer to the user or installation guides for the appropriate c-Class and StorageWorks components.

Installing and configuring HP hardware

The following steps describe the basic procedure for installing the HP Direct Connect Shared SAS storage hardware and configuring the drive arrays.

1. Install the HP Smart Array P700m Controllers in the mezzanine slots of the server blades, and install the server blades in the c-Class enclosure.

2. Install the HP 3Gb SAS BL Switches into the interconnect bays of the c-Class enclosure (refer to Table 1 for correlation between interconnect bays and mezzanine slots).

3. Install and connect power to the MSA2012sa array enclosure(s) and, if applicable, the MSA 2000 drive enclosures, but do not connect the data cables from the SAS switches to the MSA enclosures.

4. Upgrade the firmware (if necessary) on all P700m Controllers, 3Gb SAS Switches, and MSA2012sa enclosures.

5. Connect the data cables from the MSA2012sa Array Enclosure(s) to the 3Gb SAS Switches.

6. Using the Onboard Administrator of the c-Class enclosure, determine and record the SAS Device IDs for the P700m controller ports.

7. Using a web browser and the appropriate IP address of the MSA2012sa enclosure, access the Storage Management Utility (SMU) (Figure 11) to establish the RAID sets and create Vdisks.

NOTE Previous HP MSA and Smart Array storage solutions were managed with the HP Array Configuration Utility (ACU), an application downloadable from HP. The SMU referenced in this procedure is included in the MSA2012sa firmware and provides functionality similar to the ACU.

Each MSA2012sa enclosure contains 12 dual-ported SAS drives accessed through the two MSA controllers. The RAID level and Vdisk configuration depend on the desired mix of performance/redundancy/capacity, and on the number of disks available for each RAID set.


Figure 11. Creating Vdisks with the HP Storage Management Utility


8. Using the SMU, configure the Vdisks into storage volumes.

Figure 12 shows the SMU volume management page that allows the user to add a volume to a Vdisk. In this example, the Vdisk “vdisk2sas” has been highlighted and will be configured with a storage volume. The size of the volume should be large enough to accommodate the ESX server software, the applications, and data for the estimated number of virtual machines.

Figure 12. Creating storage volumes with the HP Storage Management Utility

Logical Unit Number (LUN) numbering is controlled through storage volume mapping, which is discussed in the next step.

9. If you are configuring the system for boot-from-shared-storage operation, go to the section “Configuring hardware for boot-from-shared storage”; otherwise, enter the volume name “vmfs3” and click Add Volume.


10. Map the storage volume (Figure 13).

Volume mapping is used to control which servers get what type of access (read/write) by specifying the Host WWNs (SAS device IDs) to which the volume may be connected. The SAS device ID (recorded in step 6) uniquely identifies the port on a server blade’s Smart Array P700m controller. Each P700m controller has four ports, thus four SAS device IDs. For c3000 enclosures, all four ports connect to SAS switches in bays 3 and 4. For c7000 enclosures, only two ports will be connected to a pair of switches (in bays 3 and 4, 5 and 6, or 7 and 8), so that 4-port connectivity will require two pairs of SAS switches.

The mapping of all ports (SAS device IDs) of a server’s P700m controller should specify the same LUN number for a storage volume. For VMware ESX servers, a storage volume exposed to multiple servers should be mapped to the same LUN number for each server. If the LUN is mapped as LUN 100 to the four SAS device IDs of the first server, it should also be mapped as LUN 100 to all other ESX servers that require shared access to that LUN. Storage volume mapping also specifies which MSA controller ports are used for accessing the volume, which is designated with a LUN value. Typically, all four MSA2012sa ports (A0, A1, B0, B1) are enabled to provide the best redundancy and minimize management overhead.

Figure 13. Creating storage volumes with the SMU
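The mapping rules in step 10 can be written down as data, which is handy for recording or reviewing a plan before entering it in the SMU. Every P700m port of every sharing server gets the same LUN, read/write access, and all four MSA controller ports. The SMU is a web interface, so this Python sketch only models the settings. The record type, the function name, and the device IDs are hypothetical.

```python
from typing import NamedTuple

MSA_PORTS = ("A0", "A1", "B0", "B1")  # all four MSA2012sa host ports


class VolumeMap(NamedTuple):
    volume: str
    device_id: str  # SAS device ID (host WWN) of one P700m port
    lun: int
    access: str     # type of access granted, here always "read-write"
    ports: tuple    # MSA controller ports that present the LUN


def map_shared_volume(volume, lun, servers):
    """One mapping per P700m port of every server, all with the same LUN.

    servers maps an ESX host name to the SAS device IDs recorded in step 6.
    """
    entries = []
    for _, device_ids in sorted(servers.items()):
        for device_id in device_ids:
            entries.append(VolumeMap(volume, device_id, lun, "read-write", MSA_PORTS))
    return entries


servers = {
    "esx1": ["dev-1a", "dev-1b", "dev-1c", "dev-1d"],
    "esx2": ["dev-2a", "dev-2b", "dev-2c", "dev-2d"],
}
plan = map_shared_volume("vmfs3", 100, servers)
print(len(plan), {e.lun for e in plan})  # 8 {100}
```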


NOTE When configuring storage, be aware of the controller-to-Vdisk assignments and make sure that the load is distributed evenly across both MSA controllers. An equal number of Vdisks on each controller does not necessarily mean an even distribution of load, because some Vdisks may hold applications that are used more heavily than others.
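One way to act on this note is to assign Vdisks by estimated load instead of by count. The Python sketch below uses a simple greedy heuristic: it places the heaviest Vdisks first, each on the currently less-loaded controller. This is a swapped-in illustration, not an HP tool or procedure, and it does not guarantee the best possible split. The Vdisk names and load figures are hypothetical.

```python
def assign_vdisks(loads):
    """Assign Vdisks to MSA controllers A and B by estimated load.

    loads maps Vdisk name -> estimated relative load (for example, expected
    I/O rate). Returns (owner per Vdisk, total load per controller).
    """
    owner = {}
    total = {"A": 0.0, "B": 0.0}
    # Heaviest first; ties broken by name so the result is repeatable.
    for vdisk, load in sorted(loads.items(), key=lambda kv: (-kv[1], kv[0])):
        target = "A" if total["A"] <= total["B"] else "B"
        owner[vdisk] = target
        total[target] += load
    return owner, total


owner, total = assign_vdisks({"vd1": 50, "vd2": 30, "vd3": 20, "vd4": 10})
print(owner)  # {'vd1': 'A', 'vd2': 'B', 'vd3': 'B', 'vd4': 'A'}
print(total)  # {'A': 60.0, 'B': 50.0}
```

Both controllers end up with two Vdisks here, as they would with a naive split that puts vd1 and vd2 on A. The naive split, however, gives A a load of 80 against 30 on B.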

Configuring hardware for boot-from-shared storage

Boot-from-shared storage is an optional capability of the HP Direct Connect Shared SAS storage system. The following procedure establishes the relationship between one server blade and a boot volume.

NOTE The following steps are described in detail in the white paper “Booting From Shared Storage with Direct Connect SAS Storage for HP BladeSystem and the HP StorageWorks MSA2012sa” available at http://h20195.www2.hp.com/PDF/4AA2-3667ENW.pdf

1. Using the Onboard Administrator of the c-Class enclosure, determine and record the Device IDs for the P700m controller ports.

2. Using a web browser and the appropriate IP address of the MSA2012sa enclosure, access the Storage Management Utility (SMU) to configure a server boot volume and set access to it. Create a storage volume large enough to contain the ESX OS. Use the volume mapping function to enable server blade access to the volume by entering the Device IDs recorded in step 1. For each Device ID mapping, set the following parameters:
- Enable read/write access
- Enable all four MSA controller ports (A0, A1, B0, B1) for LUN access
- Present the volume as LUN1
- Record the SAS ID (WWN) of the MSA 2012sa and the serial number of the boot volume
- Present only the boot volume (no data volumes) at this time; this makes it easier to find the boot volume from the server's setup utility

3. Using the ROM Based Setup Utility (RBSU) of the server blade, configure the P700m Controller as a boot controller device.

4. Boot the server blade to verify access to the boot device. During POST, the controller should register four logical disks (assuming four paths have been set up for high availability).

5. Configure the server blade for the boot LUN. Step 2 configured the MSA2012sa to present the boot volume as LUN1 to the server blade. Since there may be other MSA2012sa enclosures in the system with the same setting, the P700m Controller must be set to recognize a specific MSA2012sa as the boot device. Enter the option ROM utility of the P700m Controller (press F8 during server boot) and select the WWN of the MSA2012sa configured in step 2. Once the proper MSA2012sa is selected, a sub-selection menu will appear with a selection of presented LUNs. Select the LUN ID of the LUN configured as the boot device for this server.

6. Repeat steps 1 through 5 for all server blades and MSA 2012sa enclosures.

7. Install VMware ESX software onto the configured boot LUN.
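Step 5 matters because "LUN1" alone does not identify a boot volume when several MSA2012sa enclosures each present a LUN 1. The boot device is determined by the pair (array WWN, LUN), which can then be confirmed against the volume serial number recorded in step 2. The Python sketch below models that selection. It does not interact with the P700m option ROM, and the WWN and serial values are hypothetical.

```python
from typing import NamedTuple


class PresentedLun(NamedTuple):
    array_wwn: str  # SAS ID (WWN) of the MSA2012sa presenting the LUN
    lun: int
    serial: str     # serial number of the storage volume


def find_boot_volume(presented, boot_wwn, boot_lun=1, expected_serial=None):
    """Return the one presented LUN that matches the recorded array WWN and LUN.

    Matching on the LUN number alone is ambiguous when several arrays present
    a LUN 1, so the array WWN recorded in step 2 is matched as well.
    """
    matches = [p for p in presented if p.array_wwn == boot_wwn and p.lun == boot_lun]
    if len(matches) != 1:
        raise LookupError(f"expected one boot LUN, found {len(matches)}")
    boot = matches[0]
    if expected_serial is not None and boot.serial != expected_serial:
        raise LookupError(f"serial {boot.serial} does not match {expected_serial}")
    return boot


presented = [
    PresentedLun("wwn-msa-1", 1, "SN-0001"),
    PresentedLun("wwn-msa-2", 1, "SN-0002"),
    PresentedLun("wwn-msa-1", 100, "SN-0003"),
]
print(find_boot_volume(presented, "wwn-msa-2", expected_serial="SN-0002"))
```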


For more information

For additional information, refer to the resources listed below.

| Resource | Hyperlink |
|---|---|
| HP white paper “Booting From Shared Storage with Direct Connect SAS Storage for HP BladeSystem and the HP StorageWorks MSA2012sa” | http://h20195.www2.hp.com/PDF/4AA2-3667ENW.pdf |
| “HP Direct Connect Shared Storage for HP BladeSystem Solution Deployment Guide” | http://bizsupport2.austin.hp.com/bc/docs/support/SupportManual/c01634313/c01634313.pdf |
| HP MSA2012sa support documents (installation and user guides) | http://h20000.www2.hp.com/bizsupport/TechSupport/DocumentIndex.jsp?contentType=SupportManual&lang=en&cc=us&docIndexId=64179&taskId=101&prodTypeId=12169&prodSeriesId=3758996 |
| HP MSA2000sa reference manuals | http://h20000.www2.hp.com/bizsupport/TechSupport/DocumentIndex.jsp?lang=en&cc=us&taskId=101&prodClassId=-1&contentType=SupportManual&docIndexId=64180&prodTypeId=12169&prodSeriesId=3971509 |
| VMware compatibility guide | http://www.vmware.com/resources/compatibility/search.php |

Call to action

Send comments about this paper to: [email protected].

© 2009 Hewlett-Packard Development Company, L.P. The information contained herein is subject to change without notice. The only warranties for HP products and services are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. HP shall not be liable for technical or editorial errors or omissions contained herein.


TC090701TB, July 2009