Beyond php - it's not (just) about the code


Transcript of Beyond php - it's not (just) about the code

Wim Godden, Cu.be Solutions, @wimgtr

Beyond PHP: it's not (just) about the code

Who am I?

Wim Godden (@wimgtr)

Where I'm from


My town


Belgium: the traffic

Who am I?

Wim Godden (@wimgtr)

Founder of Cu.be Solutions (http://cu.be)

Open Source developer since 1997

Developer of PHPCompatibility, PHPConsistent, Nginx SLIC, ...

Speaker at PHP and Open Source conferences

Cu.be Solutions?

Open source consultancy

PHP-centered (ZF2, Symfony2, Magento, Pimcore, ...)

Training courses

High-speed redundant network (BGP, OSPF, VRRP)

High-scalability development: Nginx + extensions

MySQL Cluster

Projects: mostly IT & telecom companies

Lots of public-facing apps/sites

Who are you?

Developers?

Anyone set up a MySQL master-slave?

Anyone set up a site/app on separate web and database servers? How much traffic flows between them?

5kbit/sec or 100Mbit/sec?

The topic

Things we take for granted

Famous last words: "It should work just fine"

What works fine today might fail tomorrow

Most common mistakes

PHP code PHP ecosystem

It starts with...

code!

First up: database

Let's talk about code

Without it, we don't exist

What are the most common mistakes in the ecosystem?

Let's start with the database

Database queries: complexity

SELECT DISTINCT n.nid, n.uid, n.title, n.type, e.event_start, e.event_start AS event_start_orig, e.event_end, e.event_end AS event_end_orig, e.timezone, e.has_time, e.has_end_date, tz.offset AS offset, tz.offset_dst AS offset_dst, tz.dst_region, tz.is_dst, e.event_start - INTERVAL IF(tz.is_dst, tz.offset_dst, tz.offset) HOUR_SECOND AS event_start_utc, e.event_end - INTERVAL IF(tz.is_dst, tz.offset_dst, tz.offset) HOUR_SECOND AS event_end_utc, e.event_start - INTERVAL IF(tz.is_dst, tz.offset_dst, tz.offset) HOUR_SECOND + INTERVAL 0 SECOND AS event_start_user, e.event_end - INTERVAL IF(tz.is_dst, tz.offset_dst, tz.offset) HOUR_SECOND + INTERVAL 0 SECOND AS event_end_user, e.event_start - INTERVAL IF(tz.is_dst, tz.offset_dst, tz.offset) HOUR_SECOND + INTERVAL 0 SECOND AS event_start_site, e.event_end - INTERVAL IF(tz.is_dst, tz.offset_dst, tz.offset) HOUR_SECOND + INTERVAL 0 SECOND AS event_end_site, tz.name as timezone_name
FROM node n
INNER JOIN event e ON n.nid = e.nid
INNER JOIN event_timezones tz ON tz.timezone = e.timezone
INNER JOIN node_access na ON na.nid = n.nid
LEFT JOIN domain_access da ON n.nid = da.nid
LEFT JOIN node i18n ON n.tnid > 0 AND n.tnid = i18n.tnid AND i18n.language = 'en'
WHERE (na.grant_view >= 1 AND ((na.gid = 0 AND na.realm = 'all')))
AND ((da.realm = "domain_id" AND da.gid = 4) OR (da.realm = "domain_site" AND da.gid = 0))
AND (n.language ='en' OR n.language ='' OR n.language IS NULL OR n.language = 'is' AND i18n.nid IS NULL)
AND ( n.status = 1 AND ((e.event_start >= '2010-01-31 00:00:00' AND e.event_start = '2010-01-31 00:00:00' AND e.event_end = '2010-02-01 00:00:00' AND event_start = '2010-02-01 00:00:00' AND event_end …

mysql> explain select * from product where category=5 and stock=1;
+----+------+---------------+---------------+---------+------+-------+
| id | TYPE | possible_keys | KEY           | key_len | ROWS | Extra |
+----+------+---------------+---------------+---------+------+-------+
|  1 | ref  | categorystock | categorystock | 8       | 1    |       |
+----+------+---------------+---------------+---------+------+-------+

+-------------+--------------+------+-----+---------+----------------+
| Field       | Type         | Null | Key | Default | Extra          |
+-------------+--------------+------+-----+---------+----------------+
| id          | int(11)      | NO   | PRI | NULL    | auto_increment |
| category    | int(11)      | YES  | MUL | NULL    |                |
| stock       | int(11)      | YES  | MUL | NULL    |                |
| description | varchar(255) | YES  |     | NULL    |                |
...

mysql> show index from product;
+---------+------------+---------------+--------------+-------------+
| Table   | Non_unique | Key_name      | Seq_in_index | Column_name |
+---------+------------+---------------+--------------+-------------+
| product |          0 | PRIMARY       |            1 | id          |
| product |          1 | categorystock |            1 | category    |
| product |          1 | categorystock |            2 | stock       |
...

Databases: covering indexes

mysql> explain select category, stock, id from product where category=5 and stock=1;
+----+------+---------------+---------------+---------+------+-------------+
| id | TYPE | possible_keys | KEY           | key_len | ROWS | Extra       |
+----+------+---------------+---------------+---------+------+-------------+
|  1 | ref  | categorystock | categorystock | 8       | 1    | Using index |
+----+------+---------------+---------------+---------+------+-------------+

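The same covering-index effect can be observed without a MySQL server. The sketch below is a stand-in under stated assumptions: it uses SQLite through PDO (the pdo_sqlite driver must be available) and SQLite's EXPLAIN QUERY PLAN, whose wording differs from MySQL's. Where MySQL prints "Using index" in the Extra column, SQLite reports "USING COVERING INDEX". The helper name `planFor` is hypothetical; the table and index mirror the product example.

```php
<?php
// Sketch: covering vs. non-covering index lookup.
// Assumption: SQLite (pdo_sqlite) stands in for MySQL; plan wording differs.
$pdo = new PDO('sqlite::memory:');
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);

// Same shape as the product table above.
$pdo->exec('CREATE TABLE product (
    id INTEGER PRIMARY KEY,
    category INTEGER,
    stock INTEGER,
    description VARCHAR(255)
)');
$pdo->exec('CREATE INDEX categorystock ON product (category, stock)');

// Hypothetical helper: the planner's description of how it runs a query.
function planFor(PDO $pdo, string $sql): string
{
    $rows = $pdo->query('EXPLAIN QUERY PLAN ' . $sql)->fetchAll(PDO::FETCH_ASSOC);
    return implode("\n", array_column($rows, 'detail'));
}

// select * also needs `description`, which is not in the index:
// index lookup first, then a read of the table row.
$all = planFor($pdo, 'SELECT * FROM product WHERE category = 5 AND stock = 1');

// Only indexed columns (plus the primary key): answered from the index alone.
$covered = planFor($pdo, 'SELECT category, stock, id FROM product WHERE category = 5 AND stock = 1');

echo $all, PHP_EOL, $covered, PHP_EOL;
```

In SQLite an INTEGER PRIMARY KEY is the rowid, which every index entry already carries, much like InnoDB secondary indexes carry the primary key; that is why `id` can be part of a covered query in both databases.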

Databases: when to use / not to use

Good at: fetching data

Storing data

Searching through data

Bad at: select `name` from `room` where ceiling(`avgNoOfPeople`) = 8 → full table scan, creates temporary table

select `name` from `room` where avgNoOfPeople >= 7 and avgNoOfPeople …

… filterByState('MN') ->find();

foreach ($customers as $customer) {

$contacts = ContactsQuery::create() ->filterByCustomerid($customer->getId()) ->find();

foreach ($contacts as $contact) { doSomestuffWith($contact); }

}

Get back to what I said

Lots of people use an ORM:
- easier
- don't need to write queries
- object-oriented

But people start doing this

Imagine 10000 customers → 10001 queries

Joins

$contacts = mysql_query("
    select contact.*
    from customer
    join contact on contact.customerid = customer.id
    where state = 'MN'
");
while ($contact = mysql_fetch_array($contacts)) {
    doSomeStuffWith($contact);
}

(or the ORM equivalent)

Not the best code

Uses the deprecated mysql extension (removed in PHP 7)

No error handling

Better...

10001 queries → 1 query
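The join above still relies on the removed mysql extension and has no error handling. A minimal sketch of the same single query with PDO, a prepared statement and exceptions enabled. To stay self-contained it assumes an in-memory SQLite database (pdo_sqlite) and a tiny hypothetical schema; against MySQL only the DSN and credentials would change. `fetchContactsForState` is a hypothetical helper name.

```php
<?php
// Sketch: one JOIN query instead of 1 + N queries, using PDO.
// Assumption: SQLite in memory stands in for MySQL; the schema is hypothetical.
$pdo = new PDO('sqlite::memory:', null, null, [
    PDO::ATTR_ERRMODE            => PDO::ERRMODE_EXCEPTION, // throw on SQL errors
    PDO::ATTR_DEFAULT_FETCH_MODE => PDO::FETCH_ASSOC,
]);
$pdo->exec('CREATE TABLE customer (id INTEGER PRIMARY KEY, state TEXT)');
$pdo->exec('CREATE TABLE contact (id INTEGER PRIMARY KEY, customerid INTEGER, name TEXT)');
$pdo->exec("INSERT INTO customer (id, state) VALUES (1, 'MN'), (2, 'TX'), (3, 'MN')");
$pdo->exec("INSERT INTO contact (customerid, name) VALUES (1, 'Ann'), (1, 'Bob'), (2, 'Cy'), (3, 'Di')");

// Hypothetical helper: all contacts of customers in one state, in one round trip.
function fetchContactsForState(PDO $pdo, string $state): array
{
    $stmt = $pdo->prepare(
        'SELECT contact.*
           FROM customer
           JOIN contact ON contact.customerid = customer.id
          WHERE customer.state = :state
          ORDER BY contact.id'
    );
    $stmt->execute(['state' => $state]); // value is bound, not concatenated
    return $stmt->fetchAll();
}

try {
    foreach (fetchContactsForState($pdo, 'MN') as $contact) {
        echo $contact['name'], PHP_EOL; // stand-in for doSomeStuffWith($contact)
    }
} catch (PDOException $e) {
    error_log('Contact query failed: ' . $e->getMessage());
}
```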

Sadly, people still produce code with query loops

Usually: growth not anticipated

Internal app → public app

The origins of this talk

Customers: projects we built

Projects we didn't build, but got pulled into: fixes

Changes

Infrastructure migration

15 years of 'how to cause mayhem with a few lines of code'

Client X

Job search site

Monitor job views: daily hits

Weekly hits

Monthly hits

Which user saw which job

Client X

Originally: when a user viewed the job details

Now: when a job appears in a search result

Search for 'php' → 50 jobs = 50 jobs to be updated:
- 50 updates for shown_today
- 50 updates for shown_week
- 50 updates for shown_month
- 50 inserts for shown_user

= 200 queries for 1 search!

Client X: the code

foreach ($jobs as $job) {
    $db->query("insert into shown_today(jobId, number) values(" . $job['id'] . ", 1) on duplicate key update number = number + 1");
    $db->query("insert into shown_week(jobId, number) values(" . $job['id'] . ", 1) on duplicate key update number = number + 1");
    $db->query("insert into shown_month(jobId, number) values(" . $job['id'] . ", 1) on duplicate key update number = number + 1");
    // `when` is a reserved word in MySQL, so the column name must be backtick-quoted
    $db->query("insert into shown_user(jobId, userId, `when`) values(" . $job['id'] . ", " . $user['id'] . ", now())");
}

Client X: the graph

Client X: the numbers

600-1000 inserts/sec (peaks up to 1600)

400-1000 updates/sec (peaks up to 2600)

16-core machine

Client X: panic!

Mail: "MySQL slave is more than 5 minutes behind master"

We set it up, so who did they blame?

Wait a second!

Client X: what's causing those peaks?

Client X: possible cause?

Code changes? According to the developers: none

Action: turn on the general log, analyze it with pt-query-digest → 50+-fold increase in 4 queries

Developers: 'Oops, we did make a change'

After 3 days: 2.5 days behind

Every hour: 50 min of extra lag

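Client X: but why is the slave lagging?

Master

Slave

File: master-bin-xxxx.log

File: master-bin-xxxx.log

Threads: binlog dump thread (master) → slave I/O thread → slave SQL thread

Master: 16 CPU cores
- 12 cores for SQL
- 1 core for binlog dump
- rest for system

Slave: 16 CPU cores
- 1 core for slave I/O
- 1 core for slave SQL

The master executes its writes on up to 12 cores in parallel; the slave replays all of them one after another on a single SQL thread, so it keeps falling further behind.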

Client X: Master

Client X: Slave

Client X: fix?


Client X: the code change

insert into shown_today values (5, 1), (8, 1), (12, 1), (18, 1), … on duplicate key …;

insert into shown_week values (5, 1), (8, 1), (12, 1), (18, 1), … on duplicate key …;

insert into shown_month values (5, 1), (8, 1), (12, 1), (18, 1), … on duplicate key …;

insert into shown_user values (5, 23, "2015-10-12 12:01:00"), (8, 23, "2015-10-12 12:01:00"), …;

Grouping

Works fine, but:

maximum size of the query string?

PHP = no limit (apart from memory_limit)

MySQL = max_allowed_packet

Client X: the code change

$todayQuery = " insert into shown_today( jobId, number ) values ";

foreach ($jobs as $job) {
    $todayQuery .= "(" . $job['id'] . ", 1),";
}

$todayQuery = substr($todayQuery, 0, strlen($todayQuery) - 1);

$todayQuery .= " on duplicate key update number = number + 1 ";

$db->query($todayQuery);
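One way to respect the max_allowed_packet caveat is to cap the number of rows per grouped statement. A minimal sketch, assuming a hand-picked batch size (the default of 500 is arbitrary, not a recommendation) and a hypothetical helper name `buildGroupedInserts`; it also avoids the trailing-comma trimming and sends no query at all when there are no jobs.

```php
<?php
// Sketch: grouped "insert ... on duplicate key update" statements, split into
// batches so no single statement grows past MySQL's max_allowed_packet.
// Assumptions: buildGroupedInserts() is hypothetical; $table must be a trusted
// constant (it is concatenated), and $batchSize is tuned by hand.
function buildGroupedInserts(string $table, array $jobIds, int $batchSize = 500): array
{
    $queries = [];
    foreach (array_chunk($jobIds, $batchSize) as $chunk) {
        // Cast to int so only numeric ids end up in the SQL string.
        $values = array_map(function ($id) {
            return '(' . (int) $id . ', 1)';
        }, $chunk);
        $queries[] = 'insert into ' . $table . ' (jobId, number) values '
            . implode(', ', $values)
            . ' on duplicate key update number = number + 1';
    }
    return $queries; // empty input yields no queries
}

foreach (buildGroupedInserts('shown_today', [5, 8, 12, 18], 3) as $sql) {
    echo $sql, PHP_EOL; // with the slides' $db object: $db->query($sql);
}
```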


Client X: the chosen solution

$db->autocommit(false);

foreach ($jobs as $job) {
    $db->query("insert into shown_today(jobId, number) values(" . $job['id'] . ", 1) on duplicate key update number = number + 1");
    $db->query("insert into shown_week(jobId, number) values(" . $job['id'] . ", 1) on duplicate key update number = number + 1");
    $db->query("insert into shown_month(jobId, number) values(" . $job['id'] . ", 1) on duplicate key update number = number + 1");
    // `when` is a reserved word in MySQL, so the column name must be backtick-quoted
    $db->query("insert into shown_user(jobId, userId, `when`) values(" . $job['id'] . ", " . $user['id'] . ", now())");
}
$db->commit();

All in a single commit

Note: a transaction has a maximum size

Possible: combine with the previous solution
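The same single-commit idea, sketched with PDO instead of the slides' `$db` object (an assumption), using SQLite in memory so it runs standalone. `runInTransaction` is a hypothetical helper; unlike the slide version it also rolls back when any statement fails. The `when` column is renamed `shown_at` here only to sidestep the reserved word.

```php
<?php
// Sketch: run a batch of writes as one transaction; roll back if anything fails.
// Assumptions: PDO + SQLite stand in for the slides' $db and MySQL;
// runInTransaction() and the shown_at column name are hypothetical.
function runInTransaction(PDO $pdo, callable $work)
{
    $pdo->beginTransaction();
    try {
        $result = $work($pdo);
        $pdo->commit();   // one commit for the whole batch
        return $result;
    } catch (Throwable $e) {
        $pdo->rollBack(); // none of the batch's writes survive
        throw $e;
    }
}

$pdo = new PDO('sqlite::memory:');
$pdo->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$pdo->exec('CREATE TABLE shown_user (jobId INTEGER, userId INTEGER, shown_at TEXT)');

$jobIds = [5, 8, 12, 18];
$userId = 23;

runInTransaction($pdo, function (PDO $pdo) use ($jobIds, $userId) {
    // Prepare once, execute per row: still a single commit at the end.
    $stmt = $pdo->prepare("INSERT INTO shown_user (jobId, userId, shown_at) VALUES (?, ?, datetime('now'))");
    foreach ($jobIds as $jobId) {
        $stmt->execute([$jobId, $userId]);
    }
});
```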

Client X: conclusion

Queries in loops are bad (we already knew that)

Add master/slave and it gets much worse

Use transactions: they can provide a huge performance increase

Better yet: use MariaDB 10 or higher with slave_parallel_threads

Result: the slave caught up 5 days later

Database Network

Customer Y

Top 10 site in Belgium

Growing rapidly

At peak traffic: inexplicable latency on the database

Load on webservers: minimal

Load on database servers: acceptable

Client Y: the network

Took a few moments to figure out

No network monitoring, so we used iptraf: 100Mbit/sec limit → packets dropped → connections dropped

Customer: upgrade the switch

Us: why 100Mbit/sec?

Client Y: the network

(Diagram: traffic volumes of 60GB, 700GB and 700GB)

Client Y: network overload

Cause : Drupal hooks retrieving data that was not needed

Only load data you actually need

Don't know at the start? Use lazy loading

Caching: same story

Memcached/Redis are fast

But: data still needs to cross the network
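Lazy loading in its simplest form: wrap the expensive fetch in a closure and only run it on first access. A minimal sketch; `LazyValue` and the loader closure are hypothetical, and in a real application the loader would be the database or cache query whose data may never be needed.

```php
<?php
// Sketch of lazy loading: the data is only fetched on first use, at most once.
// Assumption: LazyValue and the loader below are hypothetical examples.
final class LazyValue
{
    private $loader;
    private $loaded = false;
    private $value;

    public function __construct(callable $loader)
    {
        $this->loader = $loader;
    }

    public function get()
    {
        if (!$this->loaded) {
            $this->value = ($this->loader)(); // runs only on the first call
            $this->loaded = true;
            $this->loader = null;             // release the closure
        }
        return $this->value;
    }
}

$calls = 0;
$comments = new LazyValue(function () use (&$calls) {
    $calls++;
    return ['first comment', 'second comment']; // stand-in for a real query
});
// Nothing has been fetched yet: pages that never call get() pay nothing.
```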

Databases network

What other network-related issues?

Network trouble: more than just traffic

Customer Z

150,000 visits/day

News ticker: XML feed from another site (owned by the same customer)

Cached for 15 min

Customer Z: fetching the feed

if (filectime(APP_DIR . '/tmp/cacheFile.xml') < time() - 900) {

unlink(APP_DIR . '/tmp/cacheFile.xml');

file_put_contents( APP_DIR . '/tmp/cacheFile.xml', file_get_contents('http://www.scrambledsitename.be/xml/feed.xml') );

}

$xmlfeed = ParseXmlFeed(APP_DIR . '/tmp/cacheFile.xml');

What's wrong with this code?

Customer Z: no feed without the source

Feed source


Server hosting the feed: crashed

Fine for a few minutes (cache)

After 15 minutes: file_get_contents uses default_socket_timeout

Customer Z: timeout

default_socket_timeout: 60 sec by default

Each visitor: 60 sec wait time

People keep hitting refresh → more load

More active connections → more load

Apache hits maximum connections → entire site down


Customer Z: timeout fix

$context = stream_context_create(array('http' => array('timeout' => 5)));
if (filectime(APP_DIR . '/tmp/cacheFile.xml') < time() - 900) {
    unlink(APP_DIR . '/tmp/cacheFile.xml');
    file_put_contents(
        APP_DIR . '/tmp/cacheFile.xml',
        file_get_contents('http://www.scrambledsitename.be/xml/feed.xml', false, $context)
    );
}
$xmlfeed = ParseXmlFeed(APP_DIR . '/tmp/cacheFile.xml');

Better, not perfect.

What else is wrong?

Multiple visitors hit the expiring cache file → each of them deletes it and refetches → the XML feed source gets hit a lot
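This "many visitors refresh at once" problem is often called a cache stampede. A sketch of one common mitigation, assuming a local filesystem where flock() works (PHP's documentation warns it is unreliable on NFS): the first request that sees the expired file takes a non-blocking lock and refreshes it, while concurrent requests skip the refresh and keep serving the old copy. `refreshCacheOnce`, the `.lock` suffix and the `$fetch` callback are hypothetical; it checks filemtime() (content modification time) rather than filectime().

```php
<?php
// Sketch: on expiry, only ONE request refreshes the cache file; concurrent
// requests keep serving the existing (stale) copy instead of hitting the feed.
// Assumptions: refreshCacheOnce(), the ".lock" suffix and $fetch are hypothetical;
// $fetch returns the new content, or false on failure (e.g. a timeout).
function refreshCacheOnce(string $cacheFile, int $maxAge, callable $fetch): void
{
    if (is_file($cacheFile) && filemtime($cacheFile) >= time() - $maxAge) {
        return; // still fresh: nothing to do
    }
    // Mode 'c': create the lock file if missing, never truncate it.
    $lock = fopen($cacheFile . '.lock', 'c');
    if ($lock === false) {
        return; // cannot lock: keep serving what we have
    }
    // LOCK_NB: don't wait; if another request holds the lock, it is refreshing.
    if (flock($lock, LOCK_EX | LOCK_NB)) {
        clearstatcache(true, $cacheFile); // another request may have just refreshed it
        if (!is_file($cacheFile) || filemtime($cacheFile) < time() - $maxAge) {
            $data = $fetch();
            if ($data !== false) {
                file_put_contents($cacheFile, $data); // on failure the old copy stays
            }
        }
        flock($lock, LOCK_UN);
    }
    fclose($lock);
}
```

Combined with the timeout context above, `$fetch` could be a closure returning `file_get_contents('http://www.scrambledsitename.be/xml/feed.xml', false, $context)`.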


Customer Z: don't delete from cache

$context = stream_context_create(array('http' => array('timeout' => 5)));
if (filectime(APP_DIR . '/tmp/cacheFile.xml') < time() - 900) {
    file_put_contents(
        APP_DIR . '/tmp/cacheFile.xml',
        file_get_contents('http://www.scrambledsitename.be/xml/feed.xml', false, $context)
    );
}

$xmlfeed = ParseXmlFeed(APP_DIR . '/tmp/cacheFile.xml');


Customer Z: don't delete from cache

$context = stream_context_create(array('http' => array('timeout' => 5)));
if (filectime(APP_DIR . '/tmp/cacheFile.xml') < time() - 900) {
    $feed = file_get_contents('http://www.scrambledsitename.be/xml/feed.xml', false, $context);
    if ($feed !== false) {
        file_put_contents(APP_DIR . '/tmp/cacheFile.xml', $feed);
    }
}
$xmlfeed = ParseXmlFeed(APP_DIR . '/tmp/cacheFile.xml');


Customer Z: process early

$context = stream_context_create(array('http' => array('timeout' => 5)));
if (filectime(APP_DIR . '/tmp/cacheFile.xml') < time() - 900) {
    $feed = file_get_contents('http://www.scrambledsitename.be/xml/feed.xml', false, $context);
    if ($feed !== false) {
        file_put_contents(APP_DIR . '/tmp/cacheFile.xml', ParseXmlFeed($feed));
    }
}


Customer Z: file_[get|put]_contents atomicity

if (filectime(APP_DIR . '/tmp/cacheFile.xml') < time() - 900) {
    $feed = file_get_contents('http://www.scrambledsitename.be/xml/feed.xml', false, $context);
    if ($feed !== false) {
        file_put_contents(APP_DIR . '/tmp/cacheFile.xml', ParseXmlFeed($feed));
    }
}

Relying on user requests → concurrent requests → possible data corruption

Better: run every 15 min through a cronjob
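Even from a cronjob, file_put_contents() writes in place, so a visitor can read a half-written cache file. A common remedy is to write to a temporary file and rename() it over the target. A minimal sketch, assuming POSIX rename semantics (atomic replacement within one filesystem; Windows behaves differently) and a hypothetical helper name `writeAtomically`.

```php
<?php
// Sketch: replace the cache file atomically, so readers see either the old
// or the new content, never a partial write.
// Assumptions: writeAtomically() is hypothetical; the temp file is created in
// the target's directory because rename() is only atomic within one filesystem.
function writeAtomically(string $path, string $data): bool
{
    $tmp = tempnam(dirname($path), 'tmp_'); // unique file next to the target
    if ($tmp === false) {
        return false;
    }
    if (file_put_contents($tmp, $data) !== strlen($data)
        || !chmod($tmp, 0644)               // tempnam() creates files as 0600
        || !rename($tmp, $path)) {          // the atomic swap
        @unlink($tmp);                      // don't leave temp files behind
        return false;
    }
    return true;
}
```

In the cron script, the feed would be fetched with the timeout context and, only if the result is not `false`, passed to `writeAtomically(APP_DIR . '/tmp/cacheFile.xml', ...)`.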


Network resources

Use timeouts for all: fopen

curl

SOAP
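A sketch of explicit timeouts for the first two cases, assuming a hypothetical helper `fetchWithTimeout` that returns `null` on failure instead of blocking for default_socket_timeout. It uses curl when the extension is loaded and the URL is HTTP(S), and falls back to a stream context (which also covers fopen()).

```php
<?php
// Sketch: fetch with explicit timeouts; return null instead of blocking for
// default_socket_timeout (60 s by default) when the source is down.
// Assumption: fetchWithTimeout() is a hypothetical helper name.
function fetchWithTimeout(string $url, int $timeoutSeconds = 5): ?string
{
    if (function_exists('curl_init') && preg_match('#^https?://#i', $url)) {
        $ch = curl_init($url);
        curl_setopt_array($ch, [
            CURLOPT_RETURNTRANSFER => true,            // return the body as a string
            CURLOPT_CONNECTTIMEOUT => $timeoutSeconds, // limit for establishing the connection
            CURLOPT_TIMEOUT        => $timeoutSeconds, // limit for the whole transfer
            CURLOPT_FAILONERROR    => true,            // treat HTTP status >= 400 as failure
        ]);
        $body = curl_exec($ch); // handle is released when $ch goes out of scope
        return $body === false ? null : $body;
    }
    // fopen()/file_get_contents(): set the timeout through a stream context.
    $context = stream_context_create(['http' => ['timeout' => $timeoutSeconds]]);
    $body = @file_get_contents($url, false, $context); // failure is handled below
    return $body === false ? null : $body;
}
```

For SoapClient, the `connection_timeout` option only limits connecting; waiting for the response is governed by default_socket_timeout, which can be lowered with ini_set() around the call.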

Data source trusted? Set up a webservice → let them push updates when their feed changes → less load on the data source, no timeout issues

Add logging → early detection

Logging

Logging = good

Logging in PHP using fopen → bad idea: locking issues

Use Monolog: file, syslog, mail, Pushover, HipChat, Graylog, Rollbar, ElasticSearch (and 50 more)

For Firefox: FirePHP (add-on for Firebug)

Debug logging = bad on production

Watch your logs !

Don't log on slow disks → I/O bottlenecks
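Where adding Monolog is not an option, the locking problem of hand-rolled fopen()/fwrite() logging can at least be avoided by appending each line in a single locked call. A minimal sketch; `appendLog` and its line format are hypothetical, and a logging library remains the better choice.

```php
<?php
// Sketch: append one log line per call with an exclusive lock, so concurrent
// requests cannot interleave partial lines.
// Assumption: appendLog() and the line format are hypothetical.
function appendLog(string $file, string $level, string $message): bool
{
    $line = sprintf("[%s] %s: %s\n", date('c'), strtoupper($level), $message);
    // FILE_APPEND: add to the end instead of overwriting; LOCK_EX: exclusive lock.
    return file_put_contents($file, $line, FILE_APPEND | LOCK_EX) !== false;
}
```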

File system: I/O bottlenecks

Causes: excessive writes (database updates, logfiles, swapping, …)

Excessive reads (non-indexed database queries, swapping, small file system cache, …)

How to detect? top

iostat

See iowait? Stop worrying about PHP, fix the I/O problem!

Cpu(s): 0.2%us, 3.0%sy, 0.0%ni, 61.4%id, 35.5%wa, 0.0%hi

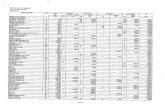

avg-cpu:  %user   %nice %system %iowait  %steal   %idle
           0.10    0.00    0.96   53.70    0.00   45.24

Device:      tps   Blk_read/s   Blk_wrtn/s   Blk_read   Blk_wrtn
sda       120.40         0.00    123289.60          0     616448
sdb         2.10         0.00      4378.10          0      18215
dm-0        4.20         0.00        36.80          0        184
dm-1        0.00         0.00         0.00          0          0

File system

Worst of all: NFS

PHP files → lstat calls

Templates → same

Sessions → locking issues → corrupt data → store sessions in a database, Memcached, Redis, ...

Step-by-step: most common issues

Using NFS? Get rid of it ;-)

iowait on database server:
- I/O reads (use iostat) → missing/wrong indexes, too many queries, select *
- I/O writes → no transactions, too many queries, bad DB engine settings

iowait on webserver (logs ? static files ?)

CPU on database server (missing/wrong/too many indexes)

CPU on webserver (PHP)

Much more than code

(Diagram: DB server, webserver, user, network, XML feed)

How do you treat your data:
- where do you get it
- how long did you have to wait to get it
- how is it transported
- how is it processed

Minimize the amount of data:
- retrieved
- transported
- processed
- sent to the DB and users

Look beyond PHP (or Perl, Ruby, Python, ...) !

Questions?


Contact

Twitter @wimgtr

Slides http://www.slideshare.net/wimg

E-mail [email protected]

Thanks!

Please provide feedback through Joind.in : https://joind.in/15535
